As Meituan's food delivery business has grown, the food delivery advertising engine team has explored and practiced engineering work in several areas and has achieved some results. We plan to share them as a series. This article is the first in the "Meituan Food Delivery Advertising Engineering Practice" series. With the goal of improving business efficiency, it introduces some of the thinking and practice behind the platformization of Meituan's food delivery advertising engine.

1 Introduction

Meituan Food Delivery has become one of the company's most important businesses, and commercial monetization is an important part of the food delivery ecosystem. After years of development, the advertising business covers multiple product lines: list ads in feed form, display ads for KA (key account) and large merchants, search ads based on user queries, and innovative ads for new scenarios, spanning many segmented business scenarios.

From a technical point of view, an ad request goes through the following main steps: triggering, recall, fine ranking, creative optimization, and mechanism strategy. As shown in the figure below, triggering captures the user's intent, recall produces the candidate ad set, prediction scores and ranks the stores in the candidate set, creatives are then selected for the top stores, and finally mechanism strategies produce the ad results.

2 Analysis of the current situation

As the business iterates, new scenarios keep being onboarded and the functions of existing scenarios keep evolving, so the system has become increasingly complex and the response to business requirements has gradually slowed. Earlier in the business's development, architecture refactoring of individual modules, such as the mechanism strategy and recall services, brought some improvement, but the following problems remain:

- Low reuse of business logic: Advertising business logic is complex. Take the mechanism service module: its main function is to provide decision-making for the ad control center and for the bidding and ranking mechanisms. It supports more than a dozen business scenarios online, and each scenario differs in many ways, such as multiple recall channels, billing models, ranking schemes, bidding mechanisms, and budget control. There is also a large amount of business-specific logic. Because this logic is the focus of algorithm and business iteration, many developers work on it, spread across different engineering and strategy groups. As a result, the granularity at which business logic is abstracted is not standardized, and reuse across scenarios and services is low.

- High learning cost: Because the code is complex, it is costly for newcomers to get familiar with it and hard to get started. In addition, the online services went through microservice transformation very early, and there are more than 20 online modules. For historical reasons, the frameworks used by different modules differ considerably, so developing on each module carries its own learning cost. In cross-module projects it is hard for one engineer to complete the work independently, so engineering capacity is not fully utilized.

- Difficulty obtaining information for PMs (product managers): When a PM needs to confirm the relevant logic during product design, R&D engineers can only read the code first and then confirm the logic, so information is hard to obtain. In addition, because the PM is not clear about the design logic of the relevant modules, they often need to ask R&D engineers offline, which hurts the efficiency of both sides.

- Difficulty of evaluation for QA (testing): When evaluating the scope of a feature, QA relies entirely on the technical design written by R&D engineers, and mostly confirms the scope and boundaries of a change through conversation. This hurts efficiency and also makes missed test cases likely.

3 Goals

In response to the above problems, we launched the Meituan food delivery advertising engine platformization project at the beginning of 2020, aiming to achieve the following goals.

Improve product and R&D efficiency

- Achieve high function reuse to improve development efficiency.

- Reduce the cost of collaboration among R&D engineers (RD), PMs, and QA, and improve the efficiency of product and R&D collaboration.

Improve delivery quality

- Make the scope of QA testing precise to improve delivery quality.

Empower the business

- PMs can learn about the capabilities of other product lines through a visual platform page, so that product lines empower each other and product iteration is easier.

4 Overall Design

4.1 The overall idea

Much work has already been done in the industry on "platformization". For example, Alibaba's TMF targets the platformization of general transaction systems; it uses a separated plug-in architecture in which the management domain is separated from the runtime domain. Alibaba's AIOS targets search and recommendation platforms; it relies on five underlying core components and quickly combines and deploys them through a customizable operator flow graph, enabling rapid business delivery.

When Meituan food delivery started its platformization project, we set the core goal from our business scenarios and pain points: use platform design concepts to build suitable technical capabilities, and transform the existing food delivery advertising systems and the product and R&D process into a platform model that can quickly support the delivery of multiple advertising businesses. Drawing on mature platformization ideas in the industry, we settled on an overall approach that is based on the standardization of business capabilities, supported by a platform framework of technical capabilities, and safeguarded by upgrading the product and R&D process to a platform model. The approach has three parts: standardization of business capabilities, frameworkization of technical capabilities, and a new platform-based product and R&D process.

- Standardization of business capabilities: By sorting out the existing logic and standardizing it, we provide the basic guarantee for code reuse across business scenarios and modules.

- Frameworkization of technical capabilities: The framework provides composition and orchestration to connect the standardized logic, schedules and executes it through an engine, and visualizes the capabilities to help users obtain information quickly.

- Platform-based product and R&D process: To make sure R&D iteration becomes more efficient overall after the project goes live, we also optimized parts of the R&D process, mainly those involving R&D engineers, PMs, and QA.

In other words, standardization guarantees reuse, the framework carries the platform capabilities into production, and the operating mechanism of the new product and R&D process sustains the overall efficiency gains. All modules of the advertising engine service follow this platformization approach and support the various upstream product scenarios, as shown in the following figure:

4.2 Business Standardization

4.2.1 Business Scenario and Process Analysis

Improving efficiency is one of the most important goals of platformization, and the most important way to improve efficiency is to maximize the reuse of functions in the system. We first analyzed the current state of the food delivery advertising business lines and traffic as a whole, and reached the following two conclusions:

First, the main flow of each business line is basically the same, including steps such as preprocessing, recall, prediction, mechanism strategy, ranking, creatives, and result assembly; within the same step, different businesses have many similar functions as well as functions specific to their business line. Second, in theory these functions could be reused across the board, but in practice they are concentrated within each business line, and the degree of reuse varies between business lines and groups. The main reasons are:

- Different businesses are at different stages of development and iterate at different paces.

- The organizational structure creates natural "isolation"; for example, the recommendation and search businesses belong to two different business groups.

Therefore, the main obstacle to further reuse in food delivery advertising is insufficient standardization: there is no unified standard across business lines. The standardization problem has to be solved first.

4.2.2 Standardization Construction

The breadth and depth of standardization determine how much of the system can be reused, so this round of standardization aims to cover all aspects. For every service in the advertising system, we standardize along three dimensions of business development: how functions are implemented, the data that functions use, and the flow that combines functions. As a result:

- At the level of individual development: Developers no longer need to care about how the flow is scheduled; they only need to focus on implementing new functions, so development is more efficient.

- At the level of the overall system: Services no longer need to re-develop common functions, so overall reuse is higher and a lot of development time is saved.

4.2.2.1 Standardization of functions

To standardize functions, we first divide them into two groups according to whether they are related to business logic: functions related to business logic and functions unrelated to it.

① Functions unrelated to business logic are built once and shared, through two layers of abstraction

- All business lines build and share these functions through two layers of abstraction. A single, simple function point is abstracted into the tool layer; a whole aspect of functionality that can be implemented and deployed independently, such as creative capabilities, is abstracted into the component layer. Both layers are provided as JAR packages. Every project uses these functions by referencing the shared JAR packages, which avoids duplicated work, as shown in the following figure:

② Functions related to business logic are layered by their scope of reuse

- Functions related to business logic are the core of this standardization effort, and the goal is to maximize business reuse. We therefore abstract the smallest indivisible unit of business logic as the basic unit of business development, called an Action. Actions are divided into three layers by scope of reuse: basic Actions that can be reused by all services, module Actions that can be reused by multiple business lines, and business Actions customized for a single business, that is, extension points. All Actions derive from BaseAction, which defines the basic capabilities shared by every Action.

- Different Action types are developed by different developers. Basic Actions and module Actions, which have a relatively wide impact, are developed by engineers with rich engineering experience; business Actions or extension points, which only affect a single business, can be developed by engineers with less engineering experience.

- We also abstract a combination of several Actions as a Stage, a business module formed by combining different Actions. Its purpose is to hide details, simplify the business logic flow graph, and provide coarser-grained reuse.
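The layering above can be sketched in a few lines of Java. This is only an illustration under assumptions: the article names BaseAction, Action, and Stage, but the class names `BasicAction`, `ModuleAction`, `BusinessAction`, the three sample Actions, and the `execute`/`process` signatures below are hypothetical and are not the framework's real API.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Root type: defines what every Action shares (here, only execution tracing). */
abstract class BaseAction {
    final void execute(List<String> trace) {
        trace.add(getClass().getSimpleName()); // shared behaviour lives in one place
        process();
    }

    protected abstract void process();
}

/** Layer 1 (hypothetical name): reusable by every service. */
abstract class BasicAction extends BaseAction {}

/** Layer 2 (hypothetical name): reusable across several business lines. */
abstract class ModuleAction extends BaseAction {}

/** Layer 3 (hypothetical name): an extension point customised for one business. */
abstract class BusinessAction extends BaseAction {}

class FetchUserProfile extends BasicAction { protected void process() { /* wrapped third-party call */ } }
class BudgetFilter extends ModuleAction { protected void process() { /* shared budget logic */ } }
class SearchAdRerank extends BusinessAction { protected void process() { /* search-only logic */ } }

/** A Stage groups several Actions into one coarse-grained, reusable module. */
class Stage {
    private final List<BaseAction> actions;

    Stage(BaseAction... actions) {
        this.actions = Arrays.asList(actions);
    }

    /** Runs the Actions in order and returns the names of the Actions that ran. */
    List<String> run() {
        List<String> trace = new ArrayList<>();
        for (BaseAction action : actions) {
            action.execute(trace);
        }
        return trace;
    }
}

class ActionLayersDemo {
    public static void main(String[] args) {
        Stage mechanism = new Stage(new FetchUserProfile(), new BudgetFilter(), new SearchAdRerank());
        System.out.println(mechanism.run());
    }
}
```

Keeping `execute` final in the root type is one way to make sure cross-cutting behaviour cannot diverge between layers; whether the real framework does this is not stated in the article.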

4.2.2.2 Normalization of data

Data is the basic element for implementing functions, and the data sources of different businesses are largely the same. Without standardized data, functions cannot be standardized and reuse at the data level cannot be maximized. We classify data by source and by usage: the input, intermediate, and output data of business capabilities are carried in a standardized data context, while third-party external data and internal data such as dictionaries (word lists) are stored in a unified data container and read through a unified interface.

① Use Context to describe the environmental dependencies of Action execution

- Every Action needs certain environmental dependencies to execute, including input dependencies, configuration dependencies, environment parameters, and dependencies on the execution status of other Actions. We abstract the first three kinds of dependencies into the business execution context, and constrain how Actions use them by defining a unified format and usage.

- Because Actions at different levels depend on data of progressively narrower scope, we follow the same layered design: we designed a Context container with three layers that inherit from one another, and store the three kinds of dependency data, in standardized form, in the corresponding Context.

- The advantage of passing data through a standardized Context is that an Action can choose which input data to read and is easy to extend later. The disadvantages are that the mechanism cannot fully restrict an Action's data access rights, and that the Context may become bloated as iteration continues. After weighing the pros and cons, we still use the standard Context approach at this stage.
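A minimal sketch of a three-layer Context follows, assuming each layer simply adds fields on top of the previous one. The class names `BaseContext`, `ModuleContext`, `SearchAdContext`, and all field names are hypothetical; the article only states that the container has three layers linked by inheritance.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Layer 1: data any Action may need (request input, configuration, environment). */
class BaseContext {
    final Map<String, String> input = new HashMap<>();
    final Map<String, String> config = new HashMap<>();
    final Map<String, String> env = new HashMap<>();
}

/** Layer 2: adds data shared by the Actions of one module. */
class ModuleContext extends BaseContext {
    final List<Long> candidatePoiIds = new ArrayList<>();
}

/** Layer 3: adds data that only one business line uses. */
class SearchAdContext extends ModuleContext {
    String query;
}

class ContextDemo {
    /** A basic Action is typed against the base layer only, so it stays reusable everywhere. */
    static String city(BaseContext ctx) {
        return ctx.input.getOrDefault("city", "unknown");
    }

    /** A module Action may additionally read module-level data. */
    static int candidateCount(ModuleContext ctx) {
        return ctx.candidatePoiIds.size();
    }

    /** A business Action can read every layer, including business-only fields. */
    static String describe(SearchAdContext ctx) {
        return ctx.query + "@" + city(ctx) + ":" + candidateCount(ctx);
    }

    public static void main(String[] args) {
        SearchAdContext ctx = new SearchAdContext();
        ctx.input.put("city", "Beijing");
        ctx.candidatePoiIds.add(42L);
        ctx.query = "noodles";
        System.out.println(describe(ctx)); // prints noodles@Beijing:1
    }
}
```

The sketch also shows the drawback the text mentions: nothing stops an Action that receives the widest Context from reading or writing any field in it.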

② Unified processing of third-party external data

- Using third-party external data requires mature engineering experience to evaluate call volume, load, performance, and whether to batch or split requests in advance. We therefore wrap all third-party external data access as basic Actions, which each business then uses and customizes as needed.

③ Full life cycle management of dictionary data

- A dictionary (word list) is KV data generated from business rules or strategies that must be loaded into memory before use. Before standardization, dictionary handling was lacking to varying degrees in generation, pulling, loading, memory optimization, rollback, and degradation. We therefore designed a dictionary management framework based on message notification. It covers the full life cycle, including version management, custom loading, periodic cleanup, and process monitoring, and defines a standard way for businesses to onboard.

4.2.2.3 Standardization of call flow

Finally, the business call flow is what combines functions and data, and a unified flow design pattern is the core means of reusing business functions and improving efficiency. The best way to unify flow design is to standardize the business flow. How third-party interfaces are called is unified by the framework developers through centralized encapsulation; when they are called is standardized according to the principles of performance first, load awareness, and no repeated calls.

In practice, we first list the standardized functions used by the business logic, then analyze the dependencies between these functions, and finally complete the standard design of the whole business logic flow based on the principles of performance first, load awareness, and no repeated calls.

Looking horizontally across different business logic flows and comparing their similarities, we have also distilled some practical experience. Taking the central control module as an example:

- Third-party data in the user dimension is fetched through a unified encapsulated call after initialization.

- For third-party data in the merchant dimension, data fetched through batch interfaces is fetched through a unified encapsulated call after recall; data not fetched through batch interfaces is fetched through a unified encapsulated call after fine ranking and truncation.

4.3 Technical Framework

4.3.1 Introduction to the overall framework

The platform consists of two main parts: the platform front end and the platform development framework package. The front end is a web application for R&D engineers, PMs, and QA; its main function is to interact visually with the engine services that integrate the platform development framework package. We named it the Camp platform, in the sense of a base camp that helps the business side climb its peaks. The platform development framework package is integrated into the engine's back-end services and provides functions such as engine scheduling and isolation, capability accumulation, and information reporting, while ensuring that every module keeps the same standard framework and the same style of business capabilities.

Every online service has to include the platform development framework package, so we need to pay close attention to balancing service performance against platform generality. Introducing the platform framework extends and augments the original code; in consumer-facing (C-end) high-traffic scenarios, the more general the framework and the richer its underlying functions, the more performance it costs compared with plain hand-written code. A compromise between performance overhead and platform abstraction is therefore necessary. Based on the characteristics of our business, we set a safety threshold: the added TP999 latency must stay within 5 ms. Within that limit, the capabilities common to all businesses are pushed down into the framework and provided to the upper-layer online services.

In summary, the overall system architecture is designed as follows:

① The Camp platform provides management, control, and display functions. It consists of the following sub-module packages:

- The business visualization package provides static information about the capabilities of each back-end system, including name, function description, and configuration information. This information is used during requirements assessment and business development.

- The full-graph arrangement and delivery package lets developers drag and drop existing capabilities visually. The full-graph service automatically generates a parallel, optimal execution flow, which developers then adjust for the specific business scenario. The result is a directed acyclic graph (DAG) whose nodes represent business capabilities and whose edges represent the dependencies between them. The graph is dynamically delivered to the corresponding back-end service, where the execution framework parses and executes it.

- The statistics and monitoring package provides runtime statistics and exception information for business capabilities, dictionaries, and so on. It is used to view the performance and exceptions of each business capability, so that the running status of each capability is observable.

② The platform development framework package is included by multiple advertising engine services; it executes the orchestrated business flow and serves external requests. It consists of the following sub-module packages:

- The core package provides two functions. The first is scheduling: it executes the flow orchestration file delivered by the platform, runs each business capability serially or in parallel according to the execution order and execution conditions defined in the DAG, provides the necessary isolation and reliability guarantees, and monitors execution and reports exceptions. The second is capability collection and reporting: it scans and collects the business capabilities in the system and reports them to the platform's web service for business orchestration and capability visualization.

- The capability package is a collection of business capabilities. Business capabilities are defined in section "4.2.2.1 Standardization of functions": the smallest indivisible unit of business logic, which serves as the basic unit of business development and is called an Action (also called a capability).

- The component package is a collection of business components. Business components are also defined in section "4.2.2.1 Standardization of functions": a whole aspect of functionality that can be implemented and deployed independently, such as creative capabilities, is abstracted as a component.

- The toolkit provides the basic functions that business capabilities need, such as the dictionary tools, experiment tools, and dynamic degradation tools commonly used by the engines. Tools are also defined in section "4.2.2.1 Standardization of functions": a single, simple, non-business function module is abstracted as a tool.

A typical development process is shown in the figure above. After developers build a business capability (1), its static information is collected by the Camp platform (2). The optimal DAG is then derived through full-graph dependency analysis (3), and developers adjust the DAG according to the actual business situation. At runtime, the engine's online service fetches the latest DAG and serves the latest business flow externally (4, 5), while reporting runtime information about the business to the Camp platform (6).

In the following sections, we describe several key technical points in detail, including the automatic reporting of components for visualization, and the full-graph arrangement and execution scheduling related to DAG execution. Finally, we will also introduce work that is closely tied to the advertising business: the unified encapsulation of dictionaries in the platform.

4.3.2 Service Collection & Reporting

To make existing business capabilities easy to manage and query, the platform development framework package scans the @LppAbility and @LppExtension annotations at compile time and reports their metadata to the Camp platform. Developers can then query existing components on the Camp platform and drag and drop them visually.

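```java
// Atomic capability (Action)
@LppAbility(name = "POI, Plan, and Unit data aggregation and flattening capability",
        desc = "Objects must be flattened before budget filtering",
        param = "AdFlatAction.Param", response = "List<KvPoiInfoWrapper>",
        prd = "No product requirement",
        func = "POI, Plan, and Unit data aggregation and flattening capability", cost = 1)
public abstract class AdFlatAction extends AbstractNotForceExecuteBaseAction {
    // The nested Param type and other members are omitted in this excerpt.
}

// Extension point
@LppExtension(name = "Data aggregation and flattening extension point",
        func = "POI, Plan, and Unit data aggregation and flattening",
        diff = "Default extension point; no difference between business lines",
        prd = "None", cost = 3)
public class FlatAction extends AdFlatAction {
    @Override
    protected Object process(AdFlatAction.Param param) {
        // do something
        return new Object();
    }
}
```

4.3.3 Full-graph arrangement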

In the ad delivery engine services, the DAG of each business often contains dozens or even hundreds of Actions. Traditional manual or business-driven arrangement can hardly achieve the optimal parallelization of Actions. The platform framework package therefore takes a data-driven approach: from the data dependencies between Actions, a program automatically derives the DAG with optimal parallelism, which we call full-graph arrangement. Developers then customize and adjust it for the business and traffic scenario, after which it is dynamically delivered to the service nodes and handed to the scheduling engine for execution. Automatic derivation plus scenario tuning yields the best parallelism for each scenario.

① The basic principle of full-graph automatic arrangement

We define the input parameter set of an Action x as the set of fields that x uses when it executes:

$input_x = \{A, B, C, \ldots, N\}$

We define the output parameter set of an Action y as the set of fields that y produces when it executes:

$output_y = \{A, B, C, \ldots, M\}$

We consider Action x to depend on Action y when either of the following two conditions holds.

- $input_x \cap output_y \neq \emptyset$, that is, one or more input parameters of Action x are produced by Action y.

- $output_x \cap output_y \neq \emptyset$, that is, Action x and Action y operate on the same field.
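The two conditions can be turned into a small derivation routine. The sketch below is an assumption-laden illustration, not the framework's implementation: the names `DependencyDeriver` and `ActionMeta` are hypothetical, and because the second condition is symmetric, the sketch assumes Actions are supplied in a declared order and lets an Action depend only on earlier ones. The article itself does not say how that direction is decided.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

class DependencyDeriver {
    /** Fields an Action reads and writes (in the article these come from bytecode analysis). */
    static final class ActionMeta {
        final String name;
        final Set<String> inputs;
        final Set<String> outputs;

        ActionMeta(String name, Set<String> inputs, Set<String> outputs) {
            this.name = name;
            this.inputs = inputs;
            this.outputs = outputs;
        }
    }

    static Set<String> fields(String... names) {
        return new LinkedHashSet<>(Arrays.asList(names));
    }

    /** x depends on y if x reads a field y writes, or both write the same field. */
    static boolean dependsOn(ActionMeta x, ActionMeta y) {
        return !Collections.disjoint(x.inputs, y.outputs)
                || !Collections.disjoint(x.outputs, y.outputs);
    }

    /**
     * Returns action name -> names of the Actions it depends on.
     * Assumption: only Actions declared earlier can be dependencies, which
     * orients write-write conflicts and keeps the result acyclic.
     */
    static Map<String, Set<String>> derive(List<ActionMeta> declared) {
        Map<String, Set<String>> deps = new LinkedHashMap<>();
        for (int i = 0; i < declared.size(); i++) {
            ActionMeta x = declared.get(i);
            Set<String> found = new LinkedHashSet<>();
            for (int j = 0; j < i; j++) {
                ActionMeta y = declared.get(j);
                if (dependsOn(x, y)) {
                    found.add(y.name);
                }
            }
            deps.put(x.name, found);
        }
        return deps;
    }

    public static void main(String[] args) {
        List<ActionMeta> actions = Arrays.asList(
                new ActionMeta("recall", fields("query"), fields("candidates")),
                new ActionMeta("predict", fields("candidates"), fields("scores")),
                new ActionMeta("boost", fields(), fields("scores")),
                new ActionMeta("budget", fields("candidates"), fields("filtered")),
                new ActionMeta("rank", fields("scores", "filtered"), fields("result")));
        System.out.println(derive(actions));
    }
}
```

In this made-up example, `predict` and `budget` both depend only on `recall`, so they can run in parallel, while `boost` must follow `predict` because both write `scores`.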

② Overall design of full-graph automatic arrangement

Full-graph automatic arrangement consists of two modules: a parsing module and a dependency analysis module.

Parsing module: Parses out the input and output sets of each Action by analyzing bytecode.

- Bytecode analysis uses the open-source tool ASM. By simulating the Java runtime stack and maintaining the Java local variable table, it works out the fields that each Action's execution depends on and the fields it outputs.

Dependency analysis module: Uses reverse analysis with three-color marking to analyze the dependencies between Actions, and prunes the generated graph.

- Dependency pruning: The generated graph contains redundant dependencies. To reduce its complexity, the graph is pruned without changing its semantics. For example, a direct dependency that is already implied by a longer dependency path can be removed.
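One common way to prune a dependency graph without changing its semantics is transitive reduction: drop a direct edge when the same dependency is already implied by a longer path. The sketch below assumes this is what pruning means here, which the article does not confirm; `DependencyPruner` and its methods are hypothetical names, and the input must be acyclic.

```java
import java.util.ArrayDeque;
import java.util.Collections;
import java.util.Deque;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.LinkedHashSet;
import java.util.Map;
import java.util.Set;

class DependencyPruner {
    /** True if 'target' can be reached from 'from' by following dependency edges. */
    static boolean reaches(Map<String, Set<String>> deps, String from, String target) {
        Deque<String> stack = new ArrayDeque<>();
        Set<String> seen = new HashSet<>();
        stack.push(from);
        while (!stack.isEmpty()) {
            String current = stack.pop();
            if (current.equals(target)) {
                return true;
            }
            if (seen.add(current)) {
                for (String next : deps.getOrDefault(current, Collections.<String>emptySet())) {
                    stack.push(next);
                }
            }
        }
        return false;
    }

    /**
     * Removes every direct dependency x -> y for which another direct
     * dependency z of x already reaches y. Assumes the graph is acyclic.
     */
    static Map<String, Set<String>> prune(Map<String, Set<String>> deps) {
        Map<String, Set<String>> pruned = new LinkedHashMap<>();
        for (Map.Entry<String, Set<String>> entry : deps.entrySet()) {
            Set<String> kept = new LinkedHashSet<>();
            for (String y : entry.getValue()) {
                boolean redundant = false;
                for (String z : entry.getValue()) {
                    if (!z.equals(y) && reaches(deps, z, y)) {
                        redundant = true;
                        break;
                    }
                }
                if (!redundant) {
                    kept.add(y);
                }
            }
            pruned.put(entry.getKey(), kept);
        }
        return pruned;
    }

    public static void main(String[] args) {
        Map<String, Set<String>> deps = new LinkedHashMap<>();
        deps.put("A", new LinkedHashSet<String>());
        deps.put("B", new LinkedHashSet<>(Collections.singleton("A")));
        Set<String> cDeps = new LinkedHashSet<>();
        cDeps.add("A");
        cDeps.add("B");
        deps.put("C", cDeps);
        System.out.println(prune(deps)); // C keeps only B; the C -> A edge is implied by C -> B -> A
    }
}
```

Reachability is always checked against the original graph, which is safe for a DAG because its transitive reduction is unique.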

③ Benefits of full-graph automatic arrangement

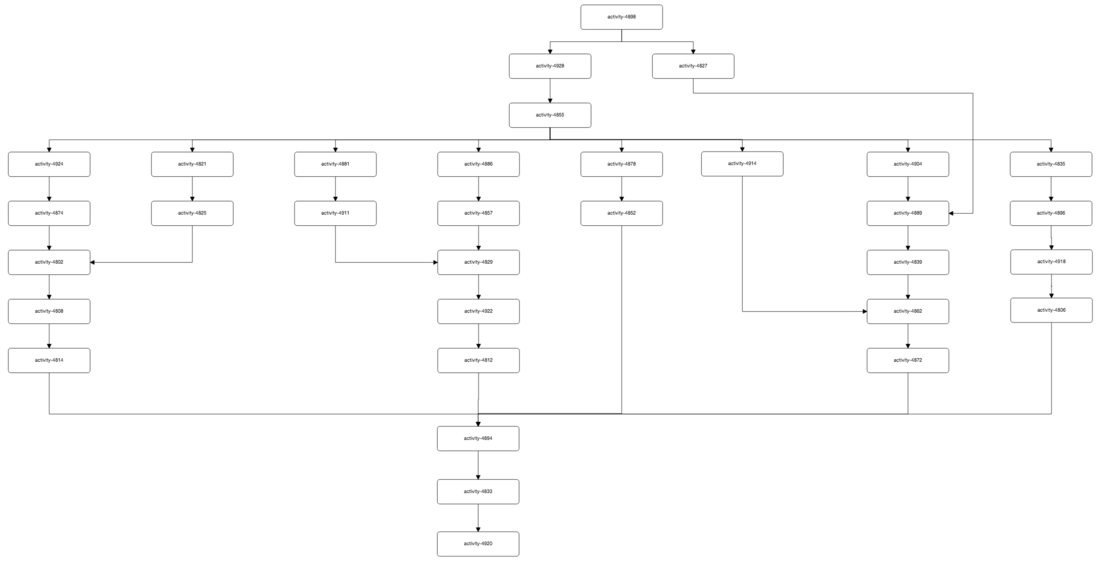

Full-graph arrangement automatically corrects manual orchestration errors and maximizes orchestration parallelism. In one real business scenario, the DAG before and after full-graph arrangement compares as shown in the following figure:

The two Actions marked in blue operate on the same Map. If they run concurrently, there is a thread-safety risk. Because the method call stack is very deep, it is hard for developers to notice this problem, which led to an incorrect parallel arrangement. After full-graph analysis, the two Actions are arranged serially.

In each of the three groups of Actions marked in green, red, and yellow, the two Actions have no data dependency on each other, yet developers had arranged them serially. After full-graph analysis, they are arranged in parallel.

4.3.4 Scheduling Engine

The core function of the scheduling engine is to schedule the delivered DAG described above. The engine therefore needs the following two functions:

- Composition: Build a concrete DAG template graph from the Action arrangement configuration.

- Scheduling: When a request arrives, execute the Actions in the correct dependency order.

The working principle of the entire scheduling engine is as follows:

For performance reasons, the scheduling engine does not build the graph in real time for every request; instead it adopts "static composition + dynamic scheduling".

- Static composition: When the service starts, the scheduling engine initializes each delivered DAG arrangement configuration into a Graph template and loads it into memory. After startup, the templates of multiple DAGs stay resident in memory. When the web platform dynamically delivers a graph, the engine builds the newest graph and hot-replaces the old one completely.

- Dynamic scheduling: When a request arrives, the business side specifies the corresponding DAG and passes it to the scheduling engine together with the context information; the engine executes according to the Graph template, completes the scheduling of the graph and its nodes, and records the whole scheduling process.
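The split between static composition and dynamic scheduling can be sketched as a registry of immutable templates that requests only read. Everything named here (`GraphTemplateRegistry`, `GraphTemplate`, `publish`, `execute`) is hypothetical, and the template is reduced to a precomputed execution order with the request context left out to keep the example short.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ConcurrentHashMap;

class GraphTemplateRegistry {
    /** Immutable, pre-built plan for one DAG; built once, shared by all requests. */
    static final class GraphTemplate {
        final int version;
        final List<String> executionOrder;

        GraphTemplate(int version, List<String> executionOrder) {
            this.version = version;
            this.executionOrder = Collections.unmodifiableList(new ArrayList<>(executionOrder));
        }
    }

    private final ConcurrentHashMap<String, GraphTemplate> templates = new ConcurrentHashMap<>();

    /** Static composition: called at startup and whenever the platform delivers a new graph. */
    void publish(String dagId, int version, List<String> executionOrder) {
        // The whole template is swapped in one atomic map update (hot replacement).
        templates.put(dagId, new GraphTemplate(version, executionOrder));
    }

    /** Dynamic scheduling: a request looks up the template and never rebuilds the graph. */
    List<String> execute(String dagId) {
        GraphTemplate template = templates.get(dagId); // in-flight requests keep the template they fetched
        if (template == null) {
            throw new IllegalArgumentException("unknown DAG: " + dagId);
        }
        List<String> record = new ArrayList<>();
        for (String node : template.executionOrder) {
            record.add(node + "@v" + template.version); // stand-in for running the node
        }
        return record;
    }

    public static void main(String[] args) {
        GraphTemplateRegistry registry = new GraphTemplateRegistry();
        registry.publish("search-ads", 1, Arrays.asList("recall", "rank"));
        System.out.println(registry.execute("search-ads"));
        registry.publish("search-ads", 2, Arrays.asList("recall", "predict", "rank"));
        System.out.println(registry.execute("search-ads"));
    }
}
```

Because a template is immutable and replaced as a whole, a request that started on the old version finishes on the old version, and the next request sees the new one without any locking on the request path.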

Because the ad delivery engine serves C-end users, it places high demands on performance, availability, and scalability. The design difficulties of the scheduling engine lie in these same three areas, and we briefly describe them next.

4.3.4.1 High Performance Practices

The scheduling engine serves C-end services. Compared with traditional hard-coded scheduling, its scheduling performance should be at least equal, or the loss should stay within an acceptable threshold. Below we introduce our performance practice from two representative aspects: scheduler design and scheduling thread tuning.

① Scheduler design

The problem to solve: how to make nodes execute one after another, and how to let other nodes notice and start executing when a node completes. As shown in the figure below, after node A finishes, how are nodes B and C notified and executed? A common approach is hierarchical (layered) scheduling of nodes, which has the following characteristics:

- It relies on a layering algorithm (such as breadth-first traversal) to compute in advance the nodes to execute at each layer; nodes are scheduled in batches, with no notification or driving mechanism.

- When a batch contains multiple nodes whose execution times differ, the whole batch is held up by its slowest node (the "long-board" effect).

- It performs better on graphs that consist mostly of serial nodes.
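As a rough sketch of the layering step, the routine below groups nodes into batches with a breadth-first pass over in-degrees (Kahn's algorithm). The article only says that a layering algorithm such as breadth-first traversal is used, so the exact algorithm, the class name `LayeredScheduler`, and the map-based graph representation are assumptions.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class LayeredScheduler {
    /**
     * children maps each node to the nodes that depend on it.
     * Returns batches: every node in a batch can run in parallel, and a batch
     * starts only after the previous batch has finished.
     */
    static List<List<String>> layers(Map<String, List<String>> children) {
        Map<String, Integer> indegree = new LinkedHashMap<>();
        for (String node : children.keySet()) {
            indegree.putIfAbsent(node, 0);
        }
        for (List<String> targets : children.values()) {
            for (String target : targets) {
                indegree.merge(target, 1, Integer::sum);
            }
        }

        List<String> current = new ArrayList<>();
        for (Map.Entry<String, Integer> entry : indegree.entrySet()) {
            if (entry.getValue() == 0) {
                current.add(entry.getKey());
            }
        }

        List<List<String>> result = new ArrayList<>();
        int visited = 0;
        while (!current.isEmpty()) {
            result.add(current);
            visited += current.size();
            List<String> next = new ArrayList<>();
            for (String node : current) {
                for (String child : children.getOrDefault(node, Collections.<String>emptyList())) {
                    // A child joins the next batch once all of its parents are scheduled.
                    if (indegree.merge(child, -1, Integer::sum) == 0) {
                        next.add(child);
                    }
                }
            }
            current = next;
        }
        if (visited != indegree.size()) {
            throw new IllegalStateException("cycle detected");
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, List<String>> children = new LinkedHashMap<>();
        children.put("A", Arrays.asList("B", "C"));
        children.put("B", Arrays.asList("D", "E"));
        children.put("C", Collections.<String>emptyList());
        children.put("D", Collections.<String>emptyList());
        children.put("E", Collections.<String>emptyList());
        System.out.println(layers(children)); // prints [[A], [B, C], [D, E]]
    }
}
```

The output makes the long-board effect visible: D and E depend only on B, but because they sit in the third batch they cannot start until C, the other member of the second batch, has also finished.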

Another common approach is a queue-based, notification-driven model built on the pipeline idea:

- As soon as a node finishes, it sends a signal to the message queue; the consumer executes the subsequent nodes after receiving the signal. In the DAG above, once B finishes, D and E are notified and start executing without caring about the status of C.

- Because the execution state of sibling nodes does not matter, the long-board effect of hierarchical scheduling does not occur.

- It offers very good parallel performance on graphs with many parallel nodes; on graphs with many serial nodes, performance is slightly worse because of the extra overhead of thread switching and queue notification.

As shown in the figure above, the scheduling engine currently supports both scheduling models. The hierarchical scheduler is recommended for graphs with many serial nodes, and the queue pipeline scheduler is recommended for graphs with many parallel nodes.

Hierarchical Scheduler

Using the layering algorithm described above, nodes are executed in batches: serial nodes run on a single thread, and parallel nodes run in a thread pool.

Queue Pipeline Scheduler

Both the outer graph task (GraphTask) and the inner node task (NodeTask) are executed in thread pools.

Node scheduling mechanism

- Scheduling mechanism: the steps between the consumer receiving a message and the node actually being executed. In the following DAG, after receiving the message a node must complete these steps: check the DAG execution status, check the status of its parent nodes, check the node's execution condition, update the execution status, and execute the node, as shown in the following figure:

- There are usually two ways to execute these steps: one is centralized scheduling, which is processed by a unified method; the other is decentralized scheduling, which is completed by each subsequent node alone.

- We use centralized scheduling: after a node is executed, it sends a message to the queue; there is a task dispatcher on the consumer side that is responsible for the consumption, and then the task is distributed.

The reasons for this choice are:

- As shown in the figure above, suppose nodes A, B, and C complete at the same time; a series of operations still has to run before node D actually executes. Without concurrency control during this window, D would be executed three times, so access must be serialized to guarantee thread safety. The centralized task dispatcher uses a lock-free queue design, which guarantees thread safety while avoiding the performance overhead of locking.

- Another case is one parent with multiple children. In decentralized scheduling, common operations (checking graph and parent node status, anomaly detection, and so on) are executed once per child node, which adds unnecessary system overhead. The centralized task dispatcher handles these common operations once and then distributes tasks to the child nodes.

- In decentralized scheduling, a node's responsibilities are too broad: it must execute the core business code and also handle message consumption. Its responsibilities are not single, and maintainability is poor.

Therefore, after weighing implementation difficulty, maintainability, performance, and other factors, we adopted centralized scheduling in the project.
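To illustrate why a single dispatcher removes the need for per-node locking, here is a minimal sketch under stated assumptions. The class `CentralDispatcher` and its fields are hypothetical, the graph is assumed to be acyclic, and a `LinkedBlockingQueue` stands in for the lock-free queue mentioned above purely for brevity. Only the dispatcher thread touches the parent counters, so a child with several parents is submitted exactly once.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical sketch of centralized, queue-driven dispatching (assumes an acyclic graph).
class CentralDispatcher {
    private final Map<String, List<String>> children;
    private final Map<String, Integer> pendingParents = new HashMap<>();
    private final BlockingQueue<String> finished = new LinkedBlockingQueue<>();
    private final List<String> executionLog = Collections.synchronizedList(new ArrayList<>());
    private final ExecutorService workers;

    CentralDispatcher(Map<String, List<String>> children, ExecutorService workers) {
        this.children = children;
        this.workers = workers;
        for (Map.Entry<String, List<String>> e : children.entrySet()) {
            pendingParents.putIfAbsent(e.getKey(), 0);
            for (String child : e.getValue()) pendingParents.merge(child, 1, Integer::sum);
        }
    }

    /** Runs the whole graph; the calling thread plays the dispatcher role. */
    List<String> run() {
        for (Map.Entry<String, Integer> e : pendingParents.entrySet()) {
            if (e.getValue() == 0) submit(e.getKey()); // root nodes start immediately
        }
        int remaining = pendingParents.size();
        try {
            while (remaining > 0) {
                String done = finished.take(); // completion signal from a worker
                remaining--;
                for (String child : children.getOrDefault(done, Collections.emptyList())) {
                    // Counters are only mutated here, on the dispatcher thread.
                    if (pendingParents.merge(child, -1, Integer::sum) == 0) submit(child);
                }
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("dispatcher interrupted", e);
        }
        return new ArrayList<>(executionLog);
    }

    private void submit(String node) {
        workers.execute(() -> {
            executionLog.add(node); // stands in for the node's business logic
            finished.add(node);     // notify the dispatcher
        });
    }
}
```

With A, B, and C all pointing at D, the returned log contains D once and in last position, regardless of the order in which the three parents finish.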

② Scheduling thread tuning

The scheduling engine provides two APIs to the caller for DAG execution:

- Asynchronous call: the GraphTask is executed by the thread pool, and the Future of the outermost GraphTask is returned to the business side, which can then precisely control the maximum execution time of the DAG. In takeaway advertising, some scenarios process different advertising businesses within the same request, and the business side can use the asynchronous interface to freely combine the scheduling of sub-graphs.

- Synchronous call: the biggest difference from the asynchronous call is that a synchronous call does not return to the caller until graph execution completes or times out.
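The difference between the two call styles can be sketched roughly as below. The class `GraphApi`, its method names, and the fallback parameter are assumptions made for illustration; the article does not show the real signatures. Here the synchronous variant simply waits on the Future with a deadline.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

// Hypothetical facade; names and signatures are illustrative only.
class GraphApi {
    private final ExecutorService graphPool;

    GraphApi(ExecutorService graphPool) {
        this.graphPool = graphPool;
    }

    /** Asynchronous call: hand the caller a Future so it controls the waiting time itself. */
    <T> Future<T> executeAsync(Callable<T> graphTask) {
        return graphPool.submit(graphTask);
    }

    /** Synchronous call: return only when the graph completes or the timeout expires. */
    <T> T executeSync(Callable<T> graphTask, long timeoutMillis, T fallback) {
        Future<T> future = executeAsync(graphTask);
        try {
            return future.get(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (TimeoutException e) {
            future.cancel(true); // interrupt the graph and degrade to the fallback
            return fallback;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            future.cancel(true);
            return fallback;
        } catch (ExecutionException e) {
            throw new IllegalStateException("graph execution failed", e.getCause());
        }
    }
}
```

A caller that needs to combine several sub-graphs in one request would call `executeAsync` for each of them and then wait on the returned Futures with its own overall deadline.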

The underlying scheduler currently provides the two schedulers mentioned above. Specifically as shown in the figure below:

As the figure shows, the scheduling engine uses thread pools many times when executing internal tasks. In a CPU-intensive service, if the request volume is high or a graph has many nodes, heavy thread switching inevitably affects overall service performance. For the queue notification scheduler, we made several scheduling optimizations to bring performance as close as possible to the level before the scheduling engine was introduced.

Scheduling thread model tuning

- In a synchronous call, the main thread does not return immediately; it waits for DAG execution to complete. The scheduling engine takes advantage of this by letting the main thread execute the outermost GraphTask, which saves one thread switch per request.

Serial node execution optimization

- As shown in the DAG above, some nodes form a serial chain (such as the one-way path A→B→C→D). When executing these four serial nodes, the scheduling engine does not switch threads; a single thread executes them in turn.

- When executing serial nodes, the scheduling engine also skips queue notification and schedules them serially to minimize system overhead.

4.3.4.2 High Availability Practice

In terms of high availability, we briefly introduce our practice in two areas: isolation and monitoring. The core principle is shown in the following figure:

① Business isolation

In advertising scenarios, there are often multiple sub-business lines in the same service, and the logic of each business line corresponds to a DAG. For the isolation of each business line within the same service, we use the "single instance-multi-tenant" solution. This is because:

- The process engine is active in the same process, and the single-instance solution is easier to manage.

- During the internal implementation of the process engine, some multi-tenant isolation work has been done on the granularity of the graph, so it is more inclined to the single-instance solution for external provision.

Apart from the DAG scheduling and Node scheduling logic, which is static code, multi-tenant isolation is applied to graph storage, DAG selection and execution, Node selection and execution, and the node notification queue of each DAG.

② Scheduled task isolation

Scheduling tasks fall into two categories: DAG tasks (GraphTask) and node tasks (NodeTask). One GraphTask corresponds to multiple NodeTasks, and its execution state depends on all of them. During execution, the scheduling engine isolates GraphTask and NodeTask execution by using a two-level thread pool.

The reasons for this isolation are:

- Each thread pool has a single responsibility and runs a single kind of task, which makes process monitoring and dynamic adjustment more convenient.

- If one thread pool were shared, an instantaneous QPS spike could fill the pool with GraphTasks so that NodeTasks could not be submitted, eventually deadlocking the scheduling engine.

Therefore, the two-level thread pool scheduling method is superior to the one-level thread pool scheduling in terms of fine thread management and isolation.
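The isolation can be sketched as follows. This is an illustrative example only: the class `TwoLevelPools`, the pool sizes, and the "sum the node results" behavior are assumptions, not the engine's real configuration. Each GraphTask blocks in the graph pool while its NodeTasks run in a separate node pool, so waiting GraphTasks can never starve the NodeTasks they depend on.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical sketch; pool sizes are arbitrary illustration values.
class TwoLevelPools {
    private final ExecutorService graphPool = Executors.newFixedThreadPool(2);
    private final ExecutorService nodePool = Executors.newFixedThreadPool(4);

    /** GraphTask: fans its NodeTasks out to the node pool and sums their results. */
    Future<Integer> submitGraph(List<Callable<Integer>> nodeTasks) {
        return graphPool.submit(() -> {
            int sum = 0;
            for (Future<Integer> f : nodePool.invokeAll(nodeTasks)) {
                sum += f.get();
            }
            return sum;
        });
    }

    /** Submits several graphs at once, waits for all of them, then shuts the pools down. */
    List<Integer> runAll(List<List<Callable<Integer>>> graphs) {
        List<Future<Integer>> futures = new ArrayList<>();
        for (List<Callable<Integer>> graph : graphs) {
            futures.add(submitGraph(graph));
        }
        List<Integer> results = new ArrayList<>();
        try {
            for (Future<Integer> f : futures) results.add(f.get());
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            graphPool.shutdown();
            nodePool.shutdown();
        }
        return results;
    }
}
```

If both kinds of task shared one fixed pool of two threads, two waiting GraphTasks would occupy every thread and their NodeTasks would sit in the queue forever, which is the deadlock described above.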

③ Process monitoring

For the monitoring of DAG scheduling, we divide it into three categories. They are exceptions, timeouts, and statistics, as follows:

- Exception: Graph/node execution exception, support configuration retry, custom exception handling.

- Timeout: Graph/node execution timed out, support downgrade.

- Statistics: graph/node execution times & time-consuming, providing optimized data reports.

4.3.4.3 High Scalability Practice

The advertising business logic is complex, and there are a large number of experiments, branch judgments, conditional executions, etc. on the delivery link. And the iteration frequency and release frequency of the ad serving service are also very high. Therefore, in terms of scalability, the scheduling engine must first consider two issues: how to schedule conditional nodes, and how the orchestration configuration can take effect quickly without release.

① Node conditional execution

For conditional execution of nodes, a Condition expression must be added explicitly when configuring the DAG. Before executing a node, the scheduling engine dynamically evaluates the expression, and the node is executed only if the condition is met.
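A minimal sketch of this check is shown below. It assumes the condition can be modeled as a `Predicate` over a request context map; the article does not say which expression language the real Condition uses, so the class `ConditionalNode` and the `scene` key are hypothetical.

```java
import java.util.Map;
import java.util.function.Predicate;

// Hypothetical node wrapper; a Predicate stands in for the configured Condition expression.
class ConditionalNode {
    private final String name;
    private final Predicate<Map<String, Object>> condition;
    private final Runnable body;

    ConditionalNode(String name, Predicate<Map<String, Object>> condition, Runnable body) {
        this.name = name;
        this.condition = condition;
        this.body = body;
    }

    String name() {
        return name;
    }

    /** Evaluates the condition against the request context and runs the node only if it holds. */
    boolean runIfEligible(Map<String, Object> context) {
        if (!condition.test(context)) {
            return false; // skipped: the node's business logic is never invoked
        }
        body.run();
        return true;
    }
}
```

For example, a node constructed with the condition `ctx -> "search".equals(ctx.get("scene"))` runs for search traffic and is skipped for every other scene.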

② Dynamic configuration delivery

- As shown in the previous figure, we decouple the composition and scheduling through the intermediate state Graph template, and the orchestration configuration can be edited on the web platform and dynamically delivered to the service.

- Since the scheduling engine uses the thread pool many times in the scheduling process, for the dynamic update of the thread pool, we use the company's general components to dynamically configure and monitor the thread pool.

4.3.4.4 Scheduling Engine Summary

① Function

DAG core scheduling

- The scheduling engine provides the implementation of two common schedulers, which can provide better support for different business scenarios.

- The scheduling engine adopts the classic two-level scheduling model, and the DAG graph/node task scheduling is more isolated and controllable.

Node conditional execution

- A condition check function is added to the scheduling pre-conditions of nodes. Nodes that do not meet the conditions will not be executed. The scheduling engine will dynamically determine the execution conditions of nodes based on context and traffic conditions.

Timeout processing

- DAG, Stage, and Node all support timeout processing. This simplifies timeout control inside each piece of business logic and hands the initiative to the framework for unified handling, improving the processing efficiency of internal logic without sacrificing performance.

Configurable nodes

- The same Node is used in multiple business scenarios, but the processing logic of each scenario is not exactly the same. To handle this, nodes are made configurable: the framework passes the node's configuration into its logic.

② Performance

- In DAG scenarios with multiple serial nodes, performance is basically on par with the original hand-written implementation.

- In DAG scenarios with multiple parallel nodes, pooling introduces some performance loss from thread pool preemption and switching; after repeated tuning and CPU hotspot optimization, the TP999 loss can be kept within 5 ms.

4.3.5 Business Component Layer Consolidation

As defined in "4.2.2.1 Standardization of Functions", business function modules that can be implemented and deployed independently are abstracted into business components. Extracting high-cohesion and low-coupling business components from business logic is an important means to improve code reuse capabilities. In practice, we found that the logic contained in different business components varies widely, and the specific implementation, design and code style are also uneven. Therefore, in order to unify the design ideas and implementation methods of business components, we have implemented a set of standardized component frameworks to reduce the repetitive work of new component development and reduce the learning and access costs of users.

The left side of the above figure shows the overall framework of business components. The bottom layer is a unified public domain and public dependencies, the upper layer is the implementation process of business component standards, and the aspect capability supports business logic. On the right is an example of a framework-based Smart Bidding component. The role of the framework is to:

① Unified public domain and dependency management

- The public domain refers to business entities that are used in different business components. We extract the common domain objects in the business and provide them as basic components to other business components to reduce the repeated definition of domain objects in different components.

- Business components have many internal and external dependencies. We have conducted a unified sorting and screening of public dependencies, and at the same time weighed various factors, and determined a reasonable use method. Finally, a complete and mature dependency framework is formed.

② Unified interface and process

- We abstract the business components into three stages: the data and environment preparation stage Prepare, the actual computing stage Process, and the post-processing stage Post. An abstract generic template interface is designed in each stage, and finally different business processes in the component are completed through different interface combinations. All classes provide both synchronous and asynchronous calling methods in the interface design.
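The three-stage template can be sketched as follows. This is an assumption-laden illustration: the class `BusinessComponent`, its type parameters, and the toy bidding rule are invented for the example (the rule is neither PID nor CEM), and the real template interfaces are not shown in the article.

```java
import java.util.concurrent.CompletableFuture;

// Hypothetical template: I = request, C = prepared context, O = result.
abstract class BusinessComponent<I, C, O> {
    /** Prepare: fetch the data and environment the computation depends on. */
    protected abstract C prepare(I request);

    /** Process: the actual computation. */
    protected abstract O process(C context);

    /** Post: validation, monitoring, and similar follow-up work; identity by default. */
    protected O post(O result) {
        return result;
    }

    /** Synchronous entry point: always runs the three stages in the same order. */
    final O execute(I request) {
        return post(process(prepare(request)));
    }

    /** Asynchronous entry point: same pipeline on the common ForkJoinPool. */
    final CompletableFuture<O> executeAsync(I request) {
        return CompletableFuture.supplyAsync(() -> execute(request));
    }
}

// Toy component; bids are in cents and the pricing rule is made up for illustration.
class ToyBidding extends BusinessComponent<Long, Long, Long> {
    @Override
    protected Long prepare(Long baseBidCents) {
        return baseBidCents * 2; // pretend a feature lookup doubled the base bid
    }

    @Override
    protected Long process(Long adjustedCents) {
        return adjustedCents + 10; // pretend strategy output
    }

    @Override
    protected Long post(Long bidCents) {
        return Math.min(bidCents, 500L); // verify the result: cap the final bid
    }
}
```

Keeping `execute` final means every component goes through the same stage order, so aspects such as monitoring can be attached at the stage boundaries instead of inside business code.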

③ Unified aspect capability

- At present, all service modules use Spring as the development framework. Using its AOP support, we have developed a series of aspect capabilities, including log collection, latency monitoring, degradation and rate limiting, and data caching. These capabilities are non-intrusive, which reduces the coupling between aspect capabilities and business logic. New business components can fully reuse them through configuration.

The Smart Bidding component is a business component developed on the above framework. It is an abstract aggregation of advertising bidding strategies, including multiple algorithms such as PID and CEM. The data that the bidding strategy relies on, such as user feature acquisition and experiment information parsing, is handled by the Prepare template; the PID and CEM algorithms themselves are implemented in the Process template; and follow-up operations such as bid result verification and parameter monitoring are handled uniformly by the Post template. The public domain objects and third-party dependencies used by the entire component are also managed by the framework.

4.3.6 Toolkit - Dictionary Management

The meaning of toolkit is also defined in "4.2.2.1 Standardization of Functions", that is, a single, simple non-business function module is abstracted as a tool. The construction of the toolkit is an important basis for improving the efficiency of advertising platform work, and its main function is to deal with auxiliary general processes or functions that have nothing to do with business logic. For example: there is a large amount of KV data in the advertising system that needs to be loaded into the memory for use, which we call the vocabulary file. In order to realize the full life cycle management of vocabulary files, the advertising platform has designed and developed vocabulary management tools, and has accumulated good practical results in the process of business use.

① Design of vocabulary management

The above picture is the overall structure of the vocabulary management platform. The vocabulary management platform adopts a layered design as a whole, with five layers from top to bottom:

- Storage layer: mainly used for data storage and transfer. Meituan's internal S3 stores vocabulary files in the cloud, Zookeeper stores vocabulary version information, and online services obtain the latest version update events by listening for them.

- Component layer : Each component can be regarded as an independent functional unit, providing a common interface for the upper layer.

- Plug-in layer : The role of business plug-ins is to provide unified plug-in definitions and flexible custom implementations. For example, the main purpose of the loader is to provide the vocabulary loading and storage functions in a unified format, and each vocabulary can dynamically configure its loader type.

- Module layer: from a business perspective, each module covers one part of the overall vocabulary file workflow, and modules interact through an event notification mechanism. For example, the vocabulary management module includes vocabulary version management, event monitoring, vocabulary registration, vocabulary addition/unloading, vocabulary access, etc.

- Process Layer : We define a complete vocabulary business behavior process as a process. The entire life cycle of the vocabulary can be divided into the process of adding vocabulary, updating the vocabulary, canceling the vocabulary, and rolling back the vocabulary.
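The update and rollback processes can be illustrated with a small in-memory sketch. It deliberately leaves out S3, Zookeeper, and loader plug-ins; the class `DictionaryHolder` and its methods are hypothetical. The point is only that readers always see one complete version, and that a rollback restores the previous snapshot.

```java
import java.util.ArrayDeque;
import java.util.Collections;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical in-memory holder for one vocabulary (KV) file.
class DictionaryHolder {
    private static final class Snapshot {
        final long version;
        final Map<String, String> data;

        Snapshot(long version, Map<String, String> data) {
            this.version = version;
            this.data = data;
        }
    }

    private final AtomicReference<Snapshot> current =
            new AtomicReference<>(new Snapshot(0L, Collections.<String, String>emptyMap()));
    private final Deque<Snapshot> history = new ArrayDeque<>();

    /** Update process: build the new snapshot first, then swap it in atomically. */
    synchronized void update(long version, Map<String, String> data) {
        Snapshot next = new Snapshot(version, Collections.unmodifiableMap(new HashMap<>(data)));
        history.push(current.get());
        current.set(next);
    }

    /** Rollback process: restore the previously active snapshot, if there is one. */
    synchronized boolean rollback() {
        if (history.isEmpty()) {
            return false;
        }
        current.set(history.pop());
        return true;
    }

    /** Readers never lock; they read whichever snapshot is currently active. */
    String get(String key) {
        return current.get().data.get(key);
    }

    long version() {
        return current.get().version;
    }
}
```

In a real deployment the version change would arrive as an event and the data would be loaded from remote storage by the configured loader before the swap; both steps are outside this sketch.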

② Business benefits of vocabulary management

The main advantages of platform-based dictionary management tools in business practice are:

- More flexible service architecture : Transparency of the vocabulary process. The user does not need to pay attention to the circulation process of the vocabulary, and uses a unified API to access.

- Unified business capabilities : Unified version management mechanism, unified storage framework, unified vocabulary format and loader.

- System high availability: rapid recovery and degradation, resource and task isolation, multi-priority processing, and other system guarantees.

4.4 New process of production and research

As mentioned above, due to the large number of advertising business lines and involving many upstream and downstream, after several years of rapid iteration of projects and strategies, the existing business logic has become extremely complex, resulting in some procedural problems in the daily iteration.

① Difficulty in obtaining PM information

When PMs conduct product research and design, the current logic of the modules involved is often unclear to them, and they usually resolve this by consulting R&D personnel offline, which costs both sides efficiency. At the same time, product design documents are written purely from a business perspective and around business processes, which makes it difficult for QA and R&D personnel to see the change points and scope of changes at a glance during each review. A lot of time is then spent communicating to confirm boundaries and compatibility with existing logic.

② The functional evaluation of R&D personnel is completely dependent on experience

When designing a solution, developers cannot easily find out whether horizontally related modules already have similar function points that could be reused or extended, which results in a low reuse rate. Workload estimation also lacks reference standards, so projects are often delayed by inaccurate estimates.

③ QA test and evaluation efficiency is low

QA relies entirely on the technical solutions of R&D (RD) when evaluating the functional scope, and mostly confirms the scope and boundaries of functional changes through oral communication. Besides hurting efficiency, this also causes testing problems that delay the entire project cycle and affect project progress. At the same time, after platformization the basic JAR packages are managed entirely by hand, and there is no unified test standard for some Actions, especially basic Actions. The above problems can be summarized as follows:

4.4.1 Objectives

With the help of platformization, we implement a new process of production and research across the whole project delivery process (as shown in the figure below) to solve the problems that product, R&D, and testing personnel encounter during iteration, empower the business, and improve both the delivery efficiency and the delivery quality of projects.

4.4.2 Thinking and implementation

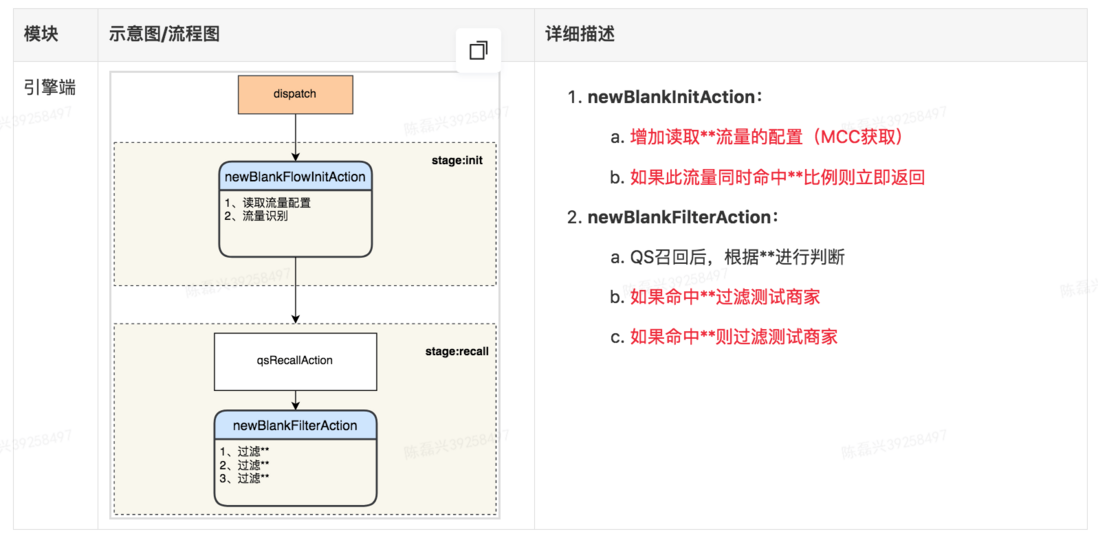

Implementing a new process of production and research based on platformization, that is, using Stage/Action to drive the delivery of the entire project, as shown in the following figure:

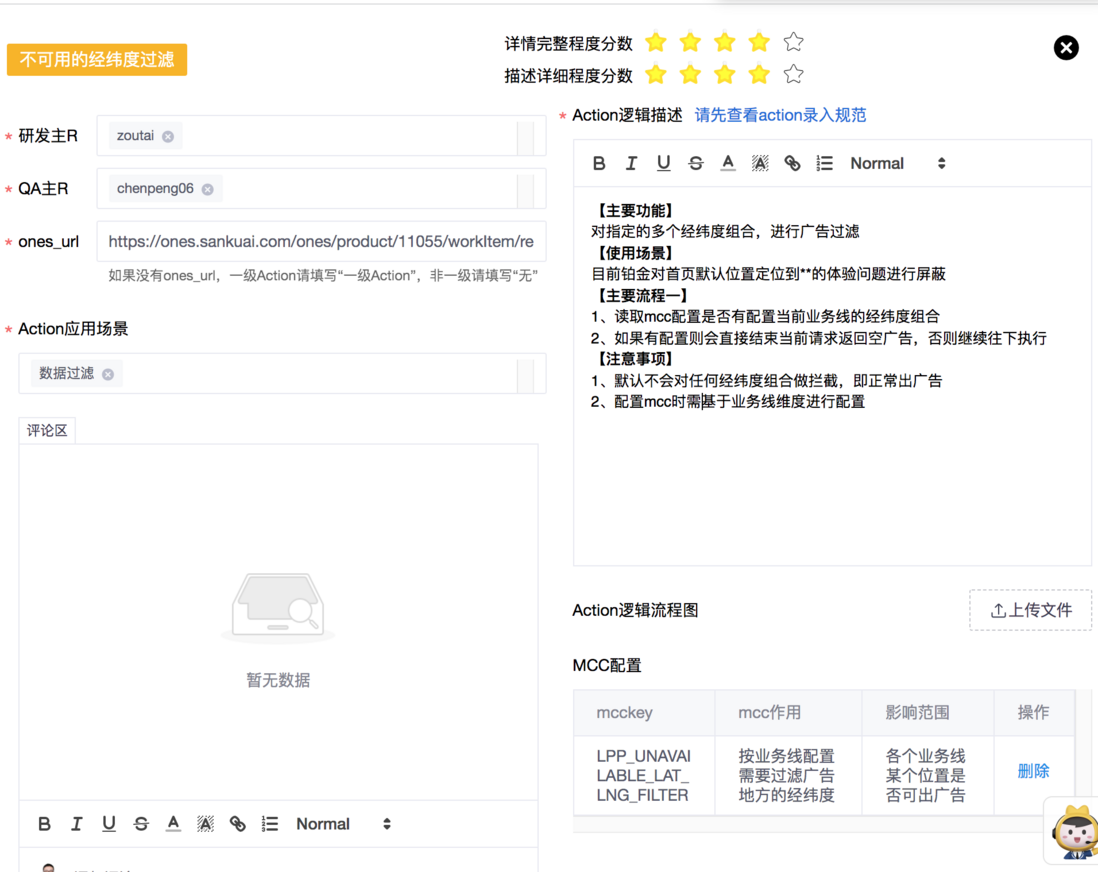

- For PM (Product): build Stage/Action visualization capabilities and apply them in project design.

- For RD (R&D): uniformly adopt the new Stage/Action-based approach to design and develop the scheduling model.

- For QA (Testing): use Stage/Action as the unified communication and collaboration language, and drive improvements to related testing methods and tools.

4.4.2.1 Product side

The following figures show how the production and research capabilities are applied in practice. The first two show the business capability visualization we built, which lets PMs see the latest process and the detailed Action capabilities of each business. The third shows the research and functional description of related businesses in a product design (for data security reasons, the following screenshots use non-real projects as examples).

4.4.2.2 R&D side

According to the different stages of R&D work in the project development cycle, we have formulated the process specifications before and after code development to ensure that R&D students can make full use of the platform's capabilities for design and development during the entire development cycle.

Before development

- Technical design: Based on the existing Action functions involved in each business and the visualization capability of Action DAG, conduct research, reference and reuse evaluation of horizontal businesses, and technical design for adding or changing Action functions.

- Project scheduling: Based on the addition, change, reuse and action level of Action capabilities in technical design, a relatively standardized evaluation of the development workload is carried out.

After development

- Action consolidation: the system reports and regularly evaluates the reuse and extension of the platform's Action capabilities.

- Process feedback: Track each project based on the platform, quantitatively report the relevant indicators in the delivery process, and collect feedback from project personnel.

4.4.2.3 Test side

- Use Stage/Action as the unified communication and collaboration language: in project steps such as requirements design and review, solution design and review, and test case writing and review, Stage/Action is used as the language for describing and designing functions. This surfaces problems as early as possible and makes the changes and test content clearer to every participant, which helps QA evaluate the test scope and better guarantees project test quality.

- Promote full UT coverage of basic Actions: build unit tests for basic Actions, automatically trigger the unit test pipeline when code is merged, output the unit test success rate and coverage, and evaluate them against a baseline to keep testing efficient and quality sustainable.

- Improve JAR management tooling and automated analysis and testing: all first-level Actions are packaged in the platform JAR. For managing such public JAR packages, we developed dedicated management and maintenance tools. They address the lack of automated unit test coverage when public JARs are upgraded and the inefficiency of manually analyzing and maintaining tests on every JAR version upgrade, and they connect the whole integration test automation process end to end.

5 Effects

① Improvement of production and research efficiency

System Capability Precipitation

- All business lines of food delivery advertising have completed the platform architecture upgrade, and continue to operate and iterate on this architecture.

- There are 50+ basic business capabilities, 140+ module sharing capabilities, and 500+ product line sharing capabilities.

Human efficiency improvement

Improvement of R&D efficiency: after each business line migrated to the platform-based architecture, 20+ large business iterations were delivered, and overall business iteration efficiency improved by 28+% compared with before. The gain is most obvious when onboarding new services, because the same function does not need to be developed again:

- Capabilities have been reused 500+ times, and the capability reuse ratio is 52+%;

- In new service access scenarios, the Action reuse rate is 65+%.

- Improvement of test automation indicators: With the help of JAR automated analysis, integration testing and process coverage construction, the advertising automation test coverage has increased by 15%, the test efficiency has increased by 28%, and the comprehensive automation score has also been significantly improved.

② Improve delivery quality and empower products

- Action-based changes and clear visual business links can help QA more accurately assess the scope of impact. The number of process issues and online issues both show a downward trend, with a drop of about 10%.

- Visualizing system capabilities makes the system more transparent, effectively helps PMs understand existing capabilities during the product research stage, and reduces business consultation and cross-product-line knowledge barriers (see 4.4.2.1 for details).

6 Summary and Outlook

This article introduces the thinking behind and the implementation of the takeaway advertising platform from three aspects: standardization, frameworks, and the new process of production and research. After two years of exploration, construction, and practice, the platformization of Meituan's takeaway advertising has begun to take shape and has effectively supported the rapid iteration of multiple business lines.

In the future, platformization will refine the standardization efforts, reduce the cost of business development, deepen the framework capabilities, and continue to improve in terms of stability, performance, and ease of use. In addition, we will continue to optimize the user experience, improve the operation mechanism, and continuously improve the iterative process of production and research in the direction of new production and research processes.

The above is some exploration and practice of takeaway advertising for business platformization, and exploration in other fields such as advertising engineering architecture. Please look forward to the next series of articles.

7 About the Author

Le Bin, Guoliang, Yulong, Wu Liang, Leixing, Wang Kun, Liu Yan, Siyuan, etc. are all from the Meituan Takeaway Advertising Technology Team.

Job Offers

Meituan's takeaway advertising technology team is continuously recruiting a large number of positions. We are looking for advertising background/algorithm development engineers and experts, located in Beijing. Interested students are welcome to join us. You can submit your resume to: yangguoliang@meituan.com (please specify the subject of the email: Meituan Takeaway Advertising Technology Team)

