Question

Whenever you use a cache, the same data is stored in two places and written twice: once to the cache and once to the database. Whenever there are double writes, there can be data consistency problems. So how do you solve them?

Analysis

First, some background. In theory there are two approaches. One is to guarantee strong consistency, which inevitably costs a great deal of performance. The other is to accept eventual consistency in exchange for performance: the data may be inconsistent for a short time, but it converges to a consistent state in the end.

How does the consistency problem arise?

For the read process:

- First, read the cache;

- If there is no value in the cache, read the value from the database;

- Then write this value into the cache.

Double-update mode: careless write ordering causes consistency problems

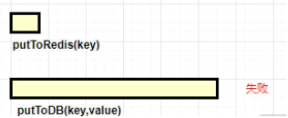

Let's look at a common but incorrect way to write it:

```java
public void putValue(String key, String value) {
    // Save to Redis
    putToRedis(key, value);
    // Save to MySQL
    putToDB(key, value); // suppose this operation fails
}
```

Here, to update a value we first write it to the cache and then update the database. But the database update may fail and roll back, so in the end the data in the cache and the data in the database differ: a data consistency problem.

You might ask: what if I update the database first and then update the cache?

```java
public void putValue(String key, String value) {
    // Save to MySQL
    putToDB(key, value);
    // Save to Redis
    putToRedis(key, value);
}
```

This is still problematic.

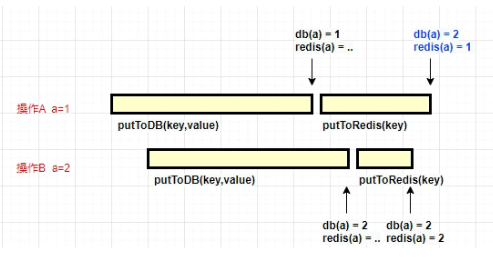

Consider this scenario: operation A sets a to 1, and operation B sets a to 2. Because the database write and the Redis write are not performed atomically, their relative timing cannot be controlled. When the two requests interleave in the wrong order, the cache becomes inconsistent.

Concretely, as shown in the figure above: operation A updates the database successfully and then goes to update Redis, but before A's Redis update runs, operation B completes both of its writes. A's Redis update then overwrites B's value, so Redis holds 1 while the database holds 2.
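To make that interleaving concrete, here is a minimal deterministic replay. It is a sketch under stated assumptions: two `HashMap`s are hypothetical stand-ins for MySQL and Redis, and the steps are executed by hand in the problematic order instead of on real threads.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Objects;

// Replays the "update DB, then update cache" race step by step.
// The two HashMaps are hypothetical stand-ins for MySQL and Redis, not real clients.
class DoubleUpdateRace {
    final Map<String, Integer> db = new HashMap<>();
    final Map<String, Integer> cache = new HashMap<>();

    void replay() {
        db.put("a", 1);    // A: database update succeeds (a = 1)
        db.put("a", 2);    // B: database update (a = 2)
        cache.put("a", 2); // B: Redis update (a = 2)
        cache.put("a", 1); // A: its delayed Redis update finally runs, writing the stale 1
    }

    boolean consistent(String key) {
        return Objects.equals(db.get(key), cache.get(key));
    }
}
```

After `replay()`, the database holds 2 and the cache holds 1, and nothing in this write path will ever correct it.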

So what should we do? Simply change "update the cache" to "delete the cache".

Why delete the cache instead of updating it?

- From a business perspective

The reason is simple. In complex caching scenarios, a cached value is often not just a value read directly from the database. For example, when a field in one table is updated, computing the new cached value may require querying two other tables and combining the results.

- From a cost-effectiveness perspective

Updating the cache can be expensive. If you recompute the cache on every write, you need to ask whether it is actually read that often.

For example, if the table fields behind a cached value change 20 or 100 times in one minute, the cache is recomputed 20 or 100 times; but if the cache is read only once in that minute, most of that work just produces cold data. If you simply delete the cache instead, it is recomputed only once in that minute, on the next read, and the overhead drops sharply. The cache is computed only when it is actually used.
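The arithmetic in that example can be checked with a small counter. This is an illustrative sketch, not a benchmark: it assumes each recomputation has the same cost and models the workload as a string of operations, where `'W'` is a write to the underlying table and `'R'` is a read of the cached value.

```java
// Counts how often an expensive cached value is recomputed for a sequence of operations
// ('W' = write to the underlying table, 'R' = read of the cached value).
class RecomputeCost {
    static int recomputations(String ops, boolean deleteOnWrite) {
        int count = 0;
        boolean cached = false; // whether a fresh value is currently in the cache
        for (char op : ops.toCharArray()) {
            if (op == 'W') {
                if (deleteOnWrite) {
                    cached = false; // delete: just invalidate, no computation
                } else {
                    count++;        // update: recompute eagerly on every write
                    cached = true;
                }
            } else if (op == 'R' && !cached) {
                count++;            // miss: compute lazily on the first read after invalidation
                cached = true;
            }
        }
        return count;
    }
}
```

For 100 writes followed by one read (`"W".repeat(100) + "R"`), the update strategy recomputes 100 times and the delete strategy recomputes once.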

"Delete the cache afterwards" resolves most inconsistencies

This works because on every read, if Redis has no value, the database is read again, and that logic is sound.

The only question is: should we delete the cache before or after updating the database?

The answer is to delete the cache afterwards.

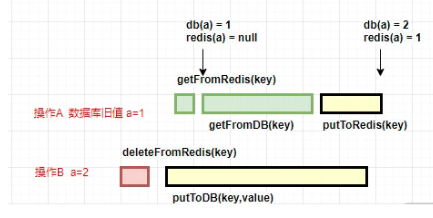

1. If you delete the cache first

Let's see what's wrong with deleting the cache first:

```java
public void putValue(String key, String value) {
    // Delete the Redis entry
    deleteFromRedis(key);
    // Save to the database
    putToDB(key, value);
}
```

As the figure above shows, operation B deletes the cached value of a key. At that moment another request A arrives, misses the cache, falls through to the database, reads the old value (B has not committed yet), and writes it back to Redis. From then on, the cache keeps the old value even after B's database update finishes, so the data is inconsistent.
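The same kind of hand-ordered replay shows why deleting first is fragile. As before, the maps are hypothetical stand-ins for the database and Redis, and the steps follow the interleaving in the figure: B deletes, A misses and backfills the old value, then B's database write commits.

```java
import java.util.HashMap;
import java.util.Map;

// Replays the "delete cache first, then update DB" race with in-memory stand-ins.
class DeleteFirstRace {
    final Map<String, String> db = new HashMap<>();
    final Map<String, String> cache = new HashMap<>();

    DeleteFirstRace() {
        db.put("a", "old");
        cache.put("a", "old");
    }

    void replay() {
        cache.remove("a");         // B: delete the cache first
        String v = cache.get("a"); // A: read the cache -> miss
        if (v == null) {
            v = db.get("a");       // A: fall through to the DB; B has not committed yet -> "old"
            cache.put("a", v);     // A: backfill the cache with the old value
        }
        db.put("a", "new");        // B: the DB update finally commits
    }
}
```

After `replay()`, the database says `"new"` while the cache keeps serving `"old"` until the entry expires or is deleted again.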

2. If you delete the cache afterwards

Putting the delete after the database update means that, in the common case, reads after the write miss the cache and load the latest value.

```java
public void putValue(String key, String value) {
    // Save to the database
    putToDB(key, value);
    // Delete the Redis entry
    deleteFromRedis(key);
}
```

This is what is usually called the Cache-Aside Pattern, and it is the pattern we use most often. Let's see how it works.

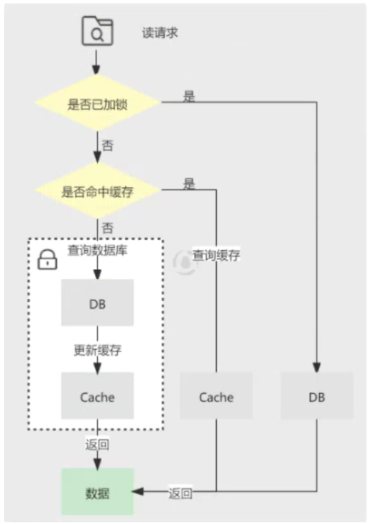

First, the read path. The rule is "read the cache first, then the DB". The steps are:

- Every read goes to the cache first;

- If the value is found, return it directly; this is a cache hit;

- If the value is not in the cache (a cache miss), fetch it from the DB;

- Put the fetched value into the cache so the next read hits it directly.

Next, the write path. The rule is "update the DB first, then delete the cache". The steps are:

- Write the change to the database;

- Delete the corresponding data in the cache.
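Putting both paths together, a minimal in-memory sketch of Cache-Aside might look like this. The two `ConcurrentHashMap`s are hypothetical stand-ins for Redis and the database; a real implementation would call their clients instead, and would typically also set an expiration time on cached entries.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Minimal Cache-Aside sketch; the maps stand in for Redis and the database.
class CacheAside<K, V> {
    private final Map<K, V> cache = new ConcurrentHashMap<>();
    private final Map<K, V> db = new ConcurrentHashMap<>();

    // Read path: cache first, then the DB on a miss, then backfill.
    V get(K key) {
        V value = cache.get(key);
        if (value != null) {
            return value;          // cache hit
        }
        value = db.get(key);       // cache miss: load from the DB
        if (value != null) {
            cache.put(key, value); // backfill so the next read hits
        }
        return value;
    }

    // Write path: update the DB first, then delete (not update) the cache entry.
    void put(K key, V value) {
        db.put(key, value);
        cache.remove(key);
    }

    boolean isCached(K key) {
        return cache.containsKey(key);
    }
}
```

A write leaves the key uncached; the first read after it repopulates the cache from the database.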

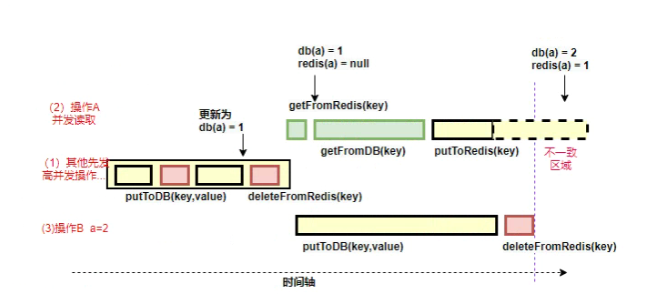

Under big-company concurrency, "delete the cache afterwards" can still be inconsistent

Does this approach have no concurrency problems at all? No. Suppose there are two requests: request A performs a query and request B performs an update. The following sequence can occur:

1. The cache entry has just expired;

2. Request A queries the database and gets the old value;

3. Request B writes the new value to the database;

4. Request B deletes the cache;

5. Request A writes the old value it read into the cache.

If this happens, dirty data does end up in the cache.
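The five steps can be replayed in order to confirm the outcome. This is a sketch with hypothetical in-memory maps for the database and Redis; the cache starts empty to model step 1 (the entry has just expired).

```java
import java.util.HashMap;
import java.util.Map;

// Replays the Cache-Aside race (update DB, then delete cache) in the order listed above.
class CacheAsideRace {
    final Map<String, String> db = new HashMap<>(Map.of("a", "old"));
    final Map<String, String> cache = new HashMap<>(); // step 1: the cache entry has just expired

    void replay() {
        String readByA = db.get("a"); // step 2: A reads the old value from the DB
        db.put("a", "new");           // step 3: B writes the new value to the DB
        cache.remove("a");            // step 4: B deletes the cache (nothing there yet)
        cache.put("a", readByA);      // step 5: A writes the old value it read into the cache
    }
}
```

Because step 4 runs before step 5, B's delete has nothing to remove, and A's stale backfill survives.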

But how likely is this?

This sequence has a precondition: the database write in step 3 must take less time than the database read in step 2, so that step 4 can happen before step 5. But database reads are usually much faster than writes, so it is hard for step 3 to finish before step 2, and this situation rarely occurs.

Reads are generally faster than writes, but we have to consider two extreme cases:

- Read A starts near the end of update B. (For example, the write takes 1 s and the read takes 100 ms. If the read starts when the write is at 800 ms, it ends at 900 ms, so the read finishes only 100 ms ahead of the write.)

- A's Redis write takes a long time, for example because its node happens to hit a Stop-The-World (STW) pause. (In Java, STW means that while the garbage collection algorithm runs, all other threads of the application are suspended except the garbage collector. It is a global pause: all Java code stops; native code can keep running but cannot interact with the JVM. These pauses are mostly caused by GC.)

In either case, read A can easily finish after B's cache delete.

This scenario requires not only an expired cache entry and concurrent reads and writes, but also that the read queries the database before the write updates it, and that the read finishes after the write (including its cache delete). These conditions are strict, and ordinary businesses rarely reach the traffic where they matter, so most companies do not handle this case; in high-concurrency businesses, however, it is common.

What about read-write separation, where reads go to a replica? The same problem occurs with the following sequence:

- Request A updates the primary database

- Request A deletes the cache

- Request B queries the cache, misses, and reads the old value from the replica

- Replication to the replica completes

- Request B writes the old value to the cache

If primary-replica replication is slow, data inconsistency occurs here too. This is inherent: we are operating two separate systems, and under high concurrency it is hard to guarantee the execution order of multiple requests; even where we can, it may cost a lot of performance.
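Replaying the read-write separation sequence shows the same effect. In this sketch the three maps are hypothetical stand-ins for the primary, a lagging replica, and Redis, and replication is modeled as a single `putAll` that happens at the moment the list says it completes.

```java
import java.util.HashMap;
import java.util.Map;

// Replays the read-write separation race with in-memory stand-ins.
class ReplicaLagRace {
    final Map<String, String> primary = new HashMap<>(Map.of("a", "old"));
    final Map<String, String> replica = new HashMap<>(Map.of("a", "old"));
    final Map<String, String> cache = new HashMap<>(Map.of("a", "old"));

    void replay() {
        primary.put("a", "new");         // A: update the primary
        cache.remove("a");               // A: delete the cache
        String readByB = cache.get("a"); // B: cache miss (null)
        if (readByB == null) {
            readByB = replica.get("a");  // B: the replica has not caught up -> "old"
        }
        replica.putAll(primary);         // replication to the replica completes
        cache.put("a", readByB);         // B: writes the old value into the cache
    }
}
```

Both databases end up with `"new"`, but the cache holds `"old"`.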

Locking?

You can take a lock in write requests so that "update database & delete cache" runs serially as one atomic unit (and likewise lock the cache backfill in read requests). Locking inevitably reduces throughput, so expect a performance cost if you choose this scheme.
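A minimal sketch of this idea, assuming a single JVM: a per-key `ReentrantLock` makes "update DB + delete cache" and "read DB + backfill cache" mutually exclusive, so a stale backfill can no longer land after the delete. With several application instances, an in-process lock is not enough and a distributed lock would be needed instead; that part is not shown. The maps are hypothetical stand-ins for the database and Redis.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.locks.ReentrantLock;

// Single-JVM sketch: a per-key lock serializes the write path against the read-miss path.
class LockedCacheAside {
    private final Map<String, String> db = new ConcurrentHashMap<>();
    private final Map<String, String> cache = new ConcurrentHashMap<>();
    private final Map<String, ReentrantLock> locks = new ConcurrentHashMap<>();

    private ReentrantLock lockFor(String key) {
        return locks.computeIfAbsent(key, k -> new ReentrantLock());
    }

    void put(String key, String value) {
        ReentrantLock lock = lockFor(key);
        lock.lock();
        try {
            db.put(key, value); // update the database...
            cache.remove(key);  // ...and delete the cache as one serialized unit
        } finally {
            lock.unlock();
        }
    }

    String get(String key) {
        String value = cache.get(key);
        if (value != null) {
            return value;                // hit: no lock needed
        }
        ReentrantLock lock = lockFor(key);
        lock.lock();
        try {
            value = cache.get(key);      // re-check: another reader may have backfilled
            if (value == null) {
                value = db.get(key);     // read the DB and backfill under the same lock,
                if (value != null) {     // so no write can slip in between
                    cache.put(key, value);
                }
            }
            return value;
        } finally {
            lock.unlock();
        }
    }

    boolean isCached(String key) {
        return cache.containsKey(key);
    }
}
```

Cache hits stay lock-free; only misses and writes pay for the lock, which limits the throughput loss mentioned above.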

How do we solve inconsistency under high concurrency?

Looking back at the scenarios where this inconsistency occurs, the root cause is that the cache "delete" happens before the read request's stale "cache write".

Delayed double deletion

If we had a mechanism guaranteeing that a delete runs after that stale write, the problem would be solved, or at least the window of inconsistency would be narrowed.

The common method is delayed double deletion: still update first and then delete; the only difference is that we run the delete again shortly afterwards, for example 5 seconds later.

```java
public void putValue(String key, String value) {
    putToDB(key, value);
    deleteFromRedis(key);
    // Run the delete again a few seconds later (5 seconds here)
    deleteFromRedis(key, 5);
}
```

The delay should be the time the read path's business logic takes to read the data, plus a few hundred milliseconds. This ensures the read request has finished, so the second delete removes any dirty data the read request may have written to the cache.

This solution works reasonably well: dirty data can exist only during the delay window, which most businesses can accept.
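One way to implement the second, delayed delete in Java is a `ScheduledExecutorService`. This is a sketch under assumptions: the maps stand in for the database and Redis, `delayMillis` is the "read-path time plus a few hundred milliseconds" chosen by the caller, and a scheduled delete that has not run yet is lost if the JVM dies.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

// Delayed double deletion: delete immediately, then schedule a second delete.
class DelayedDoubleDelete {
    final Map<String, String> db = new ConcurrentHashMap<>();
    final Map<String, String> cache = new ConcurrentHashMap<>();
    private final ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
    private final long delayMillis;

    DelayedDoubleDelete(long delayMillis) {
        this.delayMillis = delayMillis;
    }

    void putValue(String key, String value) {
        db.put(key, value);
        cache.remove(key); // first delete, right after the DB update
        // Second delete: removes a stale value a slow reader may have backfilled meanwhile.
        scheduler.schedule(() -> { cache.remove(key); }, delayMillis, TimeUnit.MILLISECONDS);
    }

    // Already-scheduled delayed tasks still run after shutdown() by default.
    boolean shutdownAndWait() {
        scheduler.shutdown();
        try {
            return scheduler.awaitTermination(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }
}
```

If a slow reader writes the old value into the cache after the first delete but before the delay elapses, the scheduled delete clears it.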

So far, however, we have not considered that the database operation or the cache operation can itself fail, which does happen in practice.

Briefly: if the database update fails, it does not really matter, because the database and the cache both still hold the old data, so they are consistent. But what if deleting the cache fails? Then the data is indeed inconsistent. Besides the fallback of setting a cache expiration time, if we want the cache to be deleted as promptly as possible, we must retry the delete.

Cache deletion retry mechanism

You can of course retry the delete directly in code, but if the failure was caused by the network, an immediate retry is likely to fail as well, so you may need to wait between retries, for example a few hundred milliseconds or even seconds. To avoid blocking the main flow, you may hand the retries off to an asynchronous thread.
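A sketch of retrying with a growing wait, run off the caller's thread via `CompletableFuture.supplyAsync`. The `deleteAttempt` supplier is a hypothetical hook standing in for a real cache delete that returns `false` on failure, and the linear backoff (`backoffMillis * attempt`) is one illustrative choice among many.

```java
import java.util.concurrent.CompletableFuture;
import java.util.function.BooleanSupplier;

// Retries a cache delete with linear backoff, optionally on a background thread.
class DeleteRetry {
    // Tries up to maxAttempts times, sleeping backoffMillis * attempt between tries.
    static boolean deleteWithRetry(BooleanSupplier deleteAttempt, int maxAttempts, long backoffMillis) {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            if (deleteAttempt.getAsBoolean()) {
                return true;
            }
            if (attempt < maxAttempts) {
                try {
                    Thread.sleep(backoffMillis * attempt); // wait longer after each failure
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return false;
                }
            }
        }
        return false;
    }

    // Runs the retry loop asynchronously so the main request path is not blocked.
    static CompletableFuture<Boolean> deleteAsync(BooleanSupplier deleteAttempt, int maxAttempts, long backoffMillis) {
        return CompletableFuture.supplyAsync(() -> deleteWithRetry(deleteAttempt, maxAttempts, backoffMillis));
    }
}
```

`supplyAsync` without an executor uses the common pool, whose threads do not survive a JVM crash, so pending retries are lost in that case.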

There are several options for where the retries run:

- If you run them on a separate thread, pending deletes are lost if the JVM process dies;

- If you put them in an MQ, the code becomes more complex.

So there is no one-size-fits-all solution. The design has to weigh many factors, such as the team's skill level, the schedule, and how much inconsistency the business can tolerate.

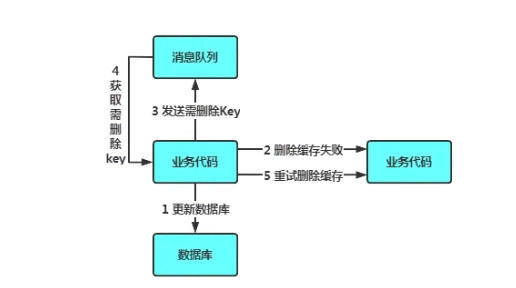

Asynchronous optimization method: message queue

- The write request updates the database

- Deleting the cache fails for some reason

- The key that failed to be deleted is put into the message queue

- A consumer reads the message and gets the key to delete

- The consumer retries deleting the cache
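The five steps can be sketched with an in-process `LinkedBlockingQueue` standing in for the message queue. This is an assumption for illustration only: a real system would publish to a broker so retries survive a process restart. The `failuresRemaining` counter is a hypothetical way to simulate a temporary cache outage.

```java
import java.util.Map;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.LinkedBlockingQueue;

// Write path plus consumer for MQ-based delete retries, with in-memory stand-ins.
class MqDeleteRetry {
    final Map<String, String> db = new ConcurrentHashMap<>();
    final Map<String, String> cache = new ConcurrentHashMap<>();
    final BlockingQueue<String> retryQueue = new LinkedBlockingQueue<>(); // stand-in for the MQ
    int failuresRemaining; // simulated outage: the next N delete attempts fail

    MqDeleteRetry(int failuresRemaining) {
        this.failuresRemaining = failuresRemaining;
    }

    boolean tryDelete(String key) {
        if (failuresRemaining > 0) {
            failuresRemaining--;
            return false;
        }
        cache.remove(key);
        return true;
    }

    void write(String key, String value) {
        db.put(key, value);         // 1. update the database
        if (!tryDelete(key)) {      // 2. the cache delete fails
            retryQueue.offer(key);  // 3. put the key into the queue
        }
    }

    // 4-5. Consume one key and retry; a key that fails again goes back on the queue.
    boolean consumeOnce() {
        String key = retryQueue.poll();
        if (key == null) {
            return false;
        }
        if (!tryDelete(key)) {
            retryQueue.offer(key);
        }
        return true;
    }
}
```

With two simulated failures, the first delete in `write` and the first consumer retry fail, and the second consumer retry removes the stale entry.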

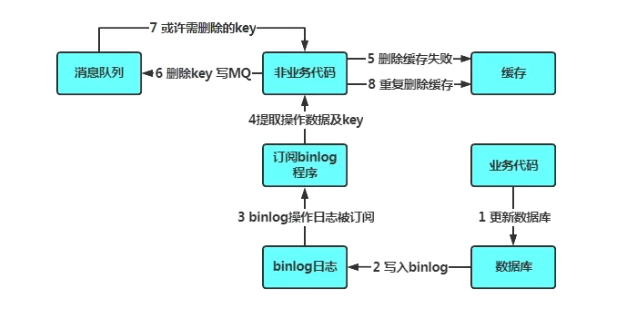

Asynchronous optimization method: synchronization based on binlog subscription

What about the read-write separation scenario? Primary-replica replication in the database (taking MySQL as an example) works by shipping the binlog, so we can also subscribe to the binlog (using middleware such as canal), extract the cache entries to delete, write them into the message queue as messages, and let a consumer process and retry them at its own pace.

- Update the database

- The database writes the change to the binlog

- The subscriber extracts the relevant data and key

- A separate, non-business program receives this information

- It tries to delete the cache, and the delete fails

- It sends this information to the message queue

- The data is read back from the message queue and the delete is retried.
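The non-business side of this flow can be sketched as follows. Everything here is hypothetical: `BinlogEvent` is an assumed minimal event shape (canal's real client types are different and are not modeled), the `"table:id"` key convention is an assumption, the `deleteFromCache` predicate stands in for a real cache call, and an `ArrayDeque` stands in for the message queue.

```java
import java.util.ArrayDeque;
import java.util.Queue;
import java.util.function.Predicate;

// Hypothetical minimal shape of a row-change event extracted from the binlog.
final class BinlogEvent {
    final String table;
    final String primaryKey;

    BinlogEvent(String table, String primaryKey) {
        this.table = table;
        this.primaryKey = primaryKey;
    }
}

// Non-business consumer: turns binlog events into cache deletes and queues failures.
class BinlogCacheInvalidator {
    private final Predicate<String> deleteFromCache;     // hypothetical cache call; false = failed
    final Queue<String> retryQueue = new ArrayDeque<>(); // in-process stand-in for the MQ

    BinlogCacheInvalidator(Predicate<String> deleteFromCache) {
        this.deleteFromCache = deleteFromCache;
    }

    // Assumed key convention "table:id"; use whatever scheme the cache actually uses.
    static String cacheKeyFor(BinlogEvent event) {
        return event.table + ":" + event.primaryKey;
    }

    // Extract the key, try the delete, and queue the key if the delete fails.
    void onEvent(BinlogEvent event) {
        String key = cacheKeyFor(event);
        if (!deleteFromCache.test(key)) {
            retryQueue.offer(key);
        }
    }

    // Retry every queued key once; keys that fail again go back on the queue.
    int retryPending() {
        int deleted = 0;
        int pending = retryQueue.size();
        for (int i = 0; i < pending; i++) {
            String key = retryQueue.poll();
            if (deleteFromCache.test(key)) {
                deleted++;
            } else {
                retryQueue.offer(key);
            }
        }
        return deleted;
    }
}
```

The business write path no longer touches the cache at all; invalidation is driven entirely by the database's own change log.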

Summary

We have covered a lot about cache consistency with Redis. As you can see, whatever you do, consistency issues never disappear entirely; each approach only makes them less likely.

The lower the tolerance for inconsistency and the higher the concurrency, the more extreme the approaches become. Normally, if you need delayed double deletion, your concurrency is already high; beyond that point, everything is a trade-off between high availability, cost, and consistency, and you are in special-case territory. Once infrastructure is factored in, every company's strategy differs.

Besides the Cache-Aside Pattern, common consistency patterns include Read-Through, Write-Through, and Write-Behind. Each has its own use cases and is worth exploring further.

