Due to project requirements, the author recently deployed an ElasticSearch cluster on a Linux server, but found that query speed often dropped suddenly while it was running. After logging in to the server, it turned out that physical memory was insufficient, which caused frequent page swapping. Since the extra memory was only needed temporarily, there was no need to upgrade the hardware, and the data stored in ElasticSearch is mainly text, so the author decided to use ZRAM to compress memory and avoid the performance fluctuations caused by disk I/O. The effect was obvious. Since there are few articles about configuring ZRAM on Linux, this article walks through configuring and using ZRAM on Linux, and takes the opportunity to introduce how ZRAM and Linux memory management work.

This article was originally published on the author's blog and was synchronized to SegmentFault by the author. When reprinting, please credit the original author's blog address or this link. Thank you!

0x01 ZRAM introduction

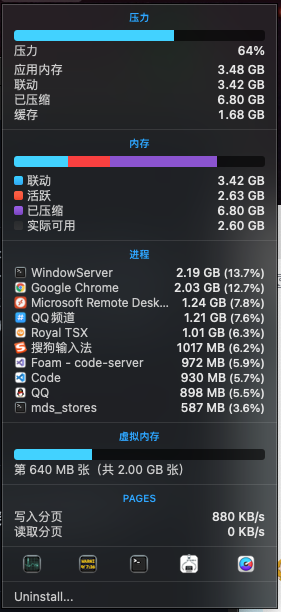

As modern applications become more diverse and complex, the era when 640KB of memory was enough to run all the software on the market is long gone, and users' demand for multitasking is growing even faster than the applications themselves. Today's mainstream operating systems provide memory compression so that active applications have as much available memory as possible:

macOS has enabled memory compression by default since OS X 10.9

Windows has enabled memory compression by default since Win10 TH2

Most Android phone manufacturers enable memory compression by default

Careful readers will notice that memory compression is not explicitly labeled in the Android diagram. This is because memory compression on Android (Linux) is implemented through the Swap mechanism, and in most cases ZRAM is used as the Swap device.

ZRAM was merged into the mainline kernel with Linux 3.14 in 2014. However, because Linux serves such a wide range of uses, the technology is not enabled by default. Only Android and a few desktop distributions such as Fedora enable it by default, to provide sensible tiered memory storage in multitasking scenarios.

0x02 ZRAM operating mechanism

ZRAM works by carving out a region of memory as a virtual block device (think of it as an in-memory file system with transparent compression). When the system runs low on memory and needs to swap, the pages that would be swapped out are compressed and kept in memory instead. Because these "swapped-out" pages are compressed, more physical memory becomes available.

Since ZRAM does not change the basic structure of the Linux memory model, we simply use Linux's Swap priority mechanism and treat ZRAM as a high-priority Swap device. This also explains why Swap shows up on mobile phones with fragile flash storage: underneath, it is still ZRAM.

As some phones begin to ship with real solid-state storage, some also place Swap on that storage, but ZRAM is generally still preferred.

Thanks to this mechanism, ZRAM can be kept very simple: the swap policy is left to the kernel and the compression algorithm to the compression library. ZRAM itself basically only needs to implement a block device driver, which makes it highly customizable and flexible, something systems such as Windows and macOS cannot match.

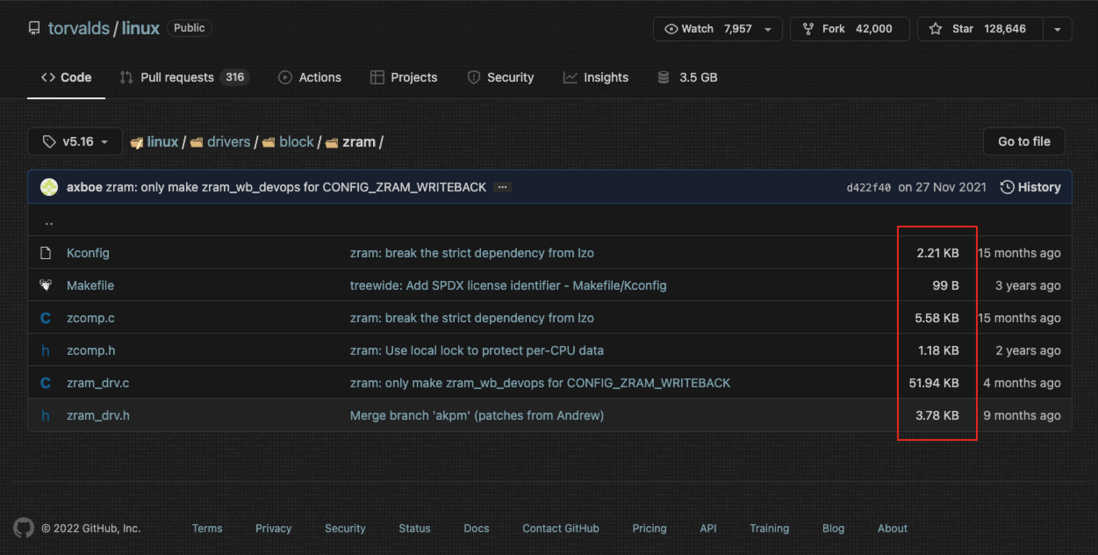

The ZRAM source code in Linux 5.16 is less than 100KB, and the implementation is very lean. Readers interested in Linux driver development can start their research here: linux/Kconfig at v5.16 · torvalds/linux

0x03 ZRAM configuration and self-start

There is very little information about ZRAM on the Internet, so the author plans to start the analysis from the relevant source code and official documents of ZRAM in the Linux mainline.

1. Confirm that the kernel supports ZRAM and whether it is enabled

Since ZRAM is a kernel module, it is necessary to first check whether this module exists in the kernel of the current Linux machine.

Before configuring, readers need to confirm that their kernel version is 3.14 or later. Some VPS providers still use virtualization or container technologies such as Xen and OpenVZ, where the kernel version is often stuck at 2.6, so ZRAM cannot be enabled on such machines. The example machine used here runs Linux kernel 5.10, so ZRAM can be enabled:

However, judging by the kernel version alone is not reliable. CentOS 7, for example, ships kernel 3.10 but still supports ZRAM, while a few distributions and embedded Linux builds choose not to compile ZRAM in order to reduce resource consumption. It is therefore better to use the modinfo command to check whether ZRAM is supported:

As shown in the figure, although this server runs CentOS 7.8 with kernel version 3.10, it supports ZRAM, so checking with modinfo is more reliable.

Some distributions load ZRAM by default but do not configure it. We can use lsmod to check whether ZRAM is loaded:

lsmod | grep zram

2. Enable the ZRAM kernel module

If ZRAM is not loaded, create a new file /etc/modules-load.d/zram.conf containing the single line zram. Restart the machine and run lsmod | grep zram. When you see output like the figure below, ZRAM is loaded and will be loaded automatically at boot:

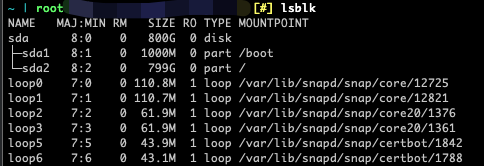

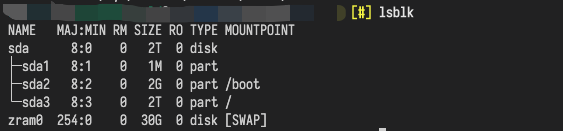

We mentioned above that ZRAM is essentially a block device driver, so what happens when we run lsblk?

No zram device shows up, because we need to create a block device first.

Open the Kconfig file in the ZRAM source code and you will find the following description:

Creates virtual block devices called /dev/zramX (X = 0, 1, ...). Pages written to these disks are compressed and stored in memory itself. These disks allow very fast I/O and compression provides good amounts of memory savings.

It has several use cases, for example: /tmp storage, use as swap disks and maybe many more.

See Documentation/admin-guide/blockdev/zram.rst for more information.

It points to another document, Documentation/admin-guide/blockdev/zram.rst.

According to the documentation, we can run modprobe zram num_devices=1 so that the kernel creates one ZRAM device when the module is loaded (usually one is enough). However, this setting is lost after a reboot, which is inconvenient.

Fortunately, the modprobe documentation mentions modprobe.d: modprobe.d(5) - Linux manual page.

Reading further in the modprobe.d documentation, we find that it is mainly used to predefine parameters for modprobe: we only need to run modprobe zram, and the parameters configured in modprobe.d are added automatically. Since we already use modules-load.d to load the ZRAM module at boot, we only need to configure the parameters in modprobe.d.

Following that document, create a new file /etc/modprobe.d/zram.conf containing options zram num_devices=1 to configure one ZRAM block device. The setting persists across reboots.
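The two files from this section can be written in one go. The following is only a sketch: `ROOT` is a hypothetical variable introduced for this example, and it defaults to a local staging directory so that nothing on the real system is modified. Copying the result into place (or running with `ROOT=/` as root) is left to the reader.

```shell
#!/bin/sh
# Sketch: write the two zram config files under a staging root.
# ROOT is a hypothetical variable for this example; it defaults to a local
# directory so nothing on the real system is touched.
ROOT="${ROOT:-./zram-staging}"

mkdir -p "$ROOT/etc/modules-load.d" "$ROOT/etc/modprobe.d"

# Load the zram module at boot (this directory is read by modules-load.d).
printf 'zram\n' > "$ROOT/etc/modules-load.d/zram.conf"

# Default parameters applied whenever the module is loaded.
printf 'options zram num_devices=1\n' > "$ROOT/etc/modprobe.d/zram.conf"

# Show what was written.
cat "$ROOT/etc/modules-load.d/zram.conf" "$ROOT/etc/modprobe.d/zram.conf"
```

Reviewing the staged files before copying them under /etc keeps a typo from affecting the next boot.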

3. Configure the zram0 device

After restarting, run lsblk, only to find that the ZRAM device still does not appear.

Don't worry. This is because the device has not been given a size yet, and lsblk does not list empty devices by default. Although lsblk sounds like it simply lists block devices, it actually reads the information in the /sys directory and combines it with udev device information.

Read the udev documentation: udev(7) - Linux manual page, which mentions that udev reads rules from the /etc/udev/rules.d directory. Following the documentation, we create a new file named /etc/udev/rules.d/99-zram.rules with the following content:

KERNEL=="zram0",ATTR{disksize}="30G",TAG+="systemd"

Here, the KERNEL key selects the specific device, and the ATTR key passes parameters to the device. At this point we need the zram documentation, which says:

Set disk size by writing the value to sysfs node 'disksize'. The value can be either in bytes or you can use mem suffixes. Examples:

# Initialize /dev/zram0 with 50MB disksize

echo $((50*1024*1024)) > /sys/block/zram0/disksize

# Using mem suffixes

echo 256K > /sys/block/zram0/disksize

echo 512M > /sys/block/zram0/disksize

echo 1G > /sys/block/zram0/disksize

Note: There is little point creating a zram of greater than twice the size of memory since we expect a 2:1 compression ratio. Note that zram uses about 0.1% of the size of the disk when not in use so a huge zram is wasteful.

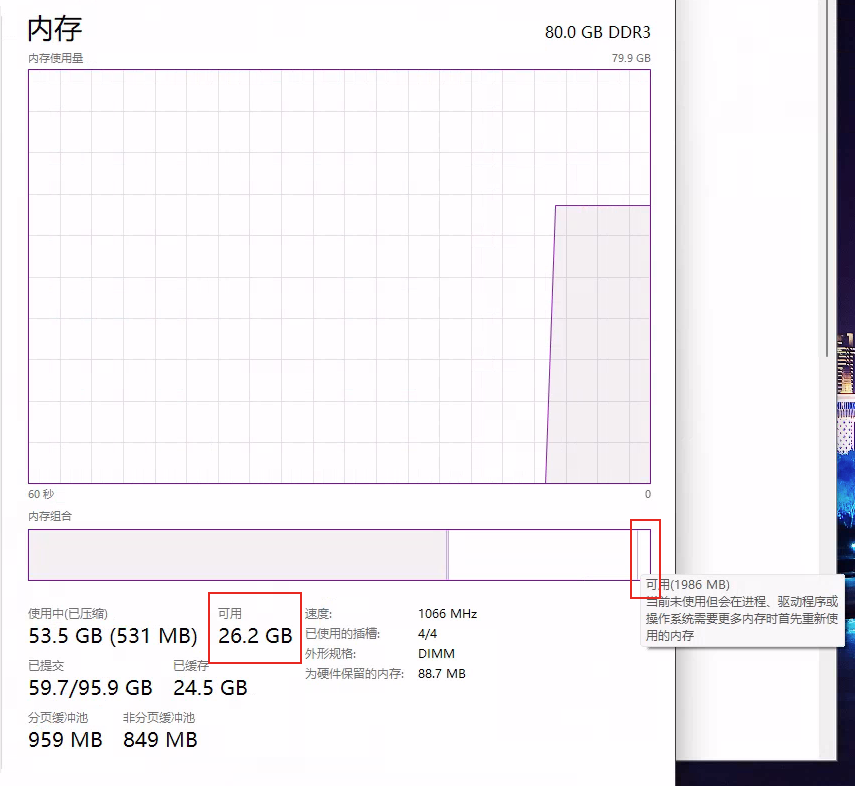

In other words, the block device accepts a parameter named disksize, and allocating more than twice the memory capacity to ZRAM is not recommended. The author's Linux environment has 60G of memory. Given actual usage, 30G of ZRAM was configured, which ideally yields 60G - (30G / 2) + 30G = 75G of memory space at the expected 2:1 ratio (and more if the data compresses better), which is enough. (Why this calculation? See the "ZRAM monitoring" chapter.) Readers can choose the ZRAM size according to their own situation. Generally, start small and expand if it is not enough.
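The sizing arithmetic can be captured in a small helper for trying out other values. This is a minimal sketch: the function name `effective_memory` is made up for illustration, and it assumes the whole ZRAM device fills up and compresses at exactly the given ratio.

```shell
#!/bin/sh
# effective_memory RAM_GIB ZRAM_GIB RATIO
# RAM left after a full zram device is stored compressed, plus the
# uncompressed data that the device holds: ram - zram/ratio + zram.
effective_memory() {
    awk -v ram="$1" -v zram="$2" -v ratio="$3" \
        'BEGIN { printf "%g\n", ram - zram / ratio + zram }'
}

# The author's setup: 60G of RAM, 30G of ZRAM, expected 2:1 compression.
effective_memory 60 30 2
```

Raising the third argument shows how a better compression ratio increases the usable total without changing disksize.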

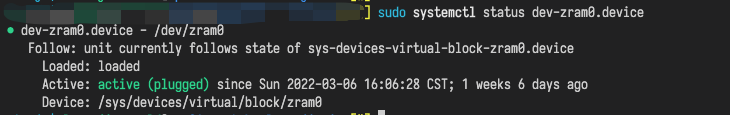

The TAG attribute marks the device type (who manages the device). According to the systemd.device documentation: systemd.device(5) - Linux manual page, most block devices and network devices should be tagged systemd so that systemd treats the device as a Unit, which makes it easy to express service dependencies (such as starting a service only after the block device has been set up). Here we also tag it systemd.

A device's Device Unit can be referred to by names such as dev-zram0.device or dev-sda1.device.

After the configuration is complete, restart Linux again, and you can see the zram0 device in the lsblk command:

4. Configure the zram0 device as Swap

After obtaining a 30G ZRAM device, the next thing to do is to configure the device as Swap. Experienced readers should have guessed the next operation:

mkswap /dev/zram0

swapon /dev/zram0

Yes, configuring the zram0 device as Swap is exactly the same as configuring a normal device, partition or file as Swap. But how do we make it happen automatically?

The first thing that comes to mind is fstab. However, the modules-load.d mechanism we used to load the kernel module at boot is itself part of systemd: modules-load.d(5) - Linux manual page. Since we boarded the systemd pirate ship at the very beginning, let's see it through!

Under the Systemd system, the boot-up command can be registered as a Service Unit. We create a new file /etc/systemd/system/zram.service and write the following content in it:

[Unit]

Description=ZRAM

BindsTo=dev-zram0.device

After=dev-zram0.device

[Service]

Type=oneshot

RemainAfterExit=true

ExecStartPre=/sbin/mkswap /dev/zram0

ExecStart=/sbin/swapon -p 2 /dev/zram0

ExecStop=/sbin/swapoff /dev/zram0

[Install]

WantedBy=multi-user.target

Next, run systemctl daemon-reload to reload the configuration, and then run systemctl enable zram --now. If there is no error, run swapon -s to check the Swap status. If you see a device named /dev/zram0, congratulations! ZRAM is now configured and will start automatically at boot.

To help readers understand how ZRAM and systemd work, this article uses a fully manual configuration. If you find that too cumbersome, or need to deploy at scale, you can use systemd/zram-generator: Systemd unit generator for zram devices. Most distributions that enable ZRAM by default (such as Fedora) use this tool; after writing its configuration file, ZRAM can be enabled by running systemctl enable /dev/zram0 --now.

5. Configure double-layer Swap (optional)

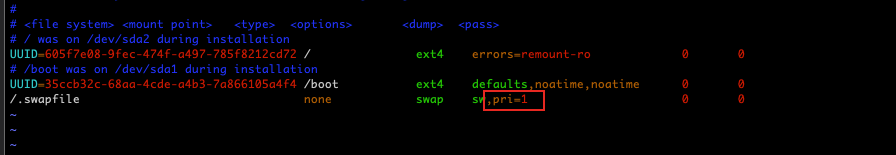

In the previous section we configured ZRAM and set it up as Swap, but ZRAM still does not take effect. Why? Sharp-eyed readers will have noticed that /.swapfile has a higher priority than /dev/zram0, so when Linux needs to swap, it still prefers to swap pages out to /.swapfile rather than to ZRAM.

There are two ways to solve this: disable the swapfile, or lower its priority. To avoid an OOM (and the resulting service outage) once ZRAM is exhausted, we choose the latter and configure a two-layer Swap: when ZRAM is used up, the low-priority swapfile takes over.

We open the configuration file of Swapfile (the author's configuration file is in /etc/fstab ), and add the parameters as shown in the following figure:

If you configure the swapfile some other way (for example with systemd), just make sure swapon is run with the -p parameter. The lower the number, the lower the priority. The same applies to ZRAM: in zram.service above, the priority is set to 2.

After setting, restart Linux, execute swapon -s again to check the Swap status, and ensure that the ZRAM priority is higher than other Swap priorities:
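The priority check can also be scripted. The sketch below is only an illustration: `top_priority_swap` is a hypothetical helper that reads text in the /proc/swaps column layout (filename, type, size, used, priority) and prints the device the kernel will fill first. The sample rows are invented, and file names containing spaces are not handled.

```shell
#!/bin/sh
# top_priority_swap: read /proc/swaps-style text on stdin and print the
# device with the highest priority (the one the kernel fills first).
top_priority_swap() {
    awk 'NR > 1 && (name == "" || $5 + 0 > max) { max = $5 + 0; name = $1 }
         END { print name }'
}

# Hypothetical sample in the /proc/swaps column layout.
printf '%s\n' \
    'Filename Type Size Used Priority' \
    '/.swapfile file 2097148 0 1' \
    '/dev/zram0 partition 31457276 0 2' | top_priority_swap
```

On a real machine the same function can be fed with `top_priority_swap < /proc/swaps`; if it prints anything other than /dev/zram0, the priorities still need adjusting.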

0x04 ZRAM monitoring

After enabling ZRAM, how can we see how well it actually works, for example the sizes before and after compression and the compression ratio?

The most direct way is to read the driver's source code and documentation. There you will find that the function mm_stat_show() defines the output of the /sys/block/zram0/mm_stat file. Its fields, from left to right, are:

$ cat /sys/block/zram0/mm_stat

orig_data_size - 当前压缩前大小 (Byte)

4096

compr_data_size - 当前压缩后大小 (Byte)

74

mem_used_total - 当前总内存消耗,包含元数据等 Overhead(Byte)

12288

mem_limit - 当前最大内存消耗限制(页)

0

mem_used_max - 历史最高内存用量(页)

1223118848

same_pages - 当前相同(可被压缩)的页

0

pages_compacted - 历史从 RAM 压缩到 ZRAM 的页

50863

huge_pages - 当前无法被压缩的页(巨页)

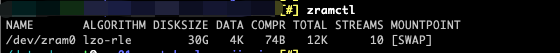

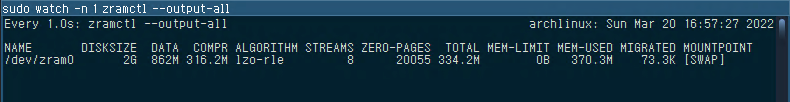

0This file is suitable for output to various monitoring software for monitoring, but whether it is Byte or page, these bare values are still inconvenient to read. Fortunately, the util-linux package provides a tool named zramctl (and systemctl is actually the relationship between Lei Feng and Lei Feng Pagoda ), execute zramctl after installing util-linux , you can get the result shown in the following figure:

From the mm_stat output above we can infer the meaning of each zramctl value, or we can read the zramctl source code to understand the meaning and unit of each column:

static const struct colinfo infos[] = {

[COL_NAME] = { "NAME", 0.25, 0, N_("zram device name") },

[COL_DISKSIZE] = { "DISKSIZE", 5, SCOLS_FL_RIGHT, N_("limit on the uncompressed amount of data") },

[COL_ORIG_SIZE] = { "DATA", 5, SCOLS_FL_RIGHT, N_("uncompressed size of stored data") },

[COL_COMP_SIZE] = { "COMPR", 5, SCOLS_FL_RIGHT, N_("compressed size of stored data") },

[COL_ALGORITHM] = { "ALGORITHM", 3, 0, N_("the selected compression algorithm") },

[COL_STREAMS] = { "STREAMS", 3, SCOLS_FL_RIGHT, N_("number of concurrent compress operations") },

[COL_ZEROPAGES] = { "ZERO-PAGES", 3, SCOLS_FL_RIGHT, N_("empty pages with no allocated memory") },

[COL_MEMTOTAL] = { "TOTAL", 5, SCOLS_FL_RIGHT, N_("all memory including allocator fragmentation and metadata overhead") },

[COL_MEMLIMIT] = { "MEM-LIMIT", 5, SCOLS_FL_RIGHT, N_("memory limit used to store compressed data") },

[COL_MEMUSED] = { "MEM-USED", 5, SCOLS_FL_RIGHT, N_("memory zram have been consumed to store compressed data") },

[COL_MIGRATED] = { "MIGRATED", 5, SCOLS_FL_RIGHT, N_("number of objects migrated by compaction") },

[COL_MOUNTPOINT]= { "MOUNTPOINT",0.10, SCOLS_FL_TRUNC, N_("where the device is mounted") },

};

Some values that are not shown by default can be displayed with zramctl --output-all:

The output of this tool mixes bytes and pages, mixes historical peak, cumulative and current values, and shows unset parameters (such as the memory limit) as 0B, so it is for reference only and is still not very readable. In general, you only need to understand the DATA and COMPR fields.

Combining the output of zramctl and mm_stat, it is easy to see that the ZRAM size we configured is the uncompressed size, not the compressed size. Earlier we used a formula: with a ZRAM size of 30G and a compression ratio of 2:1, we get 60G - (30G / 2) + 30G = 75G of usable memory. This assumes that 30G of uncompressed data compresses to 15G and therefore occupies 15G of physical memory, leaving 60G - (30G / 2) = 45G, to which we add the up to 30G of data that ZRAM can hold.

The compression ratio is calculated as DATA / COMPR. Taking the ElasticSearch workload from the introduction as an example, as shown in the figure below, the compression ratio with the default settings is 1.1G / 97M ≈ 11.6. Considering that ElasticSearch data is mainly plain text, and that the JVM requests memory from the system in advance, such a compression ratio is very satisfactory.
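The same ratio can be computed straight from mm_stat, whose first two fields are the uncompressed and compressed sizes in bytes. A minimal sketch, with `zram_ratio` as a hypothetical helper name and the sample line taken from the mm_stat values listed earlier:

```shell
#!/bin/sh
# zram_ratio: read one mm_stat line on stdin and print
# orig_data_size / compr_data_size (fields 1 and 2, both in bytes).
# Nothing is printed while the device is still empty (compr_data_size = 0).
zram_ratio() {
    awk '$2 > 0 { printf "%.2f\n", $1 / $2 }'
}

# Sample values from the mm_stat listing above.
echo "4096 74 12288 0 1223118848 0 50863 0" | zram_ratio
```

On a live system, `zram_ratio < /sys/block/zram0/mm_stat` gives the current value, which is convenient to feed into a monitoring system.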

0x05 ZRAM tuning

Although the settings of ZRAM are very simple, it still provides a large number of configurable items for users to adjust. If you are still not satisfied after configuring ZRAM by default, or you want to further explore the potential of ZRAM, you need to optimize it.

1. Choose the most suitable compression algorithm

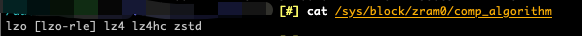

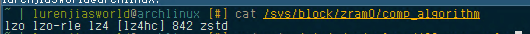

As shown in the figure above, the current default compression algorithm of ZRAM is generally lzo-rle , but in fact, there are many compression algorithms supported by ZRAM. We can obtain the supported algorithms through cat /sys/block/zram0/comp_algorithm . The currently enabled algorithm is enclosed by [] :
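For scripts, the bracketed entry can be extracted with a short filter. This is a sketch only: `active_algorithm` is a hypothetical helper, and the sample line is an assumed example of what comp_algorithm may contain, since the actual list depends on the kernel build.

```shell
#!/bin/sh
# active_algorithm: read the content of comp_algorithm on stdin and print
# the entry enclosed in [], i.e. the algorithm currently in use.
active_algorithm() {
    sed -n 's/.*\[\([^]]*\)].*/\1/p'
}

# Assumed sample content; the real list varies between kernels.
echo "lzo [lzo-rle] lz4 lz4hc 842 zstd" | active_algorithm
```

On a configured machine this becomes `active_algorithm < /sys/block/zram0/comp_algorithm`.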

Compression trades time for space, which means no compression algorithm is absolutely better or worse than another; each involves trade-offs in different situations. Some have a high compression ratio, some have high throughput, and some have low CPU consumption. On different hardware, different choices also run into different bottlenecks, so only real tests can help choose the most suitable compression algorithm.

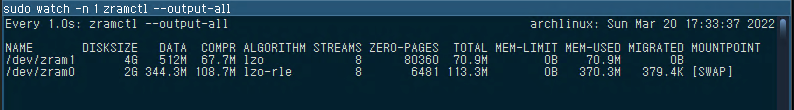

To avoid affecting the production environment and to avoid leaking data through a memory dump, the author tested on a separate virtual machine with the same hardware. The virtual machine has 2G of RAM and 2G of ZRAM, and also runs ElasticSearch (with a small amount of data). The stress tool is then used to put 1GB of pressure on memory, at which point inactive memory (that is, some of the ElasticSearch data) is swapped out to ZRAM, as shown in the figure:

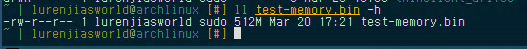

Use the following script to get 512M of memory and dump it into a file:

pv -s 512M -S /dev/zram0 > "./test-memory.bin"

Next, the author released the load to free up enough physical memory, and, as the root user, used a script modified from better_benchmarks.bash - Pastebin.com to test different algorithms and different page_cluster values (explained in the next section): LuRenJiasWorld/zram-config-benchmark.sh .

During the test we will see that new ZRAM devices are created, because the script will write the memory of the previous dump to these devices, and each device is configured with a different compression algorithm:

Before using this script, it is recommended to read the content of the script and select different configurations such as memory size, ZRAM device size, test algorithm and parameters according to your needs. Depending on the configuration, the test may last 20~40 minutes. It is not recommended to perform any other operations during this process to avoid disturbing the test results.

After the test is over, run the script as a regular user to obtain the results in CSV format, save them to a file, and import it into Excel to view the results. The following is the test data from the author's environment:

algo | page-cluster | MiB/s | IOPS | Mean Latency (ns) | 99% Latency (ns)
-------|-------------|-------------------|---------------|--------------------|-------------------
lzo | 1 | 9130.553415506836 | 1168710.932773 | 5951.70401 | 27264
lzo | 2 | 11533.120699842773 | 738119.747899 | 9902.73897 | 41728
lzo | 3 | 13018.8484358584 | 416603.10084 | 18137.20228 | 69120
lzo | 0 | 6360.546916032226 | 1628299.941176 | 3998.240967 | 18048
zstd | 1 | 4964.754078584961 | 635488.478992 | 11483.876873 | 53504
zstd | 2 | 5908.13019465625 | 378120.226891 | 19977.785468 | 85504
zstd | 3 | 6350.650210083984 | 203220.823529 | 37959.488813 | 150528
zstd | 0 | 3859.24347590625 | 987966.134454 | 7030.453683 | 35072
lz4 | 1 | 11200.088793330078 | 1433611.218487 | 4662.844947 | 22144
lz4 | 2 | 15353.485367975585 | 982623 | 7192.215964 | 30080
lz4 | 3 | 18335.66823135547 | 586741.184874 | 12609.004058 | 45824
lz4 | 0 | 7744.197593880859 | 1982514.554622 | 3203.723399 | 9920
lz4hc | 1 | 12071.730649291016 | 1545181.588235 | 4335.736901 | 20352
lz4hc | 2 | 15731.791228991211 | 1006834.563025 | 6973.420236 | 29312
lz4hc | 3 | 19495.514164259766 | 623856.420168 | 11793.367214 | 43264
lz4hc | 0 | 7583.852120536133 | 1941466.478992 | 3189.297915 | 9408
lzo-rle | 1 | 9641.897805606446 | 1234162.857143 | 5559.790869 | 25728
lzo-rle | 2 | 11669.048803505859 | 746819.092437 | 9682.723915 | 41728
lzo-rle | 3 | 13896.739553243164 | 444695.663866 | 16870.123274 | 64768
lzo-rle | 0 | 6799.982996323242 | 1740795.689076 | 3711.587765 | 15680
842 | 1 | 2742.551544446289 | 351046.621849 | 21805.615246 | 107008
842 | 2 | 2930.5026999082033 | 187552.218487 | 41516.15757 | 193536
842 | 3 | 2974.563821231445 | 95185.840336 | 82637.91368 | 366592
842 | 0 | 2404.3125984765625 | 615504.008403 | 12026.749364 | 63232

We can get a lot of information from the table above. The columns, from left to right, are:

- Compression algorithm

- page-cluster parameter (the next section explains its meaning)

- Throughput (bigger is better)

- IOPS (bigger is better)

- Mean latency (smaller is better)

- 99th percentile latency (smaller is better), used as an estimate of the worst-case latency

Since the compression ratio does not depend on page-cluster, the table does not show it. Run the following command to get the compression ratio of each algorithm under this workload:

$ tail -n +1 fio-bench-results/*/compratio

==> fio-bench-results/842/compratio <==

6.57

==> fio-bench-results/lz4/compratio <==

6.85

==> fio-bench-results/lz4hc/compratio <==

7.58

==> fio-bench-results/lzo/compratio <==

7.22

==> fio-bench-results/lzo-rle/compratio <==

7.14

==> fio-bench-results/zstd/compratio <==

9.48

Based on the above metrics, the author selected the best configurations for this system and this workload (named as compression algorithm-page_cluster):

Maximum throughput: lz4hc-3 (19495 MiB/s)

Lowest latency: lz4hc-0 (3189 ns)

Maximum IOPS: lz4-0 (1982514 IOPS)

Highest compression ratio: zstd (9.48)

Overall Best: lz4hc-0
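Picking these winners by eye is error-prone, so the selection can be sketched with awk. The helper `best_by` below is hypothetical; it expects CSV rows in the column order of the table (algo, page-cluster, MiB/s, IOPS, mean latency, 99% latency). The five rows are copied from the table above, and the real CSV produced by the benchmark script may be laid out differently.

```shell
#!/bin/sh
# best_by COLUMN max|min: read CSV rows (algo,page_cluster,MiB/s,IOPS,
# mean_ns,p99_ns) on stdin and print "algo-page_cluster" of the best row.
best_by() {
    awk -F, -v col="$1" -v mode="$2" '
        NR == 1 || (mode == "max" && $col + 0 > best) ||
                   (mode == "min" && $col + 0 < best) {
            best = $col + 0
            name = $1 "-" $2
        }
        END { print name }'
}

# A few rows copied from the results table.
ROWS='lz4,0,7744.197593880859,1982514.554622,3203.723399,9920
lz4,3,18335.66823135547,586741.184874,12609.004058,45824
lz4hc,0,7583.852120536133,1941466.478992,3189.297915,9408
lz4hc,3,19495.514164259766,623856.420168,11793.367214,43264
zstd,0,3859.24347590625,987966.134454,7030.453683,35072'

printf '%s\n' "$ROWS" | best_by 3 max   # highest throughput
printf '%s\n' "$ROWS" | best_by 4 max   # highest IOPS
printf '%s\n' "$ROWS" | best_by 5 min   # lowest mean latency
```

The "overall best" choice still requires judgment, because it weighs latency, throughput and compression ratio against each other for a specific workload.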

Depending on workload and requirements, readers can choose the parameters that suit them, or combine this with the multi-level Swap mentioned above to tier ZRAM further: use the fastest ZRAM device as high-priority Swap and the one with the highest compression ratio as medium- or low-priority Swap.

If the test machine and the production environment differ in architecture or hardware, you can copy the memory dump taken during the test to the production environment and run the test there, provided that both run the same workload; otherwise the dump has no reference value.

2. Configure ZRAM tuning parameters

In the previous section we selected the best ZRAM algorithm and parameters. Now we apply them to the production environment.

2.1. Configure the compression algorithm to obtain the best compression ratio

First, switch the compression algorithm from the default lzo-rle to lz4hc . According to the ZRAM documentation, you only need to write the compression algorithm into /sys/block/zram0/comp_algorithm . Considering the operation when we configure /sys/block/zram0/disksize , we re-edit /etc/udev/rules.d/99-zram.rules file and modify its content as follows:

- KERNEL=="zram0",ATTR{disksize}="30G",TAG+="systemd"

+ KERNEL=="zram0",ATTR{comp_algorithm}="lz4hc",ATTR{disksize}="30G",TAG+="systemd"

Note that the compression algorithm must be specified before the disk size. Whether in the configuration file or when echoing values directly into /sys/block/zram0, the operations must be performed in the order given in the documentation.

Restart the machine and execute cat /sys/block/zram0/comp_algorithm again, you will find that the current compression algorithm has become lz4hc :

2.2. Configure page-cluster to avoid wasting memory bandwidth and CPU resources

After configuring comp_algorithm , let's configure page-cluster next.

We have kept readers in suspense long enough. In short, page-cluster makes the kernel read 2^n consecutive pages at a time when reading from the swap device. Some block devices and file systems work in clusters, and data is read a cluster at a time. If a memory page is 4KiB and each read returns a 32KiB cluster, swapping the extra data into memory as well avoids waste and reduces the number of disk reads. The Linux documentation also mentions this: linux/vm.rst · torvalds/linux . The default value of page-cluster is 3, which means 4K*2^3=32K of data is read from disk each time.
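To make the 4K*2^n relationship concrete, here is a tiny sketch. The helper name `swap_readahead_kib` is invented for this example, and a 4 KiB page size is assumed, as in the text.

```shell
#!/bin/sh
# swap_readahead_kib N: amount of data (in KiB) read per swap-in for
# vm.page-cluster=N, assuming 4 KiB pages: 4 * 2^N.
swap_readahead_kib() {
    echo $(( 4 * (1 << $1) ))
}

for n in 0 1 2 3; do
    echo "page-cluster=$n -> $(swap_readahead_kib "$n") KiB per swap-in"
done
```

The four values of n are the same ones used in the benchmark table, so each row of the table corresponds to one of these read sizes.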

Once we understand page-cluster, it becomes clear that ZRAM is not a traditional block device. Memory is read page by page, so this optimization for disk devices is counterproductive with ZRAM: it hits the memory bandwidth and CPU decompression bottlenecks prematurely and degrades performance. This explains why, in the table above, throughput rises as page-cluster increases while IOPS falls. If memory bandwidth and CPU decompression were not bottlenecks, IOPS should in theory stay the same.

Considering that page-cluster is a redundant optimization both in theory and in actual testing, we can directly set it to 0. Edit /etc/sysctl.conf directly and add a new line at the end:

vm.page-cluster=0

Run sysctl -p for this setting to take effect without rebooting.

2.3. Make the system use Swap more aggressively

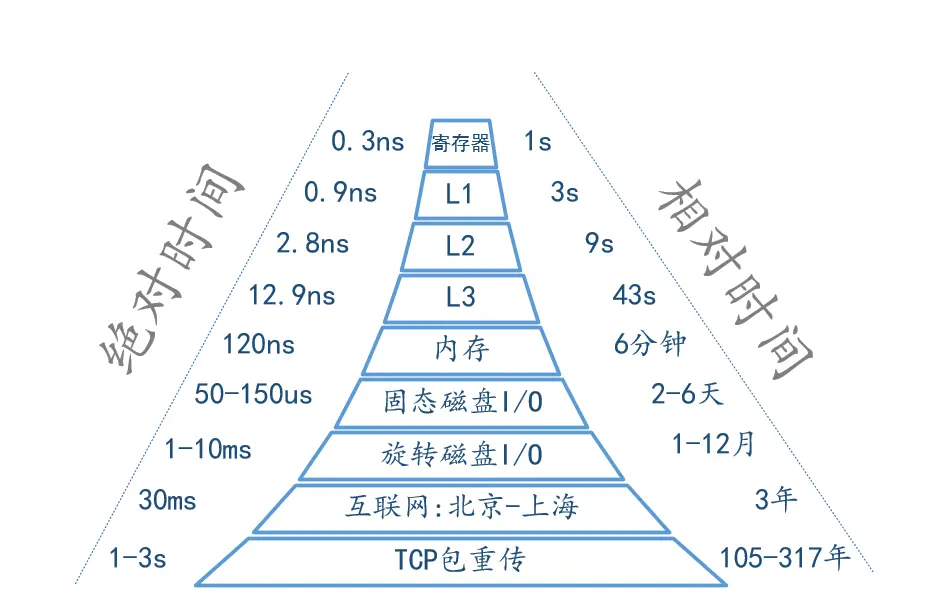

In the performance test above, the lowest mean read latency with lz4hc was 3189ns, or about 0.003ms. By comparison, on the author's computer a direct memory read takes about 100ns (0.0001ms; some hardware reaches 50ns), an SSD read typically takes about 0.05ms, and a mechanical hard disk read takes between 1ms and 1000ms.

Comparing the absolute and relative access times of storage devices at different levels shows the exponential gaps between them. The image is from cpu Memory Access Speed, Disk and Network Speed, Numbers Everyone Should Know | las1991 , and originally from a presentation by Jeff Dean : Dean keynote-ladis2009 .

See also rule-of-thumb-latency-numbers-letter.pdf for more details.

1ms is more than 300 times 0.003ms, while 0.003ms is only about 30 times 0.0001ms, which means ZRAM behaves more like RAM than like Swap. We can therefore let Linux use Swap more aggressively, so that ZRAM frees up more memory as early as possible.

Here we need to know two things: swappiness and memory fragmentation.

Swappiness is a kernel parameter that determines how strongly the kernel tends to swap pages out to Swap when memory is insufficient. The larger the value, the stronger the tendency. The next chapter explains swappiness in more detail. For now, we can assume that with ZRAM the higher this value the better; even 100 is fine.

Memory fragmentation appears in long-running operating systems: free memory exists, but an allocation fails because there is not enough contiguous free space. Whether or not the system has an MMU, it will to some degree run into the situation where no contiguous region large enough for the requesting program is left in physical memory, virtual memory or DMA. Regions that used to be contiguous become discontinuous as memory is allocated and released irregularly, and the scattered leftovers are the so-called memory fragments. Generally, Linux defragments memory when fragmentation exists and it considers defragmentation necessary.

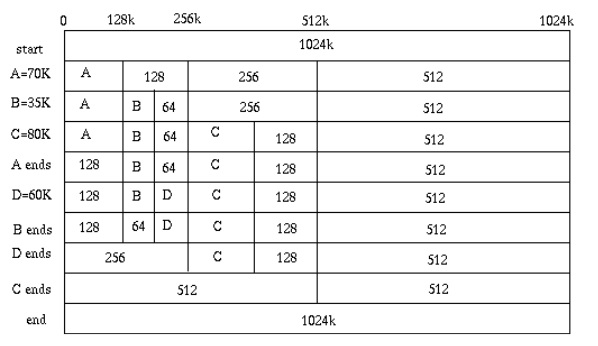

To limit memory fragmentation, Linux allocates and organizes memory using the Buddy System: free memory is grouped into blocks of 2^n pages, from 1 to 1024 pages, for a total of 11 levels, and each level keeps a linked list of free blocks of that size. For example, if a process needs 64 pages, the kernel first looks for a free block in the 64-page list. If there is none, it looks in the 128-page list; if a block is found there, its lower 64 pages are allocated and its upper 64 pages are added to the 64-page list. Because allocated blocks are aligned to their block size, freeing memory releases a whole block, which can then be merged with its free buddy.

The picture comes from Buddy system partner allocator implementation - youxin - Blog Park

The design of the Buddy System is ingenious and its implementation elegant. Interested readers can read Buddy system partner allocator implementation - youxin - Blog Park to learn more about it, so the details are omitted here. Linux contains many such ingenious designs, and knowing more of them helps improve one's programming taste. The author is still learning too, and hopes to make progress together with readers.
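The rounding rule at the heart of the Buddy System (a request is served from the smallest 2^n-page block that fits) can be sketched as follows. `buddy_order` is a hypothetical helper written for this article; it only computes the level and does not model the free lists or the splitting and merging of blocks.

```shell
#!/bin/sh
# buddy_order PAGES: smallest order n such that 2^n >= PAGES.
# Orders run from 0 (1 page) to 10 (1024 pages), 11 levels in total.
buddy_order() {
    pages=$1
    order=0
    while [ $(( 1 << order )) -lt "$pages" ]; do
        order=$(( order + 1 ))
    done
    if [ "$order" -gt 10 ]; then
        echo "request exceeds the largest block (1024 pages)" >&2
        return 1
    fi
    echo "$order"
}

echo "64 pages -> order $(buddy_order 64), block of $(( 1 << $(buddy_order 64) )) pages"
echo "65 pages -> order $(buddy_order 65), block of $(( 1 << $(buddy_order 65) )) pages"
```

The second line shows the cost of the scheme: a request just over a power of two occupies a block almost twice its size, which is the price paid for cheap splitting and merging.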

As mentioned above, memory fragmentation generally appears after memory has been heavily used for a long time, while ZRAM only comes into play (through page swapping) when available memory is insufficient. When memory pressure rises, the system can easily become unresponsive for a moment, and there may be no time left to defragment memory, so ZRAM cannot obtain memory and the system becomes even more unstable.

Therefore, the other thing we need to do is keep memory as orderly as possible and trigger defragmentation as early as possible when blocks of the required level run short, so that memory is fully utilized. The parameter that controls when Linux defragments (compacts) memory is vm.extfrag_threshold . When a large allocation cannot be satisfied directly, the kernel compacts memory only if the fragmentation index is above this threshold, so lowering the threshold makes compaction happen more readily. For how the fragmentation index is calculated, see about the linux memory fragmentation index - _Memo - Blog Park .

With all that said, we add the following two lines at the end of /etc/sysctl.conf :

# The default is 500 (a value between 0 and 1000)

vm.extfrag_threshold=0

# The default is 60

vm.swappiness=100

These two values were chosen by the author based on monitoring data for server memory usage, ZRAM usage and defragmentation timing. They may not suit every scenario. Leaving them unset does no harm, so if you are unsure or do not want to experiment, feel free to skip them; they are shown here only to aid understanding.

Note that the Internet is full of fallacies about the swappiness parameter, for example that its maximum value is 100, and most articles treat the value as a percentage. Next, the author will explain why this is wrong.

3. Why ZRAM is not used

The example in the introduction dates from a year ago, when I shared it with a small circle of people. I remember that the day after the talk, a friend asked me why ZRAM was not being used at all even though he had configured it and set swappiness to 100.

Looking back, this is actually a very common question. So although this section is titled "Why ZRAM is not used", what I really want to discuss is the question "When exactly does page swapping occur?".

The Internet is full of myths about how to configure software, especially where performance optimization is concerned. This is true even of open source software, let alone closed source software such as Windows. Outdated and false information, mixed with the placebo effect, has become a hallmark of today's performance optimization guides. These myths are not necessarily intentional on the part of their authors, but they do reflect the habitual thinking of both authors and readers.

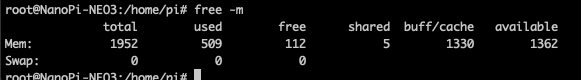

Before looking at swappiness, let's first understand how Linux reclaims memory. Like other modern operating systems, Linux tends to put memory to use ahead of time. When we run the free -m command, we see the following columns:

- total: total memory

- used: the amount of memory used by processes (on some systems this also includes shared and buff/cache, that is, the memory used by the operating system)

- free: the amount of memory not used by the operating system

- shared: the amount of memory shared between processes

- buff/cache: buffers and cache

- available: an estimate of the maximum amount of memory that processes can still use
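To see the difference between free and available for yourself, the figures can be read from text in the /proc/meminfo format. The sketch below is illustrative: `SAMPLE_MEMINFO` is made-up data, the function names are hypothetical, and treating buff/cache as simply Buffers + Cached is a simplification of what the free command reports.

```python
# Hypothetical sample in /proc/meminfo format; the numbers are invented.
SAMPLE_MEMINFO = """\
MemTotal:       65536000 kB
MemFree:         2048000 kB
MemAvailable:   40960000 kB
Buffers:          512000 kB
Cached:         38912000 kB
"""


def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a {field: value} dict (values in KiB)."""
    fields = {}
    for line in text.splitlines():
        name, _, rest = line.partition(":")
        parts = rest.split()
        if parts:
            fields[name.strip()] = int(parts[0])
    return fields


def summarize(fields):
    """Return (free, buff_cache, available) in MiB.

    buff/cache is approximated as Buffers + Cached, an assumption of this sketch.
    """
    def to_mib(kib):
        return kib // 1024

    return (
        to_mib(fields["MemFree"]),
        to_mib(fields["Buffers"] + fields["Cached"]),
        to_mib(fields["MemAvailable"]),
    )


if __name__ == "__main__":
    free_mib, cache_mib, available_mib = summarize(parse_meminfo(SAMPLE_MEMINFO))
    print(f"free={free_mib} MiB, buff/cache={cache_mib} MiB, available={available_mib} MiB")
```

On a real machine the same functions can be fed the contents of /proc/meminfo. In the sample, free is small while available is large, because most of the memory is held by the file cache.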

When people say that Android uses up however much memory it is given, they usually mean that the free figure is small. That is relative to Windows, because what Windows calls free corresponds to available under Linux; it does not mean that Windows is designed without buff/cache:

A classic move of Microsoft's Chinese localization: confusing Free and Available. When I have the chance, I will share what the various memory figures in Windows really mean (they are far more confusing than on Linux and hard to justify).

Put simply, buffer is the write cache and cache is the read cache; together they are referred to as file pages. Linux flushes the write cache regularly but usually does not periodically drop the read cache, which therefore takes up space counted as available. When a process needs more memory, Linux still allocates it from free first. Only when free can no longer meet the demand does Linux reclaim file pages for the process to use.

However, if processes took over all the file pages, the Linux file cache would effectively stop working. The memory demand would be met, but IO performance would suffer. Therefore, besides reclaiming file pages, Linux can also swap pages out: it uses the Swap mechanism to save temporarily unused memory (usually anonymous pages, that is, heap memory) to disk.

Generally speaking, Linux only reclaims memory when memory is tight. So how is tightness measured, and what condition ends the tight state? This brings us to a Linux design: the memory watermark (Watermark).

The kernel thread kswapd0, which is responsible for reclaiming memory, uses a set of thresholds for measuring memory pressure: the memory watermarks. We can read them from the /proc/zoneinfo file:

Like the /proc/buddyinfo file mentioned above, this file divides memory into zones such as DMA, DMA32 and Normal. For details, see Brief Analysis of Linux Memory Management Mechanism - Fleet - Blog Park; I won't repeat them here. On the x86_64 architecture, we only look at the Normal zone here.

$ cat /proc/zoneinfo

...

Node 0, zone Normal

pages free 12273184 # number of free pages, about 46GB here

min 16053 # minimum watermark, about 62MB

low 1482421 # low watermark, about 5.6GB

high 2948789 # high watermark, about 11.2GB

...

nr_free_pages 12273184 # same as pages free

nr_zone_inactive_anon 1005909 # inactive anonymous pages

nr_zone_active_anon 60938 # active anonymous pages

nr_zone_inactive_file 878902 # inactive file pages

nr_zone_active_file 206589 # active file pages

nr_zone_unevictable 0 # unevictable pages

...

Note that under the NUMA architecture each Node has its own memory, so on machines with multiple CPUs the watermarks and statistics of each zone are independent per Node.

First, the watermarks. There are four cases:

- When pages free is less than pages min, memory is exhausted and memory pressure is too high. Synchronous reclaim is triggered: the system appears to hang because memory allocation blocks. The kernel tries defragmentation and memory compression, and if that does not help, it starts running the OOM Killer, until pages free is greater than pages high.

- When pages free is between pages min and pages low, memory pressure is high, and the kswapd0 thread reclaims memory until pages free is greater than pages high.

- When pages free is between pages low and pages high, memory pressure is normal and generally no action is taken.

- When pages free is greater than pages high, there is basically no memory pressure and no need to reclaim memory.
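The four cases can be written down as a small classifier. This is only a sketch of the decision table above, not kernel logic: the function names and return strings are made up for illustration, pages are assumed to be 4 KiB, and how exact boundary values are treated is an arbitrary choice here.

```python
PAGE_SIZE = 4096  # bytes; assumes 4 KiB pages, as on typical x86_64 systems


def pages_to_gib(pages):
    """Convert a page count from /proc/zoneinfo into GiB."""
    return pages * PAGE_SIZE / 2**30


def memory_pressure(free, wmark_min, wmark_low, wmark_high):
    """Map a zone's free page count to one of the four cases described above."""
    if free < wmark_min:
        return "exhausted: synchronous reclaim, allocations block"
    if free < wmark_low:
        return "high: kswapd0 reclaims until free > high"
    if free < wmark_high:
        return "normal: generally no action"
    return "none: no reclaim needed"


if __name__ == "__main__":
    # Values taken from the /proc/zoneinfo output shown earlier.
    free, wmin, wlow, whigh = 12273184, 16053, 1482421, 2948789
    print(f"free is about {pages_to_gib(free):.1f} GiB")
    print(memory_pressure(free, wmin, wlow, whigh))
```

With the figures from the sample output, free pages are far above the high watermark, so the zone falls into the last case and nothing is reclaimed.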

Next, the swappiness parameter. As the process above shows, swappiness determines the reclaim strategy of the kswapd0 thread. There are two types of reclaimable pages, anonymous pages and file pages, and swappiness determines how many anonymous pages are swapped out relative to file pages. Because file pages are reclaimed by writing them back to disk or simply dropping them, the parameter can also be read as "how aggressively Linux reclaims anonymous pages when memory is tight".

As with most things, there is no absolute good or bad here, and it is hard to grade the options on a 100-point scale. What we can do is weigh costs against benefits and reach the goal at the lowest cost for the highest benefit; Linux does the same. In the source file mm/vmscan.c, Linux feeds swappiness into the following calculation:

/* total_cost starts as the sum of the two per-type costs */

total_cost = sc->anon_cost + sc->file_cost;

anon_cost = total_cost + sc->anon_cost;

file_cost = total_cost + sc->file_cost;

total_cost = anon_cost + file_cost;

/* scan weight for anonymous pages */

ap = swappiness * (total_cost + 1);

ap /= anon_cost + 1;

/* scan weight for file pages */

fp = (200 - swappiness) * (total_cost + 1);

fp /= file_cost + 1;

Here anon_cost and file_cost refer to the cost of LRU scanning for anonymous pages and file pages, respectively.

Suppose a process needs memory, both kinds of pages are equally costly to scan, and free memory has fallen below the low watermark. With the default swappiness of 60, Linux reclaims more file pages; with swappiness equal to 100, it reclaims file pages and anonymous pages equally; and with swappiness above 100, it reclaims more anonymous pages.
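The three cases can be checked by replaying the arithmetic in Python. This is a sketch that mirrors the integer arithmetic of the excerpt, not kernel code: the function name `scan_weights` is hypothetical, and taking the initial total_cost to be the sum of the two per-type costs is an assumption of this sketch.

```python
def scan_weights(swappiness, sc_anon_cost, sc_file_cost):
    """Return (ap, fp), the relative scan weights for anonymous and file pages."""
    # Assumption: total_cost starts as the sum of the two per-type costs.
    total_cost = sc_anon_cost + sc_file_cost
    anon_cost = total_cost + sc_anon_cost
    file_cost = total_cost + sc_file_cost
    total_cost = anon_cost + file_cost

    # Integer division, as in the C code.
    ap = swappiness * (total_cost + 1) // (anon_cost + 1)
    fp = (200 - swappiness) * (total_cost + 1) // (file_cost + 1)
    return ap, fp


if __name__ == "__main__":
    # Equal scanning cost for both page types, as in the scenario above.
    for swappiness in (60, 100, 133):
        ap, fp = scan_weights(swappiness, 100, 100)
        print(f"swappiness={swappiness}: ap={ap}, fp={fp}")
```

With equal costs the ratio ap : fp reduces to swappiness : (200 - swappiness), which is why 100 is the balance point and why the value is not a percentage.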

According to the Linux kernel documentation, if swap is backed by ZRAM or another device faster than a traditional disk, swappiness can be set above 100. The value is calculated as follows:

For example, if the random IO against the swap device is on average 2x faster than IO from the filesystem, swappiness should be 133 (x + 2x = 200, 2x = 133.33).

After this explanation, the reader should understand what swappiness means, and why, when the system load is not high, newly requested memory is still not written to ZRAM no matter how swappiness is set.
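The documentation's example (x + 2x = 200) can be generalized to other speed ratios by solving x + ratio * x = 200. The helper below is a sketch under that assumption; the function name is hypothetical, and the documentation only gives the 2x case explicitly.

```python
def swappiness_for_speed_ratio(ratio):
    """Solve x + ratio * x = 200 and return ratio * x.

    `ratio` is how many times faster random IO on the swap device is
    compared with IO from the filesystem.
    """
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    return 200 * ratio / (1 + ratio)


if __name__ == "__main__":
    for ratio in (1, 2, 3):
        print(f"{ratio}x faster swap: swappiness of about {swappiness_for_speed_ratio(ratio):.2f}")
```

A ratio of 1 (swap no faster than the filesystem) gives the balance point of 100, and a ratio of 2 reproduces the 133.33 from the quoted example.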

ZRAM only pays off noticeably when memory is short and swappiness is high. Swappiness should not be too high, though: otherwise file pages stay resident in memory while large numbers of anonymous pages pile up in the slower ZRAM device, which degrades performance instead. And because swappiness cannot distinguish between tiers of Swap devices, it should be set more conservatively when ZRAM is layered with a Swapfile; 100 is usually the most appropriate value.

4. There is no silver bullet

After the detailed explanation above, I hope the reader has a more thorough and systematic understanding of ZRAM and the Linux memory model. So is ZRAM a panacea? The answer is no.

If ZRAM solved everything, every distribution would enable it by default, but that is not the case. Setting aside the fact that ZRAM configuration depends on system hardware and architecture and has no one-size-fits-all parameters, the key problem is ZRAM's performance. Let's go back to the table from the performance test and compare the best ZRAM parameters with direct access to a RAM disk. We get the following results:

algo | page-cluster | MiB/s | IOPS | Mean Latency (ns) | 99% Latency (ns)
------|--------------|-------|------|-------------------|-----------------
lz4hc | 0 | 7583.852120536133 | 1941466.478992 | 3189.297915 | 9408
raw | 0 | 22850.29382917787 | 6528361.294582 | 94.28362 | 190

Even with the best ZRAM configuration, throughput is still more than three times lower than direct memory access, and memory access latency is more than 30 times higher. (Low-latency memory access and memory/video memory copies, together with very high memory bandwidth, are indispensable to how Apple Silicon chips match or even beat Intel on performance at lower power consumption.)
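The gaps quoted above follow directly from the two table rows. The snippet below just redoes that arithmetic; the dictionary keys are names chosen for this sketch.

```python
# Values copied from the lz4hc and raw rows of the table above.
lz4hc = {"mib_s": 7583.852120536133, "iops": 1941466.478992, "mean_latency_ns": 3189.297915}
raw = {"mib_s": 22850.29382917787, "iops": 6528361.294582, "mean_latency_ns": 94.28362}

# How many times faster raw memory is, and how many times lower its latency.
throughput_gap = raw["mib_s"] / lz4hc["mib_s"]
iops_gap = raw["iops"] / lz4hc["iops"]
latency_gap = lz4hc["mean_latency_ns"] / raw["mean_latency_ns"]

if __name__ == "__main__":
    print(f"throughput: {throughput_gap:.1f}x, IOPS: {iops_gap:.1f}x, mean latency: {latency_gap:.1f}x")
```

The throughput ratio comes out at roughly 3x and the mean latency ratio at a little under 34x, in line with the figures in the text.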

Successful performance optimization is never a once-and-for-all configuration. If it were really that simple, why wouldn't software ship already optimized? The author believes that performance optimization requires a thorough understanding of the architecture and its principles, an early forecast of requirements and goals, and continuous trial, experiment and comparison.

None of these three can be left out, and they are not isolated from one another: making the best choice often means constantly trading them off. Take labels such as "Max", "Lowest Latency", "Highest IOPS" and "Highest Compression". The author later tried each of them separately, and when benchmarking ElasticSearch none performed as well as the default compression algorithm. So what do these so-called "most" settings really mean?

The author spent more than 20 hours writing this article over a weekend in epidemic isolation. To avoid mistakes and omissions, I consulted a lot of material and ran many experiments. I hope it gives readers a well-rounded and enjoyable read, makes knowledge sharing more interesting and in-depth, and inspires readers to think further and combine it with their own understanding, so that the article is worth more than the knowledge it shares. Some of the content may still contain omissions or even errors because of the author's limited ability, limited time, careless revision and other reasons. If you have better ideas, corrections are welcome.
