The Meituan machine learning platform developed the Booster GPU training architecture on top of its in-house, deeply customized TensorFlow. The architecture was designed around the characteristics of the algorithms, the system architecture, and the new hardware, and it has been deeply optimized from multiple angles, including data, computation, and communication. As a result, its cost-effectiveness is 2 to 4 times that of equivalent CPU tasks. This article describes the design, implementation, performance optimization, and production rollout of the Booster architecture, in the hope that it will help or inspire readers working on related systems.

1 Background

In recommendation system training, Meituan's deeply customized version of TensorFlow (TF) [1] supports a large number of internal businesses using CPU computing power. However, as the businesses grow, the number of samples in a single training run keeps increasing and model structures keep getting more complex. Take the fine-ranking model of the Meituan Waimai (food delivery) recommendation system as an example: a single training run now processes 10 billion or even 100 billion samples, one experiment consumes thousands of CPU cores, and the CPU utilization of the already optimized training tasks exceeds 90%. To keep up with rapid business growth, model iteration experiments are run ever more frequently and concurrently, which further increases the demand for computing power. With a limited budget, achieving fast, cost-effective model training, and thereby efficient model iteration, is an urgent problem for us to solve.

In recent years, the hardware capabilities of GPU servers have advanced by leaps and bounds. A new-generation NVIDIA A100 80GB SXM GPU server (8 cards) [2] offers 640GB of GPU memory, 1~2TB of host memory, and 10+TB of SSD storage. For communication, it provides 600GB/s of bidirectional bandwidth between cards and 800~1000Gbps of multi-machine networking. For compute, it provides 1248 TFLOPS of GPU throughput (TF32 Tensor Cores) and 96~128 physical CPU cores. If the training architecture can take full advantage of this new hardware, the cost of model training can be greatly reduced. However, the TensorFlow community does not offer an efficient, mature solution for recommendation system training. We also tried training in an optimized TensorFlow CPU Parameter Server [3] (PS) + GPU Worker mode, but it only brought gains for complex models. NVIDIA's open-source HugeCTR [4] performs excellently on classic deep learning models, but it would still need considerable work before it could be used directly in Meituan's production environment.

The training engine team of Meituan's basic R&D machine learning platform, the algorithm performance team of the Search and Recommendation Technology Department of Meituan's Daojia business group, and the NVIDIA DevTech team formed a joint project team. Building on Meituan's deeply customized TensorFlow and on NVIDIA HugeCTR, the team developed Booster, a high-performance GPU training architecture for recommendation system scenarios. It is currently deployed in the Meituan Waimai recommendation scenario: across multiple generations of models, offline metrics are fully aligned with the algorithm baselines, and cost-effectiveness is 2 to 4 times that of the previously optimized CPU tasks. Because Booster is highly compatible with native TensorFlow interfaces, existing TensorFlow CPU tasks can be migrated by changing a single line of code. This allowed Booster to be quickly piloted on multiple Meituan business lines, where the average cost-effectiveness improved by more than 2 times compared with the previous CPU tasks. This article focuses on the design and optimization of the Booster architecture and on the full process of deploying it in the Meituan Waimai recommendation scenario, in the hope that it will help or inspire readers.

2 GPU training optimization challenges

GPU training is widely used at Meituan for deep learning models in CV, NLP, ASR, and other scenarios, but it has not been widely adopted for recommendation systems. This is closely related to the characteristics of recommendation models and of GPU servers.

Recommender system deep learning model features

- Large sample volume: training samples range from tens to hundreds of terabytes, whereas CV and similar scenarios usually stay within hundreds of gigabytes.

- Large number of model parameters: models contain both large-scale sparse parameters and dense parameters, requiring hundreds of gigabytes or even terabytes of storage, whereas CV and similar models are dominated by dense parameters and usually stay within tens of gigabytes.

- Relatively low computational complexity: a single training step of a recommendation model takes only 10~100ms on a GPU, compared with 100~500ms for a CV model and 500ms~1s for an NLP model.

GPU server features

- High GPU compute, but still limited GPU memory: to fully utilize the GPU's computing power, all the data used for GPU computation must be placed in GPU memory in advance. From 2016 to 2020, the computing power of NVIDIA Tesla GPUs [5] increased by more than 10 times, while GPU memory size increased only about 3 times.

- Other resources are relatively scarce: compared with the growth in GPU computing power, single-machine CPU and network bandwidth have grown more slowly, so GPU servers are not especially cost-effective compared with CPU servers.

In summary, model training in CV, NLP, and similar scenarios is compute-intensive, and most models fit in the memory of a single GPU, which matches the strengths of GPU servers very well. In recommendation systems, however, models are relatively simple, large volumes of samples must be read remotely, and feature processing consumes a lot of CPU, all of which puts heavy pressure on a single machine's CPU and network. At the same time, a single machine's GPU memory cannot hold the large number of model parameters. Recommendation systems therefore hit exactly the weaknesses of GPU servers.

Fortunately, the hardware upgrades in NVIDIA A100 GPU servers make up for the shortcomings in GPU memory, CPU, and bandwidth. Without a properly designed and optimized system, however, the cost-effectiveness gains would still be limited. In implementing the Booster architecture, we mainly faced the following challenges:

- Data streaming system: how to use multiple NICs and multiple CPUs to build a high-performance data pipeline, so that data supply keeps up with the GPUs' consumption rate.

- Hybrid parameter computation: for large-scale sparse parameters that do not fit directly into GPU memory, how to make full use of the GPUs' high computing power and the high inter-GPU bandwidth to implement large-scale sparse parameter computation, while also handling the computation of dense parameters.

3 System Design and Implementation

Faced with these challenges, designing purely from the system perspective is difficult. Booster adopts an "algorithm + system" co-design approach, which greatly simplifies the design of this generation of the system. For the implementation path, considering the business's expected delivery time and implementation risks, we did not build the multi-machine multi-GPU version of Booster in one step. Instead, the first version implemented the single-machine multi-GPU version, and this article focuses on that single-machine multi-GPU work. In addition, thanks to the strong computing power of the NVIDIA A100 GPU server, a single machine can already meet the needs of individual experiments for most of Meituan's businesses.

3.1 Rationalization of parameter scale

The use of large-scale sparse discrete features has caused the Embedding parameters of deep prediction models to expand sharply, and models of several terabytes were once popular in the industry's mainstream search, advertising, and recommendation scenarios. However, the industry soon realized that, with limited hardware budgets, overly large models place a heavy burden on production deployment, operations and maintenance, and experimental iteration. Academic studies [10-13] have shown that model quality depends strongly on the model's information capacity rather than on its parameter count. In practice, the former can be improved by optimizing the model structure, while the latter still leaves a lot of room for reduction without hurting model quality. In 2020, Facebook proposed Compositional Embedding [14], which compresses recommendation model parameters by several orders of magnitude. Alibaba has also published related work [15] that compressed the prediction models of core business scenarios from several terabytes to tens of gigabytes or even less. In general, industry approaches follow these main ideas:

- Removing cross features: cross features are generated from the Cartesian product of single features, which produces a huge feature ID space and a correspondingly large Embedding table. As deep prediction models have evolved, many methods instead model the interactions between single features through the model structure, avoiding the Embedding blow-up caused by cross features, such as the FM family [16], AutoInt [17], and CAN [18].

- Feature selection: approaches based on the idea of NAS in particular can perform adaptive feature selection for deep neural networks at a relatively low training cost, as in Dropout Rank [19] and FSCD [20].

- Reducing the number of Embedding vectors: applying composite ID encoding and Embedding mapping to feature values yields rich feature Embedding representations with far fewer Embedding vectors than there are feature values, as in Compositional Embedding [14] and Binary Code Hash Embedding [21].

- Reducing the Embedding vector dimension: the dimension of a feature's Embedding vector caps the information it can represent, but not every feature value carries enough information to need such a large Embedding. The Embedding dimension can therefore be learned adaptively for each feature value, reducing the total number of parameters, as in AutoDim [22] and AMTL [23].

- Quantization: model parameters are quantized and compressed using half precision or even more aggressive formats such as int8, as in DPQ [24] and MGQE [25].
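To make the idea of "fewer Embedding vectors" concrete, here is a minimal Python sketch of the quotient-remainder trick, one way of building the compositional embeddings mentioned above. The class name `QREmbedding`, the random initialization, and the choice of an element-wise product to combine the two partial vectors are illustrative assumptions, not Meituan's implementation.

```python
import math
import random


class QREmbedding:
    """Quotient-remainder compositional embedding (illustrative sketch).

    One table of `num_ids` rows is replaced by two small tables of about
    sqrt(num_ids) rows each. ID i uses row i // m of the quotient table and
    row i % m of the remainder table; since (i // m, i % m) is unique for
    every i, distinct IDs still get distinct row combinations.
    """

    def __init__(self, num_ids: int, dim: int, seed: int = 0):
        rng = random.Random(seed)
        self.dim = dim
        self.m = math.isqrt(num_ids - 1) + 1   # ceil(sqrt(num_ids)) for num_ids >= 1
        self.num_q = -(-num_ids // self.m)       # ceil(num_ids / m)
        self.q_table = [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(self.num_q)]
        self.r_table = [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(self.m)]

    def num_params(self) -> int:
        return (self.num_q + self.m) * self.dim

    def lookup(self, feature_id: int) -> list:
        q = self.q_table[feature_id // self.m]
        r = self.r_table[feature_id % self.m]
        return [a * b for a, b in zip(q, r)]   # element-wise product combines both parts


emb = QREmbedding(num_ids=1_000_000, dim=16)
print(emb.num_params())   # 32000, versus 16,000,000 for a full 1M x 16 table
```

The compression comes from the two tables growing with the square root of the ID space rather than linearly; the trade-off is that IDs sharing a quotient or remainder share part of their representation.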

The Meituan Waimai recommendation model once exceeded 100 GB. By applying the above techniques, we kept the model under 10 GB while keeping the loss of prediction accuracy under control.

Based on this algorithmic premise, we set the design goal of the first stage as supporting parameter scales below 100 GB. Parameters of this size fit well in A100 memory and can be stored across the multiple GPUs of a single machine, and the 600GB/s bidirectional bandwidth between GPUs lets the system make full use of GPU processing power. This target also covers the needs of most Meituan models.
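A back-of-the-envelope check shows why a 100 GB target fits a single 8-GPU A100 server. The sketch below assumes that HashTable parameters are split evenly across cards, that dense parameters are fully replicated, and that each optimizer slot is as large as its parameter; the 1 GB dense size is a hypothetical figure, and activations, workspaces, and hash-table load-factor overhead are ignored.

```python
A100_MEMORY_GB = 80   # per-card memory of an A100 80GB SXM
NUM_GPUS = 8          # cards per server


def per_card_gb(sparse_gb: float, dense_gb: float, optimizer_slots: int,
                num_gpus: int = NUM_GPUS) -> float:
    """Rough per-card memory for parameters plus optimizer state.

    Assumptions (illustration only): sparse parameters are partitioned evenly,
    dense parameters are replicated on every card, and every optimizer slot is
    the same size as the parameter it tracks.
    """
    params_gb = sparse_gb / num_gpus + dense_gb
    return params_gb * (1 + optimizer_slots)


adagrad = per_card_gb(sparse_gb=100, dense_gb=1, optimizer_slots=1)  # one accumulator
adam = per_card_gb(sparse_gb=100, dense_gb=1, optimizer_slots=2)     # two moment slots
print(adagrad, adam, A100_MEMORY_GB)   # 27.0 40.5 80
```

Even with Adam's two slots, the estimate leaves roughly half of each card's memory for activations and hash-table overhead, which is consistent with treating 100 GB as a comfortable first-stage ceiling.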

3.2 System Architecture

The design of a GPU-based system must fully account for the hardware's characteristics in order to realize its performance advantages. The hardware topology of our NVIDIA A100 servers is similar to that of the NVIDIA DGX A100 [6]: each server contains 2 CPUs, 8 GPUs, and 8 NICs. The architecture of Booster is shown in the following diagram:

The system consists of three core modules: the data module, the computing module, and the communication module:

- Data module: Meituan has developed a data distribution system that supports multiple data sources and multiple frameworks. For the GPU system, we adapted the data module to download data over multiple NICs and, to be NUMA-aware, deployed one data distribution service on each CPU.

- Computing module: each GPU starts its own TensorFlow training process to run training.

- Communication module: we use Horovod [7] for inter-GPU communication in distributed training, starting one Horovod process on each node to carry out the corresponding communication tasks.

This design follows the native design paradigms of TensorFlow and Horovod. The core modules are decoupled from one another and can iterate independently, and merging the latest features from the open-source community does not affect the system architecture.

Next, let's look at a simplified view of the system's execution flow. The execution logic inside the TensorFlow process started on each GPU is as follows:

The training process involves several key modules, such as parameter storage, the optimizer, and inter-GPU communication. We divide the input features of samples into sparse features (ID features) and dense features. In real business scenarios, sparse features usually have a large number of IDs, and the corresponding sparse parameters are stored in a HashTable data structure. Because these parameters are too large for a single GPU, we partition them by ID modulo and store them across the memory of multiple GPUs. For sparse features with a small number of IDs, businesses usually represent the parameters with a multi-dimensional matrix (a Variable in TensorFlow). Since these parameters fit in a single GPU's memory, we replicate them, placing a full copy in the memory of each GPU. Dense parameters, which usually also use the Variable data structure, are likewise replicated in the memory of each GPU. The internal implementation of the Booster architecture is described in detail below.

3.3 Key Implementations

3.3.1 Parameter storage

Under the PS architecture for CPU scenarios, we had already implemented a complete set of logic for large-scale sparse parameters. To move this logic to the GPU, the first thing to implement was a GPU version of the HashTable. We investigated various GPU HashTable implementations in the industry, such as cuDF, cuDPP, cuCollections, and WarpCore, and ultimately chose to build a TensorFlow GPUHashTable on top of cuCollections. The main reason is that in real business scenarios, the total number of large-scale sparse feature values is usually unknown in advance, and new feature crosses may be added at any time, causing that total to change substantially. "Dynamic expansion" is therefore an essential capability for our GPU HashTable, and among the implementations we investigated, only cuCollections supported it. Based on the cuCollections GPU HashTable, we implemented a dedicated interface (find\_or\_insert), optimized its large-scale read and write performance, wrapped it in TensorFlow, and added low-frequency filtering on top, bringing it to feature parity with the sparse parameter storage module of the CPU version.
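The semantics of `find_or_insert` with dynamic expansion and low-frequency filtering can be illustrated on the CPU. The following is a minimal single-threaded Python sketch under stated assumptions: the class name, the linear-probing layout, the doubling policy at `max_load`, the `min_count` admission threshold, returning zeros for not-yet-admitted IDs, and zero initialization of new rows are all hypothetical choices. The concurrent GPU mechanics of cuCollections are not reproduced.

```python
class ExpandableHashTable:
    """Open-addressing (linear probing) table: feature ID -> embedding row.

    Illustrates dynamic expansion (the table doubles when its load factor would
    exceed `max_load`) and low-frequency filtering (an ID only gets a row after
    it has been seen `min_count` times). Hypothetical sketch, not cuCollections.
    """

    def __init__(self, dim: int, capacity: int = 8, max_load: float = 0.5, min_count: int = 2):
        self.dim = dim
        self.max_load = max_load
        self.min_count = min_count
        self.size = 0
        self._keys = [None] * capacity
        self._rows = [None] * capacity
        self._pending = {}                        # sighting counts for IDs not yet admitted

    @staticmethod
    def _probe(key, keys):
        """Return the slot holding `key`, or the first empty slot on its probe path."""
        i = hash(key) % len(keys)
        while keys[i] is not None and keys[i] != key:
            i = (i + 1) % len(keys)
        return i

    def _grow(self):
        keys = [None] * (2 * len(self._keys))
        rows = [None] * len(keys)
        for k, r in zip(self._keys, self._rows):
            if k is not None:                     # rehash every occupied slot
                j = self._probe(k, keys)
                keys[j], rows[j] = k, r
        self._keys, self._rows = keys, rows

    def find_or_insert(self, key: int) -> list:
        i = self._probe(key, self._keys)
        if self._keys[i] == key:
            return self._rows[i]                  # hit: return the stored row
        seen = self._pending.get(key, 0) + 1
        if seen < self.min_count:                 # low-frequency ID: no row yet
            self._pending[key] = seen
            return [0.0] * self.dim
        self._pending.pop(key, None)
        if (self.size + 1) / len(self._keys) > self.max_load:
            self._grow()                          # dynamic expansion
            i = self._probe(key, self._keys)
        self._keys[i] = key
        self._rows[i] = [0.0] * self.dim          # zero init for simplicity
        self.size += 1
        return self._rows[i]
```

Returning the stored row object lets an optimizer update it in place, and rows keep their identity across resizes because `_grow` moves references rather than copying values.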

3.3.2 Optimizer

At present, optimizers for sparse parameters are not compatible with optimizers for dense parameters. Based on the GPU HashTable, we implemented a range of sparse optimizers, mainly Adam, Adagrad, FTRL, and Momentum, and added features such as fusing the optimizer's momentum updates. In real business scenarios, these optimizers already cover the needs of most businesses. Dense parameters can directly use the sparse/dense optimizers that TensorFlow supports natively.
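As an illustration of what a sparse optimizer on top of a hash table does, here is a minimal Python sketch of sparse Adagrad. It mirrors the update rule of TensorFlow 1.x's AdagradOptimizer (`accum += g * g`, `var -= lr * g / sqrt(accum)`, default initial accumulator 0.1), but the function name, the dict-based storage, and the merge-then-update structure are assumptions for illustration; the accumulator and parameter are updated in a single pass per row, in the spirit of the fusion described above.

```python
import math


def sparse_adagrad_update(table, accum, ids, grads, lr=0.1, initial_accumulator=0.1):
    """Apply Adagrad only to the rows touched in this batch (illustrative sketch).

    table: dict feature_id -> list[float], embedding rows updated in place
    accum: dict feature_id -> list[float], Adagrad slot created lazily
    ids/grads: per-example IDs and their gradient rows (IDs may repeat)
    """
    # 1. Merge gradients of duplicate IDs so each row is updated exactly once.
    merged = {}
    for fid, g in zip(ids, grads):
        if fid in merged:
            merged[fid] = [a + b for a, b in zip(merged[fid], g)]
        else:
            merged[fid] = list(g)
    # 2. Single pass per row: update the accumulator and the parameter together.
    for fid, g in merged.items():
        row = table[fid]
        acc = accum.setdefault(fid, [initial_accumulator] * len(row))
        for j, gj in enumerate(g):
            acc[j] += gj * gj
            row[j] -= lr * gj / math.sqrt(acc[j])
```

Merging duplicates first matters because the same ID often appears many times in one batch, and applying the update once per occurrence would change the accumulator differently from the dense equivalent.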

3.3.3 Communication between cards

During actual training, our processing flow is different for different types of features:

- Sparse features (ID features, large scale, stored in HashTable): because each card receives different input samples, the feature vectors for its sparse features may be stored on other GPUs. In the forward pass, each card first uses an inter-card AllToAll to send its ID features to their owning cards, partitioned by ID modulo. Each card then looks up the sparse feature vectors in its local GPUHashTable, and a second AllToAll returns the vectors for the IDs received in the first AllToAll to the cards that requested them. After these two AllToAll exchanges, each card has the feature vectors for the ID features of its own input samples. In the backward pass, another inter-card AllToAll partitions the gradients of the sparse parameters by ID modulo and sends them to the owning cards, where the sparse optimizer updates the locally stored parameters. The detailed process is shown in the following figure:

- Sparse features (small scale, stored in Variables): unlike the HashTable case, every GPU holds the full set of parameters, so model parameters can be looked up directly on the local card. In the backward pass, the gradients from all cards are gathered with an inter-card AllGather, averaged, and then passed to the optimizer to update the parameters.

- Dense features: every card also holds the full set of dense parameters, so training can read them directly on the local card. The dense gradients from all cards are then aggregated with an inter-card AllReduce, and the dense optimizer is executed.
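The two-AllToAll forward lookup for partitioned sparse parameters, and the AllReduce averaging used for replicated parameters, can be simulated on a single machine. This Python sketch is a hypothetical illustration: cards are plain list indices, `alltoall` and `allreduce_mean` only mimic the data movement of the real NCCL collectives, and the per-card `shards` dicts stand in for each card's GPUHashTable. The backward pass would reuse the routing of step 1 to send gradients to their owning cards.

```python
def alltoall(send):
    """Simulated AllToAll: send[src][dst] is what card src sends to card dst.

    Returns recv with recv[dst][src] == send[src][dst].
    """
    n = len(send)
    return [[send[src][dst] for src in range(n)] for dst in range(n)]


def sharded_lookup(ids_per_card, shards):
    """Forward lookup for ID-modulo-partitioned embeddings using two AllToAlls.

    ids_per_card[c]: feature IDs in card c's local batch
    shards[c]: dict feature_id -> vector, holding the IDs with id % n == c
    """
    n = len(shards)
    # Step 1: bucket each card's IDs by owner card (id % n) and exchange them.
    send_ids = [[[] for _ in range(n)] for _ in range(n)]
    for src, ids in enumerate(ids_per_card):
        for fid in ids:
            send_ids[src][fid % n].append(fid)
    recv_ids = alltoall(send_ids)
    # Step 2: each owner looks up the requested IDs locally and sends the
    # vectors back along the same path with a second AllToAll.
    send_vecs = [[[shards[owner][fid] for fid in recv_ids[owner][src]]
                  for src in range(n)] for owner in range(n)]
    recv_vecs = alltoall(send_vecs)
    # Step 3: restore each card's original ID order (buckets preserve order).
    out = []
    for src, ids in enumerate(ids_per_card):
        cursor = [0] * n
        vecs = []
        for fid in ids:
            owner = fid % n
            vecs.append(recv_vecs[src][owner][cursor[owner]])
            cursor[owner] += 1
        out.append(vecs)
    return out


def allreduce_mean(grads_per_card):
    """Simulated AllReduce for replicated parameters: every card gets the mean."""
    n = len(grads_per_card)
    mean = [sum(col) / n for col in zip(*grads_per_card)]
    return [list(mean) for _ in range(n)]
```

For example, with two cards where card 0 owns even IDs and card 1 owns odd IDs, a batch `[3, 4, 3]` on card 0 fetches ID 3 from card 1 and ID 4 locally, and the results come back in the original order.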

During the entire execution process, sparse parameters and dense parameters are all placed in GPU memory, model calculations are also all processed on GPU, and the communication bandwidth between GPU cards is fast enough, which can give full play to the powerful computing power of GPU.

To summarize here, the core difference between the Booster training architecture and the PS architecture in the CPU scenario is:

- Training mode: the PS architecture trains asynchronously, while the Booster architecture trains synchronously.

- Parameter distribution: under the PS architecture, model parameters are stored in PS memory. Under the Booster architecture, sparse parameters (HashTable) are partitioned across the eight GPUs of a single machine, and dense parameters (Variable) are replicated on every GPU. The Worker role in Booster therefore combines the functions of both the PS and Worker roles in the PS architecture.

- Communication mode: under the PS architecture, PS and Workers communicate over TCP (gRPC/Seastar); under the Booster architecture, Workers communicate over NVSwitch (NCCL), with 600GB/s of bidirectional bandwidth between any two GPUs. This is one of the reasons for Booster's large improvement in training speed.

Because each card receives different input data and model parameters are both partitioned and replicated across cards, the Booster architecture combines model parallelism with data parallelism. In addition, the NVIDIA A100 requires CUDA 11.0 or later, and among TensorFlow 1.x releases only NVIDIA's NV 1.15.4 build supports CUDA 11.0. Since most of Meituan's business scenarios still use TensorFlow 1.x, all of our modifications were developed on top of NV 1.15.4.

The above is an introduction to the overall system architecture and internal execution process of Booster. The following mainly introduces some performance optimization work we have done based on the initially implemented Booster architecture.

4 System performance optimization

After implementing the first version of the system according to the above design, we found that end-to-end performance fell short of expectations: GPU SM utilization (the SM Activity metric) was only 10% to 20%, giving little advantage over the CPU. To analyze the architecture's bottlenecks, we used tools such as NVIDIA Nsight Systems (nsys below), Perf, and uPerf, and identified several bottlenecks in the data, computing, and communication layers, each of which we optimized accordingly. Below, using a Meituan Waimai recommendation model as an example, we introduce our performance optimization work layer by layer: the data layer, the computing layer, and the communication layer of the GPU architecture.

4.1 Data Layer

As mentioned above, recommendation models have large sample volumes and relatively simple structures, so data I/O itself is the bottleneck. When the data I/O previously handled by dozens of CPU servers has to be done on a single GPU server, the I/O pressure becomes even greater. Let's first look at the sample data flow in the current system, shown in the following figure:

Core process: the data distribution process reads HDFS sample data (TFRecord format) into memory over the network and then passes it to the TensorFlow training process through shared memory. After receiving the sample data, the TensorFlow training process runs the native TensorFlow feature parsing logic and, once the feature data is ready, copies it to GPU memory via MemcpyH2D. Through module-level stress testing, we found major performance problems in several stages: sample pulling in the data distribution layer, feature parsing in the TensorFlow layer, and the MemcpyH2D of feature data to the GPU (the yellow stages in the figure). The following subsections describe the optimizations we made in each of these areas.

4.1.1 Sample Pull Optimization

Sample pulling and batch assembly are handled by the data distribution process. Our main optimizations here were as follows. First, we used numactl to bind each data distribution process to its own NUMA node, avoiding cross-NUMA data transfers. Second, we expanded data download from a single NIC to multiple NICs, increasing download bandwidth. Finally, we replaced the single shared memory channel between the data distribution process and the TensorFlow process with an independent shared memory region per GPU, which avoids the memory bandwidth contention caused by a single shared region, and we implemented zero-copy access to the input data during feature parsing inside TensorFlow.

4.1.2 Feature Parsing Optimization

At present, the sample data of most Meituan businesses is still in TFRecord format, which is essentially ProtoBuf (PB). PB deserialization is very CPU-intensive, and the ReadVarint64Fallback method stands out in CPU usage. The actual profiling results are as follows:

The reason is that training samples in CTR scenarios usually contain a large number of int64 features, which PB stores as Varint64 data and parses with the ReadVarint64Fallback method. A plain int64 always occupies 8 bytes, whereas a Varint64 uses a variable number of bytes depending on the value's magnitude. When PB parses Varint data, it must first determine the length of the current value: each byte stores 7 bits of data in its lower bits, and its highest bit is a marker indicating whether the next byte belongs to the same value. If the highest bit of the current byte is 0, the current Varint ends at this byte. Most ID features in our business scenarios are hashed values, whose Varint64 encodings are relatively long. Parsing them therefore requires many checks for whether the value has ended, plus many shifts and concatenations to assemble the final value, which leads to heavy branch prediction and many temporary variables on the CPU and greatly hurts performance. The following is the parsing flow chart for a 4-byte Varint:
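For reference, the byte-at-a-time Varint logic described above looks roughly like this in Python. It is an illustrative sketch of the format, not ProtoBuf's C++ code: note the data-dependent branch on the marker bit for every byte. A hashed 64-bit ID with its top bit set needs 10 bytes, versus 8 for a plain int64 (ProtoBuf likewise encodes negative int64 values as 10-byte two's-complement varints).

```python
def encode_varint(value: int) -> bytes:
    """Base-128 varint, as used by ProtoBuf, for an unsigned 64-bit value."""
    if not 0 <= value < 1 << 64:
        raise ValueError("value must fit in 64 unsigned bits")
    out = bytearray()
    while True:
        low7 = value & 0x7F
        value >>= 7
        if value:
            out.append(low7 | 0x80)   # highest bit 1: more bytes follow
        else:
            out.append(low7)          # highest bit 0: last byte
            return bytes(out)


def decode_varint(buf: bytes, pos: int = 0):
    """Byte-at-a-time decoder with one data-dependent branch per byte.

    Returns (value, position after the varint).
    """
    result, shift = 0, 0
    while True:
        byte = buf[pos]                     # IndexError if the input is truncated
        result |= (byte & 0x7F) << shift    # append the next 7 payload bits
        pos += 1
        if (byte & 0x80) == 0:              # marker bit clear: value ends here
            return result, pos
        shift += 7
        if shift >= 64:
            raise ValueError("varint longer than 10 bytes")


hashed_id = (1 << 63) | 12345
print(len(encode_varint(hashed_id)))   # 10
```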

This processing flow is well suited to batch optimization with SIMD instructions. Taking a 4-byte Varint as an example, our optimization consists of two steps:

- SIMD search for the terminating byte: use a SIMD instruction to AND each byte of the Varint data with 0x80 and find the first byte whose result is 0. That byte is where the current Varint ends.

- SIMD processing of the Varint: in principle, after SIMD instructions clear the highest bit of each byte, shifting the bytes right by 3/2/1/0 bits according to their position (each byte moves right by its index in bits, closing the gaps left by the cleared marker bits) and combining them would yield the final int value, but SIMD has no such per-byte variable shift instruction. We therefore achieve the same effect by processing the upper 4 bits and the lower 4 bits of each byte separately with SIMD instructions: the high and low 4 bits of the Varint data are processed into int\_h4 and int\_l4, respectively, and an OR of the two yields the final int value. The specific optimization process is shown in the following figure (4 bytes of data):
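The two steps can be emulated on a single 32-bit integer to show why they remove the per-byte branch. This Python sketch is a SWAR-style ("SIMD within a register") stand-in for the SSE version, not a reproduction of it: the article's high/low 4-bit formulation is not shown, and the function name and mask constants are illustrative. Step 1 uses the 0x80 marker mask; step 2 shifts byte k right by k bits with one mask and shift per byte position.

```python
MSB_MASK = 0x80808080      # the marker (highest) bit of each of 4 bytes
PAYLOAD_MASK = 0x7F7F7F7F  # the 7 payload bits of each of 4 bytes


def decode_varint32_swar(buf: bytes):
    """Decode a Varint of at most 4 bytes without a per-byte branch.

    Returns (value, length). Raises ValueError when no terminating byte is found
    within the first 4 bytes; a real parser would fall back to the scalar path.
    """
    word = int.from_bytes(buf[:4].ljust(4, b"\x00"), "little")
    # Step 1: find the first byte whose marker bit is 0 (byte & 0x80 == 0).
    stop_bits = ~word & MSB_MASK
    if stop_bits == 0:
        raise ValueError("no terminating byte within 4 bytes")
    lowest = stop_bits & -stop_bits           # isolate the first terminator's marker bit
    length = lowest.bit_length() // 8         # bit 7 -> 1 byte, bit 15 -> 2 bytes, ...
    if length > len(buf):
        raise ValueError("truncated varint")
    # Step 2: drop bytes past the terminator, clear marker bits, then shift
    # byte k right by k bits so the 7-bit groups become contiguous.
    w = word & ((1 << (8 * length)) - 1) & PAYLOAD_MASK
    value = (w & 0x7F) | ((w & 0x7F00) >> 1) | ((w & 0x7F0000) >> 2) | ((w & 0x7F000000) >> 3)
    return value, length


print(decode_varint32_swar(b"\xac\x02"))   # (300, 2)
```

All four byte positions are processed with the same fixed sequence of operations regardless of where the value ends, which is the property a SIMD implementation exploits.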

For Varint64 data, we split it into two Varint values and process each of them as above. With these two SIMD optimization steps, sample parsing speed improved significantly: end-to-end GPU training speed increased while CPU usage dropped by 15%. We mainly used the SSE instruction set; we also tried AVX and other wider instruction sets, but the gains were not significant, so we did not adopt them. In addition, SIMD instructions can significantly lower CPU frequency on older machines, which is why the upstream community has not adopted this optimization; the CPUs in our GPU machines are relatively new, so we can use SIMD optimization.

4.1.3 MemcpyH2D pipeline

After the samples are parsed into feature data, the feature data must be copied to the GPU for model computation, which requires a CUDA MemcpyH2D operation. Profiling this stage with nsys showed that the GPU spends a lot of time idle during execution, waiting for feature data to be copied over before it can run the model, as shown in the following figure:

In a GPU system, data must be moved in advance into the GPU memory closest to the GPU's processors for the GPU's computing power to be fully used. Building on TensorFlow's prefetch functionality, we implemented a GPU version of PipelineDataset that copies data into GPU memory before it is needed for computation. Note that when copying from CPU memory to GPU memory, the CPU-side buffers must use pinned memory rather than the default pageable memory, which speeds up MemcpyH2D.
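The idea behind the pipelined copy is a bounded producer/consumer queue: while batch t is being computed, batch t+1 is already being staged. This Python sketch shows only that overlap pattern under stated assumptions: `copy_to_device` and `compute` are hypothetical callables standing in for a pinned-memory MemcpyH2D and the training step, and the `depth` parameter is an illustrative prefetch depth, not the PipelineDataset interface.

```python
import queue
import threading


def prefetch_pipeline(batches, copy_to_device, compute, depth=2):
    """Overlap host-to-device copies with computation.

    A background thread stages up to `depth` batches ahead of the consumer,
    so the copy of batch t+1 can proceed while batch t is being computed.
    """
    staged = queue.Queue(maxsize=depth)
    done = object()                                  # sentinel marking the end of the stream
    errors = []

    def producer():
        try:
            for batch in batches:
                staged.put(copy_to_device(batch))    # blocks when `depth` batches are waiting
        except BaseException as exc:                 # surface copy errors to the caller
            errors.append(exc)
        finally:
            staged.put(done)

    worker = threading.Thread(target=producer, daemon=True)
    worker.start()
    results = []
    while True:
        item = staged.get()
        if item is done:
            break
        results.append(compute(item))
    worker.join()
    if errors:
        raise errors[0]
    return results


print(prefetch_pipeline(range(5), lambda b: b * 10, lambda x: x + 1))   # [1, 11, 21, 31, 41]
```

The bounded queue caps how much staged data sits in device memory at once, which is the same trade-off a real prefetch buffer size controls.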

4.1.4 Hardware Tuning

During data-layer performance optimization, the server, network, and operating system groups of Meituan's basic R&D platform also helped us make related adjustments:

- Network transmission: to reduce network protocol stack overhead and improve data copying efficiency, we optimized the NIC configuration, enabling features such as LRO (Large Receive Offload), TC Flower hardware offload, and Tx-Nocache-Copy, which increased network bandwidth by 17%.

- CPU performance: profiling showed that memory latency and bandwidth were bottlenecks. We therefore tried three NPS configurations and, considering the business scenario and NUMA characteristics, chose NPS2. Combined with other BIOS settings (such as APBDIS and P-states), this reduced memory latency by 8% and increased memory bandwidth by 6%.

With these optimizations, peak network bandwidth increased by 80%, and the GPU's H2D bandwidth increased by 86% at the bandwidth the business requires. Data parsing also gained more than 10% in performance.

After these data-layer optimizations to sample pulling, feature parsing, MemcpyH2D, and hardware, the end-to-end training speed of the Booster architecture increased by 40%, and its training cost-effectiveness reached 1.4 times that of CPU training. The data layer is no longer the performance bottleneck of the current architecture.

4.2 Computing Layer

4.2.1 Embedding pipeline

When optimizing TensorFlow training performance for CPU scenarios, we had already implemented Embedding Pipeline [1]: the full computation graph is split into two subgraphs, the Embedding Graph (EG) and the Main Graph (MG), which execute asynchronously and independently so that their execution overlaps (the split can be transparent to users). EG covers extracting Embedding keys from samples, querying and assembling Embedding vectors, and updating Embedding vectors; MG covers the dense sub-network computation, gradient computation, and dense parameter updates.

The two subgraphs interact as follows: EG passes Embedding vectors to MG (from MG's perspective, it reads the values from a dense Variable), and MG passes the gradients of the Embedding parameters back to EG. Both exchanges are expressed as TensorFlow computation graphs. We use two Python threads and two TensorFlow Sessions to execute the two graphs concurrently, so that the two stages overlap and training throughput increases.
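The thread structure of this split can be sketched in plain Python, with queues standing in for the Variable and gradient hand-offs between the two TensorFlow Sessions. Everything here is an illustrative assumption: the callables `lookup`, `apply_grads`, and `train_step` are hypothetical, and the fixed bound of `staleness` steps by which EG may run ahead is our choice, since the article does not specify how far EG runs ahead of MG.

```python
import queue
import threading


def run_embedding_pipeline(batches, lookup, apply_grads, train_step, staleness=1):
    """Two-thread sketch of the EG/MG split.

    The EG thread owns the embedding table: it looks up embeddings for upcoming
    batches and applies embedding gradients returned by MG. The MG thread runs
    the dense part and sends embedding gradients back. EG may look up batch
    t + staleness before the gradients of batch t have been applied.
    """
    to_mg = queue.Queue(maxsize=staleness)   # EG -> MG: (batch, embeddings)
    to_eg = queue.Queue()                    # MG -> EG: embedding gradients

    def eg_loop():
        in_flight = 0
        for batch in batches:
            to_mg.put((batch, lookup(batch)))
            in_flight += 1
            while in_flight > staleness:      # bound how far EG runs ahead
                apply_grads(to_eg.get())
                in_flight -= 1
        to_mg.put(None)                       # end of data
        while in_flight:                      # apply the remaining gradients
            apply_grads(to_eg.get())
            in_flight -= 1

    def mg_loop():
        while True:
            item = to_mg.get()
            if item is None:
                break
            batch, embeddings = item
            to_eg.put(train_step(batch, embeddings))

    threads = [threading.Thread(target=eg_loop), threading.Thread(target=mg_loop)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
```

Because only the EG thread reads and writes the embedding table, no locking is needed on it; the cost of the overlap is that lookups may not yet reflect the most recent gradients.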

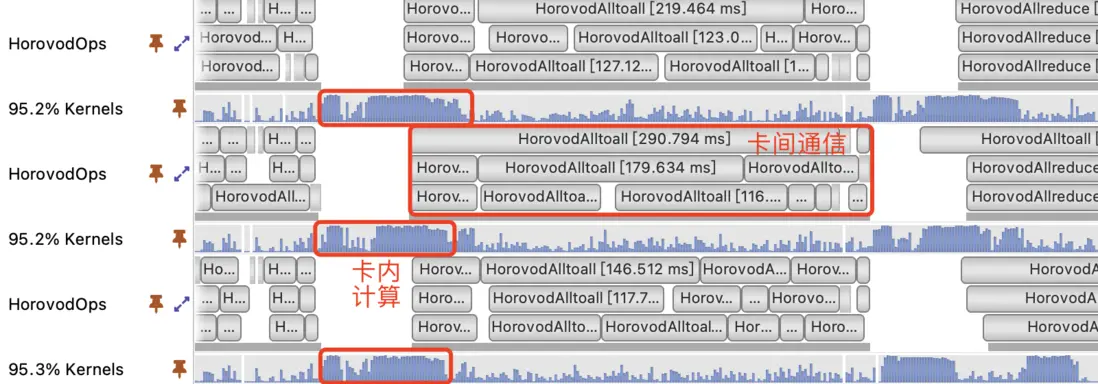

We implemented this process in the GPU architecture as well, adding inter-GPU synchronization to it. The AllToAll communication for large-scale sparse features and the AllToAll communication of their backward gradients are executed in EG, while the inter-GPU AllGather of backward gradients for ordinary sparse features and the inter-GPU AllReduce of backward gradients for dense parameters are executed in MG. Note that in the GPU scenario, EG and MG launch their CUDA kernels on the same GPU stream. We tried running EG and MG on separate GPU streams, but performance got worse; the underlying cause relates to CUDA's low-level implementation, and the issue itself is still unresolved.

4.2.2 Operator Optimization and XLA

Compared with the optimization at the CPU level, the optimization on the GPU is more complicated. First of all, for TensorFlow operators, there are some implementations without GPU. When these CPU operators are used in the model, the data between memory and video memory will be copied back and forth with the upstream and downstream GPU operators, which affects the overall performance. The frequently used and influential operators are implemented on the GPU. In addition, for the TensorFlow generation framework, the operator granularity is very fine, which can facilitate users to flexibly build various complex models, but this is a disaster for GPU processors, and a large amount of Kernel Launch and memory access overhead make it impossible to Make full use of GPU computing power. For optimization on GPU, there are usually two directions, manual optimization and compiled optimization. In terms of manual optimization, we reimplemented some commonly used operators and layers (Unique, DynamicPartition, Gather, etc.).

Take the Unique operator as an example. Native TensorFlow's Unique requires the output elements to appear in the order of their first occurrence in the input. Our scenarios do not need this restriction, so we modified the GPU implementation of Unique to drop it, eliminating the extra GPU kernels that were executed only to preserve output order.
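The cost of the ordering guarantee shows up even in a pure-Python sketch. `unique_sorted` mirrors the structure a sort-based GPU Unique typically follows (argsort, mark value boundaries, assign ids by scan) and returns values in ascending order; `unique_first_occurrence` needs an extra pass over the output to reproduce `tf.unique`'s first-occurrence order. Both satisfy `y[idx[i]] == x[i]`. This is an illustration, not Meituan's CUDA implementation.

```python
def unique_sorted(x):
    """Sort-based unique: returns (y, idx) with y in ascending order."""
    order = sorted(range(len(x)), key=lambda i: x[i])   # stable argsort
    y, idx = [], [0] * len(x)
    for pos, i in enumerate(order):
        if pos == 0 or x[i] != x[order[pos - 1]]:       # boundary between runs
            y.append(x[i])
        idx[i] = len(y) - 1
    return y, idx

def unique_first_occurrence(x):
    """Same result reordered to tf.unique semantics: the extra work that
    an order-insensitive Unique can skip."""
    y, idx = unique_sorted(x)
    first = [len(x)] * len(y)                 # first input position of each value
    for i, k in enumerate(idx):
        first[k] = min(first[k], i)
    by_first = sorted(range(len(y)), key=lambda k: first[k])
    new_id = [0] * len(y)
    for new, old in enumerate(by_first):
        new_id[old] = new
    return [y[k] for k in by_first], [new_id[k] for k in idx]
```

For `x = [7, 3, 7, 9, 3]`, `unique_sorted` gives `([3, 7, 9], [1, 0, 1, 2, 0])`, while `unique_first_occurrence` gives `([7, 3, 9], [0, 1, 0, 2, 1])`.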

For compiler optimization, we currently rely mainly on XLA[9], provided by the TensorFlow community, for automatic optimization. Enabling XLA in native TensorFlow 1.15 normally yields a 10-20% end-to-end speedup. However, XLA handles operators with dynamic shapes poorly, and such operators are very common in recommendation models. As a result, XLA acceleration fell short of expectations or even slowed training down, so we applied the following mitigations:

- Local optimization: for the dynamic-shape operators we introduced manually (such as Unique), we mark the subgraphs containing them and exclude them from XLA compilation, so that XLA only optimizes subgraphs it can reliably accelerate.

- OOM safeguard: XLA caches compilation results keyed on operator type, input types, shapes, and other information to avoid recompiling. However, because of the sparse scenario and the specifics of our GPU architecture, operators such as Unique and DynamicPartition naturally have dynamic output shapes. These operators, and the operators downstream of them, keep missing the XLA cache and being recompiled. New cache entries keep accumulating while old ones are never released, eventually exhausting CPU memory (OOM). We implemented an LRUCache inside XLA that actively evicts old XLA cache entries to avoid OOM.

- Const Memcpy elimination: when XLA uses TF\_HLO to rewrite TensorFlow operators, it marks some data that is fixed at compile time as Const. However, the outputs of these Const operators can only be defined on the host side, so sending them to the device requires an added MemcpyH2D. This occupies TensorFlow's existing H2D stream and delays prefetching sample data to the GPU. Since XLA's Const outputs are fixed at compile time, there is no need to run MemcpyH2D at every step. We cache these outputs on the device side and later read them directly from the cache, avoiding the redundant MemcpyH2D.
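The LRU eviction behind the OOM safeguard can be sketched as below. This is a hypothetical Python model of a compilation cache keyed on (operator type, dtype, shape), not XLA's actual C++ cache; `compile_fn` stands in for an XLA compilation.

```python
from collections import OrderedDict

class LRUCompileCache:
    """Bounded compile cache: least-recently-used entries are evicted, so
    an unbounded stream of new dynamic shapes cannot grow memory forever."""

    def __init__(self, capacity, compile_fn):
        self.capacity = capacity
        self.compile_fn = compile_fn
        self._entries = OrderedDict()
        self.compilations = 0

    def get(self, op_type, dtype, shape):
        key = (op_type, dtype, tuple(shape))
        if key in self._entries:
            self._entries.move_to_end(key)          # mark as most recently used
            return self._entries[key]
        executable = self.compile_fn(op_type, dtype, shape)
        self.compilations += 1
        self._entries[key] = executable
        if len(self._entries) > self.capacity:
            self._entries.popitem(last=False)       # evict least recently used
        return executable

    def __len__(self):
        return len(self._entries)
```

Feeding it the ever-changing output shapes of a Unique-like operator keeps the cache at `capacity` entries; the trade-off is that a shape evicted earlier is recompiled if it reappears.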

These XLA changes are essentially bug fixes: at present, they allow XLA to be enabled normally in the GPU scenario, improving training speed by 10-20%. Notably, for the compilation of dynamic-shape operators, the Deep Learning Compiler team of Meituan's Basic R&D Platform / Machine Learning Platform has already developed a thorough solution, and we will work with them to solve this problem in the future.

After the Embedding pipeline and the XLA-related optimizations of the computing layer, the end-to-end training speed of the Booster architecture increased by 60%, and the cost-effectiveness of single-machine eight-card GPU training reached 2.2 times that of CPU training at equal resource cost.

4.3 Communication layer

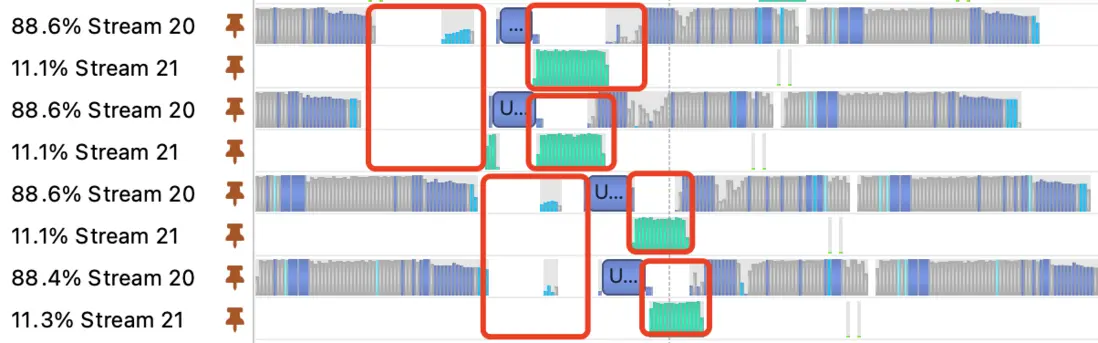

In the process of single-machine multi-card training, we found through Nsight Systems analysis that the time-consuming ratio of inter-card communication is very high, and the GPU usage rate is also very low during this period, as shown in the following figure:

Inter-card communication is therefore a key bottleneck limiting training performance. After breaking down the communication process, we found that the negotiation time of inter-card communication (AllToAll, AllReduce, AllGather, etc.) is much longer than the actual data transmission time.

To analyze the root cause, take AllToAll, which handles the communication of large-scale sparse parameters, as an example. Using Nsight Systems, we observed that the long negotiation time is mainly caused by the operator on one card starting relatively late. Because TensorFlow does not schedule operators in a strict order, the embedding\_lookup operator of the same feature actually executes at different points in time on different cards: the embedding\_lookup executed first on one card may be executed last on another. We therefore suspected that inconsistent operator scheduling across cards makes each card initiate communication at a different time, ultimately causing long negotiation times. Several sets of simulation experiments confirmed that operator scheduling is indeed the cause. The most direct fix would be to modify the core scheduling algorithm of the TensorFlow computation graph, but this has long been a hard problem in academia. We approached it from a different angle and mitigated the problem by fusing key operators. Based on profiling statistics, we selected the operators related to HashTable and Variable.
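The scheduling effect can be reproduced with a toy timing model. Assumptions (hypothetical, not measured): lookups cost one time unit each, each card runs its lookups serially, and a collective can start only after every card has issued it, so each card idles until the latest one arrives. Fusing the lookups into one collective removes the order mismatch.

```python
def issue_times(order, lookup_cost=1):
    """A card runs its embedding_lookups serially in `order` and issues the
    collective for each feature as soon as that lookup finishes."""
    return {feature: (i + 1) * lookup_cost for i, feature in enumerate(order)}

def total_wait(per_card):
    """A collective starts only when every card has issued it; each card
    idles from its own issue time until that start time."""
    features = per_card[0].keys()
    start = {f: max(card[f] for card in per_card) for f in features}
    return sum(start[f] - card[f] for card in per_card for f in features)

# Unfused: three per-feature collectives, scheduled in opposite orders on two cards.
unfused = [issue_times(["f0", "f1", "f2"]), issue_times(["f2", "f1", "f0"])]
# Fused: one merged lookup and one collective, issued after the same work on both cards.
fused = [{"fused": 3}, {"fused": 3}]

print(total_wait(unfused), total_wait(fused))  # 4 0
```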

4.3.1 HashTable-related operator fusion

We designed and implemented a graph optimization pass that automatically merges the mergeable HashTables in the graph together with their corresponding embedding\_lookup processes. The merging strategy mainly merges HashTables with the same embedding\_size into one. To avoid ID collisions between the original features after merging, we introduced automatic unified feature encoding: different offsets are added to different original features, assigning them to different feature domains, so that features are encoded uniformly at training time.
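The merge plan and unified encoding can be sketched as below. Grouping by embedding\_size follows the text; the concrete offset, which places a feature-domain index in the bits above an assumed 48-bit raw-ID range, is a hypothetical choice and not necessarily Booster's.

```python
from collections import defaultdict

ID_BITS = 48  # hypothetical: raw feature IDs are assumed to fit in 48 bits

def plan_fusion(embedding_sizes):
    """Group features with the same embedding_size into one merged table and
    give each feature its own domain index inside that table.
    embedding_sizes: {feature_name: embedding_size}
    returns: {feature_name: (embedding_size, domain)}"""
    groups = defaultdict(list)
    for name in sorted(embedding_sizes):
        groups[embedding_sizes[name]].append(name)
    return {name: (dim, domain)
            for dim, names in groups.items()
            for domain, name in enumerate(names)}

def encode(plan, name, raw_id):
    """Map (feature, raw_id) to (merged table, unified key).
    The domain acts as an offset, so equal raw IDs from different
    features no longer collide in the merged table."""
    if not 0 <= raw_id < (1 << ID_BITS):
        raise ValueError(f"raw id {raw_id} does not fit in {ID_BITS} bits")
    dim, domain = plan[name]
    return dim, (domain << ID_BITS) | raw_id
```

With five features of sizes 16, 16, 16, 8, 8 the plan yields two merged tables, and ID 42 of two different size-16 features maps to two different keys in the same table.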

We tested this on an actual business model. The graph optimization merged 38 HashTables into 2 and 38 embedding\_lookup calls into 2, which reduced the number of embedding\_lookup-related operators in EmbeddingGraph by 90% and the number of inter-card synchronous communications by 90%. In addition, after the operators are merged, the GPU operators inside embedding\_lookup are also merged, which reduces the number of kernel launches and makes EmbeddingGraph execute faster.

4.3.2 Variable-related operator fusion

Following the same idea as HashTable fusion, we observed that business models usually contain dozens to hundreds of native TensorFlow Variables, all of which must be synchronized between cards during training. Likewise, having too many Variables lengthens the inter-card negotiation time. We use Concat/Split operators to automatically merge all Trainable Variables, so that the backward pass of the entire MG produces only a few gradient Tensors, which greatly reduces the number of inter-card synchronizations. In addition, after Variable Fusion the number of operators actually executed in the optimizer drops sharply, which also speeds up the execution of the computation graph itself.
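The Concat/Split idea can be illustrated with a toy, single-process model in which each gradient is a flat list and AllReduce is an elementwise sum across cards; the function names are hypothetical. Fusing produces the same reduced gradients with one collective instead of one per Variable.

```python
def concat(tensors):
    """Flatten several gradient tensors into one buffer, remembering sizes."""
    sizes = [len(t) for t in tensors]
    return [x for t in tensors for x in t], sizes

def split(flat, sizes):
    """Inverse of concat: cut the buffer back into per-Variable gradients."""
    out, start = [], 0
    for n in sizes:
        out.append(flat[start:start + n])
        start += n
    return out

def allreduce_sum(per_card):
    """Toy AllReduce: elementwise sum over cards."""
    return [sum(vals) for vals in zip(*per_card)]

def sync_unfused(grads_per_card):
    """One collective per Variable."""
    n_vars = len(grads_per_card[0])
    reduced = [allreduce_sum([card[v] for card in grads_per_card]) for v in range(n_vars)]
    return reduced, n_vars

def sync_fused(grads_per_card):
    """Concat every card's gradients, run a single collective, then Split."""
    flats, sizes = zip(*(concat(card) for card in grads_per_card))
    return split(allreduce_sum(flats), sizes[0]), 1
```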

Note that TensorFlow has two kinds of Variables. One is the Dense Variable, all of whose parameter values participate in training at every Step, such as the weights of an MLP. The other is used specifically for embedding\_lookup: only some of its values participate in training at each Step, and we call it a Sparse Variable. For the former, merging Variables does not affect the algorithm's effect. For the latter, the backward gradient is an IndexedSlices object, and inter-card synchronization uses AllGather by default. If the business model optimizes Sparse Variables with a Lazy optimizer, i.e., each Step only updates certain rows of the Variable, then merging Sparse Variables turns the backward gradient from an IndexedSlices object into a Tensor, and inter-card synchronization becomes an AllReduce, which may affect the algorithm's effect. For this case, we provide a switch that lets the business decide whether to merge Sparse Variables. In our measurements on one recommendation model, merging Sparse Variables improved training performance by 5-10%, while the impact on actual business metrics stayed within one thousandth.
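Why densifying a sparse gradient can change results under a Lazy optimizer is easiest to see on a toy example. The sketch uses plain momentum SGD as a stand-in for a lazy optimizer (such as LazyAdam), with one scalar per embedding row; all names and hyperparameters are hypothetical.

```python
def densify(indices, values, num_rows):
    """Turn an IndexedSlices-style (indices, values) gradient into a dense one;
    duplicate indices are summed."""
    dense = [0.0] * num_rows
    for i, v in zip(indices, values):
        dense[i] += v
    return dense

def momentum_update(params, velocity, grad_rows, lr=0.1, beta=0.9):
    """Lazy semantics: only rows present in grad_rows are touched."""
    for row, g in grad_rows.items():
        velocity[row] = beta * velocity[row] + g
        params[row] -= lr * velocity[row]

def step(params, velocity, indices, values, merged):
    if merged:   # merged Sparse Variable: the gradient arrives as a dense Tensor
        grad_rows = dict(enumerate(densify(indices, values, len(params))))
    else:        # IndexedSlices: only the looked-up rows receive an update
        grad_rows = {}
        for i, v in zip(indices, values):
            grad_rows[i] = grad_rows.get(i, 0.0) + v
    momentum_update(params, velocity, grad_rows)
    return params
```

With `step([1.0, 1.0], [0.5, 0.5], [0], [1.0], merged=False)` row 1 stays at 1.0, whereas with `merged=True` its stale momentum still moves it. This is one way merging can shift the algorithm's behavior, which is why the merge sits behind a switch.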

These two operator-fusion optimizations not only improve inter-card communication performance but also improve intra-card computing performance to some extent. With both in place, the end-to-end training speed of the GPU architecture increased by 85% without affecting the effect of the business algorithms.

4.4 Performance metrics

After completing the performance optimizations of the data layer, computing layer, and communication layer, the GPU architecture achieves 2-4 times the cost-effectiveness of our TensorFlow[3] CPU scenario (the gain differs across business models). Using a Meituan Takeaway recommendation model, we compared the training performance of native TensorFlow 1.15 and our optimized TensorFlow 1.15 on a single GPU node (one A100 machine with eight cards) and on a CPU cluster of the same cost. The specific data are as follows:

The training throughput of our optimized TensorFlow GPU architecture is more than 3 times that of native TensorFlow on GPU and more than 4 times that of our optimized TensorFlow in the CPU scenario.

Note: Native TensorFlow uses tf.Variable as the parameter storage for Embedding.

5 Business deployment

To deploy the Booster architecture in business production, good system performance alone is not enough; we also have to ensure the completeness of the training ecosystem and the quality of the trained models.

5.1 Completeness

A complete model training experiment usually requires running model evaluation (Evaluate) or model prediction (Predict) tasks in addition to the training task. We encapsulate the training architecture following the TensorFlow Estimator paradigm, so that one set of user code supports Train, Evaluate, and Predict tasks in both GPU and CPU scenarios in a unified way, with switches to move between them. Users only need to focus on developing the model code itself. We have encapsulated all architectural changes in the engine, so users can migrate from the CPU scenario to the GPU architecture with a single line of code:

`tf.enable_gpu_booster()`

In actual business scenarios, users usually run in train\_and\_evaluate mode to evaluate the model while training. After switching to the Booster architecture, training runs so fast that Evaluate can no longer keep up with the rate at which training writes checkpoints. Building on the GPU training architecture, we support running Evaluate on GPU: a business can request one A100 GPU for Evaluate, and evaluation on a single GPU is 40 times faster than a single Evaluate process in the CPU scenario. We also support running Predict on GPU: Predict on a single eight-card machine is 3 times as fast as on CPU at the same cost.

In addition, we provide more complete options for task resource configuration. Besides single-machine eight-card tasks (an A100 machine can be configured with at most 8 cards), we support single-machine single-card, dual-card, and four-card tasks, and we have made Checkpoints interchangeable among the single-card, dual-card, four-card, eight-card, and CPU PS architectures. Users can switch freely between these training modes and resume training from checkpoints, which makes it easy to choose a reasonable resource type and amount for running experiments and models.
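One way checkpoints can stay portable across card counts is sketched below. It assumes, hypothetically, that each card owns the embedding rows whose ID satisfies `id % num_cards == card`, and that a checkpoint is stored as a single ID-keyed table that can be re-partitioned for any card count (a CPU PS corresponds to `num_cards = 1` here). The article does not describe Booster's actual checkpoint format.

```python
def shard_of(feature_id, num_cards):
    """Hypothetical ownership rule: rows are hash-partitioned by ID."""
    return feature_id % num_cards

def save(shards):
    """Merge per-card {id: vector} shards into one card-count-independent table."""
    table = {}
    for shard in shards:
        table.update(shard)
    return table

def load(table, num_cards):
    """Re-partition an ID-keyed table for a (possibly different) number of cards."""
    shards = [{} for _ in range(num_cards)]
    for fid, vec in table.items():
        shards[shard_of(fid, num_cards)][fid] = vec
    return shards
```

Training on eight cards, saving, and loading onto two cards then reproduces the same table with each row on the card the new layout expects.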

5.2 Training effect

Compared with CPU training in the asynchronous PS/Worker mode, single-machine multi-card training keeps all cards fully synchronized, which avoids the negative effect of delayed gradient updates in asynchronous training on model quality. However, in synchronous mode the actual batch size of each iteration is the sum of the samples on all cards, and to make full use of the A100's computing power we raise the per-card batch size (the number of samples per iteration) as far as possible. As a result, the actual training batch size (10,000 to 100,000) is much larger than in the asynchronous PS/Worker mode (1,000 to 10,000). We therefore face the problem of hyperparameter tuning for large-batch training [26, 27]: with the number of epochs fixed, enlarging the batch size reduces the number of effective parameter updates, which may degrade model quality.
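The update-count argument can be written out. Let K be the number of cards, b the per-card batch size, N the number of training samples, and E the number of epochs; in the asynchronous mode each worker batch triggers one update, so B is the worker batch there. The numbers below are illustrative picks from the batch-size ranges above with N = 10 billion samples, not measurements.

```latex
% K cards, per-card batch b, N training samples, E epochs
B_{\mathrm{sync}} = K\,b, \qquad S = \frac{E\,N}{B}

% Illustrative values (not measurements): N = 10^{10}, E = 1
B = 5 \times 10^{3} \;\Rightarrow\; S = \frac{10^{10}}{5 \times 10^{3}} = 2 \times 10^{6}
B = 5 \times 10^{4} \;\Rightarrow\; S = \frac{10^{10}}{5 \times 10^{4}} = 2 \times 10^{5}
```

A tenfold larger batch means a tenfold smaller number of parameter updates per epoch.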

We follow the Linear Scaling Rule [28] to guide learning-rate adjustment: if the training batch size is N times that of the PS/Worker mode, the learning rate can also be scaled up N times. This method is simple to apply and works reasonably well in practice. Note, however, that if the original learning rate is already very aggressive, the learning rate for large-batch training should be scaled up less, or more sophisticated strategies such as learning rate Warmup [29] should be used. We will explore hyperparameter optimization in more depth in future work.
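A minimal sketch of the schedule, assuming a linear warmup from the base learning rate to the scaled one (the gradual warmup described by Goyal et al.). The `max_scale` cap is a hypothetical knob for the case where the original learning rate is already aggressive; it is not a documented Booster option.

```python
def scaled_learning_rate(base_lr, base_batch, new_batch, step,
                         warmup_steps=0, max_scale=None):
    """Learning rate for large-batch training.

    Linear Scaling Rule: lr = base_lr * (new_batch / base_batch).
    max_scale (hypothetical) caps the multiplier.
    warmup_steps > 0 ramps linearly from base_lr to the target.
    """
    scale = new_batch / base_batch
    if max_scale is not None:
        scale = min(scale, max_scale)
    target = base_lr * scale
    if step < warmup_steps:
        return base_lr + (target - base_lr) * step / warmup_steps
    return target
```

Moving from a batch of 5,000 to 50,000 scales a base rate of 1e-3 to 1e-2; with `warmup_steps=1000`, step 500 sits halfway at 5.5e-3.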

6 Summary and Outlook

In the training scenario of the Meituan recommendation system, as models grow more complex, the marginal benefit of optimizing on the CPU keeps diminishing. Meituan developed the Booster GPU training architecture based on its deeply customized TensorFlow and NVIDIA HugeCTR. The overall design fully considers the characteristics of algorithms, architecture, and new hardware, and is deeply optimized from multiple perspectives including data, computing, and communication. Compared with the previous CPU tasks, cost-effectiveness improves by 2 to 4 times. In terms of functionality and completeness, it supports TensorFlow's various training interfaces (Train/Evaluate/Predict, etc.) and supports importing models between CPU and GPU in both directions. In terms of ease of use, a TensorFlow CPU task can be migrated to the GPU architecture with just one line of code. Booster is already in large-scale production use in the Meituan Takeaway recommendation scenario. In the future, we will roll it out across the Daojia Search and Recommendation Technology Department and all of Meituan's business lines.

Of course, Booster on NVIDIA A100 single-machine multi-card setups still has much room for optimization: sample compression, serialization, and feature parsing at the data level; multi-graph operator scheduling and compilation optimization of dynamic-shape operators at the computing level; and quantized communication at the communication level. Meanwhile, to support more of Meituan's business models, the next version of Booster will also support larger models and multi-machine multi-card GPU training.

7 About the Authors

Jiaheng, Guoqing, Zheng Shao, Xiaoguang, Pengpeng, Yongyu, Junwen, Zhengyang, Ruidong, Xiangyu, Xiufeng, Wang Qing, Feng Yu, Shifeng, Huang Jun, and others, from the Booster joint project team of the Meituan Basic R&D Platform / Machine Learning Platform Training Engine and the Daojia R&D Platform / Search and Recommendation Technology Department.

8 References

- [1] https://tech.meituan.com/2021/12/09/meituan-tensorflow-in-recommender-systems.html

- [2] https://images.nvidia.cn/aem-dam/en-zz/Solutions/data-center/nvidia-ampere-architecture-whitepaper.pdf

- [3] https://www.usenix.org/system/files/conference/osdi14/osdi14-paper-li\_mu.pdf

- [4] https://github.com/NVIDIA-Merlin/HugeCTR

- [5] https://en.wikipedia.org/wiki/Nvidia\_Tesla

- [6] https://www.nvidia.com/en-us/data-center/dgx-a100

- [7] https://github.com/horovod/horovod

- [8] https://github.com/NVIDIA/nccl

- [9] https://www.tensorflow.org/xla

- [10] Yann LeCun, John S. Denker, and Sara A. Solla. Optimal brain damage. In NIPS, pp. 598–605. Morgan Kaufmann, 1989.

- [11] Kenji Suzuki, Isao Horiba, and Noboru Sugie. A simple neural network pruning algorithm with application to filter synthesis. Neural Process. Lett., 13(1):43–53, 2001.

- [12] Suraj Srinivas and R. Venkatesh Babu. Data-free parameter pruning for deep neural networks. In BMVC, pp. 31.1–31.12. BMVA Press, 2015.

- [13] Jonathan Frankle and Michael Carbin. The lottery ticket hypothesis: Finding sparse, trainable neural networks. In 7th International Conference on Learning Representations, ICLR 2019, New Orleans, LA, USA, May 6-9, 2019. OpenReview.net, 2019.

- [14] Hao-Jun Michael Shi, Dheevatsa Mudigere, Maxim Naumov, and Jiyan Yang. Compositional embeddings using complementary partitions for memory-efficient recommendation systems. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, pp. 165-175. 2020.

- [15] https://mp.weixin.qq.com/s/fOA\_u3TYeSwAeI6C9QW8Yw

- [16] Jianxun Lian, Xiaohuan Zhou, Fuzheng Zhang, Zhongxia Chen, Xing Xie, and Guangzhong Sun. 2018. xDeepFM: Combining Explicit and Implicit Feature Interactions for Recommender Systems. arXiv preprint arXiv:1803.05170 (2018).

- [17] Weiping Song, Chence Shi, Zhiping Xiao, Zhijian Duan, Yewen Xu, Ming Zhang, and Jian Tang. Autoint: Automatic feature interaction learning via self-attentive neural networks. In Proceedings of the 28th ACM International Conference on Information and Knowledge Management, pp. 1161-1170. 2019.

- [18] Guorui Zhou, Weijie Bian, Kailun Wu, Lejian Ren, Qi Pi, Yujing Zhang, Can Xiao et al. CAN: revisiting feature co-action for click-through rate prediction. arXiv preprint arXiv:2011.05625 (2020).

- [19] Chun-Hao Chang, Ladislav Rampasek, and Anna Goldenberg. Dropout feature ranking for deep learning models. arXiv preprint arXiv:1712.08645 (2017).

- [20] Xu Ma, Pengjie Wang, Hui Zhao, Shaoguo Liu, Chuhan Zhao, Wei Lin, Kuang-Chih Lee, Jian Xu, and Bo Zheng. Towards a Better Tradeoff between Effectiveness and Efficiency in Pre-Ranking: A Learnable Feature Selection based Approach. In Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 2036-2040. 2021.

- [21] Bencheng Yan, Pengjie Wang, Jinquan Liu, Wei Lin, Kuang-Chih Lee, Jian Xu, and Bo Zheng. Binary Code based Hash Embedding for Web-scale Applications. In Proceedings of the 30th ACM International Conference on Information & Knowledge Management, pp. 3563-3567. 2021.

- [22] Xiangyu Zhao, Haochen Liu, Hui Liu, Jiliang Tang, Weiwei Guo, Jun Shi, Sida Wang, Huiji Gao, and Bo Long. Autodim: Field-aware embedding dimension search in recommender systems. In Proceedings of the Web Conference 2021, pp. 3015-3022. 2021.

- [23] Bencheng Yan, Pengjie Wang, Kai Zhang, Wei Lin, Kuang-Chih Lee, Jian Xu, and Bo Zheng. Learning Effective and Efficient Embedding via an Adaptively-Masked Twins-based Layer. In Proceedings of the 30th ACM International Conference on Information & Knowledge Management, pp. 3568-3572. 2021.

- [24] Ting Chen, Lala Li, and Yizhou Sun. Differentiable product quantization for end-to-end embedding compression. In International Conference on Machine Learning, pp. 1617-1626. PMLR, 2020.

- [25] Wang-Cheng Kang, Derek Zhiyuan Cheng, Ting Chen, Xinyang Yi, Dong Lin, Lichan Hong, and Ed H. Chi. Learning multi-granular quantized embeddings for large-vocab categorical features in recommender systems. In Companion Proceedings of the Web Conference 2020, pp. 562-566. 2020.

- [26] Nitish Shirish Keskar, Dheevatsa Mudigere, Jorge Nocedal, Mikhail Smelyanskiy, and Ping Tak Peter Tang. On large-batch training for deep learning: Generalization gap and sharp minima. arXiv preprint arXiv:1609.04836 (2016).

- [27] Elad Hoffer, Itay Hubara, and Daniel Soudry. Train longer, generalize better: closing the generalization gap in large batch training of neural networks. Advances in neural information processing systems 30 (2017).

- [28] Priya Goyal, Piotr Dollár, Ross Girshick, Pieter Noordhuis, Lukasz Wesolowski, Aapo Kyrola, Andrew Tulloch, Yangqing Jia, and Kaiming He. Accurate, large minibatch sgd: Training imagenet in 1 hour. arXiv preprint arXiv:1706.02677 (2017).

- [29] Chao Peng, Tete Xiao, Zeming Li, Yuning Jiang, Xiangyu Zhang, Kai Jia, Gang Yu, and Jian Sun. Megdet: A large mini-batch object detector. In Proceedings of the IEEE conference on Computer Vision and Pattern Recognition, pp. 6181-6189. 2018.


This article is produced by the Meituan technical team, and the copyright belongs to Meituan. You are welcome to reprint or use its content for non-commercial purposes such as sharing and communication; please credit "The content is reproduced from the Meituan technical team". This article may not be reproduced or used commercially without permission. For any commercial use, please email tech@meituan.com to apply for authorization.
