This article is translated from "Moving Toward a ZooKeeper-Less Apache Pulsar". Original link: https://streamnative.io/blog/release/2022-01-25-moving-toward-a-zookeeperless-apache-pulsar/. Author: David Kjerrumgaard, Apache Pulsar Committer and StreamNative Evangelist.

Translator Profile

Li Wenqi, who works for Microsoft STCA, likes to study various middleware technologies and distributed systems in his spare time.

A First: Running Pulsar Without ZooKeeper

Apache Pulsar™ is sometimes seen as a complex system, in part because it uses Apache ZooKeeper™ to store metadata. From its original design, Pulsar has relied on ZooKeeper for key metadata such as which broker a topic is assigned to, topic security settings, and data retention policies. Having to run this additional component reinforces the impression that Pulsar is complicated.

To simplify Pulsar deployments, the community launched Pulsar Improvement Proposal 45 (PIP-45), which reduces the dependency on ZooKeeper by putting metadata access behind a pluggable framework. This framework lets users select an alternative metadata and coordination system that fits their actual deployment environment, reducing the number of components Pulsar requires at the infrastructure level.

Implementation and Future Plans of PIP-45

The code for PIP-45 has been committed to the master branch and will be released in Pulsar 2.10. For the first time, Apache Pulsar users can run Pulsar without ZooKeeper.

Unlike Apache Kafka's ZooKeeper replacement strategy, the purpose of PIP-45 is not to build distributed coordination into the Apache Pulsar platform itself. Instead, it allows users to replace ZooKeeper with the technology that is appropriate for their environment.

In non-production environments, users can now choose a lightweight alternative that keeps metadata in memory or on local disk. Developers can reclaim the computing resources that were previously needed to run Apache ZooKeeper on their laptops.

In a production environment, Pulsar's pluggable framework enables users to utilize components already running in their own software stack as an alternative to ZooKeeper.

As you can imagine, a plan of this magnitude consists of multiple steps, some of which have already been realized. This article will walk you through the steps achieved so far (steps 1-4) and outline what still needs to be done (steps 5-6). Note that the features discussed in this article are in beta and may change in actual releases.

Step 1: Define the Metadata Storage API

PIP-45 defines a technology-neutral interface for metadata management and distributed coordination. This makes it possible to manage metadata with systems other than ZooKeeper and makes the platform more flexible.

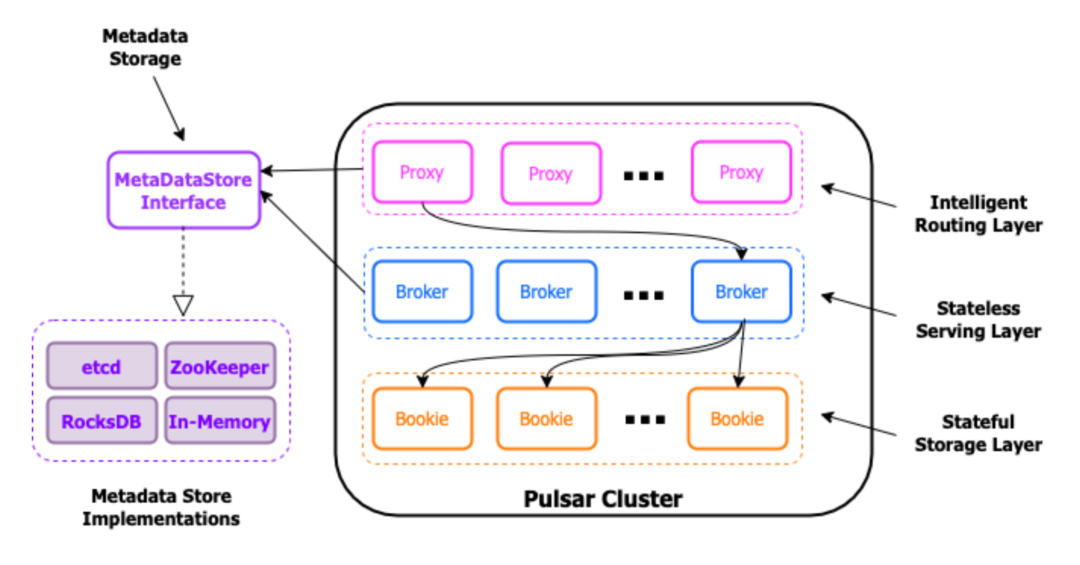

Historically, calls to the ZooKeeper client API were scattered throughout the Apache Pulsar codebase, so we first needed to consolidate all of them behind a single common MetadataStore interface. The shape of this interface is based on Pulsar's requirements for interacting with metadata and on the semantics offered by existing metadata storage engines such as ZooKeeper and etcd.

Figure 1: Replacing the direct dependency on Apache ZooKeeper with the MetadataStore interface allows different implementations to be developed and gives users the flexibility to choose one that suits their environment.

This step not only decouples Pulsar from the ZooKeeper API, but also builds a pluggable framework so that different interface implementations can be interchanged in the development environment.
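To make the idea of a single common interface concrete, here is a minimal sketch in Python. It is not Pulsar's actual MetadataStore API: the method names, the version-based conditional put, and the toy `DictMetadataStore` backend are assumptions chosen for illustration only.

```python
from abc import ABC, abstractmethod
from typing import Dict, List, Optional, Tuple


class BadVersionError(Exception):
    """Raised when a conditional put sees an unexpected version."""


class MetadataStore(ABC):
    """Hypothetical, simplified stand-in for a pluggable metadata interface."""

    @abstractmethod
    def get(self, path: str) -> Optional[Tuple[bytes, int]]:
        """Return (value, version) for path, or None if it does not exist."""

    @abstractmethod
    def put(self, path: str, value: bytes,
            expected_version: Optional[int] = None) -> int:
        """Store value and return the new version; fail on a version mismatch."""

    @abstractmethod
    def delete(self, path: str) -> None:
        """Remove path if it is present."""

    @abstractmethod
    def get_children(self, path: str) -> List[str]:
        """Return the names of the direct children of path."""


class DictMetadataStore(MetadataStore):
    """Toy backend that exists only to make the sketch runnable."""

    def __init__(self) -> None:
        self._data: Dict[str, Tuple[bytes, int]] = {}

    def get(self, path):
        return self._data.get(path)

    def put(self, path, value, expected_version=None):
        current = self._data.get(path)
        current_version = current[1] if current else -1
        if expected_version is not None and expected_version != current_version:
            raise BadVersionError(
                f"{path}: expected {expected_version}, found {current_version}")
        new_version = current_version + 1
        self._data[path] = (value, new_version)
        return new_version

    def delete(self, path):
        self._data.pop(path, None)

    def get_children(self, path):
        prefix = path.rstrip("/") + "/"
        names = {p[len(prefix):].split("/", 1)[0]
                 for p in self._data if p.startswith(prefix)}
        return sorted(names)


def assign_topic(store: MetadataStore, topic: str, broker: str) -> int:
    """Caller code depends only on the interface, not on a specific backend."""
    return store.put(f"/owners/{topic}", broker.encode("utf-8"))
```

Because `assign_topic` only sees the abstract type, swapping the backend would not require touching it, which is the decoupling this step is after.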

These new interfaces let Pulsar users replace Apache ZooKeeper with another metadata management system or coordination service simply by changing the value of the metadataURL property in the broker configuration file. The framework automatically instantiates the right implementation based on the URL prefix. For example, if the metadataURL value starts with rocksdb://, RocksDB is used as the implementation of the interface.
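The prefix-based selection can be sketched as a small registry lookup. This is an illustrative Python sketch, not Pulsar's factory code: the class names, the `zk` and `memory` scheme names, and the case-insensitive matching are assumptions.

```python
from typing import Callable, Dict


class _BaseStore:
    """Placeholder backend that only remembers the URL it was created from."""

    def __init__(self, url: str) -> None:
        self.url = url


class ZooKeeperStore(_BaseStore):
    pass


class RocksDbStore(_BaseStore):
    pass


class MemoryStore(_BaseStore):
    pass


# Hypothetical scheme names; the real set of supported prefixes may differ.
_BACKENDS: Dict[str, Callable[[str], _BaseStore]] = {
    "zk": ZooKeeperStore,
    "rocksdb": RocksDbStore,
    "memory": MemoryStore,
}


def create_metadata_store(metadata_url: str) -> _BaseStore:
    """Pick a backend from the URL prefix (the part before '://')."""
    scheme, separator, _ = metadata_url.partition("://")
    if not separator:
        raise ValueError(f"metadata URL has no scheme: {metadata_url!r}")
    try:
        backend = _BACKENDS[scheme.lower()]
    except KeyError:
        raise ValueError(f"unsupported metadata store: {scheme!r}") from None
    return backend(metadata_url)
```

Under these assumptions, `create_metadata_store("rocksdb:///tmp/metadata")` returns a `RocksDbStore`, and supporting a new backend means adding one entry to the registry rather than editing callers.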

Step 2: Create a ZooKeeper-based implementation

Once these interfaces were defined, we created a default implementation based on Apache ZooKeeper to give existing Pulsar deployments a smooth transition to the new pluggable framework.

Our main goal at this stage was to ensure that upgrading to a new version of Pulsar introduces no breaking changes for users who want to keep Apache ZooKeeper. The metadata already stored in ZooKeeper therefore has to stay in the same location and in the same format after the upgrade.

The ZooKeeper-based implementation lets users keep ZooKeeper as the metadata storage layer. Until the etcd version is complete, it is the only implementation suitable for production environments.
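The "same location, same format" requirement amounts to an adapter that passes paths and bytes through untouched. The sketch below illustrates that property in Python; `LegacyZkClient` is a hypothetical in-process stand-in for a ZooKeeper client, not a real client library.

```python
from typing import Dict, Optional


class LegacyZkClient:
    """Hypothetical stand-in for a ZooKeeper client: a path -> bytes map."""

    def __init__(self) -> None:
        self.znodes: Dict[str, bytes] = {}

    def create_or_set(self, path: str, data: bytes) -> None:
        self.znodes[path] = data

    def read(self, path: str) -> Optional[bytes]:
        return self.znodes.get(path)


class ZkBackedStore:
    """Adapter exposing get/put while leaving paths and payloads unchanged."""

    def __init__(self, client: LegacyZkClient) -> None:
        self._client = client

    def put(self, path: str, value: bytes) -> None:
        # No path prefixing and no re-encoding: pre-upgrade readers still work.
        self._client.create_or_set(path, value)

    def get(self, path: str) -> Optional[bytes]:
        # Data written before the upgrade is returned byte for byte.
        return self._client.read(path)
```

A compatibility test for such an adapter can write through the old client and read through the new interface, and vice versa, then compare the raw bytes.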

Step 3: Create a RocksDB-based implementation

With backward compatibility addressed, the next step was to provide a non-ZooKeeper implementation to demonstrate the pluggability of the framework. The easiest way to validate the framework was to implement MetadataStore on top of RocksDB for standalone mode.

This not only proves that the framework can switch between different MetadataStore implementations, but also greatly reduces the resources needed to run a complete standalone Pulsar cluster. It directly benefits developers who develop and test locally, typically in Docker containers.
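The appeal of an embedded store is that metadata survives a restart without any separate server process. The following Python sketch shows that property using SQLite from the standard library as a stand-in for RocksDB. The substitution is deliberate and the class, table, and path names are assumptions; this is not how Pulsar's RocksDB implementation is written.

```python
import os
import sqlite3
import tempfile
from typing import Optional


class LocalDiskStore:
    """Embedded key-value store backed by one local file (SQLite stand-in)."""

    def __init__(self, db_path: str) -> None:
        self._conn = sqlite3.connect(db_path)
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS metadata "
            "(path TEXT PRIMARY KEY, value BLOB NOT NULL)")
        self._conn.commit()

    def put(self, path: str, value: bytes) -> None:
        self._conn.execute(
            "INSERT OR REPLACE INTO metadata (path, value) VALUES (?, ?)",
            (path, value))
        self._conn.commit()

    def get(self, path: str) -> Optional[bytes]:
        row = self._conn.execute(
            "SELECT value FROM metadata WHERE path = ?", (path,)).fetchone()
        return bytes(row[0]) if row else None

    def close(self) -> None:
        self._conn.close()


def demo_restart() -> Optional[bytes]:
    """Write with one store instance, then read with a fresh one."""
    with tempfile.TemporaryDirectory() as tmp:
        db_path = os.path.join(tmp, "metadata.db")
        first = LocalDiskStore(db_path)
        first.put("/admin/policies/public/default", b'{"retention_minutes": 60}')
        first.close()  # simulates stopping the standalone process

        second = LocalDiskStore(db_path)  # simulates starting it again
        try:
            return second.get("/admin/policies/public/default")
        finally:
            second.close()
```

Everything runs inside the calling process, which is why this style of backend suits a laptop or a single Docker container.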

Step 4: Create a memory-based implementation

A lighter metadata store also benefits unit and integration testing. We found that an in-memory implementation of MetadataStore is better suited to test scenarios because it avoids the cost of repeatedly starting a ZooKeeper cluster, running a test suite against it, and shutting it down again.

At the same time, it not only reduces the amount of resources required to run a full suite of Pulsar integration tests, but also reduces the testing time.

With the in-memory implementation of MetadataStore, the build and release cycle of the Pulsar project will be much shorter, so changes can be built, tested, and released to the community faster.
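The testing benefit can be seen in a small unit-test sketch: each test gets a fresh in-memory store in `setUp`, so nothing external has to be started or stopped. The store class and the retention helpers below are hypothetical and exist only for this illustration.

```python
import unittest
from typing import Dict, Optional


class InMemoryStore:
    """Dictionary-backed store; creating one is effectively free."""

    def __init__(self) -> None:
        self._data: Dict[str, bytes] = {}

    def put(self, path: str, value: bytes) -> None:
        self._data[path] = value

    def get(self, path: str) -> Optional[bytes]:
        return self._data.get(path)


def set_retention(store: InMemoryStore, namespace: str, minutes: int) -> None:
    """Example code under test: persist a retention policy."""
    store.put(f"/policies/{namespace}/retention", str(minutes).encode("ascii"))


def get_retention(store: InMemoryStore, namespace: str) -> Optional[int]:
    raw = store.get(f"/policies/{namespace}/retention")
    return int(raw) if raw is not None else None


class RetentionPolicyTest(unittest.TestCase):
    def setUp(self) -> None:
        # A brand-new, isolated store per test; no cluster to boot or tear down.
        self.store = InMemoryStore()

    def test_roundtrip(self) -> None:
        set_retention(self.store, "public/default", 60)
        self.assertEqual(get_retention(self.store, "public/default"), 60)

    def test_missing_namespace(self) -> None:
        self.assertIsNone(get_retention(self.store, "public/unknown"))


def run_suite() -> unittest.TestResult:
    """Run the test case programmatically and return the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(RetentionPolicyTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Since every test builds its own store, tests cannot leak state into each other and can safely run in any order.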

Step 5: Create an etcd-based implementation

Given the cloud-native design of Pulsar, the most obvious replacement for ZooKeeper is etcd. etcd is a consistent, highly available key-value store used as a store for all cluster metadata in Kubernetes.

Beyond its large and active community, its widespread adoption, and its improvements in performance and scalability, etcd has long been part of the Kubernetes control plane. Because Pulsar runs natively on Kubernetes, a running etcd instance is directly accessible in most production environments, so users can reuse it instead of taking on the operating cost of an additional ZooKeeper cluster.

Figure 2: When running Pulsar in Kubernetes, users can use an existing etcd instance to simplify deployment

Using the existing etcd service running within the Kubernetes cluster as a metadata store, users can completely eliminate the need to run ZooKeeper. This not only reduces the resources occupied by the infrastructure of the Pulsar cluster, but also reduces the operational burden required to run and operate complex distributed systems.

The performance gains that come with etcd are a particularly exciting advance, since etcd was designed to solve some of the problems associated with ZooKeeper. For starters, etcd is written entirely in Go, whereas ZooKeeper is written mainly in Java, and Go is generally considered a more performant programming language than Java.

In addition, etcd uses the newer Raft consensus algorithm, which is comparable to Paxos in fault tolerance and performance but is easier to understand and implement than the ZAB protocol used by ZooKeeper.

The biggest difference between etcd's Raft implementation and Kafka's (KRaft) is that the latter uses a pull-based model for propagating updates, which puts it at a slight disadvantage in latency. Kafka's version of Raft is also implemented in Java and may therefore suffer long pauses during garbage collection, a problem that does not arise in etcd's Go-based implementation.

Step 6: Scaling the Metadata Layer

Today, the biggest obstacle to scaling a Pulsar cluster is the storage capacity of the metadata layer. When ZooKeeper stores this metadata, the data must be kept in memory to provide acceptable latency. It can be summed up in one sentence: "Disk is death to ZooKeeper".

In etcd, however, data is held in a B-tree rather than in the hierarchical tree structure used by ZooKeeper. The B-tree is stored on disk and mapped into memory to provide low-latency access.

The significance is that the storage capacity of the metadata layer effectively grows from memory scale to disk scale, allowing us to store far more metadata. Moving from ZooKeeper to etcd expands capacity from the few gigabytes of memory available to ZooKeeper to more than 100 GB of etcd disk.

Installation and more details

Over the past few years, Pulsar has become one of the most active Apache projects, and as PIP-45 demonstrates, a vibrant community continues to drive innovation and improvement in the project.

Can't wait to try Pulsar without ZooKeeper? Download the latest version of Pulsar and run it in standalone mode, or refer to the documentation.

In addition to removing the hard dependency on ZooKeeper, Apache Pulsar 2.10.0 includes 1000 commits from 99 contributors and introduces as many as 300 important updates. The upcoming release also brings many other exciting technical developments:

- TableView, which reduces the cost of building key-value views for users;

- A multi-cluster automatic failover strategy on the client side;

- An exponential backoff delay strategy for message retries;

- ...

In last Sunday's TGIP-CN 037 livestream, Li Penghui, Apache Pulsar PMC member and StreamNative chief architect, introduced the features of the upcoming Apache Pulsar 2.10 release. Watch for this week's post from the Apache Pulsar official account.


