Foreword

I have always wanted a comic-style version of my avatar, but I am too clumsy with image-editing software to "pinch" one out by hand. So I wondered whether this could be done with AI and deployed on a serverless architecture, so that more people could easily try it.

Backend project

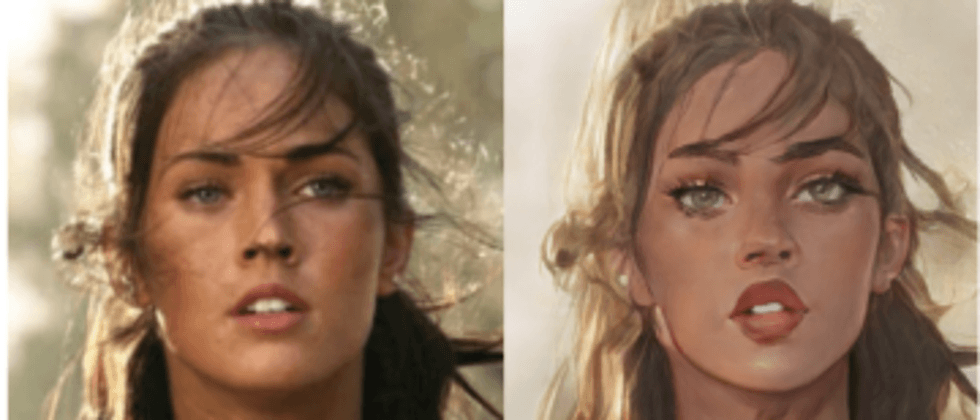

The back-end project adopts the v2 version of AnimeGAN, a well-known animation style conversion filter library in the industry. The effect is as follows:

I won't go into the details of the model here. Combined with the Bottle Python web framework, the model is exposed through an HTTP interface:

```python
import base64
import io
import json
import random

import bottle
import torch
from PIL import Image

cacheDir = '/tmp/'
modelDir = './model/bryandlee_animegan2-pytorch_main'

# Load a generator with the given pretrained weights from the local copy of the hub repo
getModel = lambda modelName: torch.hub.load(modelDir, "generator", pretrained=modelName, source='local')

# All models are loaded once, when the instance initializes
models = {
    'celeba_distill': getModel('celeba_distill'),
    'face_paint_512_v1': getModel('face_paint_512_v1'),
    'face_paint_512_v2': getModel('face_paint_512_v2'),
    'paprika': getModel('paprika')
}

# Random lowercase file name, used to avoid collisions in /tmp
randomStr = lambda num=5: "".join(random.sample('abcdefghijklmnopqrstuvwxyz', num))

face2paint = torch.hub.load(modelDir, "face2paint", size=512, source='local')


@bottle.route('/images/comic_style', method='POST')
def getComicStyle():
    result = {}
    try:
        postData = json.loads(bottle.request.body.read().decode("utf-8"))
        style = postData.get("style", 'celeba_distill')
        image = postData.get("image")
        localName = randomStr(10)

        # Save the Base64-encoded upload to /tmp
        imagePath = cacheDir + localName
        with open(imagePath, 'wb') as f:
            f.write(base64.b64decode(image))

        # Run the selected model on the image
        model = models[style]
        imgAttr = Image.open(imagePath).convert("RGB")
        outAttr = face2paint(model, imgAttr)

        # Return the result as a Base64 JPEG data URI
        img_buffer = io.BytesIO()
        outAttr.save(img_buffer, format='JPEG')
        byte_data = img_buffer.getvalue()
        img_buffer.close()
        result["photo"] = 'data:image/jpeg;base64,%s' % base64.b64encode(byte_data).decode()
    except Exception as e:
        print("ERROR: ", e)
        result["error"] = True
    return result


app = bottle.default_app()

if __name__ == "__main__":
    bottle.run(host='localhost', port=8099)
```

The code includes several adjustments for the serverless architecture:

- The models are loaded once, when the instance is initialized, so only cold starts pay the loading cost and warm requests reuse the already-loaded models;

- In Function Compute, usually only the /tmp directory is writable, so uploaded images are cached in /tmp;

- Although function computing is described as "stateless", instances are in practice reused across invocations, so every file stored in /tmp is given a random name;

- Although some cloud vendors support binary file uploads, most serverless platforms handle binary uploads poorly, so images are uploaded as Base64 here.
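The /tmp handling described in the list above can be wrapped in a small helper. This is a hedged alternative sketch, not the code used in this project: it swaps the `randomStr` names for `uuid.uuid4()`, which makes collisions between reused instances practically impossible, and deletes the file once the caller is done with it. The names `save_upload` and `cache_dir` are hypothetical.

```python
import base64
import os
import uuid
from contextlib import contextmanager


@contextmanager
def save_upload(b64_image: str, cache_dir: str = "/tmp"):
    """Write a Base64-encoded upload to a uniquely named file, then remove it.

    Hypothetical helper: uuid4 replaces the random 10-letter names, so files
    from different invocations on a reused instance never overwrite each other.
    """
    path = os.path.join(cache_dir, uuid.uuid4().hex)
    with open(path, "wb") as f:
        f.write(base64.b64decode(b64_image))
    try:
        yield path
    finally:
        # Clean up so a long-lived, reused instance does not slowly fill /tmp
        if os.path.exists(path):
            os.remove(path)
```

Inside `getComicStyle`, the write-then-open steps would become `with save_upload(image) as imagePath:` followed by the existing `Image.open(imagePath)` call.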

The code above is the AI-related part. In addition, an interface is needed that returns the list of models and related information, such as each model's request path:

```python
import bottle


@bottle.route('/system/styles', method='GET')
def styles():
    # Style category -> sub-styles; 'name' selects the model, 'uri' is the processing endpoint
    return {
        "AI anime style": {
            'color': 'red',
            'detailList': {
                "Style 1": {
                    'uri': "images/comic_style",
                    'name': 'celeba_distill',
                    'color': 'orange',
                    'preview': 'https://serverless-article-picture.oss-cn-hangzhou.aliyuncs.com/1647773808708_20220320105649389392.png'
                },
                "Style 2": {
                    'uri': "images/comic_style",
                    'name': 'face_paint_512_v1',
                    'color': 'blue',
                    'preview': 'https://serverless-article-picture.oss-cn-hangzhou.aliyuncs.com/1647773875279_20220320105756071508.png'
                },
                "Style 3": {
                    'uri': "images/comic_style",
                    'name': 'face_paint_512_v2',
                    'color': 'pink',
                    'preview': 'https://serverless-article-picture.oss-cn-hangzhou.aliyuncs.com/1647773926924_20220320105847286510.png'
                },
                "Style 4": {
                    'uri': "images/comic_style",
                    'name': 'paprika',
                    'color': 'cyan',
                    'preview': 'https://serverless-article-picture.oss-cn-hangzhou.aliyuncs.com/1647773976277_20220320105936594662.png'
                },
            }
        },
    }


app = bottle.default_app()

if __name__ == "__main__":
    bottle.run(host='localhost', port=8099)
```

As you can see, I expose this as a separate function with its own interface. Why not simply add this interface to the project above, rather than maintaining one more function?

- Loading the AI models is slow. If the style-list interface were bundled into the same function, its performance would inevitably suffer (a cold start would have to wait for all four models to load);

- The AI function needs a large memory configuration, while the style-list interface needs very little. Since billing is tied to configured memory, separating the two helps reduce cost.
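To make the cost argument concrete, here is a rough sketch that assumes execution is billed in GB-seconds (configured memory multiplied by billed duration). Free tiers, per-request fees and real unit prices are deliberately ignored, and the 128 MB size and 100 ms duration for the style-list call are illustrative assumptions, not measurements from this project.

```python
def gb_seconds(memory_mb: int, duration_ms: int, invocations: int) -> float:
    """Resource usage in GB-seconds: memory (GB) x duration (s) x invocations."""
    # Multiply integers first, then divide once: MB -> GB (1024) and ms -> s (1000)
    return memory_mb * duration_ms * invocations / (1024 * 1000)


# 1,000 style-list requests, each assumed to take about 100 ms
bundled = gb_seconds(3072, 100, 1000)   # served by the 3072 MB AI function
separate = gb_seconds(128, 100, 1000)   # served by a small, separate function
print(f"bundled: {bundled} GB-s, separate: {separate} GB-s")
```

Under these assumptions the same list traffic consumes 300.0 GB-s inside the AI function versus 12.5 GB-s in its own function, a factor of 24.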

The second interface (the one returning the style list) is simple and poses no problems, but the first one, which serves the AI model, brings a few headaches:

- The model's dependencies may involve compiled binaries, so they cannot be used directly across platforms (packages built on macOS or Windows will not run in the Linux function runtime);

- The files are large (PyTorch alone exceeds 800 MB), while the code package uploaded to Function Compute is limited to 100 MB, so the project cannot be uploaded directly.
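Before deciding whether a project can be uploaded directly, it helps to measure it. A minimal sketch, assuming the 100 MB limit quoted above is compared against the total size of the files in the code directory (actual platform limits may be measured on the compressed archive and can differ by upload method); `directory_size` and `fits_code_limit` are hypothetical names.

```python
import os

CODE_LIMIT_BYTES = 100 * 1024 * 1024  # the 100 MB limit mentioned above


def directory_size(root: str) -> int:
    """Total size in bytes of all regular files under root (symlinks skipped)."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if not os.path.islink(path):
                total += os.path.getsize(path)
    return total


def fits_code_limit(root: str, limit: int = CODE_LIMIT_BYTES) -> bool:
    """True if the directory could be uploaded as a plain code package."""
    return directory_size(root) <= limit
```

Run from the project root with PyTorch and the model weights vendored in, `fits_code_limit('./')` would be expected to return `False`, which is what motivates moving the dependencies to NAS.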

To deal with this, I use the Serverless Devs tool.

Following https://www.serverless-devs.com/fc/yaml/readme, write the s.yaml file:

```yaml
edition: 1.0.0
name: start-ai
access: "default"

vars: # Global variables
  region: cn-hangzhou
  service:
    name: ai
    nasConfig: # NAS configuration; once set, the function can access the specified NAS
      userId: 10003 # userID, defaults to 10003
      groupId: 10003 # groupID, defaults to 10003
      mountPoints: # Mount directory configuration
        - serverAddr: 0fe764bf9d-kci94.cn-hangzhou.nas.aliyuncs.com # NAS server address
          nasDir: /python3
          fcDir: /mnt/python3
    vpcConfig:
      vpcId: vpc-bp1rmyncqxoagiyqnbcxk
      securityGroupId: sg-bp1dpxwusntfryekord6
      vswitchIds:
        - vsw-bp1wqgi5lptlmk8nk5yi0

services:
  image:
    component: fc
    props: # Component properties
      region: ${vars.region}
      service: ${vars.service}
      function:
        name: image_server
        description: Image processing service
        runtime: python3
        codeUri: ./
        ossBucket: temp-code-cn-hangzhou
        handler: index.app
        memorySize: 3072
        timeout: 300
        environmentVariables:
          PYTHONUSERBASE: /mnt/python3/python
      triggers:
        - name: httpTrigger
          type: http
          config:
            authType: anonymous
            methods:
              - GET
              - POST
              - PUT
      customDomains:
        - domainName: avatar.aialbum.net
          protocol: HTTP
          routeConfigs:
            - path: /*
```

Then carry out the following steps:

1. Install the dependencies (building inside Docker produces Linux-compatible binaries): `s build --use-docker`

2. Deploy the project: `s deploy`

3. Create a directory on the NAS and upload the dependencies:

```shell
s nas command mkdir /mnt/python3/python
s nas upload -r <local dependency path> /mnt/python3/python
```

Once this is done, the project can be tested through its interface.
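For that test, any HTTP client will do. The sketch below uses only the Python standard library; the base URL is the custom domain from s.yaml, the file name `avatar.jpg` in the usage note is a placeholder, and the response handling assumes the `{"photo": "data:image/...;base64,..."}` or `{"error": true}` shape returned by `getComicStyle`. The helper names are hypothetical.

```python
import base64
import json
import urllib.request

BASE_URL = "http://avatar.aialbum.net/"  # custom domain configured in s.yaml


def build_request(image_bytes: bytes, style: str = "celeba_distill") -> urllib.request.Request:
    """Build the JSON POST request expected by /images/comic_style."""
    payload = {"style": style, "image": base64.b64encode(image_bytes).decode()}
    return urllib.request.Request(
        BASE_URL + "images/comic_style",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def decode_photo(result: dict) -> bytes:
    """Extract the JPEG bytes from the service's data-URI response."""
    if result.get("error"):
        raise RuntimeError("the service reported an error")
    # Everything after the first comma is the Base64 payload; strip() tolerates a stray space
    return base64.b64decode(result["photo"].split(",", 1)[1].strip())


def stylize(path: str, style: str = "celeba_distill") -> bytes:
    """Send a local image to the deployed function and return the stylized JPEG."""
    with open(path, "rb") as f:
        req = build_request(f.read(), style)
    # Generous client timeout, matching the 300 s function timeout in s.yaml
    with urllib.request.urlopen(req, timeout=300) as resp:
        return decode_photo(json.loads(resp.read().decode("utf-8")))
```

For example, `open("comic.jpg", "wb").write(stylize("avatar.jpg", "paprika"))` would save the stylized result next to the input.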

In addition, WeChat Mini Programs require the backend interface to be served over HTTPS, so the HTTPS certificate also needs to be configured; I won't go into that here.

Mini Program project

The Mini Program still uses ColorUI, and the whole project has only one page.

The page layout:

```html
<scroll-view scroll-y class="scrollPage">
  <image src='/images/topbg.jpg' mode='widthFix' class='response'></image>
  <view class="cu-bar bg-white solid-bottom margin-top">
    <view class="action">
      <text class="cuIcon-title text-blue"></text>Step 1: Choose an image
    </view>
  </view>
  <view class="padding bg-white solid-bottom">
    <view class="flex">
      <view class="flex-sub bg-grey padding-sm margin-xs radius text-center" bindtap="chosePhoto">Upload a local image</view>
      <view class="flex-sub bg-grey padding-sm margin-xs radius text-center" bindtap="getUserAvatar">Use my current avatar</view>
    </view>
  </view>
  <view class="padding bg-white" hidden="{{!userChosePhoho}}">
    <view class="images">
      <image src="{{userChosePhoho}}" mode="widthFix" bindtap="previewImage" bindlongpress="editImage" data-image="{{userChosePhoho}}"></image>
    </view>
    <view class="text-right padding-top text-gray">* Tap the image to preview it; long-press to edit it</view>
  </view>
  <view class="cu-bar bg-white solid-bottom margin-top">
    <view class="action">
      <text class="cuIcon-title text-blue"></text>Step 2: Choose a processing style
    </view>
  </view>
  <view class="bg-white">
    <scroll-view scroll-x class="bg-white nav">
      <view class="flex text-center">
        <view class="cu-item flex-sub {{style==currentStyle?'text-orange cur':''}}" wx:for="{{styleList}}"
          wx:for-index="style" bindtap="changeStyle" data-style="{{style}}">
          {{style}}
        </view>
      </view>
    </scroll-view>
  </view>
  <view class="padding-sm bg-white solid-bottom">
    <view class="cu-avatar round xl bg-{{item.color}} margin-xs" wx:for="{{styleList[currentStyle].detailList}}"
      wx:for-index="substyle" bindtap="changeStyle" data-substyle="{{substyle}}" bindlongpress="showModal" data-target="Image">
      <view class="cu-tag badge cuIcon-check bg-grey" hidden="{{currentSubStyle == substyle ? false : true}}"></view>
      <text class="avatar-text">{{substyle}}</text>
    </view>
    <view class="text-right padding-top text-gray">* Long-press a style circle to preview its effect</view>
  </view>
  <view class="padding-sm bg-white solid-bottom">
    <button class="cu-btn block bg-blue margin-tb-sm lg" bindtap="getNewPhoto" disabled="{{!userChosePhoho}}"
      type="">{{ userChosePhoho ? (getPhotoStatus ? 'AI processing may take a while' : 'Generate image') : 'Please choose an image first' }}</button>
  </view>
  <view class="cu-bar bg-white solid-bottom margin-top" hidden="{{!resultPhoto}}">
    <view class="action">
      <text class="cuIcon-title text-blue"></text>Result
    </view>
  </view>
  <view class="padding-sm bg-white solid-bottom" hidden="{{!resultPhoto}}">
    <view wx:if="{{resultPhoto == 'error'}}">
      <view class="text-center padding-top">The service is temporarily unavailable, please try again later</view>
      <view class="text-center padding-top">Or contact the developer on WeChat: <text class="text-blue" data-data="zhihuiyushaiqi" bindtap="copyData">zhihuiyushaiqi</text></view>
    </view>
    <view wx:else>
      <view class="images">
        <image src="{{resultPhoto}}" mode="aspectFit" bindtap="previewImage" bindlongpress="saveImage" data-image="{{resultPhoto}}"></image>
      </view>
      <view class="text-right padding-top text-gray">* Tap the image to preview it; long-press to save it</view>
    </view>
  </view>
  <view class="padding bg-white margin-top margin-bottom">
    <view class="text-center">Proudly built with Serverless Devs</view>
    <view class="text-center">Powered By Anycodes <text bindtap="showModal" class="text-cyan" data-target="Modal">{{"<"}}A note from the author{{">"}}</text></view>
  </view>
  <view class="cu-modal {{modalName=='Modal'?'show':''}}">
    <view class="cu-dialog">
      <view class="cu-bar bg-white justify-end">
        <view class="content">A note from the author</view>
        <view class="action" bindtap="hideModal">
          <text class="cuIcon-close text-red"></text>
        </view>
      </view>
      <view class="padding-xl text-left">
        Hi everyone, I'm Liu Yu, and thank you very much for following and using this Mini Program. It is a small avatar-generation tool I built in my spare time, based on "artificial stupidity" technology. It still looks a bit awkward however you look at it, but I will work hard to make it "intelligent". If you have any good suggestions, feel free to contact me by <text class="text-blue" data-data="service@52exe.cn" bindtap="copyData">email</text> or <text class="text-blue" data-data="zhihuiyushaiqi" bindtap="copyData">WeChat</text>. It is also worth mentioning that this project runs on Alibaba Cloud's serverless architecture and was built with the Serverless Devs developer tool.
      </view>
    </view>
  </view>
  <view class="cu-modal {{modalName=='Image'?'show':''}}">
    <view class="cu-dialog">
      <!-- Single quotes inside url() so they do not terminate the style attribute -->
      <view class="bg-img" style="background-image: url('{{previewStyle}}');height:200px;">
        <view class="cu-bar justify-end text-white">
          <view class="action" bindtap="hideModal">
            <text class="cuIcon-close "></text>
          </view>
        </view>
      </view>
      <view class="cu-bar bg-white">
        <view class="action margin-0 flex-sub solid-left" bindtap="hideModal">Close preview</view>
      </view>
    </view>
  </view>
</scroll-view>
```

The page logic is also fairly simple:

```javascript
// index.js
// Get the app instance
const app = getApp()

Page({
  data: {
    styleList: {},
    // Placeholders; onLoad replaces them with the first style returned by the server
    currentStyle: "Anime style",
    currentSubStyle: "v1 model",
    userChosePhoho: undefined,
    resultPhoto: undefined,
    previewStyle: undefined,
    getPhotoStatus: false
  },

  // Event handlers
  bindViewTap() {
    wx.navigateTo({
      url: '../logs/logs'
    })
  },

  onLoad() {
    const that = this
    wx.showLoading({
      title: 'Loading',
    })
    app.doRequest(`system/styles`, {}, {
      method: "GET"
    }).then(function (result) {
      wx.hideLoading()
      const firstStyle = Object.keys(result)[0]
      that.setData({
        styleList: result,
        currentStyle: firstStyle,
        currentSubStyle: Object.keys(result[firstStyle].detailList)[0],
      })
    }).catch(function () {
      // Hide the spinner even if the style list could not be loaded
      wx.hideLoading()
    })
  },

  changeStyle(attr) {
    // A category tab carries data-style; a sub-style circle carries data-substyle
    this.setData({
      "currentStyle": attr.currentTarget.dataset.style || this.data.currentStyle,
      "currentSubStyle": attr.currentTarget.dataset.substyle || Object.keys(this.data.styleList[attr.currentTarget.dataset.style].detailList)[0]
    })
  },

  chosePhoto() {
    const that = this
    wx.chooseImage({
      count: 1,
      sizeType: ['compressed'],
      sourceType: ['album', 'camera'],
      // success rather than complete: cancelling the picker leaves tempFilePaths undefined
      success(res) {
        that.setData({
          userChosePhoho: res.tempFilePaths[0],
          resultPhoto: undefined
        })
      }
    })
  },

  headimgHD(imageUrl) {
    imageUrl = imageUrl.split('/'); // split the avatar URL into path segments
    // The last segment is the avatar size; replace 46, 64, 96 or 132 with 0 (640x640)
    const last = imageUrl[imageUrl.length - 1]
    if (last && (last == 46 || last == 64 || last == 96 || last == 132)) {
      imageUrl[imageUrl.length - 1] = 0;
    }
    imageUrl = imageUrl.join('/'); // join the segments back into a URL
    return imageUrl;
  },

  getUserAvatar() {
    const that = this
    wx.getUserProfile({
      desc: "Get your avatar",
      success(res) {
        // Request the high-resolution avatar, then download it to a local path
        const newAvatar = that.headimgHD(res.userInfo.avatarUrl)
        wx.getImageInfo({
          src: newAvatar,
          success(res) {
            that.setData({
              userChosePhoho: res.path,
              resultPhoto: undefined
            })
          }
        })
      }
    })
  },

  previewImage(e) {
    wx.previewImage({
      urls: [e.currentTarget.dataset.image]
    })
  },

  editImage() {
    const that = this
    wx.editImage({
      src: this.data.userChosePhoho,
      success(res) {
        that.setData({
          userChosePhoho: res.tempFilePath
        })
      }
    })
  },

  getNewPhoto() {
    const that = this
    const detail = this.data.styleList[this.data.currentStyle].detailList[this.data.currentSubStyle]
    wx.showLoading({
      title: 'Generating image',
    })
    this.setData({
      getPhotoStatus: true
    })
    app.doRequest(detail.uri, {
      style: detail.name,
      // The image is sent as Base64, as described in the backend section
      image: wx.getFileSystemManager().readFileSync(this.data.userChosePhoho, "base64")
    }, {
      method: "POST"
    }).then(function (result) {
      wx.hideLoading()
      that.setData({
        resultPhoto: result.error ? "error" : result.photo,
        getPhotoStatus: false
      })
    }).catch(function () {
      wx.hideLoading()
      that.setData({
        resultPhoto: "error",
        getPhotoStatus: false
      })
    })
  },

  saveImage() {
    // saveImageToPhotosAlbum needs a local file path, so write the Base64 result to a file first
    const filePath = `${wx.env.USER_DATA_PATH}/result_${Date.now()}.jpg`
    const base64Data = this.data.resultPhoto.replace(/^data:image\/\w+;base64,\s*/, '')
    wx.getFileSystemManager().writeFile({
      filePath: filePath,
      data: base64Data,
      encoding: 'base64',
      success() {
        wx.saveImageToPhotosAlbum({
          filePath: filePath,
          success(res) {
            wx.showToast({
              title: "Saved"
            })
          },
          fail(res) {
            wx.showToast({
              title: "Error, try again later"
            })
          }
        })
      },
      fail(res) {
        wx.showToast({
          title: "Error, try again later"
        })
      }
    })
  },

  onShareAppMessage: function () {
    return {
      title: "头头是道个性头像", // Mini Program name
    }
  },

  onShareTimeline() {
    return {
      title: "头头是道个性头像", // Mini Program name
    }
  },

  showModal(e) {
    if (e.currentTarget.dataset.target == "Image") {
      const previewSubStyle = e.currentTarget.dataset.substyle
      const previewSubStyleUrl = this.data.styleList[this.data.currentStyle].detailList[previewSubStyle].preview
      if (previewSubStyleUrl) {
        this.setData({
          previewStyle: previewSubStyleUrl
        })
      } else {
        wx.showToast({
          title: "No preview",
          icon: "error"
        })
        return
      }
    }
    this.setData({
      modalName: e.currentTarget.dataset.target
    })
  },

  hideModal(e) {
    this.setData({
      modalName: null
    })
  },

  copyData(e) {
    wx.setClipboardData({
      data: e.currentTarget.dataset.data,
      success(res) {
        wx.showModal({
          title: 'Copied',
          content: `${e.currentTarget.dataset.data} has been copied to the clipboard`,
        })
      }
    })
  },
})
```

Because the project calls the backend interface in several places, I abstracted the request logic into a shared method:

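```javascript
// Unified request helper, defined on the App object in app.js; `that.url` is the backend base URL
doRequest: function (uri, data, option) {
  const that = this
  return new Promise((resolve, reject) => {
    wx.request({
      url: that.url + uri,
      data: data,
      header: {
        "Content-Type": 'application/json',
      },
      method: option && option.method ? option.method : "POST",
      success: function (res) {
        // wx.request calls success for any HTTP response, so check the status code
        if (res.statusCode >= 200 && res.statusCode < 300) {
          resolve(res.data)
        } else {
          reject(res)
        }
      },
      fail: function (res) {
        reject(res)
      }
    })
  })
}
```

Once this is done, configure the backend interface address and submit the Mini Program for review and release.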
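The `headimgHD` helper in the page logic relies on the WeChat avatar URL convention that the last path segment encodes the square size (0, 46, 64, 96 or 132, where 0 means 640×640). Because that string manipulation is easy to get subtly wrong, here is the same rule as a small Python function that can be checked in isolation. The name `avatar_hd` and the example URLs in the tests are hypothetical, and unlike the JavaScript `==` comparison this version matches the segment as an exact string.

```python
HD_SIZES = {"46", "64", "96", "132"}


def avatar_hd(url: str) -> str:
    """Rewrite a WeChat avatar URL so that it requests the 640x640 version.

    Mirrors headimgHD: if the last path segment is one of the small sizes,
    replace it with "0"; otherwise return the URL unchanged.
    """
    parts = url.split("/")
    if parts[-1] in HD_SIZES:
        parts[-1] = "0"
    return "/".join(parts)
```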
