Starting with this article, this series walks through the complete practice of microservices: from requirements to going live, from code to k8s deployment, and from logging to monitoring.

Actual project address: https://github.com/Mikaelemmmm/go-zero-looklook

1. Project Introduction

The whole project consists of microservices developed with go-zero. It basically covers go-zero itself plus middleware developed by the go-zero authors. The technology stack is almost entirely made up of components developed by the go-zero project team, essentially the full go-zero family.

The project directory structure is as follows:

- app: all business code, including api, rpc and mq (message queue, delay queue, timed task)

- common: common components such as error, middleware, interceptor, tool, ctxdata, etc.

- data: contains the data generated by all the middleware this project depends on (mysql, es, redis, grafana, etc.). Everything in this directory should be listed in the git ignore file and does not need to be committed.

deploy:

- filebeat: docker deploy filebeat configuration

- go-stash: go-stash configuration

- nginx: nginx gateway configuration

- prometheus : prometheus configuration

script:

- gencode: commands for generating api and rpc code and for creating kafka topics, ready to copy and paste

- mysql: shell script for generating models

- goctl: the project's goctl templates, which goctl uses to generate customized code. For how to use templates, please refer to the go-zero documentation; copy them to .goctl in your home directory.

The goctl version used in this project is v1.3.0

- doc : Documentation for the project series

2. Technology Stack

- go-zero

- nginx gateway

- filebeat

- kafka

- go-stash

- elasticsearch

- kibana

- prometheus

- grafana

- jaeger

- go-queue

- asynq

- asynqmon

- dtm

- docker

- docker-compose

- mysql

- redis

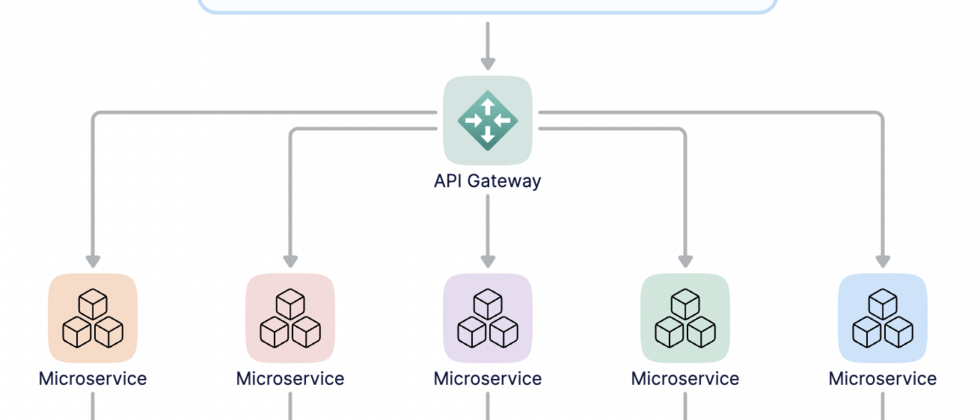

3. Project Architecture Diagram

4. Business Architecture Diagram

5. Setting up the project environment

This project uses air for hot reloading: code changes take effect immediately, with the code rebuilt and reloaded automatically inside the container, so there is no need to restart manually or to run the services locally. What actually runs is the Go environment of the cosmtrek/air image inside the container, so it makes no difference whether you use GoLand or VS Code.

1. Clone code & update dependencies

$ git clone git@github.com:Mikaelemmmm/go-zero-looklook.git

$ go mod tidy

2. Start the environment the project depends on

$ docker-compose -f docker-compose-env.yml up -d

jaeger: http://127.0.0.1:16686/search

asynq (delayed, timed message queue): http://127.0.0.1:8980/

kibana: http://127.0.0.1:5601/

Elasticsearch: http://127.0.0.1:9200/

Prometheus: http://127.0.0.1:9090/

Grafana: http://127.0.0.1:3001/

MySQL: connect with your own client tool (Navicat, Sequel Pro)

- host : 127.0.0.1

- port : 33069

- username : root

- pwd : PXDN93VRKUm8TeE7

Redis: connect with your own tool (redisManager)

- host : 127.0.0.1

- port : 63799

- pwd : G62m50oigInC30sf

Kafka: Check it with your own client tool

- host : 127.0.0.1

- port : 9092

3. Pull the image the project depends on

Because the project is hot reloaded with air, it runs in the air+golang image. docker-compose can pull this image on its own, but the image is relatively large and pulling it during startup can slow down or disrupt starting the project, so it is best to pull it first and then start the project. The command to pull the air+golang image is:

$ docker pull cosmtrek/air:latest

4. Import mysql data

Create database looklook_order && import deploy/sql/looklook_order.sql data

Create database looklook_payment && import deploy/sql/looklook_payment.sql data

Create database looklook_travel && import deploy/sql/looklook_travel.sql data

Create database looklook_usercenter && import deploy/sql/looklook_usercenter.sql data

5. Start the project

$ docker-compose up -d

[Note] This uses the docker-compose.yml configuration in the project root directory

6. Check the running status of the project

Visit http://127.0.0.1:9090/, click "Status" in the top menu, then click Targets. Targets shown in blue started successfully; targets shown in red failed to start.
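The same check can also be scripted. The following is a minimal sketch, assuming Prometheus is reachable at the address above and that its standard HTTP API endpoint /api/v1/targets is enabled; the helper name downJobs is hypothetical and not part of the project. It lists the job label of every target that is not reported as up.

```go
package main

import (
	"encoding/json"
	"fmt"
	"io"
	"net/http"
)

// targetsResponse models only the fields of the /api/v1/targets reply used here.
type targetsResponse struct {
	Status string `json:"status"`
	Data   struct {
		ActiveTargets []struct {
			Labels map[string]string `json:"labels"`
			Health string            `json:"health"`
		} `json:"activeTargets"`
	} `json:"data"`
}

// downJobs (hypothetical helper) returns the "job" label of every
// active target whose health is anything other than "up".
func downJobs(body []byte) ([]string, error) {
	var resp targetsResponse
	if err := json.Unmarshal(body, &resp); err != nil {
		return nil, err
	}
	var down []string
	for _, t := range resp.Data.ActiveTargets {
		if t.Health != "up" {
			down = append(down, t.Labels["job"])
		}
	}
	return down, nil
}

func main() {
	// Address taken from the article; adjust it if your port mapping differs.
	res, err := http.Get("http://127.0.0.1:9090/api/v1/targets")
	if err != nil {
		fmt.Println("prometheus not reachable:", err)
		return
	}
	defer res.Body.Close()

	body, err := io.ReadAll(res.Body)
	if err != nil {
		fmt.Println("reading response failed:", err)
		return
	}
	down, err := downJobs(body)
	if err != nil {
		fmt.Println("unexpected response:", err)
		return
	}
	if len(down) == 0 {
		fmt.Println("all targets are up")
		return
	}
	fmt.Println("targets not up:", down)
}
```

A target that appears in the output corresponds to a red entry on the Targets page.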

[Note] The first time you pull the project, each service container has to build and download its dependencies. Depending on your network, some services may be slow, which can cause a service, or the services it depends on, to fail to start. This happened to me too and is normal. If a service such as order-api fails to start, check its log:

$ docker logs -f order-api

The log shows that order-rpc took too long to start while order-api was waiting for it. When order-rpc does not come up within a certain period, order-api times out, and it does not recover even if order-rpc starts later. Restarting order-api fixes this. It only happens the first time the containers are created; as long as the containers are not destroyed, it will not happen again. Go to the project root directory and restart it:

$ docker-compose restart order-api

[Note] Be sure to restart from the project root directory, because docker-compose.yml is located there.

Then check the log again with docker logs:

__ _ ___

/ /\ | | | |_)

/_/--\ |_| |_| \_ , built with Go 1.17.6

mkdir /go/src/github.com/looklook/app/order/cmd/api/tmp

watching .

watching desc

watching desc/order

watching etc

watching internal

watching internal/config

watching internal/handler

watching internal/handler/homestayOrder

watching internal/logic

watching internal/logic/homestayOrder

watching internal/svc

watching internal/types

!exclude tmp

building...

running...

You can see that order-api has started successfully. Now go to prometheus and take a look

You can see that prometheus also shows it as up. Check the other services the same way to confirm that they all started successfully.

7. Access the project

We use nginx as the gateway, and it is configured in docker-compose along with the other services. nginx exposes port 8888 to the outside, so we access the project through port 8888

$ curl -X POST "http://127.0.0.1:8888/usercenter/v1/user/register" -H "Content-Type: application/json" -d "{\"mobile\":\"18888888888\",\"password\":\"123456\"}"
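For readers who prefer to call the gateway from code, the same request can be sketched in Go. This is only an illustration under assumptions: the type names registerReq and registerResp and the helper decodeRegister are hypothetical, and the response fields are inferred from the sample response shown in this article rather than taken from the project's generated types.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"io"
	"net/http"
)

// registerReq mirrors the JSON body sent by the curl command.
type registerReq struct {
	Mobile   string `json:"mobile"`
	Password string `json:"password"`
}

// registerResp mirrors the fields visible in the sample response.
type registerResp struct {
	Code int    `json:"code"`
	Msg  string `json:"msg"`
	Data struct {
		AccessToken  string `json:"accessToken"`
		AccessExpire int64  `json:"accessExpire"`
		RefreshAfter int64  `json:"refreshAfter"`
	} `json:"data"`
}

// decodeRegister (hypothetical helper) parses a register response body.
func decodeRegister(body []byte) (registerResp, error) {
	var r registerResp
	err := json.Unmarshal(body, &r)
	return r, err
}

func main() {
	payload, err := json.Marshal(registerReq{Mobile: "18888888888", Password: "123456"})
	if err != nil {
		fmt.Println("encoding request failed:", err)
		return
	}
	// Port 8888 is the nginx gateway port described above.
	res, err := http.Post("http://127.0.0.1:8888/usercenter/v1/user/register",
		"application/json", bytes.NewReader(payload))
	if err != nil {
		fmt.Println("request failed (is the nginx gateway running?):", err)
		return
	}
	defer res.Body.Close()

	body, err := io.ReadAll(res.Body)
	if err != nil {
		fmt.Println("reading response failed:", err)
		return
	}
	r, err := decodeRegister(body)
	if err != nil {
		fmt.Println("unexpected response:", err)
		return
	}
	fmt.Printf("code=%d msg=%s accessExpire=%d\n", r.Code, r.Msg, r.Data.AccessExpire)
}
```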

Response:

{"code":200,"msg":"OK","data":{"accessToken":"eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjE2NzM5NjY0MjUsImlhdCI6MTY0MjQzMDQyNSwiand0VXNlcklkIjo1fQ.E5-yMF0OvNpBcfr0WyDxuTq1SRWGC3yZb9_Xpxtzlyw","accessExpire":1673966425,"refreshAfter":1658198425}}

[Note] If the request to nginx fails, this can be ignored. nginx depends on the back-end services, so it may not have started properly because they were not yet up. Just restart nginx once from the project root directory:

$ docker-compose restart nginx

6. Log collection

Collect project logs to es (filebeat collects logs -> kafka -> go-stash consumes kafka logs -> outputs to es, kibana views es data)

So we need to create the log topic in kafka in advance

Enter the kafka container

$ docker exec -it kafka /bin/sh

Create the log topic

$ cd /opt/kafka/bin

$ ./kafka-topics.sh --create --zookeeper zookeeper:2181 --replication-factor 1 --partitions 1 --topic looklook-log

Visit kibana at http://127.0.0.1:5601/ to create a log index

Click the menu in the upper left corner (the three horizontal lines), find Analytics, and click Discover

Then, on that page: Create index pattern -> enter looklook-* -> Next Step -> select @timestamp -> Create index pattern

Then click the menu in the upper left corner again, find Analytics, and click Discover. The logs are now displayed (if not, check filebeat and go-stash, for example with docker logs -f filebeat)
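If no logs show up in kibana, it can help to check whether any looklook-* index exists in Elasticsearch at all. The sketch below assumes Elasticsearch is reachable without authentication at the address listed earlier and uses its _cat/indices API with format=json; the helper name parseIndices is hypothetical.

```go
package main

import (
	"encoding/json"
	"fmt"
	"io"
	"net/http"
)

// catIndex holds the fields of one _cat/indices row used here.
// Elasticsearch returns all of these values as strings.
type catIndex struct {
	Index     string `json:"index"`
	Health    string `json:"health"`
	DocsCount string `json:"docs.count"`
}

// parseIndices (hypothetical helper) decodes the JSON array returned
// by _cat/indices?format=json.
func parseIndices(body []byte) ([]catIndex, error) {
	var rows []catIndex
	err := json.Unmarshal(body, &rows)
	return rows, err
}

func main() {
	// looklook-* is the index pattern created in kibana above.
	res, err := http.Get("http://127.0.0.1:9200/_cat/indices/looklook-*?format=json")
	if err != nil {
		fmt.Println("elasticsearch not reachable:", err)
		return
	}
	defer res.Body.Close()

	body, err := io.ReadAll(res.Body)
	if err != nil {
		fmt.Println("reading response failed:", err)
		return
	}
	rows, err := parseIndices(body)
	if err != nil {
		fmt.Println("unexpected response:", err)
		return
	}
	if len(rows) == 0 {
		fmt.Println("no looklook-* index yet; check filebeat and go-stash")
		return
	}
	for _, r := range rows {
		fmt.Printf("%s health=%s docs=%s\n", r.Index, r.Health, r.DocsCount)
	}
}
```

An empty result suggests the problem is upstream of Elasticsearch (filebeat, kafka or go-stash) rather than in kibana.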

7. Introduction to the images used in this project

If all services started successfully, the running containers should be as follows; compare them against your own

- nginx : gateway (nginx->api->rpc)

- cosmtrek/air : the environment image that our business code depends on during development. We use it because air supports hot reloading, which makes it very convenient to write code and see the result in real time. This image is air+golang; once we start our business services, they all run in this image

- wurstmeister/kafka: kafka for business use

- wurstmeister/zookeeper: kafka-dependent zookeeper

- redis: redis used by business

- mysql: database for business use

- prom/prometheus: monitor business

- grafana/grafana : the prometheus UI is rather plain, so grafana is used to display the data collected by prometheus

- elastic/filebeat : collect logs to kafka

- go-stash : consume logs in kafka, desensitize, filter and output to es

- docker.elastic.co/elasticsearch/elasticsearch : store collected logs

- docker.elastic.co/kibana/kibana: show elasticsearch

- jaegertracing/jaeger-query, jaegertracing/jaeger-collector, jaegertracing/jaeger-agent: link tracking

8. Project development suggestions

- app: holds the code of all business services

- common: the shared base library for all services

- data: the data generated by the middleware the project depends on. In actual development, this directory and the data generated in it should be ignored in git

- Generate api, rpc code:

Typing goctl commands by hand to generate api and rpc code is tedious, and the commands are too long to remember, so we simply copy them from deploy/script/gencode/gen.sh. For example, to add a change-password feature to the usercenter service, write the api file, go to the usercenter/cmd/api/desc directory, and run the generate api command copied from deploy/script/gencode/gen.sh:

$ goctl api go -api *.api -dir ../ -style=goZero

The same goes for generating rpc code. After writing the proto file, copy the generate rpc command from deploy/script/gencode/gen.sh and run it.

goctl >= 1.3: enter the "service/cmd/rpc/pb" directory and execute the following commands

$ goctl rpc protoc *.proto --go_out=../ --go-grpc_out=../ --zrpc_out=../

$ sed -i "" 's/,omitempty//g' *.pb.go

goctl < 1.3: enter the "service/cmd" directory and execute the following commands

$ goctl rpc proto -src rpc/pb/*.proto -dir ./rpc -style=goZero

$ sed -i "" 's/,omitempty//g' ./rpc/pb/*.pb.go

[Note] When generating rpc files, it is recommended to also run the sed command shown above to delete the omitempty that protobuf generates; otherwise a field is left out of the response whenever it holds its zero value (for example nil). The sed -i "" form is the macOS/BSD syntax; GNU sed on Linux takes -i without the empty argument.
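To see why stripping omitempty matters, here is a small self-contained illustration using only encoding/json from the standard library. The struct names WithOmit and WithoutOmit and the helper toJSON are hypothetical stand-ins for a generated pb.go message before and after the sed command; they are not taken from the project.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// WithOmit imitates the JSON tag style of generated protobuf code.
type WithOmit struct {
	Id     int64  `json:"id,omitempty"`
	Mobile string `json:"mobile,omitempty"`
}

// WithoutOmit is the same shape after ",omitempty" has been removed.
type WithoutOmit struct {
	Id     int64  `json:"id"`
	Mobile string `json:"mobile"`
}

// toJSON (hypothetical helper) marshals v and returns the JSON text,
// or an empty string if marshalling fails.
func toJSON(v interface{}) string {
	b, err := json.Marshal(v)
	if err != nil {
		return ""
	}
	return string(b)
}

func main() {
	// Zero-valued fields disappear entirely when omitempty is present.
	fmt.Println(toJSON(WithOmit{})) // {}
	// Without omitempty, every field is always present in the output.
	fmt.Println(toJSON(WithoutOmit{})) // {"id":0,"mobile":""}
}
```

With omitempty in place, a client cannot tell a missing field from one that is legitimately 0 or empty, which is why the tags are stripped after generation.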

- Generate kafka code:

Because this project uses kq from go-queue as its message queue, and kq depends on kafka, kafka is effectively the message queue. By default kq requires topics to be created in advance and does not allow them to be created automatically, so this command is prepared as well: just copy the kafka topic creation command from deploy/script/gencode/gen.sh

$ kafka-topics.sh --create --zookeeper zookeeper:2181 --replication-factor 1 --partitions 1 --topic {topic}

- Generate model code: run deploy/script/mysql/genModel.sh directly with the required parameters

- We split the .api files of each api project and put them in that api's desc folder, because a single file containing everything can be hard to read. All methods are written in one api file, while the other entities, req and resp, are defined separately in their own folder.

- Templates are used to customize the generated model and error handling. The custom goctl templates used in this project are under deploy/goctl in the project

9. Follow-up

Since the project involves quite a few technologies, they will be explained step by step in separate chapters, so stay tuned.

Project address

https://github.com/zeromicro/go-zero

You are welcome to use go-zero and star the project to support us!

WeChat exchange group

Follow the official account "Microservice Practice" and click on the exchange group to get the QR code of the community group.
