In the previous article, we explained how a bookie server works from three angles: components, threads, and the read and write paths. In this article, we describe in detail how the components and the threading model cooperate so that writes are handled efficiently and persisted to disk quickly. The analysis stays at the architectural level.

This series of articles is based on BookKeeper 4.14 as configured for Apache Pulsar.

Many threads call the Journal and LedgerStorage APIs during a write operation. As covered in the previous article, on the write path the Journal is written synchronously and DbLedgerStorage is written asynchronously.

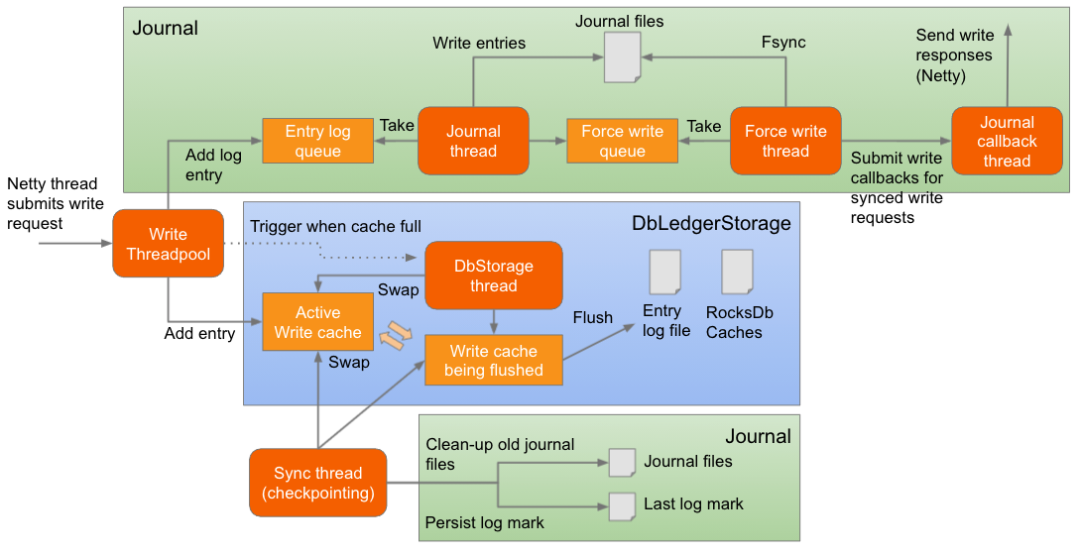

Figure 1: How each thread handles write operations

Multiple Journal and DbLedgerStorage instances can be configured, each with its own threads, queues, and caches. So when we refer to a particular thread, cache, or queue below, several of them may exist in parallel.

Netty thread

Netty threads handle all TCP connections and all requests arriving on those connections. They forward write requests to the write thread pool; each write request carries the entry to be written and a callback that, once the request completes, sends the response to the client.

Write thread pool

The write thread pool has little work to do, so it does not need many threads (the default is 1). For each write request, a write thread adds the entry to the DbLedgerStorage write cache and, if that succeeds, adds the write request to the Journal's in-memory queue (a BlockingQueue). At that point the write thread's work is done, and the rest is handled by other threads.

Each DbLedgerStorage instance has two write caches, one active and one idle. The idle cache can be flushed to disk in the background. When DbLedgerStorage needs to flush (once the active write cache is full), the two write caches are swapped: the full cache is flushed to disk while the empty one continues to serve writes. As long as the swapped-out cache finishes flushing before the active write cache fills up, writes are not held up.

A DbLedgerStorage flush can be triggered either by the Sync thread, which periodically runs the checkpoint mechanism, or by the DbStorage thread (each DbLedgerStorage instance has one DbStorage thread).

If the write cache is full when a write thread tries to add an entry to it, the write thread submits a flush operation to the DbStorage thread. If the swapped-out write cache has already finished flushing, the two write caches are swapped immediately, the write thread adds the entry to the newly active write cache, and this part of the write operation is complete.

However, if the active write cache is full and the swapped-out write cache is still flushing, the write thread waits for a while and eventually rejects the write request. The maximum wait time is controlled by the dbStorage_maxThrottleTimeMs parameter in the configuration file; the default value is 10000 (10 seconds).
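The swap-or-wait behaviour described above can be sketched as a small toy model. This is only an illustration under stated assumptions, not BookKeeper's implementation: `DoubleWriteCache`, `WriteCacheFullError`, `put`, and `flush_done` are hypothetical names, and the timeout stands in for dbStorage_maxThrottleTimeMs.

```python
import threading
import time


class WriteCacheFullError(Exception):
    """Raised when no cache space frees up within the throttle timeout."""


class DoubleWriteCache:
    """Toy model of an active/swapped-out write cache pair (hypothetical)."""

    def __init__(self, capacity_bytes, max_throttle_seconds=10.0):
        self.capacity = capacity_bytes
        self.max_throttle_seconds = max_throttle_seconds
        self.active = []        # entries accepted since the last swap
        self.active_bytes = 0
        self.flushing = None    # swapped-out cache that is still being flushed
        self.cond = threading.Condition()

    def put(self, entry: bytes):
        deadline = time.monotonic() + self.max_throttle_seconds
        with self.cond:
            while self.active_bytes + len(entry) > self.capacity:
                if self.flushing is None:
                    # Previous flush is done: swap and continue with an empty cache.
                    self.flushing, self.active, self.active_bytes = self.active, [], 0
                    continue
                # Swapped-out cache is still flushing: wait, then give up.
                remaining = deadline - time.monotonic()
                if remaining <= 0 or not self.cond.wait(timeout=remaining):
                    raise WriteCacheFullError("flush did not finish in time")
            self.active.append(entry)
            self.active_bytes += len(entry)

    def flush_done(self):
        """Called by the flushing thread once the swapped-out cache is on disk."""
        with self.cond:
            self.flushing = None
            self.cond.notify_all()
```

In this sketch a writer that finds the active cache full either swaps immediately (if the other cache is free) or blocks until `flush_done()` is called, and raises once the throttle time has elapsed.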

By default, the write thread pool has only one thread. If a flush takes too long, that thread can block for up to 10 seconds, so the task queue of the write thread pool quickly fills up with write requests and additional write requests are rejected. This is the back-pressure mechanism of DbLedgerStorage. Once the flushed write cache can accept writes again, the write thread pool is unblocked.
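The rejection side of this back-pressure can be illustrated with a bounded task queue. The sketch below is an assumption-laden toy: `BoundedWritePool` and `submit` are hypothetical names, and the real thread pool and its queue limit are not modelled.

```python
import queue


class BoundedWritePool:
    """Toy stand-in for a thread pool with a bounded task queue (hypothetical)."""

    def __init__(self, max_pending):
        self.tasks = queue.Queue(maxsize=max_pending)

    def submit(self, request):
        """Return True if the request was queued, False if it was rejected."""
        try:
            self.tasks.put_nowait(request)
            return True
        except queue.Full:
            # The write thread is blocked and the queue is full:
            # the caller (a Netty thread in the article) must reject the write.
            return False
```

While the single write thread is blocked, nothing drains `tasks`, so `submit` starts returning False as soon as `max_pending` requests are waiting.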

The write cache size defaults to 25% of the available direct memory and can be set with dbStorage_writeCacheMaxSizeMb in the configuration file. This total is divided among the DbLedgerStorage instances, one per ledger directory, and within each instance between its two write caches. With 2 ledger directories and 1GB of write cache memory, each DbLedgerStorage instance gets 500MB, and each of its write caches gets 250MB.
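The arithmetic in the example above can be written out as a short helper. The function name `write_cache_sizes_mb` is hypothetical, and 1GB is treated as 1000MB to match the figures in the text.

```python
def write_cache_sizes_mb(total_write_cache_mb, num_ledger_dirs):
    """Split the total write cache memory as described in the text.

    Returns (memory per DbLedgerStorage instance, memory per write cache).
    """
    per_instance = total_write_cache_mb / num_ledger_dirs  # one instance per ledger dir
    per_cache = per_instance / 2                           # two write caches per instance
    return per_instance, per_cache


print(write_cache_sizes_mb(1000, 2))  # (500.0, 250.0)
```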

DbStorage thread

Each DbLedgerStorage instance has its own DbStorage thread. When the write cache is full, this thread is responsible for flushing the data to disk.

Sync thread

This thread sits outside the Journal and DbLedgerStorage modules. Its main job is to perform checkpoints periodically. A checkpoint consists of the following:

- Flush the ledger storage (long-term storage) to disk

- Mark the location in the Journal where data has been safely flushed to the ledger disk by writing the log mark file to the disk.

- Clean up old Journal files that are no longer needed

Flushes are synchronized, which prevents two different threads from flushing at the same time.

When a DbLedgerStorage instance flushes, the swapped-out write cache is written to the current entry log file (the entry log may also roll over to a new file). The entries are first sorted by ledgerId and entryId, then written to the entry log file, and their locations are written to the Entry Locations Index. Sorting the entries on write optimizes the performance of read operations, which we will cover in the next article in this series.
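The sort-then-write step can be sketched as follows. This is a simplified illustration only: `flush_write_cache` is a hypothetical name, a `bytearray` stands in for the entry log file, and a plain dict stands in for the Entry Locations Index.

```python
def flush_write_cache(entries, entry_log, location_index):
    """Write cached entries to the entry log in (ledger_id, entry_id) order.

    entries:        list of (ledger_id, entry_id, payload) tuples
    entry_log:      bytearray standing in for the current entry log file
    location_index: dict mapping (ledger_id, entry_id) -> offset in entry_log
    """
    for ledger_id, entry_id, payload in sorted(entries, key=lambda e: (e[0], e[1])):
        offset = len(entry_log)       # where this entry starts in the log
        entry_log.extend(payload)     # append the entry to the log
        location_index[(ledger_id, entry_id)] = offset
```

Because entries of the same ledger end up next to each other in the log, a reader scanning one ledger touches contiguous regions of the file.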

Once the flush has written all of the data to disk, the swapped-out write cache is cleared so that it can be swapped with the active write cache again.

Journal thread

The Journal thread runs a loop: it takes entries from the in-memory queue (BlockingQueue), writes them to disk, and periodically adds force write requests to the force write queue, which triggers an fsync.

The Journal does not issue a write system call for every entry it takes from the queue. Instead, it accumulates entries and writes them to disk in batches (this batched write is what BookKeeper means by a flush), a technique also known as group commit. Any of the following conditions triggers a flush:

- The maximum wait time is reached (configured by journalMaxGroupWaitMSec, the default value is 2ms)

- The maximum number of accumulated bytes is reached (configured by journalBufferedWritesThreshold, the default value is 512KB)

- The number of accumulated entries reaches the maximum (configured by journalBufferedEntriesThreshold, the default value is 0, which disables this condition)

- The last entry in the queue is taken out, that is, the queue goes from non-empty to empty (configured by journalFlushWhenQueueEmpty, the default value is false)
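The four triggers listed above can be combined into a single predicate. The sketch below is an assumption, not BookKeeper's code: `GroupCommitConfig` and `should_flush` are hypothetical names, the comparison operators are a guess, and only the parameter names and defaults in the comments come from the list.

```python
from dataclasses import dataclass


@dataclass
class GroupCommitConfig:
    max_group_wait_ms: float = 2.0                # journalMaxGroupWaitMSec
    buffered_writes_threshold: int = 512 * 1024   # journalBufferedWritesThreshold
    buffered_entries_threshold: int = 0           # journalBufferedEntriesThreshold (0 = off)
    flush_when_queue_empty: bool = False          # journalFlushWhenQueueEmpty


def should_flush(cfg, waited_ms, buffered_bytes, buffered_entries, queue_empty):
    """Return True if any group-commit condition holds for the current batch."""
    if buffered_entries == 0:
        return False  # nothing accumulated, nothing to flush
    if waited_ms >= cfg.max_group_wait_ms:
        return True
    if buffered_bytes >= cfg.buffered_writes_threshold:
        return True
    if cfg.buffered_entries_threshold > 0 and buffered_entries >= cfg.buffered_entries_threshold:
        return True
    if cfg.flush_when_queue_empty and queue_empty:
        return True
    return False
```

With the defaults, a batch is flushed after at most 2ms or 512KB, whichever comes first; the entry-count and empty-queue conditions only apply when they are enabled.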

Every flush creates a force write request, which contains the entries that were flushed.

Force write thread

The force write thread runs a loop that takes force write requests from the force write queue and performs an fsync on the journal file. Each force write request carries the entries that were written and the callbacks of their write requests, so that once the data is persisted to disk, those callbacks can be submitted to the callback thread for execution.

Journal callback thread

This thread executes the callback for the write request and sends the response to the client.
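The hand-off between the Journal thread, the force write thread, and the callback thread can be sketched as three loops connected by queues. This is a toy model under stated assumptions: `run_pipeline`, `batch_size`, and the `STOP` sentinel are hypothetical, and the disk write and fsync are represented only by comments.

```python
import queue
import threading


def run_pipeline(entries, batch_size=2):
    """Push entries through journal -> force write -> callback stages."""
    journal_q, force_write_q, callback_q = queue.Queue(), queue.Queue(), queue.Queue()
    acked = []          # entries whose callback has run, in completion order
    STOP = object()     # sentinel used to shut the stages down

    def journal_thread():
        batch = []
        while True:
            item = journal_q.get()
            if item is STOP:
                if batch:
                    force_write_q.put(batch)
                force_write_q.put(STOP)
                return
            batch.append(item)              # stands in for writing to the journal file
            if len(batch) >= batch_size:    # simplified group-commit trigger
                force_write_q.put(batch)
                batch = []

    def force_write_thread():
        while True:
            batch = force_write_q.get()
            if batch is STOP:
                callback_q.put(STOP)
                return
            # An fsync of the journal file would happen here.
            for entry, callback in batch:
                callback_q.put((entry, callback))

    def callback_thread():
        while True:
            item = callback_q.get()
            if item is STOP:
                return
            entry, callback = item
            callback(entry)                 # stands in for responding to the client

    threads = [threading.Thread(target=t)
               for t in (journal_thread, force_write_thread, callback_thread)]
    for t in threads:
        t.start()
    for e in entries:
        journal_q.put((e, acked.append))
    journal_q.put(STOP)
    for t in threads:
        t.join()
    return acked
```

Each stage is a single thread reading from a FIFO queue, so callbacks run in the same order in which the entries were submitted.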

Frequently asked questions

- The bottleneck for write operations is usually disk I/O in the Journal or in DbLedgerStorage. If journal writes or fsync operations are too slow, the Journal thread and the force write thread cannot drain their respective queues quickly. Similarly, if DbLedgerStorage flushes to disk too slowly, the write cache cannot be emptied and the caches cannot be swapped quickly.

- If the Journal is the bottleneck, the task queue of the write thread pool reaches its limit: entries back up in the Journal queue and the write threads block. Once the thread pool's task queue is full, write operations are rejected at the Netty layer, because Netty threads can no longer submit write requests to the write thread pool. A flame graph will show the threads in the write thread pool as busy. If the bottleneck is DbLedgerStorage, then DbLedgerStorage itself rejects writes after 10 seconds (by default), and the write thread pool's task queue quickly fills up, causing Netty threads to reject write requests.

- If disk I/O is not the bottleneck but CPU utilization is very high, the likely cause is fast disks paired with relatively weak CPUs, which slows down the Netty threads and the other threads. This situation is easy to identify from system monitoring metrics.

Summary

This article explained the write path at the Journal and DbLedgerStorage level and how the threads involved in a write operation work together. In the next article, we will cover read operations.

Related Reading

This article is translated from "Apache BookKeeper Internals - Part 2 - Writes" by Jack Vanlightly.

Translator Profile

Qiu Feng @ 360 Technology, a member of the middleware product line in the middle-platform infrastructure department, mainly responsible for the development and maintenance of Pulsar, Kafka, and their supporting services.


