Basic Introduction

Persistent caching is one of the most powerful features introduced in webpack 5. In one sentence: build results are persisted to the local disk, and a second build (in non-watch mode) reads them directly from the disk cache, skipping time-consuming steps such as resolve and build, which greatly improves compilation and build efficiency.

Persistent caching mainly targets the compilation process and the time it takes. With the newly designed cache system, the whole build becomes both more efficient and safer. Before this, there were already many solutions, official and from the community, for reducing compilation time and improving compilation efficiency.

For example, the officially provided cache-loader caches the result produced by the preceding loaders to disk. The next time the build goes through this step (in the pitch phase), it reuses the cached content according to certain rules and skips the subsequent loaders. However, cache-loader only covers file content processed by loaders, so the scope of what it caches is fairly limited. In addition, cache-loader writes its cache during the build, and this caching has some performance overhead of its own that affects overall build speed, so it is recommended only together with loaders that take a long time to run. Finally, cache-loader invalidates its cache by comparing the timestamps in the file metadata, and this invalidation strategy is not very safe. For details, see the case I ran into before.

In addition, there are the built-in cache options of babel-loader and eslint-loader, DLL, and so on. Their core idea is the same: content that has already been processed should not have to go through the original process again. These solutions solve the compilation efficiency problem in specific scenarios.

Basic Use

Persistent caching works out of the box, but it is not enabled by default. Why doesn't webpack enable it by default? This is explained in the persistent caching documentation: webpack has the (de)serialization of data built in and ready to use, but project users need to fully understand the basic configuration and strategies of the persistent cache (in particular the cache invalidation strategy) to keep builds safe during actual development.

First, look at the basic configuration, which lives in the cache field:

```javascript
// webpack.config.js
module.exports = {
  cache: {
    // Enable persistent caching
    type: 'filesystem',
    buildDependencies: {
      config: [__filename]
    }
  }
}
```

The basic configuration of the persistent cache is done in the cache field. Setting type to 'filesystem' enables persistent caching (in watch mode it is used together with the memory cache as a layered cache). Pay special attention to buildDependencies, because it affects the safety of the whole build. It usually lists configuration files that the project build depends on. For example, if you develop a project with @vue/cli, vue.config.js needs to be listed as one of the project's buildDependencies. In addition, webpack internally treats all loaders as buildDependencies. Once any of the buildDependencies changes, the entire cache is invalidated at the start of compilation and a fresh build is run.
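As a minimal sketch of the @vue/cli case mentioned above, the extra configuration file can be listed next to the webpack config. The key name `projectConfig` and the location of `vue.config.js` are assumptions made for illustration; `cache.buildDependencies` accepts arbitrary keys that map to arrays of paths.

```javascript
// webpack.config.js (illustrative sketch, not a complete configuration)
const path = require('path')

module.exports = {
  cache: {
    type: 'filesystem',
    buildDependencies: {
      // The webpack config itself: changing it invalidates the whole cache.
      config: [__filename],
      // Hypothetical key and path: another project-level config file that
      // influences the build, such as vue.config.js in a @vue/cli project.
      projectConfig: [path.resolve(__dirname, 'vue.config.js')]
    }
  }
}
```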

Another configuration related to the persistent cache is snapshot. It determines which strategy is used when snapshots are generated for cached data (timestamps | content hash | timestamps + content hash), and this strategy ultimately affects whether a cache entry is considered invalid, that is, whether webpack decides to use the cache.

```javascript
const path = require('path')

module.exports = {
  // ...
  snapshot: {
    // Paths managed by a package manager. Dependencies under these paths are
    // not snapshotted by timestamps or content hash but by package name + version,
    // which is generally done for performance reasons.
    managedPaths: [path.resolve(__dirname, '../node_modules')],
    immutablePaths: [],
    // How snapshots are created for buildDependencies
    buildDependencies: {
      // hash: true
      timestamp: true
    },
    // How snapshots are created for module builds
    module: {
      // hash: true
      timestamp: true
    },
    // How snapshots are created when resolving requests
    resolve: {
      // hash: true
      timestamp: true
    },
    // How snapshots are created when resolving buildDependencies
    resolveBuildDependencies: {
      // hash: true
      timestamp: true
    }
  }
}
```

The different ways of creating snapshots differ in performance, safety, and applicable scenarios. For details, please refer to the relevant documentation.

Note that cache.buildDependencies and snapshot.buildDependencies mean different things. cache.buildDependencies specifies which files or directories are treated as buildDependencies (internally, webpack treats all loaders as buildDependencies by default), whereas snapshot.buildDependencies defines how snapshots of these buildDependencies are created (hash/timestamp).
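To make the difference concrete, here is a small illustrative fragment that sets both options side by side. The chosen values are only an example, not a recommendation.

```javascript
// webpack.config.js (illustrative fragment)
module.exports = {
  cache: {
    type: 'filesystem',
    // WHICH files are treated as build dependencies
    buildDependencies: {
      config: [__filename]
    }
  },
  snapshot: {
    // HOW snapshots of those build dependencies are created
    buildDependencies: {
      hash: true
    }
  }
}
```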

Caching of build output is handled inside webpack. But an application project goes through frequent business iterations, base library upgrades, newly added third-party libraries, and so on. For these updates, webpack has to detect the changes, invalidate the affected cache, rebuild the modules, and write the cache again once the build is finished. This is a very important property in webpack's persistent cache design: safety.

Workflow & Principle Introduction

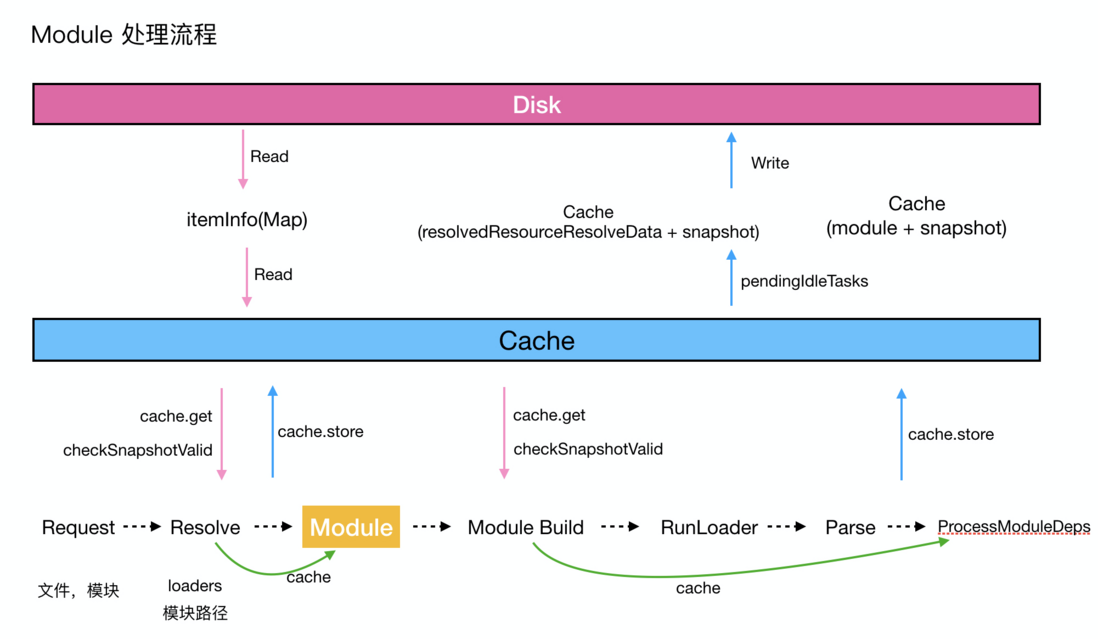

First, let's look at how a module is processed. It generally goes through:

- resolve (path lookup: the path of the file to be processed, loader paths, etc.)
- build
- generate (code generation)

and so on.

Before a module is created, it goes through a series of resolve steps, for example for the path of the file to be processed and for its loaders.

After a module is created, it goes through the build process: essentially, it is handed to the loaders for processing and then parsed. After that, its dependencies are processed.

For the resolve and build stages in particular, there are optimization suggestions and plugins to improve their efficiency. For example, in the resolve stage, resolve.extensions can be used to reduce the number of file extensions tried when matching a path; the core idea is to do less resolve work. In the build stage, cache-loader can be applied to time-consuming operations to cache their results, and so on.

So how does the persistent cache design handle these scenarios?

First, look at the plugin that implements persistent caching for resolve: ResolverCachePlugin.js

```javascript
class CacheEntry {
  constructor(result, snapshot) {
    this.result = result
    this.snapshot = snapshot
  }

  // Implement the (de)serialization interface
  serialize({ write }) {
    write(this.result)
    write(this.snapshot)
  }

  deserialize({ read }) {
    this.result = read()
    this.snapshot = read()
  }
}

// Register the data structure for (de)serialization
makeSerializable(CacheEntry, 'webpack/lib/cache/ResolverCachePlugin')

class ResolverCachePlugin {
  apply(compiler) {
    const cache = compiler.getCache('ResolverCachePlugin')
    let fileSystemInfo
    let snapshotOptions
    // ...
    compiler.hooks.thisCompilation.tap('ResolverCachePlugin', compilation => {
      // Snapshot options used for resolve
      snapshotOptions = compilation.options.snapshot.resolve
      fileSystemInfo = compilation.fileSystemInfo
      // ...
    })

    const doRealResolve = (
      itemCache,
      resolver,
      resolveContext,
      request,
      callback
    ) => {
      // ...
      resolver.doResolve(
        resolver.hooks.resolve,
        newRequest,
        'Cache miss',
        newResolveContext,
        (err, result) => {
          const fileDependencies = newResolveContext.fileDependencies
          const contextDependencies = newResolveContext.contextDependencies
          const missingDependencies = newResolveContext.missingDependencies
          // Create the snapshot
          fileSystemInfo.createSnapshot(
            resolveTime,
            fileDependencies,
            contextDependencies,
            missingDependencies,
            snapshotOptions,
            (err, snapshot) => {
              // ...
              // Persist the result together with its snapshot
              itemCache.store(new CacheEntry(result, snapshot), storeErr => {
                // ...
                callback()
              })
            }
          )
        }
      )
    }

    compiler.resolverFactory.hooks.resolver.intercept({
      factory(type, hook) {
        hook.tap('ResolverCachePlugin', (resolver, options, userOptions) => {
          // ...
          resolver.hooks.resolve.tapAsync(
            {
              name: 'ResolverCachePlugin',
              stage: -100
            },
            (request, resolveContext, callback) => {
              // ...
              const itemCache = cache.getItemCache(identifier, null)
              // ...
              const processCacheResult = (err, cacheEntry) => {
                if (cacheEntry) {
                  const { snapshot, result } = cacheEntry
                  // Check whether the snapshot is still valid
                  fileSystemInfo.checkSnapshotValid(snapshot, (err, valid) => {
                    if (err || !valid) {
                      // Fall through to the real resolve
                      return doRealResolve(
                        itemCache,
                        resolver,
                        resolveContext,
                        request,
                        done
                      )
                    }
                    // ...
                    // Use the cached data
                    done(null, result)
                  })
                }
              }
              // Read from the cache
              itemCache.get(processCacheResult)
            }
          )
        })
      }
    })
  }
}
```

The way webpack caches resolve results is quite clear: it intercepts resolverFactory.hooks.resolver and taps resolver.hooks.resolve. Note that this resolve hook is registered with stage: -100, which means it runs very early.

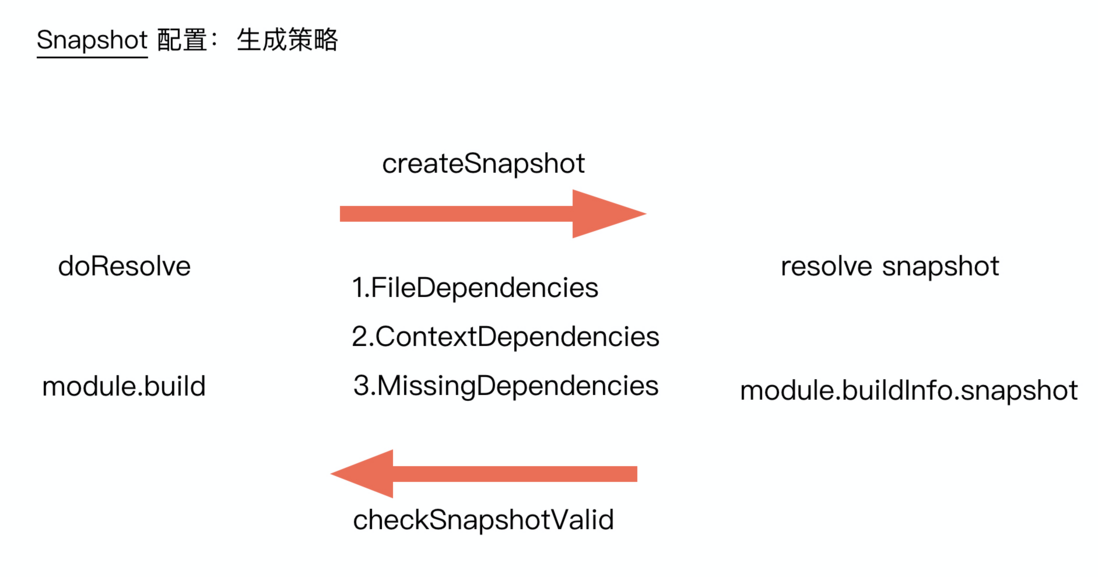

The identifier uniquely identifies the persisted content: the resolve result (resolveData) and its snapshot. webpack then checks whether the snapshot is still valid. If it is invalid, the resolve logic runs again; if it is still valid, the cached resolveData is returned and the resolve process is skipped. When a real resolve does run, the first thing done after it finishes is to generate a snapshot from fileDependencies, contextDependencies, missingDependencies, and the snapshot.resolve configuration in the webpack config. At this point both the resolve result and the snapshot exist, and the persistent cache interface itemCache.store is called to put this write into the cache queue.
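The flow above (look up by identifier, validate the snapshot, otherwise resolve for real and store result plus snapshot) can be sketched with plain Node.js. This is a simplified illustration and not webpack's implementation: `resolveCache`, `createSnapshot`, `isSnapshotValid`, `realResolve`, and `cachedResolve` are hypothetical names, an in-memory Map stands in for the persistent cache, and the snapshot only records modification times.

```javascript
const fs = require('fs')
const os = require('os')
const path = require('path')

// Hypothetical stand-in for the persistent cache: identifier -> { result, snapshot }
const resolveCache = new Map()

// A snapshot here is just the modification time of every file the result depends on.
function createSnapshot(fileDependencies) {
  const snapshot = {}
  for (const file of fileDependencies) snapshot[file] = fs.statSync(file).mtimeMs
  return snapshot
}

// The snapshot is valid while every recorded file still has the recorded mtime.
function isSnapshotValid(snapshot) {
  return Object.entries(snapshot).every(
    ([file, mtime]) => fs.existsSync(file) && fs.statSync(file).mtimeMs === mtime
  )
}

// The "expensive" resolve step; the counter shows how often it really runs.
let realResolveCount = 0
function realResolve(context, request) {
  realResolveCount++
  const result = path.resolve(context, request)
  return { result, fileDependencies: [result] }
}

function cachedResolve(context, request) {
  const identifier = `${context}|${request}`
  const entry = resolveCache.get(identifier)
  // Cache hit with a valid snapshot: skip the real resolve entirely.
  if (entry && isSnapshotValid(entry.snapshot)) return entry.result
  // Cache miss or stale snapshot: resolve, then store result + snapshot together.
  const { result, fileDependencies } = realResolve(context, request)
  resolveCache.set(identifier, { result, snapshot: createSnapshot(fileDependencies) })
  return result
}

// Usage: the second call is served from the cache, the third one is not
// because the file's modification time has changed in between.
const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'resolve-cache-'))
const target = path.join(dir, 'a.js')
fs.writeFileSync(target, 'module.exports = 1')

cachedResolve(dir, './a.js') // real resolve
cachedResolve(dir, './a.js') // cache hit
const later = new Date(Date.now() + 60000)
fs.utimesSync(target, later, later)
cachedResolve(dir, './a.js') // snapshot invalid, real resolve again
```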

Next, let's take a look at the content related to persistent caching in module build.

After a module is created, it needs to be added to the moduleGraph. First, _modulesCache.get(identifier) is used to look up cached data for this module. If cached data exists, it is used; if not, the module that was just created is used.

```javascript
class Compilation {
  // ...
  handleModuleCreation(
    {
      factory,
      dependencies,
      originModule,
      contextInfo,
      context,
      recursive = true,
      connectOrigin = recursive
    },
    callback
  ) {
    // Create the module
    this.factorizeModule({}, (err, factoryResult) => {
      // ...
      const newModule = factoryResult.module
      this.addModule(newModule, (err, module) => {
        // ...
      })
    })
  }

  _addModule(module, callback) {
    const identifier = module.identifier()
    // Look up the cached module
    this._modulesCache.get(identifier, null, (err, cacheModule) => {
      // ...
      this._modules.set(identifier, module)
      this.modules.add(module)
      // ...
      callback(null, module)
    })
  }
}
```

Next comes the buildModule stage. Before the actual build starts, an important step is to call module.needBuild to decide whether the current module has to be built again. Several layers of checks are involved: for example, the module was marked as not cacheable during loader processing (buildInfo.cacheable is false), or the module has no snapshot to check; in both cases it has to be built again. Finally, when a snapshot exists, it is checked for validity. If the module's snapshot is still valid, the cache is used, and the steps where the module is processed by loaders and parsed are skipped, because everything about this module, including all of its dependencies, comes from the cache. webpack then moves on to processing the dependencies recursively.

If the snapshot is invalid, the normal build runs (loader processing, parsing, collecting dependencies, and so on). After the build finishes, a snapshot is generated from the fileDependencies, contextDependencies, and missingDependencies collected during the build, together with the snapshot.module configuration in webpack.config. At this point the module has been compiled and its snapshot generated, so the persistent cache interface this._modulesCache.store(module.identifier(), null, module) is called to put this write into the cache queue.

```javascript
// compilation.js
class Compilation {
  // ...
  _buildModule(module, callback) {
    // ...
    // Decide whether the module needs to be built
    module.needBuild(..., (err, needBuild) => {
      // ...
      if (!needBuild) {
        this.hooks.stillValidModule.call(module)
        return callback()
      }
      // ...
      // The actual build
      module.build(..., err => {
        // ...
        // Put the current module into the cache queue
        this._modulesCache.store(module.identifier(), null, module, err => {
          // ...
          this.hooks.succeedModule.call(module)
          return callback()
        })
      })
    })
  }
}

// NormalModule.js
class NormalModule extends Module {
  // ...
  needBuild(context, callback) {
    if (this._forceBuild) return callback(null, true)
    // always build when module is not cacheable
    if (!this.buildInfo.cacheable) return callback(null, true)
    // build when there is no snapshot to check
    if (!this.buildInfo.snapshot) return callback(null, true)
    // ...
    // check snapshot for validity
    fileSystemInfo.checkSnapshotValid(this.buildInfo.snapshot, (err, valid) => {
      if (err) return callback(err);
      if (!valid) return callback(null, true);
      const hooks = NormalModule.getCompilationHooks(compilation);
      hooks.needBuild.callAsync(this, context, (err, needBuild) => {
        if (err) {
          return callback(
            HookWebpackError.makeWebpackError(
              err,
              "NormalModule.getCompilationHooks().needBuild"
            )
          );
        }
        callback(null, !!needBuild);
      });
    });
  }
}
```

A picture to sort out the above process:

Having analyzed the resolve process before a module is created and the build process after it is created, we now have a basic picture of how the upper layers use the cache system. An important point is the safety design of the cache: when persisting, the content to be cached is only one part; the snapshot associated with that content has to be cached as well, because it is the basis for judging whether the cache is invalid.
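The order of checks in needBuild can be condensed into a small synchronous sketch. It is a simplification for illustration only: the module is a plain object, `isSnapshotValid` is a hypothetical callback standing in for the snapshot check, and the additional needBuild hook is left out.

```javascript
// Returns true when the module has to go through loaders and parsing again.
function needBuild(module, isSnapshotValid) {
  // A forced build always wins.
  if (module.forceBuild) return true
  // A module that a loader marked as not cacheable is always rebuilt.
  if (!module.buildInfo.cacheable) return true
  // Without a snapshot there is nothing to validate the cache against.
  if (!module.buildInfo.snapshot) return true
  // Otherwise the cached module is reused only while its snapshot is valid.
  return !isSnapshotValid(module.buildInfo.snapshot)
}

const snapshotUnchanged = () => true
const snapshotChanged = () => false
const cached = {
  forceBuild: false,
  buildInfo: { cacheable: true, snapshot: { 'a.js': 1 } }
}

const decisions = [
  needBuild(cached, snapshotUnchanged),
  needBuild(cached, snapshotChanged),
  needBuild({ forceBuild: false, buildInfo: { cacheable: false, snapshot: {} } }, snapshotUnchanged),
  needBuild({ forceBuild: false, buildInfo: { cacheable: true, snapshot: undefined } }, snapshotUnchanged)
]
console.log(decisions)
```

Only the first case, a cacheable module with a snapshot that is still valid, skips the build.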

Comparing snapshots in watch mode: a file change triggers a new compilation, and module.needBuild uses the snapshot to decide whether the module has to be compiled again. At this point the in-memory _snapshotCache still exists, but it is a Map keyed by object, so the lookup for module.buildInfo.snapshot returns undefined and _checkSnapshotValidNoCache is run anyway. The snapshot information is persisted to disk, and in addition, when a snapshot is generated, the timestamps and content hashes of the individual modules are also cached in memory. So _checkSnapshotValidNoCache also reads this information from the cache first and compares against it.

Comparing snapshots on a second (warm) start: the in-memory _snapshotCache no longer exists. The snapshot (module.buildInfo.snapshot) is first read from the cache, and then _checkSnapshotValidNoCache is run.

So what matters for a snapshot?

The first point is the path dependencies that are strongly tied to the content being cached: fileDependencies, contextDependencies, and missingDependencies. They must be included when the snapshot is generated, and they are the basis for judging whether the cache is invalid.

The second point is the snapshot configuration strategy, which determines how snapshots are generated (timestamps, content hash) and therefore their speed and reliability.

Timestamps are cheap but are invalidated easily; content hashes are more expensive but safer.
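The trade-off can be illustrated with a small sketch that checks one file against a snapshot in three ways. This is a simplified illustration under assumptions, not webpack's FileSystemInfo logic: the helper names are hypothetical, and the combined check simply tries the cheap timestamp comparison first and only reads and hashes the file when the timestamp differs.

```javascript
const crypto = require('crypto')
const fs = require('fs')
const os = require('os')
const path = require('path')

const hashOf = file =>
  crypto.createHash('sha256').update(fs.readFileSync(file)).digest('hex')

// Record both pieces of information so every strategy can be tried.
const createSnapshot = file => ({
  timestamp: fs.statSync(file).mtimeMs,
  hash: hashOf(file)
})

// Cheap: a single stat call, but any touch of the file invalidates it.
const validByTimestamp = (file, snap) => fs.statSync(file).mtimeMs === snap.timestamp
// Expensive: reads the whole file, but only real content changes invalidate it.
const validByHash = (file, snap) => hashOf(file) === snap.hash
// Combined: stat first, hash only when the timestamp no longer matches.
const validByBoth = (file, snap) => validByTimestamp(file, snap) || validByHash(file, snap)

const check = (file, snap) => ({
  timestamp: validByTimestamp(file, snap),
  hash: validByHash(file, snap),
  both: validByBoth(file, snap)
})

const file = path.join(fs.mkdtempSync(path.join(os.tmpdir(), 'snapshot-')), 'a.js')
fs.writeFileSync(file, 'export default 1')
const snapshot = createSnapshot(file)

// Same content, new modification time (similar to a fresh checkout).
const later = new Date(Date.now() + 60000)
fs.utimesSync(file, later, later)
const afterTouch = check(file, snapshot)

// A real edit: content and modification time both change.
fs.writeFileSync(file, 'export default 2')
const evenLater = new Date(Date.now() + 120000)
fs.utimesSync(file, evenLater, evenLater)
const afterEdit = check(file, snapshot)

console.log({ afterTouch, afterEdit })
```

After the touch, only the timestamp check reports the snapshot as invalid; after the edit, all three do.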

In local development, files change frequently, so the cheaper timestamp check is used for modules. buildDependencies, by contrast, are usually configuration files consumed by the compilation process. They change relatively rarely from one build to the next, and a change to them generally affects the compilation of a large number of modules, so content hash is used.

In CI scenarios, for example after a git clone (with the cache files stored separately), timestamps generally change, so content hash is used as the snapshot check in order to still benefit from the cache. Another scenario is git pull, where the timestamps of some content do not change, so the combined timestamps + content hash strategy is used.
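One way to express these choices in configuration is to switch the snapshot strategy by environment. The fragment below is only a sketch: it assumes the CI system sets a `CI` environment variable and that CI builds start from a fresh clone.

```javascript
// webpack.config.js (illustrative fragment)
// Assumption: the CI system sets the CI environment variable.
const isCI = Boolean(process.env.CI)

// Fresh clones do not keep timestamps, so compare content hashes in CI
// and use the cheaper timestamps for local development.
const strategy = isCI ? { hash: true } : { timestamp: true }

module.exports = {
  cache: {
    type: 'filesystem',
    buildDependencies: { config: [__filename] }
  },
  snapshot: {
    module: strategy,
    resolve: strategy,
    // Build dependencies change rarely but affect many modules.
    buildDependencies: { hash: true }
  }
}
```

For a CI setup based on git pull, `{ timestamp: true, hash: true }` would be the corresponding choice for `strategy`.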

For the concrete snapshot generation strategy, see the implementation of the createSnapshot method in FileSystemInfo.js.

Another point to note is the impact of buildDependencies on cache safety. After the build starts, webpack first reads the cache, but before deciding whether to use it, there is a very important check: the snapshots for the resolve dependencies of buildDependencies and for buildDependencies themselves are verified. Only when both snapshots are still valid against the cached data can the cache be used; otherwise all cached data is invalidated, which is equivalent to running a completely fresh build of the project.

In addition, a word on the underlying design of the persistent cache: its workflow is very independent and completely decoupled from the project's compile process.

A very important class here is Cache, which connects the project's compile process with the persistent cache process.

```javascript
// lib/Cache.js
const { AsyncParallelHook, AsyncSeriesBailHook, SyncHook } = require('tapable')
const { makeWebpackErrorCallback } = require('./HookWebpackError')

class Cache {
  constructor() {
    this.hooks = {
      get: new AsyncSeriesBailHook(['identifier', 'etag', 'gotHandlers']),
      store: new AsyncParallelHook(['identifier', 'etag', 'data']),
      storeBuildDependencies: new AsyncParallelHook(['dependencies']),
      beginIdle: new SyncHook([]),
      endIdle: new AsyncParallelHook([]),
      shutdown: new AsyncParallelHook([])
    }
  }

  get(identifier, etag, callback) {
    const gotHandlers = []
    this.hooks.get.callAsync(identifier, etag, gotHandlers, (err, result) => {
      // ...
    })
  }

  store(identifier, etag, data, callback) {
    this.hooks.store.callAsync(
      identifier,
      etag,
      data,
      makeWebpackErrorCallback(callback, 'Cache.hooks.store')
    )
  }
}
```

Whenever the compile process needs to read or write the cache, it calls the get and store methods exposed on the Cache instance, and Cache in turn interacts with the cache system through its hooks.get and hooks.store. As mentioned before, when persistent caching is in use, webpack actually enables a layered cache: a memory cache (MemoryCachePlugin.js, MemoryWithGcCachePlugin.js) and a file cache (IdleFileCachePlugin.js).

Both the memory cache and the file cache register on the hooks.get and hooks.store exposed by Cache, so the get / store events raised during the compile process are connected to the cache workflow.

In the get stage, during continuous builds in watch mode, the memory cache (a Map) is used first. On a second build, when there is no memory cache, the file cache is used.

In the store stage, the cached content is not written to disk immediately. All write operations are put into a queue, and the writes are performed only after the compile stage is over.
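The layered get and the queued store described above can be sketched as follows. This is a toy model under assumptions, not webpack's plugins: `LayeredCache` is a hypothetical class, the file layer is a single JSON file, and `flush` stands in for the moment after compilation when queued writes are performed.

```javascript
const fs = require('fs')
const os = require('os')
const path = require('path')

class LayeredCache {
  constructor(cacheFile) {
    this.cacheFile = cacheFile
    this.memory = new Map() // memory layer
    this.pendingWrites = new Map() // queued writes for the file layer
  }

  readFileLayer() {
    return fs.existsSync(this.cacheFile)
      ? JSON.parse(fs.readFileSync(this.cacheFile, 'utf8'))
      : {}
  }

  get(identifier) {
    // Memory layer first (the common case in watch mode).
    if (this.memory.has(identifier)) return this.memory.get(identifier)
    // Fall back to the file layer (the common case on a second build).
    const onDisk = this.readFileLayer()
    if (!Object.prototype.hasOwnProperty.call(onDisk, identifier)) return undefined
    this.memory.set(identifier, onDisk[identifier])
    return onDisk[identifier]
  }

  store(identifier, data) {
    // Visible in memory immediately, but the disk write is only queued.
    this.memory.set(identifier, data)
    this.pendingWrites.set(identifier, data)
  }

  // Called once the compile stage is over: perform all queued writes together.
  flush() {
    const onDisk = this.readFileLayer()
    for (const [identifier, data] of this.pendingWrites) onDisk[identifier] = data
    fs.writeFileSync(this.cacheFile, JSON.stringify(onDisk))
    this.pendingWrites.clear()
  }
}

// Usage: nothing is on disk until flush; a new instance (a "second build")
// starts with empty memory and restores the entry from the file layer.
const cacheFile = path.join(fs.mkdtempSync(path.join(os.tmpdir(), 'layered-')), 'cache.json')
const firstBuild = new LayeredCache(cacheFile)
firstBuild.store('./a.js', { source: 'compiled a' })
const writtenBeforeFlush = fs.existsSync(cacheFile)
firstBuild.flush()

const secondBuild = new LayeredCache(cacheFile)
const restored = secondBuild.get('./a.js')
console.log(writtenBeforeFlush, restored)
```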

Data structures that need to be persisted must:

- implement the (de)serialization interface by convention (serialize, deserialize)
- register the serializable data structure (makeSerializable)

After compilation finishes, the cached data is (de)serialized, which in practice means calling the (de)serialize interface implemented on the corresponding data structure.
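The convention can be illustrated with a tiny registry. This is a sketch under assumptions and much simpler than webpack's serializer: the `makeSerializable`, `serialize`, and `deserialize` functions below are hypothetical stand-ins, and JSON is used as the storage format.

```javascript
// Hypothetical registry: registered name -> constructor
const registry = new Map()

function makeSerializable(Constructor, name) {
  registry.set(name, Constructor)
  Constructor.serializedName = name
}

class CacheEntry {
  constructor(result, snapshot) {
    this.result = result
    this.snapshot = snapshot
  }

  // Write the fields in a fixed order ...
  serialize({ write }) {
    write(this.result)
    write(this.snapshot)
  }

  // ... and read them back in the same order.
  deserialize({ read }) {
    this.result = read()
    this.snapshot = read()
  }
}

makeSerializable(CacheEntry, 'example/CacheEntry')

function serialize(object) {
  const values = []
  object.serialize({ write: value => values.push(value) })
  return JSON.stringify({ name: object.constructor.serializedName, values })
}

function deserialize(text) {
  const { name, values } = JSON.parse(text)
  // The registered name tells us which class to re-create.
  const Constructor = registry.get(name)
  const object = new Constructor()
  let index = 0
  object.deserialize({ read: () => values[index++] })
  return object
}

const text = serialize(new CacheEntry('/src/a.js', { timestamp: 1 }))
const restoredEntry = deserialize(text)
console.log(restoredEntry)
```

Because the registered name is stored with the data, the reader can rebuild an instance of the right class without the writer and reader sharing anything but the registry.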

Overall, compared with the build and compilation speed-up solutions mentioned at the beginning, the persistent caching solution provided by webpack 5 is more complete, more reliable, and performs better. This shows mainly in:

- Out of the box: persistent caching can be turned on with a simple configuration;

- Completeness: the cache system designed in v5 covers the time-consuming parts of the compile process at a finer granularity. Persistent caching is used not only in the module resolve and build phases described above, but also in the code generation and sourceMap phases. In addition, developers can build features on top of the persistent cache by following the conventions of the cache system, and thereby improve compilation efficiency;

- Reliability: compared with v4, safer cache comparison strategies are built in, based on content hash and on timestamp + content hash, which make it possible to balance development efficiency and safety across different development environments and scenarios;

- Performance: the compile process is decoupled from the persistent cache. Persisting cached data does not block the compile phase; the writes are put into a cache queue instead.

For developers

- Custom modules and dependencies built on the persistent cache feature need to implement the relevant interfaces according to the conventions;

- Rely on the caching strategy provided by the framework to build safe and reliable dependencies and caches.

For users

- Understand the problem that persistent cache solves;

- Understand the generation strategies and configuration of cache, snapshot, and so on, and their applicability in different development environments (dev, build) and scenarios (CI/CD);

- Understand the cache invalidation rules.


This article was first published on the author's personal blog. If you find it useful, please give it a star.
