By Cheng Zhengzheng (alias: Ze Rui)

AutoNavi software development engineer

Responsible for the exploration, development, and maintenance of AutoNavi's new-scenario business. Has research and practical experience in domain-driven design, network communication, and data consistency.

This article is 23,009 words long and takes about 25 minutes to read.

I first started following the SOFA community while developing a fault-removal component, when I found that SOFARPC already had a similar one. In SOFARPC's design, the entry point is plugged in seamlessly, so single-machine fault removal is introduced without violating the open-closed principle. It builds on a core (kernel) design and a bus design, which makes it pluggable and non-intrusive, and the entire fault-removal module is loaded dynamically through SPI. Statistics are also collected in an event-driven way: after a synchronous or asynchronous RPC call completes, the corresponding event is sent to the event bus (EventBus), and the subscriber that receives the event runs the subsequent fault-removal logic.

Impressed by this design, I adopted it in my own work, and that started my exploration of open source in the SOFA community. After studying SOFABoot, SOFARPC, MOSN, and other projects, I found the code quality of each project to be very high, which has helped me improve my own code considerably.

SOFARegistry is an open source registry that provides service publishing, registration, and subscription, and supports massive numbers of registration and subscription requests. As someone who enjoys reading source code, I had read SOFA's architecture articles and had a general grasp of the design philosophy, but because I had not worked through the details in the code, my understanding was actually shallow. The source code analysis activity launched by SOFARegistry gave me the opportunity, and I chose the SlotTable task based on my own interests.

SOFARegistry stores service data in shards, so each data server holds only part of the service data. Which data server stores a given piece of data is determined by a routing table called SlotTable. Through SlotTable, a session can address the corresponding data server to read and write service data, and the data follower of a slot can address the slot's leader to synchronize data.

The Meta leader is responsible for maintaining SlotTable. Meta maintains a list of data nodes and uses this list, together with the monitoring data that the data nodes report, to create SlotTable. When data nodes subsequently go online or offline, Meta modifies SlotTable, and SlotTable is distributed to every node in the cluster through heartbeats.

Contributor Foreword


SlotTable, also referred to as the routing table, is a core concept in SOFARegistry. Simply put, SOFARegistry stores publish and subscribe data on different machine nodes so that data storage can scale horizontally, and SlotTable records which data is stored on which machine.

SlotTable stores the mapping between slots and machine nodes. A piece of data is mapped to a slot by hashing, the slot is mapped to a machine node, and the data is stored on that machine. There are many details to understand in this process, for example how each slot is mapped to its leader and follower nodes, and how SlotTable is updated when the machine load is unbalanced. These are very interesting implementation details.
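The two-step lookup described above (data to slot by hashing, slot to node by table lookup) can be sketched as follows. This is a minimal illustration, not SOFARegistry's implementation: the class name `SlotRoutingSketch`, the slot count of 16, and the use of CRC32 as the hash function are all assumptions made for the example.

```java
import java.nio.charset.StandardCharsets;
import java.util.Map;
import java.util.zip.CRC32;

final class SlotRoutingSketch {
  // Hypothetical slot count; a real deployment reads this from configuration.
  static final int SLOT_NUM = 16;

  // Step 1: map a data key to a slot id. CRC32 is an assumption for illustration.
  static int slotOf(String dataInfoId) {
    CRC32 crc = new CRC32();
    crc.update(dataInfoId.getBytes(StandardCharsets.UTF_8));
    // getValue() is a non-negative long, so the remainder is in [0, SLOT_NUM).
    return (int) (crc.getValue() % SLOT_NUM);
  }

  // Step 2: find the node responsible for the key through the slot -> leader table.
  static String leaderOf(String dataInfoId, Map<Integer, String> slotLeaders) {
    return slotLeaders.get(slotOf(dataInfoId));
  }
}
```

Because the key always maps to the same slot, only the slot-to-node table has to change when machines come and go.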

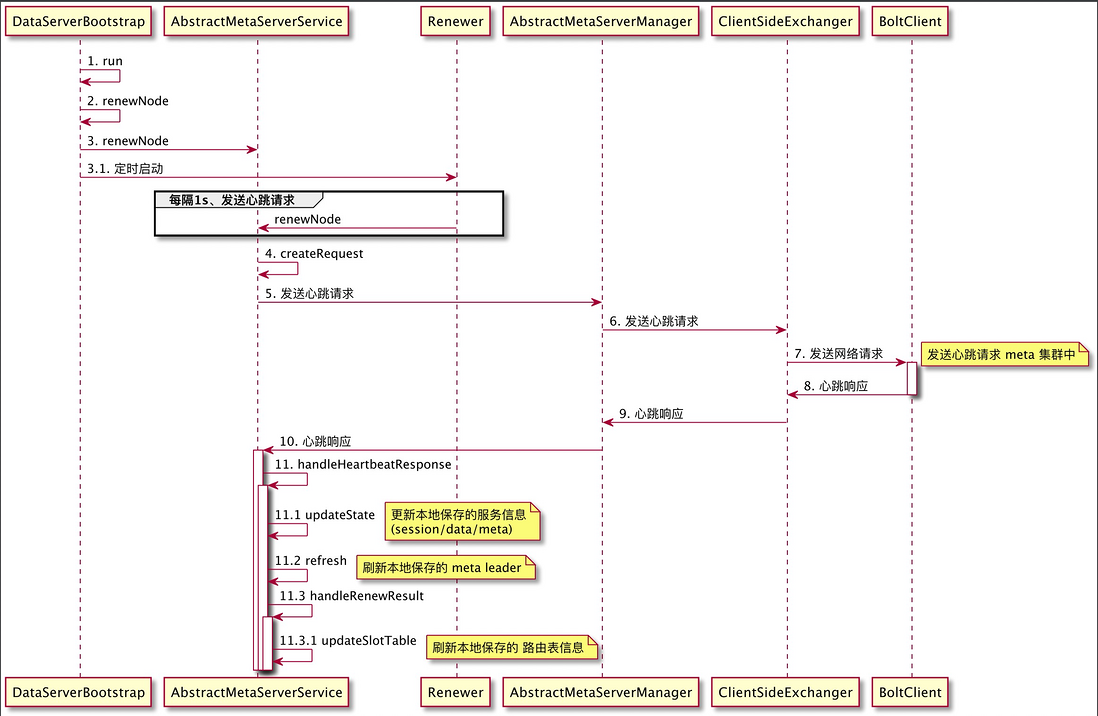

1. How DataServer updates the SlotTable routing table

As shown in the figure above, the session and data nodes periodically send heartbeats to the Meta node. The Meta node maintains the lists of data and session nodes and returns the SlotTable routing table in its response to each heartbeat request. The data node saves the SlotTable routing table locally.
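One way a node could keep its local copy up to date is to replace it only when the table received in a heartbeat response carries a newer epoch. The sketch below assumes that behavior; the source only states that the table is saved locally, so the epoch comparison, the class `LocalSlotTableHolder`, and the stand-in `Table` type are illustrative assumptions. The initial epoch of -1 mirrors `SlotTable.INIT` shown later in this article.

```java
import java.util.concurrent.atomic.AtomicReference;

final class LocalSlotTableHolder {
  // Minimal stand-in for SlotTable: only the epoch matters in this sketch.
  static final class Table {
    final long epoch;

    Table(long epoch) {
      this.epoch = epoch;
    }
  }

  private final AtomicReference<Table> current = new AtomicReference<>(new Table(-1));

  // Called with the table carried in a heartbeat response.
  // Returns true if the local copy was replaced.
  boolean onHeartbeatResponse(Table received) {
    while (true) {
      Table local = current.get();
      if (received.epoch <= local.epoch) {
        return false; // stale or duplicate table: keep the local one
      }
      if (current.compareAndSet(local, received)) {
        return true;
      }
      // Another thread updated the reference concurrently: re-read and retry.
    }
  }

  long localEpoch() {
    return current.get().epoch;
  }
}
```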

2. SlotTable update balance algorithm

As described above, SOFARegistry shards data across DataServer nodes, so the next question is how the data is sharded.

SOFARegistry uses pre-allocation.

The traditional consistent hashing algorithm has the property that the range of data assigned to each node is not fixed. Because of this, service registration data has to be redistributed and re-stored whenever a server node crashes, goes offline, or is added, which makes data synchronization difficult. Most data synchronization is performed using the content recorded in the operation log. In traditional consistent hashing, the operation log is divided by node shard, so a change in the nodes changes the data distribution range.

In computing, most problems can be solved by adding an intermediate layer, and the data synchronization problem caused by the non-fixed distribution range can be solved in the same way.

The problem is that after a node goes offline, if data is synchronized according to the consistent hash values of the currently surviving node IDs, the operation log of the failed node can no longer be obtained. Since storing data in a place that changes makes synchronization infeasible, would storing it in a place that never changes make synchronization feasible? The answer is yes. That intermediate layer is the pre-sharding layer: mapping data onto a pre-sharding layer that never changes solves the data synchronization problem.

At present, representative projects in the industry such as Dynamo, Cassandra, Tair, Codis, and Redis Cluster all use a pre-sharding mechanism to implement this unchanging layer.

The data storage range is divided into N slots in advance. Data maps directly to a slot, and the operation log of the data maps to the same slot, which guarantees that data synchronization is feasible. In addition, the concept of a "routing table" is introduced. As shown in Figure 13, the routing table stores the mapping between each node and the N slots and ensures that the slots are allocated to the nodes as evenly as possible. When a node goes online or offline, only the routing table needs to be modified. Keeping the slots unchanged both preserves elastic scaling and greatly reduces the difficulty of data synchronization.
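To make the idea concrete, here is a small sketch of a routing table over a fixed set of slots. The round-robin allocation and the way slots are handed to survivors are assumptions chosen for brevity, not SOFARegistry's actual policy, and `PreShardingSketch` is a hypothetical name. The point it shows is that slot ids never change; only the slot-to-node mapping does.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

final class PreShardingSketch {
  // Assign slot ids 0..slotNum-1 to nodes round-robin (assumed policy).
  static Map<Integer, String> allocate(int slotNum, List<String> nodes) {
    Map<Integer, String> table = new HashMap<>();
    for (int slotId = 0; slotId < slotNum; slotId++) {
      table.put(slotId, nodes.get(slotId % nodes.size()));
    }
    return table;
  }

  // Count how many slots each node owns.
  static Map<String, Integer> load(Map<Integer, String> table) {
    Map<String, Integer> counts = new HashMap<>();
    for (String node : table.values()) {
      counts.merge(node, 1, Integer::sum);
    }
    return counts;
  }

  // When a node goes offline, only its slots are remapped to the survivors.
  // The slot ids themselves, and the slots of other nodes, are untouched.
  static Map<Integer, String> removeNode(
      Map<Integer, String> table, String offline, List<String> survivors) {
    Map<Integer, String> next = new HashMap<>(table);
    int i = 0;
    for (Map.Entry<Integer, String> e : table.entrySet()) {
      if (e.getValue().equals(offline)) {
        next.put(e.getKey(), survivors.get(i++ % survivors.size()));
      }
    }
    return next;
  }
}
```

With 8 slots and nodes `a`, `b`, `c`, the initial load is 3, 3, and 2 slots. After `c` goes offline its two slots move to the survivors, while every other slot keeps its node.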

In the source code, this mapping between slots and nodes is expressed by SlotTable and Slot, as shown in the following code block.

public final class SlotTable implements Serializable {
  public static final SlotTable INIT = new SlotTable(-1, Collections.emptyList());
  // epoch of the last update
  private final long epoch;
  // all slot information: a mapping from slotId to Slot object
  private final Map<Integer, Slot> slots;
}

public final class Slot implements Serializable, Cloneable {
  public enum Role {
    Leader,
    Follower,
  }
  private final int id;
  // leader node of this slot
  private final String leader;
  // time of the most recent update
  private final long leaderEpoch;
  // follower nodes of this slot
  private final Set<String> followers;
}

Because nodes change dynamically, the mapping between slots and nodes also changes all the time, so our next focus is how SlotTable changes. Changes to SlotTable are triggered on the Meta node: a change is triggered when a service goes online or offline, and SlotTable changes are also executed periodically.

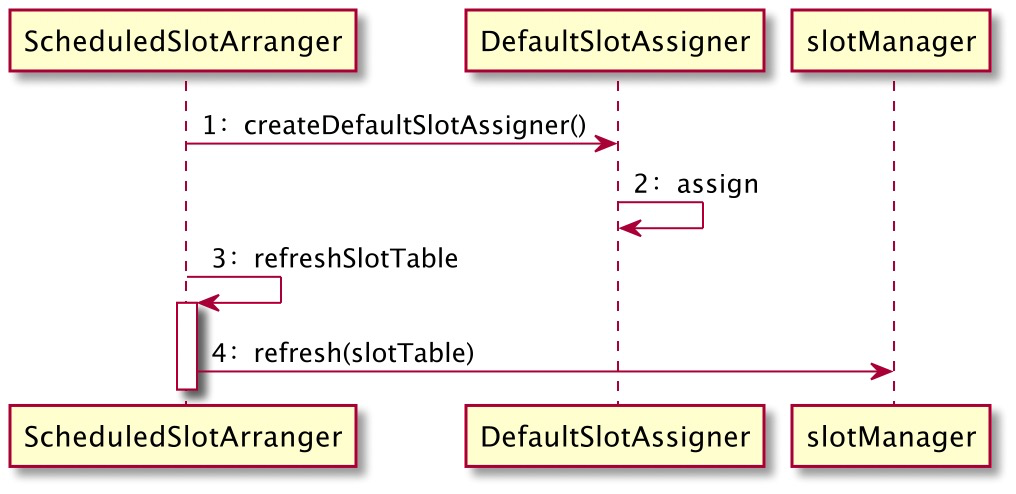

The complete synchronous update procedure for SlotTable is shown in the figure.

Code reference:

com.alipay.sofa.registry.server.meta.slot.arrange.ScheduledSlotArranger#arrangeSync

SlotTable is changed periodically by a daemon thread that is created when ScheduledSlotArranger is initialized and that repeatedly runs the arrangeSync method of the internal task Arranger. The general process is as follows.

Because the master node of MetaServer is responsible for updating SlotTable, the first step is to determine whether the current node is the master node. Only the master node performs the actual SlotTable change.

The second step is to get the latest list of DataServer nodes, because reassigning SlotTable is essentially reassigning the mapping between DataServer nodes and slots. The currently surviving DataServer nodes must therefore be known before slots can be allocated.

(The surviving DataServers are those that maintain a heartbeat with MetaServer. The underlying data comes from com.alipay.sofa.registry.server.meta.lease.impl.SimpleLeaseManager; you can check the relevant source code if you are interested.)

The third step is the pre-allocation check, which tests some boundary conditions, such as whether the DataServer list is empty and whether the number of DataServers is greater than the configured minDataNodeNum. The change proceeds only if these conditions are met.

The fourth step is to execute the tryArrangeSlots method. Let's step inside it.

First, the method acquires a process-internal lock, which is a ReentrantLock. Its main purpose is to prevent the scheduled task from running SlotTable allocation multiple times concurrently.
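The pre-check and the lock can be pictured together as a guard around the allocation. The sketch below is an assumption-laden illustration: `ArrangeGuardSketch`, `tryArrange`, and the use of `tryLock` (rather than a blocking `lock`) are hypothetical choices, and the strict `>` comparison simply follows the wording above; the real boundary may be inclusive.

```java
import java.util.List;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

final class ArrangeGuardSketch {
  private final Lock lock = new ReentrantLock();
  private final int minDataNodeNum; // hypothetical configuration value
  int arrangeCount = 0;

  ArrangeGuardSketch(int minDataNodeNum) {
    this.minDataNodeNum = minDataNodeNum;
  }

  // Boundary checks performed before any allocation is attempted.
  boolean preCheck(List<String> dataServers) {
    return dataServers != null
        && !dataServers.isEmpty()
        && dataServers.size() > minDataNodeNum;
  }

  // Returns true only if this call actually ran the arrangement.
  boolean tryArrange(List<String> dataServers) {
    if (!preCheck(dataServers)) {
      return false;
    }
    if (!lock.tryLock()) {
      return false; // another arrangement is already in progress
    }
    try {
      arrangeCount++; // placeholder for the real slot allocation
      return true;
    } finally {
      lock.unlock();
    }
  }
}
```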

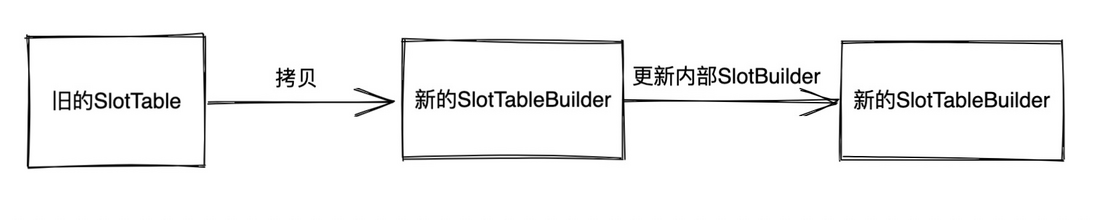

private final Lock lock = new ReentrantLock();

Next, a SlotTableBuilder is created from the current data node information. What is SlotTableBuilder? Recall how SlotTable is updated: in general, a new SlotTable object is created and then swapped in for the old one, which avoids concurrency-related consistency problems that are hard to control. As its name suggests, SlotTableBuilder is the builder of SlotTable, and it aggregates SlotBuilder objects internally, much as SlotTable aggregates Slot objects.

Before looking at the SlotTable change algorithm, let's look at how SlotTableBuilder is created. The structure of SlotTableBuilder is shown below.

public class SlotTableBuilder {

//当前正在创建的 Slot 信息

private final Map<Integer, SlotBuilder> buildingSlots = Maps.newHashMapWithExpectedSize(256);

// 反向查询索引数据、通过 节点查询该节点目前负责哪些 slot 的数据的管理。

private final Map<String, DataNodeSlot> reverseMap = Maps.newHashMap();

//slot 槽的个数

private final int slotNums;

//follow 节点的数量

private final int followerNums;

//最近一次更新的时间

private long epoch;

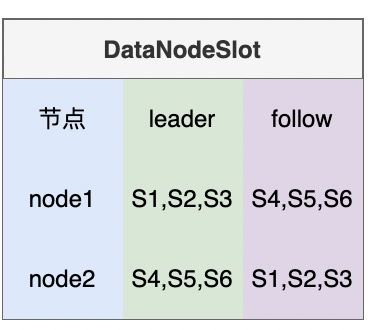

}From the SlotTableBuilder, it can be seen that a buildingSlots is aggregated internally to identify the Slot being created. Because SlotTable is composed of Slots , this is also easy to understand. In addition, SlotTableBuilder also aggregates a reverseMap, which represents the reverse query index. The key of this map is dataServer and the value is the DataNodeSlot object. The source code of DataNodeSlot is as follows.

/**
 * Find the leader and followers through a Slot.
 * In essence, this looks up slots by node: the slots for which the node is the leader,
 * and the slots for which the node is a follower.
 * In other words, the slots in which this node is the leader are stored in Set<Integer> leaders,
 * and the slots in which this node is a follower are stored in Set<Integer> followers.
 */
public final class DataNodeSlot {
  private final String dataNode;
  private final Set<Integer> leaders = Sets.newTreeSet();
  private final Set<Integer> followers = Sets.newTreeSet();
}

A figure illustrating DataNodeSlot is shown below. It is the exact reverse of the mapping in Figure 1: given a node, it finds the slots associated with that node. Because this lookup is used frequently later on, the relationship is stored directly. For convenience in the rest of the article, we define the following terms.

- The set of slots for which a node is the leader is called the node's leader slot set.

- The set of slots for which a node is a follower is called the node's follower slot set.

- All nodes associated with SlotTable are collectively called the node list of SlotTable.
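The reverse index can be built in a single pass over the slots. The following sketch uses simplified stand-in types (`SimpleSlot`, `NodeSlots`) rather than the real Slot and DataNodeSlot classes, so all names in it are hypothetical.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

final class ReverseIndexSketch {
  // Simplified slot: an id, one leader, and a set of followers.
  static final class SimpleSlot {
    final int id;
    final String leader;
    final Set<String> followers;

    SimpleSlot(int id, String leader, Set<String> followers) {
      this.id = id;
      this.leader = leader;
      this.followers = followers;
    }
  }

  // Per-node view: the node's leader slot set and follower slot set.
  static final class NodeSlots {
    final Set<Integer> leaders = new TreeSet<>();
    final Set<Integer> followers = new TreeSet<>();
  }

  // Invert the slot -> node mapping into a node -> slots mapping.
  static Map<String, NodeSlots> buildReverseMap(List<SimpleSlot> slots) {
    Map<String, NodeSlots> reverse = new HashMap<>();
    for (SimpleSlot slot : slots) {
      if (slot.leader != null) {
        reverse.computeIfAbsent(slot.leader, k -> new NodeSlots()).leaders.add(slot.id);
      }
      for (String follower : slot.followers) {
        reverse.computeIfAbsent(follower, k -> new NodeSlots()).followers.add(slot.id);
      }
    }
    return reverse;
  }
}
```

With the index in place, questions such as "how many slots does this node lead?" become a constant-time lookup instead of a scan over every slot.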

Now back to the creation of SlotTableBuilder.

private SlotTableBuilder createSlotTableBuilder(SlotTable slotTable,
    List<String> currentDataNodeIps,
    int slotNum, int replicas) {
  // NodeComparator wraps the nodes that were added and removed
  NodeComparator comparator = new NodeComparator(slotTable.getDataServers(), currentDataNodeIps);
  SlotTableBuilder slotTableBuilder = new SlotTableBuilder(slotTable, slotNum, replicas);
  // initialize slotTableBuilder
  slotTableBuilder.init(currentDataNodeIps);
  // remove the data nodes that have gone offline;
  // the removed nodes are computed by the getDiff method inside NodeComparator
  comparator.getRemoved().forEach(slotTableBuilder::removeDataServerSlots);
  return slotTableBuilder;
}

The slotTable parameter is the old SlotTable object, obtained through the SlotManager object. currentDataNodeIps is the list of currently surviving dataServers (those that maintain a connection with MetaServer through heartbeats), which is passed into createSlotTableBuilder. Internally, createSlotTableBuilder uses a NodeComparator object to compute and wrap the difference between the old node list of SlotTable and the incoming currentDataNodeIps, that is, the DataServers that were added and removed. It then calls the init method of SlotTableBuilder to initialize the builder.
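The difference that NodeComparator wraps amounts to two set differences. The sketch below shows that computation with a hypothetical `NodeDiffSketch` class; it is not the actual NodeComparator source.

```java
import java.util.Collection;
import java.util.Set;
import java.util.TreeSet;

final class NodeDiffSketch {
  final Set<String> added;
  final Set<String> removed;

  NodeDiffSketch(Collection<String> previous, Collection<String> current) {
    // Nodes in the current list that the old table did not know about.
    added = new TreeSet<>(current);
    added.removeAll(previous);
    // Nodes in the old table that are no longer alive.
    removed = new TreeSet<>(previous);
    removed.removeAll(current);
  }
}
```

For an old node list of `a, b, c` and a current list of `b, c, d`, `added` contains `d` and `removed` contains `a`. Only the removed nodes need to be stripped from the builder; added nodes simply become candidates in the later assignment.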

The init source code of SlotTableBuilder is as follows.

public void init(List<String> dataServers) {
  for (int slotId = 0; slotId < slotNums; slotId++) {
    Slot slot = initSlotTable == null ? null : initSlotTable.getSlot(slotId);
    if (slot == null) {
      getOrCreate(slotId);
      continue;
    }
    // 1. create a new SlotBuilder and copy the data of the original Slot into it
    // 2. copy the leader node
    SlotBuilder slotBuilder =
        new SlotBuilder(slotId, followerNums, slot.getLeader(), slot.getLeaderEpoch());
    // 3. copy the follower nodes
    slotBuilder.addFollower(initSlotTable.getSlot(slotId).getFollowers());
    buildingSlots.put(slotId, slotBuilder);
  }
  // 4. initialize the reverse index used to look up which slots a node currently manages
  initReverseMap(dataServers);
}

As the code shows, init initializes the SlotBuilder objects inside SlotTableBuilder and copies all the leader and follower nodes from the old SlotTable. Note that the old SlotTable is passed in when SlotTableBuilder is instantiated; it is the initSlotTable object here.

The last step of init, initReverseMap, builds the reverse routing table, as its name suggests: a lookup from node to slots. Subsequent processing frequently needs to know for which slots a data node is the leader and for which slots it is a follower, so an index is built here.

Go back to the last step of the createSlotTableBuilder method in the ScheduledSlotArranger class. At this point, SlotTableBuilder has finished copying the data of the old SlotTable.

comparator.getRemoved().forEach(slotTableBuilder::removeDataServerSlots);

As mentioned above, the comparator object holds the differences between the new dataServer list and the old node list of SlotTable.

The nodes that are no longer in the new dataServer list therefore need to be removed from SlotTableBuilder. The removal logic iterates over all SlotBuilders: it removes the node from each slot's followers, and if the node is the slot's leader, it clears the leader.

public void removeDataServerSlots(String dataServer) {
  for (SlotBuilder slotBuilder : buildingSlots.values()) {
    // remove dataServer from the followers of this SlotBuilder
    slotBuilder.removeFollower(dataServer);
    // if the leader of this SlotBuilder is dataServer,
    // set the leader to null so that it is reassigned later
    if (dataServer.equals(slotBuilder.getLeader())) {
      slotBuilder.setLeader(null);
    }
  }
  reverseMap.remove(dataServer);
}

To sum up, creating SlotTableBuilder consists of instantiating SlotTableBuilder (and its internal SlotBuilders) from the old SlotTable, computing the difference between the old node list of SlotTable and the latest dataServer list, and updating the leader and follower values of the SlotBuilders inside SlotTableBuilder accordingly.

At this point, the construction of SlotTableBuilder is complete. What comes next?

Suppose the reassignment of SlotTable was triggered because a node dataA went offline. The slotTableBuilder::removeDataServerSlots step then removes dataA as leader or follower from the slots it managed in the SlotTableBuilder being built, so the leader or followers of those slots may become empty, which means the slot has no data node to process requests. We therefore decide whether to reassign based on whether the current SlotTableBuilder contains any slot whose assignment is incomplete. The code that checks for such slots is as follows.

public boolean hasNoAssignedSlots() {
  for (SlotBuilder slotBuilder : buildingSlots.values()) {
    if (StringUtils.isEmpty(slotBuilder.getLeader())) {
      // the leader of this slot is empty
      return true;
    }
    if (slotBuilder.getFollowerSize() < followerNums) {
      // this slot has fewer followers than the configured followerNums
      return true;
    }
  }
  return false;
}

Once SlotTableBuilder has been created and some slots are not fully assigned, the actual allocation process is performed, as shown in the following figure.

It can be seen from the figure that the allocation is finally delegated to DefaultSlotAssigner. In its constructor, DefaultSlotAssigner holds the SlotTableBuilder being built, a view of currentDataServers, and a MigrateSlotGroup. MigrateSlotGroup internally records the slots that lack a leader or followers.

public class MigrateSlotGroup {
  // slots that lack a leader
  private final Set<Integer> leaders = Sets.newHashSet();
  // slots that lack followers, and how many each one lacks
  private final Map<Integer, Integer> lackFollowers = Maps.newHashMap();
}

The assign code is as follows. It first assigns the slots that lack a leader, and then the slots that lack followers.

public SlotTable assign() {
  BalancePolicy balancePolicy = new NaiveBalancePolicy();
  final int ceilAvg =
      MathUtils.divideCeil(slotTableBuilder.getSlotNums(), currentDataServers.size());
  final int high = balancePolicy.getHighWaterMarkSlotLeaderNums(ceilAvg);
  // assign the slots that lack a leader
  if (tryAssignLeaderSlots(high)) {
    slotTableBuilder.incrEpoch();
  }
  // assign the slots that lack followers
  if (assignFollowerSlots()) {
    slotTableBuilder.incrEpoch();
  }
  return slotTableBuilder.build();
}

Leader node assignment

Step into the tryAssignLeaderSlots method to see the details of the allocation algorithm. The implementation is explained through code comments.

private boolean tryAssignLeaderSlots(int highWatermark) {
  // Sort by the number of followers in descending order; 0 is special and is placed last.
  // Why is 0 special? Because however the slot is assigned, the chosen leader cannot be
  // one of the slot's followers, since the slot has no followers.
  // Slots with fewer followers are arranged first.
  // This makes sense: among the slots without a leader, those with fewer followers should be
  // assigned first, in case the remaining follower also fails and data is lost.
  List<Integer> leaders =
      migrateSlotGroup.getLeadersByScore(new FewerFollowerFirstStrategy(slotTableBuilder));
  for (int slotId : leaders) {
    List<String> currentDataNodes = Lists.newArrayList(currentDataServers);
    // how is the nextLeader node selected?
    String nextLeader =
        Selectors.slotLeaderSelector(highWatermark, slotTableBuilder, slotId)
            .select(currentDataNodes);
    // check whether nextLeader is a follower of this slot, i.e. whether a follower is being promoted to leader
    boolean nextLeaderWasFollower = isNextLeaderFollowerOfSlot(slotId, nextLeader);
    // replace the leader of this slot with the selected nextLeader
    slotTableBuilder.replaceLeader(slotId, nextLeader);
    if (nextLeaderWasFollower) {
      // a follower was promoted to leader, so this slot is short of followers again
      // and needs another follower assigned
      migrateSlotGroup.addFollower(slotId);
    }
  }
  return true;
}

The core of the leader assignment code above is the selection of nextLeader.

String nextLeader =
    Selectors.slotLeaderSelector(highWatermark, slotTableBuilder, slotId)
        .select(currentDataNodes);

A suitable leader node is selected through Selectors.

Continue tracing into the DefaultSlotLeaderSelector.select method. Again, code comments explain the implementation.

public String select(Collection<String> candidates) {
  // candidates: all current candidate nodes, i.e. the currentDataServers passed in by tryAssignLeaderSlots
  Set<String> currentFollowers = slotTableBuilder.getOrCreate(slotId).getFollowers();
  Collection<String> followerCandidates = Lists.newArrayList(candidates);
  followerCandidates.retainAll(currentFollowers);
  // After followerCandidates.retainAll(currentFollowers), followerCandidates
  // keeps only the followers of the current slot.
  // The strategy is to prefer the follower that is the leader of the fewest other slots.
  // In plain words: the less a follower serves as leader for other slots,
  // the more "idle" it is, so it is preferred as the leader.
  String leader = new LeastLeaderFirstSelector(slotTableBuilder).select(followerCandidates);
  if (leader != null) {
    DataNodeSlot dataNodeSlot = slotTableBuilder.getDataNodeSlot(leader);
    if (dataNodeSlot.getLeaders().size() < highWaterMark) {
      return leader;
    }
  }
  // otherwise choose from the other machines, preferring the DataServer that leads the fewest slots
  return new LeastLeaderFirstSelector(slotTableBuilder).select(candidates);
}

The comments in the select method above should make SOFARegistry's approach easy to understand. To sum up, the leader is first sought among the followers of the current slot, because then no data migration is required; this is equivalent to promoting a backup node when the master node fails, which achieves high availability. As for which follower to choose, SOFARegistry's strategy is to pick the most "idle" one. However, if even the most idle follower already leads at least highWaterMark slots, then all followers of the slot are too "busy", and the node that leads the fewest slots is selected from all surviving machines instead, although this case incurs data synchronization overhead.
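The selection rule summarized above can be reproduced in isolation with plain collections. In this sketch the per-node leader counts are passed in as a map, which is a simplification of the DataNodeSlot lookup. `LeaderSelectSketch` and its tie-breaking by node name are assumptions added to keep the result deterministic.

```java
import java.util.ArrayList;
import java.util.Collection;
import java.util.List;
import java.util.Map;
import java.util.Set;

final class LeaderSelectSketch {
  // Pick the node that leads the fewest slots; ties are broken by name (assumption).
  static String leastLeaderFirst(Collection<String> nodes, Map<String, Integer> leaderCounts) {
    String best = null;
    for (String node : nodes) {
      if (best == null) {
        best = node;
        continue;
      }
      int cmp = Integer.compare(
          leaderCounts.getOrDefault(node, 0), leaderCounts.getOrDefault(best, 0));
      if (cmp < 0 || (cmp == 0 && node.compareTo(best) < 0)) {
        best = node;
      }
    }
    return best;
  }

  static String selectLeader(Set<String> slotFollowers, Collection<String> aliveNodes,
      Map<String, Integer> leaderCounts, int highWaterMark) {
    // Keep only the alive nodes that already follow this slot.
    List<String> followerCandidates = new ArrayList<>(aliveNodes);
    followerCandidates.retainAll(slotFollowers);
    String follower = leastLeaderFirst(followerCandidates, leaderCounts);
    if (follower != null && leaderCounts.getOrDefault(follower, 0) < highWaterMark) {
      return follower; // promoting a follower needs no data migration
    }
    // Every follower is too busy (or there is none): take the least-loaded alive node.
    return leastLeaderFirst(aliveNodes, leaderCounts);
  }
}
```

For example, with leader counts A=5, B=2, C=1, D=0 and followers {A, B}, a high watermark of 3 selects B, while a high watermark of 2 rejects B and falls back to D.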

Follower node assignment

Again, the details are given through source code comments.

private boolean assignFollowerSlots() {
  // with the FollowerEmergentScoreJury scoring strategy,
  // the more followers a slot lacks, the earlier it is sorted
  List<MigrateSlotGroup.FollowerToAssign> followerToAssigns =
      migrateSlotGroup.getFollowersByScore(new FollowerEmergentScoreJury());
  int assignCount = 0;
  for (MigrateSlotGroup.FollowerToAssign followerToAssign : followerToAssigns) {
    // the slotId currently being assigned
    final int slotId = followerToAssign.getSlotId();
    // the number of followers still to be assigned for this slotId; assign them one by one
    for (int i = 0; i < followerToAssign.getAssigneeNums(); i++) {
      final List<String> candidates = Lists.newArrayList(currentDataServers);
      // using the DataNodeSlot structure described above, sort the nodes by the number of
      // slots they follow, in ascending order;
      // when that number is equal, sort by the fewest slots led;
      // the goal is to find the most "idle" machine
      candidates.sort(Comparators.leastFollowersFirst(slotTableBuilder));
      boolean assigned = false;
      for (String candidate : candidates) {
        DataNodeSlot dataNodeSlot = slotTableBuilder.getDataNodeSlot(candidate);
        // skip nodes that are already a follower or the leader of this slot
        if (dataNodeSlot.containsFollower(slotId) || dataNodeSlot.containsLeader(slotId)) {
          continue;
        }
        // add the candidate as a follower of the current slotId
        slotTableBuilder.addFollower(slotId, candidate);
        assigned = true;
        assignCount++;
        break;
      }
    }
  }
  return assignCount != 0;
}

As mentioned earlier, MigrateSlotGroup holds the slots that need leaders and followers reassigned. The main steps of the algorithm are as follows.

- Find all slots that do not have enough followers.

- Sort them so that the more followers a slot lacks, the higher its priority.

- Iterate over the slots that lack followers; each one is wrapped in a MigrateSlotGroup.FollowerToAssign.

- In the inner loop, iterate once for each follower that the slot is missing.

- Sort the candidate dataServers by how "idle" or "busy" they are.

- Add the chosen node as a follower.

At this point, we have completed the node allocation for the slot that lacks the leader or follower.
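The candidate ordering and the skip rule for follower assignment can be sketched with two plain maps standing in for the reverse index. `FollowerAssignSketch` is a hypothetical helper, and the final tie-break by node name is an assumption added for determinism.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

final class FollowerAssignSketch {
  static int size(Map<String, Set<Integer>> index, String node) {
    return index.getOrDefault(node, Collections.emptySet()).size();
  }

  // Assign one follower to slotId. leaders/followers map a node to the slots it leads/follows.
  // Returns the chosen node, or null if no node is eligible.
  static String assignFollower(int slotId, List<String> nodes,
      Map<String, Set<Integer>> leaders, Map<String, Set<Integer>> followers) {
    List<String> candidates = new ArrayList<>(nodes);
    // Fewest followed slots first, then fewest led slots, then name.
    candidates.sort(
        Comparator.comparingInt((String n) -> size(followers, n))
            .thenComparingInt(n -> size(leaders, n))
            .thenComparing(Comparator.naturalOrder()));
    for (String candidate : candidates) {
      boolean leads = leaders.getOrDefault(candidate, Collections.emptySet()).contains(slotId);
      boolean follows = followers.getOrDefault(candidate, Collections.emptySet()).contains(slotId);
      if (leads || follows) {
        continue; // a node cannot hold two roles for the same slot
      }
      followers.computeIfAbsent(candidate, k -> new TreeSet<>()).add(slotId);
      return candidate;
    }
    return null;
  }
}
```

Calling `assignFollower` once per missing follower, for slots ordered by how many followers they lack, follows the steps listed above.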

SlotTable Balance Algorithm

Now that we understand how SlotTable changes and the algorithm behind it, the balancing process of SlotTable is similar. For details, refer to the source code com.alipay.sofa.registry.server.meta.slot.balance.DefaultSlotBalancer.

As nodes frequently go online and offline, the load (the number of slots a node manages) of some nodes inevitably becomes too high while that of others stays very low, so a dynamic balancing mechanism is needed to keep the load across nodes relatively balanced.
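Deciding which nodes count as overloaded comes down to a ceiling average plus a tolerance. The sketch below assumes the high watermark is the ceiling average raised by a percentage; that formula, the 10% figure in the usage note, and the name `WatermarkSketch` are illustrative assumptions rather than the confirmed behavior of the balance policy.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

final class WatermarkSketch {
  // Integer division rounded up, for positive operands.
  static int divideCeil(int dividend, int divisor) {
    return (dividend + divisor - 1) / divisor;
  }

  // Assumed policy: tolerate thresholdPercent above the ceiling average.
  static int highWaterMark(int ceilAvg, int thresholdPercent) {
    return divideCeil(ceilAvg * (100 + thresholdPercent), 100);
  }

  // Nodes that lead more than threshold slots, most loaded first.
  static List<String> overloaded(Map<String, Integer> leaderCounts, int threshold) {
    List<String> result = new ArrayList<>();
    for (Map.Entry<String, Integer> e : leaderCounts.entrySet()) {
      if (e.getValue() > threshold) {
        result.add(e.getKey());
      }
    }
    result.sort((a, b) -> {
      int cmp = Integer.compare(leaderCounts.get(b), leaderCounts.get(a));
      return cmp != 0 ? cmp : a.compareTo(b);
    });
    return result;
  }
}
```

With 256 slots and 3 nodes, the ceiling average is 86, and a 10% tolerance gives a high watermark of 95. A node leading 120 or 96 slots is then overloaded, while a node leading 40 is not. The tolerance keeps small imbalances from triggering constant reshuffling.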

The entry point is the DefaultSlotBalancer.balance method.

public SlotTable balance() {
  // balance the leader nodes
  if (balanceLeaderSlots()) {
    LOGGER.info("[balanceLeaderSlots] end");
    slotTableBuilder.incrEpoch();
    return slotTableBuilder.build();
  }
  if (balanceHighFollowerSlots()) {
    LOGGER.info("[balanceHighFollowerSlots] end");
    slotTableBuilder.incrEpoch();
    return slotTableBuilder.build();
  }
  if (balanceLowFollowerSlots()) {
    LOGGER.info("[balanceLowFollowerSlots] end");
    slotTableBuilder.incrEpoch();
    return slotTableBuilder.build();
  }
  // check the low watermark leader, the follower has balanced
  // just upgrade the followers in low data server
  if (balanceLowLeaders()) {
    LOGGER.info("[balanceLowLeaders] end");
    slotTableBuilder.incrEpoch();
    return slotTableBuilder.build();
  }
  return null;
}

Due to space limitations, only the leader balancing process, balanceLeaderSlots(), is analyzed here. The other steps in the source code above are similar, and interested readers can work through the source code themselves.

Step inside the balanceLeaderSlots method.

private boolean balanceLeaderSlots() {
  // compute the ceiling on the number of slots that each dataServer may lead;
  // the natural approach is the average: there are slotNum slots in total,
  // and their leadership is divided evenly among the currentDataServers
  final int leaderCeilAvg = MathUtils.divideCeil(slotNum, currentDataServers.size());
  if (upgradeHighLeaders(leaderCeilAvg)) {
    // a leader was replaced, so return directly without running migrateHighLeaders
    return true;
  }
  if (migrateHighLeaders(leaderCeilAvg)) {
    // upgradeHighLeaders above could not find a follower to promote,
    // because all the followers are busy too and are in excludes.
    // So we try to migrate followers instead.
    // Since all followers of the highLeader are busy, these busy nodes are migrated so that
    // the slots led by the highLeader get some more idle followers
    return true;
  }
  return false;
}

We focus on the upgradeHighLeaders method, again using source code comments.

private boolean upgradeHighLeaders(int ceilAvg) {
  // "if the number of slots a node leads exceeds the threshold, the leader is replaced
  // by a follower of the target slot"; at most maxMove moves are made
  final int maxMove = balancePolicy.getMaxMoveLeaderSlots();
  // In principle, nodes that lead more than ceilAvg slots could simply be replaced by other nodes.
  // Why round up once more? Mainly to prevent SlotTable from jittering: upper and lower thresholds
  // are set for triggering a change, and the rounded-up value here serves as the imbalance threshold,
  // so that only imbalance beyond a (controllable) amount is rebalanced
  final int threshold = balancePolicy.getHighWaterMarkSlotLeaderNums(ceilAvg);
  int balanced = 0;
  Set<String> notSatisfies = Sets.newHashSet();
  // loop and perform replacements, at most maxMove times by default
  while (balanced < maxMove) {
    int last = balanced;
    // 1. find the nodes whose leader count exceeds threshold, sorted by leader count in descending order
    final List<String> highDataServers = findDataServersLeaderHighWaterMark(threshold);
    if (highDataServers.isEmpty()) {
      break;
    }
    // no follower can be promoted to leader
    if (notSatisfies.containsAll(highDataServers)) {
      break;
    }
    // 2. find the nodes that can become the new leader, excluding the nodes that cannot take
    // any more leaders because their leader count has already reached the threshold
    final Set<String> excludes = Sets.newHashSet(highDataServers);
    excludes.addAll(findDataServersLeaderHighWaterMark(threshold - 1));
    for (String highDataServer : highDataServers) {
      if (notSatisfies.contains(highDataServer)) {
        // this node is already known to be irreplaceable, so do not search for a replacement again
        continue;
      }
      // Find a node that can become the new leader, excluding the nodes in excludes.
      // The algorithm:
      // 1. among all slots led by highDataServer, find a slot one of whose followers is the
      //    most idle node (the node that leads the fewest slots)
      // 2. replace the leader of that slot with the follower that was found
      // From a broader view: sort the followers of all slots led by highDataServer by how busy
      // they are, pick the most idle one, and make it the leader in place of highDataServer
      Tuple<String, Integer> selected = selectFollower4LeaderUpgradeOut(highDataServer, excludes);
      if (selected == null) {
        // no follower was found to replace highDataServer, so it cannot be replaced;
        // add it to notSatisfies so that the outer loop filters it out directly
        notSatisfies.add(highDataServer);
        continue;
      }
      // the slotId of highDataServer whose leader can be replaced
      final int slotId = selected.o2;
      // the node newLeaderDataServer that replaces highDataServer as the leader of slotId
      final String newLeaderDataServer = selected.o1;
      // replace the old leader of slotId with newLeaderDataServer
      slotTableBuilder.replaceLeader(slotId, newLeaderDataServer);
      balanced++;
    }
    if (last == balanced) break;
  }
  return balanced != 0;
}

Next comes the key part: finding the replaceable slotId and the new leader node. Again, source code comments explain it.

/*
 * From all the slots led by leaderDataServer, select a replaceable slotId
 * and a new leader to replace leaderDataServer.
 */
private Tuple<String, Integer> selectFollower4LeaderUpgradeOut(
    String leaderDataServer, Set<String> excludes) {
  // get the view of the slotIds that leaderDataServer leads or follows; DataNodeSlot was described above
  final DataNodeSlot dataNodeSlot = slotTableBuilder.getDataNodeSlot(leaderDataServer);
  Set<Integer> leaderSlots = dataNodeSlot.getLeaders();
  Map<String, List<Integer>> dataServers2Followers = Maps.newHashMap();
  // 1. from dataNodeSlot, get all the slotIds led by leaderDataServer: leaderSlots
  for (int slot : leaderSlots) {
    // 2. find the followers of the current slot in slotTableBuilder
    List<String> followerDataServers = slotTableBuilder.getDataServersOwnsFollower(slot);
    // 3. remove excludes to get the candidates, since nodes in excludes cannot become the new leader
    followerDataServers = getCandidateDataServers(excludes, null, followerDataServers);
    // 4. build the mapping from each candidate node to its list of slotIds
    for (String followerDataServer : followerDataServers) {
      List<Integer> followerSlots =
          dataServers2Followers.computeIfAbsent(followerDataServer, k -> Lists.newArrayList());
      followerSlots.add(slot);
    }
  }
  if (dataServers2Followers.isEmpty()) {
    // this can be empty when all followers of leaderDataServer's slots are in excludes
    return null;
  }
  List<String> dataServers = Lists.newArrayList(dataServers2Followers.keySet());
  // sort the candidates by the number of slots they lead, in ascending order, i.e. find the
  // least busy one; see the leastLeadersFirst method for the implementation
  dataServers.sort(Comparators.leastLeadersFirst(slotTableBuilder));
  final String selectedDataServer = dataServers.get(0);
  List<Integer> followers = dataServers2Followers.get(selectedDataServer);
  return Tuple.of(selectedDataServer, followers.get(0));
}

This completes the replacement of high-load leader nodes. If any replacement happened, the method returns directly. If not, the migrateHighLeaders operation in DefaultSlotBalancer is executed next: no leader replacement after upgradeHighLeaders means that the followers of the high-load leader node are busy too, so these busy followers need to be migrated. We continue with the source code.

private boolean migrateHighLeaders(int ceilAvg) {

final int maxMove = balancePolicy.getMaxMoveFollowerSlots();

final int threshold = balancePolicy.getHighWaterMarkSlotLeaderNums(ceilAvg);

int balanced = 0;

while (balanced < maxMove) {

int last = balanced;

// 1. find the dataNode which has leaders more than high water mark

// and sorted by leaders.num desc

final List<String> highDataServers = findDataServersLeaderHighWaterMark(threshold);

if (highDataServers.isEmpty()) {

return false;

}

// 2. find the dataNode which could own a new leader

// exclude the high

final Set<String> excludes = Sets.newHashSet(highDataServers);

// exclude the dataNode which could not add any leader

excludes.addAll(findDataServersLeaderHighWaterMark(threshold - 1));

final Set<String> newFollowerDataServers = Sets.newHashSet();

// only balance highDataServer once at one round, avoid the follower moves multi times

for (String highDataServer : highDataServers) {

Triple<String, Integer, String> selected =

selectFollower4LeaderMigrate(highDataServer, excludes, newFollowerDataServers);

if (selected == null) {

continue;

}

final String oldFollower = selected.getFirst();

final int slotId = selected.getMiddle();

final String newFollower = selected.getLast();

slotTableBuilder.removeFollower(slotId, oldFollower);

slotTableBuilder.addFollower(slotId, newFollower);

newFollowerDataServers.add(newFollower);

balanced++;

}

if (last == balanced) break;

}

return balanced != 0;

}

3. How session and data nodes use routing tables

As described above, the SlotTable routing table is obtained from the Meta node through heartbeats and stored locally. So how do the session and data nodes use it? Let's start with the session node. The session node handles clients' publish and subscribe requests, and it reads and writes data on the data nodes by way of the SlotTable routing table. Its local copy of the routing table is kept in SlotTableCacheImpl.

public final class SlotTableCacheImpl implements SlotTableCache {

// Abstraction over the different algorithms for computing a slot position

private final SlotFunction slotFunction = SlotFunctionRegistry.getFunc();

// Local routing table, obtained from the Meta node via heartbeat.

private volatile SlotTable slotTable = SlotTable.INIT;

// Get the slotId for a dataInfoId

@Override

public int slotOf(String dataInfoId) {

return slotFunction.slotOf(dataInfoId);

}

}

The SlotFunctionRegistry in the source registers two algorithm implementations, Crc32cSlotFunction and MD5SlotFunction. The source is as follows.

public final class SlotFunctionRegistry {

private static final Map<String, SlotFunction> funcs = Maps.newConcurrentMap();

static {

register(Crc32cSlotFunction.INSTANCE);

register(MD5SlotFunction.INSTANCE);

}

public static void register(SlotFunction func) {

funcs.put(func.name(), func);

}

public static SlotFunction getFunc() {

return funcs.get(SlotConfig.FUNC);

}

}

Taking one of the two algorithms, MD5SlotFunction, as an example, the slotId is computed from the dataInfoId as follows.

public final class MD5SlotFunction implements SlotFunction {

public static final MD5SlotFunction INSTANCE = new MD5SlotFunction();

private final int maxSlots;

private final MD5HashFunction md5HashFunction = new MD5HashFunction();

private MD5SlotFunction() {

this.maxSlots = SlotConfig.SLOT_NUM;

}

// The lowest-level logic for computing a slotId: take a hash, then take the remainder modulo the number of slots

@Override

public int slotOf(Object o) {

// make sure >=0

final int hash = Math.abs(md5HashFunction.hash(o));

return hash % maxSlots;

}

}

Now that we know how a slotId is derived from a dataInfoId, let's see when the session node triggers that calculation. The session node handles clients' publish and subscribe requests. When a publish request arrives, the published data has to be written to a data node, so the session node must know which machine stores that data. It finds the slotId for the dataInfoId, looks up the leader node of that slot, and forwards the publish request to that node over the network. On the session node, the handler that receives publish requests is PublisherHandler.
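The routing path just described (dataInfoId to slotId, slotId to leader node) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the SOFARegistry implementation: the class `SlotRoutingSketch` and its methods are hypothetical, the JDK's `java.util.zip.CRC32` stands in for the project's own hash functions, and a plain map stands in for the SlotTable.

```java
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;
import java.util.zip.CRC32;

final class SlotRoutingSketch {
    private final int slotNum;
    // slotId -> address of the data node currently leading that slot
    // (stand-in for the SlotTable received from the Meta node).
    private final Map<Integer, String> leaderBySlot = new HashMap<>();

    SlotRoutingSketch(int slotNum) {
        if (slotNum <= 0) {
            throw new IllegalArgumentException("slotNum must be positive");
        }
        this.slotNum = slotNum;
    }

    void setLeader(int slotId, String dataServer) {
        leaderBySlot.put(slotId, dataServer);
    }

    /** Hash the key, then take the remainder modulo the number of slots. */
    int slotOf(String dataInfoId) {
        CRC32 crc = new CRC32();
        crc.update(dataInfoId.getBytes(StandardCharsets.UTF_8));
        // getValue() is a non-negative long, so the remainder is never negative.
        return (int) (crc.getValue() % slotNum);
    }

    /** Returns the leader for the key's slot, or null if the table has no entry yet. */
    String leaderOf(String dataInfoId) {
        return leaderBySlot.get(slotOf(dataInfoId));
    }

    public static void main(String[] args) {
        SlotRoutingSketch table = new SlotRoutingSketch(16);
        for (int slot = 0; slot < 16; slot++) {
            table.setLeader(slot, "data-" + (slot % 3));
        }
        String key = "com.example.DemoService#DEFAULT_GROUP";
        System.out.println(key + " -> slot " + table.slotOf(key) + " -> " + table.leaderOf(key));
    }
}
```

Since the slot count is fixed, the same dataInfoId always maps to the same slot, and only the slot-to-leader mapping changes when nodes join or leave. One general Java detail worth keeping in mind for int-based hashes: `Math.abs(Integer.MIN_VALUE)` is still negative, so `Math.floorMod` is a safer way to guarantee a non-negative result.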

As shown in the sequence diagram above, the final call, the commitReq method of DataNodeServiceImpl, adds the publish request to an internal BlockingQueue. A Worker object inside DataNodeServiceImpl consumes that BlockingQueue and performs the actual data write. The source is as follows:

private final class Worker implements Runnable {

final BlockingQueue<Req> queue;

Worker(BlockingQueue<Req> queue) {

this.queue = queue;

}

@Override

public void run() {

for (; ; ) {

final Req firstReq = queue.poll(200, TimeUnit.MILLISECONDS);

if (firstReq != null) {

Map<Integer, LinkedList<Object>> reqs =

drainReq(queue, sessionServerConfig.getDataNodeMaxBatchSize());

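// The number of slots may exceed the number of workers/BlockingQueues, so

// slots and workers are not one-to-one, and a single BlockingQueue may hold data bound for several slots.

// All of it may not fit in one send, so it goes out in batches, with the slot of the request that entered the queue first sent first.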

LinkedList<Object> firstBatch = reqs.remove(firstReq.slotId);

if (firstBatch == null) {

firstBatch = Lists.newLinkedList();

}

firstBatch.addFirst(firstReq.req);

request(firstReq.slotId, firstBatch);

for (Map.Entry<Integer, LinkedList<Object>> batch : reqs.entrySet()) {

request(batch.getKey(), batch.getValue());

}

}

}

}

}
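The drain-and-group pattern in the Worker above can be tried in isolation with the following sketch. It is a simplified stand-in rather than the project's code: `SlotBatchingSketch`, `Req`, `Batch`, and `nextBatches` are hypothetical names, and the grouping helper is only a guess at what `drainReq` does based on how its result is used.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.LinkedList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;

final class SlotBatchingSketch {
    static final class Req {
        final int slotId;
        final Object payload;

        Req(int slotId, Object payload) {
            this.slotId = slotId;
            this.payload = payload;
        }
    }

    static final class Batch {
        final int slotId;
        final List<Object> reqs;

        Batch(int slotId, List<Object> reqs) {
            this.slotId = slotId;
            this.reqs = reqs;
        }
    }

    /** Drains up to max queued requests and groups their payloads by slot, keeping arrival order. */
    static Map<Integer, LinkedList<Object>> drainBySlot(BlockingQueue<Req> queue, int max) {
        List<Req> drained = new ArrayList<>();
        queue.drainTo(drained, max);
        Map<Integer, LinkedList<Object>> bySlot = new LinkedHashMap<>();
        for (Req r : drained) {
            bySlot.computeIfAbsent(r.slotId, k -> new LinkedList<>()).add(r.payload);
        }
        return bySlot;
    }

    /** Waits for one request, then returns per-slot batches with the first request's slot in front. */
    static List<Batch> nextBatches(BlockingQueue<Req> queue, int max, long timeoutMs) {
        List<Batch> out = new ArrayList<>();
        final Req first;
        try {
            first = queue.poll(timeoutMs, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt for the caller
            return out;
        }
        if (first == null) {
            return out; // nothing arrived within the timeout
        }
        Map<Integer, LinkedList<Object>> rest = drainBySlot(queue, max);
        LinkedList<Object> firstBatch = rest.remove(first.slotId);
        if (firstBatch == null) {
            firstBatch = new LinkedList<>();
        }
        firstBatch.addFirst(first.payload); // the oldest request leads its batch
        out.add(new Batch(first.slotId, firstBatch));
        for (Map.Entry<Integer, LinkedList<Object>> e : rest.entrySet()) {
            out.add(new Batch(e.getKey(), e.getValue()));
        }
        return out;
    }
}
```

Grouping by slot means each network call carries requests for exactly one slot, so every batch can be addressed to that slot's leader.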

private boolean request(BatchRequest batch) {

final Slot slot = getSlot(batch.getSlotId());

batch.setSlotTableEpoch(slotTableCache.getEpoch());

batch.setSlotLeaderEpoch(slot.getLeaderEpoch());

sendRequest(

new Request() {

@Override

public Object getRequestBody() {

return batch;

}

@Override

public URL getRequestUrl() {

// Find the corresponding leader data node through the slot routing table,

// which was obtained from the Meta node via heartbeat.

return getUrl(slot);

}

});

return true;

}

This source code analysis task let me study the SlotTable data pre-sharding mechanism closely and understand how industrial-grade data sharding is implemented, how high availability is achieved, and what trade-offs are made in the implementation.

That is why I wanted to share this analysis of the SOFARegistry SlotTable with you. Comments and corrections are welcome.

I was also fortunate to take part in a SOFARegistry community meeting. With guidance from senior members of the community, I gained a closer understanding of the design considerations behind specific details, and we discussed the future direction of SOFARegistry together.

Welcome to join and participate in the source code analysis of the SOFA community

At present, the SOFARegistry source code analysis tasks have all been completed, and one Layotto source code analysis task is still open to be claimed. Interested readers are welcome to give it a try.

Layotto

Tasks to be claimed: WebAssembly related

https://github.com/mosn/layotto/issues/427

The source code analysis activities of other projects will also be launched in the future, so stay tuned...
