From "What should I do if the Redis data cache is full?" we know that when the Redis cache is full, an eviction policy can delete data to make room for new data.

The eviction policies are as follows:

Keys with an expiration time set

volatile-ttl, volatile-random, volatile-lru, volatile-lfu: these four policies only evict keys that have an expiration time set.

All keys

allkeys-lru, allkeys-random, allkeys-lfu: when memory is insufficient, these three policies evict keys regardless of whether they have an expiration time set.

This means a key can be deleted even if it has not expired. Of course, once its expiration time has passed, a key is deleted even if the eviction policy never selects it.
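The difference between the two groups can be sketched in a few lines of Java. This is only an illustrative model, not Redis code: the `EvictionScope` class and the map of expiry timestamps are assumptions made up for this example.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical model: key -> expiry timestamp in ms, or null when no TTL is set.
class EvictionScope {
    // Returns the keys a policy is allowed to evict, judged only by its name prefix.
    static List<String> candidates(String policy, Map<String, Long> expiryByKey) {
        List<String> result = new ArrayList<>();
        for (Map.Entry<String, Long> e : expiryByKey.entrySet()) {
            boolean hasTtl = e.getValue() != null;
            if (policy.startsWith("allkeys-") || (policy.startsWith("volatile-") && hasTtl)) {
                result.add(e.getKey());
            }
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, Long> keys = new LinkedHashMap<>();
        keys.put("session:1", 1_700_000_000_000L); // has an expiration time
        keys.put("config:site", null);             // no expiration time
        System.out.println(candidates("volatile-lru", keys)); // [session:1]
        System.out.println(candidates("allkeys-lru", keys));  // [session:1, config:site]
    }
}
```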

volatile-ttl and volatile-random are simple; the focus is on volatile-lru and volatile-lfu, which involve the LRU and LFU algorithms.

Today, Brother Code will walk you through the Redis LRU algorithm...

Approximate LRU Algorithm

What is the LRU algorithm?

LRU stands for Least Recently Used.

The core idea is: "if data has been accessed recently, it is more likely to be accessed again in the future."

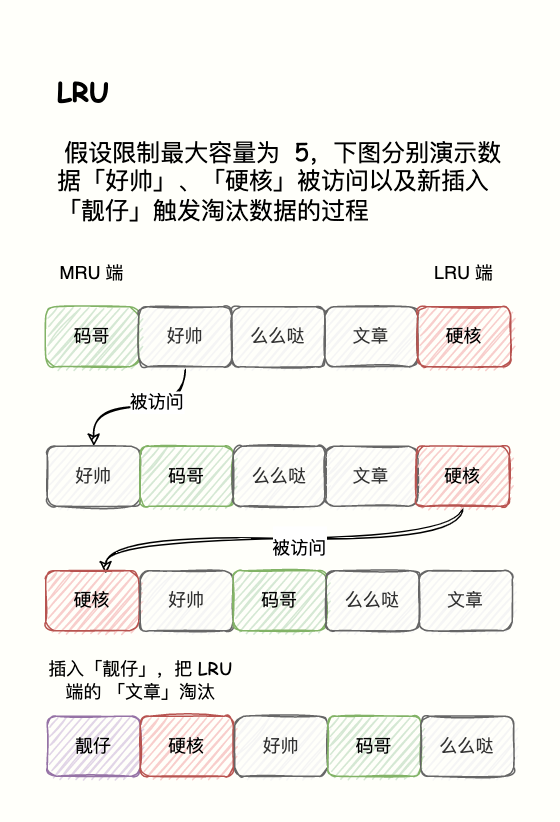

We organize all the data into a linked list:

- MRU : Represents the header of the linked list, representing the most recently accessed data;

- LRU : Represents the tail of the linked list, representing the least recently used data.

Notice that LRU updates and insertions of new data happen at the head of the linked list, while deletions happen at the tail.

Accessed data is moved to the MRU end, and the data that was ahead of it shifts back by one position.
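The movement described above can be traced with a small sketch. It uses `java.util.LinkedList` purely for illustration, and the `LruListDemo` class is a made-up name for this example rather than anything Redis uses.

```java
import java.util.LinkedList;

class LruListDemo {
    private final LinkedList<String> list = new LinkedList<>(); // head = MRU, tail = LRU
    private final int capacity;

    LruListDemo(int capacity) {
        this.capacity = capacity;
    }

    // Accessing a key moves it to the MRU end; a new key may push out the LRU end.
    // Returns the evicted key, or null if nothing was evicted.
    String access(String key) {
        list.remove(key);   // O(n) search here; a hash map of nodes would avoid it
        list.addFirst(key);
        return list.size() > capacity ? list.removeLast() : null;
    }

    LinkedList<String> order() {
        return list;
    }

    public static void main(String[] args) {
        LruListDemo lru = new LruListDemo(3);
        lru.access("a");
        lru.access("b");
        lru.access("c");                  // order: c, b, a
        lru.access("a");                  // order: a, c, b
        String evicted = lru.access("d"); // order: d, a, c; "b" falls off the tail
        System.out.println(evicted + " " + lru.order()); // b [d, a, c]
    }
}
```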

Is it possible to use a singly linked list?

With a singly linked list, deleting a node requires an O(n) traversal to find its predecessor. With a doubly linked list, the deletion can be completed in O(1).
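A minimal sketch of that difference, assuming a hypothetical `Node` type with both `prev` and `next` pointers: finding the predecessor through `next` alone takes a walk from the head, while unlinking with `prev` available touches only the neighbours.

```java
class UnlinkDemo {
    static final class Node {
        final String key;
        Node prev, next;
        Node(String key) { this.key = key; }
    }

    // Builds a doubly linked list from the given keys and returns its nodes in order.
    static Node[] build(String... keys) {
        Node[] nodes = new Node[keys.length];
        for (int i = 0; i < keys.length; i++) {
            nodes[i] = new Node(keys[i]);
            if (i > 0) {
                nodes[i - 1].next = nodes[i];
                nodes[i].prev = nodes[i - 1];
            }
        }
        return nodes;
    }

    // Singly linked view (only 'next' is used): counts the hops from the head
    // needed to reach the predecessor of the target, which is O(n).
    static int hopsToPredecessor(Node head, Node target) {
        int hops = 0;
        Node cur = head;
        while (cur != null && cur.next != target) {
            cur = cur.next;
            hops++;
        }
        return hops;
    }

    // Doubly linked view: the node already knows its predecessor, so this is O(1).
    static void unlink(Node node) {
        if (node.prev != null) node.prev.next = node.next;
        if (node.next != null) node.next.prev = node.prev;
        node.prev = null;
        node.next = null;
    }

    public static void main(String[] args) {
        Node[] n = build("a", "b", "c", "d");
        System.out.println(hopsToPredecessor(n[0], n[3])); // 2 (a -> b -> c)
        unlink(n[2]);                                      // no traversal needed
        System.out.println(n[1].next.key);                 // d
    }
}
```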

Does Redis use this LRU algorithm to manage all cached data?

No. A strict LRU algorithm needs a linked list that manages all the data, which costs a lot of extra memory.

In addition, when a large number of keys are accessed, list nodes have to be moved frequently, which reduces Redis performance.

Therefore, Redis simplifies the algorithm. The Redis LRU algorithm is not a true LRU: Redis samples a small number of keys and evicts the one among them that has gone unaccessed for the longest time.

This means Redis cannot guarantee that the key it evicts is the least recently accessed key in the whole database.

An important feature of the Redis LRU algorithm is that the sample size can be changed to tune its accuracy, bringing it close to a true LRU while avoiding the memory overhead, because only a small number of keys are sampled each time instead of all the data.
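The sampling idea can be sketched as follows. This is a simplified model rather than Redis's actual implementation: the `SampledLru` class, its logical clock, and the way keys are sampled (shuffling a copy of the key set) are all assumptions chosen to keep the example short.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Random;

class SampledLru {
    private final Map<String, Long> lastAccess = new HashMap<>(); // key -> logical access time
    private final int samples;   // plays the role of maxmemory-samples
    private final Random random;
    private long clock = 0;

    SampledLru(int samples, long seed) {
        this.samples = samples;
        this.random = new Random(seed);
    }

    // Records an access to the key with a fresh logical timestamp.
    void touch(String key) {
        lastAccess.put(key, ++clock);
    }

    // Picks up to 'samples' distinct random keys and evicts the one accessed longest ago.
    // Copying and shuffling all keys is O(n); it is done here only for simplicity.
    String evictOne() {
        if (lastAccess.isEmpty()) return null;
        List<String> keys = new ArrayList<>(lastAccess.keySet());
        Collections.shuffle(keys, random);
        String victim = null;
        for (String candidate : keys.subList(0, Math.min(samples, keys.size()))) {
            if (victim == null || lastAccess.get(candidate) < lastAccess.get(victim)) {
                victim = candidate;
            }
        }
        lastAccess.remove(victim);
        return victim;
    }

    public static void main(String[] args) {
        SampledLru cache = new SampledLru(2, 42L);
        for (int i = 1; i <= 5; i++) cache.touch("k" + i);
        // With only 2 samples the victim is the older of two random keys,
        // which is not necessarily the globally oldest key "k1".
        System.out.println(cache.evictOne());
    }
}
```

When the sample size covers every key, the sketch behaves like an exact LRU; with smaller sample sizes it trades accuracy for less work per eviction.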

The configuration is as follows:

    maxmemory-samples 50

How it works

Recall that the redisObject structure has an lru field, which records the timestamp of the most recent access to each piece of data.

    typedef struct redisObject {
        unsigned type:4;
        unsigned encoding:4;
        /* LRU time (relative to global lru_clock) or
         * LFU data (least significant 8 bits frequency
         * and most significant 16 bits access time). */
        unsigned lru:LRU_BITS;
        int refcount;
        void *ptr;
    } robj;

When Redis evicts data for the first time, it randomly selects N keys into a candidate set and evicts the one with the smallest lru value.

When data needs to be evicted again, keys are selected into the candidate set created the first time, subject to one criterion: a key may enter the set only if its lru value is smaller than the smallest lru value already in the set.

Once the number of new keys entering the candidate set reaches the value of maxmemory-samples, the key with the smallest lru value in the candidate set is evicted.

In this way, Redis does not have to maintain a linked list over all the data, and no list nodes need to be moved every time data is accessed, which greatly improves performance.
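The candidate-set rule described above might look roughly like this in code. It is a sketch of the description in this article, not of Redis's real eviction pool: the `CandidatePool` and `Entry` names are hypothetical, the caller supplies the sampled entries, and one key is evicted per call regardless of how many entries were admitted. It also ignores the fact that pooled keys may be accessed again later.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

class CandidatePool {
    static final class Entry {
        final String key;
        final long lru; // last-access timestamp; smaller means idle for longer
        Entry(String key, long lru) {
            this.key = key;
            this.lru = lru;
        }
    }

    private final List<Entry> pool = new ArrayList<>();

    private long minLru() {
        return pool.stream().mapToLong(e -> e.lru).min().orElse(Long.MAX_VALUE);
    }

    // Offers a batch of sampled entries, then evicts the pooled entry with the smallest lru.
    // Returns null when the pool is still empty after the offer.
    Entry evict(List<Entry> sampled) {
        boolean firstRound = pool.isEmpty();
        for (Entry e : sampled) {
            // First round: every sampled entry is admitted.
            // Later rounds: admit only entries older than everything already pooled.
            if (firstRound || e.lru < minLru()) {
                pool.add(e);
            }
        }
        if (pool.isEmpty()) return null;
        Entry victim = pool.stream().min(Comparator.comparingLong((Entry e) -> e.lru)).get();
        pool.remove(victim);
        return victim;
    }

    public static void main(String[] args) {
        CandidatePool pool = new CandidatePool();
        // Round 1: a, b, c are all admitted; b has the smallest lru.
        System.out.println(pool.evict(List.of(
                new Entry("a", 10), new Entry("b", 5), new Entry("c", 8))).key); // b
        // Round 2: d (9) is not older than the pool minimum (8), e (3) is.
        System.out.println(pool.evict(List.of(
                new Entry("d", 9), new Entry("e", 3))).key);                     // e
    }
}
```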

Implementing an LRU cache in Java

LinkedHashMap implementation

This can be done entirely with Java's LinkedHashMap, through either composition or inheritance. Brother Code uses composition here.

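    import java.util.LinkedHashMap;
    import java.util.Map;

    public class LRUCache<K, V> {
        private final Map<K, V> map;
        private final int cacheSize;

        public LRUCache(int initialCapacity) {
            this.cacheSize = initialCapacity;
            // accessOrder = true: iteration runs from least to most recently accessed
            map = new LinkedHashMap<K, V>(initialCapacity, 0.75f, true) {
                @Override
                protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
                    // Evict the eldest entry once the cache grows past its capacity
                    return size() > cacheSize;
                }
            };
        }

        public V get(K key) {
            return map.get(key);
        }

        public void put(K key, V value) {
            map.put(key, value);
        }
    }

The key is the three-argument constructor of LinkedHashMap: its accessOrder parameter must be set to true, which makes LinkedHashMap maintain access order internally.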

In addition, you need to override removeEldestEntry(). When this method returns true, the entry that has gone unaccessed for the longest time is removed, which is how data gets evicted.
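To see both pieces working together, here is a small standalone demo. The `AccessOrderDemo` class and its `newLruMap` helper are names invented for this example; the `LinkedHashMap` constructor and `removeEldestEntry` hook are the standard JDK ones.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class AccessOrderDemo {
    // Builds an access-ordered map that drops its eldest entry beyond maxEntries.
    static Map<String, Integer> newLruMap(int maxEntries) {
        return new LinkedHashMap<String, Integer>(16, 0.75f, true) {
            @Override
            protected boolean removeEldestEntry(Map.Entry<String, Integer> eldest) {
                return size() > maxEntries;
            }
        };
    }

    public static void main(String[] args) {
        Map<String, Integer> cache = newLruMap(2);
        cache.put("a", 1);
        cache.put("b", 2);
        cache.get("a");    // "a" becomes the most recently used entry
        cache.put("c", 3); // exceeds 2 entries, so the eldest entry "b" is removed
        System.out.println(cache.keySet()); // [a, c]
    }
}
```

If accessOrder were false, the map would keep insertion order and the get("a") call would not protect "a" from eviction.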

Implementing it yourself

The code is taken from LeetCode 146, LRU Cache, and is commented throughout.

    import java.util.Map;
    import java.util.concurrent.ConcurrentHashMap;

    /**
     * The head of the list holds the least recently used element;
     * the tail holds the element that was just added or accessed.
     */
    class LRUCache {
        class Node {
            int key, value;
            Node pre, next;

            Node(int key, int value) {
                this.key = key;
                this.value = value;
                pre = this;
                next = this;
            }
        }

        private final int capacity; // capacity of the LRU cache
        // Sentinel node: dummy.next is the first node of the list, dummy.pre is the last
        private Node dummy;
        // Stores key-Node pairs; each Node is a doubly linked list node.
        // Note: the list updates are unsynchronized, so the class is not thread-safe.
        private Map<Integer, Node> cache;

        public LRUCache(int capacity) {
            this.capacity = capacity;
            dummy = new Node(0, 0);
            cache = new ConcurrentHashMap<>();
        }

        public int get(int key) {
            Node node = cache.get(key);
            if (node == null) return -1;
            // Move the accessed node to the tail (most recently used)
            remove(node);
            add(node);
            return node.value;
        }

        public void put(int key, int value) {
            Node node = cache.get(key);
            if (node == null) {
                if (cache.size() >= capacity) {
                    // Evict the least recently used node at the head
                    cache.remove(dummy.next.key);
                    remove(dummy.next);
                }
                node = new Node(key, value);
                cache.put(key, node);
                add(node);
            } else {
                // Update the value in place and move the node to the tail
                node.value = value;
                remove(node);
                add(node);
            }
        }

        /**
         * Appends a node to the tail of the linked list.
         *
         * @param node the new node
         */
        private void add(Node node) {
            dummy.pre.next = node;
            node.pre = dummy.pre;
            node.next = dummy;
            dummy.pre = node;
        }

        /**
         * Removes the node from the doubly linked list.
         *
         * @param node the node to remove
         */
        private void remove(Node node) {
            node.pre.next = node.next;
            node.next.pre = node.pre;
        }
    }

Don't be stingy with praise; give others positive feedback when they do well. Care less about votes cast with "likes" and more about votes cast with "deals".

Whether someone is really good is judged not by how many people praise them online, but by how many people are willing to do business with them, or to tip, pay, and place orders.

Praise is cheap; the people willing to trade with you are the ones who truly trust and support you.

Brother Code has written 23+ Redis articles so far, given away many books, and received plenty of praise and a small number of tips. To the readers who have tipped me: thank you.

I'm "Brother Code"; you can call me handsome. If you enjoyed the article, please give it a like. We'll cover the LFU algorithm in the next article.


