1. Introduction

Following the talk "Ant Group Service Mesh Implementation and Challenges" in 2019, Ant Group has continued to explore and evolve its Service Mesh for nearly three years. What has changed in those three years, and how do we think about the future? On the fourth anniversary of SOFAStack going open source, this talk, "Review and Prospect of Ant Group Service Mesh Progress", is an invitation to discuss and exchange ideas.

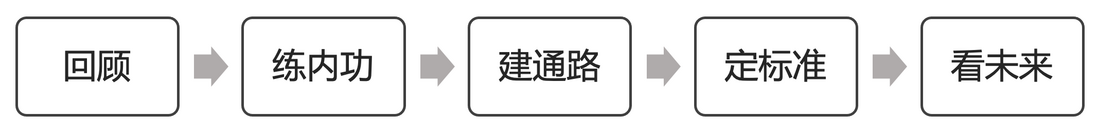

This exchange will unfold in the following order:

2. The development history of Ant Group Service Mesh

- In March 2018, Ant Group's Service Mesh effort started and the MOSN data plane was born. From the beginning we insisted on keeping the core open source and building internal capabilities as extensions.

- For the 6.18 promotion in 2019, we rolled out MOSN to three major co-deployed applications and successfully supported the promotion.

- On Double 11 in 2019, all of Ant's big-promotion applications made it through the promotion smoothly.

- In 2020, MOSN developed steadily inside Ant Group, and external commercialization began to take shape. 90% of the standard applications across Ant Group completed Mesh access. In the commercial version, the SOFAStack "dual-mode microservice" architecture was also successfully implemented at many large financial institutions such as Jiangxi Rural Credit and China CITIC Bank.

- In 2021, as meshing matured and multi-language scenarios grew richer, the scalability problems caused by having the mesh directly support middleware protocols became prominent, and the application runtime concept championed by Dapr gradually gained ground. That year we open sourced Layotto, hoping to solve the coupling between applications and back-end middleware by unifying the application runtime API, further decoupling applications from infrastructure, and removing vendor lock-in for applications running across multiple clouds.

- In 2022, as the infrastructure capabilities of the mesh gradually improved, we began to consider whether the mesh could bring more value to the business. In the Mesh 1.0 era, we sank middleware-related capabilities into the mesh as far as possible to improve the iteration efficiency of the infrastructure. In the Mesh 2.0 era, we expect a mechanism that lets relatively general business-side capabilities sink on demand as well, with a degree of isolation so that what is sunk does not affect the main link of the Mesh data proxy. This part is introduced in the Mesh 2.0 section.

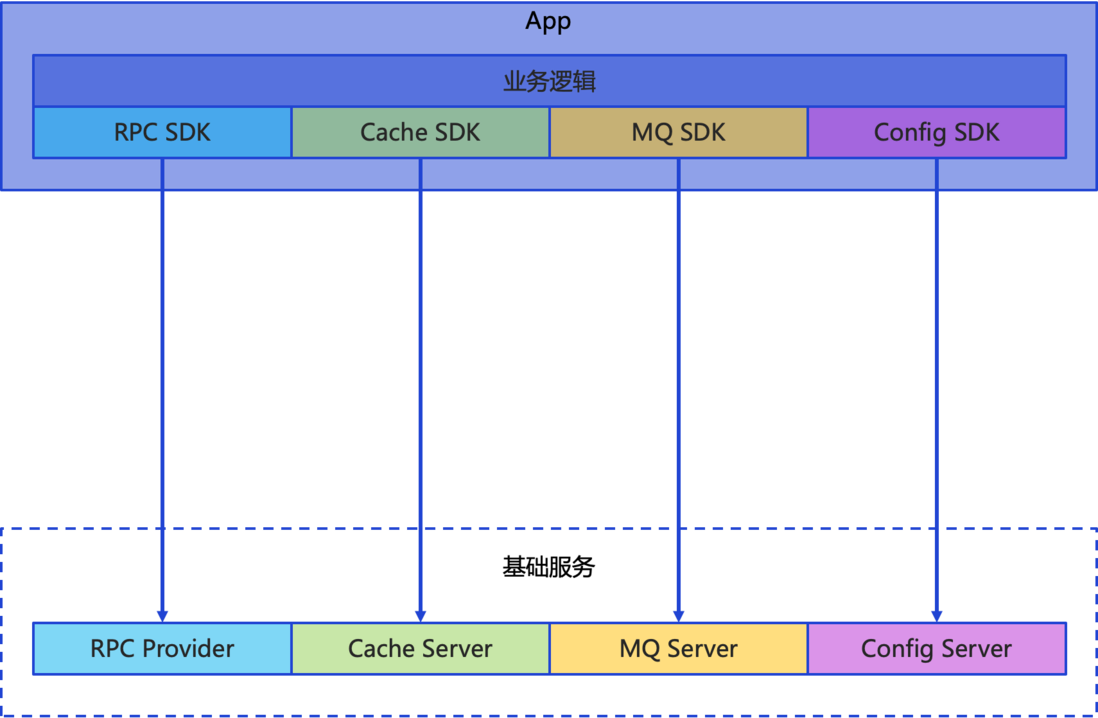

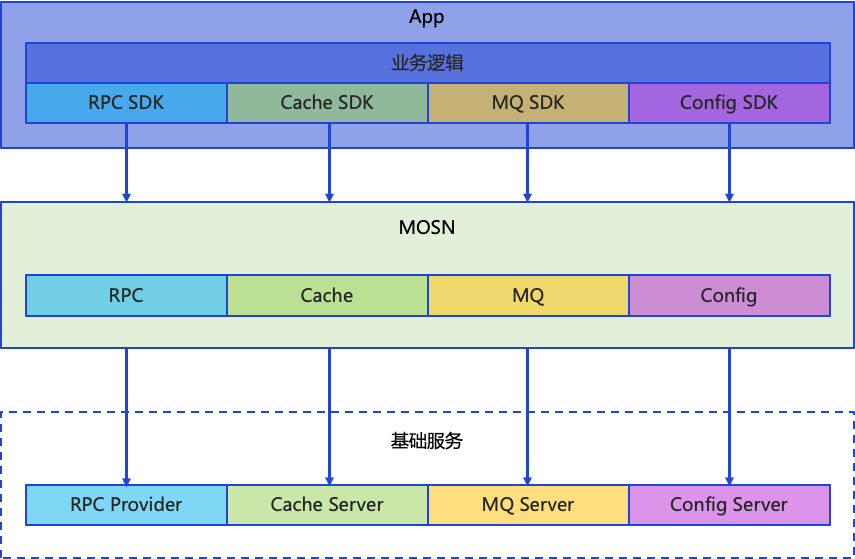

The stages in the evolution of the Service Mesh architecture can be summarized as follows:

- In the SOA era, middleware clients are integrated directly into the business process.

- Meshing stage: middleware capabilities sink, and applications and infrastructure are partially decoupled.

- Application runtime stage: applications are decoupled from the type of specific infrastructure and are programmed only against standard APIs.

3. East-West Traffic Scale Challenges

The mesh data plane MOSN carries the core east-west communication link between applications. It currently covers thousands of applications and hundreds of thousands of containers within Ant Group. This massive scale brings problems such as long-connection bloat, huge volumes of service discovery data, and difficult service governance. Next, let's look at some classic problems we encountered and solved along the way.

3.1 The problem of long connection expansion

Behind massive-scale applications lie complex calling relationships, and some basic services are depended on by most applications. Because every caller is fully connected to the service provider, a single Pod of a basic service has to carry nearly 100,000 long connections on a normal day. A Pod's QPS generally has an upper limit. Take a Pod with 1000 QPS as an example: with 100,000 long connections, the QPS on each connection is very low. Assuming requests are spread evenly across all connections, each long connection sees on average only one request every 100 seconds.

To keep long connections available, Bolt, the communication protocol of SOFA RPC, defines a heartbeat packet. By default a heartbeat is sent every 15s, so the distribution of requests on a long connection is roughly as shown in the following figure:

In the scenario above, heartbeat packets on a long connection far outnumber business requests. In daily operation, the memory MOSN spends on maintaining handles for these long connections and the CPU it spends on sending heartbeat packets cannot be ignored in a massive-scale cluster.
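The arithmetic behind that comparison can be checked with a short sketch. It uses only the figures from the text (100,000 connections, 1000 QPS, a 15s heartbeat) and the same assumption that requests are spread evenly; the function names are made up for illustration.

```go
package main

import "fmt"

// secondsPerRequest returns the average gap between business requests on one
// connection, assuming requests are spread evenly over all connections.
func secondsPerRequest(connections int, podQPS float64) float64 {
	return float64(connections) / podQPS
}

// heartbeatsPerRequest returns how many heartbeats one connection sends for
// each business request it carries.
func heartbeatsPerRequest(requestGapSec, heartbeatPeriodSec float64) float64 {
	return requestGapSec / heartbeatPeriodSec
}

func main() {
	gap := secondsPerRequest(100000, 1000) // 100,000 connections, 1000 QPS
	fmt.Printf("one business request every %.0fs per connection\n", gap)
	fmt.Printf("about %.1f heartbeats per business request\n", heartbeatsPerRequest(gap, 15))
}
```

Under these numbers a connection carries between six and seven heartbeats for every business request, which is why heartbeat cost dominates.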

Based on the above problems, we found two solutions:

- Reduce the heartbeat frequency on the premise of ensuring that the connection is available

- Reduce the number of connections between applications under the premise of ensuring load balancing

3.1.1 Heartbeat Backoff

The main purpose of the heartbeat is to detect an unavailable long connection as early as possible, and we usually consider a long connection unavailable after three heartbeat timeouts. Over the life cycle of a long connection, the proportion of time it is unavailable is very low. If we double the detection period, we cut the CPU consumption of heartbeats by 50%. To keep detection timely, when a heartbeat abnormality occurs (such as a heartbeat timeout), the heartbeat period is shortened again so that an unavailable connection is still identified quickly. Based on these ideas, we designed the long-connection heartbeat backoff strategy in MOSN:

- When there is no service request on the long connection and the heartbeat responds normally, gradually lengthen the heartbeat period from 15s to 90s

- When the request fails or the heartbeat times out on the long connection, reset the heartbeat period back to 15s

- When there is a normal service request on the long connection, downgrade the heartbeat request in this heartbeat cycle

With this heartbeat backoff, MOSN's CPU consumption for routine heartbeats dropped to 25% of the original.
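The three rules can be sketched as a small state machine. This is a minimal illustration, not MOSN's code: the 15s and 90s bounds come from the text, while the 15s step, the type names, and reading "downgrade" as "skip the heartbeat for that cycle" are assumptions.

```go
package main

import (
	"fmt"
	"time"
)

const (
	minPeriod = 15 * time.Second // starting period, from the text
	maxPeriod = 90 * time.Second // upper bound, from the text
	step      = 15 * time.Second // assumption: the text only says "gradually"
)

// Event summarizes what happened on a connection during one heartbeat cycle.
type Event int

const (
	HeartbeatOK Event = iota // no business traffic, heartbeat answered in time
	Failure                  // a request failed or the heartbeat timed out
	BusinessOK               // a normal business request was served
)

// Backoff holds the current heartbeat period of one long connection.
type Backoff struct {
	Period time.Duration
}

// NewBackoff starts at the shortest period.
func NewBackoff() *Backoff { return &Backoff{Period: minPeriod} }

// Observe applies one cycle's event. It returns true when the heartbeat of
// that cycle can be skipped because business traffic already proved liveness.
func (b *Backoff) Observe(e Event) (skipHeartbeat bool) {
	switch e {
	case Failure:
		b.Period = minPeriod // probe quickly again
	case BusinessOK:
		return true // period unchanged, heartbeat skipped
	case HeartbeatOK:
		b.Period += step
		if b.Period > maxPeriod {
			b.Period = maxPeriod
		}
	}
	return false
}

func main() {
	b := NewBackoff()
	for _, e := range []Event{HeartbeatOK, HeartbeatOK, BusinessOK, Failure} {
		skip := b.Observe(e)
		fmt.Println(b.Period, skip)
	}
}
```

Resetting straight to the shortest period on any failure keeps detection of a broken connection as fast as before the backoff, which is the property the strategy is designed to preserve.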

3.1.2 Service List Fragmentation

The heartbeat backoff optimization shows that with a massive number of long connections, the request frequency on any single connection is very low, so apart from being friendly to load balancing, maintaining so many long connections brings little benefit. That gives us another optimization direction: reducing the number of long connections established between the client and the server.

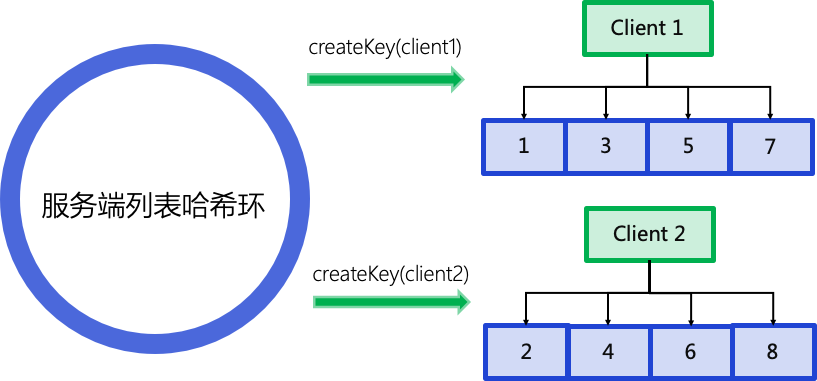

MOSN uses a consistent hashing strategy to group server machines. In the client's memory, the full server machine list is first added to a consistent hash ring. Then the number N of machines expected in a shard is computed from the configuration, and N machines are taken from the ring according to the client machine's IP to form that client's shard list. Every client computes the same hash ring, and different client IPs select different shard lists, so different client machines end up holding different shards of the server cluster.
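A minimal sketch of this selection, assuming an FNV hash, 32 virtual nodes per server, and a clockwise walk that collects N distinct servers. None of these details are stated in the text, and the function names are illustrative rather than MOSN's.

```go
package main

import (
	"fmt"
	"hash/fnv"
	"sort"
)

const virtualNodes = 32 // assumption: virtual nodes per server

func hashKey(s string) uint32 {
	h := fnv.New32a()
	h.Write([]byte(s))
	return h.Sum32()
}

type ringEntry struct {
	hash   uint32
	server string
}

// buildRing places every server on the ring. Sorting makes the ring identical
// on every client regardless of the order in which the list arrives.
func buildRing(servers []string) []ringEntry {
	ring := make([]ringEntry, 0, len(servers)*virtualNodes)
	for _, s := range servers {
		for v := 0; v < virtualNodes; v++ {
			ring = append(ring, ringEntry{hash: hashKey(fmt.Sprintf("%s#%d", s, v)), server: s})
		}
	}
	sort.Slice(ring, func(i, j int) bool {
		if ring[i].hash != ring[j].hash {
			return ring[i].hash < ring[j].hash
		}
		return ring[i].server < ring[j].server
	})
	return ring
}

// shardFor starts at the client's position on the ring and walks clockwise
// until it has collected n distinct servers (or every server, if fewer exist).
func shardFor(ring []ringEntry, clientIP string, n int) []string {
	if len(ring) == 0 || n <= 0 {
		return nil
	}
	pos := hashKey(clientIP)
	start := sort.Search(len(ring), func(i int) bool { return ring[i].hash >= pos })
	seen := make(map[string]bool)
	var shard []string
	for i := 0; i < len(ring) && len(shard) < n; i++ {
		e := ring[(start+i)%len(ring)]
		if !seen[e.server] {
			seen[e.server] = true
			shard = append(shard, e.server)
		}
	}
	return shard
}

func main() {
	servers := []string{"10.0.0.1", "10.0.0.2", "10.0.0.3", "10.0.0.4", "10.0.0.5", "10.0.0.6"}
	ring := buildRing(servers)
	for _, ip := range []string{"192.168.1.10", "192.168.1.11"} {
		fmt.Println(ip, shardFor(ring, ip, 3))
	}
}
```

Because the shard depends only on the ring and the client IP, a client recomputes the same shard after a restart, and adding or removing one server shifts only the shards near its positions on the ring.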

Sharding the service list drastically reduces the number of long connections a client establishes to the server. In a scenario with 60,000 long connections and the server list sharded to 50% for load balancing, CPU usage on a single machine drops by about 0.4 core and memory by about 500 MB.

3.2 Massive Service Discovery Problems

MOSN's service discovery does not use Pilot; internally it connects directly to SOFARegistry (the service registry). One reason for this architecture is that RPC service discovery is at interface level: the number of Pubs and Subs per node is huge, and with a massive number of applications, the node-change pushes generated by frequent operations pose great challenges to Pilot's performance and timeliness. The community has found many performance problems when using Pilot to distribute CDS at even a slightly larger scale, and has submitted PRs to address them. At Ant Group's scale of 2 million Pubs and 20 million Subs in a single data center, Pilot cannot carry the load at all. SOFARegistry separates its storage and connection layers: storage is sharded in memory, and the connection layer can be scaled out horizontally without limit, so it can push changes within seconds even under massive internal node changes.

Although SOFARegistry's push capability is not a problem, the data pushed after massive node changes causes a large number of Cluster rebuilds in MOSN. Going from a pushed list to a successfully constructed Cluster allocates a large amount of temporary memory and consumes CPU. These spikes in memory allocation and CPU usage can directly affect the stability of the request proxy link. To solve this problem, we considered two optimization directions:

- Convert full push to incremental push between SOFARegistry and MOSN

- Service discovery model switched from interface level to application level

The effect of the first point is that each list push shrinks to 1/N of the original size, where N depends on the number of groups involved when the application changes. The second point brings a more obvious change. Suppose an application publishes 20 interfaces and has 100 Pods: interface-level service discovery then produces 20*100=2000 entries, and the total keeps growing as a multiple of the number of application nodes as interfaces are added. Application-level service discovery keeps the total number of nodes at the level of the number of application Pods.

3.2.1 Evolution of Application-Level Service Discovery

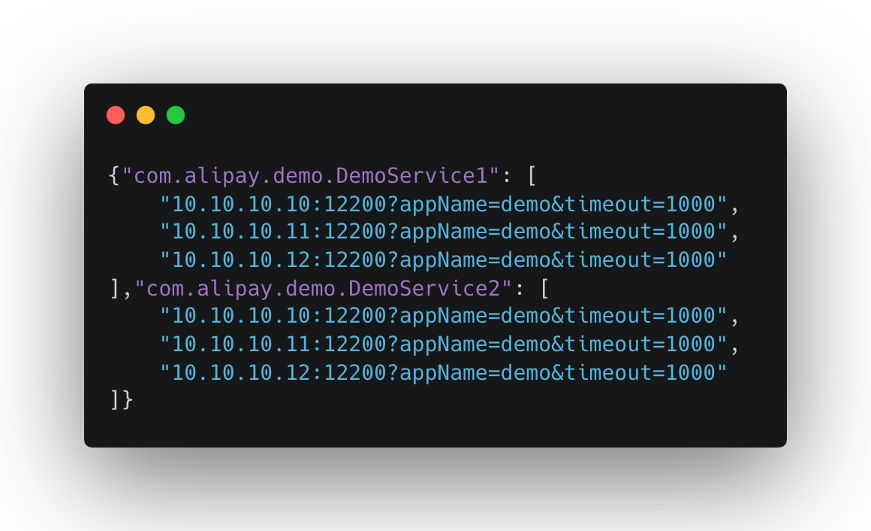

Example of interface-level service discovery (repeated in multiple services on the same node):

Application-level service discovery example (structured representation of the relationship between applications, services, address lists):

Through structural changes to application and interface-related information, the number of nodes for service discovery can be reduced by one to two orders of magnitude.
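To make the difference concrete, here is a sketch of the two data shapes with the 20-interface, 100-Pod example used earlier. The struct and field names are hypothetical and do not reflect SOFARegistry's actual data model.

```go
package main

import "fmt"

// InterfaceRecord is one interface-level entry: every Pod address is repeated
// under every interface it serves.
type InterfaceRecord struct {
	Interface string
	Address   string
}

// AppRecord is the application-level view: the interface list is stored once
// per application and each Pod address appears once.
type AppRecord struct {
	App        string
	Interfaces []string
	Addresses  []string
}

// interfaceLevel expands the cross product of interfaces and addresses.
func interfaceLevel(interfaces, addresses []string) []InterfaceRecord {
	records := make([]InterfaceRecord, 0, len(interfaces)*len(addresses))
	for _, itf := range interfaces {
		for _, addr := range addresses {
			records = append(records, InterfaceRecord{Interface: itf, Address: addr})
		}
	}
	return records
}

// appLevel keeps the structure instead of flattening it.
func appLevel(app string, interfaces, addresses []string) AppRecord {
	return AppRecord{App: app, Interfaces: interfaces, Addresses: addresses}
}

// names generates n placeholder names with a common prefix.
func names(prefix string, n int) []string {
	out := make([]string, n)
	for i := range out {
		out[i] = fmt.Sprintf("%s%d", prefix, i)
	}
	return out
}

func main() {
	interfaces := names("com.example.Service", 20)
	pods := names("10.0.0.", 100)
	fmt.Println("interface-level entries:", len(interfaceLevel(interfaces, pods)))
	fmt.Println("application-level addresses:", len(appLevel("demo-app", interfaces, pods).Addresses))
}
```

Adding a twenty-first interface adds 100 entries in the first model but only one string in the second, which is where the order-of-magnitude reduction comes from.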

Moving from interface-level to application-level service discovery is a huge change for an RPC framework. Dubbo 3.0 in the community has implemented application-level service discovery, but compatibility for this kind of upgrade across major versions requires a great deal of care; evolving at the framework layer is like changing the wheels on a moving train. Because Ant Group's internal Service Mesh already covers 90% of standard applications, we could be more radical in evolving service discovery. With MOSN + SOFARegistry 6.0, we implemented compatibility between interface-level and application-level service discovery together with a smooth switchover scheme, and completed the switch from interface-level to application-level service discovery through iterative upgrades of the MOSN version.

Through these improvements, the service discovery data volume of the production cluster was reduced by 90% and the volume of Sub data by 80%. The whole process was completely transparent to applications, which is a concrete benefit of the mesh decoupling business from infrastructure.

3.2.2 MOSN Cluster Structure Optimization

After using application-level service discovery to solve the problem of excessive change data, we still had to deal with the CPU consumption and temporary memory allocation spikes in the list change scenario. Analysis of memory allocation showed that after the Registry Client receives the list pushed by the server, it has to deserialize it, construct the Cluster model MOSN requires, and update the Cluster content, and that the dominant part is Subset construction during Cluster construction. By using object pools and minimizing byte[]-to-String copies we reduced memory allocation, and by reimplementing Subset with a Bitmap we made the whole Cluster construction more efficient and lighter on memory.

After these optimizations, during operations on very large cluster applications, temporary memory allocation for subscriber list changes fell to 30% of the original, and CPU usage during list changes fell to 24% of the original.
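The text does not describe how the Bitmap-based Subset is laid out, so the following is only one plausible sketch: each label value keeps a bitmap over host indices instead of its own slice of hosts, and a multi-label subset is the bitwise AND of two bitmaps. All type and function names are assumptions.

```go
package main

import (
	"fmt"
	"math/bits"
)

// Bitmap marks hosts by their index in the cluster's host list.
type Bitmap []uint64

// NewBitmap allocates a bitmap large enough for n hosts.
func NewBitmap(n int) Bitmap { return make(Bitmap, (n+63)/64) }

// Set marks host i as a member.
func (b Bitmap) Set(i int) { b[i/64] |= uint64(1) << uint(i%64) }

// Has reports whether host i is a member.
func (b Bitmap) Has(i int) bool { return b[i/64]&(uint64(1)<<uint(i%64)) != 0 }

// Count returns the number of member hosts.
func (b Bitmap) Count() int {
	c := 0
	for _, w := range b {
		c += bits.OnesCount64(w)
	}
	return c
}

// And returns the intersection of two bitmaps of equal length.
func (b Bitmap) And(o Bitmap) Bitmap {
	out := make(Bitmap, len(b))
	for i := range b {
		out[i] = b[i] & o[i]
	}
	return out
}

// Host is a hypothetical upstream host with routing labels.
type Host struct {
	Addr   string
	Labels map[string]string
}

// buildSubsets creates one bitmap per "key=value" label. Hosts are referenced
// by index, so no per-subset host slices are copied.
func buildSubsets(hosts []Host) map[string]Bitmap {
	subsets := make(map[string]Bitmap)
	for i, h := range hosts {
		for k, v := range h.Labels {
			key := k + "=" + v
			bm, ok := subsets[key]
			if !ok {
				bm = NewBitmap(len(hosts))
				subsets[key] = bm
			}
			bm.Set(i)
		}
	}
	return subsets
}

func main() {
	hosts := []Host{
		{Addr: "10.0.0.1", Labels: map[string]string{"zone": "a", "version": "v1"}},
		{Addr: "10.0.0.2", Labels: map[string]string{"zone": "b", "version": "v1"}},
		{Addr: "10.0.0.3", Labels: map[string]string{"zone": "a", "version": "v2"}},
	}
	s := buildSubsets(hosts)
	both := s["zone=a"].And(s["version=v1"])
	fmt.Println(s["zone=a"].Count(), both.Count(), both.Has(0))
}
```

In this layout a subset of N hosts costs roughly N/8 bytes rather than N pointers, and combining label conditions needs no intermediate host lists.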

3.3 Intelligent Evolution of Service Governance

After MOSN took over the request link, we made many attempts at service governance, including fine-grained client-side traffic routing, single-machine stress testing and traffic routing, business link isolation, application-level cross-unit disaster recovery, removal of faulty single machines, and various rate limiting capabilities. Here I will only introduce the intelligent exploration we have done in the rate limiting scenario.

The Sentinel project in the open source community has done very good work on rate limiting. MOSN talked with the Sentinel team early in its rate limiting work, hoping to build extensions on Sentinel's Golang SDK and, standing on the shoulders of giants, try more things.

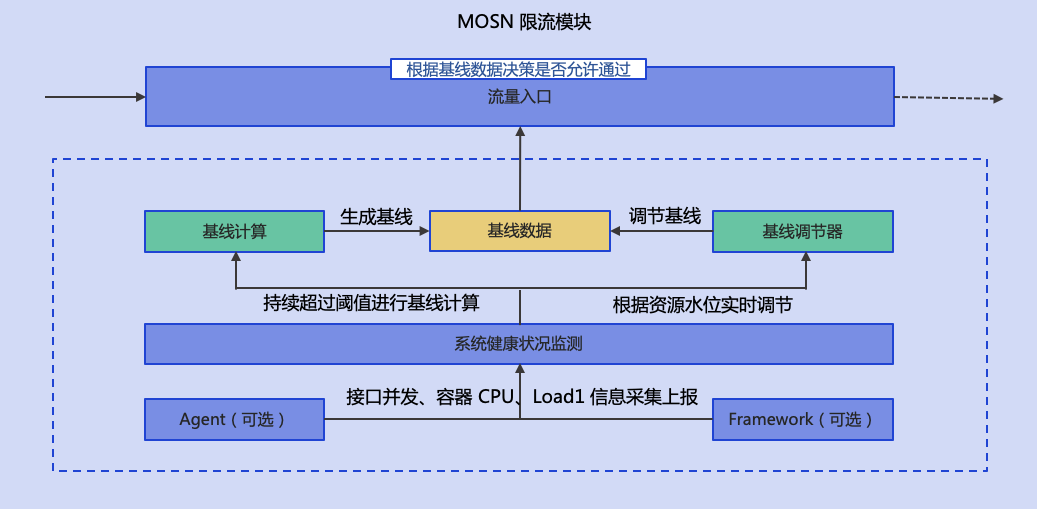

Based on Sentinel's pluggable Slot Chain mechanism, we have implemented many additional rate limiting modules internally, such as an adaptive rate limiting Slot, a cluster rate limiting Slot, a circuit-breaking Slot, and a log statistics Slot.

Before MOSN had rate limiting capability, rate limiting components already existed in the Java process. The one businesses used most was single-machine rate limiting, which generally needs an accurate limit value: the TPS that a single machine can carry in a healthy state. This value gradually changes as the business application itself iterates, gains features, and its call links become more complex, so each system has to keep re-estimating the limit value it should configure for its own application while still satisfying the total TPS.

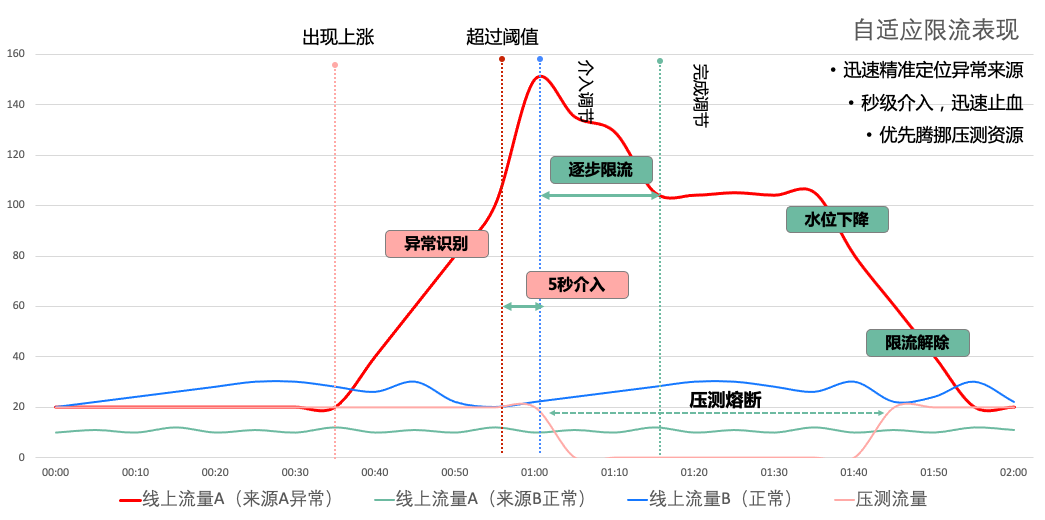

To solve the problem that rate limiting is hard to configure, we tried implementing adaptive rate limiting in MOSN. It collects and reports the current interface concurrency, CPU, and Load1 of the container, and combines them with the requests of each interface in recent sliding windows. When the load exceeds a certain baseline, the rate limiting component can automatically identify which interfaces should be throttled, keeping resource usage from exceeding the healthy water level.

In the actual production environment, adaptive rate limiting can quickly and accurately locate the source of an abnormality and intervene within seconds to stop the bleeding. It can also identify the type of traffic and throttle stress-test traffic first, so that production traffic succeeds as much as possible. Before a big promotion, application owners no longer need to configure a limit value for every interface of their applications, which greatly improves developer happiness.
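The text does not give the decision rule, so the sketch below uses a deliberately simple stand-in: nothing is throttled while CPU and Load1 are under their baselines, and once either is exceeded, any interface whose current concurrency is more than a chosen multiple of its recent sliding-window mean is selected. The thresholds, the multiple, and all names are assumptions for illustration.

```go
package main

import "fmt"

// InterfaceStat is a hypothetical per-interface sample.
type InterfaceStat struct {
	Name    string
	Current float64   // concurrency right now
	Recent  []float64 // concurrency observed in recent sliding windows
}

// Load carries the container-level signals named in the text.
type Load struct {
	CPU   float64 // CPU utilization in the range 0..1
	Load1 float64 // 1-minute load average
}

// Baseline holds assumed thresholds; real values would be tuned per system.
type Baseline struct {
	CPU   float64
	Load1 float64
	Spike float64 // how many times its recent mean an interface may reach
}

func mean(xs []float64) float64 {
	if len(xs) == 0 {
		return 0
	}
	sum := 0.0
	for _, x := range xs {
		sum += x
	}
	return sum / float64(len(xs))
}

// toThrottle returns the interfaces to limit. It returns nil while the
// container is under both baselines.
func toThrottle(load Load, base Baseline, stats []InterfaceStat) []string {
	if load.CPU <= base.CPU && load.Load1 <= base.Load1 {
		return nil
	}
	var out []string
	for _, s := range stats {
		if m := mean(s.Recent); m > 0 && s.Current > base.Spike*m {
			out = append(out, s.Name)
		}
	}
	return out
}

func main() {
	base := Baseline{CPU: 0.8, Load1: 8, Spike: 2}
	stats := []InterfaceStat{
		{Name: "queryUser", Current: 12, Recent: []float64{10, 11, 9}},
		{Name: "pay", Current: 90, Recent: []float64{20, 22, 18}},
	}
	fmt.Println(toThrottle(Load{CPU: 0.5, Load1: 2}, base, stats))
	fmt.Println(toThrottle(Load{CPU: 0.95, Load1: 3}, base, stats))
}
```

Comparing each interface against its own recent history, rather than against a fixed TPS value, is what removes the need to configure a limit per interface by hand.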

4. Opening Up North-South Traffic

As the data plane of Service Mesh, MOSN mainly focuses on east-west traffic. North-south traffic, by contrast, is handled separately by various gateways.

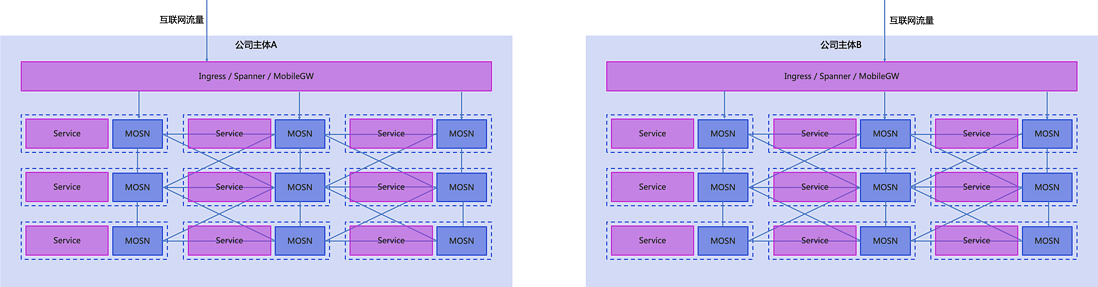

Ant Group has many different company entities serving different business scenarios, and each entity has its own site where applications are deployed to serve the outside world. The most common north-south traffic is the Internet traffic entrance; within Ant Group this role is carried by Spanner. Besides the Internet entrance, multiple entities may also need to exchange information. If entities within the same group had to go over the public network to do so, stability would drop sharply and bandwidth would cost more. To make cross-entity communication efficient, we built a bridge between entities with SOFAGW, so that calls between applications across entities are as simple as RPC calls within one entity, with link encryption, authentication, auditability, and other capabilities to keep calls between entities compliant.

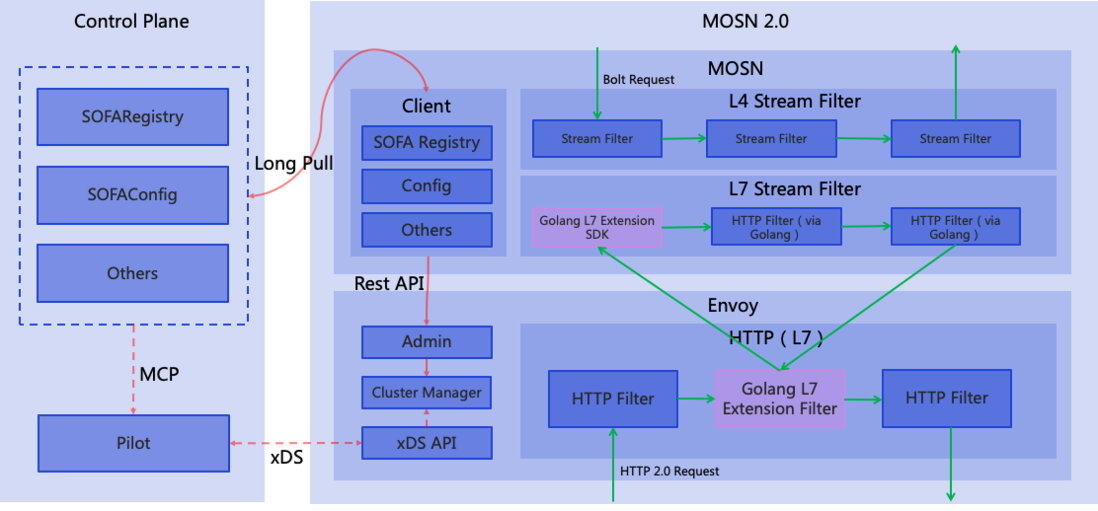

SOFAGW is built on the MOSN 2.0 architecture. It can use Golang for efficient development while also benefiting from Envoy's very high performance in processing HTTP/2 and other protocols.

A brief introduction to the MOSN 2.0 architecture: Envoy provides an extensible Filter mechanism that lets users insert their own logic into the protocol processing link. MOSN implements a CGO-based Filter extension layer on top of it, upgrading Envoy's Filter mechanism so that we can write Filters in Golang and embed them in Envoy, where they are called by the CGO Filter.

SOFAGW builds its own gateway proxy model on top of MOSN 2.0. It obtains configuration, service discovery information, and so on through the interaction between SOFA's Golang client and the control plane, then assembles Envoy's Cluster model and inserts it into the Envoy instance through the Admin API. Through the Golang Filter extension mechanism, SOFAGW implements Ant Group's internal capabilities for LDC service routing, stress-test traffic identification, rate limiting, identity authentication, traffic replication, and call auditing. Since Envoy's HTTP/2 protocol processing performs 2 to 4 times better than the pure Golang gRPC implementation, SOFAGW hands Triple (gRPC over HTTP/2) protocol processing to Envoy, while Bolt (the SOFA RPC private protocol) processing is still left to MOSN.

Through the above architecture, SOFAGW has realized the trusted intercommunication of all entities within Ant Group, and achieved a good balance between high performance and rapid iterative development.

5. Application Runtime Exploration

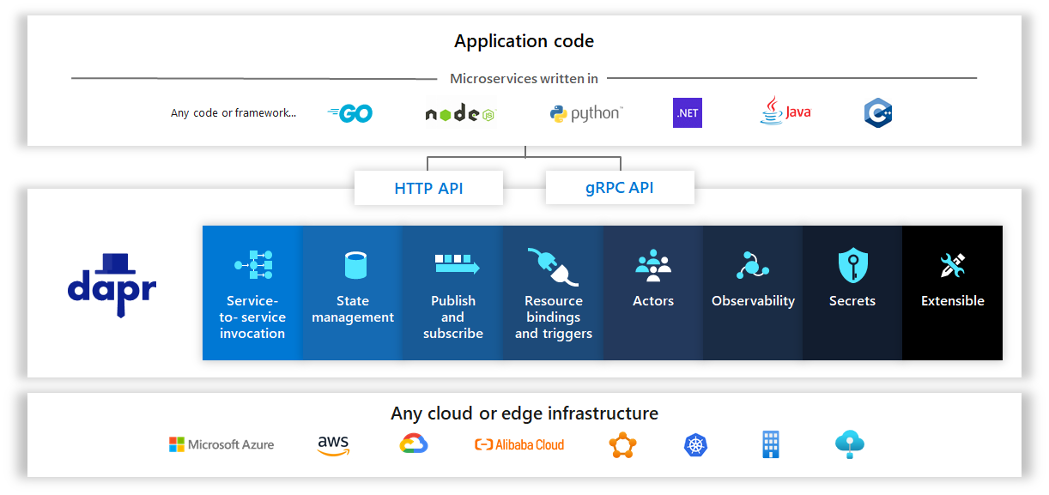

As our exploration of Service Mesh entered deep water and we sank many capabilities into the Mesh data plane, we felt both the convenience and the limitations of sinking each protocol directly. The convenience is that applications can be connected smoothly with no modification at all. The limitation is that each private protocol has to be connected separately, and an application using protocol A cannot run directly on protocol B. In a multi-cloud environment, we hope applications can "Write once, run on any cloud!". To achieve this vision we need to further decouple applications from infrastructure, so that an application does not directly perceive the concrete implementation underneath but is written against distributed semantic APIs. Dapr has pioneered this idea in the community:

(The picture above is from Dapr's official documentation)

Dapr provides a set of atomic APIs for distributed architectures, such as service invocation, state management, publish-subscribe, observability, and security, and implements components that connect each distributed primitive to different clouds.

(The picture above is from Dapr's official documentation)

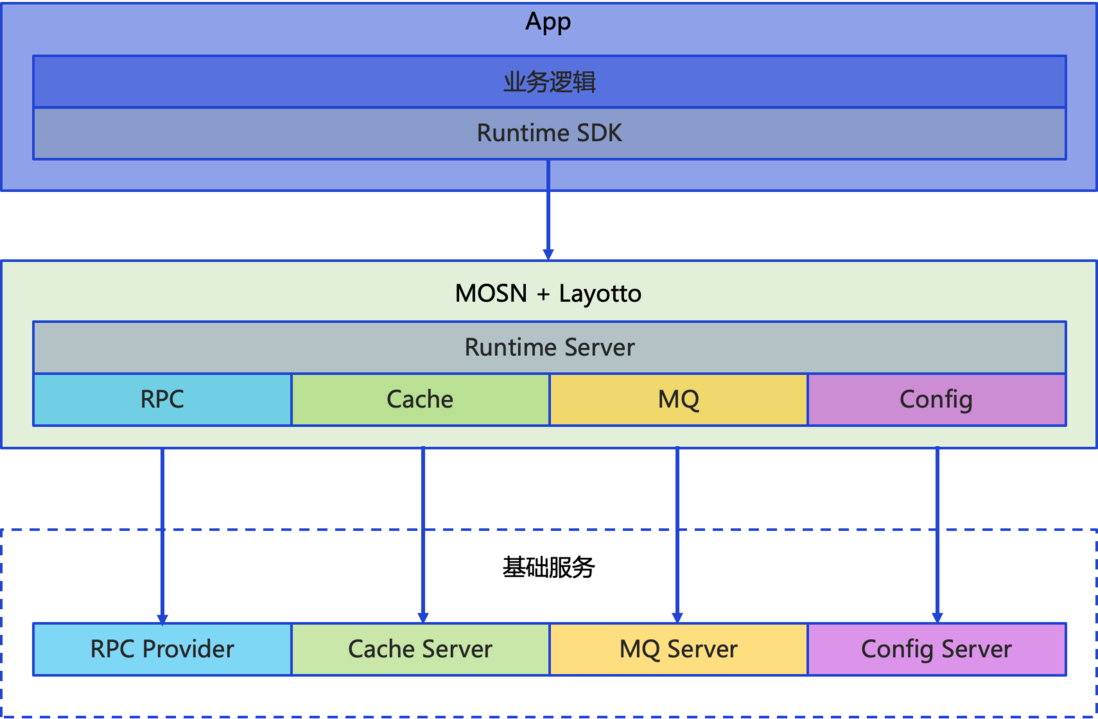

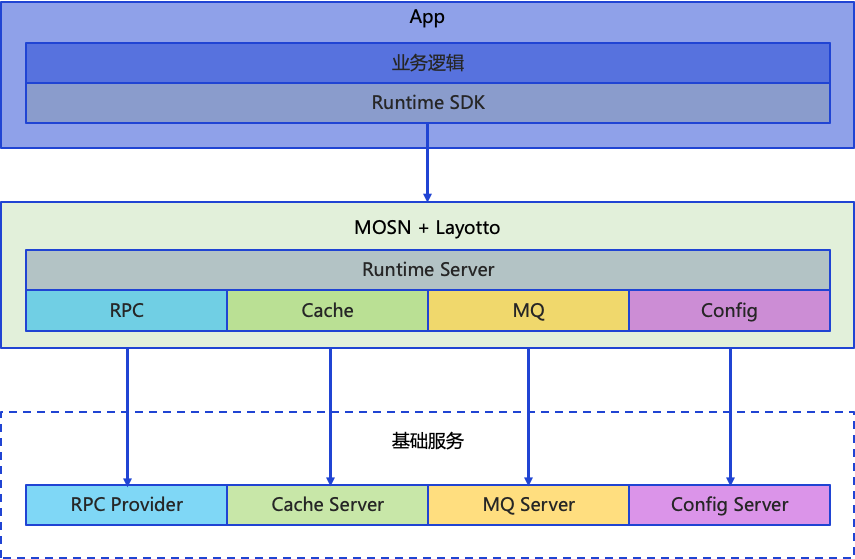

Dapr in effect provides applications with less invasive distributed primitives on top of Service Mesh. In mid-2021, we open sourced Layotto, which is based on MOSN. Layotto is in effect a combination of Application Runtime and Service Mesh:

We abstracted the application runtime API through Layotto and evolved the internal Service Mesh to the following architecture:

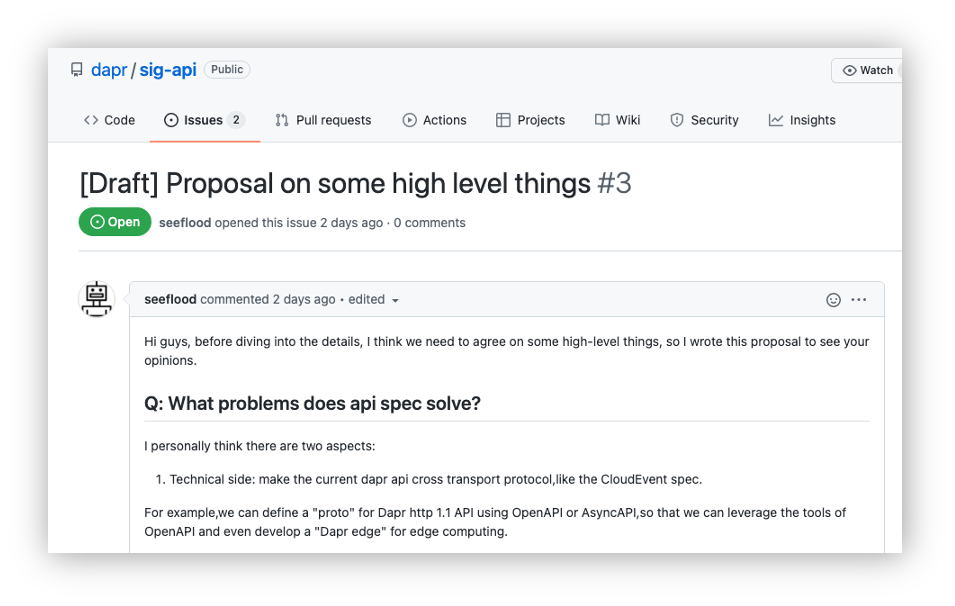

Of course, the application runtime is a new concept. If this API abstraction layer is not neutral enough, users will still have to pick one out of N, so we are also working with the Dapr community to define a standard for the Application Runtime API. The Dapr Sig API Group has been organized to promote the standardization of these APIs, and we hope more interested people will join.

We look forward to a future in which everyone's applications can "Write once, run on any cloud!".
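The value of a neutral API can be illustrated with a small sketch in which business code depends only on an interface and the backend is swapped underneath it. The `StateStore` interface and both implementations are hypothetical; they are not the Dapr or Layotto API, only an illustration of the decoupling idea.

```go
package main

import (
	"errors"
	"fmt"
)

// StateStore is a hypothetical runtime API for state management.
type StateStore interface {
	Save(key string, value []byte) error
	Get(key string) ([]byte, error)
}

// ErrNotFound is returned when a key has no stored value.
var ErrNotFound = errors.New("key not found")

// mapStore stands in for the state component of one cloud.
type mapStore struct{ data map[string][]byte }

func newMapStore() *mapStore { return &mapStore{data: map[string][]byte{}} }

func (m *mapStore) Save(k string, v []byte) error { m.data[k] = v; return nil }

func (m *mapStore) Get(k string) ([]byte, error) {
	v, ok := m.data[k]
	if !ok {
		return nil, ErrNotFound
	}
	return v, nil
}

// namespacedStore stands in for a second backend with its own key layout.
type namespacedStore struct {
	prefix string
	inner  *mapStore
}

func newNamespacedStore(prefix string) *namespacedStore {
	return &namespacedStore{prefix: prefix, inner: newMapStore()}
}

func (n *namespacedStore) Save(k string, v []byte) error { return n.inner.Save(n.prefix+k, v) }

func (n *namespacedStore) Get(k string) ([]byte, error) { return n.inner.Get(n.prefix + k) }

// saveOrder and orderStatus are business code: they see only the StateStore API.
func saveOrder(s StateStore, id, status string) error {
	return s.Save("order:"+id, []byte(status))
}

func orderStatus(s StateStore, id string) (string, error) {
	v, err := s.Get("order:" + id)
	if err != nil {
		return "", err
	}
	return string(v), nil
}

func main() {
	for _, s := range []StateStore{newMapStore(), newNamespacedStore("cloudB/")} {
		if err := saveOrder(s, "42", "PAID"); err != nil {
			fmt.Println("save failed:", err)
			continue
		}
		status, err := orderStatus(s, "42")
		fmt.Println(status, err)
	}
}
```

The business functions compile and behave the same against either backend, which is the property a standardized runtime API is meant to guarantee across vendors.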

6. Mesh 2.0 exploration

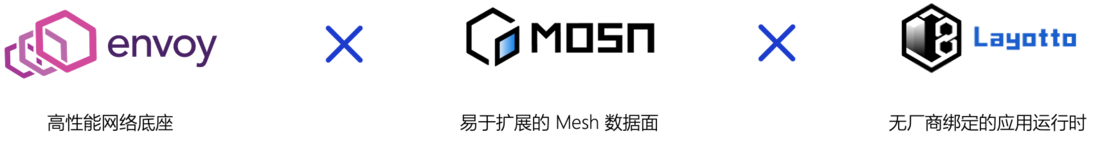

In 2022, we continue to explore. With MOSN 2.0 we have a high-performance network base and an easily extensible Mesh data plane, and with Layotto we have an application runtime free of vendor lock-in.

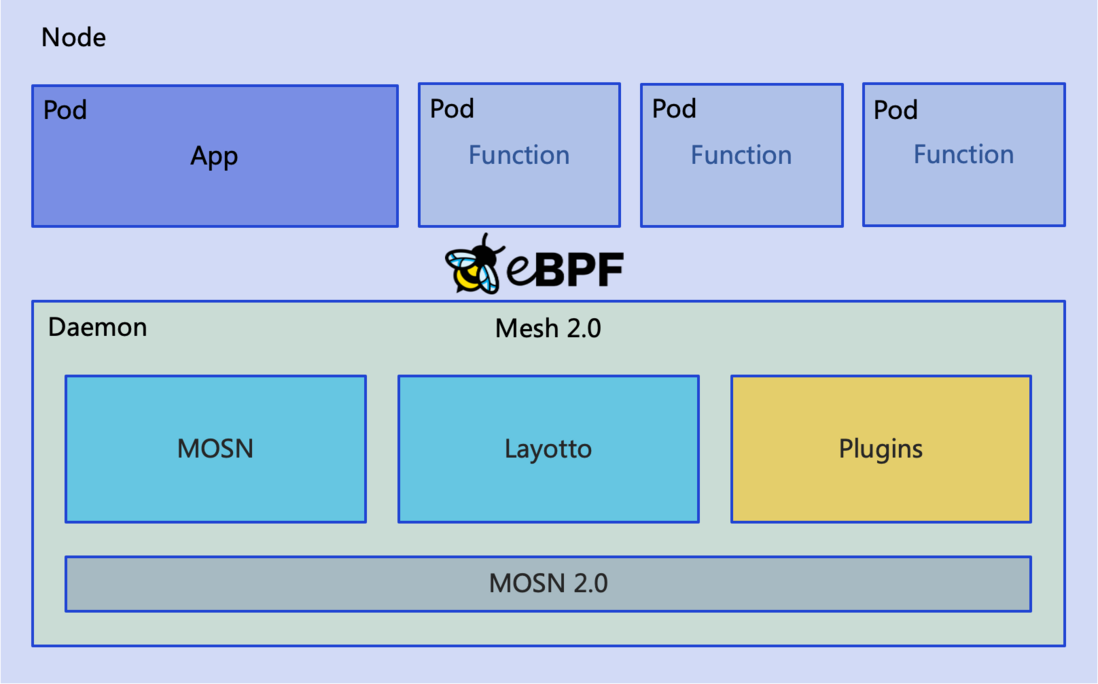

As a next step, we expect to sink the mesh data plane further using eBPF, from Pod granularity to Node granularity, while serving more scenarios such as Function and Serverless. Building on the good extensibility of MOSN 2.0, we also hope to try sinking relatively general business-application capabilities into custom plug-ins for the Mesh data plane, so that they can serve more applications and help businesses iterate quickly on those capabilities.

We believe that in the near future Mesh 2.0 can serve many general scenarios within Ant Group and bring some new possibilities to the community. That is all for this talk. I hope everyone gained something from this exchange on the development of Ant Group's Service Mesh.
