When I first learned C and C++, both languages left memory management entirely to the developer. This approach is the most flexible but also the most error-prone, and it demands a lot from the programmer. Higher-level languages such as Java, JavaScript, C#, and Go take over memory allocation and reclamation, which lowers the barrier to entry and frees up productivity. The downside is that it makes things harder for anyone who wants to understand the underlying principles. This article organizes my personal understanding from the perspective of memory allocation; Go's garbage collection mechanism will be covered in a later article.

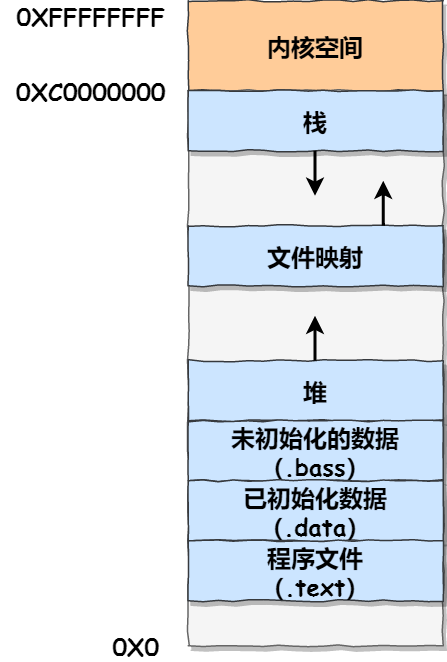

process memory

- Code segment (.text): the binary executable code;

- Initialized data segment (.data): initialized global and static variables;

- Uninitialized data segment (.bss): uninitialized global and static variables (.bss and .data together generally serve as the static storage area);

- Heap segment: dynamically allocated memory, growing upward from low addresses;

- File mapping segment: dynamic libraries, shared memory, etc., growing upward from low addresses (this depends on the hardware and kernel version);

- Stack segment: local variables, function-call context, and so on, growing downward from high addresses. The stack size is fixed, typically 8 MB by default, and the system provides a parameter (for example, ulimit -s) to customize it;

(The above is from Kobayashi Coding.)

The above is a per-process view. A process may contain multiple threads. Each thread has its own independent stack (the main thread uses the process's stack segment; on Linux, the stacks of additional threads are usually allocated with mmap), while the process's heap is shared by all threads, as shown in the following figure.

In Go's GMP model, only M corresponds to an operating-system thread, so each goroutine saves the registers it needs (the sp, bp, and pc pointers). When a goroutine runs, these registers point into that goroutine's own stack space.

That's a bit of a digression; back to the topic of this article.

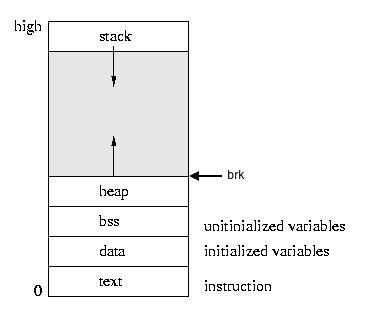

There are generally three places where memory is allocated: the static storage area (global variables, static variables, constants), the stack (temporary local variables in functions), and the heap (malloc, new, etc.).

The stack and the heap are the ones discussed most often. The stack can be thought of as linear memory: it is simple to manage, and allocating on it is faster than allocating on the heap. Programmers generally do not need to care about memory allocated on the stack, because the language manages it through dedicated stack frames. (A thread's stack is generally 2 MB or 8 MB; it cannot grow, and exceeding it crashes the program. A goroutine's stack in Go starts at 2 KB, and Go can grow and shrink goroutine stacks on its own; on 64-bit systems, a goroutine stack larger than 1 GB crashes the program.) Note that "linear" here does not mean the memory is contiguous in the machine's physical memory. The operating system provides virtual memory, which makes each program appear to own the whole memory as one contiguous address space, while the backing physical memory is not necessarily contiguous from the operating system's point of view; you can refer to this article for details.

Because the heap is shared by multiple threads, it needs a set of mechanisms to manage allocation (dealing with memory fragmentation, fairness, and conflicts), and different allocation strategies suit different scenarios. Before explaining Go's memory allocation strategy in detail, let's look at a simple memory allocator.

heap memory allocation

The heap starts out as one contiguous region. While the program runs, every thread requests space from it for its own use. If the heap is not managed in any way, two main problems arise.

The first problem is memory fragmentation:

Suppose the heap is 1000M, thread A requests 500M, thread B requests 200M, and thread C requests 300M. The heap is now laid out as A (500M) B (200M) C (300M). Then A and C release their space, so the layout becomes free (500M), B (200M), free (300M). If thread D now needs 600M, there are 800M free in total, but no single contiguous free block is large enough for D.
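The scenario above can be replayed with a small first-fit search over the final layout. This is a simulation only: the block type and the first-fit policy are assumptions chosen for illustration, and sizes are plain integers in MB rather than real memory.

```go
package main

import "fmt"

// block is one region of a simulated heap; sizes are in MB.
type block struct {
	owner string // "" means the region is free
	size  int
}

// firstFit returns the index of the first free block that can hold size, or -1.
func firstFit(heap []block, size int) int {
	for i, b := range heap {
		if b.owner == "" && b.size >= size {
			return i
		}
	}
	return -1
}

// totalFree adds up the sizes of all free blocks.
func totalFree(heap []block) int {
	n := 0
	for _, b := range heap {
		if b.owner == "" {
			n += b.size
		}
	}
	return n
}

func main() {
	// Layout after A (500M), B (200M), C (300M) were allocated and A and C freed.
	heap := []block{{"", 500}, {"B", 200}, {"", 300}}
	fmt.Println("free in total:", totalFree(heap))          // free in total: 800
	fmt.Println("block for D (600M):", firstFit(heap, 600)) // block for D (600M): -1
}
```

Enough memory is free overall, but it is split into pieces that are each too small, which is exactly what fragmentation means.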

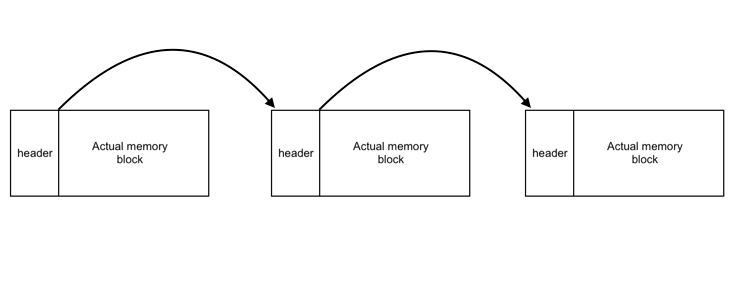

Therefore, some higher-level languages manage heap allocation in a way similar to how the operating system allocates pages: the heap is divided into small blocks, and each block contains some metadata (such as the user data size, whether the block is free, a head pointer, and a tail pointer), a user data area, and an alignment padding area.

Because a page in modern operating systems is generally 4KB, in such a scheme the user data area of each heap block is also sized as a multiple of 4KB. Extra space is also needed to store the metadata, but the metadata size is not necessarily a multiple of 4 bytes (C++, for example, can be set to 4-byte alignment: https://blog.csdn.net/sinat_28296297/article/details/77864874). In addition, to avoid performance problems caused by false sharing in the CPU cache, some extra free space is needed to pad things out; this is what alignment bytes are for.
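The padding arithmetic is just "round up to the next multiple of the alignment". A minimal sketch, assuming the alignment is a power of two; the metadata sizes in main are made-up examples. The Go runtime has a helper called alignUp with the same formula (it appears in the tiny allocator code later in this article).

```go
package main

import "fmt"

// alignUp rounds n up to the next multiple of a; a must be a power of two.
func alignUp(n, a uintptr) uintptr {
	return (n + a - 1) &^ (a - 1)
}

func main() {
	const align = 16
	for _, meta := range []uintptr{12, 16, 24} {
		padded := alignUp(meta, align)
		fmt.Printf("metadata %2d bytes -> %2d bytes (%d bytes of padding)\n", meta, padded, padded-meta)
	}
}
```

Adding a-1 and then clearing the low bits with &^ avoids a division, which is why allocators favor power-of-two alignments.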

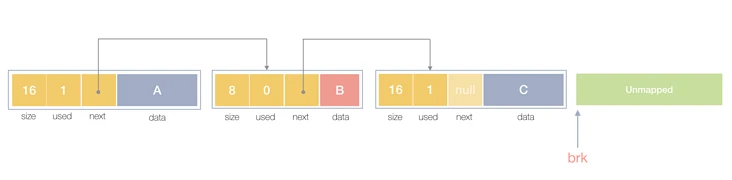

If the heap is managed simply as a linked list of such blocks, it looks like the following:

The second problem is concurrency conflicts:

Because multiple threads request heap memory at the same time, without any control they will inevitably conflict and overwrite each other. The common solution is a lock, but locks inevitably cost performance, so various solutions have been developed that balance performance, fragmentation, and pre-allocation strategies.

A simple memory allocator

Following the introduction above, let's first implement a simple memory allocator, that is, a malloc and a free function.

Here we collectively refer to the data, bss, and heap areas as the "data segment". The end of the data segment is marked by a pointer called brk (the program break). To allocate more space on the heap, we only need to ask the system to move brk from low to high addresses and return the start address of the newly obtained memory. To free memory, we only need to move brk back down.

On Linux and other Unix systems, we use sbrk() to manipulate the brk pointer:

- sbrk(0) returns the current address of brk;

- sbrk(x) with a positive x asks for x more bytes of memory, and with a negative x releases |x| bytes; on success it returns the previous brk.
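To see the brk bookkeeping without touching a real process, here is a toy model. The fakeHeap type and its sbrk method are hypothetical: they only mimic the semantics listed above (return the old break, or -1 on failure) over a fixed byte arena and never call the real system call.

```go
package main

import "fmt"

// fakeHeap is a hypothetical stand-in for the data segment: a fixed arena
// plus a program break (brk) offset into it.
type fakeHeap struct {
	mem []byte
	brk int
}

// sbrk moves the break by delta bytes and returns the previous break,
// or -1 if the new break would fall outside the arena.
func (h *fakeHeap) sbrk(delta int) int {
	old := h.brk
	next := old + delta
	if next < 0 || next > len(h.mem) {
		return -1
	}
	h.brk = next
	return old
}

func main() {
	h := &fakeHeap{mem: make([]byte, 64)}
	a := h.sbrk(16)               // first 16-byte block starts at offset 0
	b := h.sbrk(32)               // second block starts at offset 16
	fmt.Println(a, b, h.sbrk(0))  // 0 16 48
	h.sbrk(-32)                   // move brk down, releasing the second block
	fmt.Println(h.sbrk(0), h.sbrk(100)) // 16 -1
}
```

Because sbrk returns the old break, the returned value is directly the start of the newly obtained region, which is how the malloc below uses it.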

Here is a simplified version of malloc:

```c
#include <stddef.h>
#include <unistd.h>

void *malloc(size_t size) {
    void *block;
    block = sbrk(size);          // move brk up by size bytes
    if (block == (void *) -1) {  // sbrk failed
        return NULL;
    }
    return block;                // the old brk is the start of the new block
}
```

Now we can allocate memory, but how do we free it? Freeing requires sbrk to move brk back down, but we have not recorded the size of each allocated area.

There is another problem. Suppose we allocate two blocks, A and B, with B located after A. If the user now frees A while brk sits at the end of B, simply moving brk down would destroy B. So we cannot return A to the operating system directly; we have to wait until B is also released and then return both. Meanwhile, A can serve as a cache: the next time a request for memory no larger than A arrives, A can be reused directly, or A and B can be merged and handed out together (this again leads to fragmentation, which I will not consider here).

So we now divide memory into blocks and, for simplicity, manage them with a linked list. Besides the memory the user asked for, each block needs some extra information: the size of the block, the position of the next block, and whether the block is currently in use. The whole structure is as follows:

```c
#include <stddef.h>

typedef char ALIGN[16]; // padding used for byte alignment

union header {
    struct {
        size_t size;         // block size
        unsigned is_free;    // whether the block is free
        union header *next;  // address of the next block
    } s;
    ALIGN stub;
};

typedef union header header_t;
```

Here a struct and a 16-byte array are wrapped in a union. The size of a union equals the size of its largest member (rounded up to the union's alignment), so the header is at least 16 bytes. The end of the header is where the memory actually given to the user begins, and the intent is for both the header and the user memory to be 16-byte aligned (alignment lets the CPU access data efficiently and improves cache hit rates, which improves system efficiency). Note, however, that a char array only guarantees size, not alignment: on a typical 64-bit system the struct is already 24 bytes, so sizeof(header_t) is 24, and true 16-byte alignment would additionally require something like C11 _Alignas(16) on the union member plus rounding each requested size up to a multiple of 16.

The current memory structure is shown in the following figure:

We use head and tail pointers to track this linked list:

```c
header_t *head, *tail;
```

To support concurrent access from multiple threads, we simply use a global lock here:

```c
#include <pthread.h>

pthread_mutex_t global_malloc_lock = PTHREAD_MUTEX_INITIALIZER;
```

Our malloc now looks like this:

```c
#include <pthread.h>
#include <stddef.h>
#include <unistd.h>

header_t *get_free_block(size_t size); // defined below

void *malloc(size_t size)
{
    size_t total_size;
    void *block;
    header_t *header;

    if (!size) // a request of size 0 returns NULL
        return NULL;
    pthread_mutex_lock(&global_malloc_lock); // take the global lock
    header = get_free_block(size); // first look for a suitable block among the free ones
    if (header) { // found one: reuse it instead of asking the OS every time
        header->s.is_free = 0; // mark the block as in use
        pthread_mutex_unlock(&global_malloc_lock); // unlock
        // The header must stay hidden from the caller; the user's memory
        // starts right after the end of the header.
        return (void*)(header + 1);
    }
    // No free block fits, so ask the OS for memory. Because the header stores
    // metadata, we need room for the header plus the size the user asked for.
    total_size = sizeof(header_t) + size;
    block = sbrk(total_size);
    if (block == (void*) -1) { // on failure, unlock and return NULL
        pthread_mutex_unlock(&global_malloc_lock);
        return NULL;
    }
    // On success, fill in the metadata
    header = block;
    header->s.size = size;
    header->s.is_free = 0;
    header->s.next = NULL;
    // Append the block to the linked list
    if (!head)
        head = header;
    if (tail)
        tail->s.next = header;
    tail = header;
    // Unlock and hand the memory to the user
    pthread_mutex_unlock(&global_malloc_lock);
    return (void*)(header + 1);
}

// Walk the existing blocks and return the first one that is free and large
// enough for the request. The list is scanned from the head every time;
// performance and fragmentation are ignored for now.
header_t *get_free_block(size_t size)
{
    header_t *curr = head;
    while (curr) {
        if (curr->s.is_free && curr->s.size >= size)
            return curr;
        curr = curr->s.next;
    }
    return NULL;
}
```

Our allocator now has these basic capabilities:

- Thread safety through locking

- Memory blocks managed with a linked list, which allows memory to be reused.

Next, let's write free. First we check whether the memory being freed ends exactly at brk. If it does, we return it directly to the operating system; if not, we mark it as free so it can be reused later.

```c
#include <pthread.h>
#include <stdint.h>
#include <unistd.h>

void free(void *block)
{
    header_t *header, *tmp;
    void *programbreak;

    if (!block)
        return;
    pthread_mutex_lock(&global_malloc_lock); // take the global lock
    // Treat block as a header_t pointer and step back one header to reach
    // the start of this block's metadata.
    header = (header_t*)block - 1;
    programbreak = sbrk(0); // current position of brk
    // If this block (i.e. the tail block) ends exactly at brk, give it back to the OS
    if ((char*)block + header->s.size == programbreak) {
        if (head == tail) { // only one block: the list becomes empty
            head = tail = NULL;
        } else { // several blocks: find the one before tail and make it the new tail
            tmp = head;
            while (tmp) {
                if (tmp->s.next == tail) {
                    tmp->s.next = NULL;
                    tail = tmp;
                }
                tmp = tmp->s.next;
            }
        }
        // Return the memory to the OS by moving brk down
        // (negate in a signed type; 0 - size_t would wrap around)
        sbrk(-(intptr_t)(sizeof(header_t) + header->s.size));
        pthread_mutex_unlock(&global_malloc_lock); // unlock
        return;
    }
    // Not the last block: mark it free so it can be reused later
    header->s.is_free = 1;
    pthread_mutex_unlock(&global_malloc_lock);
}
```

That is a simple memory allocator: it manages the heap with a linked list, uses a global lock for thread safety, and provides some ability to reuse memory. Of course, it also has several serious problems:

- A global lock causes serious performance problems under high concurrency.

- Every reuse lookup scans the list from the head, which is also slow.

- Memory fragmentation: when reusing memory we only check whether a free block is at least as large as the request. In the extreme case, a free 1 GB block can be handed out for a 1 KB request, wasting almost all of it.

- Limited release: only the block at the end is returned to the operating system; free blocks in the middle never get a chance to be returned.
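To experiment with the bookkeeping of the C allocator above, here is a Go model of the same design: a singly linked list of headers, first-fit reuse, release only at the tail, and one global mutex. It is a simulation, not a real malloc: offsets into a hypothetical arena stand in for addresses, and headerSize (16) and limit are assumed values.

```go
package main

import (
	"fmt"
	"sync"
)

const headerSize = 16 // assumed metadata size

// header mirrors header_t; off is the offset of the user data (hypothetical).
type header struct {
	off, size int
	isFree    bool
	next      *header
}

type allocator struct {
	mu         sync.Mutex // plays the role of global_malloc_lock
	brk, limit int        // simulated program break and arena size
	head, tail *header
}

// getFreeBlock is first fit: the first free block that is large enough.
func (a *allocator) getFreeBlock(size int) *header {
	for cur := a.head; cur != nil; cur = cur.next {
		if cur.isFree && cur.size >= size {
			return cur
		}
	}
	return nil
}

// malloc returns the user-data offset, or -1 on failure.
func (a *allocator) malloc(size int) int {
	if size == 0 {
		return -1
	}
	a.mu.Lock()
	defer a.mu.Unlock()
	if h := a.getFreeBlock(size); h != nil {
		h.isFree = false // reuse a previously freed block
		return h.off
	}
	total := headerSize + size
	if a.brk+total > a.limit {
		return -1 // like sbrk returning (void*)-1
	}
	h := &header{off: a.brk + headerSize, size: size}
	a.brk += total
	if a.head == nil {
		a.head = h
	}
	if a.tail != nil {
		a.tail.next = h
	}
	a.tail = h
	return h.off
}

// free moves brk down for the last block and marks any other block free.
func (a *allocator) free(off int) {
	a.mu.Lock()
	defer a.mu.Unlock()
	var prev *header
	for cur := a.head; cur != nil; prev, cur = cur, cur.next {
		if cur.off != off {
			continue
		}
		if off+cur.size == a.brk { // block ends at brk: give it back
			if prev == nil {
				a.head, a.tail = nil, nil
			} else {
				prev.next = nil
				a.tail = prev
			}
			a.brk -= headerSize + cur.size
			return
		}
		cur.isFree = true // block in the middle: keep it for reuse
		return
	}
}

func main() {
	a := &allocator{limit: 1024}
	x := a.malloc(100)
	y := a.malloc(50)
	a.free(x)         // x is not at brk, so it is only marked free
	z := a.malloc(80) // first fit reuses x's 100-byte block
	fmt.Println(x, y, z) // 16 132 16
	a.free(y)          // y ends at brk, so brk moves back down
	fmt.Println(a.brk) // 116
}
```

The model shows the listed weaknesses directly: the 80-byte request occupies a 100-byte block, and the freed middle block could never be returned to the arena while a later block sits above it.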

So next, let's look at how more mature memory allocators handle these problems, and at Go's memory allocation strategy.

TCMalloc

There are many kinds of memory allocators, but their core ideas can be summarized as follows:

1. Divide allocation granularity: first define the smallest unit of memory, then treat objects differently by size. Small objects are divided into several size classes, each managed by its own data structure, to reduce memory fragmentation.

2. Reclamation and caching: when memory is released, small blocks can be merged into larger ones and cached according to some policy so they can be reused directly next time. When certain conditions are met, memory is released back to the operating system so that long-term occupation does not cause memory shortages.

3. Optimize multi-threaded performance: each thread gets its own independent heap allocation area, which it can access without locks.

Google's TCMalloc is one of the leading allocators in the industry, and Go borrows its ideas. Let's introduce it next.

Several important concepts of TCMalloc:

- Page: The operating system manages memory in units of pages, and so does TCMalloc, but a TCMalloc page is not necessarily the same size as an operating-system page; it is a multiple of it. According to "TCMalloc Decryption", the page size on x64 is 8KB.

- Span: A group of consecutive pages is called a span; for example, a span can be 2 pages or 16 pages. Spans sit one level above pages and make it easy to manage memory regions of a certain size. The span is the basic unit of memory management in TCMalloc.

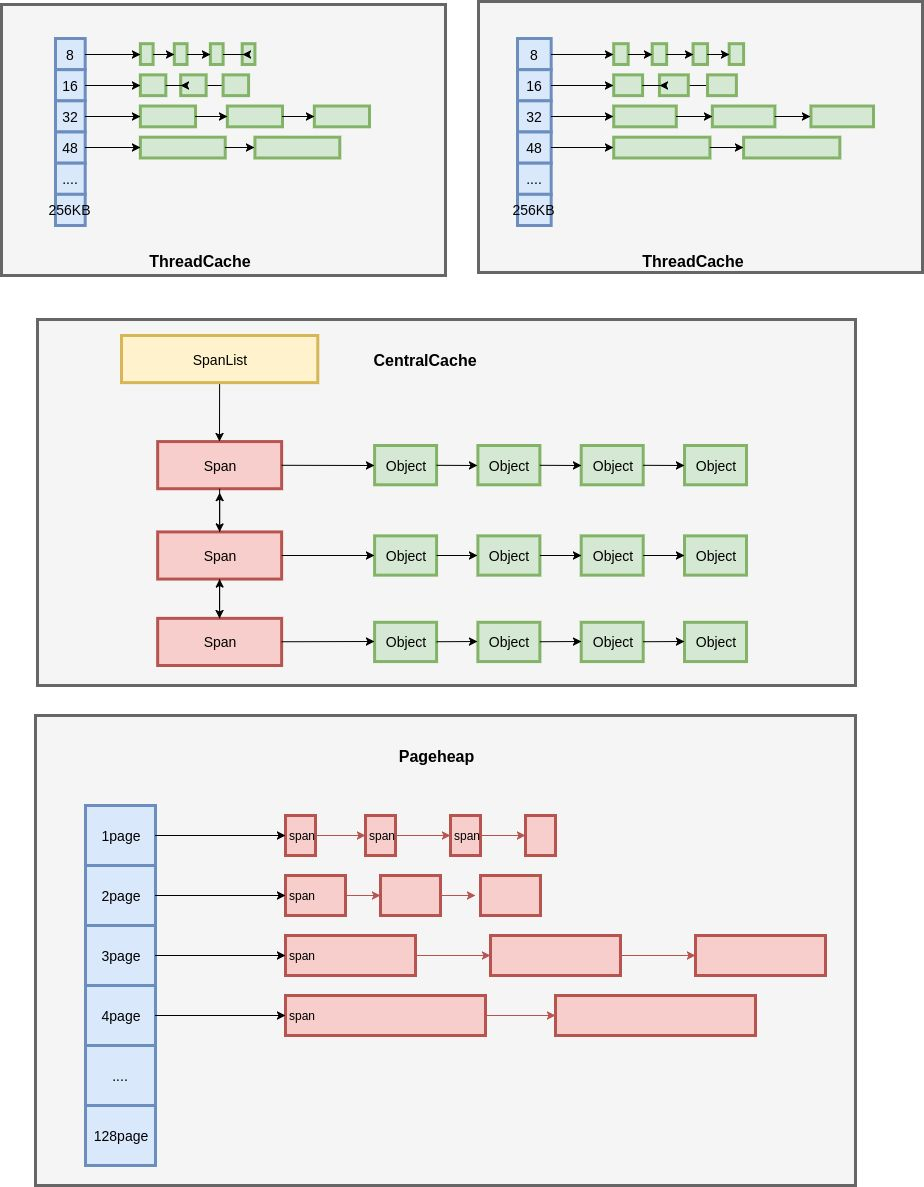

- ThreadCache: Each thread's own cache. A ThreadCache holds multiple linked lists of free memory blocks, and all blocks in one list have the same size. In other words, memory blocks are grouped into classes by size, so a free block can be picked quickly from the list that matches the requested size. Since each thread has its own ThreadCache, accessing it requires no lock.

- CentralCache: A cache shared by all threads that also holds linked lists of free memory blocks, with the same number of lists as a ThreadCache. When a ThreadCache runs short of blocks, it takes more from the CentralCache; when it holds too many, it returns some to the CentralCache. Since the CentralCache is shared, access to it is locked.

- PageHeap: An abstraction of heap memory. The PageHeap also holds several linked lists, but these store spans. When the CentralCache runs out of memory, it takes a span from the PageHeap, splits it into memory blocks, and adds them to the list of the matching size; when the CentralCache holds a lot of memory, it puts spans back into the PageHeap. As shown in the figure above, there is a list of 1-page spans, a list of 2-page spans, and so on, and finally a large span set used for medium and large objects. Naturally, the PageHeap is locked as well.

TCMalloc distinguishes objects by size, and each size tier follows a different allocation path:

- Small objects, 0~256KB: ThreadCache -> CentralCache -> PageHeap. Most of the time the ThreadCache alone is enough, with no need to touch the CentralCache or PageHeap, so allocation needs neither locks nor system calls and is very efficient.

- Medium objects, 256KB~1MB: a span of suitable size is selected directly from the PageHeap; the largest span list holds 128-page spans, i.e. 128 × 8KB = 1MB.

- Large objects, >1MB: a suitable number of pages is taken from the large span set to form a span that holds the data.

(The above picture and text are borrowed from: Illustrating TCMalloc , Go memory allocation )

In addition, TCMalloc merges multiple small areas into larger ones when memory is released; if you are interested, see the article TCMalloc Decryption.
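The tier boundaries above can be written down as a tiny classifier. This only labels a size using the thresholds quoted in this article (older TCMalloc versions; newer releases use different limits), and the function name is hypothetical.

```go
package main

import "fmt"

const (
	kb         = 1 << 10
	mb         = 1 << 20
	tcPageSize = 8 * kb // TCMalloc page size on x64, per the text above
)

// tcmallocTier labels a request size with the tier described above.
func tcmallocTier(size int) string {
	switch {
	case size <= 256*kb:
		return "small" // ThreadCache -> CentralCache -> PageHeap
	case size <= 1*mb:
		return "medium" // PageHeap span lists (up to 128 pages)
	default:
		return "large" // PageHeap large span set
	}
}

func main() {
	fmt.Println(128*tcPageSize == 1*mb) // true: 128 pages of 8KB cover exactly 1MB
	for _, s := range []int{64, 300 * kb, 4 * mb} {
		fmt.Println(s, tcmallocTier(s))
	}
}
```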

Go memory allocation scheme

Go's memory allocation strategy is based on TCMalloc, adapted to Go's own characteristics: it divides objects into size classes more finely than TCMalloc, and it replaces TCMalloc's per-thread cache with a cache bound to the logical processor P. In addition, Go's allocation strategy is designed around its own escape analysis and garbage collection.

Go uses escape analysis at compile time to decide whether a variable should be allocated on the stack or on the heap. I won't go into escape analysis in detail here, but the main points are:

- The stack is more efficient than the heap and needs no GC, so Go allocates as much memory as possible on the stack. Goroutine stacks can grow and shrink automatically.

- When allocating on the stack could cause problems such as illegal memory access, the heap is used instead, for example:

  - When a value is accessed after the function returns (for example, the variable's address is returned), the value is most likely allocated on the heap.

  - When the compiler detects that a value is too large, it is allocated on the heap (growing and shrinking the stack has a cost).

  - When the compiler does not know a value's size at compile time (for example, a slice or map whose size is only known at run time), the value is allocated on the heap.

- Finally, don't guess where a value lives; only the compiler and the compiler developers know for sure.
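A small illustration of the cases above. The function names are made up for this example, and where each value finally lives is the compiler's decision: running go build -gcflags=-m on the file asks the compiler to print its escape-analysis decisions, and the exact messages depend on the Go version and on inlining.

```go
package main

import "fmt"

type point struct{ x, y int }

// newPoint returns the address of a local variable. p must outlive the call,
// so escape analysis moves it to the heap.
func newPoint(x, y int) *point {
	p := point{x, y}
	return &p
}

// sum never lets the address of p leave the function, so p can stay on the stack.
func sum(x, y int) int {
	p := point{x, y}
	return p.x + p.y
}

// makeBuf creates a slice whose length is only known at run time,
// which is a typical reason for a heap allocation.
func makeBuf(n int) []byte {
	return make([]byte, n)
}

func main() {
	fmt.Println(*newPoint(1, 2), sum(3, 4), len(makeBuf(10))) // {1 2} 7 10
}
```

The program behaves the same either way; escape analysis only changes where the memory comes from, which is why the last bullet advises checking the compiler's output instead of guessing.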

Go achieves fine-grained memory management and good performance through detailed object size classes, multi-level caches with a lock-free per-P cache, and precise bitmap management. All objects in Go fall into three tiers:

- Tiny objects (0, 16B), pointer-free only: the allocation path is mcache -> mcentral -> mheap bitmap search -> mheap radix tree search -> operating system

- Small objects [16B, 32KB] (plus objects under 16B that contain pointers): same allocation path as tiny objects

- Large objects (above 32KB): the allocation path is mheap radix tree search -> operating system (bypassing mcache and mcentral)
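The tier decision can be sketched as a small function. The thresholds are named after the constants maxTinySize and maxSmallSize in runtime/malloc.go, and the branching follows the shape of runtime.mallocgc (tiny only when the object is pointer-free); allocTier itself is a hypothetical helper that only returns a label.

```go
package main

import "fmt"

const (
	maxTinySize  = 16
	maxSmallSize = 32 << 10 // 32KB
)

// allocTier reports which tier a request falls into. noscan means the object
// contains no pointers; only such objects may use the tiny allocator.
func allocTier(size uintptr, noscan bool) string {
	if size <= maxSmallSize {
		if noscan && size < maxTinySize {
			return "tiny"
		}
		return "small"
	}
	return "large"
}

func main() {
	fmt.Println(allocTier(8, true))        // tiny
	fmt.Println(allocTier(8, false))       // small: contains pointers
	fmt.Println(allocTier(32<<10, true))   // small
	fmt.Println(allocTier(32<<10+1, true)) // large
}
```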

The memory allocation process in Go can be seen in the following overview:

It mainly involves the following concepts:

page

Same as a page in TCMalloc: a page is 8KB (twice the typical 4KB operating-system page). Each light blue rectangle in the figure above represents a page.

span

A span is the basic unit of memory management in Go, represented by the mspan type. A span's size is a multiple of the page size. Each lilac rectangle in the figure above is a span.

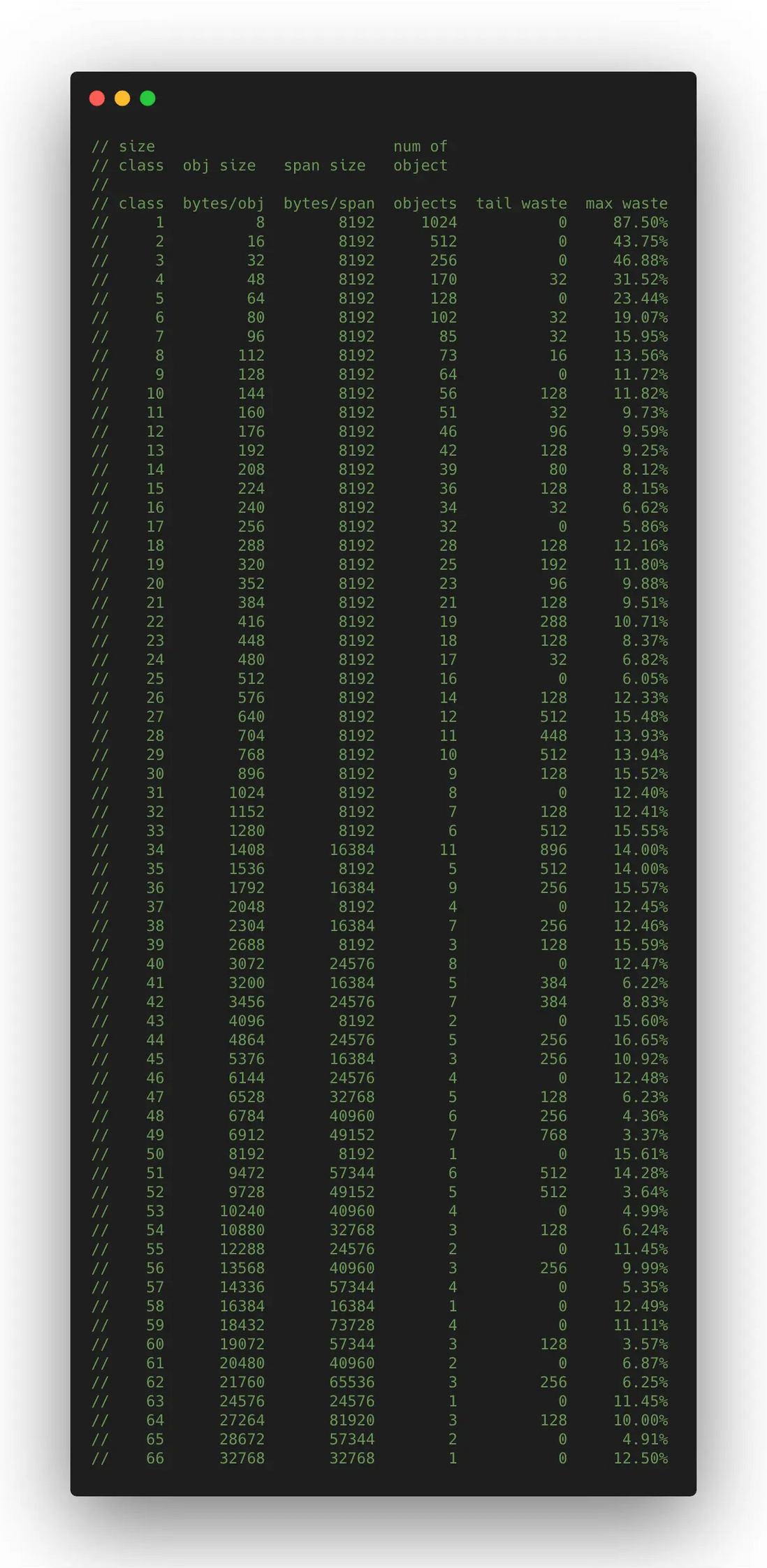

Go 1.9.2 has 67 size classes of mspan in total (class 0 is reserved for large objects).

For example, each object in a class-1 span is 8B, and a class-1 span occupies one page (8192B), so it can hold 1024 objects.
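The object count and the unused tail of a span follow from simple division. A minimal sketch, assuming the 8KB page size above; the class sizes and page counts used in main come from the class_to_size and class_to_allocnpages tables quoted below, and spanLayout is a hypothetical helper.

```go
package main

import "fmt"

const pageSize = 8192 // Go runtime page size (8KB)

// spanLayout returns how many objects of elemSize fit into a span of npages
// pages, and how many bytes are left unused at the end of the span.
func spanLayout(elemSize, npages uintptr) (nelems, tail uintptr) {
	spanBytes := npages * pageSize
	nelems = spanBytes / elemSize
	tail = spanBytes - nelems*elemSize
	return
}

func main() {
	classes := []struct{ size, pages uintptr }{{8, 1}, {144, 1}, {32768, 4}}
	for _, c := range classes {
		n, tail := spanLayout(c.size, c.pages)
		fmt.Printf("size %5d, %d page(s): %4d objects, %3d bytes unused\n", c.size, c.pages, n, tail)
	}
}
```

For the 144-byte class this gives 56 objects and a 128-byte unused tail, which matches the class-10 example later in this section.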

In the code, these sizes are stored in an array called class_to_size, which records the object size of each span class:

```go
// path: /usr/local/go/src/runtime/sizeclasses.go
const _NumSizeClasses = 67

var class_to_size = [_NumSizeClasses]uint16{0, 8, 16, 32, 48, 64, 80, 96, 112, 128, 144, 160, 176, 192, 208, 224, 240, 256, 288, 320, 352, 384, 416, 448, 480, 512, 576, 640, 704, 768, 896, 1024, 1152, 1280, 1408, 1536, 1792, 2048, 2304, 2688, 3072, 3200, 3456, 4096, 4864, 5376, 6144, 6528, 6784, 6912, 8192, 9472, 9728, 10240, 10880, 12288, 13568, 14336, 16384, 18432, 19072, 20480, 21760, 24576, 27264, 28672, 32768}
```

There is also a class_to_allocnpages array that stores the number of pages for each span class:

// path: /usr/local/go/src/runtime/sizeclasses.go

const _NumSizeClasses = 67

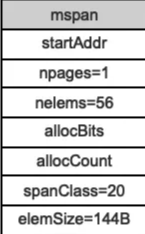

var class_to_allocnpages = [_NumSizeClasses]uint8{0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 2, 1, 2, 1, 2, 1, 3, 2, 3, 1, 3, 2, 3, 4, 5, 6, 1, 7, 6, 5, 4, 3, 5, 7, 2, 9, 7, 5, 8, 3, 10, 7, 4}The definition of the mspan structure in the code is as follows:

```go
// path: /usr/local/go/src/runtime/mheap.go
// (excerpt: only selected fields are shown)
type mspan struct {
	// Forward pointer in the linked list of spans
	next *mspan
	// Backward pointer in the linked list of spans
	prev *mspan
	// Start address, i.e. the address of the pages this span manages
	startAddr uintptr
	// Number of pages managed
	npages uintptr
	// Number of object slots available for allocation
	nelems uintptr
	// Helps locate where allocation has reached in this span
	freeindex uintptr
	// Allocation bitmap: each bit records whether a slot is allocated
	allocBits *gcBits
	// Bitwise complement of allocBits, used to find free slots quickly
	allocCache uint64
	// Number of allocated slots
	allocCount uint16
	// Span class ID, derived from the size class
	spanclass spanClass
	// Object size of the class, i.e. the slot size
	elemsize uintptr
	// GC mark bitmap, used to determine which slots can be freed
	gcmarkBits *gcBits
}
```

Note spanClass here: the number of span classes is twice the number of size classes in class_to_size. Each size class corresponds to two mspans, one for objects that contain pointers and one for objects that do not. During garbage collection, spans holding pointer-free objects need no complex marking, which improves GC efficiency.
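The "two span classes per size class" encoding packs the size class and a noscan bit into one byte. The sketch below mirrors the shape of the runtime's makeSpanClass and its sizeclass/noscan accessors (sizeclass<<1 | noscan), but it is a standalone copy for illustration, not the runtime code itself.

```go
package main

import "fmt"

// spanClass packs a size class and a noscan bit: sizeclass<<1 | noscan.
type spanClass uint8

func makeSpanClass(sizeclass uint8, noscan bool) spanClass {
	sc := spanClass(sizeclass << 1)
	if noscan {
		sc |= 1 // low bit set: objects in this span contain no pointers
	}
	return sc
}

func (sc spanClass) sizeclass() int8 { return int8(sc >> 1) }
func (sc spanClass) noscan() bool    { return sc&1 != 0 }

func main() {
	const numSizeClasses = 67
	fmt.Println("span classes:", numSizeClasses<<1) // span classes: 134
	sc := makeSpanClass(10, true)
	fmt.Println(sc, sc.sizeclass(), sc.noscan()) // 21 10 true
}
```

Keeping the noscan bit in the low position means the pointer-free and pointer-containing variants of a size class sit next to each other in mcache's alloc array.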

For example, objects in size class 10 are 144 bytes, and a class-10 span occupies one page, so it can hold 56 objects (56 × 144 = 8064 bytes, which is less than a full page, so the last 128 bytes of the span are unused). Its mspan structure is as follows:

Of course, tiny allocations share a single object slot; for example, two 1-byte values can be placed in one object. This is covered later.

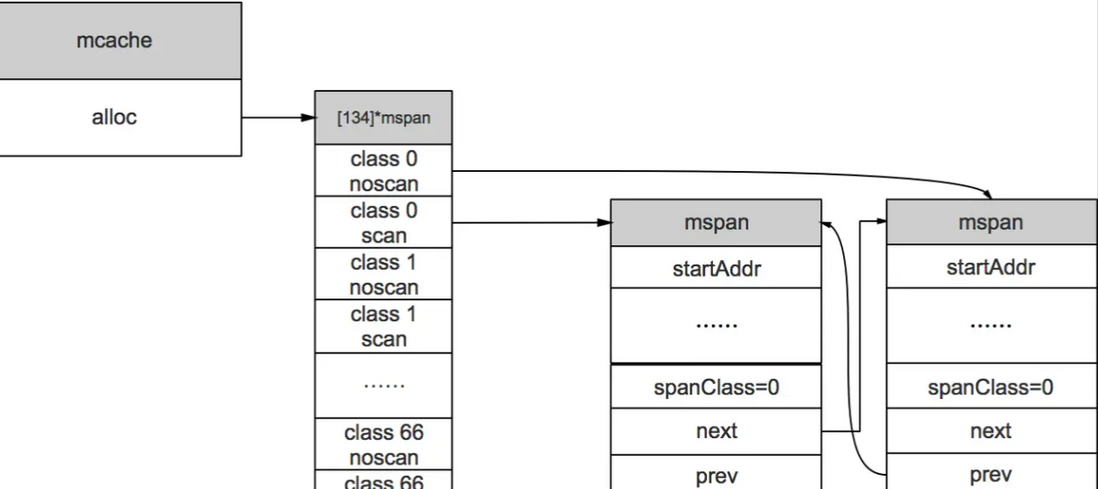

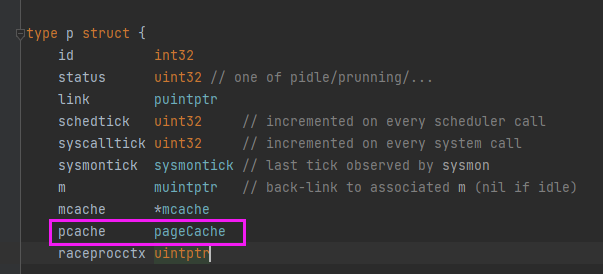

mcache

mcache is similar to TCMalloc's ThreadCache and holds a span for each span class. Each logical processor P has its own mcache, so accessing it requires no lock. Several fields of the mcache struct deserve attention:

```go
//path: /usr/local/go/src/runtime/mcache.go
type mcache struct {
	// One cached span per span class, i.e. two per size class
	alloc [numSpanClasses]*mspan

	// The following three fields are used only for tiny allocations
	tiny             uintptr
	tinyoffset       uintptr
	local_tinyallocs uintptr
}

const numSpanClasses = _NumSizeClasses << 1
```

As you can see, mcache contains spans of every size class, and tiny and small objects start looking for space here. Large objects (over 32KB) have no corresponding size class and bypass mcache. The alloc array has 134 elements, 67 × 2, because each size class corresponds to two spans: one for objects without pointers and one for objects with pointers (pointer-free objects do not need to be scanned for references to other live objects during garbage collection). The structure is as follows:

mcache in turn gets its memory from mcentral. When the Go runtime initializes a P, it calls runtime.allocmcache to initialize that P's cache:

```go
// init initializes pp, which may be a freshly allocated p or a
// previously destroyed p, and transitions it to status _Pgcstop.
func (pp *p) init(id int32) {
	pp.id = id
	// ...
	if pp.mcache == nil {
		if id == 0 {
			if mcache0 == nil {
				throw("missing mcache?")
			}
			// Use the bootstrap mcache0. Only one P will get
			// mcache0: the one with ID 0.
			pp.mcache = mcache0
		} else {
			pp.mcache = allocmcache()
		}
	}
	// ...
}
```

runtime.allocmcache calls the cache allocator of runtime.mheap on the system stack to initialize a new runtime.mcache struct:

```go
// dummy mspan that contains no free objects.
var emptymspan mspan

func allocmcache() *mcache {
	var c *mcache
	// Create the mcache with mheap's cache allocator on the system stack
	systemstack(func() {
		lock(&mheap_.lock) // mheap is shared by all goroutines, so it must be locked
		c = (*mcache)(mheap_.cachealloc.alloc())
		c.flushGen = mheap_.sweepgen
		unlock(&mheap_.lock)
	})
	// Point every entry of alloc at the empty placeholder span
	for i := range c.alloc {
		c.alloc[i] = &emptymspan
	}
	c.nextSample = nextSample()
	return c
}
```

However, every mspan in a freshly initialized mcache is just the empty placeholder emptymspan.

Later, when needed, a span of the required spanClass is obtained from mcentral:

```go
// refill acquires a new span of span class spc for c. This span will
// have at least one free object. The current span in c must be full.
//
// Must run in a non-preemptible context since otherwise the owner of
// c could change.
func (c *mcache) refill(spc spanClass) {
	// Return the current cached span to the central lists.
	s := c.alloc[spc]
	// ...
	if s != &emptymspan {
		// Mark this span as no longer cached.
		if s.sweepgen != mheap_.sweepgen+3 {
			throw("bad sweepgen in refill")
		}
		mheap_.central[spc].mcentral.uncacheSpan(s)
	}
	// Get a new cached span from the central lists.
	s = mheap_.central[spc].mcentral.cacheSpan()
	// ...
	c.alloc[spc] = s
}
```

refill is called from mcache.nextFree, which in turn is called by runtime.mallocgc.

mcentral

mcentral is shared by all Ps, so access to it must be synchronized. Its main job is to supply mcache with mspans of a given span class. Each spanClass has its own mcentral, and all mcentrals are managed by mheap. Each mcentral holds two sets of mspans: in Go 1.17.7, partial holds spans that still have free objects, and full holds spans with no free objects. (These are not the nonempty and empty lists described in many articles online, which come from older versions.) Each field is an array of two spanSets, one for spans already swept in the current GC cycle and one for spans not yet swept; the two swap roles every cycle.

```go
type mcentral struct {
	spanclass spanClass
	partial   [2]spanSet // list of spans with a free object
	full      [2]spanSet // list of spans with no free objects
}

type spanSet struct {
	spineLock mutex
	spine     unsafe.Pointer // *[N]*spanSetBlock, accessed atomically
	spineLen  uintptr        // Spine array length, accessed atomically
	spineCap  uintptr        // Spine array cap, accessed under lock
	index     headTailIndex
}
```

For tiny and small objects, memory is first obtained from mcache and mcentral; this part is implemented in runtime.mallocgc.

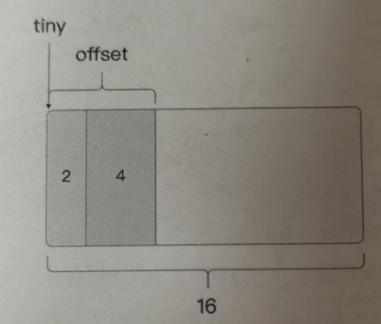

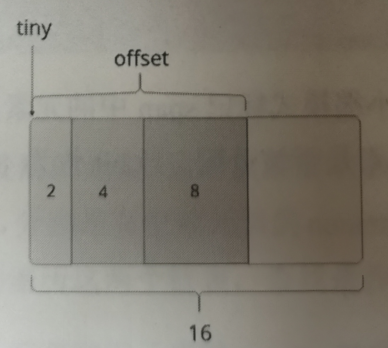
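The cooperation between a per-P mcache and a shared mcentral can be modeled in a few dozen lines. This is a hypothetical simplification, not the runtime code: a mutex stands in for spanSet, sweeping is ignored, each span has only objectsPerSpan slots, and a nil span plays the role of emptymspan.

```go
package main

import (
	"fmt"
	"sync"
)

const objectsPerSpan = 2 // tiny spans keep the example short

// span is a hypothetical span with a fixed number of object slots.
type span struct {
	id, allocCount int
}

func (s *span) full() bool { return s.allocCount == objectsPerSpan }

// central plays the role of mcentral for one span class: spans with free
// slots live in partial, exhausted spans in full.
type central struct {
	mu      sync.Mutex
	partial []*span
	full    []*span
	nextID  int
}

// cacheSpan hands out a span with at least one free slot.
func (c *central) cacheSpan() *span {
	c.mu.Lock()
	defer c.mu.Unlock()
	if n := len(c.partial); n > 0 {
		s := c.partial[n-1]
		c.partial = c.partial[:n-1]
		return s
	}
	c.nextID++ // no partial span: pretend mheap supplied a fresh one
	return &span{id: c.nextID}
}

// uncacheSpan takes a span back from a per-P cache and files it by state.
func (c *central) uncacheSpan(s *span) {
	c.mu.Lock()
	defer c.mu.Unlock()
	if s.full() {
		c.full = append(c.full, s)
	} else {
		c.partial = append(c.partial, s)
	}
}

// cache plays the role of one P's mcache for a single span class. Only its
// owner touches it, so it needs no lock.
type cache struct{ cur *span }

// alloc takes the next slot, refilling from central when the span is full.
func (m *cache) alloc(c *central) (spanID, slot int) {
	if m.cur == nil || m.cur.full() {
		if m.cur != nil {
			c.uncacheSpan(m.cur) // like refill: hand the full span back
		}
		m.cur = c.cacheSpan()
	}
	slot = m.cur.allocCount
	m.cur.allocCount++
	return m.cur.id, slot
}

func main() {
	c := &central{}
	p := &cache{}
	for i := 0; i < 5; i++ {
		id, slot := p.alloc(c)
		fmt.Println("span", id, "slot", slot)
	}
	fmt.Println("spans in full:", len(c.full)) // spans in full: 2
}
```

Only the refill path touches the lock; consecutive allocations from the same cached span stay on the lock-free fast path, which is the point of the per-P cache.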

tiny object allocation

In Go, pointer-free objects smaller than 16 bytes are treated as tiny objects. Tiny objects are placed in spans of size class 2, whose objects are 16 bytes. This does not mean that every tiny allocation takes a full 16 bytes: several tiny objects share one 16-byte block, and each new object's starting offset is aligned according to its size. If the size is a multiple of 8, the offset is aligned to 8; if a multiple of 4, to 4; if even, to 2; odd sizes are not aligned. For example, allocating a 1-byte value and then an 8-byte value places them at offsets 0 and 8 of the same block.

```go
off := c.tinyoffset
// Align tiny pointer for required (conservative) alignment.
if size&7 == 0 {
	off = alignUp(off, 8)
} else if sys.PtrSize == 4 && size == 12 {
	// Conservatively align 12-byte objects to 8 bytes on 32-bit
	// systems so that objects whose first field is a 64-bit
	// value is aligned to 8 bytes and does not cause a fault on
	// atomic access. See issue 37262.
	// TODO(mknyszek): Remove this workaround if/when issue 36606
	// is resolved.
	off = alignUp(off, 8)
} else if size&3 == 0 {
	off = alignUp(off, 4)
} else if size&1 == 0 {
	off = alignUp(off, 2)
}
```

If the current 16-byte block still has room for the new tiny object, that space is used:

```go
if off+size <= maxTinySize && c.tiny != 0 {
	// The object fits into existing tiny block.
	x = unsafe.Pointer(c.tiny + off)
	c.tinyoffset = off + size
	c.tinyAllocs++
	mp.mallocing = 0
	releasem(mp)
	return x
}
```

Otherwise, a new 16-byte block is taken from the span:

```go
// Allocate a new maxTinySize block.
span = c.alloc[tinySpanClass]
v := nextFreeFast(span)
if v == 0 {
	v, span, shouldhelpgc = c.nextFree(tinySpanClass)
}
x = unsafe.Pointer(v)
(*[2]uint64)(x)[0] = 0
(*[2]uint64)(x)[1] = 0
// See if we need to replace the existing tiny block with the new one
// based on amount of remaining free space.
if !raceenabled && (size < c.tinyoffset || c.tiny == 0) {
	// Note: disabled when race detector is on, see comment near end of this function.
	c.tiny = uintptr(x)
	c.tinyoffset = size
}
size = maxTinySize
```

nextFreeFast and nextFree are described below.

Small object allocation

```go
var sizeclass uint8
if size <= smallSizeMax-8 {
	sizeclass = size_to_class8[divRoundUp(size, smallSizeDiv)]
} else {
	sizeclass = size_to_class128[divRoundUp(size-smallSizeMax, largeSizeDiv)]
}
size = uintptr(class_to_size[sizeclass])
spc := makeSpanClass(sizeclass, noscan)
span = c.alloc[spc]
v := nextFreeFast(span)
if v == 0 {
	v, span, shouldhelpgc = c.nextFree(spc)
}
x = unsafe.Pointer(v)
if needzero && span.needzero != 0 {
	memclrNoHeapPointers(unsafe.Pointer(v), size)
}
```

Lines 1-6 compute the size class (and thus the object size) from the requested size.

Lines 7-9 use the size class and whether the object contains pointers (noscan) to find the matching span in mcache's alloc array.

Line 10 checks whether the current span has free space and, if so, returns the address of a free slot.

Lines 11-13: if the mcache's current span has no free space, nextFree is called to find a span with free space.

The result is then returned to the caller after further processing (GC marking, lock bookkeeping, etc.).

Note also that allocation here rounds the request up to a size class. Take a 17-byte request: size classes do not cover every multiple of 8, and in the Go 1.9.2 table above the smallest class of at least 17 bytes is 32, so the request actually uses a 32-byte slot. That is why the code above computes the size class from size. It also shows that this scheme inevitably wastes some memory; TCMalloc's size classes try to keep this waste within about 15%.
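The rounding can be checked against the Go 1.9.2 class_to_size table quoted earlier. This sketch uses a binary search (sort.SearchInts) for clarity, whereas the runtime uses the precomputed size_to_class8/size_to_class128 lookup tables shown above; roundToClass is a hypothetical helper.

```go
package main

import (
	"fmt"
	"sort"
)

// classToSize copies the Go 1.9.2 class_to_size table; index 0 is not a real class.
var classToSize = []int{0, 8, 16, 32, 48, 64, 80, 96, 112, 128, 144, 160, 176, 192, 208, 224, 240, 256, 288, 320, 352, 384, 416, 448, 480, 512, 576, 640, 704, 768, 896, 1024, 1152, 1280, 1408, 1536, 1792, 2048, 2304, 2688, 3072, 3200, 3456, 4096, 4864, 5376, 6144, 6528, 6784, 6912, 8192, 9472, 9728, 10240, 10880, 12288, 13568, 14336, 16384, 18432, 19072, 20480, 21760, 24576, 27264, 28672, 32768}

// roundToClass returns the smallest size class whose objects can hold size.
// ok is false for sizes above 32KB, which are large objects without a class.
func roundToClass(size int) (class, elemSize int, ok bool) {
	if size <= 0 {
		return 0, 0, false
	}
	i := sort.SearchInts(classToSize[1:], size) + 1 // first class >= size
	if i >= len(classToSize) {
		return 0, 0, false
	}
	return i, classToSize[i], true
}

func main() {
	for _, size := range []int{8, 17, 129, 1025} {
		class, elem, _ := roundToClass(size)
		unused := 100 * float64(elem-size) / float64(elem)
		fmt.Printf("request %4d -> class %2d (%4d bytes), %.1f%% of the slot unused\n", size, class, elem, unused)
	}
}
```

The 17-byte request lands in the 32-byte class and leaves almost half of the slot unused, an unusually bad case; most sizes waste far less.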

nextFreeFast uses the freeindex and allocCache fields of the mspan shown above.

allocCache is a 64-bit bitmap: it caches the complement of allocBits for the 64 objects starting at freeindex, so a 1 bit marks a free object. If the next free object lies within this window, its position can be computed directly from the cached bits. As for why the window is 64, I guess it is related to the CPU cache line (64 bytes on 64-bit CPUs) and helps the cache hit rate; more directly, 64 bits is the width of a uint64, so a single count-trailing-zeros instruction (sys.Ctz64) finds the first free object.

```go
// nextFreeFast returns the next free object if one is quickly available.
// Otherwise it returns 0.
func nextFreeFast(s *mspan) gclinkptr {
	theBit := sys.Ctz64(s.allocCache) // Is there a free object in the allocCache?
	if theBit < 64 {
		result := s.freeindex + uintptr(theBit)
		if result < s.nelems {
			freeidx := result + 1
			if freeidx%64 == 0 && freeidx != s.nelems {
				return 0
			}
			s.allocCache >>= uint(theBit + 1)
			s.freeindex = freeidx
			s.allocCount++
			return gclinkptr(result*s.elemsize + s.base())
		}
	}
	return 0
}
```

freeindex and allocCache work together as a bitmap cache that advances in stages. Because allocCache covers only 64 objects at a time, it rolls forward through the span as allocation proceeds, one 64-object window at a time, while freeindex records the slot just after the last allocation. The current allocation position in the span is therefore freeindex plus the position of the free bit found in allocCache.
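The interplay of freeindex and allocCache is easiest to see on a toy span. The span type below is a hypothetical, trimmed-down mspan; the method follows the structure of the runtime's nextFreeFast quoted above, using math/bits.TrailingZeros64 in place of sys.Ctz64 and returning an object index instead of an address.

```go
package main

import (
	"fmt"
	"math/bits"
)

// span is a hypothetical, trimmed-down mspan. allocBits has one bit per
// object (1 = allocated); allocCache holds the complement of the 64 bits
// starting at freeindex, so in allocCache a 1 bit means "free".
type span struct {
	allocBits  []uint64
	allocCache uint64
	freeindex  uintptr
	nelems     uintptr
	allocCount uintptr
}

// newSpan builds a span whose cache window starts at object 0.
func newSpan(nelems uintptr, allocBits []uint64) *span {
	return &span{allocBits: allocBits, allocCache: ^allocBits[0], nelems: nelems}
}

// nextFreeFast returns the index of the next free object and true, or false
// when the fast path cannot answer.
func (s *span) nextFreeFast() (uintptr, bool) {
	theBit := bits.TrailingZeros64(s.allocCache) // lowest free object in the window
	if theBit < 64 {
		result := s.freeindex + uintptr(theBit)
		if result < s.nelems {
			freeidx := result + 1
			if freeidx%64 == 0 && freeidx != s.nelems {
				return 0, false // window used up; the slow path refills the cache
			}
			s.allocCache >>= uint(theBit + 1) // shift out bits up to and including result
			s.freeindex = freeidx
			s.allocCount++
			return result, true
		}
	}
	return 0, false
}

func main() {
	// A 10-object span in which objects 0, 1 and 3 are already allocated.
	s := newSpan(10, []uint64{0b1011})
	for i := 0; i < 3; i++ {
		idx, ok := s.nextFreeFast()
		fmt.Println(idx, ok) // 2 true, then 4 true, then 5 true
	}
}
```

Each call shifts the consumed bits out of allocCache and moves freeindex past the returned object, so bit 0 of the cache always corresponds to object freeindex.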

The specific calculation method can be found in the nextFreeIndex method in mbitmap.go

```go
// nextFreeIndex returns the index of the next free object in s at
// or after s.freeindex.
// There are hardware instructions that can be used to make this
// faster if profiling warrants it.
func (s *mspan) nextFreeIndex() uintptr {
	sfreeindex := s.freeindex
	snelems := s.nelems
	if sfreeindex == snelems {
		return sfreeindex
	}
	if sfreeindex > snelems {
		throw("s.freeindex > s.nelems")
	}

	aCache := s.allocCache

	bitIndex := sys.Ctz64(aCache)
	for bitIndex == 64 {
		// Move index to start of next cached bits.
		sfreeindex = (sfreeindex + 64) &^ (64 - 1)
		if sfreeindex >= snelems {
			s.freeindex = snelems
			return snelems
		}
		whichByte := sfreeindex / 8
		// Refill s.allocCache with the next 64 alloc bits.
		s.refillAllocCache(whichByte)
		aCache = s.allocCache
		bitIndex = sys.Ctz64(aCache)
		// nothing available in cached bits
		// grab the next 8 bytes and try again.
	}
	result := sfreeindex + uintptr(bitIndex)
	if result >= snelems {
		s.freeindex = snelems
		return snelems
	}

	s.allocCache >>= uint(bitIndex + 1)
	sfreeindex = result + 1

	if sfreeindex%64 == 0 && sfreeindex != snelems {
		// We just incremented s.freeindex so it isn't 0.
		// As each 1 in s.allocCache was encountered and used for allocation
		// it was shifted away. At this point s.allocCache contains all 0s.
		// Refill s.allocCache so that it corresponds
		// to the bits at s.allocBits starting at s.freeindex.
		whichByte := sfreeindex / 8
		s.refillAllocCache(whichByte)
	}
	s.freeindex = sfreeindex
	return result
}
```
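The rolling window of allocCache over allocBits can be simulated outside the runtime. The sketch below reproduces the control flow of nextFreeIndex on a hypothetical, simplified `toySpan` (not the runtime's mspan): `refillAllocCache` here takes an object index and loads a whole `uint64` word, whereas the runtime passes a byte offset. As in the runtime, allocation only advances freeindex; allocBits themselves are updated by the sweeper, which this sketch leaves out.

```go
package main

import (
	"fmt"
	"math/bits"
)

// toySpan is a hypothetical, simplified stand-in for runtime.mspan.
type toySpan struct {
	allocBits  []uint64 // one bit per object: 1 = allocated
	allocCache uint64   // complement of the current 64 allocBits: 1 = free
	freeindex  uintptr  // the search for the next free object starts here
	nelems     uintptr  // number of objects in the span
}

// refillAllocCache loads the 64 alloc bits covering object idx (a multiple
// of 64) and inverts them so that free objects become 1 bits.
func (s *toySpan) refillAllocCache(idx uintptr) {
	s.allocCache = ^s.allocBits[idx/64]
}

// nextFreeIndex follows the same steps as the runtime version shown above.
func (s *toySpan) nextFreeIndex() uintptr {
	sfreeindex := s.freeindex
	if sfreeindex == s.nelems {
		return sfreeindex
	}
	bitIndex := uintptr(bits.TrailingZeros64(s.allocCache))
	for bitIndex == 64 {
		// Nothing free in the cached bits: jump to the next 64-object boundary.
		sfreeindex = (sfreeindex + 64) &^ (64 - 1)
		if sfreeindex >= s.nelems {
			s.freeindex = s.nelems
			return s.nelems
		}
		s.refillAllocCache(sfreeindex)
		bitIndex = uintptr(bits.TrailingZeros64(s.allocCache))
	}
	result := sfreeindex + bitIndex
	if result >= s.nelems {
		s.freeindex = s.nelems
		return s.nelems
	}
	s.allocCache >>= bitIndex + 1 // drop the bits that were just consumed
	sfreeindex = result + 1
	if sfreeindex%64 == 0 && sfreeindex != s.nelems {
		s.refillAllocCache(sfreeindex)
	}
	s.freeindex = sfreeindex
	return result
}

// newDemoSpan builds a 130-object span in which only objects 5, 100,
// 128 and 129 are free.
func newDemoSpan() *toySpan {
	s := &toySpan{
		allocBits: []uint64{
			^uint64(0) &^ (1 << 5),          // objects 0..63: only 5 is free
			^uint64(0) &^ (1 << (100 - 64)), // objects 64..127: only 100 is free
			0,                               // objects 128..129 are free
		},
		nelems: 130,
	}
	s.refillAllocCache(0)
	return s
}

func main() {
	s := newDemoSpan()
	for {
		idx := s.nextFreeIndex()
		if idx == s.nelems {
			fmt.Println("span is full")
			break
		}
		fmt.Println("allocated object", idx)
	}
}
```

Note how the second call finds object 100 only after the cache is refilled at the 64-object boundary, which is exactly the `for bitIndex == 64` loop in the runtime code.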

Back in the nextFree function:

```go
func (c *mcache) nextFree(spc spanClass) (v gclinkptr, s *mspan, shouldhelpgc bool) {
	s = c.alloc[spc]
	shouldhelpgc = false
	freeIndex := s.nextFreeIndex() // get the index of the next free object
	if freeIndex == s.nelems {
		// The current span has no free slot: call refill to hand it back to
		// mcentral's full list and take a span with free space from
		// mcentral's partial list onto the mcache.
		// The span is full.
		if uintptr(s.allocCount) != s.nelems {
			println("runtime: s.allocCount=", s.allocCount, "s.nelems=", s.nelems)
			throw("s.allocCount != s.nelems && freeIndex == s.nelems")
		}
		c.refill(spc)
		shouldhelpgc = true
		s = c.alloc[spc]
		freeIndex = s.nextFreeIndex() // recompute freeIndex in the newly obtained span
	}

	if freeIndex >= s.nelems {
		throw("freeIndex is not valid")
	}

	v = gclinkptr(freeIndex*s.elemsize + s.base()) // span start address + offset of the free slot = a free object
	s.allocCount++
	if uintptr(s.allocCount) > s.nelems {
		println("s.allocCount=", s.allocCount, "s.nelems=", s.nelems)
		throw("s.allocCount > s.nelems")
	}
	return
}
```

Line 4 of the function (`freeIndex := s.nextFreeIndex()`) gets the index of the next free object. If freeIndex equals the number of elements in the span, the span for this size class is fully allocated. In that case mcache's refill method is called for the mcentral of the corresponding spanClass in mheap: it returns the span, which has no free space left, to mcentral's full list, and takes a span with free space from mcentral's partial list and puts it on the mcache.
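The fast path and the refill path of nextFree can be illustrated with a small model. Everything in this sketch is hypothetical and heavily simplified: `span`, `central` and `cache` are toy types, there is no GC (objects below freeindex stay allocated), and `cacheSpan` simply creates a new 4-object span when its partial list is empty, standing in for mcentral.grow.

```go
package main

import "fmt"

// span is a toy span: objects below freeindex are in use.
type span struct {
	id        int
	nelems    int
	freeindex int
}

// central is a toy mcentral with a partial and a full list.
type central struct {
	partial []*span // spans that still have free objects
	full    []*span // spans handed back with no free objects
	nextID  int
}

// cacheSpan hands out a span with free space, "growing" when partial is empty.
func (c *central) cacheSpan() *span {
	if n := len(c.partial); n > 0 {
		s := c.partial[n-1]
		c.partial = c.partial[:n-1]
		return s
	}
	c.nextID++
	return &span{id: c.nextID, nelems: 4}
}

// uncacheSpan takes a span back from the cache and files it by free space.
func (c *central) uncacheSpan(s *span) {
	if s.freeindex < s.nelems {
		c.partial = append(c.partial, s)
	} else {
		c.full = append(c.full, s)
	}
}

// cache is a toy mcache holding one span for a single size class.
type cache struct {
	cur     *span
	central *central
}

// nextFree mirrors the control flow of mcache.nextFree: use the cached span,
// and if it is full, refill from central and report that the GC should be helped.
func (c *cache) nextFree() (spanID, index int, shouldHelpGC bool) {
	if c.cur == nil || c.cur.freeindex == c.cur.nelems {
		if c.cur != nil {
			c.central.uncacheSpan(c.cur) // like refill: hand the full span back
		}
		c.cur = c.central.cacheSpan()
		shouldHelpGC = true
	}
	index = c.cur.freeindex
	c.cur.freeindex++
	return c.cur.id, index, shouldHelpGC
}

func main() {
	c := &cache{central: &central{}}
	for i := 0; i < 6; i++ {
		id, idx, help := c.nextFree()
		fmt.Printf("span %d, object %d, refilled: %v\n", id, idx, help)
	}
	fmt.Println("full spans handed back to central:", len(c.central.full))
}
```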

The refill method is shown below. If the mcache does not yet hold a span for this size class (it still points at the placeholder emptymspan), a span is fetched directly from mcentral. Otherwise the current span has no space left, so it is first handed back to mcentral, where it waits for the garbage collector to sweep it before it can be used again.

```go
func (c *mcache) refill(spc spanClass) {
	// Return the current cached span to the central lists.
	s := c.alloc[spc]
	// ...
	if s != &emptymspan {
		// Mark this span as no longer cached.
		if s.sweepgen != mheap_.sweepgen+3 {
			throw("bad sweepgen in refill")
		}
		mheap_.central[spc].mcentral.uncacheSpan(s)
	}

	// Get a new cached span from the central lists.
	s = mheap_.central[spc].mcentral.cacheSpan()
	// ...
	c.alloc[spc] = s
}
```

Inside cacheSpan, a free span is looked for in the following order:

- First, try to pop a span from the part of the partial list that has already been swept by the GC (partialSwept).

- If that yields nothing, try the part of the partial list that has not been swept yet (partialUnswept): acquire a span, sweep it, and use it.

- If the partial list yields nothing, try the unswept part of the full list (fullUnswept): sweep each span; if sweeping freed some objects, use it, otherwise push it onto the swept part of the full list (fullSwept), since it still has no free space.

- If still no span has been found, mcentral has to grow and requests a new span from mheap.

Note that the two loops share a budget of about 100 spans (spanBudget := 100). Sweeping takes time, so once the budget is used up it is cheaper to stop searching and get a fresh span from mheap.

Once a span with free space is found, the code jumps to the havespan label, which refills the allocCache bitmap cache from freeindex and returns the span.
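The search order and the shared budget can be sketched with toy lists. This is a simplified model, not the runtime code: the `span`, `central` and `pop` names are hypothetical, `tryAcquire` is left out (the sketch assumes we always win ownership of a span), and "sweeping" just moves a span's garbage count into its free count.

```go
package main

import "fmt"

// span is a toy span: garbage objects become free when the span is swept.
type span struct {
	name    string
	free    int // objects known to be free right now
	garbage int // objects that sweeping would free
}

func (s *span) sweep() { s.free += s.garbage; s.garbage = 0 }

// central is a toy mcentral with the four lists used by cacheSpan.
type central struct {
	partialSwept, partialUnswept []*span
	fullSwept, fullUnswept       []*span
}

func pop(list *[]*span) *span {
	n := len(*list)
	if n == 0 {
		return nil
	}
	s := (*list)[n-1]
	*list = (*list)[:n-1]
	return s
}

// cacheSpan follows the search order of mcentral.cacheSpan with a shared budget.
func (c *central) cacheSpan(budget int) (*span, string) {
	if s := pop(&c.partialSwept); s != nil {
		return s, "partial swept"
	}
	for ; budget >= 0; budget-- {
		s := pop(&c.partialUnswept)
		if s == nil {
			break
		}
		s.sweep()
		return s, "partial unswept"
	}
	for ; budget >= 0; budget-- {
		s := pop(&c.fullUnswept)
		if s == nil {
			break
		}
		s.sweep()
		if s.free > 0 {
			return s, "full unswept"
		}
		c.fullSwept = append(c.fullSwept, s) // still full: park it on the swept list
	}
	return &span{name: "fresh", free: 8}, "grow from mheap"
}

func main() {
	c := &central{
		partialSwept: []*span{{name: "P", free: 3}},
		fullUnswept:  []*span{{name: "A", garbage: 2}, {name: "B"}, {name: "C"}},
	}
	for i := 0; i < 3; i++ {
		s, how := c.cacheSpan(100)
		fmt.Printf("got span %s (%d free) via %s\n", s.name, s.free, how)
	}
}
```

With a small budget the sketch gives up on the full list early and grows instead, which is the trade-off the spanBudget limit makes.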

```go
// Allocate a span to use in an mcache.
func (c *mcentral) cacheSpan() *mspan {
	// Deduct credit for this span allocation and sweep if necessary.
	spanBytes := uintptr(class_to_allocnpages[c.spanclass.sizeclass()]) * _PageSize
	deductSweepCredit(spanBytes, 0)

	traceDone := false
	if trace.enabled {
		traceGCSweepStart()
	}

	spanBudget := 100

	var s *mspan
	sl := newSweepLocker()
	sg := sl.sweepGen

	// Try partial swept spans first.
	if s = c.partialSwept(sg).pop(); s != nil {
		goto havespan
	}

	// Now try partial unswept spans.
	for ; spanBudget >= 0; spanBudget-- {
		s = c.partialUnswept(sg).pop()
		if s == nil {
			break
		}
		if s, ok := sl.tryAcquire(s); ok {
			// We got ownership of the span, so let's sweep it and use it.
			s.sweep(true)
			sl.dispose()
			goto havespan
		}
	}
	// Now try full unswept spans, sweeping them and putting them into the
	// right list if we fail to get a span.
	for ; spanBudget >= 0; spanBudget-- {
		s = c.fullUnswept(sg).pop()
		if s == nil {
			break
		}
		if s, ok := sl.tryAcquire(s); ok {
			// We got ownership of the span, so let's sweep it.
			s.sweep(true)
			// Check if there's any free space.
			freeIndex := s.nextFreeIndex()
			if freeIndex != s.nelems {
				s.freeindex = freeIndex
				sl.dispose()
				goto havespan
			}
			// Add it to the swept list, because sweeping didn't give us any free space.
			c.fullSwept(sg).push(s.mspan)
		}
		// See comment for partial unswept spans.
	}
	sl.dispose()
	if trace.enabled {
		traceGCSweepDone()
		traceDone = true
	}

	// We failed to get a span from the mcentral so get one from mheap.
	s = c.grow()
	if s == nil {
		return nil
	}

	// At this point s is a span that should have free slots.
havespan:
	if trace.enabled && !traceDone {
		traceGCSweepDone()
	}
	n := int(s.nelems) - int(s.allocCount)
	if n == 0 || s.freeindex == s.nelems || uintptr(s.allocCount) == s.nelems {
		throw("span has no free objects")
	}
	freeByteBase := s.freeindex &^ (64 - 1)
	whichByte := freeByteBase / 8
	// Init alloc bits cache.
	s.refillAllocCache(whichByte)

	// Adjust the allocCache so that s.freeindex corresponds to the low bit in
	// s.allocCache.
	s.allocCache >>= s.freeindex % 64

	return s
}
```

When the mcache's span for a size class has no free object left, the span is handed back to mcentral through uncacheSpan. If the span is stale (it was cached before the current sweep began), mcentral sweeps it itself; sweeping either releases it to the heap or puts it on the right list. Otherwise mcentral looks at how many objects are still free: if the span has remaining capacity it goes on the partial swept list, and if it has none it goes on the full swept list.

```go
func (c *mcentral) uncacheSpan(s *mspan) {
	if s.allocCount == 0 {
		throw("uncaching span but s.allocCount == 0")
	}

	sg := mheap_.sweepgen
	stale := s.sweepgen == sg+1

	// Fix up sweepgen.
	if stale {
		// Span was cached before sweep began. It's our
		// responsibility to sweep it.
		//
		// Set sweepgen to indicate it's not cached but needs
		// sweeping and can't be allocated from. sweep will
		// set s.sweepgen to indicate s is swept.
		atomic.Store(&s.sweepgen, sg-1)
	} else {
		// Indicate that s is no longer cached.
		atomic.Store(&s.sweepgen, sg)
	}

	// Put the span in the appropriate place.
	if stale {
		// It's stale, so just sweep it. Sweeping will put it on
		// the right list.
		//
		// We don't use a sweepLocker here. Stale cached spans
		// aren't in the global sweep lists, so mark termination
		// itself holds up sweep completion until all mcaches
		// have been swept.
		ss := sweepLocked{s}
		ss.sweep(false)
	} else {
		if int(s.nelems)-int(s.allocCount) > 0 {
			// Put it back on the partial swept list.
			c.partialSwept(sg).push(s)
		} else {
			// There's no free space and it's not stale, so put it on the
			// full swept list.
			c.fullSwept(sg).push(s)
		}
	}
}
```

Both partial and full in mcentral are arrays of two spanSets. This is a double-buffering strategy: in each GC cycle one set holds spans that have already been swept and the other holds spans that still need sweeping, and the two swap roles when the next cycle begins (the index is derived from sweepgen). Because sweeping runs concurrently with user goroutines, allocation can always take spans from one set while the other is being swept, instead of waiting serially for garbage collection to finish. It trades space for time to improve responsiveness.
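The role swap can be shown in a few lines. The indexing (`sweepgen/2%2` for the swept set, `1-sweepgen/2%2` for the unswept set) is modeled on the runtime's partialSwept/partialUnswept helpers in recent mcentral.go; the slice-based `spanSet` and the `uncache` method are hypothetical simplifications (uncache only covers the non-stale branch of uncacheSpan).

```go
package main

import "fmt"

// spanSet is a hypothetical stand-in for the runtime's lock-free spanSet.
type spanSet []string

type central struct {
	partial [2]spanSet
	full    [2]spanSet
}

// sweepgen grows by 2 per GC cycle, so sweepgen/2%2 flips between 0 and 1
// and the two slots trade the "swept" and "unswept" roles every cycle.
func (c *central) partialSwept(sg uint32) *spanSet   { return &c.partial[sg/2%2] }
func (c *central) partialUnswept(sg uint32) *spanSet { return &c.partial[1-sg/2%2] }
func (c *central) fullSwept(sg uint32) *spanSet      { return &c.full[sg/2%2] }
func (c *central) fullUnswept(sg uint32) *spanSet    { return &c.full[1-sg/2%2] }

// uncache files a span that is not stale: spans with free slots go to the
// partial swept set, full spans to the full swept set.
func (c *central) uncache(sg uint32, name string, free int) {
	if free > 0 {
		*c.partialSwept(sg) = append(*c.partialSwept(sg), name)
	} else {
		*c.fullSwept(sg) = append(*c.fullSwept(sg), name)
	}
}

func main() {
	var c central
	sg := uint32(4)
	c.uncache(sg, "s1", 3)
	c.uncache(sg, "s2", 0)
	fmt.Println("sg=4: partial swept =", *c.partialSwept(sg), "full swept =", *c.fullSwept(sg))

	sg += 2 // a new GC cycle: what was swept last cycle now counts as unswept
	fmt.Println("sg=6: partial unswept =", *c.partialUnswept(sg), "full unswept =", *c.fullUnswept(sg))
	fmt.Println("      partial swept   =", *c.partialSwept(sg))
}
```

No span is copied when a cycle starts; incrementing sweepgen by 2 is enough to relabel both sets.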

```go
type mcentral struct {
	spanclass spanClass
	partial   [2]spanSet // list of spans with a free object
	full      [2]spanSet // list of spans with no free objects
}
```

The grow method in mcentral involves the memory allocation and management of mheap, which is described below.

mheap

mheap is similar to PageHeap in TCMalloc: it represents the heap space held by the Go program, and the spans managed by mcentral also come from here. When mcentral has no free span, it asks mheap; if mheap has no resources either, it requests memory from the operating system. The operating system hands out memory in pages (typically 4 KB), and the runtime organizes the memory it obtains into several levels: page (8 KB), span (a multiple of pages), chunk (512 pages, i.e. 4 MB) and heapArena (64 MB on 64-bit Linux).
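The sizes of these levels relate by simple arithmetic. The constants below are assumptions for 64-bit Linux in recent Go releases (compare `pageSize` and `heapArenaBytes` in runtime/malloc.go, `pallocChunkPages` in runtime/mpagealloc.go and `pageCachePages` in runtime/mpagecache.go); other platforms, such as Windows or 32-bit targets, use different arena sizes.

```go
package main

import "fmt"

// Assumed values for 64-bit Linux; other platforms differ.
const (
	pageSize         = 8 << 10  // runtime page: 8 KB
	pageCachePages   = 64       // pages covered by one per-P pageCache (one uint64 bitmap)
	pallocChunkPages = 512      // pages per pageAlloc chunk
	heapArenaBytes   = 64 << 20 // one heapArena: 64 MB
)

const (
	pageCacheBytes = pageCachePages * pageSize
	chunkBytes     = pallocChunkPages * pageSize
	pagesPerArena  = heapArenaBytes / pageSize
	chunksPerArena = heapArenaBytes / chunkBytes
)

func main() {
	fmt.Printf("pageCache: %d pages = %d KB\n", pageCachePages, pageCacheBytes>>10)
	fmt.Printf("chunk:     %d pages = %d MB\n", pallocChunkPages, chunkBytes>>20)
	fmt.Printf("heapArena: %d pages = %d chunks\n", pagesPerArena, chunksPerArena)
}
```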

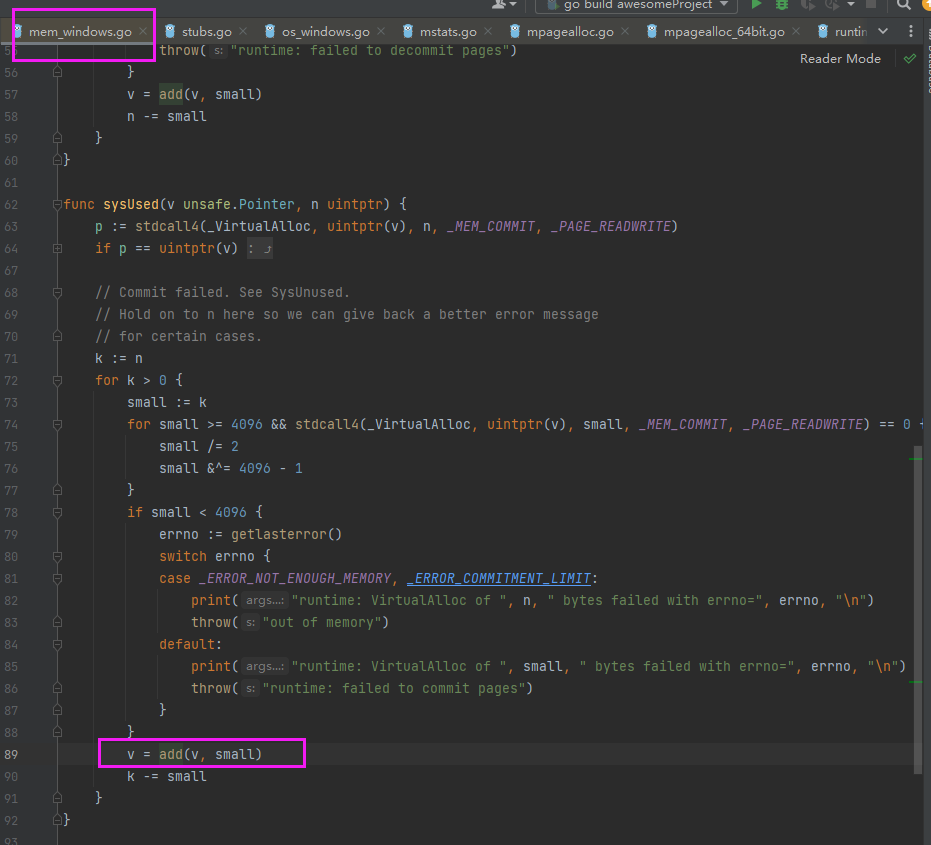

Bitmap cache for pageCache

The grow method in mcentral will call the alloc method of mheap

```go
// grow allocates a new empty span from the heap and initializes it for c's size class.
func (c *mcentral) grow() *mspan {
	npages := uintptr(class_to_allocnpages[c.spanclass.sizeclass()])
	size := uintptr(class_to_size[c.spanclass.sizeclass()])

	s, _ := mheap_.alloc(npages, c.spanclass, true)
	if s == nil {
		return nil
	}

	// Use division by multiplication and shifts to quickly compute:
	// n := (npages << _PageShift) / size
	n := s.divideByElemSize(npages << _PageShift)
	s.limit = s.base() + size*n
	heapBitsForAddr(s.base()).initSpan(s)
	return s
}
```

grow computes how many objects fit into the new span (n) and sets s.limit accordingly; the span itself comes from mheap.alloc, which in turn calls the allocSpan method internally.
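The comment in grow mentions computing `(npages << _PageShift) / size` by "division by multiplication and shifts". A minimal sketch of that trick follows, assuming the multiplier is computed as `^uint32(0)/size + 1`, which is modeled on how recent runtimes build the per-class divide magic used by divideByElemSize; the function names here are hypothetical. For the small offsets and sizes inside one span, one multiply and one 32-bit shift give exactly the same result as a hardware division.

```go
package main

import "fmt"

// divMagic returns a multiplier m such that (n*m)>>32 == n/size
// for the small n and size values that occur inside a span.
func divMagic(size uint32) uint32 {
	return ^uint32(0)/size + 1
}

// divideByElemSize computes n/size with one multiply and one shift.
func divideByElemSize(n uintptr, magic uint32) uintptr {
	return uintptr((uint64(n) * uint64(magic)) >> 32)
}

func main() {
	const pageShift = 13 // 8 KB pages
	npages := uintptr(1)
	size := uint32(24)
	n := divideByElemSize(npages<<pageShift, divMagic(size))
	fmt.Printf("a %d-page span holds %d objects of %d bytes, tail waste %d bytes\n",
		npages, n, size, (npages<<pageShift)-n*uintptr(size))
}
```

The multiplier is computed once per size class, so the per-span cost is a multiply instead of a division.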

```go
func (h *mheap) alloc(npages uintptr, spanclass spanClass, needzero bool) (*mspan, bool) {
	// Don't do any operations that lock the heap on the G stack.
	// It might trigger stack growth, and the stack growth code needs
	// to be able to allocate heap.
	var s *mspan
	systemstack(func() {
		// To prevent excessive heap growth, before allocating n pages
		// we need to sweep and reclaim at least n pages.
		if !isSweepDone() {
			h.reclaim(npages)
		}
		s = h.allocSpan(npages, spanAllocHeap, spanclass)
	})

	if s == nil {
		return nil, false
	}
	isZeroed := s.needzero == 0
	if needzero && !isZeroed {
		memclrNoHeapPointers(unsafe.Pointer(s.base()), s.npages<<_PageShift)
		isZeroed = true
	}
	s.needzero = 0
	return s, isZeroed
}
```

In the allocSpan method, if the request is small (fewer than pageCachePages/4, i.e. 16 pages) and no physical page alignment is required, the pages are first taken from the page cache (pcache) of the current logical processor P. Each P owns its pageCache, so this path needs no lock, which speeds up allocation (again trading space for time).
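A per-P page cache is essentially a base address plus a 64-bit free bitmap, which is why it can be used without a lock. The sketch below is a simplified, hypothetical model: it ignores the scav bitmap that the runtime's pageCache.alloc also maintains, and it finds multi-page runs with a plain loop. Only the size threshold `npages < pageCachePages/4` is taken from the allocSpan code shown here.

```go
package main

import (
	"fmt"
	"math/bits"
)

const (
	pageSize       = 8 << 10 // assumed runtime page size (8 KB)
	pageCachePages = 64      // one uint64 bitmap
)

// pageCache is a simplified per-P cache: bit i of cache is set when
// page i (starting at base) is free.
type pageCache struct {
	base  uintptr
	cache uint64
}

// usePageCache mirrors the size check in allocSpan.
func usePageCache(npages uint) bool { return npages < pageCachePages/4 }

// alloc takes npages contiguous free pages and returns their address,
// or 0 if this cache has no such run.
func (c *pageCache) alloc(npages uint) uintptr {
	if npages == 0 || npages > 64 {
		return 0
	}
	if npages == 1 {
		if c.cache == 0 {
			return 0 // no free page left
		}
		i := uint(bits.TrailingZeros64(c.cache)) // lowest free page
		c.cache &^= uint64(1) << i
		return c.base + uintptr(i)*pageSize
	}
	mask := uint64(1)<<npages - 1 // npages consecutive 1 bits
	for i := uint(0); i+npages <= 64; i++ {
		if (c.cache>>i)&mask == mask {
			c.cache &^= mask << i // mark the run as in use
			return c.base + uintptr(i)*pageSize
		}
	}
	return 0
}

func main() {
	c := &pageCache{base: 0x100000, cache: 0xED} // pages 0, 2, 3, 5, 6, 7 are free
	for _, n := range []uint{1, 3, 2, 1} {
		fmt.Printf("alloc(%d) -> %#x (use page cache: %v)\n", n, c.alloc(n), usePageCache(n))
	}
}
```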

In the code below, you can see that allocSpan first tries to get the pages from the pcache of the logical processor P, taking the heap lock only to refill the cache when it is empty.

```go
func (h *mheap) allocSpan(npages uintptr, typ spanAllocType, spanclass spanClass) (s *mspan) {
	// Function-global state.
	gp := getg()
	base, scav := uintptr(0), uintptr(0)

	// On some platforms we need to provide physical page aligned stack
	// allocations. Where the page size is less than the physical page
	// size, we already manage to do this by default.
	needPhysPageAlign := physPageAlignedStacks && typ == spanAllocStack && pageSize < physPageSize

	// If the allocation is small enough, try the page cache!
	// The page cache does not support aligned allocations, so we cannot use
	// it if we need to provide a physical page aligned stack allocation.
	pp := gp.m.p.ptr()
	if !needPhysPageAlign && pp != nil && npages < pageCachePages/4 {
		c := &pp.pcache

		// If the cache is empty, refill it.
		if c.empty() {
			lock(&h.lock)
			*c = h.pages.allocToCache()
			unlock(&h.lock)
		}

		// Try to allocate from the cache.
		base, scav = c.alloc(npages)
		if base != 0 {
			s = h.tryAllocMSpan()
			if s != nil {
				goto HaveSpan
			}
			// We have a base but no mspan, so we need
			// to lock the heap.
		}
	}
	// ...
```

The structure of pageCache is as follows:

The code is in runtime/mpagecache.go

```go
// Number of pages a pageCache covers: 8 bits × 8 bytes (one uint64) = 64 pages, 512 KB in total
const pageCachePages = 8 * unsafe.Sizeof(pageCache{}.cache)

// pageCache represents a per-p cache of pages the allocator can
// allocate from without a lock. More specifically, it represents
// a pageCachePages*pageSize chunk of memory with 0 or more free
// pages in it.
type pageCache struct {
	base uintptr // base address of this virtual memory region
	// cache and scav are both bitmaps: cache marks which pages are still free,
	// and scav marks pages that have been scavenged (returned to the OS).
	cache uint64 // 64-bit bitmap representing free pages (1 means free)
	scav  uint64 // 64-bit bitmap representing scavenged pages (1 means scavenged)
}
```

Let's go back to the allocSpan method of mheap.

radix tree

If the pageCache cannot be used or has no free space, the global mheap lock is taken to obtain memory:

```go
	// For one reason or another, we couldn't get the
	// whole job done without the heap lock.
	lock(&h.lock)
	// ...
	if base == 0 {
		// Try to acquire a base address.
		base, scav = h.pages.alloc(npages)
		if base == 0 {
			if !h.grow(npages) {
				unlock(&h.lock)
				return nil
			}
			base, scav = h.pages.alloc(npages)
			if base == 0 {
				throw("grew heap, but no adequate free space found")
			}
		}
	}
	// ...
	unlock(&h.lock)
```

Here the pages are first taken from mheap's pages field, an instance of pageAlloc, which is organized as a radix tree. The tree has at most 5 levels, and each node is a pallocSum: a packed summary of the free pages in the region it covers (free pages at the start, the longest free run, and free pages at the end). Every non-leaf node summarizes 8 consecutive child nodes, so the higher a node sits, the more memory it describes; on 64-bit platforms each root-level node covers 16 GB of address space. These summaries also speed up the search, because whole regions whose longest free run is too short can be skipped.
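How a parent node can be built from its children is easiest to see with unpacked summaries. This is a hypothetical sketch: the runtime packs the same three values into one uint64 (pallocSum) and merges 8 children per level, while the example below uses 4 small children for brevity.

```go
package main

import "fmt"

// summary is an unpacked stand-in for pallocSum.
type summary struct {
	start uint // free pages at the beginning of the region
	max   uint // longest run of free pages anywhere in the region
	end   uint // free pages at the end of the region
}

// merge builds a parent summary from consecutive child summaries,
// each covering childPages pages.
func merge(children []summary, childPages uint) summary {
	var p summary
	run := uint(0)      // free run that may continue into the next child
	prefixOpen := true // true while every child so far was completely free
	for _, c := range children {
		if c.max > p.max {
			p.max = c.max
		}
		if c.start == childPages { // this child is entirely free
			run += childPages
			if prefixOpen {
				p.start += childPages
			}
		} else {
			run += c.start // the current run ends inside this child
			if prefixOpen {
				p.start += c.start
				prefixOpen = false
			}
			if run > p.max {
				p.max = run
			}
			run = c.end // a new run starts at the tail of this child
		}
		if run > p.max {
			p.max = run
		}
	}
	p.end = run
	return p
}

func main() {
	// 4 children of 4 pages: [U U F F] [F F F F] [F U U U] [U U U U]
	children := []summary{{0, 2, 2}, {4, 4, 4}, {1, 1, 0}, {0, 0, 0}}
	fmt.Printf("%+v\n", merge(children, 4))
}
```

The longest free run (7 pages) crosses three children, which only the start and end fields make visible to the parent.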

When mheap itself has no space, it requests memory from the operating system. In mheap's grow function this goes through pageAlloc's grow and sysGrow methods, which internally call the platform-specific sysUsed function to obtain memory from the operating system.

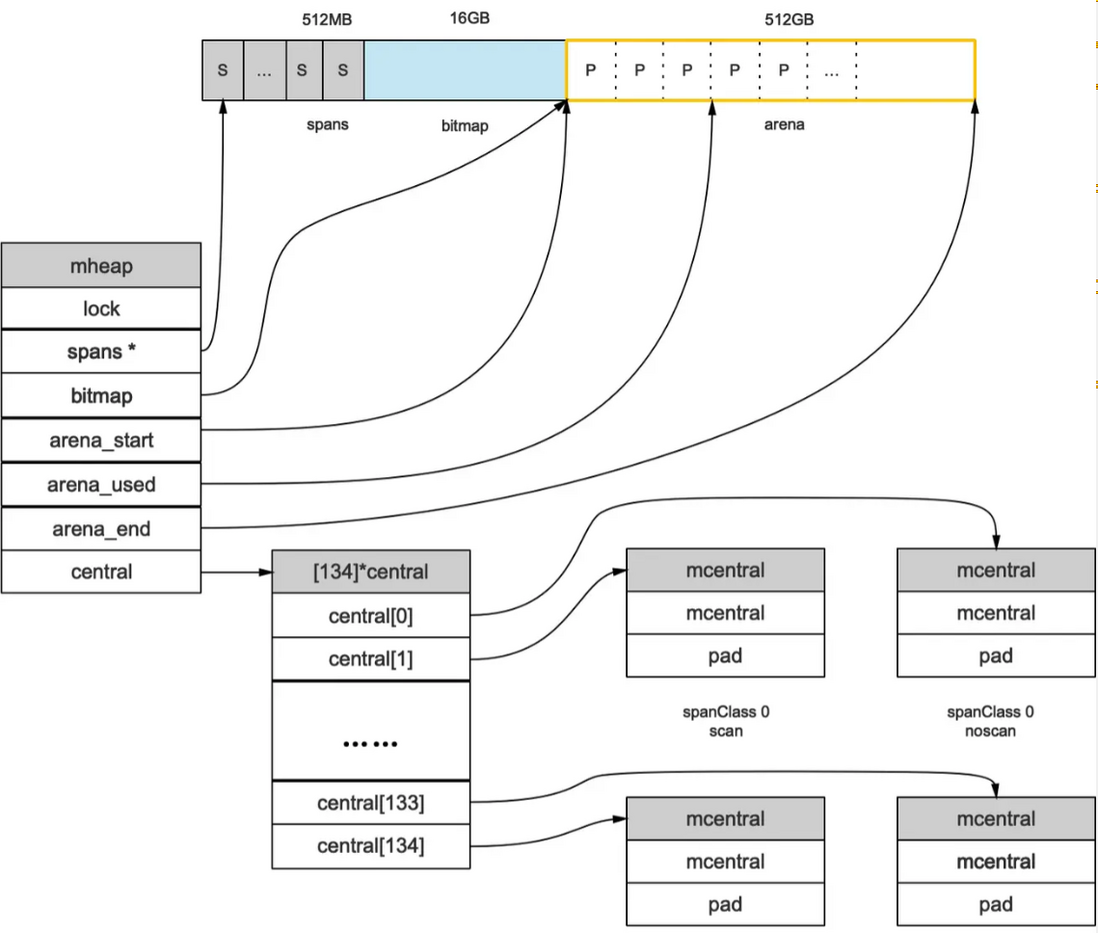

Another point to note in mheap is the management of mcentral

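```go
// path: /usr/local/go/src/runtime/mheap.go
type mheap struct {
	lock mutex
	// spans: points to the spans area, which maps pages to their mspan
	spans []*mspan
	// start address of the bitmap; the bitmap grows from high addresses to low addresses
	bitmap uintptr
	// start address of the arena
	arena_start uintptr
	// address up to which the arena has been used
	arena_used uintptr
	// end address of the arena
	arena_end uintptr

	central [67*2]struct {
		mcentral mcentral
		pad      [sys.CacheLineSize - unsafe.Sizeof(mcentral{})%sys.CacheLineSize]byte
	}
}
```

First, note sys.CacheLineSize here: each element of central is padded to a multiple of the cache line size so that different mcentrals never share a cache line, which prevents the performance problems caused by false sharing in the CPU cache (see my article on false sharing: https://www.cnblogs.com/dojo-lzz/p/16183006.html). Note also that this struct comes from an older Go version: later versions replaced the arena_start, arena_used and arena_end fields with per-arena metadata (heapArena), while keeping the padded central array.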

Second, the central array here holds 67 × 2 = 134 mcentrals: each size class gets two of them, one for objects that contain pointers (scan) and one for objects that do not (noscan). Spans of noscan objects never need to be scanned during marking, which makes garbage collection more efficient and improves overall performance.
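The scan/noscan split is encoded in the span class itself: the size class is shifted left by one and the lowest bit records noscan. The sketch below is modeled on `makeSpanClass`, `sizeclass()` and `noscan()` in runtime/mheap.go; the constant 67 is taken from the struct quoted above and is an assumption, since the number of size classes differs between Go versions.

```go
package main

import "fmt"

// spanClass packs a size class and a noscan flag into one byte.
type spanClass uint8

func makeSpanClass(sizeclass uint8, noscan bool) spanClass {
	sc := spanClass(sizeclass << 1)
	if noscan {
		sc |= 1
	}
	return sc
}

func (sc spanClass) sizeclass() uint8 { return uint8(sc >> 1) }
func (sc spanClass) noscan() bool     { return sc&1 != 0 }

func main() {
	const numSizeClasses = 67 // as in the mheap struct quoted above (version-dependent)
	fmt.Println("span classes:", numSizeClasses*2)
	for _, sc := range []spanClass{makeSpanClass(3, false), makeSpanClass(3, true)} {
		fmt.Printf("spanClass %d -> sizeclass %d, noscan %v\n", sc, sc.sizeclass(), sc.noscan())
	}
}
```

Because the two variants of a size class are adjacent span classes, indexing `central` by span class directly yields the right mcentral.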

Use this picture to see it more clearly

In summary, Go achieves fine-grained memory management and good performance through detailed object classification, aggressive multi-level caching with lock-free per-P caches, and precise bitmap management.

This article took about a month to write. Reading the source code shows that much of the existing material on Go memory allocation is either outdated or repeats what others have said; independent thinking and hands-on practice are what best reveal the essence.

Reference article

- Graphical memory allocation in Go language: https://juejin.cn/post/6844903795739082760

- Memory allocator: https://draveness.me/golang/docs/part3-runtime/ch07-memory/golang-memory-allocator/

- Stack space management: https://draveness.me/golang/docs/part3-runtime/ch07-memory/golang-stack-management/

- Technical dry goods | Understanding Go memory allocation: https://cloud.tencent.com/developer/article/1861429

- A simple memory allocator: https://github.com/KatePang13/Note/blob/main/%E4%B8%80%E4%B8%AA%E7%AE%80%E5%8D%95%E7%9A%84%E5%86%85%E5%AD%98%E5%88%86%E9%85%8D%E5%99%A8.md

- Write your own memory allocator: https://soptq.me/2020/07/18/mem-allocator/

- Go memory allocation turned out to be this simple: https://segmentfault.com/a/1190000020338427


- Introduction to TCMalloc: https://blog.csdn.net/aaronjzhang/article/details/8696212

- TCMalloc decryption: https://wallenwang.com/2018/11/tcmalloc/

- Graphical TCMalloc: https://zhuanlan.zhihu.com/p/29216091


- Process and thread: https://baijiahao.baidu.com/s?id=1687308494061329777&wfr=spider&for=pc

- Memory Allocator: https://blog.csdn.net/weixin_30940783/article/details/97806139
