I recently prepared a technical talk. While putting the slides together, I came back to a speech-to-text feature I had built earlier, so I tidied it up to share it here.

It covers the whole pipeline, from technology selection through solution design to the actual implementation.

- Speech transcription flow chart

- How to record audio in a desktop browser

- How to send the voice message after recording

- Voice sending and real-time transcription

- Universal recording kit

- Summary

Speech transcription flow chart

How to record in PC browser

AudioContext,AudioNode是什么?

MediaDevice.getUserMedia()是什么?

为什么localhost能播放,预生产不能播放?

js中的数据类型TypedArray知多少?

js-audio-recorder源码分析

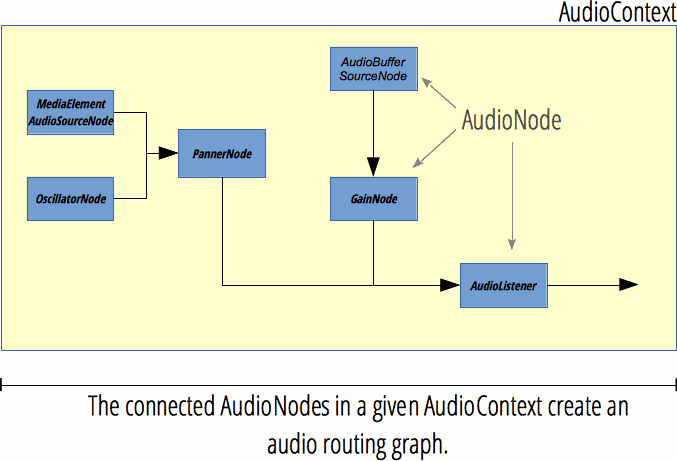

代码实现What is AudioContext?

The AudioContext interface represents an audio-processing graph built from linked audio modules, each represented by an AudioNode.

An audio context controls the creation of the nodes it contains and the execution of audio processing and decoding. Everything happens inside a context.

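- ArrayBuffer: the binary contents of the audio file

- decodeAudioData: decodes the ArrayBuffer into an AudioBuffer

- AudioBufferSourceNode: a source node that plays an AudioBuffer

- connect: connects the source node to the next node in the graph

- start: starts playback

- AudioContext.destination: the output device (speakers)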
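The pieces above fit together into a small playback pipeline. The sketch below is an illustration, not code from the project: `playArrayBuffer` is a hypothetical helper, and the context is passed in as a parameter (rather than created inside) so the function can be exercised outside a browser. It uses the promise form of `decodeAudioData`; some older browsers only support the callback form.

```javascript
// Decode an encoded audio file (ArrayBuffer) and play it once.
// `context` is expected to be an AudioContext.
async function playArrayBuffer(context, arrayBuffer) {
  // decodeAudioData turns the encoded bytes (WAV, MP3, ...) into an AudioBuffer
  const audioBuffer = await context.decodeAudioData(arrayBuffer);
  // An AudioBufferSourceNode plays an in-memory AudioBuffer
  const source = context.createBufferSource();
  source.buffer = audioBuffer;
  // Route the source straight to the speakers
  source.connect(context.destination);
  // Start playback immediately
  source.start();
  return source;
}

// Browser usage (assumes `response` comes from fetch() of an audio file):
// const ctx = new AudioContext();
// playArrayBuffer(ctx, await response.arrayBuffer());
```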

What is AudioNode?

- AudioNode is the base interface for audio-processing modules; it provides properties and methods such as context, numberOfInputs, channelCount, and connect()

- The AudioBufferSourceNode mentioned above, used to play an audio file, inherits connect() from AudioNode; its start() comes from AudioScheduledSourceNode, the intermediate interface it extends

- GainNode, used to set the volume, also inherits from AudioNode

- MediaStreamAudioSourceNode, used to take input from the microphone (a MediaStream), also inherits from AudioNode; MediaElementAudioSourceNode plays the same role for <audio> and <video> elements

- OscillatorNode, which generates a periodic waveform, inherits from AudioNode indirectly (through AudioScheduledSourceNode); filtering is done by BiquadFilterNode

- PannerNode, which represents the position and behavior of an audio source in space, also inherits from AudioNode

- The AudioListener interface (not itself an AudioNode) represents the position and orientation of the single listener in the audio scene and is used for audio spatialization

- These nodes can be chained layer by layer, much like the decorator pattern: to adjust the volume, connect an AudioBufferSourceNode to a GainNode first, then connect the GainNode to the AudioContext.destination speakers
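A minimal sketch of the volume chain described in the last bullet, assuming `context` is an `AudioContext` and `audioBuffer` an already decoded `AudioBuffer`; `playWithVolume` is a hypothetical helper name. `connect()` returns the node it connects to, which is what allows the chained call.

```javascript
// AudioBufferSourceNode -> GainNode -> destination (speakers)
function playWithVolume(context, audioBuffer, volume) {
  const source = context.createBufferSource();
  source.buffer = audioBuffer;

  const gainNode = context.createGain();
  // gain is an AudioParam: 1 leaves the signal unchanged, 0.5 halves the amplitude, 0 mutes it
  gainNode.gain.value = volume;

  // connect() returns its argument, so the chain reads left to right
  source.connect(gainNode).connect(context.destination);
  source.start();
  return { source, gainNode };
}
```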

First look: what is MediaDevices.getUserMedia()?

Key terms: MediaStream, MediaStreamTrack, audio track

Demo: https://github.com/FrankKai/nodejs-rookie/issues/54

<button onclick="record()">Start recording</button>

<script>

function record () {

navigator.mediaDevices.getUserMedia({

audio: true

}).then(mediaStream => {

console.log(mediaStream);

}).catch(err => {

console.error(err);

});

}

</script>

- The MediaStream interface represents a stream of media content

- The MediaStreamTrack interface represents a single media track within a stream

- Here the tracks are audio tracks (MediaStreamTrack objects whose kind is "audio")

- The browser asks the user whether to allow the page to access the microphone. If the user denies it, the promise rejects with DOMException: Permission denied (a NotAllowedError); if the user allows it, the promise resolves to a MediaStream made of audio tracks, which carry detailed information about each track

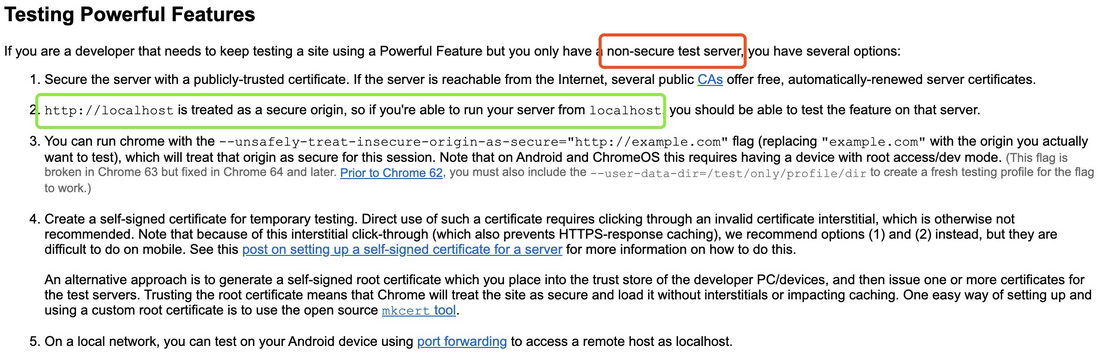

Why does it work on localhost but not in pre-production?

Out of ideas, I asked a question on Stack Overflow:

Why navigator.mediaDevice only works fine on localhost:9090?

Other users answered that it only works in an HTTPS environment.

That fits production, which is served over HTTPS and works. But my localhost is not HTTPS either, so what was the real reason?

I finally found the answer in Chromium's official change notes:

https://sites.google.com/a/chromium.org/dev/Home/chromium-security/deprecating-powerful-features-on-insecure-origins

Since Chrome 47, getUserMedia only accepts video and audio requests from secure origins, such as HTTPS pages and localhost. If the page's script is loaded from an insecure origin, navigator has no mediaDevices object, so the call throws an error.
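Because `navigator.mediaDevices` is simply missing on insecure origins, it can help to check for it before calling `getUserMedia`. The helpers below (`getMicrophoneSupport` and `requestMicrophone` are hypothetical names) are a sketch that assumes the browser's `window` object is passed in; `window.isSecureContext` is true for HTTPS pages and for localhost. The error name `NotAllowedError` is the one the Media Capture spec uses when access is denied.

```javascript
// Report whether microphone capture can work in this page.
// `win` is the browser's window object, passed in so the logic can be tested elsewhere.
function getMicrophoneSupport(win) {
  if (!win.isSecureContext) {
    // e.g. a plain-HTTP origin such as the pre-production site
    return { ok: false, reason: 'insecure-origin' };
  }
  const devices = win.navigator && win.navigator.mediaDevices;
  if (!devices || typeof devices.getUserMedia !== 'function') {
    return { ok: false, reason: 'unsupported' };
  }
  return { ok: true, reason: null };
}

// Ask for an audio-only MediaStream, turning the common failures into readable errors
async function requestMicrophone(win) {
  const support = getMicrophoneSupport(win);
  if (!support.ok) {
    throw new Error(`Microphone unavailable: ${support.reason}`);
  }
  try {
    return await win.navigator.mediaDevices.getUserMedia({ audio: true });
  } catch (err) {
    // NotAllowedError: the user or a browser policy denied access
    if (err && err.name === 'NotAllowedError') {
      throw new Error('Microphone permission denied');
    }
    throw err; // e.g. NotFoundError when no input device exists
  }
}

// Browser usage: requestMicrophone(window).then((stream) => console.log(stream.getAudioTracks()));
```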

The voice feature runs in pre-production over plain HTTP, so testing it there requires the following Chrome configuration:

1. Enter chrome://flags in the address bar

2. Search for: insecure origins treated as secure

3. Add the origin: http://foo.test.gogo.com

Production (https://foo.gogo.com) works without any extra configuration.

How much do you know about TypedArray in JS?

Typed array basics: TypedArray, buffer, ArrayBuffer, view, Uint8Array, Uint16Array, Float64Array

- Typed arrays are used to process raw binary data

- Typed arrays split the work into two parts: buffers and views

- A buffer (implemented by the ArrayBuffer class) is an object representing a chunk of data; it has no format, and its contents cannot be accessed directly

- To access the memory in a buffer you need a view. A view provides a context (data type, starting offset, and number of elements) that turns the data into an actual typed array

https://github.com/FrankKai/FrankKai.github.io/issues/164
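To make the buffer/view split concrete, here is a small sketch (not from the original article) in which two views share the same eight bytes. Writing through one view is immediately visible through the other, and a view can cover only part of the buffer. The values are chosen so the result does not depend on the platform's byte order.

```javascript
// One 8-byte buffer seen through two different views
const buffer = new ArrayBuffer(8);
const u8 = new Uint8Array(buffer);   // 8 elements, 1 byte each
const u32 = new Uint32Array(buffer); // 2 elements, 4 bytes each

u8.fill(0xff); // write every byte through the 8-bit view
console.log(u32[0].toString(16)); // ffffffff: the 32-bit view sees the same bytes

// A view can also cover part of a buffer: new Uint8Array(buffer, byteOffset, length)
const tail = new Uint8Array(buffer, 4, 4); // bytes 4..7, i.e. the second Uint32 element
tail.fill(0);
console.log(u32[0].toString(16), u32[1]); // ffffffff 0
```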

Typed array usage example

// Create a fixed-length 16-byte buffer

let buffer = new ArrayBuffer(16);

Prerequisite knowledge for processing audio data

struct someStruct {

unsigned long id; // long 32bit

char username[16];// char 8bit

float amountDue;// float 32bit

};

let buffer = new ArrayBuffer(24);

// ... read the data into the buffer ...

let idView = new Uint32Array(buffer, 0, 1);

let usernameView = new Uint8Array(buffer, 4, 16);

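let amountDueView = new Float32Array(buffer, 20, 1);

Why are the offsets 0, 4, and 20?

Offsets are measured in bytes. id is a 32-bit value, 32/8 = 4 bytes, so bytes 0 to 3 belong to idView. Each char is 8 bits, 8/8 = 1 byte, so the 16-character username occupies bytes 4 to 19 (usernameView). amountDue is a 32-bit float, 32/8 = 4 bytes, so bytes 20 to 23 belong to amountDueView, for 24 bytes in total.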
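The same layout can be filled and read back in a few lines. This sketch uses made-up field values and assumes an ASCII username, so each character fits in one byte; it runs in modern browsers and in Node.js, where `TextEncoder` and `TextDecoder` are globals.

```javascript
// 24-byte layout of the C struct: id (bytes 0..3), username (4..19), amountDue (20..23)
const buffer = new ArrayBuffer(24);
const idView = new Uint32Array(buffer, 0, 1);
const usernameView = new Uint8Array(buffer, 4, 16);
const amountDueView = new Float32Array(buffer, 20, 1);

// Fill the fields through the views (sample values, made up for the example)
idView[0] = 42;
usernameView.set(new TextEncoder().encode('alice')); // remaining bytes stay 0
amountDueView[0] = 9.5; // exactly representable as a 32-bit float

// Read the username back up to the first zero byte, like a C string
const end = usernameView.indexOf(0);
const username = new TextDecoder().decode(usernameView.subarray(0, end === -1 ? usernameView.length : end));

console.log(idView[0], username, amountDueView[0]); // 42 alice 9.5
```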

Code implementation and source code analysis

1. Code implementation

Flowchart: 1. Initialize recorder 2. Start recording 3. Stop recording

Design ideas: recorder instance, recorder helper, editor, audio, converter

2. Technical solutions we tried

1. A solution from a Renren.com front-end developer

https://juejin.im/post/5b8bf7e3e51d4538c210c6b0

It cannot flexibly specify the sample bit depth, sample rate, or number of channels, and it cannot output audio in multiple formats, so we dropped it.

2. js-audio-recorder

It can flexibly specify the sample bit depth, sample rate, and number of channels, can output audio in several formats, and provides a variety of easy-to-use APIs.

GitHub: https://github.com/2fps/recorder

I had never worked on anything audio-related, so I could only learn by building on the work of those who came before me!

1. Breaking down the recording process

1. Initialize the recording instance

initRecorderInstance() {

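// Sampling settings

const sampleConfig = {

sampleBits: 16, // sample bit depth; iFlytek real-time transcription uses 16 bits

sampleRate: 16000, // sample rate; iFlytek real-time transcription uses 16000 Hz (16 kHz)

numChannels: 1, // channels; iFlytek real-time transcription uses mono

};

this.recorderInstance = new Recorder(sampleConfig);

},

2. Start recording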

startRecord() {

try {

this.recorderInstance.start();

// Callback that continuously reports the recording duration

this.recorderInstance.onprocess = (duration) => {

this.recorderHelper.duration = duration;

};

} catch (err) {

this.$debug(err);

}

},

3. Stop recording

stopRecord() {

this.recorderInstance.stop();

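// getWAV() returns WAV-encoded data; the 'audio/mp3' type only labels the Blob and does not convert it to MP3

this.recorder.blobObjMP3 = new Blob([this.recorderInstance.getWAV()], { type: 'audio/mp3' });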

this.recorder.blobObjPCM = this.recorderInstance.getPCMBlob();

this.recorder.blobUrl = URL.createObjectURL(this.recorder.blobObjMP3);

if (this.audioAutoTransfer) {

this.$refs.audio.onloadedmetadata = () => {

this.audioXFTransfer();

};

}

},

2. Design ideas

Recorder instance: recorderInstance

- js-audio-recorder

Recorder helper

- blobUrl, blobObjPCM, blobObjMP3

- hearing, tip, duration

Editor: editor

- transfered, tip, loading

Audio: audio

- urlPC, urlMobile, size

Converter: xunfeiTransfer

- text

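3. Source code analysis: initialization (constructor)

/**

* @param {Object} options with the following three parameters:

* sampleBits: sample bit depth, usually 8 or 16; default 16

* sampleRate: sample rate, usually 11025, 16000, 22050, 24000, 44100, or 48000; defaults to the browser's own sample rate

* numChannels: number of channels, 1 or 2

*/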

constructor(options: recorderConfig = {}) {

// Temporary AudioContext, used only to read the input sample rate

let context = new (window.AudioContext || window.webkitAudioContext)();

this.inputSampleRate = context.sampleRate; // current input sample rate

// Build the config and validate each value (~indexOf(...) is truthy only when the value is in the list)

this.config = {

// sample bit depth: 8 or 16

sampleBits: ~[8, 16].indexOf(options.sampleBits) ? options.sampleBits : 16,

// sample rate

sampleRate: ~[11025, 16000, 22050, 24000, 44100, 48000].indexOf(options.sampleRate) ? options.sampleRate : this.inputSampleRate,

// number of channels: 1 or 2

numChannels: ~[1, 2].indexOf(options.numChannels) ? options.numChannels : 1,

};

// Sampling parameters

this.outputSampleRate = this.config.sampleRate; // output sample rate

this.oututSampleBits = this.config.sampleBits; // output sample bit depth: 8 or 16

// Detect the platform's byte order (endianness)

this.littleEdian = (function() {

var buffer = new ArrayBuffer(2);

new DataView(buffer).setInt16(0, 256, true);

return new Int16Array(buffer)[0] === 256;

})();

}

How to understand new DataView(buffer).setInt16(0, 256, true)?

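It controls the byte order used when the value is stored in memory.

The call writes the 16-bit value 256 (0x0100) at byte offset 0. The third argument, true, selects little-endian order, so the low-order byte 0x00 goes to the lower address and the high-order byte 0x01 to the higher one. Int16Array, by contrast, always reads with the platform's native byte order, so it returns 256 only if the platform is little-endian.

In big-endian order, the high-order byte is stored at the lowest address and the low-order byte at the highest: for 0x12345678, 0x12 is stored in buf[0] and 0x78 (the low-order byte) in buf[3]. This matches the order in which the number is written.

Little-endian is the opposite: for 0x12345678, 0x78 is stored in buf[0], the lowest address.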
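The byte layouts described above can be checked directly with `DataView`, which lets you choose the byte order on every call. `bytesOf` is a hypothetical helper for this illustration; `isPlatformLittleEndian` repeats the library's detection trick.

```javascript
// Write a 32-bit value with an explicit byte order and list its bytes from buf[0] upward
function bytesOf(value, littleEndian) {
  const view = new DataView(new ArrayBuffer(4));
  view.setUint32(0, value, littleEndian);
  return Array.from(new Uint8Array(view.buffer), (b) => b.toString(16));
}

console.log(bytesOf(0x12345678, false)); // [ '12', '34', '56', '78' ]  big-endian
console.log(bytesOf(0x12345678, true));  // [ '78', '56', '34', '12' ]  little-endian

// The library's trick: write 256 (0x0100) little-endian, then read it back in native order
function isPlatformLittleEndian() {
  const buffer = new ArrayBuffer(2);
  new DataView(buffer).setInt16(0, 256, true);
  return new Int16Array(buffer)[0] === 256;
}

console.log(isPlatformLittleEndian()); // true on x86 and typical ARM machines
```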

3. Source code analysis: initialization (initRecorder)

/**

* Initialize the recording instance

*/

initRecorder(): void {

if (this.context) {

// Close the previous recording instance, because it caches a small amount of data

this.destroy();

}

this.context = new (window.AudioContext || window.webkitAudioContext)();

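this.analyser = this.context.createAnalyser(); // analyser node for the recording

this.analyser.fftSize = 2048; // FFT size used for frequency-domain analysis

// The first argument is the buffer size: onaudioprocess fires once each time this many samples have been collected. Typical values are 1024, 2048, or 4096; 4096 is used here

// The second and third arguments are the numbers of input and output channels; keep them the same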

let createScript = this.context.createScriptProcessor || this.context.createJavaScriptNode;

this.recorder = createScript.apply(this.context, [4096, this.config.numChannels, this.config.numChannels]);

// getUserMedia compatibility handling

this.initUserMedia();

// Audio capture

this.recorder.onaudioprocess = e => {

if (!this.isrecording || this.ispause) {

// Nothing to do when not recording; Firefox still fires audioprocess events after recording stops

return;

}

// getChannelData returns PCM data as a Float32Array

if (1 === this.config.numChannels) {

let data = e.inputBuffer.getChannelData(0);

// Mono

this.buffer.push(new Float32Array(data));

this.size += data.length;

} else {

/*

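* Stereo handling

* e.inputBuffer.getChannelData(0) returns the 4096 samples of the left channel and getChannelData(1) those of the right channel;

* they must be interleaved as LRLRLR... to play back correctly, hence the loop below

*/

let lData = new Float32Array(e.inputBuffer.getChannelData(0)),

rData = new Float32Array(e.inputBuffer.getChannelData(1)),

// The new buffer holds the left and right channel data combined

buffer = new ArrayBuffer(lData.byteLength + rData.byteLength),

dData = new Float32Array(buffer),

offset = 0;

// Iterate over samples (length), not bytes: byteLength is 4x larger for a Float32Array

for (let i = 0; i < lData.length; ++i) {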

dData[ offset ] = lData[i];

offset++;

dData[ offset ] = rData[i];

offset++;

}

this.buffer.push(dData);

this.size += offset;

}

// Accumulate the recording duration

this.duration += 4096 / this.inputSampleRate;

// Duration callback

this.onprocess && this.onprocess(this.duration);

}

}

3. Source code analysis: start recording (start)

/**

* Start recording

*

* @returns {void}

* @memberof Recorder

*/

start(): void {

if (this.isrecording) {

// Already recording, so do not start again

return;

}

// Clear previous data

this.clear();

this.initRecorder();

this.isrecording = true;

navigator.mediaDevices.getUserMedia({

audio: true

}).then(stream => {

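// audioInput is the audio source node

// stream is the external audio stream (e.g. from the microphone) obtained through navigator.mediaDevices.getUserMedia; for the recorder it is the input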

this.audioInput = this.context.createMediaStreamSource(stream);

}, error => {

// Throw the error

Recorder.throwError(error.name + " : " + error.message);

}).then(() => {

// audioInput is the sound source; connect it through the analyser to the processing node recorder

this.audioInput.connect(this.analyser);

this.analyser.connect(this.recorder);

// Connect the processing node recorder to the speakers

this.recorder.connect(this.context.destination);

});

}

3. Source code analysis: stop recording and helper functions

/**

* Stop recording

*

* @memberof Recorder

*/

stop(): void {

this.isrecording = false;

this.audioInput && this.audioInput.disconnect();

this.recorder.disconnect();

}


/**

* Get the WAV-encoded binary data (DataView)

*

* @returns {DataView} WAV-encoded binary data

* @memberof Recorder

*/

private getWAV() {

let pcmTemp = this.getPCM(),

wavTemp = Recorder.encodeWAV(pcmTemp, this.inputSampleRate,

this.outputSampleRate, this.config.numChannels, this.oututSampleBits, this.littleEdian);

return wavTemp;

}

/**

* Get the PCM data as a Blob

*

* @returns {Blob} PCM data as a Blob

* @memberof Recorder

*/

getPCMBlob() {

return new Blob([ this.getPCM() ]);

}

/**

* Get the PCM-encoded binary data (DataView)

*

* @returns {DataView} PCM binary data

* @memberof Recorder

*/

private getPCM() {

// Flatten the 2D list of buffers into one dimension

let data = this.flat();

// Resample: compress or expand to the output sample rate

data = Recorder.compress(data, this.inputSampleRate, this.outputSampleRate);

// Re-encode with the output sample bit depth

return Recorder.encodePCM(data, this.oututSampleBits, this.littleEdian);

}

4. Source code analysis, core algorithm: encodeWAV
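Before the library code, it may help to see the whole 44-byte header at once. The sketch below (`buildWav` is a hypothetical name, not part of js-audio-recorder) writes the same fields at fixed offsets. It always uses little-endian order, because the RIFF/WAV format stores its header fields little-endian; the library passes its detected byte order instead. The PCM bytes are assumed to be a `Uint8Array`.

```javascript
// Build a canonical 44-byte PCM WAV header followed by the PCM bytes
function buildWav(pcmBytes, sampleRate, numChannels, sampleBits) {
  const blockAlign = numChannels * (sampleBits / 8); // bytes per sample frame
  const view = new DataView(new ArrayBuffer(44 + pcmBytes.byteLength));
  const writeString = (offset, s) => {
    for (let i = 0; i < s.length; i++) view.setUint8(offset + i, s.charCodeAt(i));
  };

  writeString(0, 'RIFF');
  view.setUint32(4, 36 + pcmBytes.byteLength, true);  // file size - 8
  writeString(8, 'WAVE');
  writeString(12, 'fmt ');
  view.setUint32(16, 16, true);                       // fmt sub-chunk size for PCM
  view.setUint16(20, 1, true);                        // audio format 1 = PCM
  view.setUint16(22, numChannels, true);
  view.setUint32(24, sampleRate, true);
  view.setUint32(28, sampleRate * blockAlign, true);  // byte rate
  view.setUint16(32, blockAlign, true);
  view.setUint16(34, sampleBits, true);
  writeString(36, 'data');
  view.setUint32(40, pcmBytes.byteLength, true);      // data size = file size - 44

  new Uint8Array(view.buffer, 44).set(pcmBytes);      // PCM body after the header
  return view;
}
```

In a browser, `new Blob([buildWav(pcmBytes, 16000, 1, 16)], { type: 'audio/wav' })` turns the result into a playable file.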

static encodeWAV(bytes: DataView, inputSampleRate: number, outputSampleRate: number, numChannels: number, oututSampleBits: number, littleEdian: boolean = true) {

let sampleRate = Math.min(inputSampleRate, outputSampleRate),

sampleBits = oututSampleBits,

buffer = new ArrayBuffer(44 + bytes.byteLength),

data = new DataView(buffer),

channelCount = numChannels, // number of channels

offset = 0;

// RIFF chunk identifier

writeString(data, offset, 'RIFF'); offset += 4;

// Number of bytes from the next field to the end of the file, i.e. file size - 8

data.setUint32(offset, 36 + bytes.byteLength, littleEdian); offset += 4;

// WAVE format identifier

writeString(data, offset, 'WAVE'); offset += 4;

// fmt sub-chunk identifier (note the trailing space)

writeString(data, offset, 'fmt '); offset += 4;

// Size of the fmt sub-chunk: 0x10 = 16 for PCM

data.setUint32(offset, 16, littleEdian); offset += 4;

// Audio format (1 = PCM samples)

data.setUint16(offset, 1, littleEdian); offset += 2;

// Number of channels

data.setUint16(offset, channelCount, littleEdian); offset += 2;

// Sample rate: samples per second for each channel

data.setUint32(offset, sampleRate, littleEdian); offset += 4;

// Byte rate (average bytes per second): channels × sample rate × sample bits / 8

data.setUint32(offset, channelCount * sampleRate * (sampleBits / 8), littleEdian); offset += 4;

// Block align, i.e. bytes per sample frame: channels × sample bits / 8

data.setUint16(offset, channelCount * (sampleBits / 8), littleEdian); offset += 2;

// Sample bit depth

data.setUint16(offset, sampleBits, littleEdian); offset += 2;

// data sub-chunk identifier

writeString(data, offset, 'data'); offset += 4;

// Size of the sample data in bytes, i.e. file size - 44

data.setUint32(offset, bytes.byteLength, littleEdian); offset += 4;

// Append the PCM body after the WAV header

for (let i = 0; i < bytes.byteLength;) {

data.setUint8(offset, bytes.getUint8(i));

offset++;

i++;

}

return data;

}

4. Source code analysis, core algorithm: encodePCM
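The conversion that encodePCM performs can be reduced to two small functions. This is a sketch for illustration: the names are hypothetical, 16-bit output is written little-endian as WAV expects, and fractional values are truncated when stored, as they are with `DataView` setters and typed-array assignment.

```javascript
// Float32 samples in [-1, 1] -> signed 16-bit little-endian PCM
function floatTo16BitPCM(samples) {
  const view = new DataView(new ArrayBuffer(samples.length * 2));
  for (let i = 0; i < samples.length; i++) {
    const s = Math.max(-1, Math.min(1, samples[i])); // clamp
    // Negative values scale by 32768, positive by 32767: -1 -> -32768, 1 -> 32767
    view.setInt16(i * 2, s < 0 ? s * 0x8000 : s * 0x7fff, true);
  }
  return view;
}

// Float32 samples in [-1, 1] -> unsigned 8-bit PCM
function floatTo8BitPCM(samples) {
  const out = new Uint8Array(samples.length);
  for (let i = 0; i < samples.length; i++) {
    const s = Math.max(-1, Math.min(1, samples[i]));
    // 8-bit WAV is unsigned: shift the signed range up by 128, so -1 -> 0, 0 -> 128, 1 -> 255
    out[i] = (s < 0 ? s * 128 : s * 127) + 128;
  }
  return out;
}
```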

/**

* Convert to the encoding format we need

*

* @static

* @param {Float32Array} bytes PCM binary data

* @param {number} sampleBits sample bit depth

* @param {boolean} littleEdian whether to use little-endian byte order

* @returns {DataView} PCM binary data

* @memberof Recorder

*/

static encodePCM(bytes, sampleBits: number, littleEdian: boolean = true) {

let offset = 0,

dataLength = bytes.length * (sampleBits / 8),

buffer = new ArrayBuffer(dataLength),

data = new DataView(buffer);

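// Write the sample data

if (sampleBits === 8) {

for (var i = 0; i < bytes.length; i++, offset++) {

// Clamp to [-1, 1]

var s = Math.max(-1, Math.min(1, bytes[i]));

// 8-bit samples are divided into 2^8 = 256 levels, ranging from 0 to 255;

// multiply negative values by 128 and positive values by 127, then shift everything up by 128 to get data in [0, 255]

var val = s < 0 ? s * 128 : s * 127;

val = +val + 128;

// 8-bit WAV samples are unsigned, so write an unsigned byte

data.setUint8(offset, val);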

}

} else {

for (var i = 0; i < bytes.length; i++, offset += 2) {

var s = Math.max(-1, Math.min(1, bytes[i]));

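// 16-bit samples are divided into 2^16 = 65536 levels, ranging from -32768 to 32767

// The collected data lies in [-1, 1], so to convert to 16 bits, multiply negative values by 32768 and positive values by 32767 to get data in [-32768, 32767]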

data.setInt16(offset, s < 0 ? s * 0x8000 : s * 0x7FFF, littleEdian);

}

}

return data;

}

Voice sending and real-time transcription

- Where are the audio files stored?

- All about Blob URLs

- What does the real-time transcription server need to do?

- Front-end code implementation

Where are the audio files stored?

Should every recording be uploaded to Alibaba Cloud OSS right away?

That would waste resources.

While editing: store it locally, where only the current browser can access it

When sending: store it in OSS, where it is accessible over the public network

How to store it locally? As a Blob URL

How to store it in OSS? Get a token from the CMS, upload the file to the xxx-audio bucket in OSS, and get back a hash

All about Blob URLs

What does a Blob URL look like?

blob:http://localhost:9090/39b60422-26f4-4c67-8456-7ac3f29115ec

Blob objects are very common in front-end development. A few application scenarios:

The base64 string produced by canvas.toDataURL() can exceed the maximum length allowed for a tag attribute value

The File object obtained from <input type="file" />, when you want to keep it locally at first and upload it to the server later

Create BlobUrl: URL.createObjectURL(object)

Release BlobUrl: URL.revokeObjectURL(objectURL)

How does the lifecycle of a blob URL behave in Vue components?

All components of a Vue single-page application share one document, so a blob URL created in one component can be used directly by another.

When vue-router uses hash mode, navigating between pages does not reload the document, so a blob URL stays valid across routes. You can therefore also pass blob URLs between components on different pages, as long as no new tab is opened.

Note that in Vue's hash mode you need to pay extra attention to releasing memory with URL.revokeObjectURL(), because blob URLs are only released automatically when the document is unloaded.
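One way to follow this advice is to record every blob URL you create and release them together, for example in a component's `beforeDestroy` hook. `BlobUrlRegistry` is a hypothetical helper, not a Vue or browser API; the URL implementation is injectable so it can be tested, and it defaults to the global `URL`.

```javascript
// Tracks blob URLs so they can be released explicitly
class BlobUrlRegistry {
  constructor(urlApi = globalThis.URL) {
    this.urlApi = urlApi;
    this.urls = new Set();
  }

  // Create a blob URL and remember it
  create(blob) {
    const url = this.urlApi.createObjectURL(blob);
    this.urls.add(url);
    return url;
  }

  // Release one URL, but only if this registry created it
  revoke(url) {
    if (this.urls.delete(url)) this.urlApi.revokeObjectURL(url);
  }

  // Release everything, e.g. from beforeDestroy()
  revokeAll() {
    for (const url of this.urls) this.urlApi.revokeObjectURL(url);
    this.urls.clear();
  }

  get size() {
    return this.urls.size;
  }
}
```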

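<!-- Component that emits the blob URL. multiple is omitted: it is a boolean attribute, so even multiple="false" would enable multi-select -->

<label for="background">Upload background</label>

<input type="file" style="display: none"

id="background" name="background"

accept="image/png, image/jpeg"

@change="backgroundUpload"

>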

backgroundUpload(event) {

const fileBlobURL = window.URL.createObjectURL(event.target.files[0]);

this.$emit('background-change', fileBlobURL);

// this.$bus.$emit('background-change', fileBlobURL);

},

<!-- Component that receives the blob URL -->

<BackgroundUploader @background-change="backgroundChangeHandler"></BackgroundUploader>

// this.$bus.$on("background-change", backgroundChangeHandler);

backgroundChangeHandler(url) {

// some code handle blob url...

},

https://github.com/FrankKai/FrankKai.github.io/issues/138

What does the real-time transcription server need to do?

It provides an interface that takes a File wrapping the audio Blob and returns the transcribed text.

this.recorder.blobObjPCM = this.recorderInstance.getPCMBlob();

transferAudioToText() {

this.editor.loading = true;

const formData = new FormData();

const file = new File([this.recorder.blobObjPCM], `${+new Date()}`, { type: this.recorder.blobObjPCM.type });

formData.append('file', file);

apiXunFei

.realTimeVoiceTransliterationByFile(formData)

.then((data) => {

this.xunfeiTransfer.text = data;

this.editor.tip = 'Send text';

this.editor.transfered = !this.editor.transfered;

this.editor.loading = false;

})

.catch(() => {

this.editor.loading = false;

this.$Message.error('Speech transcription failed');

});

},

How should the server be implemented?

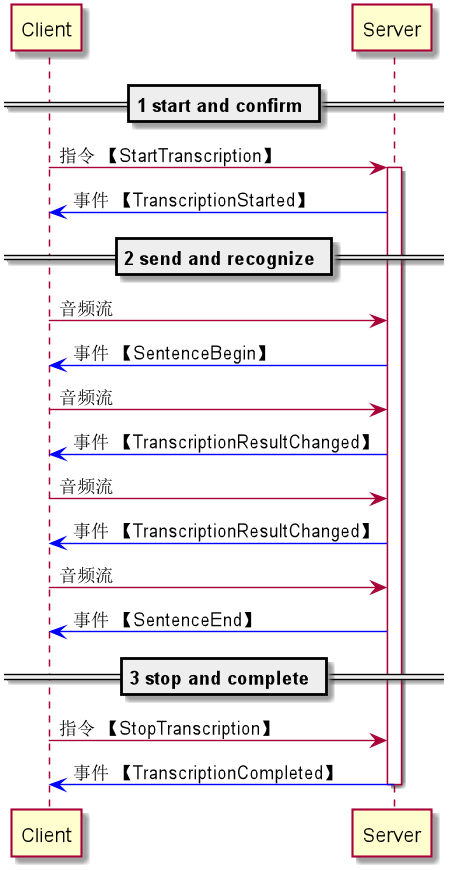

1. Authentication

When the client establishes a WebSocket connection with the server, it authenticates with a token

2. Start and confirm

The client sends a start request, and the server confirms that the request is valid

3. Send and recognize

The client sends audio data in a loop and continuously receives recognition results

4. Stop and complete

The client notifies the server that all audio has been sent; once the server has finished recognizing, it notifies the client that recognition is complete
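The four steps above can be sketched as a small client routine. Everything protocol-specific here is an assumption: the JSON messages (`start`, `started`, `result`, `completed`, `error`, `stop`) are placeholders rather than the real message format of iFlytek or Alibaba Cloud, authentication is assumed to have happened when the socket was opened, and `socket` is any already-open object with `send()` and `onmessage`, such as a browser `WebSocket`. The chunking arithmetic is general: 16 kHz × 2 bytes × 1 channel gives 32 bytes per millisecond, so a 40 ms chunk is 1280 bytes. Some services expect chunks to be paced in real time; this sketch sends them back to back.

```javascript
// Split PCM bytes (Uint8Array) into chunks covering `chunkMs` of audio each
function chunkPcm(pcm, { sampleRate = 16000, bytesPerSample = 2, numChannels = 1, chunkMs = 40 } = {}) {
  const chunkBytes = (sampleRate * bytesPerSample * numChannels * chunkMs) / 1000;
  const chunks = [];
  for (let offset = 0; offset < pcm.length; offset += chunkBytes) {
    chunks.push(pcm.subarray(offset, offset + chunkBytes));
  }
  return chunks;
}

// start -> (server confirms) -> send chunks -> stop -> collect results until completed
function streamTranscription(socket, pcm) {
  const results = [];
  return new Promise((resolve, reject) => {
    socket.onmessage = (event) => {
      const msg = JSON.parse(event.data);
      if (msg.type === 'started') {
        for (const chunk of chunkPcm(pcm)) socket.send(chunk);
        socket.send(JSON.stringify({ type: 'stop' }));
      } else if (msg.type === 'result') {
        results.push(msg.text);
      } else if (msg.type === 'completed') {
        resolve(results.join(''));
      } else if (msg.type === 'error') {
        reject(new Error(msg.message));
      }
    };
    socket.send(JSON.stringify({ type: 'start' }));
  });
}
```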

Alibaba Cloud provides SDKs for Java, Python, C++, iOS, Android, and more:

https://help.aliyun.com/document_detail/84428.html?spm=a2c4g.11186623.6.574.10d92318ApT1T6

Front-end code implementation

// Send the voice message

async audioSender() {

const audioValidated = await this.audioValidator();

if (audioValidated) {

this.audio.urlMobile = await this.transferMp3ToAmr(this.recorder.blobObjMP3);

const audioBase64Str = await this.transferBlobFileToBase64(this.recorder.blobObjMP3);

this.audio.urlPC = await this.uploadAudioToOSS(audioBase64Str);

this.$emit('audio-sender', {

audioPathMobile: this.audio.urlMobile,

audioLength: parseInt(this.$refs.audio.duration * 1000),

transferredText: this.xunfeiTransfer.text,

audioPathPC: this.audio.urlPC,

});

this.closeSmartAudio();

}

},

// Generate AMR audio that mobile clients can send

transferMp3ToAmr(blob = this.recorder.blobObjMP3) {

const formData = new FormData();

const file = new File([blob], `${+new Date()}`, { type: blob.type });

formData.append('file', file);

// Return the request promise directly, so a failure rejects instead of leaving the caller's await pending forever

return apiXunFei

.mp32amr(formData)

.catch((err) => {

this.$Message.error('Failed to convert MP3 to AMR');

throw err;

});

},

// Convert a Blob to a Base64 data URL string for uploading to OSS

transferBlobFileToBase64(file) {

return new Promise((resolve, reject) => {

const reader = new FileReader();

// Register the handlers before starting the read

reader.onload = () => resolve(reader.result);

reader.onerror = () => reject(reader.error);

reader.readAsDataURL(file);

});

},

Universal recording kit

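1. Specify the sample bit depth, sample rate, and number of channels

2. Specify the audio format

3. Specify the size unit: Byte, KB, or MB

4. Customize the start and stop icons (iView icons), including their type and size

5. Return the audio blob, duration, and size

6. Specify a maximum duration and a maximum size; recording stops automatically when either limit is reached

Generic component code analysis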

/*

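* * Design:

* * Library used: js-audio-recorder

* * Features:

* * 1. Specify the sample bit depth, sample rate, and number of channels

* * 2. Specify the audio format

* * 3. Specify the size unit: Byte, KB, or MB

* * 4. Customize the start and stop icons (iView icons), including their type and size

* * 5. Return the audio blob, duration, and size

* * 6. Specify a maximum duration and a maximum size; recording stops automatically when either limit is reached

* * Author: 高凯

* * Date: 2019.11.7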

*/

<template>

<div class="audio-maker-container">

<Icon :type="computedRecorderIcon" @click="recorderVoice" :size="iconSize" />

</div>

</template>

<script>

import Recorder from 'js-audio-recorder';

/*

* js-audio-recorder instance

* It is created here, outside the component's data, so Vue does not add unnecessary reactive watchers to recorderInstance, avoiding wasted work

*/

let recorderInstance = null;

/*

* Recorder helper

* Handles auxiliary recording work, such as tracking the recording state, audio duration, and audio size

*/

const recorderHelperGenerator = () => ({

hearing: false,

duration: 0,

size: 0,

});

export default {

name: 'audio-maker',

props: {

sampleBits: {

type: Number,

default: 16,

},

sampleRate: {

type: Number,

},

numChannels: {

type: Number,

default: 1,

},

audioType: {

type: String,

default: 'audio/wav',

},

startIcon: {

type: String,

default: 'md-arrow-dropright-circle',

},

stopIcon: {

type: String,

default: 'md-pause',

},

iconSize: {

type: Number,

default: 30,

},

sizeUnit: {

type: String,

default: 'MB',

validator: (unit) => ['Byte', 'KB', 'MB'].includes(unit),

},

maxDuration: {

type: Number,

default: 10 * 60,

},

maxSize: {

type: Number,

default: 1,

},

},

mounted() {

this.initRecorderInstance();

},

beforeDestroy() {

recorderInstance = null;

},

computed: {

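computedSampleRate() {

const audioContext = new (window.AudioContext || window.webkitAudioContext)();

const defaultSampleRate = audioContext.sampleRate;

// The context is only needed to read the default sample rate; close it so it does not keep holding audio resources

audioContext.close && audioContext.close();

return this.sampleRate ? this.sampleRate : defaultSampleRate;

},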

computedRecorderIcon() {

return this.recorderHelper.hearing ? this.stopIcon : this.startIcon;

},

computedUnitDividend() {

const sizeUnit = this.sizeUnit;

let unitDividend = 1024 * 1024;

switch (sizeUnit) {

case 'Byte':

unitDividend = 1;

break;

case 'KB':

unitDividend = 1024;

break;

case 'MB':

unitDividend = 1024 * 1024;

break;

default:

unitDividend = 1024 * 1024;

}

return unitDividend;

},

computedMaxSize() {

return this.maxSize * this.computedUnitDividend;

},

},

data() {

return {

recorderHelper: recorderHelperGenerator(),

};

},

watch: {

'recorderHelper.duration': {

handler(duration) {

if (duration >= this.maxDuration) {

this.stopRecord();

}

},

},

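'recorderHelper.size': {

handler(size) {

// size is stored in sizeUnit (see stopRecord) while computedMaxSize is in bytes, so convert before comparing;

// the hearing check keeps stopRecord from running a second time after recording has already stopped

if (this.recorderHelper.hearing && size * this.computedUnitDividend >= this.computedMaxSize) {

this.stopRecord();

}

},

},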

},

methods: {

initRecorderInstance() {

// Sampling settings

const sampleConfig = {

sampleBits: this.sampleBits, // sample bit depth

sampleRate: this.computedSampleRate, // sample rate

numChannels: this.numChannels, // number of channels

};

recorderInstance = new Recorder(sampleConfig);

},

recorderVoice() {

if (!this.recorderHelper.hearing) {

// Reset the recording state before recording

this.reset();

this.startRecord();

} else {

this.stopRecord();

}

},

startRecord() {

try {

recorderInstance.start();

// startRecord and stopRecord own the hearing flag, so an automatic stop (maximum duration or size reached) also resets the icon

this.recorderHelper.hearing = true;

// Callback that continuously reports the recording duration

recorderInstance.onprogress = ({ duration }) => {

this.recorderHelper.duration = duration;

this.$emit('on-recorder-duration-change', parseFloat(this.recorderHelper.duration.toFixed(2)));

};

} catch (err) {

this.$debug(err);

}

},

stopRecord() {

recorderInstance.stop();

this.recorderHelper.hearing = false;

const audioBlob = new Blob([recorderInstance.getWAV()], { type: this.audioType });

this.recorderHelper.size = (audioBlob.size / this.computedUnitDividend).toFixed(2);

this.$emit('on-recorder-finish', { blob: audioBlob, size: parseFloat(this.recorderHelper.size), unit: this.sizeUnit });

},

reset() {

this.recorderHelper = recorderHelperGenerator();

},

},

};

</script>

<style lang="scss" scoped>

.audio-maker-container {

display: inline;

i.ivu-icon {

cursor: pointer;

}

}

</style>

https://github.com/2fps/recorder/issues/21

Using the common component

import AudioMaker from '@/components/audioMaker';

<AudioMaker

v-if="!recorderAudio.blobUrl"

@on-recorder-duration-change="durationChange"

@on-recorder-finish="recorderFinish"

:maxDuration="audioMakerConfig.maxDuration"

:maxSize="audioMakerConfig.maxSize"

:sizeUnit="audioMakerConfig.sizeUnit"

></AudioMaker>

durationChange(duration) {

this.resetRecorderAudio();

this.recorderAudio.duration = duration;

},

recorderFinish({ blob, size, unit }) {

this.recorderAudio.blobUrl = window.URL.createObjectURL(blob);

this.recorderAudio.size = size;

this.recorderAudio.unit = unit;

},

releaseBlobMemory(blobUrl) {

window.URL.revokeObjectURL(blobUrl);

},
