For the same piece of logic, code written by different people can differ in performance by orders of magnitude. For the same piece of code, tweaking a few characters or reordering a single line can make it several times faster, and the same code can also run several times faster or slower on different processors. The "10x programmer" is not just a legend; there are probably some all around us. That tenfold difference shows up in many aspects of how a programmer works, and code performance is the most visible one.

This article is the fourth in the "How to Write High-Performance Code" series. It explains how data access affects program performance and how to improve performance by changing the way data is accessed.

Why does data access speed affect program performance?

Every operation in a program can be reduced to a three-step model: first, read the data (some data may of course be passed in by other means); second, process the data; third, write the processed result back to memory. I call these three steps read, compute, and write. Real CPU instruction execution is somewhat more complicated, but it still boils down to these three steps. A complex program consists of countless CPU instructions, so if reading or writing data is too slow, the performance of the program inevitably suffers.

To make this more intuitive, I will compare program execution to a chef cooking. The chef's workflow is to fetch the raw ingredients, process them (frying, stir-frying, boiling), and finally serve the dish. How quickly dishes come out depends not only on the cooking itself but also on how long it takes to get the ingredients. Some ingredients are at hand and can be grabbed immediately, while others are in cold storage or even still at the vegetable market, which makes them very inconvenient to get.

The CPU is the chef, and data is its ingredients. Data in registers is the ingredients right at the chef's hand, data in memory is the ingredients in cold storage, data on a solid-state drive (SSD) is the ingredients still at the vegetable market, and data on a mechanical hard disk (HDD) is like vegetables still growing in the field... If the CPU cannot get the data it needs while running a program, all it can do is wait and waste time.

How much does data access speed affect program performance?

The latency of reading and writing data varies enormously between storage devices. Since most scenarios are dominated by reads, we take reads as the example. Between the fastest storage, registers, and the slowest, the mechanical hard disk, random read latency differs by a factor of about a million. If that number is hard to picture, let's go back to the chef.

Suppose the chef wants to make scrambled eggs with tomatoes. If the ingredients are already prepared, cooking them in the pan takes only about ten seconds. Let's equate that with the time the CPU needs to fetch data from a register. If the CPU has to fetch the data from disk instead, the time is equivalent to the chef growing the tomatoes or raising the hens to lay the eggs (3-4 months). Fetching data from the wrong storage device can clearly have a huge effect on how fast a program runs.

Here is a real case from our production environment, one that actually caused an incident. When one of our services was containerized, it ended up deployed in a different data center from its upstream service. Crossing data centers added only about 1 ms of latency per call, but the service code had a flaw: one interface called another service serially, once per item in a batch. Those serial calls added up, and the interface latency grew by hundreds of milliseconds. A service that had never had performance problems suddenly had them simply because it moved to another data center...
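The accumulation in this case is simple arithmetic, and a rough sketch makes it concrete. The class name, the call count of 300, and the batch size of 100 are assumptions chosen only for illustration (the incident description says "hundreds of ms" without giving exact figures), and batching is just one common mitigation, not necessarily what the team did.

```java
// Back-of-the-envelope model of how a small per-call delay adds up.
// All numbers are illustrative assumptions, not measurements from the incident.
class CrossRoomLatency {

    // Extra delay when the calls are made one after another:
    // every call pays the cross-data-center delay.
    static long serialExtraMs(int calls, long extraPerCallMs) {
        return calls * extraPerCallMs;
    }

    // Extra delay when the same items are sent in batches:
    // each batch pays the cross-data-center delay once.
    static long batchedExtraMs(int calls, int batchSize, long extraPerCallMs) {
        int batches = (calls + batchSize - 1) / batchSize; // ceiling division
        return batches * extraPerCallMs;
    }

    public static void main(String[] args) {
        int calls = 300;          // assumed number of downstream calls per request
        long extraPerCallMs = 1;  // extra delay per round trip after the migration
        System.out.println("serial:  +" + serialExtraMs(calls, extraPerCallMs) + " ms");
        System.out.println("batched: +" + batchedExtraMs(calls, 100, extraPerCallMs) + " ms");
    }
}
```

Under these assumed numbers the serial version pays the 1 ms penalty 300 times, while the batched version pays it only three times.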

Performance differences between storage devices

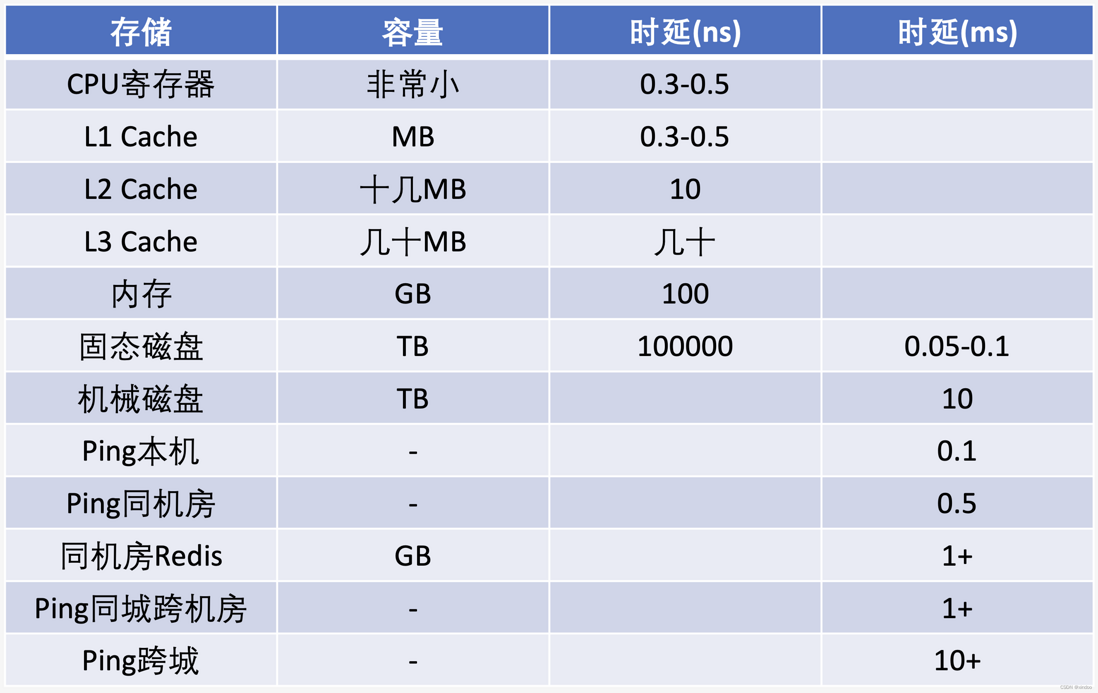

In practice, code deals with many kinds of storage: registers, memory, disks, network storage, and so on, each with its own characteristics. Only by understanding the differences between them can we use the right storage in the right scenario. The following table gives reference figures for the random read latency of common storage devices...

Note: these figures vary between hardware devices. They are meant only to show the differences, not to serve as exact values. For accurate numbers, consult the hardware manual.

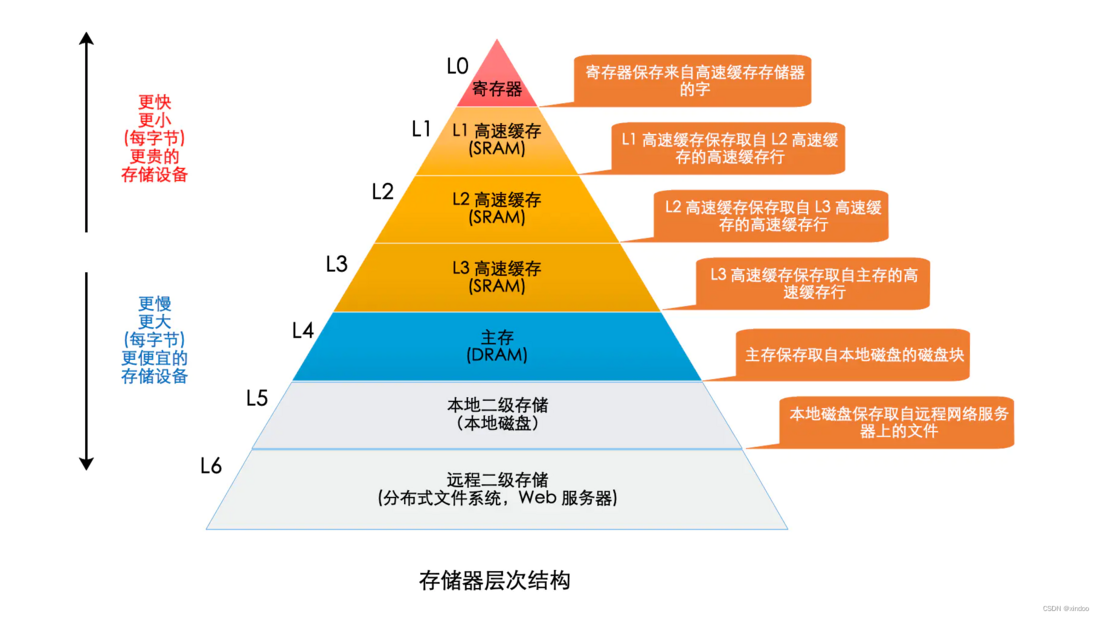

In everyday coding we think of memory as very fast: when fetching data is slow, adding an in-memory cache makes things take off. Compared with the speed of the CPU, however, memory is still too slow. A single memory read takes long enough for the CPU to execute hundreds of instructions, which is why modern CPUs put a cache in front of memory.

How to reduce the impact of data access latency on performance?

Reducing the impact of data access latency on performance is, in principle, simple: put the data on the fastest storage medium possible. However, access speed, capacity, and price pull against each other. Put simply, the faster the storage, the smaller its capacity and the higher its price; the larger the capacity, the slower and cheaper it is.

Fortunately, things work out neatly, and we do not need to put all data on the fastest storage medium. Remember data locality from the second article in this series (using data features cleverly) [https://blog.csdn.net/xindoo/article/details/123941141]? There are two kinds of locality: temporal locality and spatial locality.

- Temporal locality: If a piece of data is accessed at a certain moment, it is likely to be accessed again soon after.

- Spatial locality: If a storage unit has been accessed, there is a high probability that nearby storage units will also be accessed soon after.
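Spatial locality can be seen in something as small as the order of two loops. The sketch below is illustrative only: the class and method names are made up for this example, and it assumes a rectangular matrix. Both methods compute the same sum, but the row-major version walks neighbouring elements while the column-major version jumps to a different row on every step.

```java
// Illustrative sketch: same work, different memory access order.
class LocalityDemo {

    // Row-major: walks each inner array front to back, touching neighbouring elements.
    static long sumRowMajor(int[][] m) {
        long sum = 0;
        for (int i = 0; i < m.length; i++) {
            for (int j = 0; j < m[i].length; j++) {
                sum += m[i][j];
            }
        }
        return sum;
    }

    // Column-major: switches to a different inner array on every step.
    // Assumes every row has the same length as the first row.
    static long sumColumnMajor(int[][] m) {
        long sum = 0;
        int cols = m.length == 0 ? 0 : m[0].length;
        for (int j = 0; j < cols; j++) {
            for (int i = 0; i < m.length; i++) {
                sum += m[i][j];
            }
        }
        return sum;
    }

    // Builds a rows x cols matrix where element (i, j) holds i + j.
    static int[][] filled(int rows, int cols) {
        int[][] m = new int[rows][cols];
        for (int i = 0; i < rows; i++) {
            for (int j = 0; j < cols; j++) {
                m[i][j] = i + j;
            }
        }
        return m;
    }

    public static void main(String[] args) {
        int[][] m = filled(2_000, 2_000);
        long t0 = System.nanoTime();
        long a = sumRowMajor(m);
        long t1 = System.nanoTime();
        long b = sumColumnMajor(m);
        long t2 = System.nanoTime();
        System.out.println("row-major:    " + (t1 - t0) / 1_000_000 + " ms, sum=" + a);
        System.out.println("column-major: " + (t2 - t1) / 1_000_000 + " ms, sum=" + b);
    }
}
```

On many machines the row-major loop tends to finish faster, but the exact gap depends on the hardware, the matrix size, and JIT warm-up, so treat a single run as a rough indication rather than a benchmark.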

Taken together, these two properties mean that most of the time a program concentrates its accesses on a small part of the data. A small amount of fast storage can therefore cover most accesses; in other words, we can add a cache. Caches are everywhere, in computer hardware, databases, and business systems alike. Even every line of code you write uses a cache when it runs on the machine. You may have noticed that CPUs list a cache size among their specifications. The Intel Core i7-12650HX, for example, has 24 MB of L3 cache, which sits between the CPU cores and main memory. Modern computers hide these low-level details, so we rarely deal with them directly in day-to-day work.

When we write our own code, we can also add a cache to improve performance. Here is a recent example from our system, where we have been building data permission features. Different employees have different permissions, so the data they see should differ as well. In our implementation, each user request first fetches the full list of that user's permissions and then displays all the data covered by that list.

Because each person's permission list is fairly large, the permission interface does not perform well, and every request took a long time. We therefore added a cache in front of this interface: requests read from the cache first, and on a hit the interface does not have to be called again, which greatly improved performance. Since permission data does not change often, we did not need to worry much about the consequences of slightly stale data. We also keep cached entries for only a few minutes, because a single user typically uses our system for just a few minutes. Once an entry expires, its space is released automatically, which saves memory.
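A cache like this can be sketched as a map with an expiry time per entry. This is a minimal sketch rather than the code from the system described above: `PermissionCache`, the loader function, the permission strings, and the five-minute TTL are all hypothetical names and values chosen for illustration. The clock is passed in so that expiry can be exercised without waiting.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;
import java.util.function.LongSupplier;

// Hypothetical names throughout; the article does not show the real system's code.
class PermissionCache {

    private static final class Entry {
        final List<String> permissions;
        final long expiresAtMs;

        Entry(List<String> permissions, long expiresAtMs) {
            this.permissions = permissions;
            this.expiresAtMs = expiresAtMs;
        }
    }

    private final Map<String, Entry> entries = new ConcurrentHashMap<>();
    private final Function<String, List<String>> loader; // stands in for the slow permission interface
    private final long ttlMs;                            // how long an entry stays valid
    private final LongSupplier clock;                    // injectable so expiry is easy to test

    PermissionCache(Function<String, List<String>> loader, long ttlMs, LongSupplier clock) {
        this.loader = loader;
        this.ttlMs = ttlMs;
        this.clock = clock;
    }

    // Returns cached permissions, calling the loader only on a miss or after expiry.
    List<String> get(String userId) {
        long now = clock.getAsLong();
        Entry entry = entries.get(userId);
        if (entry == null || now >= entry.expiresAtMs) {
            entry = new Entry(loader.apply(userId), now + ttlMs);
            entries.put(userId, entry);
        }
        return entry.permissions;
    }

    // Drops expired entries to free space; intended to be called periodically.
    void evictExpired() {
        long now = clock.getAsLong();
        entries.values().removeIf(entry -> now >= entry.expiresAtMs);
    }

    int size() {
        return entries.size();
    }

    public static void main(String[] args) {
        PermissionCache cache = new PermissionCache(
                userId -> List.of("report:read", "order:read"), // stand-in for the real lookup
                5 * 60 * 1000L,                                  // five minutes, an assumed TTL
                System::currentTimeMillis);
        System.out.println(cache.get("alice")); // loaded from the slow source
        System.out.println(cache.get("alice")); // served from the cache
    }
}
```

Two threads that miss at the same moment may both call the loader in this sketch; that is harmless for read-only permission data but worth knowing. In a real service a mature caching library would usually be a better choice than a hand-rolled map.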

When I was in college, laptops still shipped with mechanical hard drives, and after a while they became very sluggish. I later learned that switching to an SSD would improve performance. SSDs were quite expensive then and were not standard in ordinary laptops, so I saved half a month of living expenses and put a 120 GB SSD in my laptop. It became noticeably faster, essentially because the random access latency of an SSD is hundreds of times lower than that of a mechanical hard disk. Similarly, a major vendor once claimed to have improved MySQL performance by hundreds of times; in reality, much of that came from query optimizations built on SSDs.

Caching is not a silver bullet

A silver bullet is a bullet made of pure or plated silver. In European folklore, and in Gothic fiction since the 19th century, silver bullets are often portrayed as weapons with exorcising power that are especially effective against supernatural monsters such as werewolves and vampires. The term later came to mean an extremely effective solution: a killer move, an ultimate weapon, a trump card.

A special reminder: caching is not a panacea. It has a side effect, which is that the validity of the data is hard to guarantee. A cache holds old data. Is the current data still the same? You cannot be sure; it may have changed long ago, so you must always consider whether cached data is still valid. If entries are cached for too long, the likelihood of stale data and the risk of inconsistency grow. If they are cached for too short a time, the original data has to be fetched so often that the cache loses much of its value. Using a cache therefore means trading off data consistency against performance. You need to assess how fresh the data must be and set a reasonable expiration policy.

As mentioned above, every line of code we write uses a cache, and by now you know that this cache is the CPU cache. The CPU cache has a clear side effect that matters when writing multi-threaded code: keeping data consistent across the cores of a multi-core CPU. Because of the CPU cache, we have to think about data synchronization in multi-threaded code, which makes such code harder to write and harder to debug when something goes wrong.

A common interview question illustrates the problem well: the multi-threaded counter. Several threads update one counter to accumulate statistics; how do you keep the count accurate? If you simply use cnt++, you run into inconsistency between threads running on different cores: cnt++ is a read, an add, and a write rather than one atomic step, so updates from different threads can overwrite each other. I won't go into the details here; the result is that the counted value ends up lower than the real one. The correct approach is to add a synchronization mechanism around the increment, so that only one thread updates the counter at a time and the result is written back and visible to other threads afterwards. In Java, this means using a lock or one of the atomic classes. This is a hurdle for many new programmers.
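The counter problem fits in a few lines. The sketch below is illustrative, with made-up class and method names: it compares an unsynchronized increment with `java.util.concurrent.atomic.AtomicLong`, one of the atomic classes mentioned above. A lock around the increment would work as well.

```java
import java.util.concurrent.atomic.AtomicLong;

// Illustrative sketch of the multi-threaded counter question.
class CounterDemo {

    // Starts `threads` workers that each run `body`, then waits for all of them.
    static void runInParallel(int threads, Runnable body) {
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(body);
            workers[t].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while waiting", e);
            }
        }
    }

    // Unsynchronized cnt++: increments from different threads can be lost.
    static long countUnsafe(int threads, int perThread) {
        long[] cnt = {0};
        runInParallel(threads, () -> {
            for (int i = 0; i < perThread; i++) {
                cnt[0]++;
            }
        });
        return cnt[0];
    }

    // AtomicLong makes each increment a single indivisible operation.
    static long countAtomic(int threads, int perThread) {
        AtomicLong cnt = new AtomicLong();
        runInParallel(threads, () -> {
            for (int i = 0; i < perThread; i++) {
                cnt.incrementAndGet();
            }
        });
        return cnt.get();
    }

    public static void main(String[] args) {
        System.out.println("unsafe: " + countUnsafe(8, 100_000)); // often less than 800000
        System.out.println("atomic: " + countAtomic(8, 100_000)); // 800000
    }
}
```

The unsafe result varies from run to run and may occasionally even come out right, which is part of what makes this kind of bug hard to track down.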

Summary

Data access is an indispensable part of any program, and most programs spend the bulk of their time on it. Reducing the time spent on data access is therefore one of the most reliable ways to improve a program's performance.

That is all for this article. In the next one, we will continue to look at how to push performance to the limit, so stay tuned! If you are interested, you can also check out the previous articles in the series.
