Introduction: Qin Lihui's talk at the Flink CDC Meetup on May 21.

This article is compiled from a talk given by Qin Lihui, a big data R&D engineer at SF Express, at the Flink CDC Meetup on May 21. It covers:

- SF data integration background

- Flink CDC practical problems and optimization

- Future plans


1. Background of SF Express Data Integration

SF Express is an express logistics service provider. Its main businesses include time-definite express, economy express, intra-city delivery, and cold-chain transportation.

The transportation process is supported by a range of systems, such as order management systems and smart property systems, as well as the many sensors in transit hubs, vehicles, and aircraft. Together they generate a large amount of data, and before this data can be analyzed it has to be integrated, which makes data integration an important step.

SF's data integration has evolved over several years and is divided into two parts: offline data integration and real-time data integration. Offline integration is mainly based on DataX; this article focuses on the real-time data integration solution.

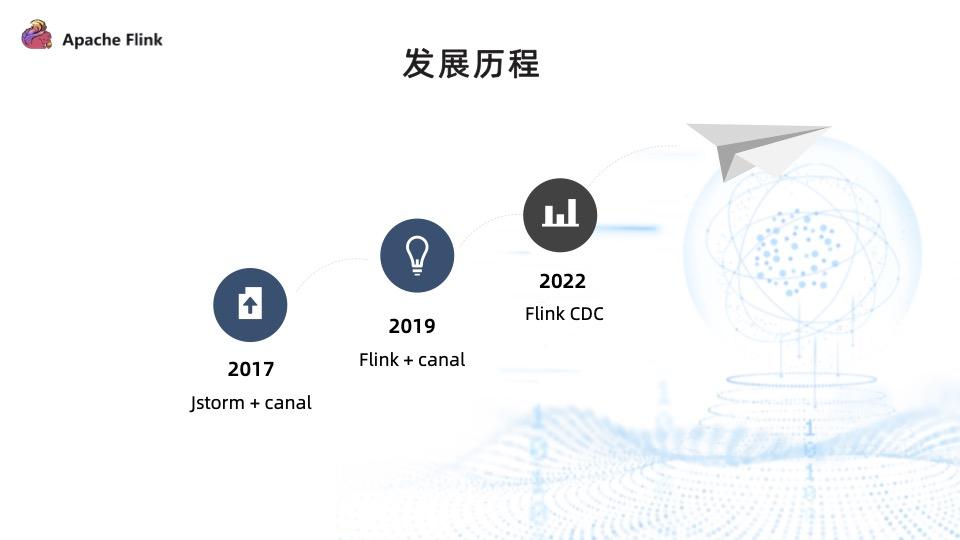

In 2017, the first version of the real-time data integration solution was built on JStorm + Canal. However, it had many problems, such as no guarantee of data consistency, low throughput, and difficult maintenance. By 2019, the Flink community had matured and added many important features, so the second version was built on Flink + Canal. This solution was still not perfect, however, and after internal research and practice, we fully switched to Flink CDC in early 2022.

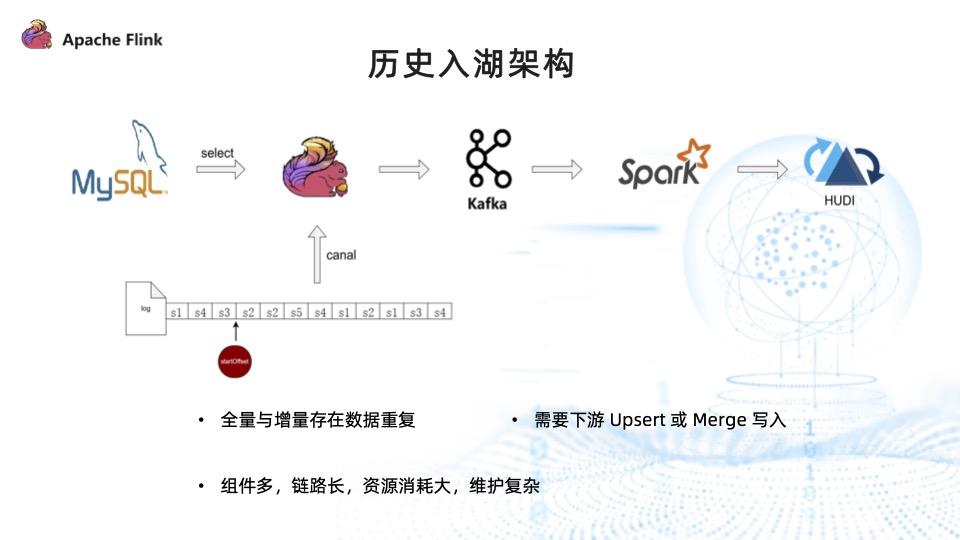

The figure above shows the Flink + Canal architecture for real-time data ingestion into the data lake.

After Flink starts, it first reads the current Binlog position and marks it as StartOffset, then collects the full data with SELECT and sends it downstream to Kafka. After the full collection completes, it collects the incremental logs starting from StartOffset and sends them to Kafka. Finally, Spark consumes the data from Kafka and writes it to Hudi.

However, this architecture has three problems:

- Full and incremental data overlap: because the table is not locked during collection, a row that changes during the full collection and is then picked up by the SELECT also appears in the Binlog, so the data is duplicated;

- Downstream writes must use Upsert or Merge to eliminate the duplicates and ensure eventual consistency of the data;

- Two computing engines are required, plus the Kafka message queue, to write data into the Hudi data lake. The pipeline involves many components and a long link, and consumes a lot of resources.
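The first two problems can be illustrated with a toy Python model. It is a sketch, not SF's code: the dict-based table, the `(position, key, row)` Binlog tuples, and the function names are all hypothetical. A row updated during the full collection is emitted once by the SELECT and again by the Binlog replay that starts at StartOffset, and only a last-write-wins upsert downstream collapses the duplicate.

```python
# Toy model of the Flink + Canal flow: full SELECT first, then Binlog replay from StartOffset.
def collect_full_then_incremental(table_at_select, binlog, start_offset):
    """table_at_select: primary key -> row as seen by SELECT (no table lock).
    binlog: list of (position, key, row) change events."""
    events = [("snapshot", key, row) for key, row in sorted(table_at_select.items())]
    # Replay every change from StartOffset on, including those the SELECT already saw
    events += [("binlog", key, row) for pos, key, row in binlog if pos >= start_offset]
    return events


def upsert(events):
    """Downstream Upsert/Merge: the last write per primary key wins."""
    result = {}
    for _source, key, row in events:
        result[key] = row
    return result


# Row 2 was updated at position 101, during the full collection but before the
# SELECT reached it; position 99 predates StartOffset = 100 and is not replayed.
events = collect_full_then_incremental(
    {1: "a", 2: "b2", 3: "c"}, [(99, 1, "a"), (101, 2, "b2")], start_offset=100
)
print([e for e in events if e[1] == 2])  # [('snapshot', 2, 'b2'), ('binlog', 2, 'b2')]
print(upsert(events))  # {1: 'a', 2: 'b2', 3: 'c'}
```

The duplicate is harmless only because the sink upserts by primary key, which is why the architecture depends on Upsert or Merge writes downstream.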

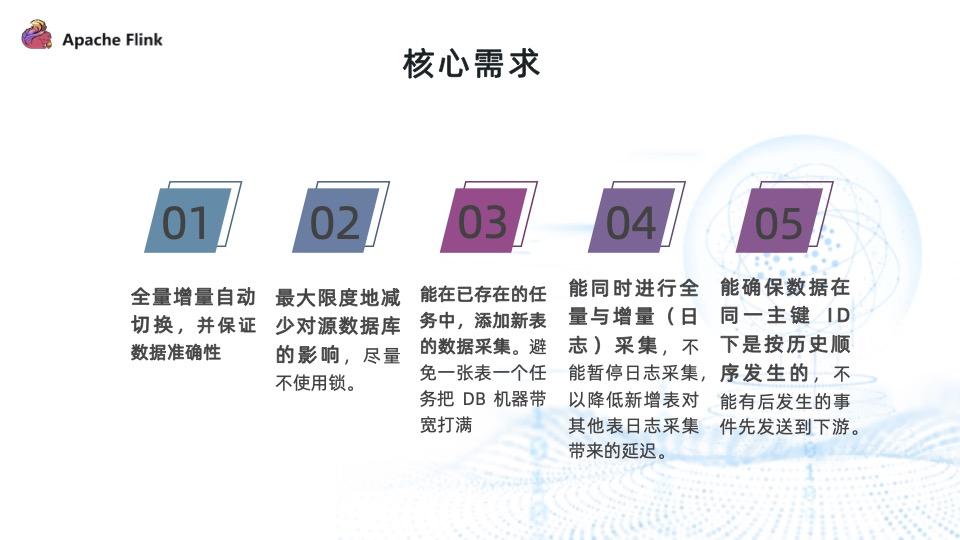

Based on these problems, we summarized the core requirements for data integration:

- Automatic switching from full to incremental collection with guaranteed data accuracy. The Flink + Canal architecture can switch automatically, but it cannot guarantee accuracy;

- Minimal impact on the source database, for example by avoiding locks and supporting flow control during synchronization;

- The ability to add new tables to an existing task. This is a core requirement: in a complex production environment, waiting until all tables are ready before starting data integration is inefficient. In addition, if tasks cannot be merged, many tasks must be started and the Binlog must be collected many times, which may saturate the network bandwidth of the DB machine;

- Full and incremental collection running at the same time. Pausing Binlog collection while a new table is being snapshotted, just to guarantee that table's accuracy, would delay log collection for all the other tables;

- Events with the same primary key must be emitted in historical order: an event that occurred later must never reach downstream before an earlier one.

Flink CDC addresses these business pain points well and excels in scalability, stability, and community activity:

- First, it integrates seamlessly with the Flink ecosystem, reusing Flink's many sinks as well as its data cleaning and transformation capabilities;

- Second, it switches automatically from full to incremental collection while guaranteeing data accuracy;

- Third, it supports lock-free reading, resuming from breakpoints, and horizontal scaling. With horizontal scaling in particular, given enough resources, the performance bottleneck is in theory generally not on the CDC side but in whether the source and target systems can read and write that much data.

2. Flink CDC practical problems and optimization

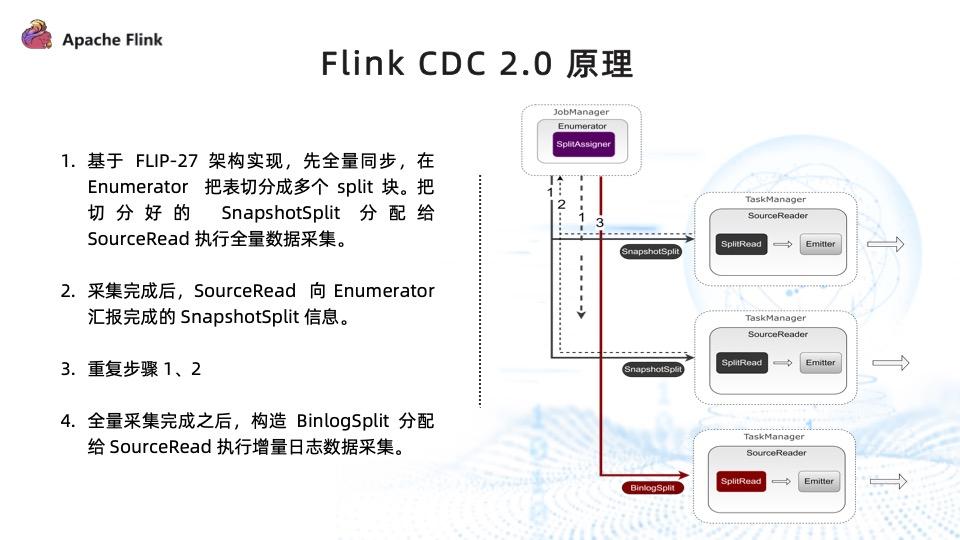

The figure above shows the architecture of Flink CDC 2.0. It is implemented on FLIP-27, and its core steps are as follows:

- The Enumerator first splits the full data into multiple SnapshotSplits and then, as in step (1) of the figure, sends them to SourceReaders for execution. During execution, the data is corrected to ensure consistency;

- After a SnapshotSplit has been read, the SourceReader reports the information of the completed split to the Enumerator;

- Steps (1) and (2) are repeated until all the full data has been read;

- After all the full data has been read, the Enumerator constructs a BinlogSplit from the information of the previously completed splits and sends it to a SourceReader, which reads the incremental log data.
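The four steps can be sketched as a single-process Python simulation. The class and field names mirror the terms in the text but are simplified stand-ins, not Flink CDC's actual Java API. One detail is an assumption based on our reading of the open-source connector rather than on this talk: the BinlogSplit starts from the smallest high watermark among the finished splits and carries the finished-split information with it.

```python
from dataclasses import dataclass, field


@dataclass
class SnapshotSplit:
    table: str
    start_key: int  # inclusive
    end_key: int    # exclusive


@dataclass
class FinishedSplitInfo:
    split: SnapshotSplit
    high_watermark: int  # Binlog position read right after the split's SELECT


@dataclass
class BinlogSplit:
    start_offset: int
    finished_splits: list = field(default_factory=list)


class Enumerator:
    def __init__(self, key_ranges, chunk_size):
        # Step (1): cut each table's primary-key range into SnapshotSplits
        self.pending = [
            SnapshotSplit(table, start, min(start + chunk_size, hi + 1))
            for table, (lo, hi) in key_ranges.items()
            for start in range(lo, hi + 1, chunk_size)
        ]
        self.finished = []

    def next_split(self):
        return self.pending.pop(0) if self.pending else None

    def report_finished(self, info):
        # Step (2): a SourceReader reports a completed split
        self.finished.append(info)

    def build_binlog_split(self):
        # Step (4), assumed: start at the smallest high watermark so that no change
        # after any split's SELECT is missed; the finished-split info travels along.
        start = min(info.high_watermark for info in self.finished)
        return BinlogSplit(start, list(self.finished))


def run(enumerator, read_split):
    # Step (3): keep assigning SnapshotSplits until none are left
    while (split := enumerator.next_split()) is not None:
        enumerator.report_finished(read_split(split))
    return enumerator.build_binlog_split()


# Fake SourceReader: each SELECT finishes at a later Binlog position.
positions = iter(range(100, 1000, 10))
binlog_split = run(
    Enumerator({"orders": (1, 250)}, chunk_size=100),
    lambda split: FinishedSplitInfo(split, next(positions)),
)
print(binlog_split.start_offset)  # 100
```

Note that the Binlog is only read after the loop ends, which is the behavior Problem 1 is about.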

Problem 1: Adding a table stops the Binlog stream

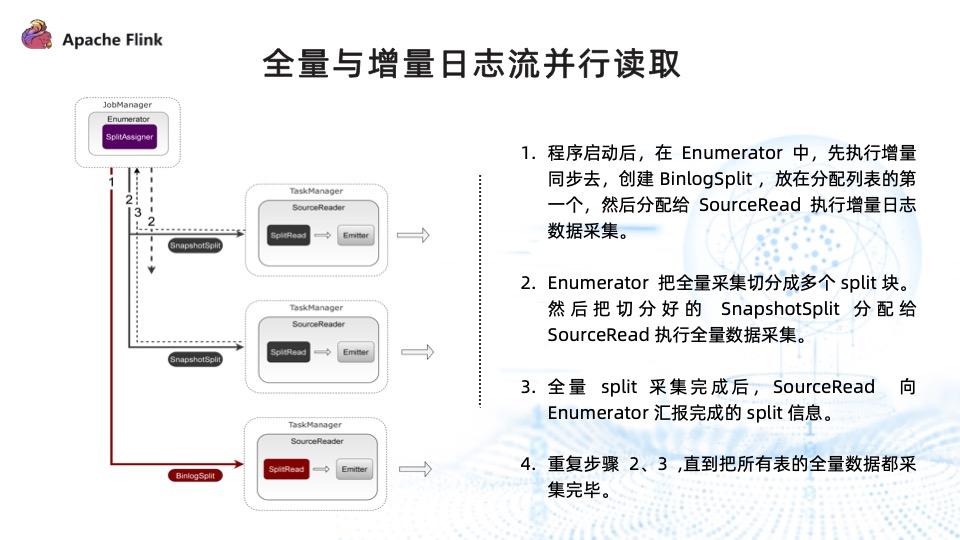

Adding new tables to existing tasks is a very important requirement, and Flink CDC 2.0 supports it. However, to ensure data consistency, Flink CDC 2.0 must stop reading the Binlog stream while a table is being added, read the full data of the new table, and only then restart the stopped Binlog task. This means that adding a new table delays log collection for all the other tables. We wanted full and incremental collection to run at the same time, so we extended Flink CDC to read the full data and the incremental log stream in parallel. The steps are as follows:

- After the program starts, the Enumerator creates a BinlogSplit, puts it at the head of the assignment list, and assigns it to a SourceReader for incremental data collection;

- As in the original full collection, the Enumerator divides the full data into multiple splits and assigns them to SourceReaders for full data collection;

- After a split has been fully collected, the SourceReader reports the completed split information to the Enumerator;

- Steps (2) and (3) are repeated until all tables have been collected.
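A minimal Python sketch of the extended assignment order, assuming a simple in-memory queue; `ParallelEnumerator` and its methods are hypothetical names, not SF's implementation. The only change from the previous flow is that the BinlogSplit sits at the head of the queue, so incremental collection starts before any snapshot chunk is handed out.

```python
from collections import deque


class ParallelEnumerator:
    """Hypothetical sketch of the extended enumerator: BinlogSplit first, then chunks."""

    def __init__(self, key_ranges, chunk_size):
        # Step (1): the BinlogSplit goes to the head of the assignment list
        self.queue = deque([("binlog", None, None)])
        # Step (2): the full data is still cut into per-table chunks
        for table, (lo, hi) in key_ranges.items():
            for start in range(lo, hi + 1, chunk_size):
                self.queue.append(("snapshot", table, (start, min(start + chunk_size, hi + 1))))
        self.remaining = {t: sum(1 for s in self.queue if s[1] == t) for t in key_ranges}

    def next_split(self):
        return self.queue.popleft() if self.queue else None

    def report_finished(self, table):
        # Step (3): returns True once every chunk of the table has been reported
        self.remaining[table] -= 1
        return self.remaining[table] == 0

    def all_tables_done(self):
        return all(n == 0 for n in self.remaining.values())


enumerator = ParallelEnumerator({"a": (1, 20), "b": (1, 10)}, chunk_size=10)
first = enumerator.next_split()  # the BinlogSplit, assigned before any chunk
finished_tables = []
while (split := enumerator.next_split()) is not None:  # step (4)
    if enumerator.report_finished(split[1]):
        finished_tables.append(split[1])
```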

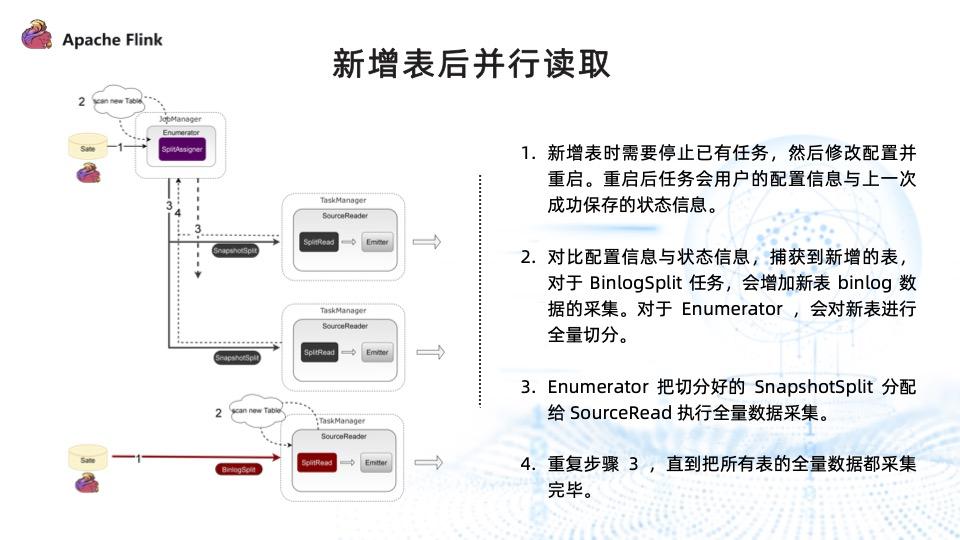

The steps above describe how a task started for the first time reads full and incremental data in parallel. When a table is added later, parallel reading works as follows:

- When the task is resumed, Flink CDC reads the user's configuration, which now includes the new table, and restores the previously captured tables from state;

- By comparing the user configuration with the information in state, it identifies the tables to be added. The BinlogSplit task starts collecting Binlog data for the new tables, and the Enumerator splits the new tables' full data;

- The Enumerator assigns the resulting SnapshotSplits to SourceReaders for full data collection;

- Step (3) is repeated until all the full data has been read.
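The comparison in step (2) amounts to a set difference between the configured tables and the tables recorded in state. The sketch below assumes a plain dict stands in for the restored Flink state; `on_restore`, `split_range`, and the `captured_tables` key are hypothetical.

```python
def split_range(table, lo, hi, chunk_size):
    return [(table, s, min(s + chunk_size, hi + 1)) for s in range(lo, hi + 1, chunk_size)]


def on_restore(configured_tables, state, key_ranges, chunk_size):
    """configured_tables: tables in the user's (possibly updated) job configuration.
    state: hypothetical restored state holding the tables already being captured."""
    # Step (2): tables in the configuration but not in state are the new ones
    new_tables = sorted(set(configured_tables) - set(state["captured_tables"]))
    # The BinlogSplit starts collecting changes for the new tables right away
    state["captured_tables"].extend(new_tables)
    # Meanwhile the Enumerator chunks only the new tables' full data for step (3)
    splits = [c for t in new_tables for c in split_range(t, *key_ranges[t], chunk_size)]
    return new_tables, splits


state = {"captured_tables": ["orders"]}
new_tables, splits = on_restore(["orders", "waybill"], state, {"waybill": (1, 15)}, 10)
```

Tables already in state are not re-chunked, so their Binlog collection simply continues.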

However, once full and incremental data are read in parallel, data conflicts appear.

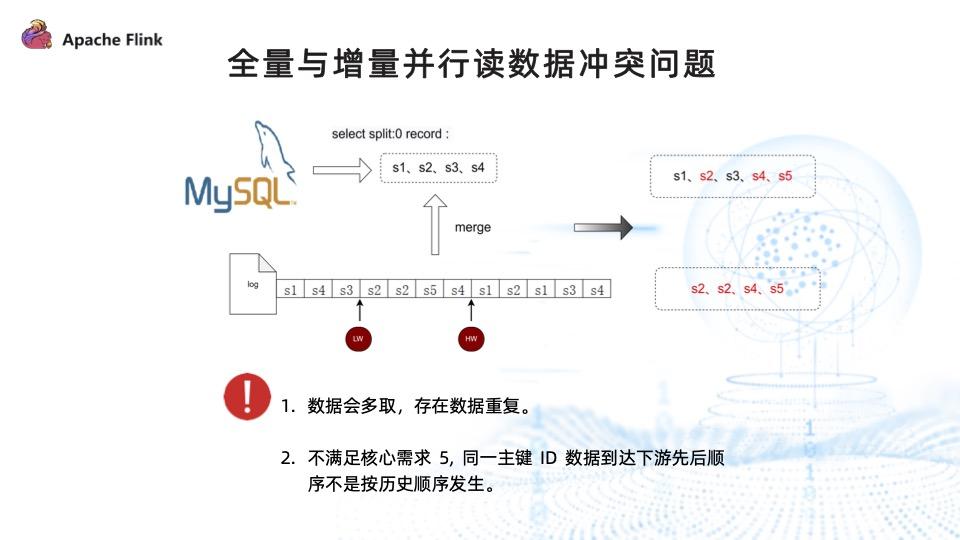

As the figure above shows, before Flink CDC reads the full data of a split, it first reads the current Binlog position and marks it as LW (low watermark), then reads the full data with SELECT, obtaining four records: s1, s2, s3, and s4. It then reads the current Binlog position again, marks it as HW (high watermark), and merges the changes between LW and HW into the data collected earlier. After these operations, the final collected data is s1, s2, s3, s4, and s5.
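A minimal sketch of this LW/HW correction step, assuming Binlog positions are plain integers and each change event is a `(position, key, op, row)` tuple. The window bounds (exclusive LW, inclusive HW) and the function name `merge_chunk` are assumptions for illustration.

```python
def merge_chunk(snapshot_rows, binlog, low_wm, high_wm, key_range):
    """Correct a chunk's SELECT result with the Binlog changes made between LW and HW."""
    lo, hi = key_range
    rows = dict(snapshot_rows)
    for pos, key, op, row in binlog:
        if not (low_wm < pos <= high_wm and lo <= key < hi):
            continue  # outside the LW..HW window or outside this chunk
        if op == "delete":
            rows.pop(key, None)
        else:  # insert or update: keep the latest row image
            rows[key] = row
    return rows


# s2 is updated and s5 inserted between LW = 10 and HW = 15; the events at
# positions 9 and 20 fall outside the window and are ignored.
merged = merge_chunk(
    {1: "s1", 2: "s2", 3: "s3", 4: "s4"},
    [(9, 1, "update", "old"), (11, 2, "update", "s2-new"),
     (12, 5, "insert", "s5"), (20, 3, "delete", None)],
    low_wm=10, high_wm=15, key_range=(1, 100),
)
```

The merged result corresponds to the five records s1 to s5 in the figure, with s2 carrying its newer value.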

Meanwhile, the incremental collection reads the same Binlog and sends the four change records between LW and HW (s2, s2, s4, and s5) downstream as well.

This process has two problems. First, too much data is fetched, which produces duplicates (marked in red in the figure above). Second, the full and incremental reads run in different threads, possibly even in different JVMs, so the data that reaches downstream first may be either full or incremental data. Records with the same primary key may therefore arrive out of historical order, which violates the core requirements.

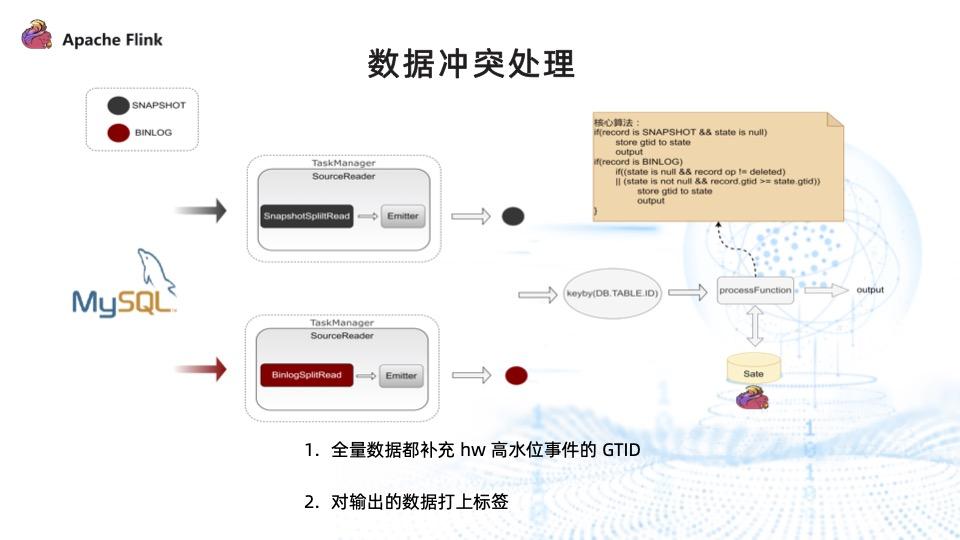

To resolve the data conflicts, we implemented a GTID-based solution.

First, full data is tagged Snapshot and incremental data is tagged Binlog. Second, each full record is supplemented with the high-watermark GTID; incremental records already carry their own GTID and need no supplement. When data is emitted, it passes through a KeyBy operator followed by a data conflict processing operator. The core of conflict processing is to ensure that the data sent downstream is neither duplicated nor out of historical order.

If a full record arrives and no Binlog data has been sent for its key before, its GTID is stored in state and the record is sent. If the state is not empty and the record's GTID is greater than or equal to the GTID in state, its GTID is likewise stored in state and the record is sent. Otherwise, the record is older than what has already been sent and is discarded.
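The two rules can be sketched as a per-key operator in Python. This is a simplified model, not SF's operator: the dict stands in for Flink keyed state, and GTIDs are reduced to integer transaction numbers from a single source (real MySQL GTIDs have the form `source_uuid:transaction_id`). As a further simplification, the "state is empty" rule is applied to Binlog records too.

```python
class ConflictResolver:
    """Stands in for the keyed conflict-processing operator placed after KeyBy."""

    def __init__(self):
        self.last_gtid = {}  # primary key -> GTID of the last record sent downstream

    def process(self, tag, key, gtid, row):
        """tag: "Snapshot" (gtid = the split's high-watermark GTID) or "Binlog"."""
        stored = self.last_gtid.get(key)
        if stored is None or gtid >= stored:
            self.last_gtid[key] = gtid
            return (tag, key, row)  # send downstream
        return None  # older than what was already sent: drop


# Snapshot of key 2 was taken at high-watermark GTID 105; the Binlog holds 103 and 107.
resolver = ConflictResolver()
out = [resolver.process(*r) for r in [
    ("Snapshot", 2, 105, "v105"),
    ("Binlog", 2, 103, "v103"),  # already reflected in the snapshot
    ("Binlog", 2, 107, "v107"),
]]
```

If the record with GTID 107 happens to arrive before the snapshot record, the snapshot record is dropped instead, so either arrival order yields a duplicate-free, in-order stream for the key.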

In this way the data conflict problem is resolved: the data finally emitted downstream contains no duplicates and follows historical order.

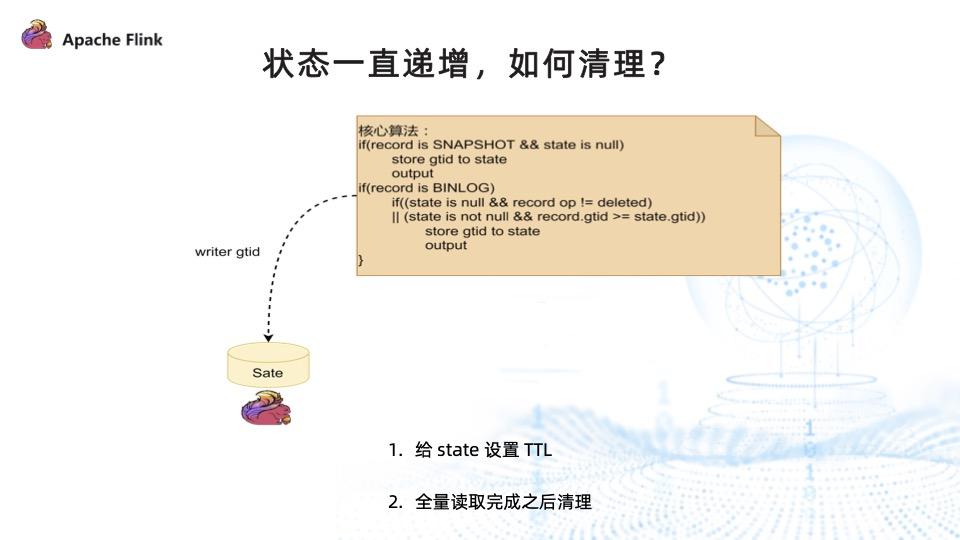

However, a new problem arose. As the algorithm shows, to guarantee no duplicates and historical order, the GTID of every record is stored in state, so the state keeps growing.

TTL is usually the first choice for cleaning up state, but its timing is hard to control and it cannot reliably clean up all the data. The second option is manual cleanup: once a table's full collection is complete, a special record can be emitted to tell downstream operators to clean up that table's data in state.

With all these problems solved, the final parallel-reading solution is shown in the figure below.

First, data is tagged with one of four labels, each representing a different state:

- SNAPSHOT: data collected during the full collection.

- STATE\_BINLOG: change data for a table captured from the Binlog while that table's full collection has not yet finished.

- BINLOG: change data for a table captured from the Binlog after that table's full collection has finished.

- TABLE\_FINISHED: a record notifying downstream that a table's full collection has finished and its state can be cleaned up.

The specific implementation steps are as follows:

- Assign the BinlogSplit; at this point, all data collected from the Binlog carries the STATE\_BINLOG label;

- Assign the SnapshotSplit tasks; all data they collect carries the SNAPSHOT label;

- The Enumerator monitors the status of each table in real time. Once a table's full collection has finished and a checkpoint has completed, it notifies the Binlog task. After receiving the notification, the Binlog task labels that table's Binlog data as BINLOG. It also constructs a TABLE\_FINISHED record and sends it downstream;

- After collection, besides the data conflict processing operator, one more step is added: TABLE\_FINISHED records are filtered out of the main stream and broadcast downstream, and each downstream instance uses them to clean up the state of the corresponding table.
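The label-driven flow can be sketched in Python by extending the GTID rule with a broadcast cleanup. Everything here is a hypothetical model: the list of `ConflictOperator` objects stands in for the parallel instances after KeyBy, `route` plays the role of KeyBy plus broadcast, and one behavior is our assumption rather than something the talk states: BINLOG records bypass state, since their table's snapshot is finished and nothing can conflict with them.

```python
SNAPSHOT, STATE_BINLOG, BINLOG, TABLE_FINISHED = "SNAPSHOT", "STATE_BINLOG", "BINLOG", "TABLE_FINISHED"


class ConflictOperator:
    """One parallel instance of a hypothetical conflict-processing operator after KeyBy."""

    def __init__(self):
        self.state = {}  # (table, primary key) -> GTID of the last emitted record

    def process(self, label, table, key, gtid, row):
        if label == BINLOG:
            # Assumption: the table's snapshot is done, so nothing can conflict
            return (label, table, key, row)
        slot = (table, key)
        stored = self.state.get(slot)
        if stored is None or gtid >= stored:
            self.state[slot] = gtid
            return (label, table, key, row)
        return None  # older than what was already sent

    def on_table_finished(self, table):
        # Drop every state entry that belongs to the finished table
        for slot in [s for s in self.state if s[0] == table]:
            del self.state[slot]


def route(instances, record):
    label, table, key = record[0], record[1], record[2]
    if label == TABLE_FINISHED:
        for op in instances:  # broadcast: every parallel instance cleans up
            op.on_table_finished(table)
        return None
    # KeyBy on the primary key (int keys here, so the built-in hash is stable)
    return instances[hash(key) % len(instances)].process(*record)


ops = [ConflictOperator(), ConflictOperator()]
route(ops, (SNAPSHOT, "t", 1, 105, "a"))
route(ops, (STATE_BINLOG, "t", 2, 106, "b"))
route(ops, (TABLE_FINISHED, "t", None, None, None))  # both instances drop table "t"
```

Broadcasting matters because the keys of one table are spread across all parallel instances, so a TABLE\_FINISHED record routed by key would clean up only one of them.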

Problem 2: Data skew when writing to Hudi

As shown in the figure above, when Flink CDC collects data from three tables, it reads all of tableA's full data first and then all of tableB's. While tableA is being read, only tableA's sink downstream receives any data.

We solved the data skew problem with multi-table mixed reading.

Before multi-table mixed reading, Flink CDC read all chunks of tableA and then all chunks of tableB. With multi-table mixed reading, the order becomes chunk1 of tableA, chunk1 of tableB, chunk1 of tableC, then chunk2 of tableA, and so on. This resolves the downstream sink data skew by ensuring that every sink has data flowing in.
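The two read orders can be contrasted with a short Python sketch that interleaves chunk lists round-robin. The chunk labels are illustrative; the sketch models only the ordering, not how SF's enumerator assigns chunks.

```python
from itertools import chain, zip_longest

_MISSING = object()  # filler for tables that have run out of chunks


def sequential_read_order(chunks_by_table):
    """Original order: every chunk of tableA, then every chunk of tableB, ..."""
    return [c for chunks in chunks_by_table.values() for c in chunks]


def mixed_read_order(chunks_by_table):
    """Mixed order: chunk1 of every table, then chunk2 of every table, ..."""
    rounds = zip_longest(*chunks_by_table.values(), fillvalue=_MISSING)
    return [c for c in chain.from_iterable(rounds) if c is not _MISSING]


chunks = {"tableA": ["A1", "A2", "A3"], "tableB": ["B1", "B2"], "tableC": ["C1"]}
print(sequential_read_order(chunks))  # ['A1', 'A2', 'A3', 'B1', 'B2', 'C1']
print(mixed_read_order(chunks))       # ['A1', 'B1', 'C1', 'A2', 'B2', 'A3']
```

With the mixed order, each table's sink receives its first chunk within the first round instead of waiting for all earlier tables to finish.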

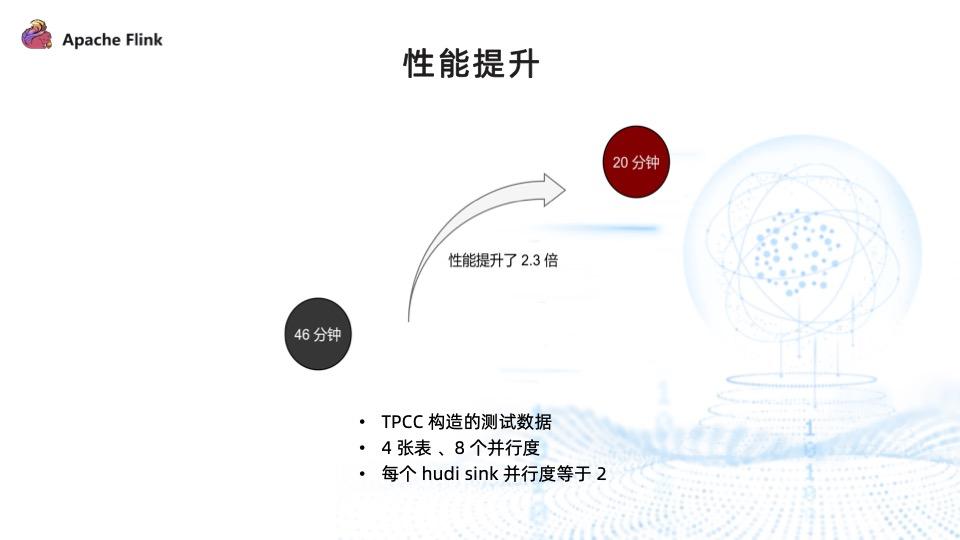

We tested the performance of multi-table mixed reading on test data generated with the TPC-C tool, reading 4 tables with a total parallelism of 8 and a parallelism of 2 for each sink. The write time dropped from 46 minutes to 20 minutes, a 2.3x improvement.

Note that if the sink parallelism equals the total parallelism, performance will not improve significantly. The main benefit of multi-table mixed reading is that data from every table starts flowing downstream sooner.

Problem 3: Users must specify schema information manually

If users manually map the DB schema to the sink schema, development is inefficient, time-consuming, and error-prone.

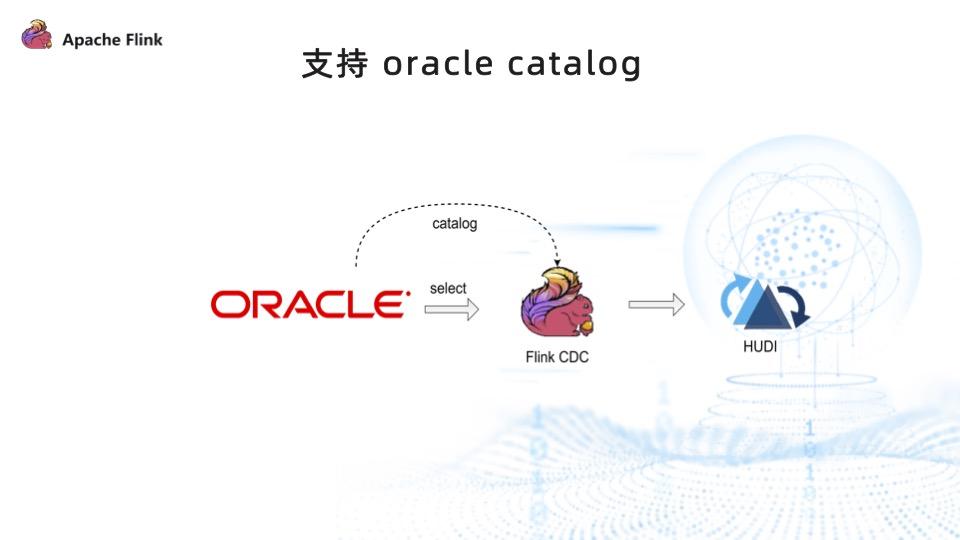

To lower the barrier to entry for users and improve development efficiency, we implemented an Oracle catalog, which lets users write data to Hudi through Flink CDC in a low-code way, without specifying the mapping between the DB schema and the sink schema.
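To show the kind of work a catalog automates, here is a hypothetical Python sketch that turns Oracle column metadata into a Flink SQL `CREATE TABLE` statement. The type mapping is an illustrative subset chosen for this example, not SF's catalog and not the full rule set of any Flink connector, and the connector `WITH (...)` options for Hudi are omitted because they depend on the deployment.

```python
import re


def oracle_to_flink_type(oracle_type):
    """Illustrative subset of an Oracle -> Flink SQL type mapping."""
    t = oracle_type.upper().strip()
    if m := re.fullmatch(r"NUMBER\((\d+),\s*(\d+)\)", t):
        return f"DECIMAL({m.group(1)}, {m.group(2)})"
    if m := re.fullmatch(r"NUMBER\((\d+)\)", t):
        return f"DECIMAL({m.group(1)}, 0)"
    if re.fullmatch(r"N?VARCHAR2\(\d+\)|N?CHAR\(\d+\)|N?CLOB", t):
        return "STRING"
    if t == "DATE":
        return "TIMESTAMP(0)"  # Oracle DATE also stores a time of day
    if m := re.fullmatch(r"TIMESTAMP\((\d)\)", t):
        return f"TIMESTAMP({m.group(1)})"
    if t == "BINARY_DOUBLE":
        return "DOUBLE"
    if t == "BLOB":
        return "BYTES"
    raise ValueError(f"unmapped Oracle type: {oracle_type}")


def build_flink_ddl(table, columns, primary_key):
    """columns: list of (name, oracle_type), as a catalog might read from Oracle metadata."""
    cols = [f"  `{name}` {oracle_to_flink_type(otype)}" for name, otype in columns]
    cols.append(f"  PRIMARY KEY (`{primary_key}`) NOT ENFORCED")
    return f"CREATE TABLE `{table}` (\n" + ",\n".join(cols) + "\n)"


print(build_flink_ddl("WAYBILL", [("ID", "NUMBER(19)"), ("CITY", "VARCHAR2(32)")], "ID"))
```

Raising an error on unmapped types, rather than silently falling back to STRING, is a design choice that surfaces schema surprises early.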

3. Future plans

First, support synchronizing schema changes: for example, when the schema of a data source changes, the change can be synchronized to Kafka and Hudi. We also plan to connect the platform to more types of data sources, improve stability, and bring it to more application scenarios.

Second, support a SQL-based way of using Flink CDC to synchronize data to Hudi, lowering the barrier for users.

Third, we hope to make the technology more open, grow together with the community, and contribute back to it.

Q&A

Q: How is resuming from a breakpoint handled?

A: There are two kinds of breakpoint resume: one for the full phase and one for the Binlog phase. Both rely on Flink state: progress is stored in state during synchronization, and if the job fails, it can be restored from that state on the next run.

Q: When monitoring multiple MySQL tables and writing them to Hudi tables with SQL, there are multiple jobs, which are troublesome to maintain. How can the entire database be synchronized with a single job?

A: We extended Flink CDC based on GTID to support adding new tables to a running task without affecting the collection progress of other tables. If affecting the other tables' progress is acceptable, new tables can also be added using the built-in capability of Flink CDC 2.2.

Q: Will these SF Express features be contributed to the open-source version of Flink CDC?

A: Our solution currently has some limitations: for example, it requires MySQL GTID and requires downstream operators to handle data conflicts. This makes it difficult to contribute to the open-source community.

Q: Do newly added tables in Flink CDC 2.0 support full + incremental collection?

A: Yes.

Q: Will the GTID deduplication operator become a performance bottleneck?

A: In our practice, it has not been a bottleneck; it only performs some simple checks and filtering.


Copyright statement: The content of this article is contributed by Alibaba Cloud's real-name registered users. The copyright belongs to the original author. The Alibaba Cloud developer community does not own the copyright and does not assume the corresponding legal responsibility. For specific rules, please refer to the "Alibaba Cloud Developer Community User Service Agreement" and "Alibaba Cloud Developer Community Intellectual Property Protection Guidelines". If you find any content suspected of plagiarism in this community, fill out the infringement complaint form to report it. Once verified, this community will delete the allegedly infringing content immediately.
