Summary

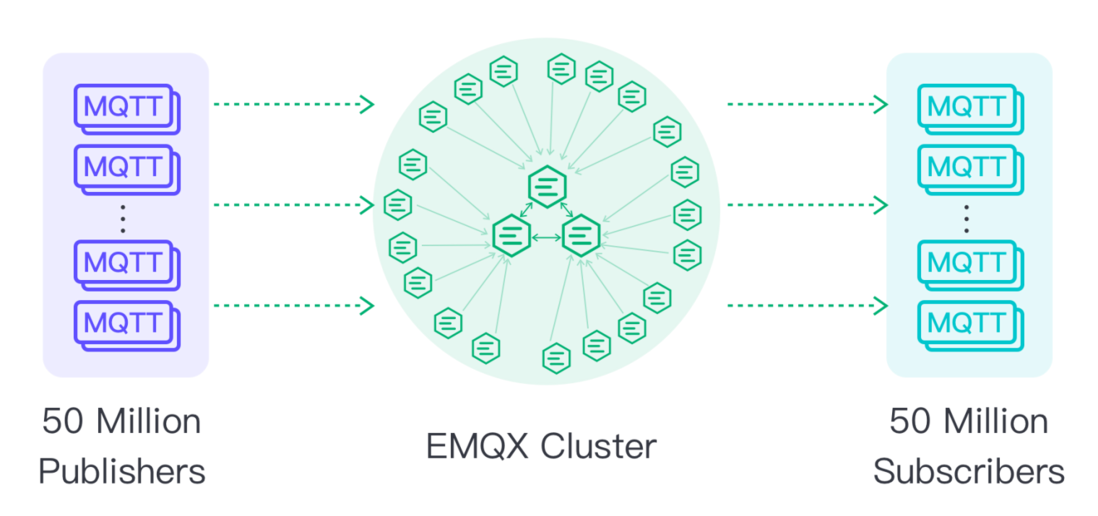

As IoT deployments keep growing in the number of connected devices, they place higher demands on the scalability and robustness of IoT messaging platforms. To confirm that EMQX, a cloud-native distributed MQTT message server, can meet the needs of today's IoT connection scale, we established 100 million MQTT connections on a 23-node EMQX cluster to stress-test its scalability.

In this test, each MQTT client subscribed to a unique wildcard topic, which requires more CPU resources than a direct topic. For publishing, we chose a one-to-one publisher-subscriber topology, and the cluster handled up to 1 million messages per second. In addition, we compared how the maximum subscription rate changes with increasing cluster size when using two different database backends: RLOG DB and Mnesia. This article describes the tests in detail, along with some of the challenges we faced along the way.

Background

EMQX is a highly scalable, distributed, open source MQTT message server built on the Erlang/OTP platform, and it can support millions of concurrent clients. As a distributed system, EMQX needs to persist and replicate various data between cluster nodes, such as MQTT topics and their subscribers, routing information, ACL rules, various configurations, and more. To meet these needs, EMQX has used Mnesia as its database backend since its first release.

Mnesia is an embedded, ACID, distributed database that ships with Erlang/OTP. It uses a full-mesh, point-to-point Erlang distribution for transaction coordination and replication. This design makes it hard to scale horizontally: the more nodes there are, the more data is replicated, the greater the overhead of coordinating writes, and the greater the risk of a split-brain scenario.

In EMQX 5.0, we try to alleviate this problem with a new database backend type, RLOG (Replication Log), which is implemented by Mria. As an extension of the Mnesia database, Mria defines two types of nodes to help it scale horizontally. The first is the core node, which behaves like a normal Mnesia node and participates in write transactions. The second is the replicant node, which does not participate in transaction processing: it delegates transactions to the core nodes while keeping a read-only copy of the data locally. Because fewer nodes are involved in transactions, the risk of split-brain is much lower and less coordination is required. At the same time, since every node can read data locally, read-only access stays fast.

Before making this new database backend the default, we needed to stress-test it and verify that it really does scale well horizontally. We set up a 23-node EMQX cluster that maintained 100 million concurrent connections, split equally between publishers and subscribers, with messages published on a one-to-one basis. We also compared the RLOG DB backend with the traditional Mnesia backend and confirmed that RLOG can indeed sustain a higher client arrival rate than Mnesia.
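The division of labor between the two node types can be illustrated with a toy model. This is only a sketch of the idea described above, not Mria's implementation: the class names, the `write`/`read`/`apply` methods, and the round-robin assignment of replicants to cores are all assumptions made for illustration, and real transaction coordination is far more involved.

```python
class CoreNode:
    """Toy core node: holds a writable copy and coordinates writes with other cores."""

    def __init__(self, name):
        self.name = name
        self.table = {}
        self.cores = [self]    # all core nodes in the cluster, including this one
        self.replicants = []   # replicants that follow this core (hypothetical wiring)

    def write(self, key, value):
        # Only core nodes take part in the write, so coordination cost depends
        # on the number of cores, not on the total cluster size.
        for core in self.cores:
            core.table[key] = value
            # Each core pushes the committed change to its replicants.
            for replicant in core.replicants:
                replicant.apply(key, value)
        return len(self.cores)  # how many nodes coordinated this write

    def read(self, key):
        return self.table.get(key)


class ReplicantNode:
    """Toy replicant: read-only local copy, writes are delegated to a core."""

    def __init__(self, name, upstream):
        self.name = name
        self.table = {}
        self.upstream = upstream
        upstream.replicants.append(self)

    def apply(self, key, value):
        # Called by the upstream core when a change has been committed.
        self.table[key] = value

    def write(self, key, value):
        # Replicants never coordinate transactions themselves.
        return self.upstream.write(key, value)

    def read(self, key):
        # Reads are always served from the local copy.
        return self.table.get(key)


def build_cluster(core_count, replicant_count):
    cores = [CoreNode(f"core{i}") for i in range(core_count)]
    for core in cores:
        core.cores = cores
    replicants = [
        ReplicantNode(f"replicant{i}", cores[i % core_count])
        for i in range(replicant_count)
    ]
    return cores, replicants


cores, replicants = build_cluster(3, 20)
coordinators = replicants[5].write("bench/5/#", "client-5")
print(coordinators)                      # 3: only the cores coordinated the write
print(replicants[12].read("bench/5/#"))  # client-5, read from the local copy
```

In this model, adding replicants increases the number of local read copies without increasing the number of nodes that coordinate each write.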

Testing method

We used AWS CDK to deploy and run the cluster tests, which let us try different instance types and counts as well as different development branches of EMQX. Interested readers can check out our scripts in this GitHub repository. On the load generator nodes ("loadgens" for short), we used our emqtt-bench tool to generate connect/pub/sub traffic with various options, and we used the EMQX Dashboard and Prometheus to monitor test progress and instance health.

We ran a series of tests with different instance types and counts. In the last few tests, we settled on c6g.metal instances for both the EMQX nodes and the loadgens, and a "3+20" topology for the cluster: 3 core nodes that participate in write transactions, and 20 replicant nodes that hold read-only replicas and delegate writes to the core nodes. As for the loadgens, we observed that publisher clients require far more resources than subscribers. Connecting and subscribing 100 million clients takes only 13 loadgen instances; if the clients also need to publish, 17 are required.

No load balancer was used in these tests; the loadgens connected directly to the nodes. To keep the core nodes dedicated to managing database transactions, we did not open any client connections to them. Each loadgen client connected directly to one of the replicant nodes, and clients were distributed evenly, so the number of connections and the resource usage were roughly the same on all of those nodes. Each subscriber subscribed with QoS 1 to a wildcard topic of the form bench/%i/#, where %i is the subscriber's unique number. Each publisher published with QoS 1 to a topic of the form bench/%i/test, where %i matches the number of its subscriber. This ensures that each publisher has exactly one subscriber. The message payload was always 256 bytes.

In our test, we connected all subscriber clients first and only then started connecting the publishers. Only after all publishers were connected did they start publishing, each sending one message every 90 seconds. In the 100 million connection test reported in this article, subscribers and publishers connected to the broker at a rate of 16,000 connections/sec, although we believe the cluster can sustain higher connection rates.
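To see why this topic scheme yields exactly one subscriber per publisher, here is a minimal sketch of MQTT topic-filter matching. The helper names `topic_matches` and `matching_subscribers` are hypothetical, the client numbering is illustrative, and the matcher is simplified (for example, it ignores the special handling of topics that start with `$`); it is not how EMQX implements routing.

```python
def topic_matches(topic_filter, topic):
    """Return True if an MQTT topic name matches a filter using + and # wildcards.

    Simplified: does not special-case topics beginning with '$'.
    """
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            # Multi-level wildcard: matches the parent and any deeper levels.
            return True
        if i >= len(topic_levels):
            return False
        if level != "+" and level != topic_levels[i]:
            return False
    return len(filter_levels) == len(topic_levels)


def matching_subscribers(publisher_id, subscriber_count):
    """List the subscriber numbers whose filter bench/<i>/# matches the publisher's topic."""
    topic = f"bench/{publisher_id}/test"
    return [
        i for i in range(subscriber_count)
        if topic_matches(f"bench/{i}/#", topic)
    ]


print(matching_subscribers(42, 1000))  # [42]
```

Because the client number is a whole topic level, `bench/4/#` does not match `bench/42/test`, so publishers and subscribers pair up one to one.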
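For a sense of scale, the figures above allow a quick back-of-the-envelope estimate. The sketch below assumes the 16,000 connections/sec rate is constant and applies to the whole ramp-up, and that connections are spread perfectly evenly over the 20 nodes that accept clients; the results are derived estimates, not measurements from the test.

```python
TOTAL_CONNECTIONS = 100_000_000   # 50M subscribers + 50M publishers
CONNECT_RATE = 16_000             # connections per second (assumed constant)
CLIENT_FACING_NODES = 20          # replicant nodes accepting connections


def ramp_up_seconds(total_connections, rate_per_second):
    """Time needed to open all connections at a constant rate."""
    return total_connections / rate_per_second


def connections_per_node(total_connections, node_count):
    """Connections each node holds if the load is spread evenly."""
    return total_connections // node_count


seconds = ramp_up_seconds(TOTAL_CONNECTIONS, CONNECT_RATE)
per_node = connections_per_node(TOTAL_CONNECTIONS, CLIENT_FACING_NODES)

print(f"ramp-up: {seconds:.0f} s (~{seconds / 60:.0f} min)")  # ramp-up: 6250 s (~104 min)
print(f"connections per node: {per_node:,}")                   # connections per node: 5,000,000
```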

Challenges encountered in testing

While experimenting with connections and throughput at this scale, we ran into several challenges, and investigating them led us to fix a number of performance bottlenecks. system_monitor helped us a great deal in tracking memory and CPU usage of Erlang processes. It can be thought of as "htop for BEAM processes", and it let us find processes with long message queues or high memory and/or CPU usage. Based on what we observed during the cluster tests, we used it to guide some performance tuning in Mria [1][2][3].

In our initial tests with Mria, the replication mechanism, simply put, logged every transaction to a hidden table that the replicant nodes subscribed to. This created extra network overhead between the core nodes, since each transaction was essentially duplicated. In our fork of Erlang/OTP, we added a new Mnesia module that lets us capture all committed transaction logs more easily, without duplicating writes. This greatly reduced network usage and allowed the cluster to sustain higher connection and transaction rates. After these optimizations, we stress-tested the cluster further and discovered new bottlenecks that required additional performance tuning [4][5][6].

Even our own test tool needed some tweaking to handle such a large number of connections and such high connection rates. To this end, we made several quality improvements [7][8][9][10] and performance optimizations [11][12]. For our pub-sub test, we even used a dedicated branch (not merged into the current main branch) to reduce memory usage further.

Test Results

The animation above shows the final result of the one-to-one publish-subscribe test. We established 100 million connections, of which 50 million were subscribers and the other 50 million were publishers. With each publisher sending a message every 90 seconds, we saw average inbound and outbound rates of over 1 million messages per second. At the publishing peak, each of the 20 replicant nodes (the nodes that accept client connections) used on average 90% of its memory (~113 GiB) and ~97% of its CPU (64 arm64 cores). The 3 core nodes that process transactions used very little CPU (less than 1%) and only 28% of their memory (~36 GiB). Network traffic while publishing the 256-byte payloads was between 240 MB/s and 290 MB/s. At the publishing peak, the loadgens used almost all of their memory (~120 GiB) and all of their CPU.

Note: In this test, every paired publisher and subscriber happened to be connected to the same broker node, which is not a scenario that closely resembles real-world use. The EMQX team is currently running more tests and will continue to report on the progress.

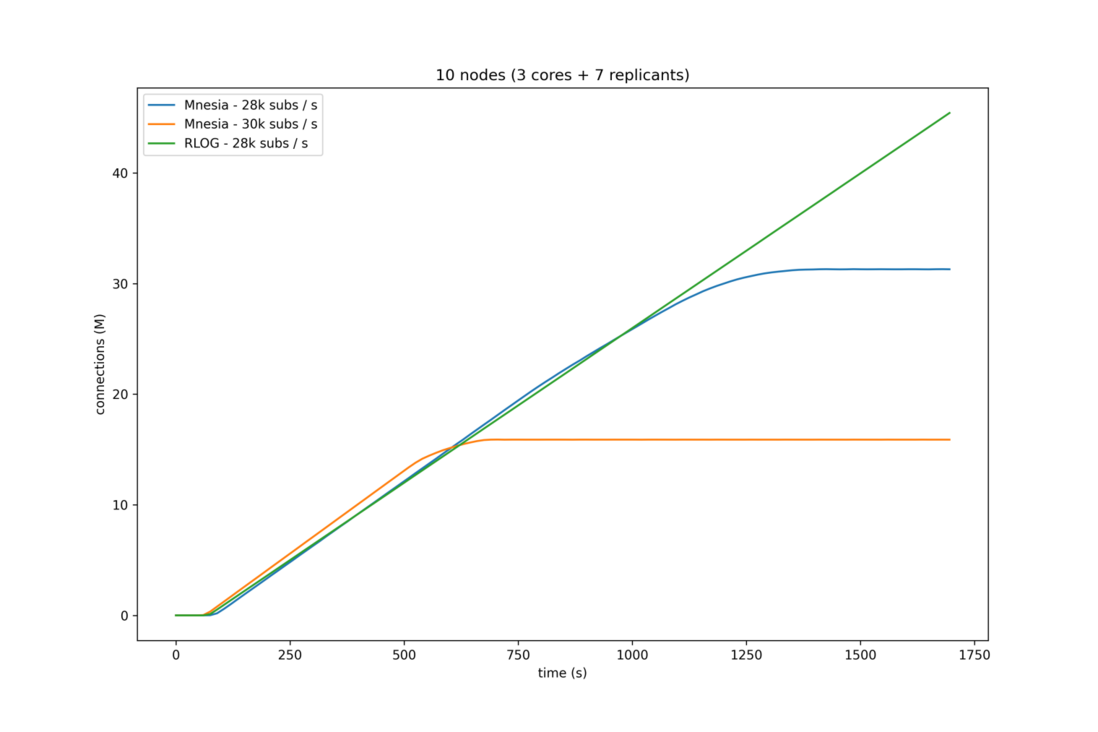

To compare the RLOG cluster with an equivalent Mnesia cluster, we used another topology with fewer total connections: the RLOG cluster used 3 core nodes + 7 replicant nodes, and the Mnesia cluster used 10 nodes, of which only 7 accepted connections. We connected and subscribed clients at different rates and did not publish.

The figure above shows our test results. For Mnesia, the faster clients connect and subscribe, the more "flattening" behavior we observe, where the cluster fails to reach the target maximum number of connections, which was 50 million in these tests. With RLOG, the cluster reaches higher connection rates without exhibiting this flattening behavior. From this we conclude that Mria with RLOG performs better at high connection rates than the Mnesia backend we used in the past.

Conclusion

After a series of tests and these satisfactory results, we believe that the RLOG database backend provided by Mria is ready for use in EMQX 5.0. It has become the default database backend in the current master branch.

References

[1] - fix(performance): Move message queues to off_heap by k32 · Pull Request #43 · emqx/mria

[3] - fix(mria_status): Remove mria_status process by k32 · Pull Request #48 · emqx/mria

[4] - Store transactions in replayq in normal mode by k32 · Pull Request #65 · emqx/mria

[5] - feat: Remove redundand data from the mnesia ops by k32 · Pull Request #67 · emqx/mria

[6] - feat: Batch transaction imports by k32 · Pull Request #70 · emqx/mria

[7] - feat: add new waiting options for publishing by thalesmg · Pull Request #160 · emqx/emqtt-bench

[8] - feat: add option to retry connections by thalesmg · Pull Request #161 · emqx/emqtt-bench

[9] - Add support for rate control for 1000+ conns/s by qzhuyan · Pull Request #167 · emqx/emqtt-bench

[10] - support multi target hosts by qzhuyan · Pull Request #168 · emqx/emqtt-bench

[11] - feat: bump max procs to 16M by qzhuyan · Pull Request #138 · emqx/emqtt-bench

[12] - feat: tune gc for publishing by thalesmg · Pull Request #164 · emqx/emqtt-bench

Copyright statement: This is an original article by EMQ; please credit the source when reprinting.

Original link: https://www.emqx.com/zh/blog/reaching-100m-mqtt-connections-with-emqx-5-0
