Project overview and development design

The business partner for this pilot is the food department, and the resulting project is "Explore Adventure": the user steers the IP character with the on-screen "joystick" on the left, walks to the food halls they are interested in, adjusts the viewing angle, and gets a virtual version of the offline shopping experience in a 3D venue. The first-person view of the digital character in the "Metaverse" virtual gourmet restaurant looks like this:

Readers who want to try it can scan the code directly with the APP (open the APP homepage, go to "Gourmet Restaurant", and tap the floating layer in the lower right corner):

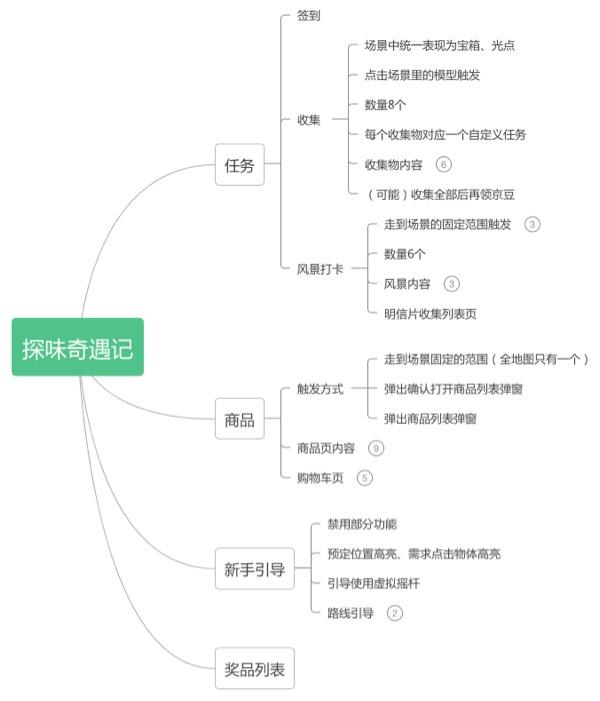

The two business metrics of “Taste of Adventures” are page dwell time and page revisit rate. After discussing the plan with the designers, we therefore extended the functionality along two dimensions, tasks and products, and added a reward list for task feedback and a novice tutorial. The overall development work is divided as follows:

The ultimate purpose of the 3D immersive experience is to achieve the business goals, so the shopping flow of the original 2D venue still has to be supported. A transitional scheme was therefore designed for the front-end architecture, splitting rendering into two parts: one handles 3D rendering and the other renders ordinary DOM nodes. The 3D rendering library is Babylon, and the actual front-end design is shown in the following figure:

Rendering implementation

- 3D scene rendering: the scene covers the street atmosphere, the themed food venues, and the IP character, all rendered inside an HTML page. The early demo already implemented basic 3D rendering; this project mainly optimized the following two points:

- Forcing the interface into landscape: compared with a portrait screen, whose field of view is under 90 degrees, a landscape screen better matches the human visual range (about 114 degrees). In the previous technical solution, the front end adapted to the phone's orientation and displayed in either landscape or portrait. Since the APP does not support landscape, the whole portrait content has to be rotated manually into a landscape display. After the adjustment, the interface is presented as follows whether the phone is held in landscape or portrait:

The main code involved is as follows:

    /* In portrait, the global container is as wide as the screen is tall and as tall as the screen is wide, rotated by 90deg */
    .wrapper {
      position: fixed;
      width: 100vh;
      height: 100vw;
      top: 0;
      left: 0;
      transform-origin: left top;
      transform: rotate(90deg) translateY(-100%);
      transform-style: preserve-3d;
    }

    /* No rotation in landscape */
    @media only screen and (orientation: landscape) {
      .wrapper {
        width: 100vw;
        height: 100vh;
        transform: none;
      }
    }

- Encapsulating a resource management center

3D pages need to load many files, and the files are not small, so the required resources are loaded and processed centrally by an encapsulated resource management center. It handles 3D models, texture images, textures, and other types, and deduplicates identical resource files.

Taking model loading as an example, the main code involved is as follows:

    async appendMeshAsync (tasks: Tasks, withLoading = true) {
      // Loading indicator handling
      ...
      const promiseList: Promise<MeshAssetTask>[] = []
      // Filter out models with the same name
      const uniqTasks = _.uniqWith(tasks, (a, b) => a.name === b.name)
      for (const item of uniqTasks) {
        const { name, rootUrl, fileName, modelRoot = '' } = item
        // Skip models that are already loaded
        if (this.modelAssets.has(name)) {
          console.log(`Model ${name} has already been loaded`)
          continue
        }
        const promise = new Promise<MeshAssetTask>((res, reject) => {
          const task = this.assertManager.addMeshTask(
            `${Tools.RandomId()}_task`,
            modelRoot,
            rootUrl,
            fileName)
          task.onSuccess = result => {
            this.savemodelAssets(name, result)
            res(result)
          }
          task.onError = (_task, message) => {
            reject(new Error(message ?? `Failed to load model ${name}`))
          }
        })
        promiseList.push(promise)
      }
      // Start loading all queued tasks
      this.assertManager.loadAsync()
      const ret = await Promise.all(promiseList)
      return ret
    }

At its core, the resource management center still uses Babylon's addMeshTask, addTextureTask, and so on to load models and textures. During the project we found that loading multiple models in a batch inside the APP puts a lot of pressure on memory, so the actual model loading uses a one-at-a-time mode (the choice should be made according to the current environment).
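The one-at-a-time loading mode mentioned above could be sketched roughly as follows. This is a minimal illustration, not the project's code: `LoadJob` and `loadOneByOne` are hypothetical names, and `load` stands in for whatever wraps a single addMeshTask or addTextureTask call.

```typescript
// Hypothetical shape of one load job; `load` stands in for a wrapper
// around a single addMeshTask / addTextureTask call in the real project.
interface LoadJob<T> {
  name: string
  load: () => Promise<T>
}

// Runs the jobs strictly one after another, so only one model is being
// fetched and parsed at any time. Jobs whose name is already in the cache,
// or repeated within the same batch, are skipped.
async function loadOneByOne<T> (
  jobs: LoadJob<T>[],
  cache: Map<string, T>
): Promise<T[]> {
  const loaded: T[] = []
  for (const job of jobs) {
    if (cache.has(job.name)) continue
    const asset = await job.load() // the next job starts only after this resolves
    cache.set(job.name, asset)
    loaded.push(asset)
  }
  return loaded
}
```

Compared with starting every task and waiting on `Promise.all`, this trades total loading time for a lower memory peak, which is the trade-off the paragraph above describes.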

- DOM components

DOM components mainly cover the business flows common to everyday e-commerce campaigns, such as the product list, product details, and prize pop-ups shown in the following figure:

- Product list page

The list page pulls the product group material configured by operations and displays the product name, image, price, promotion information, and so on.

The problem: since the App only supports portrait, the demo's landscape solution is a 90-degree rotation at the style level, so the effect of the rotation on touch handling has to be considered. A horizontal swipe in the landscape layout is recognized as a vertical swipe on the portrait screen, so the list does not scroll in the expected direction.

Solution: abandon the usual CSS approach ( overflow: scroll ) and rewrite the horizontal scrolling component.

Implementation: first hide the part of the list outside the display area. Then, according to the designed scroll direction, read the user's touch distance along the screen axis perpendicular to that direction, and use this distance to set the horizontal offset of the product list and the position of the virtual scroll bar. This corrects the wrong scroll direction caused by rotating the screen.
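The axis remapping can be sketched as three small pure functions. This is only an illustration under assumptions: the names `ScrollGeometry`, `deltaAlongList`, `nextOffset`, and `thumbOffset` are hypothetical, and the wrapper is assumed to be rotated with `rotate(90deg)` as in the CSS earlier, so the list's horizontal axis runs along the screen's vertical axis.

```typescript
interface ScrollGeometry {
  contentLength: number // full length of the product list along its scroll axis, px
  viewLength: number // visible length along the same axis, px
  trackLength: number // length of the virtual scroll bar track, px
  thumbLength: number // length of the scroll bar thumb, px
}

// With the wrapper rotated by 90deg, the vertical touch delta on the screen
// drives the list. In real landscape (no rotation) the horizontal delta is
// used unchanged.
function deltaAlongList (dx: number, dy: number, rotated: boolean): number {
  return rotated ? dy : dx
}

// The content follows the finger: a positive delta pulls the list back
// toward its start, so it reduces the scroll offset. The result is clamped
// to the scrollable range.
function nextOffset (offset: number, delta: number, g: ScrollGeometry): number {
  const max = Math.max(0, g.contentLength - g.viewLength)
  return Math.min(max, Math.max(0, offset - delta))
}

// Position of the virtual scroll bar thumb for a given scroll offset.
function thumbOffset (offset: number, g: ScrollGeometry): number {
  const max = Math.max(0, g.contentLength - g.viewLength)
  return max === 0 ? 0 : (offset / max) * (g.trackLength - g.thumbLength)
}
```

A touchmove handler would feed the difference between successive `clientX`/`clientY` values into `deltaAlongList`, then apply the resulting offset to the list as a translation and to the scroll bar thumb.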

- Product details page

This page shows more product details after an item is tapped on the list page and covers the "add to cart" step, which is an important part of driving orders in this campaign. The product components from the ordinary venue pages can be reused here.

- 3D model display popup

The project is connected to the distribution of prizes such as Jingdou and coupons, and a cumulative sign-in reward was added to help meet the revisit-rate target, so a large number of pop-up prompts are involved. Ordinary pop-ups reuse the Toast component from the venue pages, which takes little development effort.

A more special case is the collection display, whose relationship to the collection list resembles that between the product list and the product details: tapping a collection item shows the 3D model of that item. Because of layering, the collection's 3D model cannot be rendered on the canvas that displays the scene, so a second WebGL canvas is used. To reduce the rendering load, rendering of the scene canvas is paused while the collection model canvas is shown. The effect and the key code are as follows:

    componentDidUpdate (prevProps: TRootStore) {
      const prevIsStampShow = prevProps.stampDetailModal.isShow
      const isStampShow = this.props.stampDetailModal.isShow
      // React only when the collection detail modal is toggled
      if (prevIsStampShow !== isStampShow) {
        this.engine.stopRenderLoop()
        if (isStampShow) {
          // Modal shown: keep the main scene paused
          this.engineRunningStatus = false
        } else if (!this.engineRunningStatus) {
          // Modal hidden: resume rendering the main scene
          this.engine.runRenderLoop(() => {
            this.mainScene.render()
          })
        }
      }
    }

- Blend mode

3D rendering plus migrated and reused DOM components cover most of the rendered scenes, but there are still some exceptions:

On the left is the product list page (DOM component) from the previous section, and on the right is the 3D model of the current product category. So besides standalone 3D model rendering and ordinary DOM rendering, the mixed case and the communication between the two also have to be considered.

Babylon is an excellent rendering framework. Besides rendering 3D models and handling model interaction, it can also render 2D interfaces mixed into 3D scenes. However, rendering 2D interfaces with Babylon cannot match native DOM rendering in either development cost or finished quality, so whenever we need to render 2D content we use DOM rendering as far as possible.

Under this page structure, 3D model rendering logic is handled by the Babylon framework, ordinary DOM rendering logic is handled by React, and state and behavior are passed between the two through an event management center, so the two sides can interact much like two components.
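An event management center of this kind can be as small as a typed publish/subscribe class. The sketch below is an assumption about its general shape, not the project's implementation: `EventCenter`, `SceneEvents`, and the `showDetails` event are hypothetical names.

```typescript
type Handler<P> = (payload: P) => void

// Hypothetical event map; the real project defines its own EVENT_TYPES.
interface SceneEvents {
  showDetails: { name: string, groupId: string, index: number }
}

class EventCenter<E> {
  private handlers = new Map<keyof E, Set<Handler<any>>>()

  // Registers a handler and returns a function that removes it again.
  on<K extends keyof E> (type: K, handler: Handler<E[K]>): () => void {
    let set = this.handlers.get(type)
    if (!set) {
      set = new Set()
      this.handlers.set(type, set)
    }
    set.add(handler)
    const registered = set
    return () => { registered.delete(handler) }
  }

  // Calls every handler registered for this event type with the payload.
  trigger<K extends keyof E> (type: K, payload: E[K]): void {
    this.handlers.get(type)?.forEach(handler => handler(payload))
  }
}
```

With one shared instance, the 3D side calls `trigger` when a model interaction happens, and a React component subscribes with `on` when it mounts and calls the returned function when it unmounts.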

Specifically, for the category product list page: after the user triggers the product list, Babylon takes a screenshot of the current scene frame, darkens and blurs it, and sets it as the background of the whole canvas, and the camera then renders only the model part on the right. When the model on the right triggers an interaction, the event management center notifies the DOM layer on the left to request the corresponding product information and switch the display.

Set the page background:

    Tools.CreateScreenshot(this.scene.getEngine(), activeCamera, { width, height }, data => {
      ...
      this.setProductBg(data)
      ...
    })

    private setProductBg (pic: string) {
      const productUI = this.UIList.get('productUI')
      if (productUI && productUI.layer) {
        // Full-size screenshot as the background image
        const image = new Image('bg', pic)
        image.width = '100%'
        image.height = '100%'
        productUI.addControl(image)
        // Semi-transparent black mask to darken the background
        this.mask = new Rectangle('mask')
        this.mask.width = '100%'
        this.mask.height = '100%'
        this.mask.thickness = 0
        this.mask.background = 'rgba(0, 0, 0, 0.5)'
        productUI.addControl(this.mask)
        productUI.layer.layerMask = detailLaymask
      }
    }

Babylon notifies the DOM layer through events:

    private showSlider = (slider: Slider): void => {
      slider.saveActive()
      ...
      const index = slider.getCurrentIndex()
      const dataItem = this.currentDataList[index]
      const groupId = dataItem?.comments?.[0] || ''
      // Tell the DOM layer which product group to show
      this.eventCenter.trigger(EVENT_TYPES.SHOWDETSILS, {
        name: dataItem?.name,
        groupId,
        index
      })
      if (actCamera && slider) {
        slider.expand()
        slider.layerMask = detailLaymask
      }
      ...
    }

The final effect is shown in the following figure:

Camera handling and air walls

The IP protagonist's exploration of the food street requires pathfinding. Which roads in the streetscape are passable is determined by the coordinates of, and gaps between, the buildings in the visual designers' layout. However, we still have to handle the camera switching and shifting when the protagonist walks along a relatively narrow road, which can lead to clipping through models and to views that should never appear.

Clipping between the character and buildings can be solved by enabling collisions on the air walls (including the ground) and on the character model so that the two do not pass through each other. The main code is as follows:

    // Enable collisions globally for the scene
    this.scene.collisionsEnabled = true
    ...
    // Walk the air wall nodes in the model and mark them for collision checks
    if (mesh.id.toLowerCase().indexOf('wall') === 0) {
      mesh.visibility = 0
      mesh.checkCollisions = true
      obstacle.push(mesh as Mesh)
    }

Setting the collision property can likewise keep the camera from entering buildings or the ground. However, when an obstacle sits between the camera and the character, for example at a corner, the camera gets stuck while the character keeps walking.

So the idea is to cast a ray from the character's position toward the camera, find the closest point where it intersects an air wall, and move the camera to that point so that the camera and the obstacle do not intersect.
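As a rough illustration of this ray idea (not the approach finally used in the project), the sketch below treats each air wall as an axis-aligned box and uses the standard slab method to find the nearest hit along the ray. `Vec3`, `Box`, `rayBoxDistance`, `cameraRadius`, and the `margin` parameter are all hypothetical.

```typescript
interface Vec3 { x: number, y: number, z: number }
interface Box { min: Vec3, max: Vec3 } // axis-aligned box standing in for an air wall

// Distance along the ray to where it first enters the box, or null on a miss.
// `dir` must be normalized. Standard slab method.
function rayBoxDistance (origin: Vec3, dir: Vec3, box: Box): number | null {
  let tMin = 0
  let tMax = Infinity
  for (const axis of ['x', 'y', 'z'] as const) {
    const o = origin[axis]
    const d = dir[axis]
    if (Math.abs(d) < 1e-9) {
      // Ray is parallel to this slab: a miss unless the origin lies inside it
      if (o < box.min[axis] || o > box.max[axis]) return null
      continue
    }
    const a = (box.min[axis] - o) / d
    const b = (box.max[axis] - o) / d
    tMin = Math.max(tMin, Math.min(a, b))
    tMax = Math.min(tMax, Math.max(a, b))
    if (tMin > tMax) return null
  }
  return tMin
}

// Camera distance from the character: the default radius, shortened to just
// in front of the nearest wall hit on the character-to-camera ray.
function cameraRadius (
  character: Vec3,
  dirToCamera: Vec3,
  defaultRadius: number,
  walls: Box[],
  margin = 0.2
): number {
  let nearest = defaultRadius
  for (const wall of walls) {
    const hit = rayBoxDistance(character, dirToCamera, wall)
    if (hit !== null && hit < nearest) nearest = hit
  }
  return nearest < defaultRadius ? Math.max(0, nearest - margin) : defaultRadius
}
```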

In actual development, 3 hexahedrons are inserted between the camera (shown as a sphere in the figure below) and the character. When a hexahedron intersects an air wall, the camera moves to the end, on the character's side, of the intersecting hexahedron closest to the character. In the extreme case the camera moves to the point above the character's head. Screens from the project illustrate this:

By default the camera sits at the default camera radius, as shown below:

When one of the hexahedrons intersects an air wall, the camera moves to the end point of that hexahedron, as shown:

A position transition animation is added to the camera movement to achieve a stepless zoom effect.
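One simple way to get such a smooth transition is to move the camera radius a little toward its target every frame. The sketch below uses exponential smoothing, which is an assumption made for illustration; the project may well use Babylon's animation system instead. `approach` and its `speed` parameter are hypothetical.

```typescript
// Moves `current` toward `target` by a fraction that depends on the elapsed
// time, so the motion looks the same at different frame rates.
// `speed` is a hypothetical tuning constant: larger values feel snappier.
function approach (current: number, target: number, speed: number, dtSeconds: number): number {
  const t = 1 - Math.exp(-speed * dtSeconds)
  return current + (target - current) * t
}
```

Called once per frame with the frame's delta time, for example `radius = approach(radius, targetRadius, 8, dt)`, the camera eases toward the new position without ever overshooting it.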

The precise intersection test between models follows a post on the Babylonjs discussion forum, because Babylon's built-in intersectsMesh method uses AABB bounding-box tests.

Map function

The street view designed for "Taste Adventures" is slightly larger than expected, and the "Treasure Box" feature was added to make it more game-like. First-time participants therefore have little sense of their current location. In response, the design added a map function, with an entrance in the upper left corner, for checking the current location. The details are as follows:

Implementing the map depends on the visual design and on calculating the current position. The main steps are:

- When the map pop-up is shown, the event dispatcher triggers an event so that the scene writes the character's current position and direction into the map pop-up's store, and the character marker is displayed at the corresponding location on the map;

Handle the correspondence between map and scene positions. First find the map position that corresponds to the scene's origin (0 point), then calculate an approximate zoom value from the scene size and the image size, and finally fine-tune it to get the accurate zoom value.

    export function toggleShow (isShow?: boolean) {
      // Trigger the "get character position" event when the map pop-up opens
      eventCenter.getInstance().trigger(EVENT_TYPES.GET_CHARACTER_POSITION)
      store.dispatch({
        type: EActionTypes.TOGGLE_SHOW,
        payload: { isShow }
      })
    }

    // The scene handles the event and writes the character's position and direction into the store
    this.appEventCenter.on(EVENT_TYPES.GET_CHARACTER_POSITION, () => {
      const pos = this.character?.position ?? { x: 0, z: 0 }
      const rotation = this.character?.mesh.rotation.y ?? 0
      if (pos) {
        updatePos({ x: -pos.x, y: pos.z, rotation: rotation - Math.PI })
      }
    })
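The correspondence between scene coordinates and map pixels can be sketched as an origin plus a scale. `MapCalibration`, `sceneToMap`, and `estimateScale` are hypothetical names, and the numbers in any real calibration would come from the fine-tuning step described above. The sign flip on x, the use of z as the map's vertical axis, and the `- Math.PI` rotation offset mirror the store update in the code above.

```typescript
interface MapCalibration {
  originX: number // map pixel where the scene's 0 point lands (horizontal)
  originY: number // map pixel where the scene's 0 point lands (vertical)
  scale: number // map pixels per scene unit
}

// Converts a scene position and heading into a marker position on the map image.
function sceneToMap (pos: { x: number, z: number }, rotationY: number, c: MapCalibration) {
  return {
    left: c.originX - pos.x * c.scale,
    top: c.originY + pos.z * c.scale,
    rotation: rotationY - Math.PI
  }
}

// First guess for the scale from the two sizes, to be fine-tuned by hand.
function estimateScale (mapWidthPx: number, sceneWidth: number): number {
  return mapWidthPx / sceneWidth
}
```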

Beginner's guide

Besides the usual feature walkthrough, the beginner's guide adds route guidance toward specific buildings, similar to that in racing games. The idea is to lower the learning cost for new players by using a guidance mechanic they already know from games.

In terms of implementation, this breaks down into a math problem. With the direction and end point of the guide line fixed, the displayed length of the guide line is the projection, onto the guide line's direction, of the vector from the character's position to the end point, as shown in the following example coordinate diagram:
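Written out under assumed notation ($S$ and $E$ for the guide line's start and end points, $P$ for the character's position; these symbols are introduced here only for illustration), the projection and the resulting progress value are:

```latex
\hat{d} = \frac{E - S}{\lVert E - S \rVert}, \qquad
\ell = (E - P) \cdot \hat{d}, \qquad
\text{progress} = 1 - \frac{\ell}{\lVert E - S \rVert}
```

As a check, $P = S$ gives $\ell = \lVert E - S \rVert$ and a progress of 0, while $P = E$ gives $\ell = 0$ and a progress of 1.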

    // Compute the projected length with a vector dot product
    setProgress (val: number | Vector3) {
      if (typeof val === 'number') {
        this.progress = val
      } else if (this.startPoint && this.endPoint) {
        const startToEnd = this.endPoint.subtract(this.startPoint)
        const startToEndNormal = Vector3.Normalize(startToEnd)
        this.progress = 1 - Vector3.Dot(startToEndNormal, this.endPoint.subtract(val)) / startToEnd.length()
      }
    }

The guide line is a plane; the gradient effects at both ends and the animation are implemented with custom shaders:

    // Vertex shader
    uniform mat4 worldViewProjection;
    uniform vec2 uScale; // [1, guide line length / guide line width], used to compute the texture UV coordinates
    attribute vec4 position;
    attribute vec2 uv;
    varying vec2 st;

    void main(void) {
      gl_Position = worldViewProjection * position;
      st = vec2(uv.x * uScale.x, uv.y * uScale.y);
    }

    // Fragment shader
    uniform sampler2D textureSampler;
    uniform vec2 uScale; // Same as in the vertex shader
    uniform float uOffset; // Texture offset; changing this value drives the animation
    uniform float uAlphaTransStart; // Length of the alpha fade at the start of the guide line / guide line width
    uniform float uAlphaTransEnd; // Length of the alpha fade at the end of the guide line / guide line width
    varying vec2 st;

    void main(void) {
      vec2 rst = vec2(st.x, -st.y + uOffset);
      float alphaEnd = smoothstep(0., uAlphaTransEnd, st.y);
      float alphaStart = smoothstep(uScale.y, uScale.y - uAlphaTransStart, st.y);
      float alpha = alphaStart * alphaEnd;
      gl_FragColor = vec4(texture2D(textureSampler, rst).rgb, alpha);
    }

Performance optimization

Rendering 3D models in HTML through a third-party library, with a whole street view and multiple venues, is a huge memory expense. Because development ran in parallel with visual design, nothing abnormal showed up early on. As the visual designers gradually delivered model files and the overall atmosphere took shape, stuttering and crashes during play appeared as well.

By monitoring memory in the PC development environment and talking with the App WebView experts, we brought the performance optimization discussion forward. There are currently 30 models in the whole project, with an average file size of about 2M (the largest, the street view, is 7M). The following optimizations were mainly adopted:

- Controlling texture resolution: during optimization we found that texture data accounted for most of a model file's size. After discussion and trials with the visual designers, most textures were kept below 1Kx1K, and some were reduced to 512x512.

- Using compressed textures: with traditional jpg/png texture files, each image occupies space both in the browser's image cache and in GPU memory, which increases the page's memory usage. Once memory usage reaches a certain level, iOS devices crash and Android devices freeze and drop frames. With a compressed texture format, only the one copy in GPU memory is kept, which greatly reduces memory usage. In practice: a texture compression tool in the project converts the original jpg/png files into compressed texture formats such as pvrtc/astc, the texture files are split from the model's other data, and texture files in different formats are loaded according to what each device supports.

- Baking high-poly details onto low-poly models: the details of the high-precision model are baked into a texture, which is then applied to the low-precision model, so that with the texture resolution preserved, the lack of geometric detail is hidden as far as possible.
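For the compressed texture step, choosing a format per device could look roughly like the sketch below. It is an illustration under assumptions: `pickTextureFormat` and `textureUrl` are hypothetical helpers, the file naming scheme is invented for the example, and the `png` fallback stands for the original uncompressed file. The extension names are the standard WebGL ones for ASTC and PVRTC.

```typescript
type TextureFormat = 'astc' | 'pvrtc' | 'png'

// WebGL extension names that advertise support for each compressed format,
// in order of preference.
const FORMAT_EXTENSIONS: Array<[TextureFormat, string[]]> = [
  ['astc', ['WEBGL_compressed_texture_astc']],
  ['pvrtc', ['WEBGL_compressed_texture_pvrtc', 'WEBKIT_WEBGL_compressed_texture_pvrtc']]
]

// `hasExtension` abstracts the device check so the function stays testable;
// in a browser it could be: name => gl.getExtension(name) !== null
function pickTextureFormat (hasExtension: (name: string) => boolean): TextureFormat {
  for (const [format, names] of FORMAT_EXTENSIONS) {
    if (names.some(name => hasExtension(name))) return format
  }
  return 'png' // no compressed format supported: fall back to the original image
}

// Hypothetical naming scheme for the split-out texture files.
function textureUrl (baseName: string, format: TextureFormat): string {
  return format === 'png' ? `${baseName}.png` : `${baseName}-${format}.ktx`
}
```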

Other pitfalls

- Handling "light"

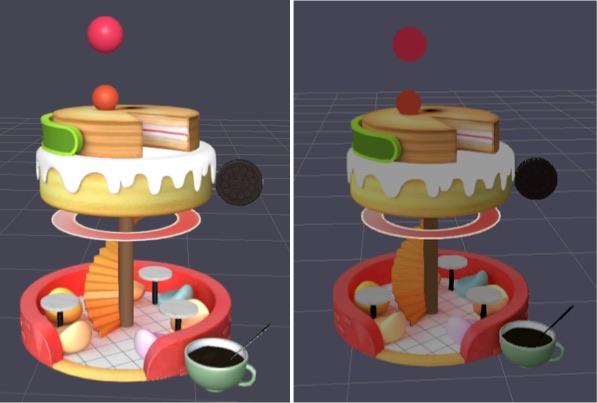

In the first "white model" draft provided by the visual designers, colors and the overall street view were still transitional, so nobody objected to the rendering. But once the final colors and textures were exported together, the front-end rendering differed greatly from the preview of the designers' baked export. Through careful comparison, including previews on our self-developed 3D material management platform, we found that "light" had a very large influence. The left and right of the figure below show the scene with and without "light":

After discussion with the visual designers and many experiments, the final solution is:

- Use an HDR map for the ambient light so that the scene gets brighter and gains reflection information;

- Add a directional light along the camera's forward direction to raise the overall brightness.

A corresponding extension: add light reflection on the ground to enhance the atmosphere of the street scene.

- GUI rendering clarity: the project uses Babylon's built-in GUI layer to display images, but the result is not sharp enough to reproduce the design draft, for example the text and the jagged back arrow in the figure below:

This is because, by default, the 3D canvas is sized to the screen's logical display resolution, for example 390x844 on the iPhone 13. Babylon's official approach is to size the canvas to the screen's physical resolution, which renders the GUI pixel for pixel and also makes the scene sharper. But this increases GPU rendering pressure for the whole project, and with resources already tight, adding that much computation is not an easy choice.
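The cost of rendering at physical resolution is easy to estimate. The sketch below uses a hypothetical helper, `backingStoreSize`, to compute the canvas size and pixel count for a given device pixel ratio; the ratio of 3 for the 390x844 example is an assumption about that device.

```typescript
// Size of the canvas backing store, and the number of pixels the GPU has to
// shade per frame, for a given CSS size and device pixel ratio.
function backingStoreSize (cssWidth: number, cssHeight: number, devicePixelRatio: number) {
  const width = Math.round(cssWidth * devicePixelRatio)
  const height = Math.round(cssHeight * devicePixelRatio)
  return { width, height, pixels: width * height }
}

const logical = backingStoreSize(390, 844, 1) // 390 x 844
const physical = backingStoreSize(390, 844, 3) // 1170 x 2532
const costFactor = physical.pixels / logical.pixels // 9 times as many pixels per frame
```

In Babylon, rendering at physical resolution is usually switched on with `engine.setHardwareScalingLevel(1 / window.devicePixelRatio)`, so the factor above is roughly the extra fill cost that setting would bring.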

We therefore took the GUI components with visibly poor rendering quality out of the 3D canvas and rendered them as DOM components. With the same assets, the button images then reach the quality the design draft requires. The result is as follows:

- Handling of emissive materials

During visual design, emissive (self-illuminating) materials are used on some models so that the model lights the surrounding models and produces delicate light and shadow, as in the following visual draft:

However, the framework used for development has no application-level implementation of emissive materials. When we put the model into the page with no lights set, only the emissive part of the model is rendered and everything else is black (left of the figure below); after a light is set, the whole model is fully lit with no light and shadow at all (right of the figure below):

Solution: work with the designer to bake the light and shadow produced by the local lighting into the material map; the page then only needs a reasonably placed light to reproduce the effect, as shown in the following figure:

Epilogue

Thanks to the exploratory spirit and support of the leaders of the Marketing Operation Team of the Food and Drinks Department and the Innovative Marketing Design Team of the Platform Marketing Design Department, who made every effort to get the project live for the Food Festival in May. "Taste and Adventure" is an attempt at, and exploration of, future shopping that meets customers' desire for something beautiful and novel: making shopping scene-based and fun to bring customers a better shopping experience. A review of the project data found that click-through rate and conversion rate were slightly higher than in the same period.

Although we hit many pitfalls while putting 3D technology into production this time, we also gained a lot. As a pioneering team, we really are one step closer to the goal of the "metaverse". We believe our technology and products will keep maturing, and please look forward to the team's visual 3D editing tools!
