This article was first published on the Nebula Graph Community public account.

1. Project Background

Weilan is a knowledge graph application for querying technologies, industries, enterprises, scientific research institutions, disciplines, and the relationships among them. It contains tens of billions of relationships and billions of entities. To keep this business running smoothly, we evaluated the options and chose Nebula Graph as the main database behind our knowledge graph. As Nebula Graph evolved through successive releases, we eventually settled on v2.5.1.

2. Why choose Nebula Graph?

There are many open-source graph databases to choose from, but for a knowledge graph service at this scale, Nebula Graph has the following advantages over the alternatives, which is why we chose it:

- Lower memory usage

Our QPS is relatively low and does not fluctuate much. Compared with other graph databases, Nebula Graph has a smaller idle memory footprint, so we can run the Nebula Graph service on machines with less memory, which saves us money.

- The Multi-Raft consensus protocol

Compared with plain Raft, Multi-Raft not only improves the availability of the system but also performs better. The performance of a consensus algorithm depends mainly on two properties: whether it allows holes in the log (entries committed out of order) and how finely the data is partitioned. Whether the application layer is a KV store or an SQL database, if it can exploit these two properties, its performance will be good. Because Raft commits log entries serially, it depends heavily on the performance of the state machine: even in a KV store, one slow operation on a single key significantly slows down operations on every other key. The key to a consensus protocol's performance is therefore to parallelize the state machine as much as possible. Multi-Raft partitions at a relatively coarse granularity (compared with Paxos), but for Raft, which does not allow holes, it is still a huge improvement.
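To make the head-of-line blocking argument concrete, here is a toy simulation, not Nebula Graph code. It assumes every operation is submitted at time 0, that each Raft group applies its log strictly in order (no holes), and that separate groups apply in parallel. The `key % num_groups` partitioning rule and the `finish_times` helper are hypothetical stand-ins for illustration only.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def finish_times(ops: List[Tuple[int, float]], num_groups: int) -> List[float]:
    """Return the time at which each op has been applied by its state machine.

    ops: (key, apply_cost) pairs, all submitted at time 0, in log order.
    Within one group, entries are applied serially (no holes);
    different groups apply independently, in parallel.
    """
    busy_until: Dict[int, float] = defaultdict(float)  # per-group clock
    done = []
    for key, cost in ops:
        group = key % num_groups  # hypothetical partitioning rule
        busy_until[group] += cost
        done.append(busy_until[group])
    return done


ops = [(0, 100.0), (1, 1.0), (2, 1.0)]  # one slow op on key 0, then two cheap ones
print(finish_times(ops, num_groups=1))  # plain Raft: [100.0, 101.0, 102.0]
print(finish_times(ops, num_groups=3))  # Multi-Raft: [100.0, 1.0, 1.0]
```

With a single group, the two cheap operations wait behind the slow one; once keys are spread over several groups, only operations that share a group with the slow key pay for it.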

- RocksDB as the storage engine

RocksDB is an embedded storage engine that is widely used as the storage layer of many databases. More importantly, Nebula Graph lets us improve database performance by tuning RocksDB's native parameters.

- Fast writes

Our business requires frequent, large-volume writes. Even for vertices carrying large amounts of long text, Nebula Graph reaches an insertion speed of 20,000 vertices/s (a 3-machine cluster, 3 replicas, 16 insert threads), and under the same conditions edges without properties can be inserted at 350,000/s.
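To put these rates in perspective, here is a back-of-envelope calculation. It assumes the measured rates hold steady for an entire load (real bulk loads also pay for compaction and retries) and uses round figures of 1 billion vertices and 10 billion edges, chosen only to match the "billions of entities" and "tens of billions of relationships" mentioned above.

```python
def load_hours(items: float, rate_per_sec: float) -> float:
    """Hours needed to write `items` records at a sustained `rate_per_sec`."""
    return items / rate_per_sec / 3600


# Round, illustrative volumes, not the article's exact counts.
print(round(load_hours(1e9, 20_000), 1))    # 1 billion text-heavy vertices: 13.9 hours
print(round(load_hours(1e10, 350_000), 1))  # 10 billion property-less edges: 7.9 hours
```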

3. What problems did we encounter when using Nebula Graph?

In our knowledge graph business, many scenarios need to show users paginated one-hop relationships. Our data also contains some super nodes, and in our business these super nodes are precisely the ones users are most likely to visit, so this cannot simply be treated as a long-tail problem. Because our user volume is small, the cache is rarely hit, so we needed a solution that lowers query latency directly.

For example, one business scenario queries the downstream technologies of a given technology, sorted by a sort key we define. This is a local sort key: an institution may rank very high in a particular field but only average globally or in other fields. In this scenario we have to store the ranking attribute on the edge, and fit and standardize the global ranking items so that every dimension has mean 0 and variance 1. This makes local sorting possible and also supports pagination for user queries.
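The article does not name its fitting method. Plain z-score standardization, (x - mean) / standard deviation, is one standard way to give each dimension mean 0 and variance 1; a minimal sketch follows. The `standardize` helper and the `citations` dimension are hypothetical.

```python
from statistics import mean, pstdev
from typing import Dict, List


def standardize(columns: Dict[str, List[float]]) -> Dict[str, List[float]]:
    """Z-score each ranking dimension independently: (x - mean) / std.

    pstdev is the population standard deviation, so every non-constant
    output column has mean 0 and variance 1. A constant column maps to zeros.
    """
    result = {}
    for name, values in columns.items():
        mu = mean(values)
        sigma = pstdev(values)
        if sigma == 0:
            result[name] = [0.0] * len(values)
        else:
            result[name] = [(v - mu) / sigma for v in values]
    return result


# "citations" is a hypothetical ranking dimension, not taken from the article.
scores = standardize({"citations": [10.0, 20.0, 30.0, 40.0]})
print(scores["citations"])
```

Because every dimension ends up on the same scale, a standardized score from one field can be compared with one from another before it is written to the edge as the sort value.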

The statement is as follows:

```ngql
MATCH (v1:technology)-[e:technologyLeaf]->(v2:technology) WHERE id(v2) == "foobar" \
RETURN id(v1), v1.name, e.sort_value AS sort ORDER BY sort | LIMIT 0,20;
```

The node `foobar` has 130,000 adjacent edges. Even with an index on the `sort_value` property, this query takes nearly two seconds, which is clearly unacceptable.

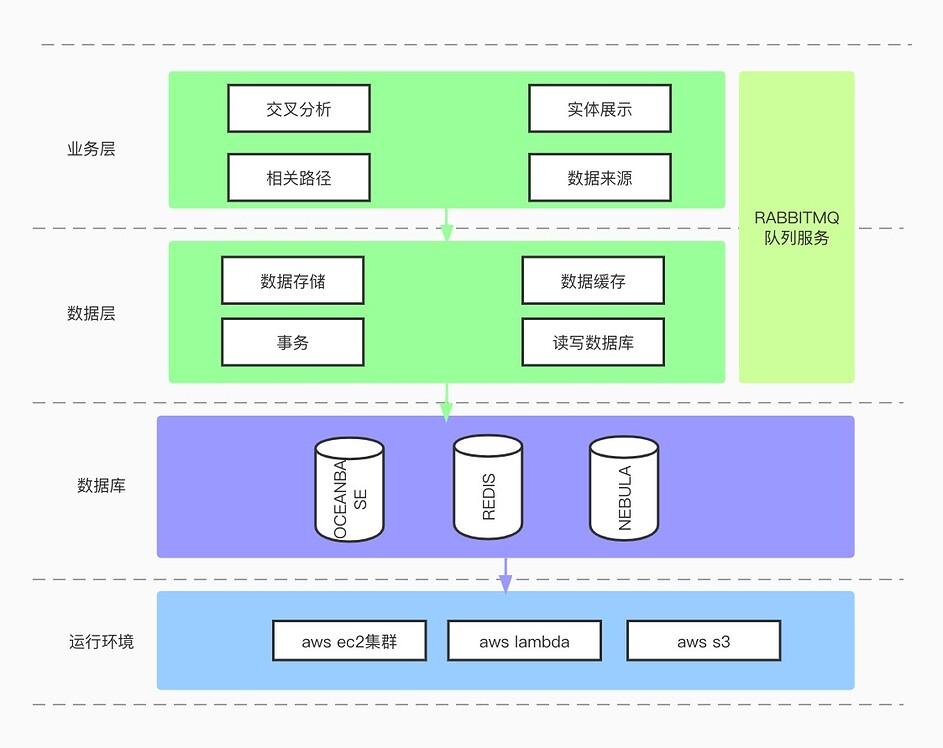
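One plausible reason pagination does not help here (our reading, not a statement from the Nebula Graph team): when the ordering key is an edge property, the engine has to visit every adjacent edge of the super node before it knows which 20 come first. The plain-Python sketch below, not Nebula Graph internals, shows that even a bounded-heap top-k still consumes all `n` edges; it only cuts the sort cost to O(n log k). `page_by_sort_value` is a hypothetical name.

```python
import heapq
from typing import Iterable, List, Tuple

Edge = Tuple[str, float]  # (neighbor_id, sort_value)


def page_by_sort_value(edges: Iterable[Edge], skip: int, limit: int) -> List[Edge]:
    """Return one page of edges in ascending sort_value order.

    heapq.nsmallest keeps only skip + limit candidates, but it still has to
    consume every edge in `edges`, so the cost grows with the node's degree.
    """
    return heapq.nsmallest(skip + limit, edges, key=lambda e: e[1])[skip:]


edges = [("a", 3.0), ("b", 1.0), ("c", 2.0), ("d", 0.5)]
print(page_by_sort_value(edges, skip=0, limit=2))  # [('d', 0.5), ('b', 1.0)]
```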

We eventually chose OceanBase, the open-source database from Ant Financial, to help support this part of the business. The data model is as follows:

Table `technologydownstream`:

| technology_id | downstream_id | sort_value |
|---|---|---|
| foobar | id1 | 1.0 |
| foobar | id2 | 0.5 |
| foobar | id3 | 0.0 |

Table `technology`:

| id | name | sort_value |
|---|---|---|
| id1 | aaa | 0.3 |
| id2 | bbb | 0.2 |
| id3 | ccc | 0.1 |
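The article does not list the indexes on these tables. Assuming a composite index on `technologydownstream(technology_id, sort_value)`, a database can seek straight to `foobar`'s entries, which are already in score order, and stop after one page, however many downstream edges the node has. In the sketch below, a sorted Python list stands in for the B-tree; `build_index` and `page` are illustrative names, not OceanBase APIs.

```python
import bisect
from typing import List, Tuple

# (technology_id, downstream_id, sort_value), as in technologydownstream
rows = [
    ("foobar", "id1", 1.0),
    ("foobar", "id2", 0.5),
    ("foobar", "id3", 0.0),
    ("other", "x", 9.0),
]


def build_index(rows: List[Tuple[str, str, float]]) -> List[Tuple[str, float, str]]:
    """Sorted (technology_id, -sort_value, downstream_id) entries.

    Negating sort_value makes ascending tuple order match ORDER BY sort_value DESC.
    """
    return sorted((tid, -score, did) for tid, did, score in rows)


def page(idx: List[Tuple[str, float, str]], technology_id: str, offset: int, limit: int) -> List[str]:
    """Seek to technology_id in O(log n), skip `offset` entries, read at most `limit`."""
    # (technology_id,) sorts before every (technology_id, score, id) entry.
    i = bisect.bisect_left(idx, (technology_id,)) + offset  # a real B-tree would step over offset entries
    result = []
    while i < len(idx) and len(result) < limit and idx[i][0] == technology_id:
        result.append(idx[i][2])
        i += 1
    return result


index = build_index(rows)
print(page(index, "foobar", offset=0, limit=2))   # ['id1', 'id2']
print(page(index, "foobar", offset=2, limit=20))  # ['id3']
```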

The query statement is as follows:

```sql
SELECT technology.name
FROM technology INNER JOIN (
    SELECT downstream_id, sort_value FROM technologydownstream
    WHERE technology_id = 'foobar' ORDER BY sort_value DESC LIMIT 0, 20
) AS t ON t.downstream_id = technology.id
-- Re-sort: the derived table's order is not guaranteed to survive the join.
ORDER BY t.sort_value DESC;
```

This statement took 80 milliseconds. Here is the overall architecture design.

4. How did we tune Nebula Graph?

As mentioned earlier, one of Nebula Graph's great advantages is that it can be tuned with native RocksDB parameters, which lowers the learning cost. The meanings of the tuning options and some of our tuning strategies are as follows:

| RocksDB parameter | Meaning |
|---|---|
| max_total_wal_size | Once the total size of the WAL files exceeds max_total_wal_size, RocksDB forces a flush of the column families whose memtables are backed by the oldest live WAL file, so that file can be deleted. The default value is 0, which means `max_total_wal_size = write_buffer_size * max_write_buffer_number * 4` (summed over all column families). |
| delete_obsolete_files_period_micros | Period for deleting obsolete files, which include SST files and WAL files. The default is 6 hours. |
| max_background_jobs | Maximum number of concurrent background jobs, i.e. max_background_flushes + max_background_compactions. |
| stats_dump_period_sec | If non-zero, rocksdb.stats information is written to the LOG file every stats_dump_period_sec seconds. |
| compaction_readahead_size | The amount of data read ahead from disk during compaction. If you run RocksDB on non-SSD disks, set it to at least 2 MB for performance. If it is non-zero, it also forces new_table_reader_for_compaction_inputs=true. |
| writable_file_max_buffer_size | Maximum buffer size used by WritableFileWriter, RocksDB's file write buffer. Tuning this parameter is important in Direct IO mode. |
| bytes_per_sync | Each time bytes_per_sync bytes of data accumulate, RocksDB flushes them to disk. This option applies to SST files; WAL files use wal_bytes_per_sync. |
| wal_bytes_per_sync | Each time wal_bytes_per_sync bytes have been written to a WAL file, RocksDB flushes them to disk by calling sync_file_range. The default value is 0, which disables this behavior. |
| delayed_write_rate | When a write stall occurs, the write rate is limited to delayed_write_rate. |
| avoid_flush_during_shutdown | By default, all memtables are flushed when the DB is closed. If this option is set, the flush is skipped, and unpersisted data (for example, writes made with the WAL disabled) may be lost. |
| max_open_files | The number of file handles (mainly for SST files) that RocksDB keeps open, so later accesses can reuse them without reopening the files. When the cached handles exceed max_open_files, some handles are closed. Note that closing a handle also releases the index and filter blocks of the corresponding SST: by default (cache_index_and_filter_blocks=false) these blocks live on the heap outside the block cache, so their total amount is bounded only by max_open_files. Given how an SST file's index_block is organized, it is generally 1 to 2 orders of magnitude larger than a data_block, and it must be loaded before any data in the file can be read. Once loaded, the index data stays on the heap and is not actively evicted. Under a heavy random-read workload this causes serious read amplification, and RocksDB may occupy a large amount of physical memory for no obvious reason. Tuning this value is therefore important: trade off performance against memory usage according to your own workload. If it is -1, RocksDB keeps every opened handle cached, at the cost of considerable memory. |
| stats_persist_period_sec | If non-zero, statistics are saved every stats_persist_period_sec seconds; when persist_stats_to_disk is enabled, they go to the hidden column family `___rocksdb_stats_history___`. |
| stats_history_buffer_size | If non-zero, statistics snapshots are taken periodically and kept in memory; the memory used by these snapshots is capped at stats_history_buffer_size. |
| strict_bytes_per_sync | For performance, RocksDB does not sync data to disk synchronously by default, so some data may be lost under abnormal conditions. The bytes_per_sync and wal_bytes_per_sync options bound how much unsynced data can build up. If this parameter is true, RocksDB enforces those two limits strictly, waiting for earlier ranges to finish syncing so that no more than that amount of data is pending; if false, the limits are only approximate. If you can tolerate some data loss you can set it to false, but true is recommended. |
| enable_rocksdb_prefix_filtering | Whether to enable the prefix bloom filter. When enabled, a bloom filter is built in the memtable on the first rocksdb_filtering_prefix_length bytes of each written key. |
| enable_rocksdb_whole_key_filtering | Builds a bloom filter in the memtable on the whole key, so it conflicts with enable_rocksdb_prefix_filtering: if enable_rocksdb_prefix_filtering is true, this setting does not take effect. |
| rocksdb_filtering_prefix_length | See enable_rocksdb_prefix_filtering. |
| num_compaction_threads | Maximum number of concurrent background compaction threads, i.e. the maximum size of the compaction thread pool. The compaction thread pool runs at low priority by default. |
| rate_limit | Records the parameters of the rate limiter created by NewGenericRateLimiter in code, so that the rate limiter can be rebuilt from them on restart. The rate limiter is RocksDB's tool for throttling the write rate of compaction and flush, because writing too fast hurts reads. It can be set like this: `rate_limit = {"id":"GenericRateLimiter"; "mode":"kWritesOnly"; "clock":"PosixClock"; "rate_bytes_per_sec":"200"; "fairness":"10"; "refill_period_us":"1000000"; "auto_tuned":"false";}` |
| write_buffer_size | Maximum size of a memtable. When a memtable exceeds this size, RocksDB turns it into an immutable memtable and creates a new one. |
| max_write_buffer_number | Maximum number of memtables, mutable and immutable combined. When the limit is reached, RocksDB stops subsequent writes; this usually means writes are arriving faster than flushes can keep up. |
| level0_file_num_compaction_trigger | Trigger specific to leveled compaction: when the number of L0 files reaches level0_file_num_compaction_trigger, L0 is compacted into L1. A larger value means lower write amplification but higher read amplification; with a very large value, behavior approaches universal compaction. |
| level0_slowdown_writes_trigger | When the number of L0 files reaches this value, writes are slowed down. Tune it together with level0_stop_writes_trigger to address write stalls caused by too many L0 files. |
| level0_stop_writes_trigger | When the number of L0 files reaches this value, writes are stopped. Tune it together with level0_slowdown_writes_trigger to address write stalls caused by too many L0 files. |
| target_file_size_base | Target SST file size in L1. Increasing this value reduces the total number of SST files in the DB. If you need to adjust it, you can set target_file_size_base = max_bytes_for_level_base / 10, so that L1 holds 10 SST files. |
| target_file_size_multiplier | Each level after L1 (L2 ... L6) uses SST files target_file_size_multiplier times larger than the previous level. |
| max_bytes_for_level_base | Maximum total size of L1 (the sum of all its SST file sizes); exceeding it triggers a compaction. |
| max_bytes_for_level_multiplier | Growth factor of each level's total size relative to the previous level. |
| disable_auto_compactions | Whether to disable automatic compaction. |
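The size-related rows above combine into a simple per-level budget. A sketch of that arithmetic follows, assuming `level_compaction_dynamic_level_bytes` is off and `max_bytes_for_level_multiplier_additional` is left at its default; the sample values are illustrative, not recommendations from this article, and the helper names are hypothetical.

```python
from typing import List, Tuple

MiB = 1024 * 1024


def level_budgets(max_bytes_for_level_base: int,
                  max_bytes_for_level_multiplier: float,
                  target_file_size_base: int,
                  target_file_size_multiplier: int,
                  num_levels: int = 7) -> List[Tuple[int, int]]:
    """(max total bytes, target SST size) for L1 .. L(num_levels - 1)."""
    budgets = []
    level_bytes, file_bytes = max_bytes_for_level_base, target_file_size_base
    for _ in range(1, num_levels):
        budgets.append((level_bytes, file_bytes))
        level_bytes = int(level_bytes * max_bytes_for_level_multiplier)
        file_bytes *= target_file_size_multiplier
    return budgets


def default_max_total_wal_size(column_families: List[Tuple[int, int]]) -> int:
    """Limit used when max_total_wal_size = 0:
    4 * sum(write_buffer_size * max_write_buffer_number) over column families."""
    return 4 * sum(wbs * n for wbs, n in column_families)


# target_file_size_base = max_bytes_for_level_base / 10, as suggested in the table.
for level, (total, sst) in enumerate(level_budgets(640 * MiB, 10, 64 * MiB, 1), start=1):
    print(f"L{level}: up to {total // MiB} MiB in ~{total // sst} SST files of {sst // MiB} MiB")

print(default_max_total_wal_size([(64 * MiB, 4)]) // MiB, "MiB")  # one column family: 1024 MiB
```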

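The write-stall rows (level0_slowdown_writes_trigger, level0_stop_writes_trigger, max_write_buffer_number, delayed_write_rate) interact roughly as sketched below. This is a deliberately simplified model, not RocksDB's code: it ignores the pending-compaction-bytes limits and the extra memtable-based slowdown RocksDB applies. The trigger values 20 and 36 are RocksDB's defaults; max_write_buffer_number=4 is only a sample value.

```python
from enum import Enum


class WriteState(Enum):
    NORMAL = "normal"
    DELAYED = "delayed"  # throttled to delayed_write_rate
    STOPPED = "stopped"  # blocked until flush/compaction catches up


def write_state(l0_files: int, unflushed_memtables: int,
                level0_slowdown_writes_trigger: int,
                level0_stop_writes_trigger: int,
                max_write_buffer_number: int) -> WriteState:
    """Simplified stall decision based only on L0 file count and memtable count."""
    if (unflushed_memtables >= max_write_buffer_number
            or l0_files >= level0_stop_writes_trigger):
        return WriteState.STOPPED
    if l0_files >= level0_slowdown_writes_trigger:
        return WriteState.DELAYED
    return WriteState.NORMAL


for l0 in (4, 20, 36):
    state = write_state(l0, unflushed_memtables=1,
                        level0_slowdown_writes_trigger=20,
                        level0_stop_writes_trigger=36,
                        max_write_buffer_number=4)
    print(l0, state.value)
```

Raising both L0 triggers delays stalls at the cost of more L0 files to search on reads, which is the same write-versus-read amplification trade-off described for level0_file_num_compaction_trigger.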