This article was first published on the Nebula Graph Community public account

This article is based on a talk by Li Shiming, a senior engineer at Zhilian Recruitment (Zhaopin), titled "Zhilian Recruitment Recommendation Scenario Application".

Search Recommendation Architecture

Before talking about specific application scenarios, let's take a look at the screenshots of Zhaopin's search and recommendation page.

This is a simple Zhaopin search page, visible to any user who logs in to the Zhaopin Recruitment App. The recommendation, recall, and ranking logic behind this page is the focus of this article.

Function matrix

Functionally, we can learn from the matrix diagram that when searching and recommending, the system is divided into two parts: Online and Offline.

The Online part covers real-time operations, such as searching for a keyword or displaying personalized recommendations in real time. These operations depend on supporting functions such as hot-word suggestion, entity recognition, intent understanding, and user profiling based on the specific input. The next step is recall: inverted indexes match on text and similarity, and Nebula Graph and Milvus were introduced to provide graph indexes and vector indexes, all to solve the recall problem. The last step is ranking the search results for display. First comes a coarse ranking that uses the scoring built into the recall engines, such as TF-IDF, BM25, or cosine similarity between vectors. The coarse ranking is followed by a fine ranking with machine learning models; common choices are linear models, tree models, and deep models.

That is the online flow. There is a corresponding offline flow, which mainly handles data processing for the whole business and for user behavior: collecting data, processing it, and finally writing it to the recall engines (Solr and ES for the inverted index mentioned above, Nebula Graph for the graph index, and Milvus for the vector index) so that they can serve online recall.

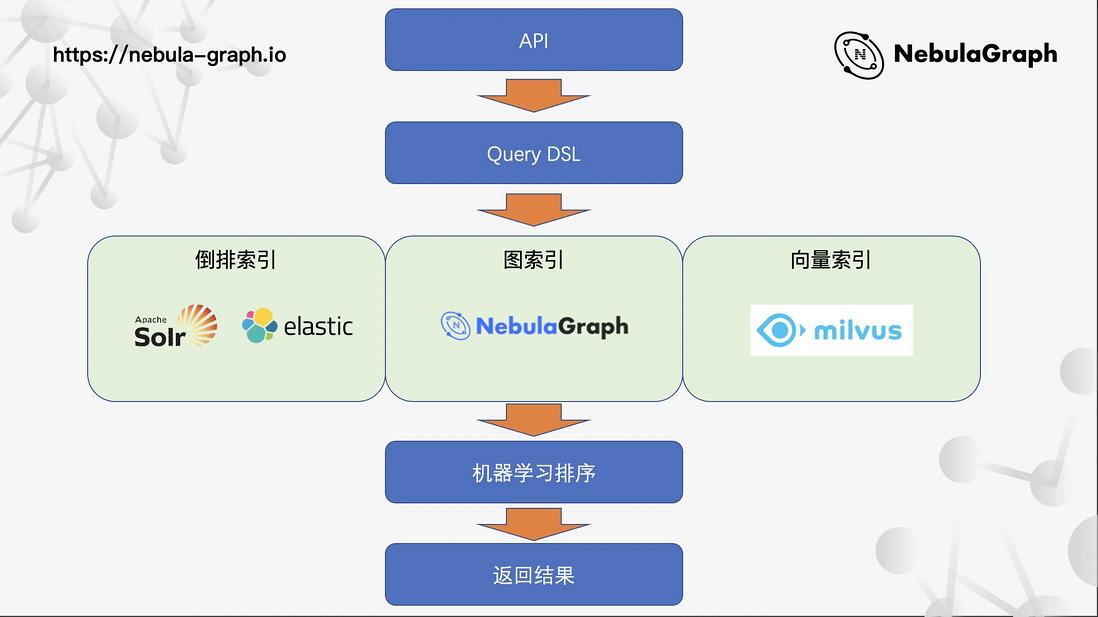

Online architecture

What happens when a user clicks the search button in Zhaopin? As shown in the figure above, the request arrives through an API call, is wrapped and processed uniformly as Query DSL, and then enters three-way recall (the inverted index, graph index, and vector index mentioned above). Machine learning ranking follows, and the result is finally returned to the front end for display.

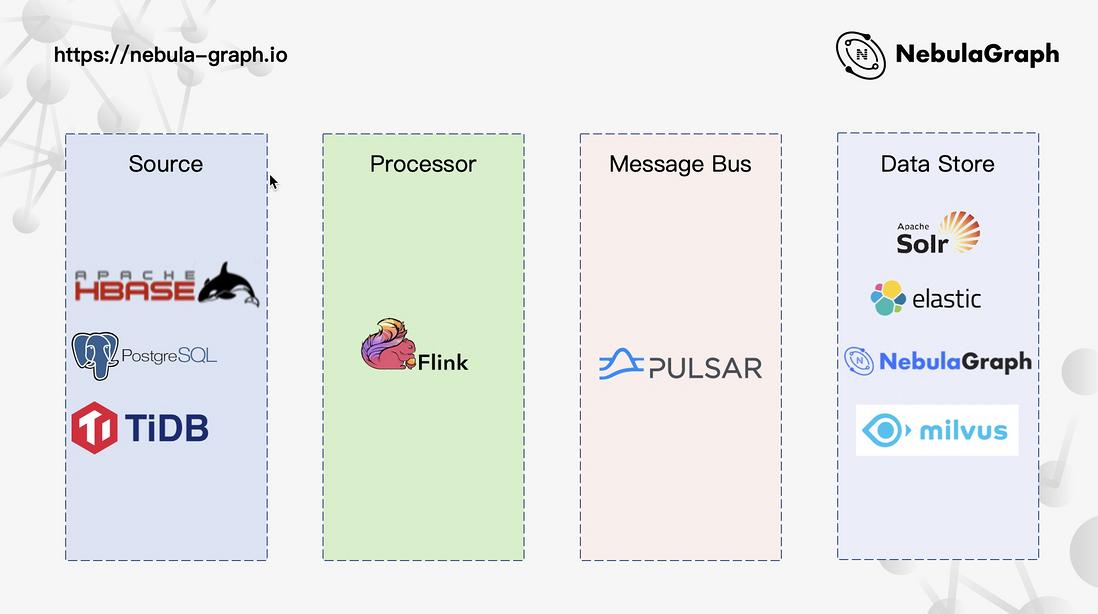

Offline Architecture

As described in the function matrix above, the offline part is mainly data processing. Data from platforms such as HBase, the relational database PostgreSQL, and the distributed database TiDB flows through data pipelines and is finally written to the data storage layer.

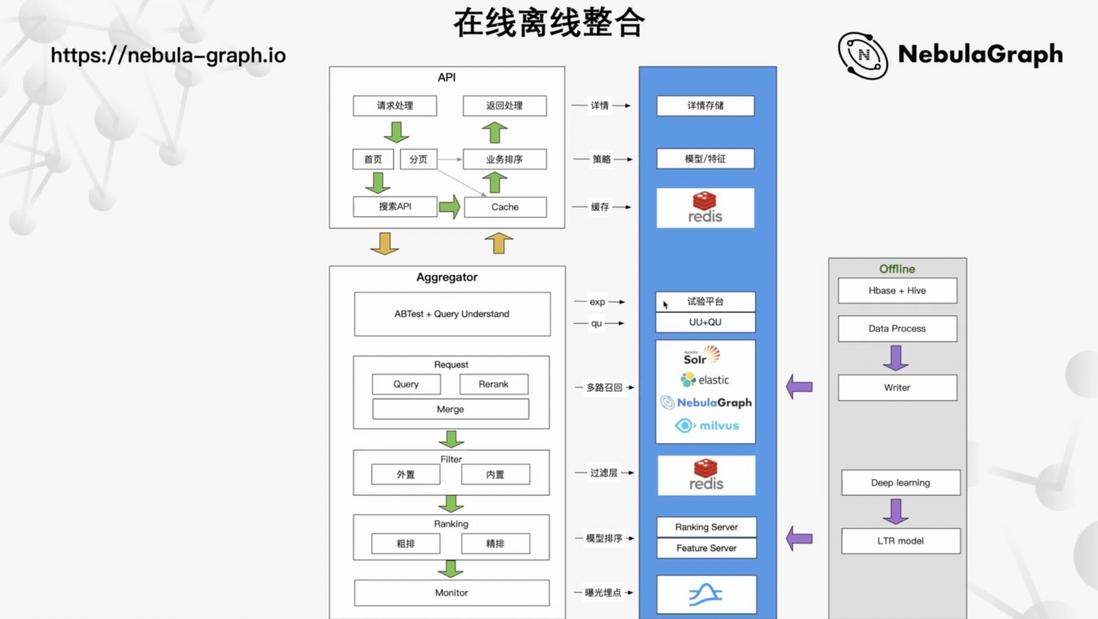

Overall business process

Combining the online and offline architectures, the following figure details API request processing, caching, paging, A/B testing, user profiles, query understanding, and the multi-way recall process.

Platform Architecture

With the online and offline functional architecture introduced, we now turn to how Zhilian Recruitment supports the entire function matrix.

At the foundation, the Zhilian technical team supports the entire function matrix by building three platforms.

At the top is the search and recommendation architecture platform, divided into three modules: data processing, aggregation layer, and machine learning. The data processing module handles data-layer work such as data processing, data synchronization, data merging, and format conversion. The aggregation layer handles intent recognition, A/B testing, online recall, and ranking models. The machine learning module handles feature processing, feature extraction, and model updates.

Below the search and recommendation architecture platform is the search recall engine layer, which consists of Solr and Elasticsearch for inverted indexes, Nebula Graph for the graph index, and Milvus for the vector index.

The bottom layer is the big data platform, which connects to components and data sources such as Pulsar, Flink, HBase, Hive, Redis, and TiDB.

The application of Nebula Graph in recommendation scenarios

Zhilian's data scale

Zhilian's online environment runs on 9 high-end physical machines, each with about 64 to 72 CPU cores and about 256 GB of memory. Each machine hosts 2 storage (storaged) nodes, for 18 storage nodes in total, and 3 to 5 nodes each are deployed for the query service (graphd) and the metadata service (metad). The online environment currently has 2 graph spaces with 15 shards (partitions) in total, running with three replicas.

The test environment is deployed on K8s, and the online deployment will gradually move to K8s as well.

With deployment covered, here is the usage at Zhilian Recruitment: the graph currently holds tens of millions of vertices and on the order of a billion edges. In production, peak QPS exceeds 1,000 and P99 latency is under 50 ms.

The following picture shows the monitoring system developed by Zhilian. It displays monitoring data from Prometheus: node status, current query QPS and latency, and more detailed metrics such as CPU and memory consumption.

Introduction to business scenarios

The following is a brief introduction to the business scenario

Collaborative filtering in recommendation scenarios

A common task in recommendation scenarios is collaborative filtering, which here covers the four cases shown in the lower left corner of the figure above:

- U2U: user1 and user2 are similar users;

- I2I: itemA and itemB are similar items;

- U2I2I: Item-based collaboration to recommend similar items;

- U2U2I: Based on user collaboration, recommend similar users' preferred items;

U2U above means creating a user-to-user relationship, which may be based on similarity at the matrix (vector) level or at the behavior level. The first two cases, U2U and I2I, are the basic collaborative (similarity) relationships: they establish links between users and between items. More complex relationships are then built on top of them, such as recommending similar items to a user through item collaboration (U2I2I), or recommending the preferred items of similar users through user collaboration (U2U2I). Put simply, the goal is that a user can get recommendations of items similar to related items, or of items preferred by similar users, through some relationship.
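The four cases can be illustrated with a small sketch. It is not Zhilian's implementation: the interaction data is made up, and Jaccard overlap is an assumed stand-in for whatever behavior-level or vector-level similarity is actually used.

```python
from collections import defaultdict

# Hypothetical toy data: which items each user interacted with.
interactions = {
    "user1": {"itemA", "itemB"},
    "user2": {"itemA", "itemB", "itemC"},
    "user3": {"itemD"},
}

def jaccard(a, b):
    """Jaccard similarity of two sets (0.0 when both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def similar_users(user, k=1):
    """U2U: the k users whose item sets overlap most with `user`."""
    scores = [(jaccard(interactions[user], items), other)
              for other, items in interactions.items() if other != user]
    scores = [s for s in scores if s[0] > 0]
    return [other for _, other in sorted(scores, reverse=True)[:k]]

def similar_items(item, k=1):
    """I2I: the k items whose user sets overlap most with `item`."""
    users_of = defaultdict(set)
    for u, items in interactions.items():
        for i in items:
            users_of[i].add(u)
    scores = [(jaccard(users_of[item], users), other)
              for other, users in users_of.items() if other != item]
    scores = [s for s in scores if s[0] > 0]
    return [other for _, other in sorted(scores, reverse=True)[:k]]

def u2u2i(user, k=1):
    """U2U2I: items of similar users that `user` has not interacted with."""
    seen = interactions[user]
    recs = set()
    for other in similar_users(user, k):
        recs |= interactions[other] - seen
    return sorted(recs)

def u2i2i(user, k=1):
    """U2I2I: items similar to the ones `user` already interacted with."""
    seen = interactions[user]
    recs = set()
    for item in seen:
        recs |= set(similar_items(item, k)) - seen
    return sorted(recs)
```

In this toy data, `user2` is the only user similar to `user1`, so both two-step paths end up suggesting `itemC`, the one item `user1` has not touched.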

Let's analyze this scenario.

Requirement Analysis of Collaborative Filtering

In recruitment specifically, the relationship is between CVs (resumes) and JDs (job descriptions). Focusing on the middle part of the figure above, CVs and JDs are related through user behavior and through matrix (vector) similarity. For example, a user has applied for a position, or a company's HR has browsed a resume. These behaviors, or the output of some algorithm, establish a relationship between a CV and a JD. At the same time, we need to create relationships between CVs, which is the U2U mentioned above, and between JDs, which is the I2I above. Once these associations exist, interesting things become possible: user A has viewed CV1, so we recommend the similar CV2; user B has browsed some jobs, so we can recommend other JDs based on job similarity.

There is a "hidden" requirement here: attribute filtering. When the system recommends CVs based on CV similarity, it also has to match the relevant attributes, filtering on the desired city, desired salary, and desired industry. Recall must take these factors into account. If the desired city of CV1 is Beijing, it makes no sense to recommend a similar CV whose desired city is Xiamen.

Technical realization

The original technical implementation - Redis

Zhilian's first implementation of collaborative filtering used Redis to store the relationships as key-value pairs for easy lookup. This approach brings clear benefits:

- The structure is simple and can be launched quickly;

- Redis has a low barrier to entry;

- The technology is relatively mature;

- Because Redis is an in-memory database, it is fast, with low latency;

However, at the same time, it also brings some problems:

Attribute filtering cannot be achieved. Filtering on city and salary, as described above, is impossible with the Redis solution. For example, suppose we want to push 10 related positions to a user: we compute the 10 related positions offline and store that relationship. If the user then changes their job-seeking intention or adds more filter conditions, the recommendation has to be computed online in real time, which this design cannot do. It also cannot handle the complex graph relationships mentioned above, whereas with a graph this kind of query is a single 1-hop query.
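A minimal sketch of why the precomputed KV approach falls short. A plain dict stands in for Redis, and the key format, field names, and job data are all hypothetical.

```python
import json

# A plain dict stands in for Redis here; keys and fields are hypothetical.
kv = {}

def offline_build(cv_id, ranked_jobs, top_n=3):
    """Offline job: store only the top-N related job IDs for a CV."""
    kv[f"rec:cv:{cv_id}"] = json.dumps([j["id"] for j in ranked_jobs[:top_n]])

def online_recommend(cv_id, jobs_by_id, city=None):
    """Online lookup: one GET, after which the only option is to post-filter."""
    job_ids = json.loads(kv.get(f"rec:cv:{cv_id}", "[]"))
    jobs = [jobs_by_id[j] for j in job_ids]
    if city is not None:
        jobs = [j for j in jobs if j["city"] == city]
    return [j["id"] for j in jobs]

ranked = [
    {"id": 101, "city": "Beijing"},
    {"id": 102, "city": "Beijing"},
    {"id": 103, "city": "Xiamen"},
    {"id": 104, "city": "Xiamen"},  # relevant, but cut off by top_n
]
jobs_by_id = {j["id"]: j for j in ranked}
offline_build(1, ranked)
```

Here `online_recommend(1, jobs_by_id, city="Xiamen")` can only return job 103: job 104 also matches the new condition, but it was dropped when the list was truncated offline, so the stored list cannot be refilled at query time.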

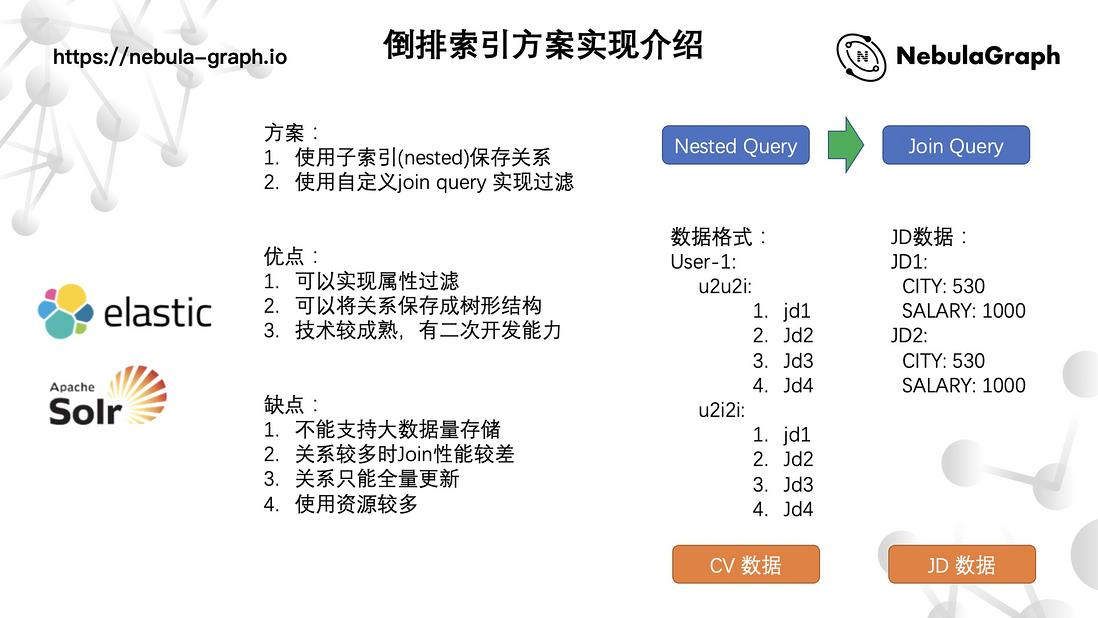

A second attempt with inverted indexes - ES and Solr

Because Zhilian has accumulated experience with inverted indexes, we tried them next. Lucene has the concept of an index: the relationships can be stored as a nested sub-index that holds the relationship IDs, and a JOIN query then performs a cross-index JOIN so that attributes can be filtered through the JOIN. Compared with the Redis implementation, this approach stores the relationships and also supports attribute filtering. But during development we found some problems:

- It cannot support large data volumes. When there are many relationships, the corresponding posting list becomes very large. Lucene updates by marking documents as deleted and then inserting new ones, and this has to be repeated for each sub-index.

- JOIN performance is poor when there are many relationships. The cross-index JOIN query works, but it is slow.

- Relationships can only be updated in full. When designing the cross-index JOIN, we kept it on a single machine so that all JOIN operations run within one shard, but this requires every shard to store the full JOIN index data.

- It uses a lot of resources. If the cross-index JOIN spans servers, performance suffers, and tuning it consumes even more resources.

The right side of the figure above shows the concrete implementation. The data format shown is how the relationships are stored, and attribute filtering is then performed through a JOIN against the JD data. Although this solution did implement the feature, it was never run in production.

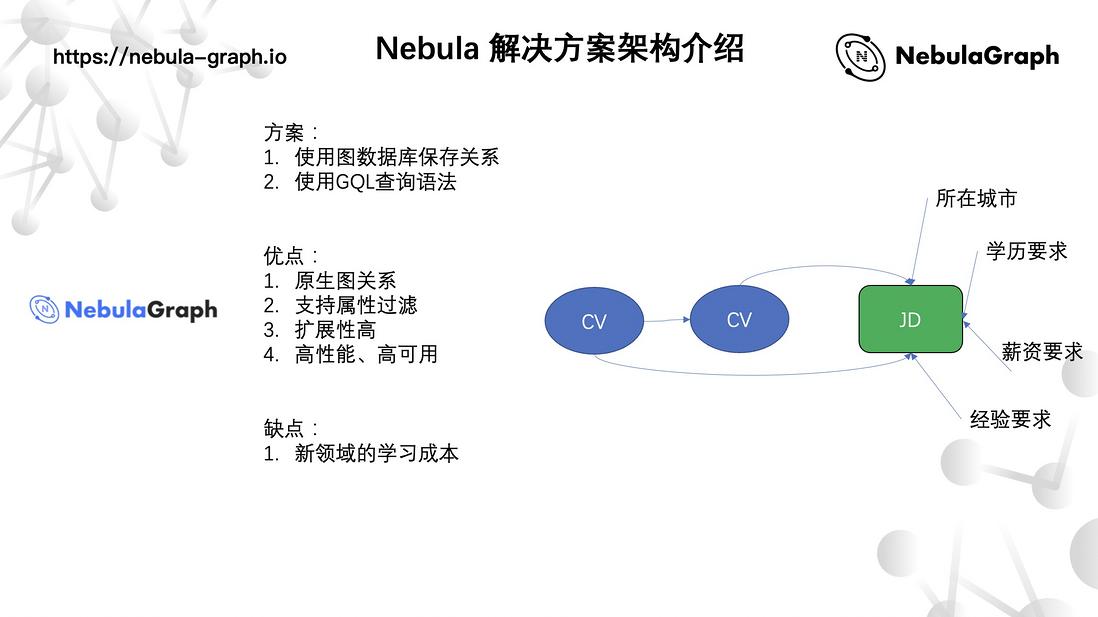

Graph Index - Nebula Graph

Our research showed that Nebula Graph is highly regarded in the industry, so Zhilian uses Nebula Graph to implement the graph index. In scenarios like U2U and U2I, CVs and JDs are stored as vertices and relationships as edges. For attribute filtering, as shown in the figure above, JD attributes such as city, education requirement, salary requirement, and experience requirement are stored as vertex properties. For relevance, a score is stored on the relationship edge, and results are finally sorted by that score.
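The modeling can be sketched with a tiny in-memory property graph. This is only an illustration of the idea (properties on vertices, a score on each edge, one hop plus filter plus sort); the class, IDs, and property values are hypothetical and it does not use Nebula Graph.

```python
from dataclasses import dataclass, field

@dataclass
class Graph:
    vertices: dict = field(default_factory=dict)   # vid -> properties
    out_edges: dict = field(default_factory=dict)  # vid -> [(dst, score)]

    def add_vertex(self, vid, **props):
        self.vertices[vid] = props

    def add_edge(self, src, dst, score):
        self.out_edges.setdefault(src, []).append((dst, score))

    def recommend(self, src, limit=10, **required):
        """1-hop: follow edges from src, filter on vertex properties,
        then sort by the edge score, highest first."""
        hits = []
        for dst, score in self.out_edges.get(src, []):
            props = self.vertices.get(dst, {})
            if all(props.get(k) == v for k, v in required.items()):
                hits.append((score, dst))
        hits.sort(reverse=True)
        return [dst for _, dst in hits[:limit]]

g = Graph()
g.add_vertex("cv1", city="Beijing")
g.add_vertex("jd1", city="Beijing", education="bachelor")
g.add_vertex("jd2", city="Xiamen", education="bachelor")
g.add_vertex("jd3", city="Beijing", education="master")
g.add_edge("cv1", "jd1", 0.7)
g.add_edge("cv1", "jd2", 0.9)
g.add_edge("cv1", "jd3", 0.8)
```

Because filtering happens at query time on the neighbor's properties, changing the condition (for example `g.recommend("cv1", city="Beijing")` versus `g.recommend("cv1", education="bachelor")`) needs no offline recomputation.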

The only disadvantage of the new solution is the cost of learning a new field, but once you are familiar with graph databases it is much more convenient.

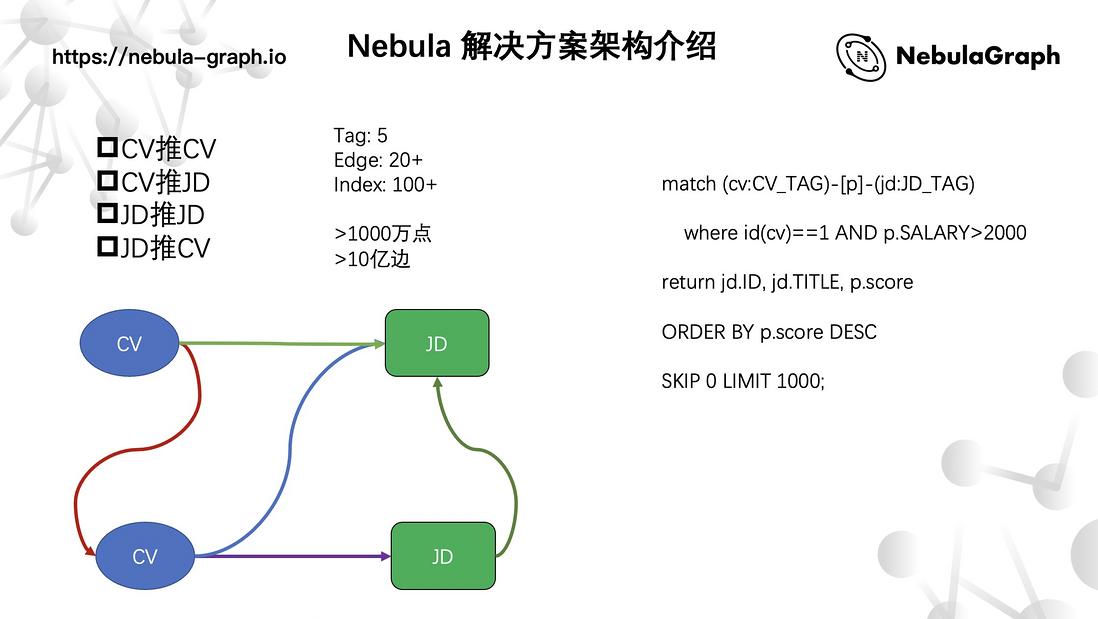

Recommendations based on Nebula Graph

All of the concrete scenarios (CV recommends CV, CV recommends JD, JD recommends JD, JD recommends CV) can be satisfied with statements like the following:

    MATCH (cv:CV_TAG)-[p]-(jd:JD_TAG)
    WHERE id(cv)==1 AND p.SALARY>2000
    RETURN jd.ID, jd.TITLE, p.score
    ORDER BY p.score DESC
    SKIP 0 LIMIT 1000;

This is the nGQL statement for the CV-recommends-JD case: the query starts from the resume (CV), applies attribute filters such as salary, sorts with ORDER BY on the similarity score stored on the edge, and finally returns the recommended JD information.

Because the relationships are relatively simple, the whole business involves 5 tags and 20+ edge types in total, along with more than 100 indexes.

Summary of problems in the use of Nebula Graph

Data write

Data writing is mainly divided into three aspects:

The first is the T+1 data refresh. Because the data is processed offline in advance, serving it to the online business involves a T+1 refresh. At the first refresh the data may be cold, or there may be no data at all. Originally the relationship (edge) data was written directly, even though the source vertex of an edge might not exist yet, and the missing vertices had to be inserted after all the edges were written. We improved this by inserting the vertex data first and then writing the edge data, so that the relationships are created cleanly. The refresh has one more issue: the edge data is recomputed every T+1 cycle, so the previous day's edges are no longer valid. The existing relationships must be deleted before the new ones are written.
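The ordering described above can be sketched as a small planning function. It only computes what to write and in which order; the function name and the set-based inputs are assumptions for illustration, and no database calls are made.

```python
def plan_refresh(existing_vertices, old_edges, new_edges):
    """Return the three ordered steps of a T+1 refresh.

    Edges are (src, dst) tuples and all inputs are plain sets.
    Step 1 inserts any endpoint that does not exist yet, step 2 removes
    yesterday's edges, step 3 writes today's edges.
    """
    endpoints = {v for edge in new_edges for v in edge}
    return {
        "insert_vertices": sorted(endpoints - existing_vertices),
        "delete_edges": sorted(old_edges),
        "insert_edges": sorted(new_edges),
    }

plan = plan_refresh(
    existing_vertices={"cv1", "jd1"},
    old_edges={("cv1", "jd1")},
    new_edges={("cv1", "jd2")},
)
```

Python dicts keep insertion order, so iterating over `plan` yields the steps in the order they should be executed: vertices first, then deletions, then the new edges.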

The second is data format conversion. We previously stored relationships in inverted indexes or KV stores, and the graph structure differs from both. As with the relationships just mentioned, which edges to create between two vertices and what data to store on each edge all had to be redesigned. Zhilian developed an internal tool for customizing the schema, which makes it easy to configure which data is stored as vertices and which as edges. When other businesses onboard, this small tool lets them define the schema without writing code.

The last problem is stale data caused by continuous data growth. Take cumulative online active users: a user who was active three months ago may be silent now, but under an accumulate-only mechanism the active-user data keeps growing, which puts storage pressure on the servers. We therefore add a TTL attribute to time-sensitive features so that expired active users are deleted regularly.
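The expiry rule behind a TTL can be sketched as follows. The 90-day window is an assumption taken from the "three months" example, the function names are hypothetical, and the code only mimics the rule in memory rather than configuring the database.

```python
import time

TTL_DURATION = 90 * 24 * 3600  # assumed: roughly three months, in seconds

def is_expired(last_active_ts, now=None, ttl=TTL_DURATION):
    """A record expires once its timestamp plus the TTL lies in the past."""
    now = time.time() if now is None else now
    return last_active_ts + ttl < now

def purge_expired(active_users, now=None):
    """Keep only users whose last activity is still inside the TTL window."""
    return {uid: ts for uid, ts in active_users.items()
            if not is_expired(ts, now)}
```

With a database-side TTL the purge step is handled by the storage engine, so the application only needs to keep the timestamp column up to date.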

Data query

There are three main problems in data query:

- The multi-valued attribute problem

- The Java client session problem

- The syntax update problem

First, Nebula itself does not support multi-valued properties. One option is to model each value as its own vertex connected by an edge, but that adds the cost of an extra one-hop query. We found a simpler way to handle multi-valued properties. Suppose we want to store 3 cities, and the ID of city A is a prefix of the ID of city B. With plain text storage the search results are inaccurate: as in the figure above, a query for city 5 also matches city 530. So when writing the data we add delimiters before and after each value, so that prefix matching no longer returns other values by mistake.
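A minimal sketch of the delimiter trick, using the city IDs 5 and 530 from the example. The `|` separator and the function names are assumptions; any character that cannot occur inside an ID would do.

```python
SEP = "|"  # assumed delimiter; any character absent from the IDs works

def encode(values):
    """['5', '530'] -> '|5|530|' so every value is wrapped in delimiters."""
    return SEP + SEP.join(values) + SEP if values else ""

def naive_contains(stored, value):
    """Plain substring match on undelimited text: prone to false hits."""
    return value in stored

def safe_contains(stored, value):
    """Match the value together with its surrounding delimiters."""
    return f"{SEP}{value}{SEP}" in stored
```

With plain text, `naive_contains("530,531", "5")` is a false positive, while `safe_contains(encode(["530", "531"]), "5")` correctly reports no match.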

The second is session management. Zhilian has created multiple spaces in one cluster. Normally, with multiple spaces, you have to switch space before querying, which costs performance. Zhilian therefore implemented session sharing: each session stays connected to one space, so sessions in the same pool never need to switch space.
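The idea of one session pool per space can be sketched like this. `FakeSession` is a hypothetical stand-in for a real client session (the article's client is Java; this sketch does not reproduce its API), and the pool is deliberately simplistic and not thread-safe.

```python
class FakeSession:
    """Stand-in for a client session; counts how often a space is switched."""
    use_calls = 0

    def __init__(self):
        self.space = None

    def use(self, space):
        FakeSession.use_calls += 1
        self.space = space

    def execute(self, stmt):
        return f"[{self.space}] {stmt}"


class SpaceSessionPools:
    """One pool of sessions per graph space; a session never changes space."""

    def __init__(self, size=2):
        self.size = size
        self.pools = {}

    def _pool(self, space):
        if space not in self.pools:
            sessions = [FakeSession() for _ in range(self.size)]
            for s in sessions:
                s.use(space)  # switch once, at creation time
            self.pools[space] = sessions
        return self.pools[space]

    def execute(self, space, stmt):
        pool = self._pool(space)
        session = pool.pop(0)      # borrow a session bound to this space
        try:
            return session.execute(stmt)
        finally:
            pool.append(session)   # return it to the same pool
```

However many queries are executed, the number of space switches stays at `size` per space, because the switch happens only when a pool is first created.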

The last is the syntax update problem. We started with Nebula Graph v2.0.1 and later upgraded to v2.6, going through a syntax iteration along the way: switching from the original GO FROM to MATCH. Engineers writing business logic do not care which query syntax is used underneath. Zhilian therefore implemented a DSL that abstracts the query language one layer up and converts business-level queries into the corresponding nGQL. The advantage of the DSL is that a scenario's queries are no longer tied to a single syntax: where MATCH performs well, use MATCH; where GO performs well, use GO.

Implementation of Unified DSL

The figure above shows the general idea of the unified DSL. Start from a vertex such as a CV (the blue block at the top of the figure), join an edge (the blue block in the middle), and land on another vertex (the blue block at the bottom). Finally, select outputs the fields, sort orders the results, and limit handles paging.

In terms of implementation, the graph index mainly uses the match, range, and join functions. match is used for equality matching, and range for interval queries such as a time interval or a numeric range. join expresses how one vertex is related to another. In addition to these 3 basic functions, Boolean operations are also supported.

In this way we unified the DSL: Nebula, Solr, and Milvus all share one usage, and a single DSL can call different indexes.
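A rough sketch of how such a DSL could be translated into a MATCH statement. The dict layout, key names, and the fixed aliases `cv`, `p`, and `jd` are all assumptions made for illustration; Zhilian's actual DSL is not shown in the article. Only the MATCH backend is sketched, and values are interpolated without escaping.

```python
def render_filter(f):
    """Render one filter: 'match' is equality, 'range' is an open interval."""
    if f["op"] == "match":
        return f"{f['field']}=={f['value']!r}"
    if f["op"] == "range":
        parts = []
        if "gt" in f:
            parts.append(f"{f['field']}>{f['gt']}")
        if "lt" in f:
            parts.append(f"{f['field']}<{f['lt']}")
        if not parts:
            raise ValueError("range filter needs 'gt' or 'lt'")
        return " AND ".join(parts)
    raise ValueError(f"unsupported op: {f['op']}")

def to_match(dsl):
    """Translate the dict-based DSL into a single nGQL MATCH string."""
    src, dst = dsl["from"], dsl["join"]
    conds = [f"id(cv)=={src['id']!r}"]
    conds += [render_filter(f) for f in dsl.get("filters", [])]
    return (
        f"MATCH (cv:{src['tag']})-[p]-(jd:{dst['to_tag']}) "
        f"WHERE {' AND '.join(conds)} "
        f"RETURN {', '.join(dsl['select'])} "
        f"ORDER BY {dsl['sort']} DESC "
        f"SKIP {dsl.get('offset', 0)} LIMIT {dsl['limit']};"
    )

dsl = {
    "from": {"tag": "CV_TAG", "id": 1},           # start vertex
    "join": {"to_tag": "JD_TAG"},                  # edge to the target vertex
    "filters": [{"op": "range", "field": "p.SALARY", "gt": 2000}],
    "select": ["jd.ID", "jd.TITLE", "p.score"],
    "sort": "p.score",
    "limit": 1000,
}
```

Because callers only build the dict, a second renderer that emits GO statements, or a query for another engine, could be swapped in behind the same interface without touching business code.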

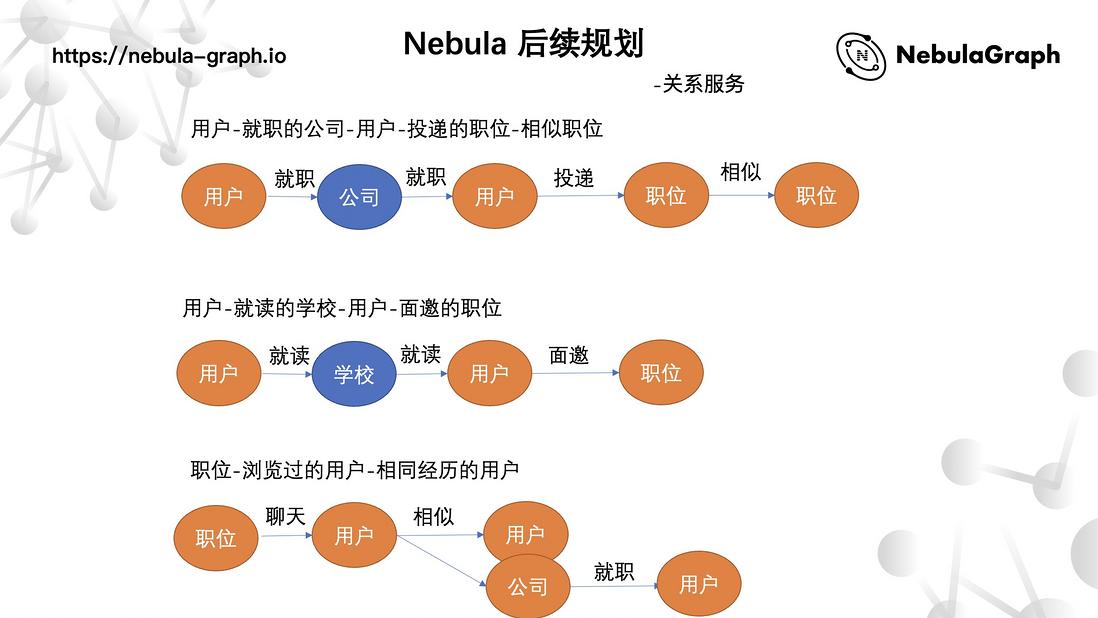

Zhilian's follow-up plans for Nebula Graph

More interesting and complex scenarios

The business implementations described above are based on data processed offline. Zhilian will later handle online, real-time relationships. As shown in the figure above, the relationship between a user and a position can be complex. For example, both may be related to a certain company, the position may belong to a company where the user has worked before, the user may prefer to apply in a certain field or industry, or the position may require proficiency in a certain skill. Together these form a complex network of relationships.

Zhilian's next step is to build this complex relationship network and do more interesting things with it. For example, if a user has worked at company A, we can find their colleagues through this relationship and make related recommendations. If alumni from the years above or below the user at the same school tend to apply for a certain position or company, related recommendations can be made as well. Combined with behavioral data, such as who has chatted about the positions the user applied for, and the user's job-seeking requirements, such as salary level, city, and industry, related recommendations can be made online.

More convenient data display

At present, data is queried through a specific query syntax. The next step is to lower the barrier so that more people can query the data.

Higher resource utilization

At present, the resource utilization of our deployment is not high. We deploy two service nodes per machine, and each physical machine has a high-end configuration, so CPU and memory usage stay low. Moving to K8s lets us spread the service nodes out and use resources more flexibly. If a physical node goes down, K8s can quickly bring up another service, which also improves disaster recovery. In addition, we now have multiple graph spaces; with K8s, different spaces can be isolated to avoid interference between graph spaces.

Better Nebula Graph

The last point is upgrading Nebula Graph. Zhilian currently runs v2.6. The v3.0 release from the community is reported to have optimized MATCH performance, so we plan to try upgrading.

