In real-time interactive scenarios, video quality is a key factor in the viewer's experience. Evaluating video quality in real time, however, has long been an unsolved problem for the industry: the user's subjective experience of video quality is an unknown that needs to be made known.

The unknown is often the hardest part to overcome. Shengwang has been continuously exploring video quality evaluation methods suited to real-time interaction, and the result is a MOS-score evaluation model for the subjective experience of video quality. Using deep learning, it provides a no-reference estimate of the MOS (mean opinion score) for the subjective experience of video quality in real-time interactive scenarios. We call this evaluation system VQA (Video Quality Assessment).

Shengwang VQA is a set of objective indicators for evaluating the subjective experience of video quality. Before Shengwang VQA, the industry already had two ways of evaluating video quality. The first is objective video quality assessment, which is used mainly in streaming playback scenarios and evaluates quality according to how much information from the original reference video is available. The second is subjective video quality assessment, which traditionally relies on people watching videos and scoring them. Although this reflects the audience's perception of video quality fairly directly, it is time-consuming, labor-intensive, costly, and prone to subjective bias.

Neither of these traditional methods is well suited to real-time interactive scenarios. To solve this, Shengwang built a large-scale database of subjective video quality ratings and, based on it, trained a VQA model that runs on mobile devices. The model uses deep learning to estimate the subjective-experience MOS score of the video at the receiving end of a real-time interactive session, which improves evaluation efficiency and makes real-time video quality assessment possible.

Put simply, we built a database of subjective video quality ratings, designed a deep learning model, trained it on a large number of video and MOS-score pairs, and finally applied it to real-time interactive scenarios so that it accurately approximates the subjective MOS score of video quality. The difficulty lies in two places: 1. how to collect the dataset, that is, how to quantify people's subjective evaluation of video quality; 2. how to build the model so that it can run on any receiving device and evaluate the quality at that device in real time.

Collecting a professional, rigorous, and reliable video quality dataset

To make the dataset professional, rigorous, and reliable, Shengwang first ensured, when assembling the video material, that the content came from a wide range of sources, so that raters would not suffer visual fatigue while scoring. The material also needs to be balanced across quality ranges: too many clips in some ranges and too few in others would skew the mean of the subsequent scores. The figure below shows the score distribution we collected for one video:

Secondly, to match real-time interactive scenarios more closely, Shengwang's dataset was designed rigorously and covers many kinds of video impairment and distortion, including low light with noise, motion blur, garbled frames, blocking artifacts, motion blur from camera shake, hue, saturation, and highlights with noise. Scores range from 1 to 5. Each 0.5-point step forms one quality interval, and scores within an interval are given to a precision of 0.1, so the granularity is fine and each interval corresponds to a detailed standard.

Finally, in the data-cleaning stage, we assemble a panel of at least 15 raters in line with ITU standards. We first compute the correlation between each rater's scores and the overall mean, remove the raters with low correlation, and then average the scores of the remaining raters to obtain the final subjective-experience MOS score for each video. Although raters differ in where they draw the absolute line between "good" and "bad", and in how sensitive they are to quality impairments, their relative judgments of "better" and "worse" remain consistent.
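The cleaning step can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions, not Shengwang's actual pipeline: the function names, the Pearson correlation measure, the `min_corr` threshold of 0.75, and the toy ratings are all hypothetical, and the toy panel has four raters where the real procedure uses at least 15.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length score lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant rater carries no ranking information
    return cov / sqrt(var_x * var_y)


def screen_and_average(ratings, min_corr=0.75):
    """Drop raters that correlate poorly with the overall mean, then average.

    ratings: dict mapping rater id -> list of scores, one per video,
             all lists in the same video order.
    min_corr: hypothetical cut-off; the article does not state one.
    Returns (kept_raters, mos_per_video).
    """
    raters = list(ratings)
    n_videos = len(ratings[raters[0]])
    # Overall mean score per video across all raters.
    overall = [sum(ratings[r][i] for r in raters) / len(raters)
               for i in range(n_videos)]
    kept = [r for r in raters if pearson(ratings[r], overall) >= min_corr]
    if not kept:
        raise ValueError("no rater passed the correlation threshold")
    mos = [sum(ratings[r][i] for r in kept) / len(kept)
           for i in range(n_videos)]
    return kept, mos


# Toy data: rater "d" ranks the videos almost in reverse order.
ratings = {
    "a": [4.5, 3.0, 1.5, 2.5],
    "b": [4.0, 3.5, 2.0, 2.5],
    "c": [5.0, 3.0, 1.0, 3.0],
    "d": [1.5, 4.0, 4.5, 2.0],
}
kept, mos = screen_and_average(ratings)
print(kept, [round(m, 2) for m in mos])
```

Screening on correlation rather than on absolute score differences fits the observation above: raters may disagree about where "good" starts, but a reliable rater still orders the videos the same way as the rest of the panel.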

Building a mobile-oriented MOS evaluation model for the subjective experience of video quality

Once the data has been collected, the next step is to use deep learning to build, from this database, a MOS evaluation model for the subjective video experience, so that the model can replace manual scoring. In real-time interactive scenarios the receiving end has no access to a lossless reference video, so Shengwang's approach is to define objective VQA as a no-reference evaluation tool that operates at the decoded resolution on the receiving end, and to use deep learning to monitor the quality of the decoded video.

● Academic rigor in model design: While training the deep learning model, we drew on a number of academic papers (see the references at the end of the article). For example, when features are extracted from the original video in non-end-to-end training, we found that sampling in the spatial domain has the greatest impact on performance, while sampling in the temporal domain yields the highest correlation with the MOS of the original video [1]. In addition, the quality experience is affected not only by spatial-domain characteristics but also by temporal-domain distortion, including a temporal hysteresis effect [2]. This effect corresponds to two behaviors: the subjective experience drops immediately when video quality drops, but improves only slowly when video quality recovers. Shengwang takes this phenomenon into account in its modeling.

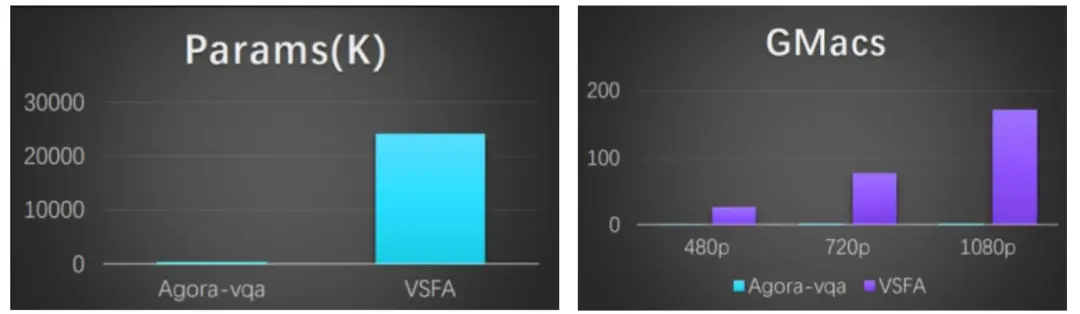

● An ultra-small mobile model with 99.1% fewer parameters: Because many real-time interactive scenarios now run on mobile devices, Shengwang designed an ultra-small model that is easier to deploy there. The parameter count is reduced by 99.1% and the amount of computation by 99.4%. Even low-end phones can run it without strain, which makes it possible to survey video quality across all devices. We have also implemented a new deep learning model compression method. Starting from the lightweight version, it further reduces the parameter count by 59% and the amount of computation by 49.2% while maintaining the correlation of the model's predictions. It can also serve as a general method for simplifying models in other deep learning tasks.

● Performance comparable to, or better than, large models published in academia: On the one hand, the prediction correlation of Shengwang's small VQA model is comparable to that of large models published in academia, and even slightly better than some of them. We tested the Shengwang VQA model and four video quality evaluation models published by the academic community (IQA, BRISQUE, V-BLINDS, and VSFA) on two large public datasets, KoNViD-1k and LIVE-VQC. The experimental results are as follows:

On the other hand, compared with the large deep learning models from academia, the Shengwang VQA model has a large computational advantage: both its parameter count and its amount of computation are far lower than those of the VSFA model. This advantage makes it possible for VQA to evaluate the video call experience directly on the device, which saves substantial computing resources while still guaranteeing a certain level of accuracy.

● Good generalization ability: In deep learning, generalization refers to an algorithm's ability to adapt to fresh samples: a model that performs well on the training set should also give reasonable results on a test set of unseen data. In its early stage, Shengwang's VQA model was tuned primarily on data from internal video conferencing tools and education scenarios, yet in later tests on entertainment scenarios the correlation exceeded 84%. This generalization ability lays a good foundation for building an industry-recognized video quality evaluation standard on top of Shengwang VQA.

● Better suited to RTE real-time interactive scenarios: Some comparable VQA algorithms in the industry are used mainly for non-real-time streaming playback, and because of the limitations of their evaluation methods, their results often differ noticeably from users' real subjective scores. Shengwang's VQA model can be applied to many real-time interactive scenarios, and its subjective video quality scores are highly consistent with what users actually perceive. In addition, the Shengwang VQA model does not require video data to be uploaded to a server. It runs directly on the device in real time, which both saves resources and avoids data privacy concerns for customers.
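The temporal hysteresis effect mentioned under model design (experience drops immediately when quality drops, but recovers slowly when quality improves) can be illustrated with a simple asymmetric filter. This is a hedged sketch only: it is neither the model from reference [2] nor Shengwang's implementation, and the function name and the `recovery_rate` parameter are hypothetical.

```python
def hysteresis_smooth(scores, recovery_rate=0.2):
    """Turn per-frame (or per-clip) quality scores into a 'perceived' curve.

    Drops are followed immediately; improvements are followed only
    partially at each step, so recovery is gradual.

    recovery_rate: hypothetical fraction (0..1] of the remaining gap
                   that is closed per step while quality is improving.
    """
    if not 0 < recovery_rate <= 1:
        raise ValueError("recovery_rate must be in (0, 1]")
    perceived = []
    current = None
    for s in scores:
        if current is None or s <= current:
            current = float(s)                       # quality drop: felt at once
        else:
            current += recovery_rate * (s - current)  # quality gain: felt slowly
        perceived.append(current)
    return perceived


# Quality dips for one step and then returns to its previous level.
print(hysteresis_smooth([4.0, 2.0, 4.0, 4.0], recovery_rate=0.5))
```

In this toy run the perceived score falls to 2.0 as soon as the impairment appears, but it is still below 4.0 two steps after the video itself has recovered, which is the asymmetry the hysteresis effect describes.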

From XLA to VQA: the evolution from QoS to QoE indicators

In real-time interaction, QoS (quality of service) mainly reflects the performance and quality of the audio and video technical service, while QoE (quality of experience) represents the user's subjective perception of the quality and performance of the real-time interactive service. Shengwang previously launched XLA, a quality-of-experience standard for real-time interaction, which includes four indicators: 5s login success rate, 600ms video freeze rate, 200ms audio freeze rate, and <400ms network delay. The monthly compliance rate of each indicator must exceed 99.5%. These four XLA indicators mainly reflect the quality of service (QoS) of real-time audio and video. Shengwang VQA reflects more directly the user's subjective quality of experience (QoE) for video, which means that quality indicators for real-time interaction are evolving from QoS to QoE.

For enterprise customers and developers, Shengwang VQA also delivers value in several ways:

1. Avoiding pitfalls in vendor selection. When choosing a real-time audio and video service provider, many enterprises and developers rely on the subjective impression from a few audio and video call demos or simple integration tests. Shengwang VQA gives enterprises a quantifiable standard for selecting a provider and a clearer view of how users subjectively experience the provider's audio and video quality.

2. Helping ToB enterprises provide video quality assessment tools to their customers. Enterprises that provide enterprise-grade video conferencing, collaboration, training, and other industry video systems can use Shengwang VQA to quantify video quality effectively, so that they can present the picture quality of their own products and services in a more intuitive, quantitative way.

3. Helping optimize the product experience. Shengwang VQA turns the previously unknown subjective experience of users in real-time interaction into something known, which helps customers with experience evaluation and fault detection on the product side. Only with a fuller understanding of both objective service quality indicators and subjective user experience quality can the product experience be further optimized and the user experience ultimately improved.

Future outlook

Shengwang VQA still has a long way to go. For example, the VQA dataset used for model training consists mostly of video clips lasting 4 to 10 s. In real calls the recency effect has to be considered, and simply tracking and reporting clip scores linearly may not accurately fit the user's overall subjective impression. As a next step, we plan to take clarity, smoothness, interaction delay, audio and video synchronization, and other factors into account to form a time-varying method of experience evaluation.

Shengwang VQA is also expected to be open-sourced in the future. We hope to work with industry vendors and developers to keep VQA evolving and eventually to form a subjective video quality evaluation standard recognized across the RTE industry.

At present, Shengwang VQA is being iterated and refined on internal systems and will be opened up gradually. The plan is to integrate the online evaluation function into the SDK and to release an offline evaluation tool at the same time. If you would like to learn more about Shengwang VQA or try it out, click "Read the original" below and leave your details, and we will get in touch with you.
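To make the recency concern concrete, the sketch below contrasts a plain average of clip-level MOS scores with a recency-weighted average in which later clips count more. It is purely illustrative and is not the time-varying method Shengwang plans to build: the exponential weighting, the function name, and the `decay` parameter are all assumptions.

```python
def recency_weighted_mos(clip_scores, decay=0.8):
    """Pool clip-level MOS scores so that recent clips weigh more.

    clip_scores: scores in chronological order (oldest first).
    decay: hypothetical factor in (0, 1]; each step back in time
           multiplies a clip's weight by this value. decay=1.0
           reduces to the plain (linear) average.
    """
    if not clip_scores:
        raise ValueError("clip_scores must not be empty")
    if not 0 < decay <= 1:
        raise ValueError("decay must be in (0, 1]")
    n = len(clip_scores)
    # The newest clip gets weight 1, the one before it `decay`, and so on.
    weights = [decay ** (n - 1 - i) for i in range(n)]
    return sum(w * s for w, s in zip(weights, clip_scores)) / sum(weights)


# A call that was good for two clips and degraded in the last one.
scores = [4.0, 4.0, 2.0]
plain_mean = sum(scores) / len(scores)
weighted = recency_weighted_mos(scores, decay=0.5)
print(round(plain_mean, 2), round(weighted, 2))
```

With these toy numbers the plain average stays above 3, while the recency-weighted value falls below 3 because the most recent, degraded clip dominates, which is closer to how a user who has just seen the picture break up is likely to rate the call.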

References:

[1] Z. Ying, M. Mandal, D. Ghadiyaram and A. Bovik, "Patch-VQ: 'Patching Up' the Video Quality Problem," 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 2021, pp. 14014-14024.

[2] K. Seshadrinathan and A. C. Bovik, "Temporal hysteresis model of time varying subjective video quality," 2011 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Prague, Czech Republic, 2011, pp. 1153-1156.
