By: F5's Owen Garrett Senior Director Product Management January 21, 2016

When deployed correctly, caching is one of the quickest ways to speed up web content. Caching not only brings content closer to the end user (thereby reducing latency), it also reduces the number of requests to upstream origin servers, increasing capacity and reducing bandwidth costs.

The availability of globally distributed cloud platforms such as AWS and global DNS-based load balancing systems such as Route 53 allows you to create your own global content delivery network (CDN).

In this article, we'll look at how NGINX Open Source and NGINX Plus cache and deliver traffic accessed using byte-range requests. A common use case is HTML5 MP4 video, where requests use byte ranges to implement trick-play (skip and seek) video playback. Our goal is to implement a caching solution for video delivery that supports byte ranges and minimizes user latency and upstream network traffic.

Editor: The cache slice method discussed in "Fill the cache slice by slice" was introduced in NGINX Plus R8. For an overview of all the new features in this release, see our blog: NGINX Plus R8 Release.

Our testing framework

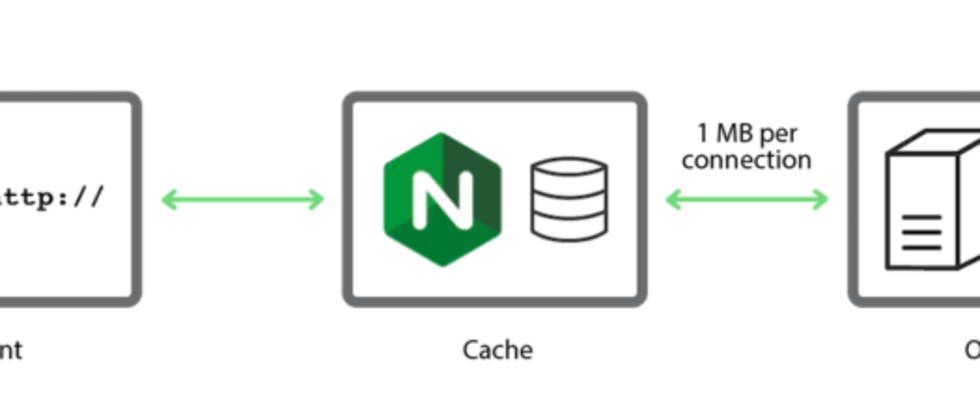

We needed a simple, reproducible testing framework to investigate alternative strategies for caching with NGINX.

A simple, repeatable testbed for studying caching strategies in NGINX

We start with a 10-MB test file that contains byte offsets every 10 bytes so we can verify that byte range requests are working correctly:

origin$ perl -e 'foreach $i ( 0 ... 1024*1024-1 ) { printf "%09d\n", $i*10 }' > 10Mb.txt

The first lines of the file are as follows:

origin$ head 10Mb.txt
000000000
000000010
000000020
000000030
000000040
000000050
000000060
000000070
000000080
000000090

A curl request for an intermediate byte range (500,000 to 500,009) in the file returns the expected byte range:

client$ curl -r 500000-500009 http://origin/10Mb.txt
000500000

Now let's add a bandwidth limit of 1 MB/s for a single connection between the origin server and the NGINX proxy cache:

origin# tc qdisc add dev eth1 handle 1: root htb default 11
origin# tc class add dev eth1 parent 1: classid 1:1 htb rate 1000Mbps
origin# tc class add dev eth1 parent 1:1 classid 1:11 htb rate 1Mbps

To check that the bandwidth limit is working as expected, we retrieve the entire file directly from the origin server:

cache$ time curl -o /tmp/foo http://origin/10Mb.txt
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100 10.0M  100 10.0M    0     0   933k      0  0:00:10  0:00:10 --:--:--  933k

real 0m10.993s
user 0m0.460s
sys 0m0.127s

It took nearly 11 seconds to deliver the file, which is a reasonable simulation of the performance of edge caches pulling large files from origin servers over bandwidth-constrained WAN networks.
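The byte-range check on the test file can also be reproduced locally, which is handy when comparing against what a cache returns. The sketch below is an illustration, not part of the original test setup: `byte_range` is a hypothetical helper, the sample file is a shortened stand-in for 10Mb.txt, and it assumes a `head` that supports `-c` (the GNU and BSD versions do).

```shell
# Print bytes START..END (inclusive, zero-based) of FILE,
# mirroring what `curl -r START-END` asks a server for.
byte_range() {
    # tail -c +N starts output at byte N counted from 1, so add 1 to the offset.
    tail -c "+$(( $2 + 1 ))" "$1" | head -c "$(( $3 - $2 + 1 ))"
}

# Build a small sample in the same format as 10Mb.txt
# (1,024 lines instead of 1,048,576).
sample=$(mktemp)
awk 'BEGIN { for (i = 0; i < 1024; i++) printf "%09d\n", i * 10 }' > "$sample"

byte_range "$sample" 5000 5009   # prints 000005000
rm -f "$sample"
```

Because every line is exactly 10 bytes long, the text returned for any range that starts on a multiple of 10 is simply its own starting offset, which makes a wrong range easy to spot.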

Default Byte Range Cache Behavior for NGINX

Once NGINX has cached the entire resource, it serves byte-range requests directly from the cached copy on disk.

What happens when content is not cached? When NGINX receives a byte-range request for uncached content, it requests the entire file (not the byte-range) from the origin server and starts streaming the response to temporary storage.

Once NGINX receives the data it needs to satisfy the client's original byte-range request, NGINX sends the data to the client. Behind the scenes, NGINX continues to stream the full response to a file in temporary storage. After the transfer is complete, NGINX moves the file to the cache.

We can easily demonstrate the default behavior with the following simple NGINX configuration:

proxy_cache_path /tmp/mycache keys_zone=mycache:10m;

server {
    listen 80;

    proxy_cache mycache;

    location / {
        proxy_pass http://origin:80;
    }
}

We first empty the cache:

cache# rm -rf /tmp/mycache/*

Then we request ten bytes from the middle of 10Mb.txt:

client$ time curl -r 5000000-5000009 http://cache/10Mb.txt
005000000

real 0m5.352s
user 0m0.007s
sys 0m0.002s

NGINX sends a request to the origin server for the entire 10Mb.txt file and starts loading it into the cache. Once the requested byte range is cached, NGINX delivers it to the client. As reported by the time command, this happens in a little over 5 seconds.
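The "little over 5 seconds" figure can be sanity-checked with simple arithmetic. The sketch below assumes the file streams from byte 0 at the average speed curl reported earlier (933k, where curl's "k" means 1,024 bytes); `ms_until_offset` is a hypothetical helper introduced only for this illustration.

```shell
# Milliseconds until the byte at OFFSET has arrived when a file streams
# from its start at RATE bytes per second (integer math, rounded down).
ms_until_offset() {
    echo $(( ($1 + 1) * 1000 / $2 ))
}

# Last requested byte is 5000009; 933k is 933 * 1024 = 955392 bytes per second.
ms_until_offset 5000009 $(( 933 * 1024 ))   # prints 5233
```

The estimate of about 5.2 seconds is close to the 5.352 seconds measured above; the difference is plausibly connection setup and proxy overhead.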

In our earlier test, transferring the entire file took just over 10 seconds, which means NGINX needs about 5 more seconds to retrieve and cache the rest of 10Mb.txt after it delivers the intermediate byte range to the client. The access log for the virtual server records the transfer of the complete file, 10,486,039 bytes (10 MB), with status code 200:

192.168.56.10 - - [08/Dec/2015:12:04:02 -0800] "GET /10Mb.txt HTTP/1.0" 200 10486039 "-" "-" "curl/7.35.0"

If we repeat the curl request after the entire file is cached, the response is immediate because NGINX serves the requested byte range from the cache.

However, there are problems with this basic configuration (and the resulting default behavior). If we request the same byte range a second time, after it is cached but before the entire file is added to the cache, NGINX sends a new request for the entire file to the origin server and starts a new cache fill operation. We can demonstrate this behavior with the following command:

client$ while true ; do time curl -r 5000000-5000009 http://dev/10Mb.txt ; done

Every new request triggers a new cache fill operation against the origin server, and the cache does not "stabilize" until a cache fill operation completes with no other operations in progress.

Imagine a scenario where a user starts watching a video file immediately after it is published. If a cache fill operation takes 30 seconds (for example), but the delay between additional requests is less than this value, the cache may never fill and NGINX will continue to send more and more requests for the entire file to the origin server.

NGINX provides two cache configurations that can effectively solve this problem:

Cache Lock - With this configuration, during a cache fill operation triggered by the first byte-range request, NGINX forwards any subsequent byte-range requests directly to the origin server. After the cache fill operation is complete, NGINX serves all requests, both for byte ranges and for the entire file, from the cache.

Cache Slice - With this strategy, introduced in NGINX Plus R8 and NGINX Open Source 1.9.8, NGINX splits the file into smaller subranges that can be retrieved quickly, and requests each subrange from the origin server as needed.

Use cache locks for a single cache fill operation

The following configuration triggers a cache fill as soon as the first byte range request is received, and forwards all other requests to the origin server while the cache fill operation is in progress:

proxy_cache_path /tmp/mycache keys_zone=mycache:10m;

server {
    listen 80;

    proxy_cache mycache;
    proxy_cache_valid 200 600s;
    proxy_cache_lock on;

    # Immediately forward requests to the origin if we are filling the cache
    proxy_cache_lock_timeout 0s;

    # Set the 'age' to a value larger than the expected fill time
    proxy_cache_lock_age 200s;

    proxy_cache_use_stale updating;

    location / {
        proxy_pass http://origin:80;
    }
}

proxy_cache_lock on – Sets the cache lock. When NGINX receives the first byte-range request for a file, it requests the entire file from the origin server and initiates a cache fill operation. NGINX does not convert subsequent byte-range requests into requests for the entire file or initiate new cache fill operations. Instead, it queues the requests until the first cache fill operation completes or the lock times out.

proxy_cache_lock_timeout – Controls how long the cache is locked (the default is 5 seconds). When the timeout expires, NGINX forwards each queued request to the origin server unmodified (as a byte-range request with the Range header preserved, not as a request for the entire file) and does not cache the response returned by the origin server.

In a situation like our test with 10Mb.txt, the cache fill operation can take a significant amount of time, so we set the lock timeout to 0 (zero) seconds because there is no point in queueing requests. NGINX immediately forwards any byte-range requests for the file to the origin server until the cache fill operation is complete.

proxy_cache_lock_age – Sets a deadline for the cache fill operation. If the operation does not complete within the specified time, NGINX forwards one more request to the origin server. The value always needs to be longer than the expected cache fill time, so we increase it from the default of 5 seconds to 200 seconds.

proxy_cache_use_stale updating – Tells NGINX to use the currently cached version of a resource immediately if NGINX is in the process of updating it. This has no effect on the first request (which triggers the cache update), but it speeds up responses to subsequent client requests.

We repeat our test, requesting the middle byte range of 10Mb.txt. The file is not cached and, as in the previous test, time shows that NGINX takes a little over 5 seconds to deliver the requested byte range (recall that the network is limited to 1 MB/s of throughput):

client# time curl -r 5000000-5000009 http://cache/10Mb.txt
005000000

real 0m5.422s
user 0m0.007s
sys 0m0.003s

Because of the cache lock, subsequent requests for byte ranges are satisfied almost immediately while the cache is being filled. NGINX forwards these requests to the origin server without trying to satisfy them from the cache:

client# time curl -r 5000000-5000009 http://cache/10Mb.txt
005000000

real 0m0.042s
user 0m0.004s
sys 0m0.004s

In the following excerpt from the origin server access log, the entries with status code 206 confirm that the origin server is serving byte-range requests while the cache fill operation is still in progress. (We use the log_format directive to include the Range request header in log entries, to identify which requests were modified and which were not.)

The last line, with status code 200, corresponds to completion of the first byte-range request. NGINX modified it into a request for the entire file, which triggered the cache fill operation.

192.168.56.10 - - [08/Dec/2015:12:18:51 -0800] "GET /10Mb.txt HTTP/1.0" 206 343 "-" "bytes=5000000-5000009" "curl/7.35.0"
192.168.56.10 - - [08/Dec/2015:12:18:52 -0800] "GET /10Mb.txt HTTP/1.0" 206 343 "-" "bytes=5000000-5000009" "curl/7.35.0"
192.168.56.10 - - [08/Dec/2015:12:18:53 -0800] "GET /10Mb.txt HTTP/1.0" 206 343 "-" "bytes=5000000-5000009" "curl/7.35.0"
192.168.56.10 - - [08/Dec/2015:12:18:54 -0800] "GET /10Mb.txt HTTP/1.0" 206 343 "-" "bytes=5000000-5000009" "curl/7.35.0"
192.168.56.10 - - [08/Dec/2015:12:18:55 -0800] "GET /10Mb.txt HTTP/1.0" 206 343 "-" "bytes=5000000-5000009" "curl/7.35.0"
192.168.56.10 - - [08/Dec/2015:12:18:46 -0800] "GET /10Mb.txt HTTP/1.0" 200 10486039 "-" "-" "curl/7.35.0"

When we repeat the test after the entire file is cached, NGINX serves any further byte-range requests from the cache:

client# time curl -r 5000000-5000009 http://cache/10Mb.txt
005000000

real 0m0.012s
user 0m0.000s
sys 0m0.002s

Using cache locks optimizes cache fill operations at the cost of sending all subsequent user traffic to the origin server during cache fill.
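To see how much traffic bypassed the cache during a fill, the origin access log can be tallied by status code. This is a minimal sketch, assuming log lines in the same layout as the excerpt above, where the status code is the ninth whitespace-separated field; `count_by_status` is a hypothetical helper name.

```shell
# Count requests per HTTP status code in an access log read from stdin.
count_by_status() {
    awk '{ count[$9]++ } END { for (s in count) print s, count[s] }' | sort
}

count_by_status <<'EOF'
192.168.56.10 - - [08/Dec/2015:12:18:51 -0800] "GET /10Mb.txt HTTP/1.0" 206 343 "-" "bytes=5000000-5000009" "curl/7.35.0"
192.168.56.10 - - [08/Dec/2015:12:18:52 -0800] "GET /10Mb.txt HTTP/1.0" 206 343 "-" "bytes=5000000-5000009" "curl/7.35.0"
192.168.56.10 - - [08/Dec/2015:12:18:46 -0800] "GET /10Mb.txt HTTP/1.0" 200 10486039 "-" "-" "curl/7.35.0"
EOF
```

For the three sample lines, this prints `200 1` followed by `206 2`: one full-file fetch that fills the cache, and two byte-range requests forwarded to the origin while the lock was held.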

Fill the cache slice by slice

The Cache Slice module introduced in NGINX Plus R8 and NGINX Open Source 1.9.8 provides an alternative method of filling the cache, which is more efficient when bandwidth is severely constrained and cache filling operations take a long time.


Using the cache slice approach, NGINX divides the file into smaller segments and requests each segment as needed. These segments are accumulated in the cache, and requests for the resource are satisfied by delivering the appropriate portions of one or more segments to the client. Requests for large byte ranges (or indeed entire files) trigger subrequests for each required segment, which are cached as they arrive from the origin server. Once all segments are cached, NGINX assembles the response from them and sends it to the client.

NGINX Cache Slices Explained

In the configuration snippet below, the slice directive (introduced in NGINX Plus R8 and NGINX Open Source 1.9.8) tells NGINX to segment each file into 1-MB slices.

When using the slice directive, we must also add the $slice_range variable to the proxy_cache_key directive to distinguish the segments of the file, and we must replace the Range header in the request so that NGINX requests the appropriate byte range from the origin server. We also upgrade the request to HTTP/1.1 because HTTP/1.0 does not support byte-range requests.

proxy_cache_path /tmp/mycache keys_zone=mycache:10m;

server {
    listen 80;

    proxy_cache mycache;

    slice 1m;
    proxy_cache_key $host$uri$is_args$args$slice_range;
    proxy_set_header Range $slice_range;
    proxy_http_version 1.1;
    proxy_cache_valid 200 206 1h;

    location / {
        proxy_pass http://origin:80;
    }
}

As before, we request an intermediate byte range in 10Mb.txt:

client$ time curl -r 5000000-5000009 http://cache/10Mb.txt
005000000

real 0m0.977s
user 0m0.000s
sys 0m0.007s

NGINX satisfies the request by requesting a single 1-MB file segment (byte range 4194304–5242879) containing the requested byte range 5000000–5000009.

KEY: www.example.com/10Mb.txtbytes=4194304-5242879
HTTP/1.1 206 Partial Content
Date: Tue, 08 Dec 2015 19:30:33 GMT
Server: Apache/2.4.7 (Ubuntu)
Last-Modified: Tue, 14 Jul 2015 08:29:12 GMT
ETag: "a00000-51ad1a207accc"
Accept-Ranges: bytes
Content-Length: 1048576
Vary: Accept-Encoding
Content-Range: bytes 4194304-5242879/10485760

If a byte-range request spans multiple segments, NGINX requests all the required segments that are not yet cached, and then assembles the byte-range response from the cached segments.
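The mapping from a requested byte range to slice ranges is plain integer arithmetic: slices are aligned to multiples of the slice size, starting at byte 0. The sketch below reproduces the range seen in the cache key above; `slices_for_range` is a hypothetical helper for illustration, not something NGINX provides.

```shell
# Print the Range value of every SIZE-byte slice that overlaps
# bytes FIRST..LAST (inclusive). Arguments: FIRST LAST SIZE.
slices_for_range() {
    sfr_size=$3
    # Round FIRST down to the start of the slice that contains it.
    sfr_start=$(( $1 / sfr_size * sfr_size ))
    while [ "$sfr_start" -le "$2" ]; do
        echo "bytes=${sfr_start}-$(( sfr_start + sfr_size - 1 ))"
        sfr_start=$(( sfr_start + sfr_size ))
    done
}

slices_for_range 5000000 5000009 1048576   # bytes=4194304-5242879
slices_for_range 5242870 5242889 1048576   # two slices: the range crosses a boundary
```

The second call prints `bytes=4194304-5242879` and `bytes=5242880-6291455`, because the 20 requested bytes straddle the boundary at offset 5242880.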

The Cache Slice module was developed for delivering HTML5 video, which pseudo-streams content to the browser using byte-range requests. It's great for video assets where the initial cache fill operation can take several minutes because bandwidth is limited and the file doesn't change after publishing.

Choose the best slice size

Set the slice size to a value small enough that each segment can be transferred quickly (for example, within a second or two). This reduces the likelihood of multiple requests triggering the continuous cache fill behavior described above.

On the other hand, the slice size may be too small. If a request for an entire file fires thousands of small requests at the same time, the overhead can be high, resulting in excessive memory and file descriptor usage and more disk activity.
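The trade-off can be made concrete with a rough calculation. The sketch below compares three candidate slice sizes for the 10-MB test file; `slice_cost` is a hypothetical helper, and the rate of 1,000,000 bytes per second is an assumed round figure for the testbed's 1 MB/s limit.

```shell
# Arguments: FILE_SIZE SLICE_SIZE RATE (bytes, bytes, bytes per second).
# Prints how many slices a full-file request needs and how long one
# slice takes to fetch, in milliseconds (integer math, rounded down).
slice_cost() {
    sc_count=$(( ($1 + $2 - 1) / $2 ))   # round up: the last slice may be short
    sc_ms=$(( $2 * 1000 / $3 ))
    echo "slice=$2 count=$sc_count ms_per_slice=$sc_ms"
}

for size in 4096 1048576 10485760; do
    slice_cost 10485760 "$size" 1000000
done
```

With these inputs, a 4-KB slice needs 2,560 subrequests of about 4 ms each, a 1-MB slice needs 10 subrequests of about 1 second each, and a 10-MB slice is a single fetch of about 10 seconds, which is the whole-file behavior the slice approach is meant to avoid.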

Also, because the Cache Slice module splits resources into independent segments, a resource cannot be changed once it is cached. Each time the module receives a segment from the origin, it verifies the resource's ETag header, and if the ETag has changed, NGINX aborts the transaction because the underlying cached version is now corrupted. We recommend that you use cache slicing only for large files that won't change after publishing, such as video files.

Summary

If you're using byte ranges to deliver large resources, both the cache lock and cache slice techniques can minimize network traffic and provide your users with excellent content delivery performance.

If the cache fill operation can be performed quickly, and you can accept a spike of traffic to the origin server while the fill is in progress, use the cache lock technique.

If the cache fill operation is very slow and the content is stable (not changing), use the new cache slice technique.
