Any front-end developer with three years of experience has worked with nginx configuration to some extent.

The importance of nginx cannot be overstated.

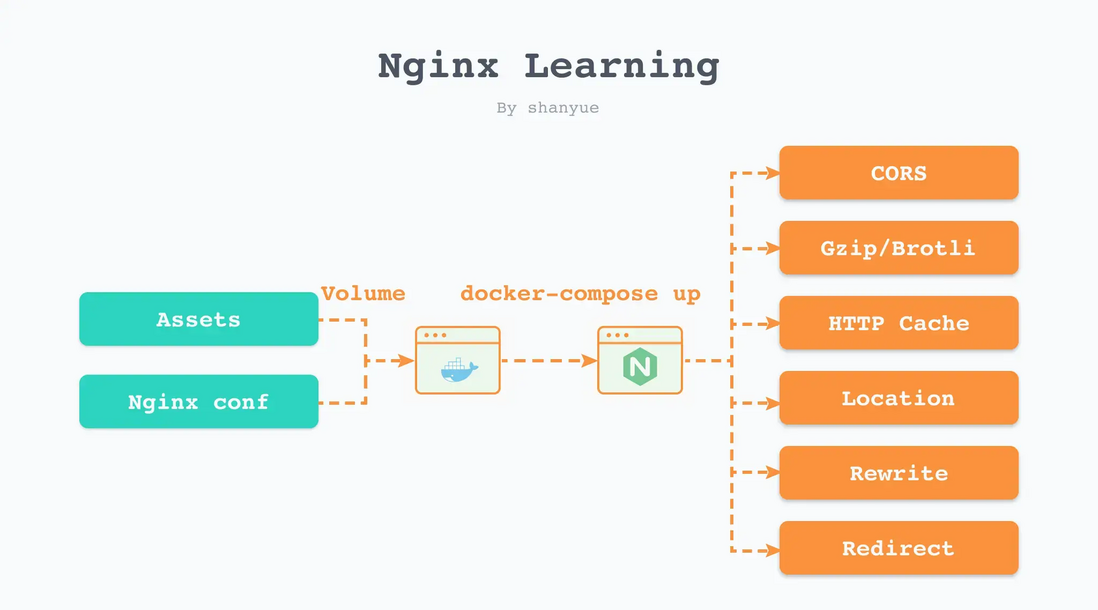

This article introduces common nginx configurations from a front-end perspective and uses docker to learn them, which ensures that every sample configuration actually runs.

I put all the docker/nginx configurations from this article in simple-deploy, which you can clone and run quickly with docker compose.

All the request examples are maintained in the Learn Nginx By Docker documentation, which you can open and debug quickly in Apifox.

Follow these steps to debug the nginx examples in Apifox:

- Download Apifox

- Clone the project in Apifox

- Clone the example repository from GitHub

- After cloning, enter the learn-nginx directory

- Run docker-compose up to start the containers

- Open the Apifox debugging interface to learn nginx

nginx configuration files

Let's use the nginx image to see which configuration files nginx has.

```shell
$ docker run -it --rm nginx:alpine sh
$ ls -lah /etc/nginx/
total 40K
drwxr-xr-x 3 root root 4.0K Nov 13 2021 .
drwxr-xr-x 1 root root 4.0K Jun 14 07:55 ..
drwxr-xr-x 2 root root 4.0K Nov 13 2021 conf.d
-rw-r--r-- 1 root root 1.1K Nov 2 2021 fastcgi.conf
-rw-r--r-- 1 root root 1007 Nov 2 2021 fastcgi_params
-rw-r--r-- 1 root root 5.2K Nov 2 2021 mime.types
lrwxrwxrwx 1 root root 22 Nov 13 2021 modules -> /usr/lib/nginx/modules
-rw-r--r-- 1 root root 648 Nov 2 2021 nginx.conf
-rw-r--r-- 1 root root 636 Nov 2 2021 scgi_params
-rw-r--r-- 1 root root 664 Nov 2 2021 uwsgi_params
```

In nginx, the following files matter most, and they are related to one another:

- /etc/nginx/nginx.conf

- /etc/nginx/conf.d/default.conf

/etc/nginx/nginx.conf

The main nginx configuration file. It includes every .conf file in the /etc/nginx/conf.d/ directory.

```nginx
user nginx;
worker_processes auto;

error_log /var/log/nginx/error.log notice;
pid /var/run/nginx.pid;

events {
    worker_connections 1024;
}

http {
    include /etc/nginx/mime.types;
    default_type application/octet-stream;

    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';

    access_log /var/log/nginx/access.log main;

    sendfile on;
    #tcp_nopush on;

    keepalive_timeout 65;

    #gzip on;

    include /etc/nginx/conf.d/*.conf;
}
```

/etc/nginx/conf.d/default.conf

```nginx
server {
    listen 80;
    server_name localhost;

    #access_log /var/log/nginx/host.access.log main;

    location / {
        root /usr/share/nginx/html;
        index index.html index.htm;
    }

    #error_page 404 /404.html;

    # redirect server error pages to the static page /50x.html
    #
    error_page 500 502 503 504 /50x.html;
    location = /50x.html {
        root /usr/share/nginx/html;
    }

    # proxy the PHP scripts to Apache listening on 127.0.0.1:80
    #
    #location ~ \.php$ {
    #    proxy_pass http://127.0.0.1;
    #}

    # pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000
    #
    #location ~ \.php$ {
    #    root html;
    #    fastcgi_pass 127.0.0.1:9000;
    #    fastcgi_index index.php;
    #    fastcgi_param SCRIPT_FILENAME /scripts$fastcgi_script_name;
    #    include fastcgi_params;
    #}

    # deny access to .htaccess files, if Apache's document root
    # concurs with nginx's one
    #
    #location ~ /\.ht {
    #    deny all;
    #}
}
```

/usr/share/nginx/html

The default static resource directory, which also holds the nginx welcome page:

```html
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
html { color-scheme: light dark; }
body { width: 35em; margin: 0 auto;
font-family: Tahoma, Verdana, Arial, sans-serif; }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>

<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>
</body>
</html>
```

Learn nginx configuration efficiently with docker

I recommend an efficient way to learn nginx: run the nginx image locally and start the container with your nginx configuration mounted into it.

With the following docker-compose file you can verify an nginx configuration in seconds, which makes this an excellent tool for learning nginx.

I put all the nginx configurations in simple-deploy, and each configuration corresponds to a service in docker-compose. For example, nginx, location and order1 below are all services.

```yaml
version: "3"
services:
  # Learn the most common nginx configuration
  nginx:
    image: nginx:alpine
    ports:
      - 8080:80
    volumes:
      - ./nginx.conf:/etc/nginx/conf.d/default.conf
      - .:/usr/share/nginx/html

  # Learn about location
  location: ...

  # Learn about the location matching order
  order1: ...
```

Each time you modify a configuration, restart the container. To study a specific topic, start the service with the corresponding name:

```shell
$ docker-compose up <service>

# Learn the most basic nginx configuration
$ docker-compose up nginx

# Learn the location configuration
$ docker-compose up location
```

Every nginx configuration in this article can be learned with docker in this way, and all the code and configuration files are provided.

root and index

- root: the root path of static resources. See https://nginx.org/en/docs/http/ngx_http_core_module.html#root

- index: when the request path ends with /, nginx automatically looks for the index file under that path, trying the listed files in order. See https://nginx.org/en/docs/http/ngx_http_index_module.html#index

root and index are the foundation of front-end deployment. The default.conf in the nginx image uses /usr/share/nginx/html as root, so when deploying a front end we usually mount the built static resource directory at this path.
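As a rough mental model, root is prepended to the request URI. For a URI ending in /, the index files are tried in order. The Python sketch below is a simplified model, not nginx's actual code. The resolve_static function is a hypothetical name used only for illustration. A set of paths stands in for the filesystem. The sketch ignores details such as the internal redirect that index performs and the redirect nginx issues when a directory is requested without a trailing slash.

```python
from __future__ import annotations


def resolve_static(uri: str, root: str, index: list[str], existing: set[str]) -> str | None:
    """Simplified model of how root and index turn a request URI into a file path.

    `existing` stands in for the filesystem (the set of files that exist).
    Returns the file that would be served, or None when nothing matches.
    """
    # root is simply prepended to the request URI
    path = root.rstrip("/") + uri
    if uri.endswith("/"):
        # index: try each index file, in the listed order, inside that directory
        for name in index:
            candidate = path + name
            if candidate in existing:
                return candidate
        return None  # nginx would answer 403 or 404 here
    return path if path in existing else None  # nginx would answer 404


if __name__ == "__main__":
    root = "/usr/share/nginx/html"
    index = ["index.html", "index.htm"]
    files = {root + "/index.html", root + "/about.html", root + "/docs/index.htm"}
    for uri in ["/", "/docs/", "/about.html", "/missing.js"]:
        print(uri, "->", resolve_static(uri, root, index, files))
```

Because index.htm is listed after index.html, a directory that only contains index.htm still resolves, just one step later.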

```nginx
server {
    listen 80;
    server_name localhost;

    root /usr/share/nginx/html;
    index index.html index.htm;
}
```

location

location is used to match routes. The configuration syntax is as follows.

```
location [ = | ~ | ~* | ^~ ] uri { ... }
```

The following modifiers can be placed before uri:

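- =: exact match, highest priority.

- ^~: prefix match, next in priority. If several prefixes match, the longest one wins.

- ~: regular-expression match, next in priority (~* is its case-insensitive variant and is not listed separately). If several regular expressions match, the first one in the configuration file wins.

- /: general (plain prefix) match, lowest priority. Prefix locations without a modifier, such as location /test1, also fall into this tier.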
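The tiers above are a simplification. The nginx documentation describes the selection order more precisely:

1. An exact match wins outright.
2. Otherwise nginx remembers the longest matching prefix. If that prefix carries ^~, regular expressions are skipped.
3. Otherwise regular expressions are tried in file order.
4. If no regular expression matches, nginx falls back to the remembered prefix.

The Python model below is a sketch of that order, not nginx's implementation. It ignores nested and named locations. Location and match_location are hypothetical names used only for illustration.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass
class Location:
    modifier: str  # "=", "^~", "~", "~*", or "" for a plain prefix location
    pattern: str   # the uri (or regex) written after the modifier
    name: str      # a label, e.g. the X-Config value the location sets


def match_location(uri: str, locations: list[Location]) -> str | None:
    """Simplified model of nginx's location selection (nested locations ignored)."""
    # 1. An exact match (=) ends the search immediately.
    for loc in locations:
        if loc.modifier == "=" and uri == loc.pattern:
            return loc.name

    # 2. Remember the longest matching prefix among plain and ^~ locations.
    best: Location | None = None
    for loc in locations:
        if loc.modifier in ("", "^~") and uri.startswith(loc.pattern):
            if best is None or len(loc.pattern) > len(best.pattern):
                best = loc

    # 3. If the longest prefix carries ^~, regular expressions are not checked.
    if best is not None and best.modifier == "^~":
        return best.name

    # 4. Regular expressions are checked in file order; the first match wins.
    for loc in locations:
        if loc.modifier in ("~", "~*"):
            flags = re.IGNORECASE if loc.modifier == "~*" else 0
            if re.search(loc.pattern, uri, flags):
                return loc.name

    # 5. Otherwise the remembered longest prefix is used.
    return best.name if best is not None else None


if __name__ == "__main__":
    # Mirrors the location2 configuration used in this article
    locations = [
        Location("", "/", "A"),
        Location("", "/test1", "B"),
        Location("=", "/test2", "C"),
        Location("~", ".*test3.*", "D"),
        Location("^~", "/test4", "E"),
    ]
    for uri in ["/test1/", "/test28", "/test2", "/test2/", "/hellotest3", "/test48"]:
        print(uri, "->", match_location(uri, locations))
```

A request such as /test4test3 shows why ^~ exists. The regex .*test3.* would match it, but the longest prefix /test4 carries ^~, so the regex is never consulted.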

To check which location matched, each location in the following example adds a custom X-Config response header. You can inspect it in the Network panel of the browser's developer tools.

```nginx
add_header X-Config B;
```

Note that every link in my configuration files can be clicked directly, so you don't need to look up the mapped port numbers in the compose file.

Verifying location modifiers

All four modifiers can be verified with my nginx configuration.

Because proxy_pass is used here, location2 and api must be started together. Inside the location2 service, the service name works directly as a hostname, so http://api:3000 reaches the api service.

The api service is a whoami service I wrote that prints the request path and other information. See shfshanyue/whoami for details.

```shell
$ docker-compose up location2 api
```

The following is the configuration file for verifying location; see shfshanyue/simple-deploy:learn-nginx.

```nginx
server {
    listen 80;
    server_name localhost;

    root /usr/share/nginx/html;
    index index.html index.htm;

    # General match: any path /xxx matches the rules here
    location / {
        add_header X-Config A;
        try_files $uri $uri.html $uri/index.html /index.html;
    }

    # http://localhost:8120/test1 ok
    # http://localhost:8120/test1/ ok
    # http://localhost:8120/test18 ok
    # http://localhost:8120/test28 not ok
    location /test1 {
        # Check the response header to see whether this location matched
        add_header X-Config B;
        proxy_pass http://api:3000;
    }

    # http://localhost:8120/test2 ok
    # http://localhost:8120/test2/ not ok
    # http://localhost:8120/test28 not ok
    location = /test2 {
        add_header X-Config C;
        proxy_pass http://api:3000;
    }

    # http://localhost:8120/test3 ok
    # http://localhost:8120/test3/ ok
    # http://localhost:8120/test38 ok
    # http://localhost:8120/hellotest3 ok
    location ~ .*test3.* {
        add_header X-Config D;
        proxy_pass http://api:3000;
    }

    # http://localhost:8120/test4 ok
    # http://localhost:8120/test4/ ok
    # http://localhost:8120/test48 ok
    # http://localhost:8120/test28 not ok
    location ^~ /test4 {
        # Check the response header to see whether this location matched
        add_header X-Config E;
        proxy_pass http://api:3000;
    }
}
```

Verifying location priority

In my repository, the nginx configurations for verifying priority are all named with the prefix order. There are four of them in total (see docker-compose for details).

Here I use only order1 as an example. Its configuration is as follows:

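```nginx
# With the configuration below, what is X-Config when you visit the following link?
#
# http://localhost:8210/shanyue -> B: when all candidates are prefix matches, the longest matching location wins
server {
    root /usr/share/nginx/html;

    # Mainly for the path /shanyue: it has no file extension, so its content-type
    # can't be determined and the browser would download it automatically.
    # Using text/plain here prevents the automatic download.
    default_type text/plain;

    location ^~ /shan {
        add_header X-Config A;
    }

    location ^~ /shanyue {
        add_header X-Config B;
    }
}
```

Start the service: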

```shell
$ docker-compose up order1
```

Then verify with curl. You can of course also check it in the browser's Network panel. Since we only need the response headers here, curl --head is enough, because it sends just a HEAD request.

```shell
# X-Config should be B
$ curl --head http://localhost:8210/shanyue
HTTP/1.1 200 OK
Server: nginx/1.21.4
Date: Fri, 03 Jun 2022 10:15:11 GMT
Content-Type: text/plain
Content-Length: 15
Last-Modified: Thu, 02 Jun 2022 12:44:23 GMT
Connection: keep-alive
ETag: "6298b0a7-f"
X-Config: B
Accept-Ranges: bytes
```

proxy_pass

proxy_pass (reverse proxying) is one of the most important nginx features, and it is commonly used to solve cross-origin problems.

How proxy_pass builds the upstream request path falls into one of two cases:

- If the proxy server address contains no URI, the request path is passed to the upstream server unchanged. I strongly recommend this form.

- If the proxy server address contains a URI (even just /), the part of the request path that matched the location is replaced by that URI. In other words, the rest of the path after the location prefix is appended to the proxy server address.
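The two cases can be checked with a small Python model. It is a sketch under simplifying assumptions:

- the location is a plain prefix location;
- proxy_pass contains no variables;
- query strings are ignored.

nginx treats regex locations and variables in proxy_pass differently. upstream_url is a hypothetical helper, not part of nginx.

```python
from __future__ import annotations

from urllib.parse import urlsplit


def upstream_url(request_path: str, location_prefix: str, proxy_pass: str) -> str:
    """Simplified model of the upstream URL built by a prefix location + proxy_pass."""
    if not request_path.startswith(location_prefix):
        raise ValueError("request path does not match the location prefix")
    parts = urlsplit(proxy_pass)
    origin = f"{parts.scheme}://{parts.netloc}"
    if parts.path == "":
        # No URI in proxy_pass: the original request path is passed through as-is
        return origin + request_path
    # URI present (even just "/"): the matched prefix is replaced by that URI,
    # i.e. the rest of the path is appended to it
    rest = request_path[len(location_prefix):]
    return origin + parts.path + rest


if __name__ == "__main__":
    cases = [
        ("/api1/hello", "/api1", "http://api:3000"),
        ("/api2/hello", "/api2/", "http://api:3000/"),
        ("/api3/hello", "/api3", "http://api:3000/hello"),
        ("/api4/hello", "/api4", "http://api:3000/"),
    ]
    for path, prefix, target in cases:
        print(path, "->", upstream_url(path, prefix, target))
```

The four cases correspond to the api1 to api4 locations in this article's proxy configuration. The api4 case shows where the double slash comes from: location /api4 has no trailing slash, while the proxy_pass URI is /.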

```nginx
# Without a URI
proxy_pass http://api:3000;

# With a URI
proxy_pass http://api:3000/;
proxy_pass http://api:3000/api;
proxy_pass http://api:3000/api/;
```

Another example:

- Visit http://localhost:8300/api3/hello. It matches location /api3 below.

- This proxy_pass has a URI attached (/hello).

- The path left over after the matched prefix is /hello. Appending it to the proxy_pass address gives http://api:3000/hello/hello.

```nginx
location /api3 {
    add_header X-Config C;

    # http://localhost:8300/api3/hello -> proxy:3000/hello/hello
    proxy_pass http://api:3000/hello;
}
```

This is a bit of a mouthful, so my test environment includes several examples. Start them with the following command and test as often as you like:

```shell
$ docker-compose up proxy api
```

Because the service behind proxy_pass is whoami, it prints the actual request path it receives, so you can check each case against it:

```nginx
server {
    listen 80;
    server_name localhost;

    root /usr/share/nginx/html;
    index index.html index.htm;

    # Recommended: proxy_pass without a URI, so the path is passed through unchanged
    # http://localhost:8300/api1/hello -> proxy:3000/api1/hello
    location /api1 {
        # Check the response header to see whether this location matched
        add_header X-Config A;
        proxy_pass http://api:3000;
    }

    # http://localhost:8300/api2/hello -> proxy:3000/hello
    location /api2/ {
        add_header X-Config B;
        proxy_pass http://api:3000/;
    }

    # http://localhost:8300/api3/hello -> proxy:3000/hello/hello
    location /api3 {
        add_header X-Config C;
        proxy_pass http://api:3000/hello;
    }

    # http://localhost:8300/api4/hello -> proxy:3000//hello
    location /api4 {
        add_header X-Config D;
        proxy_pass http://api:3000/;
    }
}
```

add_header

add_header controls response headers.

Because many browser features are controlled through response headers, this directive can do a lot, for example:

- Cache

- CORS

- HSTS

- CSP

- ...

Cache

```nginx
location /static {
    add_header Cache-Control max-age=31536000;
}
```

CORS

```nginx
location /api {
    add_header Access-Control-Allow-Origin *;
}
```

HSTS

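```nginx
server {
    # listen is only valid in a server block, not inside location
    listen 443 ssl;
    # a TLS server also needs ssl_certificate and ssl_certificate_key (omitted here)

    location / {
        add_header Strict-Transport-Security max-age=7200;
    }
}
```

CSP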

```nginx
location / {
    add_header Content-Security-Policy "default-src 'self';";
}
```

Exercises

- Beginner: learn nginx configuration with docker, and give index.html a 60-second strong cache.

- Intermediate: how can you use the nginx and whoami images to simulate 502/504?

- Advanced: learn nginx configuration with docker, and configure gzip/brotli.

- Interview: what is the difference between brotli and gzip?
