Introduction: The OPPO Knowledge Graph is a large-scale, general-purpose knowledge graph developed in-house, led by the Xiaobu Assistant team in OPPO's digital intelligence engineering system and built jointly by multiple teams. It has reached hundreds of millions of entities and billions of triples, and is mainly used in scenarios such as Xiaobu Assistant knowledge Q&A and e-commerce search.

This article shares the algorithm-related technical challenges encountered while building the OPPO Knowledge Graph and the corresponding solutions, covering entity classification, entity alignment, information extraction, entity linking, and query parsing for graph question answering.

The full text revolves around the following four points:

- Background

- OPPO Knowledge Graph

- Application of Knowledge Graph in Xiaobu Assistant

- Summary and Outlook

Background

First, I will share the background of Xiaobu Assistant and the knowledge graph.

Background - Xiaobu Assistant

Xiaobu Assistant is OPPO's fun, caring, and ubiquitous AI assistant. It is installed on OPPO, OnePlus, and Realme mobile phones and on IoT smart hardware such as smart watches. It provides users with system applications, life services, audio-visual entertainment, information query, intelligent chat, and other services, and in turn unlocks potential user value, marketing value, and technical value.

Background - OPPO Knowledge Graph

OPPO began building its own knowledge graph at the end of 2020. After about a year, it had built a high-quality general knowledge graph with hundreds of millions of entities and billions of relationships. The OPPO Knowledge Graph currently supports millions of online Q&A requests from Xiaobu every day. OPPO is also gradually expanding the general knowledge graph into vertical domains such as a commodity graph, a health graph, and a risk control graph.

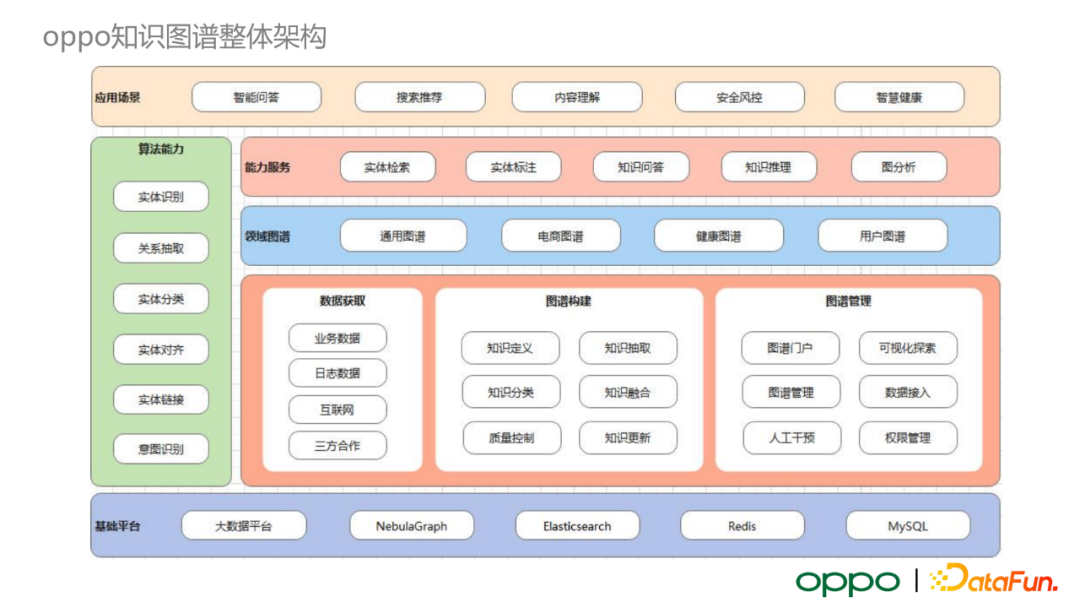

OPPO Knowledge Graph

Next, I will introduce the overall architecture of the OPPO Knowledge Graph. As shown in the picture above, it consists of three layers. The bottom layer is a general data processing platform plus the graph database framework; we chose Nebula Graph to store the graph data. The middle layer contains the data acquisition, graph construction, and graph management modules. The top layer covers the application scenarios of the OPPO Knowledge Graph, including intelligent question answering, search and recommendation, content understanding, security risk control, and smart health.

The three core algorithms used in knowledge graph construction are described below: entity classification, entity alignment, and information extraction.

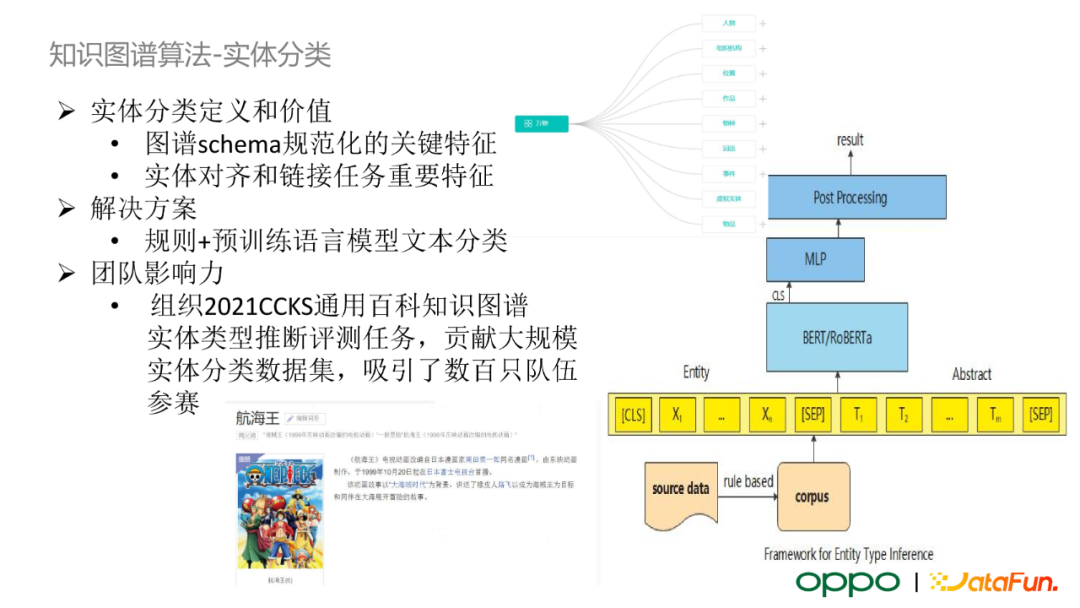

Knowledge Graph Algorithm - Entity Classification

Entity classification assigns entities to categories in the graph's predefined schema, which lets us associate attributes and relationships with entities. It also provides important features for downstream entity alignment, entity linking, and online intelligent question answering services. At present, we use a pipeline of rules plus text classification with a pre-trained language model. In the first stage, we apply predefined rules to entity descriptions (from sources such as Wikipedia) to generate a large amount of pseudo-labeled data. Our annotation colleagues then verify these data to produce a labeled training set, which we use with a pre-trained language model to train a multi-label text classification model. It is worth mentioning that we released a large-scale entity classification dataset to the industry and organized the 2021 CCKS General Encyclopedia Knowledge Graph Competition, which attracted hundreds of teams.
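The first, rule-based stage can be pictured with a minimal sketch. The rule table, type names, and `pseudo_label` function below are assumptions made up for illustration; they are not OPPO's actual rules or schema.

```python
import re

# Hypothetical keyword rules (not OPPO's actual rules): schema type -> regex patterns.
RULES = {
    "Person": [r"\bpoet\b", r"\bactor\b", r"\bsinger\b"],
    "Song": [r"\bsong\b"],
    "Country": [r"\bcountry\b"],
}


def pseudo_label(description):
    """Return every schema type whose rules fire on the description (multi-label)."""
    text = description.lower()
    return sorted(
        label
        for label, patterns in RULES.items()
        if any(re.search(pattern, text) for pattern in patterns)
    )


print(pseudo_label("Li Bai was a poet of the Tang Dynasty."))
print(pseudo_label("Li Bai is a song by the singer Li Ronghao."))
```

The second description picks up a spurious "Person" label because it mentions a singer, which is the kind of noise the manual verification step is meant to catch before the classifier is trained.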

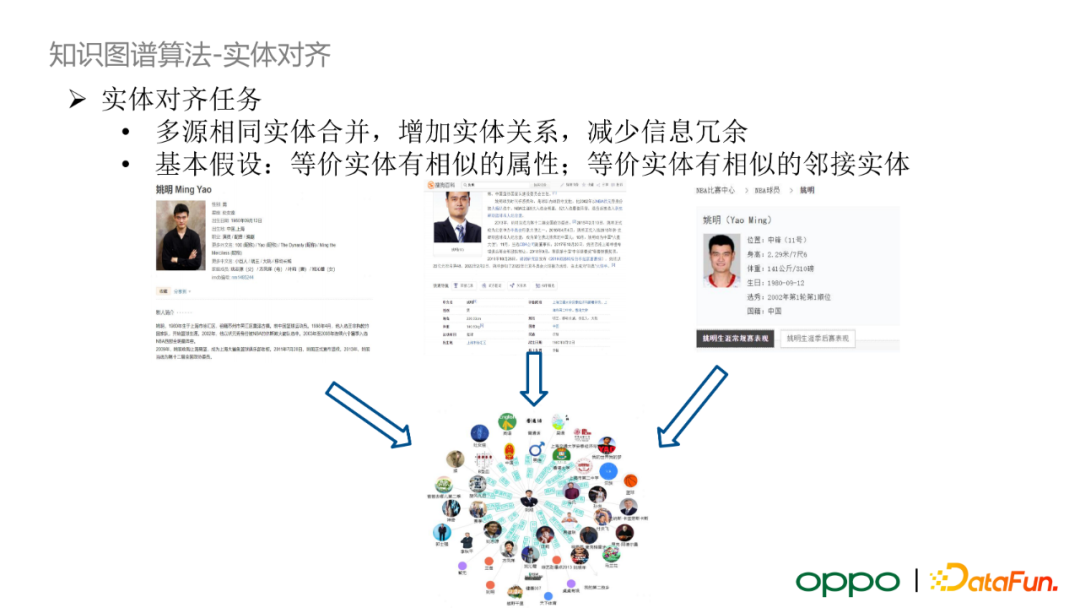

Knowledge Graph Algorithm - Entity Alignment

Entity alignment is a key step in knowledge graph construction. In open data on the Internet, the same entity appears with similar or identical information in multiple sources. If all of this redundant information were loaded into the knowledge graph, it would introduce ambiguity when the graph is used downstream for information retrieval. We therefore merge the information about the same entity from multiple sources to reduce redundancy.

Knowledge Graph Algorithm - Entity Alignment Algorithm

Specifically, because the knowledge graph contains hundreds of millions of entities, efficiency matters, so we propose a two-stage scheme: Dedupe plus BERT semantic classification. In the first stage, we group entities that share a name or alias in parallel, feed each group into the Dedupe data deduplication tool, and generate the first-stage alignment results. We require high accuracy from this stage. In the second stage, we train an entity relatedness matching model whose input is a pair of candidate entities; it adjusts and complements the first-stage alignment results.
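The grouping step of the first stage can be sketched as follows. This is only an illustration of blocking by shared name or alias; the toy entities and the `candidate_pairs` helper are assumptions, and the sketch does not call the Dedupe tool itself.

```python
from collections import defaultdict
from itertools import combinations

# Toy entities, invented for illustration.
entities = [
    {"id": "e1", "name": "Andy Lau", "aliases": ["Lau Tak-wah"]},
    {"id": "e2", "name": "Lau Tak-wah", "aliases": []},
    {"id": "e3", "name": "Li Bai", "aliases": ["Li Taibai"]},
    {"id": "e4", "name": "Li Bai", "aliases": []},
    {"id": "e5", "name": "New Delhi", "aliases": []},
]


def candidate_pairs(entities):
    """Group entities by normalized name/alias and emit pairs only within a group."""
    blocks = defaultdict(set)
    for entity in entities:
        for surface in [entity["name"], *entity["aliases"]]:
            blocks[surface.strip().lower()].add(entity["id"])
    pairs = set()
    for ids in blocks.values():
        pairs.update(combinations(sorted(ids), 2))
    return sorted(pairs)


print(candidate_pairs(entities))
```

Only two pairs survive instead of the ten that an all-pairs comparison of five entities would need. Sharing a name does not make two entities identical (the two "Li Bai" entries may be a poet and a game character), so the pairs still have to be judged by a matcher.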

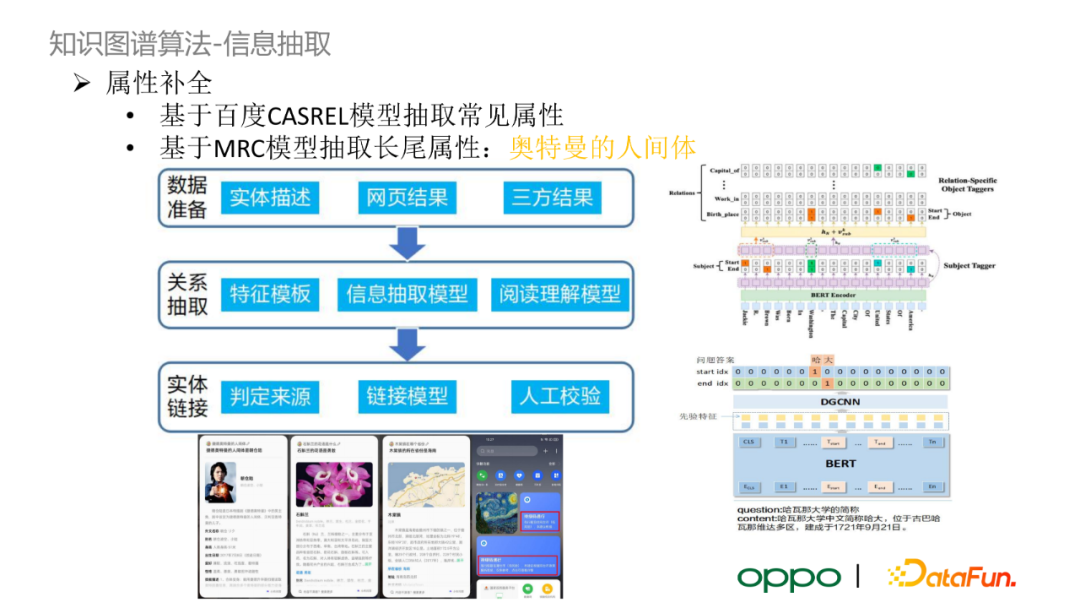

Knowledge Graph Algorithm - Information Extraction

After entity classification and alignment, some key attributes of some entities are still missing from the graph because the data sources lack the information. We divide the missing attributes into two categories and use information extraction to complete them.

The first category is common entities and attributes, such as the capital of a country or the age and gender of a person. We train a relation extraction model using the CASREL model and open-source datasets commonly used in the industry. The model can be viewed as a multi-label pointer network in which each label corresponds to a relation type. CASREL first extracts the subject entity in the sentence, then feeds the subject's embedding into the pointer network, predicts the start and end positions of the object in the sentence under each relation, and finally uses a preset threshold to decide whether the SPO triple is credible.

The second category is long-tail attributes, which have few annotations in open-source datasets. We use a machine reading comprehension (MRC) model to extract them. For example, to extract the "human form" attribute of a certain Ultraman, we use the question "who is the human form of this Ultraman" as a query, retrieve text results, and use the reading comprehension model to determine whether the text contains the object of the "human form" attribute.
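The thresholded pointer decoding can be illustrated with a small sketch. The probabilities below are made-up stand-ins for model outputs, and pairing each start with the nearest following end is one common decoding heuristic, assumed here rather than taken from the talk.

```python
def decode_objects(tokens, start_probs, end_probs, threshold=0.5):
    """For one relation, pair each confident start with the nearest confident end."""
    spans = []
    for i, p_start in enumerate(start_probs):
        if p_start < threshold:
            continue
        for j in range(i, len(tokens)):
            if end_probs[j] >= threshold:
                spans.append(" ".join(tokens[i : j + 1]))
                break
    return spans


tokens = ["New", "Delhi", "is", "the", "capital", "of", "India"]
subject = "India"  # assumed to come from the subject-tagging step

# Hypothetical pointer outputs, one start/end distribution per relation type.
relation_probs = {
    "capital": {
        "start": [0.9, 0.1, 0.0, 0.0, 0.2, 0.0, 0.1],
        "end": [0.1, 0.8, 0.0, 0.0, 0.1, 0.0, 0.1],
    },
    "population": {"start": [0.1] * 7, "end": [0.1] * 7},
}

triples = [
    (subject, relation, obj)
    for relation, probs in relation_probs.items()
    for obj in decode_objects(tokens, probs["start"], probs["end"])
]
print(triples)
```

Relations whose pointers never cross the threshold, such as "population" here, simply yield no triple for this subject.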

To sum up, while building the knowledge graph we applied entity classification, entity alignment, and information extraction to improve the quality and richness of the graph. Going forward, we hope to use transfer learning to extend entity classification, within the existing framework, to vertical scenarios such as commodity classification and game classification. The current entity alignment approach is still relatively basic, and we hope to combine multi-modality, node representation learning, and other strategies into a multi-strategy alignment scheme. For information extraction, we hope to use large-scale pre-trained language models to extract entity relationships from few labeled samples, or even none. We are also considering applying the entity extraction algorithm in the business scenarios of Xiaobu Assistant.

Application of Knowledge Graph in Xiaobu Assistant

The third part focuses on the application of knowledge graph in Xiaobu Assistant business scenarios.

The application of knowledge graph

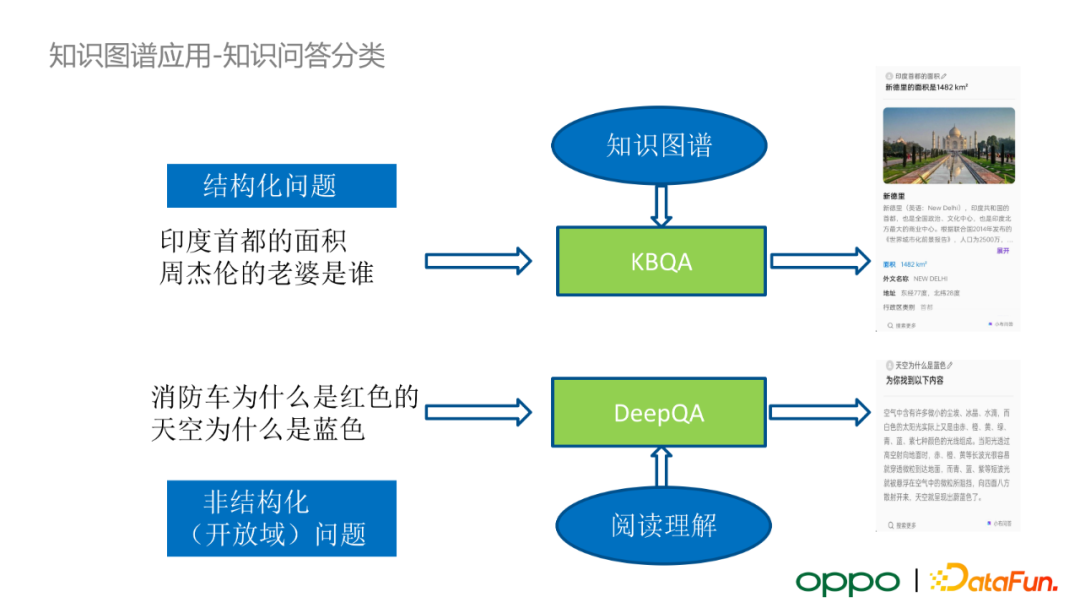

Xiaobu Assistant's dialogue can be divided into three categories by domain: small talk, task dialogue, and knowledge question answering. Small talk uses retrieval-based and generative algorithms. Task dialogue uses frame semantic algorithms to analyze the structure of the query. Knowledge question answering is further divided into two parts: structured question answering with KBQA based on the knowledge graph, and unstructured question answering based on reading comprehension and vector retrieval.

Knowledge Graph Application - Knowledge Question Answer Classification

First, let us look at knowledge question answering. For structured questions, we use KBQA; for unstructured questions, we use a DeepQA plus reading comprehension framework.

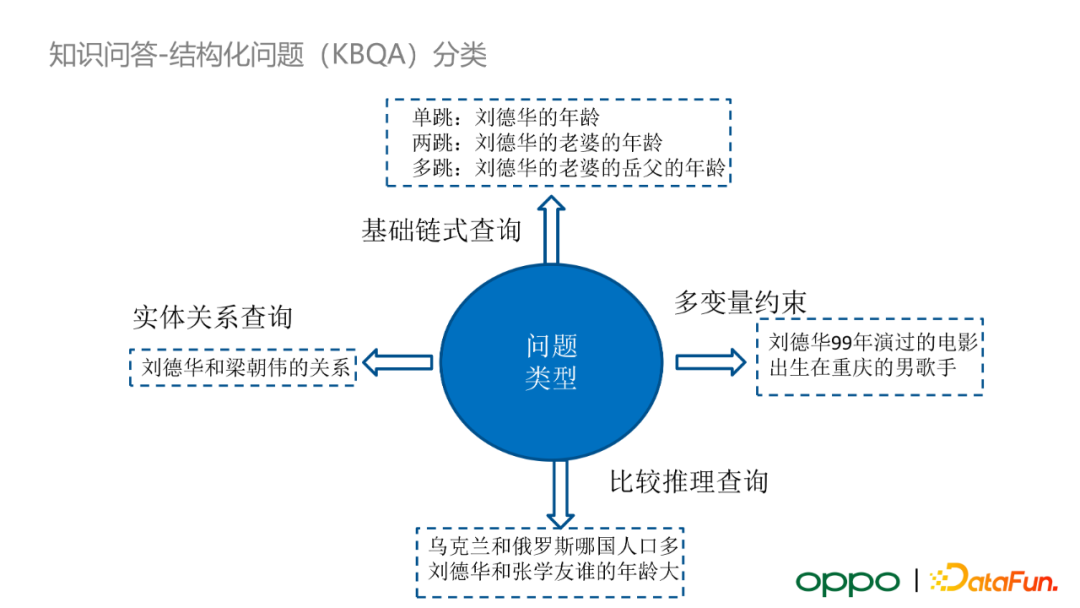

Knowledge Graph Application - Structured Classification

For structured questions, there are four types of queries in Xiaobu Assistant:

- Basic chain queries, such as single-hop, two-hop, or multi-hop questions

- Multi-constraint queries

- Entity relationship queries

- Comparative reasoning queries

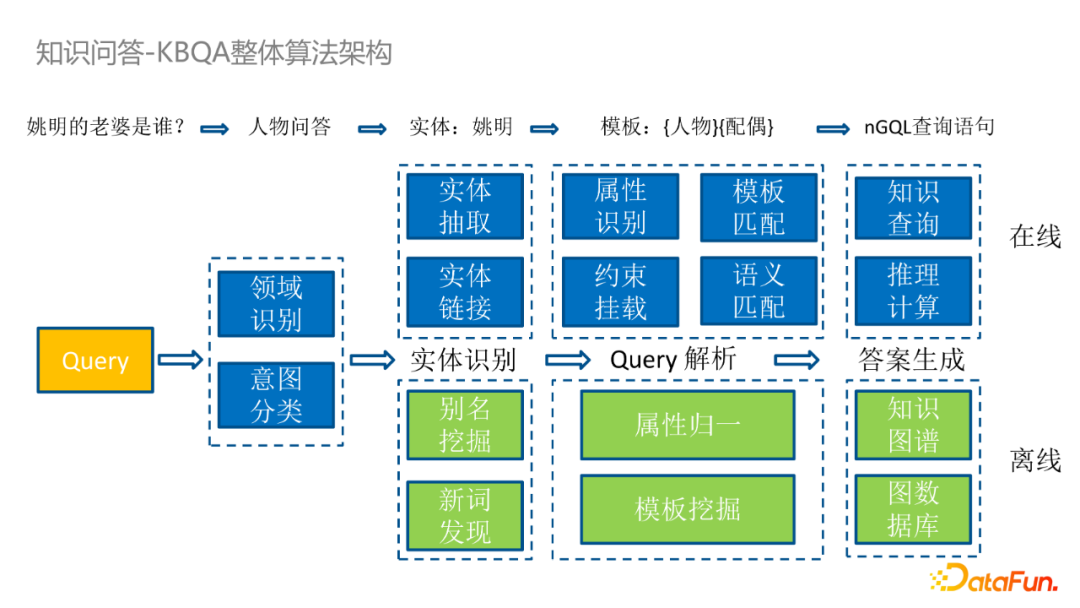

Knowledge Graph Application - KBQA Overall Algorithm Architecture

We designed a KBQA-based algorithm framework for structured questions. After receiving a query from an online user, we first perform domain recognition and intent classification. If the query can be answered with KBQA, we then perform entity recognition, query parsing, and answer generation. Each of these three main steps has an online part and an offline part. Offline, KBQA performs alias mining, new word discovery, attribute normalization, and template mining, and finally updates the knowledge graph and the graph database. Online, KBQA performs entity extraction, entity linking, attribute recognition, constraint mounting, template matching, and semantic matching for long-tail templates, and finally queries the graph database or runs inference calculations on the query results.

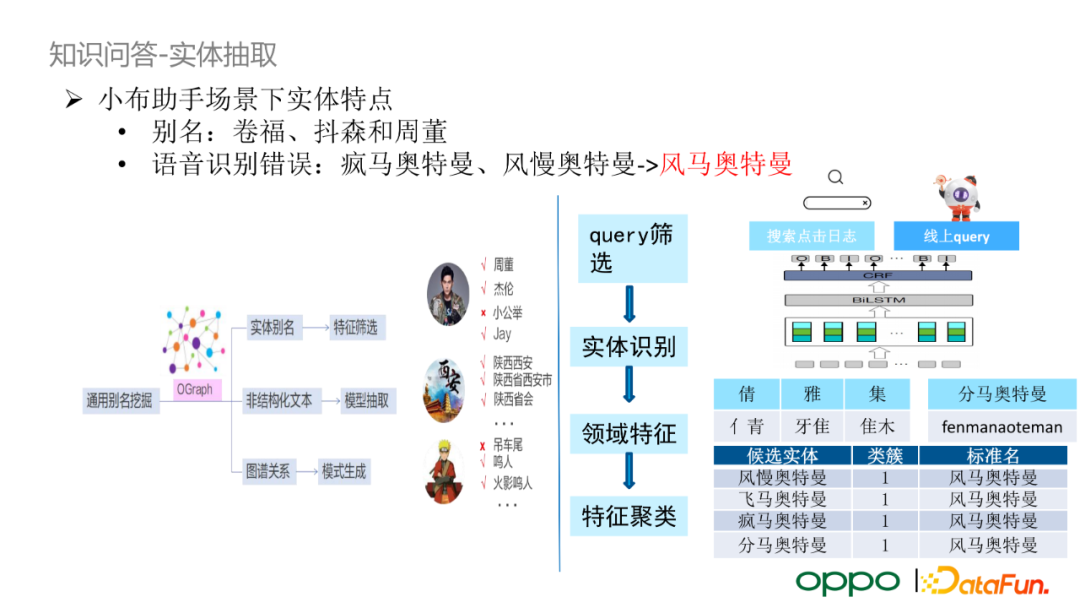

Knowledge Q&A - Entity Extraction

Xiaobu Assistant mainly receives voice input, so entity mentions in its queries often involve aliases and speech recognition errors. First, users speaking to the assistant often use an alias instead of an entity's full name. Second, the speech recognition error rate is relatively high, so the input differs considerably from queries typed on a web page. For the alias problem, the most basic solution is to build a mapping vocabulary from the entity aliases in the knowledge graph; in addition, for compound entities, we use the hypernyms in the graph to mine compound entity words. For speech recognition errors, we use a large volume of internal search click logs and match each query against the standard names contained in the titles of the clicked pages. Matching uses two types of input features: radical features (for example, "Qianya set" versus "Qingya set") and pinyin features (for example, "sub-Ma Ultraman" versus "Fengma Ultraman"). We cluster the features of the candidate standard names together with the features of the query and select the standard name at the closest distance. We also perform additional manual verification before generating the entity mapping vocabulary.
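As a rough illustration of matching a misrecognized query to a standard name by pinyin similarity, the sketch below uses plain string similarity from Python's `difflib` as a stand-in for the feature clustering described above. The pinyin strings, the threshold, and the function name are assumptions for illustration only.

```python
from difflib import SequenceMatcher

# Hypothetical pinyin renderings of standard names taken from clicked page titles.
STANDARD_NAME_PINYIN = ["liu de hua", "liu de kai", "zhou jie lun"]


def match_standard_name(query_pinyin, threshold=0.8):
    """Return the most similar standard name, or None if nothing is close enough."""
    best_name, best_score = None, 0.0
    for name in STANDARD_NAME_PINYIN:
        score = SequenceMatcher(None, query_pinyin, name).ratio()
        if score > best_score:
            best_name, best_score = name, score
    return best_name if best_score >= threshold else None


# "niu de hua" imitates an l/n confusion in speech recognition.
print(match_standard_name("niu de hua"))
print(match_standard_name("xyz"))
```

A query that is not close to any standard name returns `None`, which leaves room for treating it as a possible new word rather than a recognition error.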

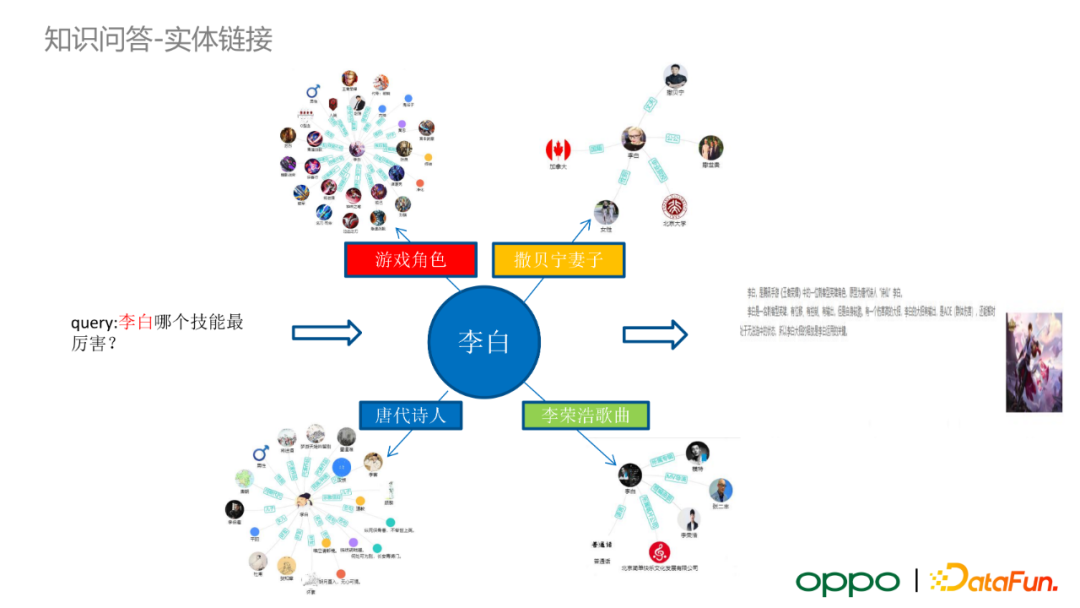

Knowledge Q&A - Entity Linking

After performing entity recognition on the user query, we need to perform entity linking. Take the query "Which skill of Li Bai is the most powerful?" as an example. The mention "Li Bai" corresponds to many different types of entities in the knowledge graph, such as a game character, a Tang Dynasty poet, a Li Ronghao song, and Sa Beining's wife. We need to use the semantics of the query to select the intended entity.

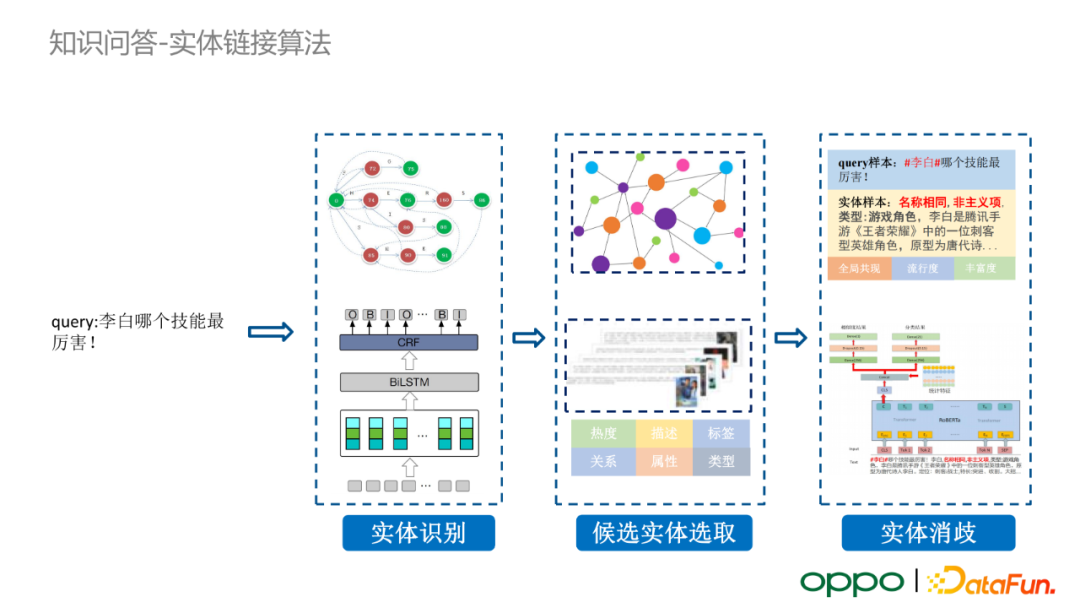

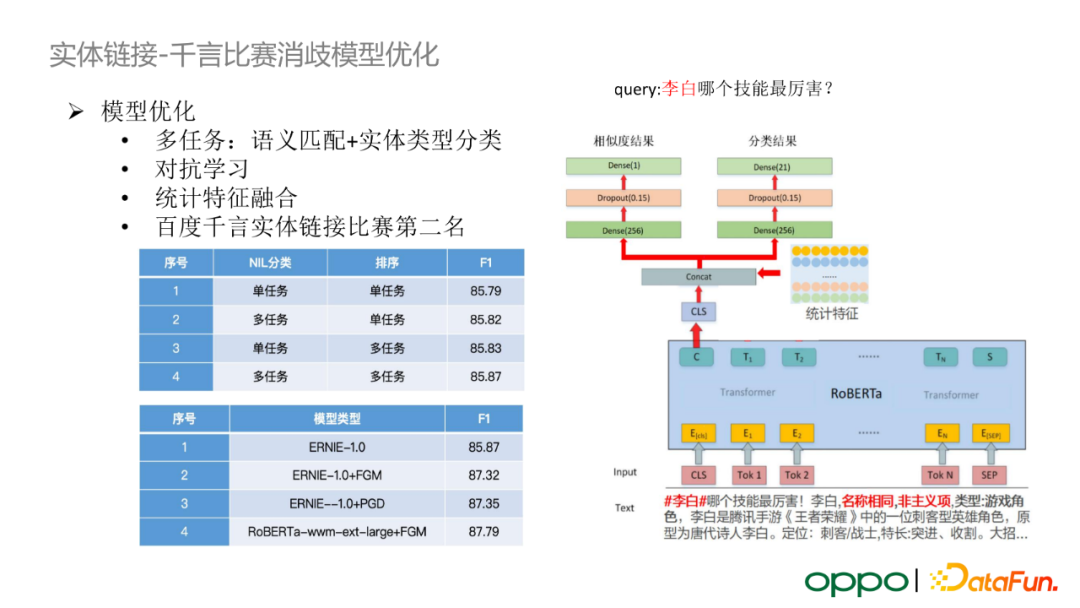

Entity linking goes through three steps. First, we use BiLSTM+CRF for entity recognition. Then, we recall candidate entities from the knowledge graph. Finally, an entity disambiguation model scores how well the query matches each candidate entity, and we select the best match. If the matching scores of all candidate entities are below a preset threshold, we output a special empty category.

The scheme shown in the picture above was proposed by our team for the Baidu Qianyan entity linking competition. It adopts a multi-task training framework (semantic matching plus entity type classification), introduces an adversarial learning strategy, incorporates statistical features (such as entity popularity and entity richness), and adds multi-model ensembling. We finished second in the competition. Specifically, we model query-entity similarity matching as a binary classification task and entity type classification as a multi-class classification task. Comparative experiments show that multi-task learning and adversarial learning both improve the entity disambiguation model to some extent. Note that for online serving we select a relatively small model.
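The recall, scoring, and threshold steps can be sketched as below. The alias table, entity identifiers, and `toy_scorer` are hypothetical stand-ins; in particular, the scorer only imitates the output of a disambiguation model.

```python
# Hypothetical alias table used for candidate recall.
ALIAS_TABLE = {"li bai": ["li_bai_game_hero", "li_bai_poet", "li_bai_song"]}


def toy_scorer(query, entity_id):
    """Stand-in for the disambiguation model: a keyword hint decides the score."""
    hints = {"li_bai_game_hero": "skill", "li_bai_poet": "poem", "li_bai_song": "lyrics"}
    return 0.9 if hints[entity_id] in query.lower() else 0.1


def link_entity(mention, query, scorer, threshold=0.5):
    """Recall candidates by alias, score them, and return None (empty) below threshold."""
    candidates = ALIAS_TABLE.get(mention.lower(), [])
    if not candidates:
        return None
    best = max(candidates, key=lambda entity_id: scorer(query, entity_id))
    return best if scorer(query, best) >= threshold else None


print(link_entity("Li Bai", "Which skill of Li Bai is the most powerful?", toy_scorer))
print(link_entity("Li Bai", "How tall is Li Bai?", toy_scorer))
```

The second call returns `None`, which corresponds to the special empty category: no candidate is confident enough, so the pipeline does not force a link.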

Knowledge Q&A - Query parsing algorithm

After entity linking, we need to extract the attributes of the query based on templates. There are two main families of solutions in the industry: methods based on semantic parsing and methods based on information retrieval. OPPO mainly uses semantic parsing, and falls back to semantic matching for long-tail attributes that fail to parse.

In the semantic parsing scheme, we first need to mine common templates. We use distantly supervised template mining: we take massive question-and-answer data from the Internet, match this corpus against our knowledge graph to find which entity attributes the answers correspond to, and thereby obtain the common query templates in the corpus. For example, for the pair "q: How old is Andy Lau ans: 59 years old", attribute retrieval in the graph shows that the query is asking for a person's age attribute. In the same way, we can find queries that ask for a person's height, age, or birthplace, and generate a series of query templates accordingly. Based on the mined templates, we train a semantic matching classification model whose input is the original query and a candidate query attribute. During training we mask out the entities, so that the model learns the correlation between the query semantics and the entity attributes independently of the entity itself. After the model is trained, we take Xiaobu's online query logs, perform entity extraction on them, feed each query together with all candidate attributes of the query entity in the graph into the model for semantic matching prediction, and obtain high-confidence candidate templates. Annotators check the output templates, and the final templates are added to the query parsing module.

Next, we use a concrete example to explain how templates are used to parse online KBQA questions. Suppose the user enters the query "how big is the capital of India"; the user's real intention is to ask for the area of New Delhi. First, we perform entity recognition on the query and map "India" to a "country" entity in the graph. Using templates, we map "capital" to "the capital of the country" or "the capital of the dynasty", and similarly normalize "how big" to attributes such as "person's age", "area size", "company size", and "planet size". At this point the attributes cannot be fully determined, so we enumerate the combinations of all candidate attributes, apply pruning, select the most likely template, and generate an intermediate expression. In this example, the best template is "area of the country's capital". This query is actually a two-hop question, so we use a single template to abstract part of it into a sub-query, such as "capital of a country", and concatenate the sub-query with the remaining query template to generate a compound query. When executing the query against the knowledge graph, we first execute the sub-query, replace it with the entity it returns, and then continue searching the graph for the final result.
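A minimal sketch of the distant-supervision step: if the answer of a Q&A pair equals an attribute value of an entity mentioned in the question, the question with the entity masked becomes a template for that attribute. The tiny graph, the `[ENT]` mask token, and the exact-match rule are simplifying assumptions, and the attribute values are toy data.

```python
# Toy knowledge graph: entity -> attribute -> value (illustrative values only).
KG = {"Andy Lau": {"age": "59 years old", "birthplace": "Hong Kong"}}


def mine_template(question, answer):
    """Return (masked question, attribute) when the answer matches a graph attribute."""
    for entity, attributes in KG.items():
        if entity not in question:
            continue
        for attribute, value in attributes.items():
            if value == answer:
                return question.replace(entity, "[ENT]"), attribute
    return None


print(mine_template("How old is Andy Lau", "59 years old"))
print(mine_template("How old is Andy Lau", "very old"))
```

Pairs whose answers match nothing in the graph yield no template, so noisy Q&A data is filtered out by the graph itself before any manual review.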
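The sub-query substitution for the two-hop example can be sketched with a nested query structure. The dictionary-based graph, the query format, and the placeholder area value are assumptions for illustration; a real deployment would issue graph database queries instead.

```python
# Toy graph keyed by (entity, attribute); the area value is a placeholder, not real data.
GRAPH = {
    ("India", "capital"): "New Delhi",
    ("New Delhi", "area"): "<area of New Delhi>",
}


def execute(query):
    """Resolve the inner sub-query first, then look up the outer attribute."""
    target = query["of"]
    if isinstance(target, dict):
        target = execute(target)  # replace the sub-query with the entity it returns
    return GRAPH.get((target, query["attr"]))


# "how big is the capital of India" -> area(capital(India))
compound_query = {"attr": "area", "of": {"attr": "capital", "of": "India"}}
print(execute(compound_query))
```

Because the function is recursive, the same structure would handle chains longer than two hops, and a missing intermediate entity simply propagates as `None`.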

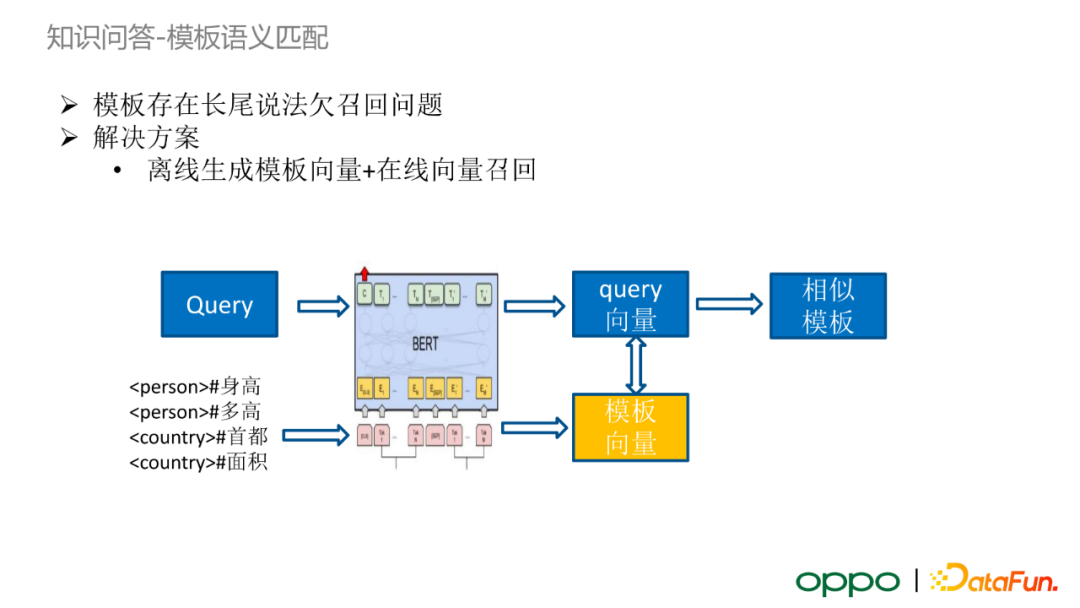

Knowledge Q&A - Template Semantic Matching

Although we mine a large number of normalized templates offline, this method still does not work well in some edge cases. Because user input is unpredictable, templates have insufficient recall on long-tail queries. For reasons of online efficiency, it is also impractical to score the semantic match between a query and every template.

To address these problems, we propose a scheme similar to two-tower matching: each template is passed through the BERT model to produce a template vector, and the vectors are stored in a template vector index. When query parsing cannot find a suitable template, the input query is passed through the BERT model to obtain a query vector, similar template vectors are recalled from the index, and a manually set threshold decides whether to accept the candidate template. In actual business traffic, only a relatively small share of responses come from this method.
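The recall-then-threshold logic can be sketched with toy three-dimensional vectors standing in for BERT embeddings. The vectors, template strings, and threshold are assumptions for illustration; a production system would use a real encoder and an approximate nearest-neighbor index.

```python
import math

# Toy template index: template -> precomputed vector (stand-ins for BERT embeddings).
TEMPLATE_INDEX = {
    "how old is [ENT]": [0.9, 0.1, 0.0],
    "how tall is [ENT]": [0.1, 0.9, 0.0],
    "where was [ENT] born": [0.0, 0.1, 0.9],
}


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def recall_template(query_vector, threshold=0.85):
    """Return the nearest template if it clears the threshold, else None."""
    best = max(TEMPLATE_INDEX, key=lambda t: cosine(query_vector, TEMPLATE_INDEX[t]))
    return best if cosine(query_vector, TEMPLATE_INDEX[best]) >= threshold else None


print(recall_template([0.8, 0.2, 0.1]))  # close to the "how old" template
print(recall_template([0.5, 0.5, 0.5]))  # equally far from everything
```

Template vectors are computed once offline, so the online cost is a single query encoding plus an index lookup, which is the efficiency argument for the two-tower layout.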

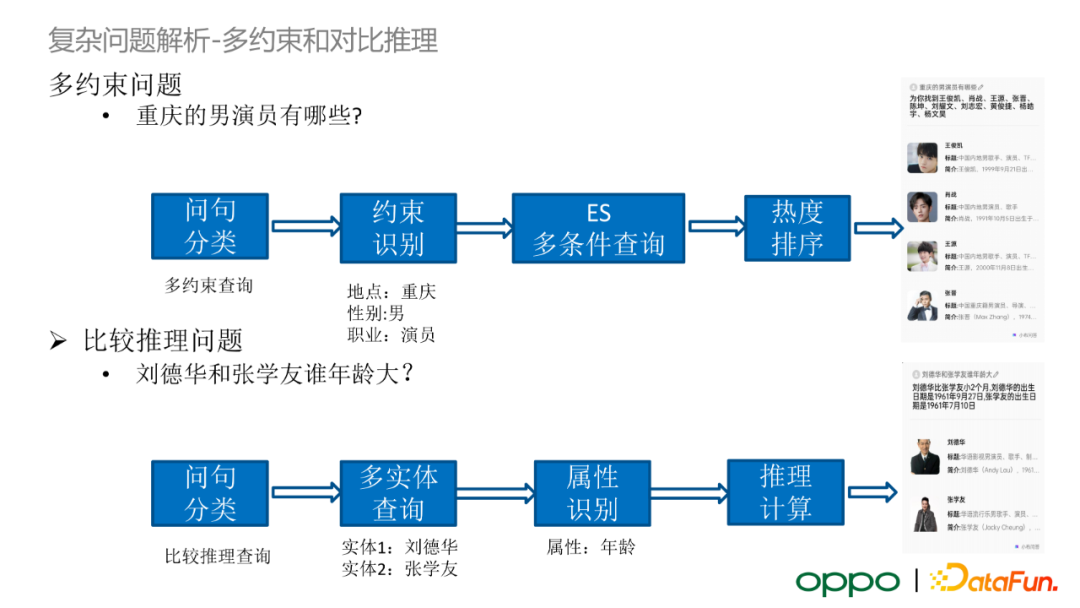

Users may ask multi-constraint questions, such as "Who are the male actors in Chongqing?". We use the question classification module to determine that the query is a multi-constraint query, and then identify all the constraints it contains. For multi-constraint queries we do not query the graph database; instead we use Elasticsearch (ES) for multi-condition queries, because graph database retrieval takes too long for this case. Finally, we sort the ES results by popularity and output the most reasonable ones. Similarly, for comparative reasoning questions, we first use the question classification module to identify the query type, then perform a multi-entity query in the graph and check whether the queried attributes of the two entities are comparable. If they are, we perform the inference calculation and output the result.
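The multi-constraint lookup amounts to a conjunctive filter followed by a popularity sort. The sketch below does this over an in-memory list purely for illustration; the records, field names, and popularity scores are invented, and it does not use the Elasticsearch API.

```python
# Invented records standing in for indexed entity documents.
RECORDS = [
    {"name": "Actor A", "occupation": "actor", "gender": "male", "birthplace": "Chongqing", "popularity": 72},
    {"name": "Actor B", "occupation": "actor", "gender": "female", "birthplace": "Chongqing", "popularity": 95},
    {"name": "Actor C", "occupation": "actor", "gender": "male", "birthplace": "Chongqing", "popularity": 88},
    {"name": "Actor D", "occupation": "actor", "gender": "male", "birthplace": "Beijing", "popularity": 99},
]


def multi_constraint_query(records, constraints, top_k=10):
    """Keep records satisfying every constraint, most popular first."""
    hits = [r for r in records if all(r.get(k) == v for k, v in constraints.items())]
    hits.sort(key=lambda r: r["popularity"], reverse=True)
    return [r["name"] for r in hits[:top_k]]


# "Who are the male actors in Chongqing?"
constraints = {"occupation": "actor", "gender": "male", "birthplace": "Chongqing"}
print(multi_constraint_query(RECORDS, constraints))
```

In a search engine the same conjunction would typically be expressed as a set of exact-match filters with a sort on the popularity field.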

Knowledge Q&A - Unstructured Question Q&A

Next, I will briefly introduce OPPO's solution for unstructured question and answer.

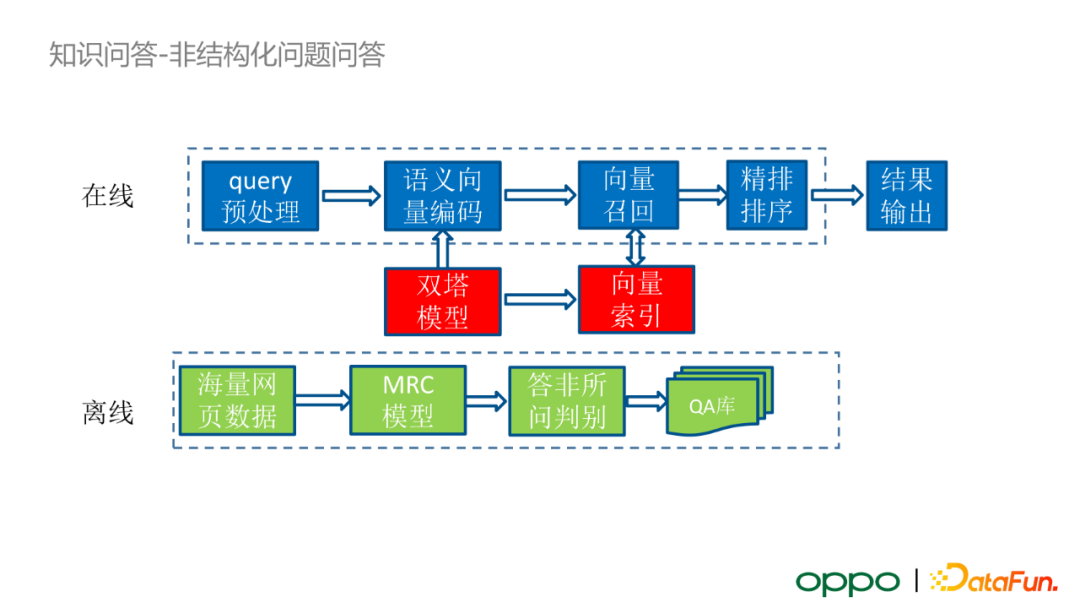

Offline, we extract answers with a combination of large-scale popular web page data and an MRC model. First, we use the large number of URLs and click logs associated with search queries to obtain the web page text corresponding to each query. Then, we feed the query and the web page text into the MRC model to get the answer to the query from the text. After that, the answer passes through an offline-trained "unanswerable question" discriminator, which keeps only answers that are truly relevant to the query. Finally, we build a question-answer library offline.

Based on this QA library, we use a two-tower model to build a vector index. When a query arrives online, it first goes through the intent recognition and style recognition modules. In OPPO's business setting, KBQA has higher priority than the unstructured question answering framework. If KBQA cannot return a result for the input query, the query is fed into the vector retrieval framework for unstructured questions. The two-tower model encodes the query into a semantic vector, and vector recall over the index returns the top-K candidate QA pairs. Because vector recall loses the interaction information between the query and the answer, the query and the candidates then pass through a fine-ranking model that strengthens semantic interaction and produces the final ranking score. Based on preset thresholds, we accept or reject the candidate QA results.
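The online control flow (KBQA first, then vector recall, fine ranking, and a threshold) can be sketched as follows. All components are toy stubs passed in as functions: the Jaccard overlap stands in for both the two-tower recall and the fine-ranking model, and the QA library entries are invented.

```python
QA_LIBRARY = [
    ("why is the sky blue", "Because air scatters blue light more than red light."),
    ("why is the sea salty", "Because rivers carry dissolved minerals into it."),
]


def jaccard(a, b):
    """Token-overlap similarity, used here as a stand-in for learned models."""
    sa, sb = set(a.split()), set(b.split())
    return len(sa & sb) / len(sa | sb)


def toy_kbqa(query):
    return {"capital of india": "New Delhi"}.get(query)


def toy_recall(query, top_k):
    ranked = sorted(QA_LIBRARY, key=lambda qa: jaccard(query, qa[0]), reverse=True)
    return ranked[:top_k]


def answer(query, kbqa, recall, rerank, top_k=3, threshold=0.7):
    """Prefer KBQA; otherwise recall candidates, rerank, and apply the threshold."""
    result = kbqa(query)
    if result is not None:
        return result
    candidates = recall(query, top_k)
    if not candidates:
        return None
    score, best_answer = max(
        ((rerank(query, question), reply) for question, reply in candidates),
        key=lambda pair: pair[0],
    )
    return best_answer if score >= threshold else None


print(answer("capital of india", toy_kbqa, toy_recall, jaccard))
print(answer("why is the sky blue today", toy_kbqa, toy_recall, jaccard))
print(answer("how to cook rice", toy_kbqa, toy_recall, jaccard))
```

Keeping recall and reranking as separate callables mirrors the split between a cheap vector lookup over the whole library and a more expensive interaction model applied only to the top candidates.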

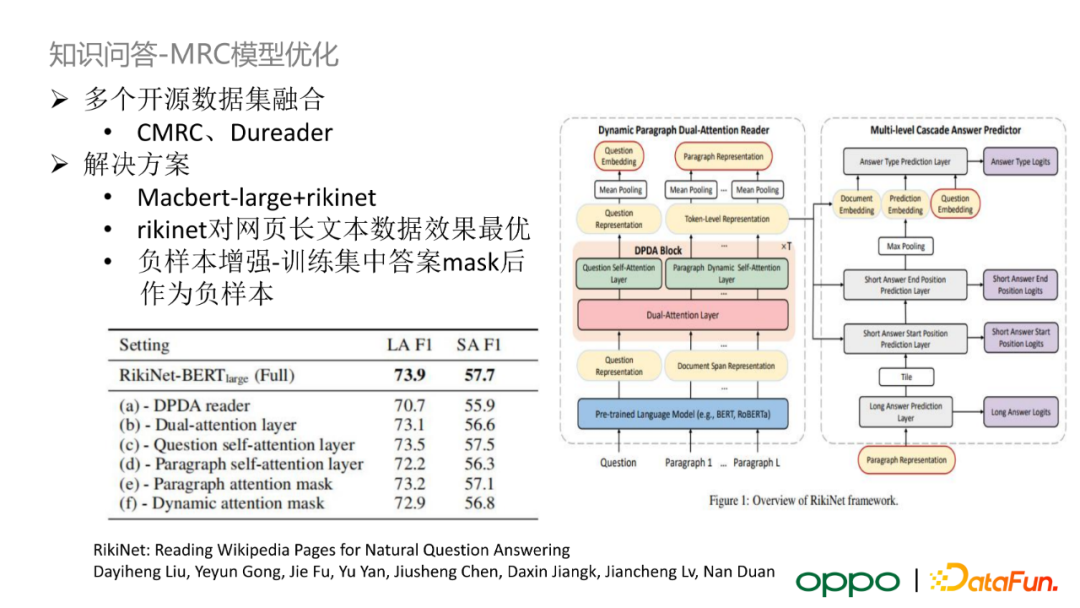

We made some optimizations to the MRC model. First, we chose RikiNet as the MRC model; in our experiments it handled long web page text best. A likely reason is that RikiNet splits the input text into paragraphs, which keeps noise in other paragraphs from affecting the answer information.

Summary and Outlook

Finally, here is a summary of today's content and an outlook.

Although OPPO started building its knowledge graph relatively late, it has accumulated a lot of experience along the way. First, the graph construction algorithms are key to building a high-quality knowledge graph; we give priority to precision, and the recall requirements are relatively low. Second, for knowledge question answering, offline we train large models for template mining and reading comprehension to ensure the quality of the mined data; online we use templates plus small models to ensure serving efficiency.

In the future, we may try the following types of optimizations:

- Common sense reasoning graph + common sense question and answer

- Multimodal graph + multimodal question answering

- User Graph + Personalized Recommendation

- Knowledge graph + large-scale pre-trained language model

- Information extraction for low resource conditions

Q&A

Q: What is the entity scale of the general encyclopedia graph? Is there any way to reduce the time complexity of entity alignment?

A: OPPO's internal knowledge graph has about 200 million entities, and the number of relationships is on the order of billions. Because the graph is so large, to reduce the time complexity of entity alignment we first classify the candidate entities. For example, if the entity type is person, we perform alignment only within the person category. This borrows the idea of divide and conquer and removes part of the computational cost. We then apply a two-stage entity alignment algorithm. The first stage does not involve deep learning models; it groups entities into coarse-grained groups (such as entities with the same alias) and uses the Dedupe and Spark frameworks to perform entity alignment in parallel.

Q: How is the dialogue domain of a query determined in knowledge Q&A?

A: Xiaobu Assistant has a complex internal system for domain classification and intent recognition. For example, for the small talk domain, we annotate a large small talk corpus and then train a BERT model to classify it.

Q: How to distinguish between speech recognition errors and new words?

A: When correcting speech recognition errors, we take the web page titles from the search click logs and feed them into the model together with the query for entity recognition, because we believe the page title contains the correct entity name in most cases. We then compare radical features and pinyin features. When the similarity between the query features and the candidate entity features reaches a preset threshold, we can generally consider it a speech recognition error rather than a new word.

Q: Are any hand-crafted features added to the entity disambiguation model?

A: Yes. We add some hand-crafted statistical features, such as the entity's popularity, the proportion of times the entity is labeled as positive in the training data, and the number of attributes the entity has.

Q: Why is RikiNet more effective on long web page text?

A: RikiNet has a specially designed attention mechanism. It first breaks long text into paragraphs; a short answer generally appears in only one paragraph. RikiNet's attention mechanism allows no attention interaction between paragraphs, so irrelevant information in paragraphs without the answer cannot affect the semantic information of the paragraph that contains it. That said, this is only a conclusion drawn from our experiments, and it has no theoretical support.
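One way to picture "no attention interaction between paragraphs" is a block-diagonal attention mask, in which a token may attend only to tokens of the same paragraph. The sketch below illustrates that idea as described in the answer above; it is an assumption-laden illustration, not RikiNet's actual implementation.

```python
def paragraph_attention_mask(paragraph_ids):
    """mask[i][j] is True only when tokens i and j belong to the same paragraph."""
    n = len(paragraph_ids)
    return [[paragraph_ids[i] == paragraph_ids[j] for j in range(n)] for i in range(n)]


# Five tokens: the first two in paragraph 0, the last three in paragraph 1.
mask = paragraph_attention_mask([0, 0, 1, 1, 1])
for row in mask:
    print("".join("1" if allowed else "0" for allowed in row))
```

With such a mask, the attention scores for disallowed positions would be set to negative infinity before the softmax, so noisy paragraphs contribute nothing to the representation of the paragraph that holds the answer.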

Q: How is pruning of the query path implemented in online template matching?

A: Take "how big is the capital of India" as an example. We use entity classification to assist pruning. "India" is a country. Although "capital" can be mapped to "nation capital" or "dynasty capital", the preceding entity has been identified as a "country", so "dynasty capital" is ranked lower. In general, we prune based on the relationships between the identified entity types and the attributes. If the attribute types do not conflict and pruning cannot be applied directly, we sort the candidate templates by popularity: a template that appears more frequently in the training set is given priority.
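The type-based pruning with a popularity fallback can be sketched as below. The candidate list, the `domain` field, and the frequencies are invented for illustration.

```python
# Invented candidates for the word "capital": each attribute has a subject type (domain).
CAPITAL_CANDIDATES = [
    {"attribute": "dynasty capital", "domain": "dynasty", "frequency": 300},
    {"attribute": "nation capital", "domain": "country", "frequency": 120},
]


def rank_candidates(entity_type, candidates):
    """Keep type-compatible candidates; if none match, fall back to all of them.

    Either way, the remaining candidates are ordered by training-set frequency.
    """
    compatible = [c for c in candidates if c["domain"] == entity_type]
    pool = compatible or candidates
    return sorted(pool, key=lambda c: c["frequency"], reverse=True)


# "India" was classified as a country, so "nation capital" wins despite lower frequency.
print(rank_candidates("country", CAPITAL_CANDIDATES)[0]["attribute"])
```

When the entity type gives no usable signal, the fallback reduces to pure popularity ordering, which matches the behavior described in the answer.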

Q: How does OPPO handle voice input in dialects?

A: OPPO currently mainly supports Cantonese input. This work is handled by the front-end ASR, so dialect speech is converted to Mandarin text by the ASR module before it reaches us.

That's all for today's sharing, thank you all.

