R&D personnel inevitably use logs during project development to record information and troubleshoot problems. Apache Log4j2 provides a flexible and powerful logging framework. Although it is quick to get started, it is very easy to step on the "pit" if you are not careful. This article introduces the "pits" that the Meituan unified API gateway service Shepherd has stepped on in practice about the log leading to thread Block, and how we analyze and solve the problem from the source level of the log framework, and share it with you at the end. Some practical experience about avoiding "pits" in logs, I hope to bring you some help.

1 Introduction

The importance of logging to a program cannot be overstated. It's "big", we often use logs to record information and troubleshoot problems in our projects, and relevant code can be seen everywhere. It is also "small". As an auxiliary tool, the log is easy to use and quick to use, and we usually don't spend too much energy on the log. But the seemingly inconspicuous log also hides various "pits". If it is used improperly, it will not only not help us, but may also reduce service performance and even drag down our service.

The log causes the problem of thread block, I believe you may have encountered it, and you should have a deep understanding of it; maybe you have not encountered it, but it does not mean that there is no problem, but it may not be triggered yet. This article mainly introduces Meituan unified API gateway service Shepherd (see the article "Design and Implementation of Ten Billion-Scale API Gateway Service Shepherd" ) in practice about the "pits" that logs lead to thread Blocks, and then share some avoidance. "Pit" experience.

2. Background

The API gateway service Shepherd is developed based on the Java language, using the industry-famous Apache Log4j2 as the main logging framework, and using Meituan's internal XMD-Log SDK and Scribe-Log SDK to process log content. The overall process of log processing is shown in Figure 1 below. . When a business prints logs, the log framework decides whether to send the logs to XMDFile or Scribe based on the Logger configuration. Among them, XMDFile is the name of the log appender provided by XMD-Log, which is responsible for outputting logs to the local disk, and Scribe is the name of the log appender provided by Scribe-Log, which is responsible for reporting logs to the remote log center.

With the rapid growth of the business, the thread block problem caused by the log becomes more and more frequent. For example, calling the back-end RPC service times out, resulting in a large number of thread blocks for the caller; another example, the abnormal log output inside the business leads to a large number of thread blocks in the service, etc. These problems seriously affect the stability of the service. Therefore, we have thoroughly investigated and repaired the stability risk factors of the log framework based on various thread block problems caused by logs that have been exposed by the project in the past period of time, and conducted comprehensive verification in offline and online environments. . In this process, we have summarized some practical experience related to the use of logs, and hope to share them with you.

Before entering the text, firstly introduce the running environment and log related configuration information of the project at that time.

- JDK version

java version "1.8.0_45"

Java(TM) SE Runtime Environment (build 1.8.0_45-b14)

Java HotSpot(TM) 64-Bit Server VM (build 25.45-b02, mixed mode)- log dependency version

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-api</artifactId>

<version>2.7</version>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-core</artifactId>

<version>2.7</version>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-slf4j-impl</artifactId>

<version>2.7</version>

</dependency>- log configuration file

<?xml version="1.0" encoding="UTF-8"?>

<configuration status="warn">

<appenders>

<Console name="Console" target="SYSTEM_OUT" follow="true">

<PatternLayout pattern="%d{yyyy/MM/dd HH:mm:ss.SSS} %t [%p] %c{1} (%F:%L) %msg%n" />

</Console>

<XMDFile name="ShepherdLog" fileName="shepherd.log"/>

<!--XMDFile异步磁盘日志配置示例-->

<!--默认按天&按512M文件大小切分日志,默认最多保留30个日志文件。-->

<!--注意:fileName前会自动增加文件路径,只配置文件名即可-->

<XMDFile name="LocalServiceLog" fileName="request.log"/>

<Scribe name="LogCenterSync">

<!-- 在指定日志名方面,scribeCategory 和 appkey 两者至少存在一种,且 scribeCategory 高于 appkey。-->

<!-- <Property name="scribeCategory">data_update_test_lc</Property> -->

<LcLayout/>

</Scribe>

<Async name="LogCenterAsync" blocking="false">

<AppenderRef ref="LogCenterSync"/>

</Async>

</appenders>

<loggers>

<AsyncLogger name="com.sankuai.shepherd" level="info" additivity="false">

<AppenderRef ref="ShepherdLog" level="warn"/>

<AppenderRef ref="LogCenterAsync" level="info"/>

</AsyncLogger>

<root level="info">

<!--Console日志是同步、阻塞的,推荐只在本地调试时使用,线上将该配置去掉-->

<!--appender-ref ref="Console" /-->

<appender-ref ref="LocalServiceLog"/>

<appender-ref ref="LogCenterAsync"/>

</root>

</loggers>

</configuration>3. Stepped pit

This chapter mainly records the thread block problems caused by a series of logs in the project in the past period of time, and analyzes the root causes of the problems one by one.

3.1 The full log queue leads to thread Block

3.1.1 Problem scene

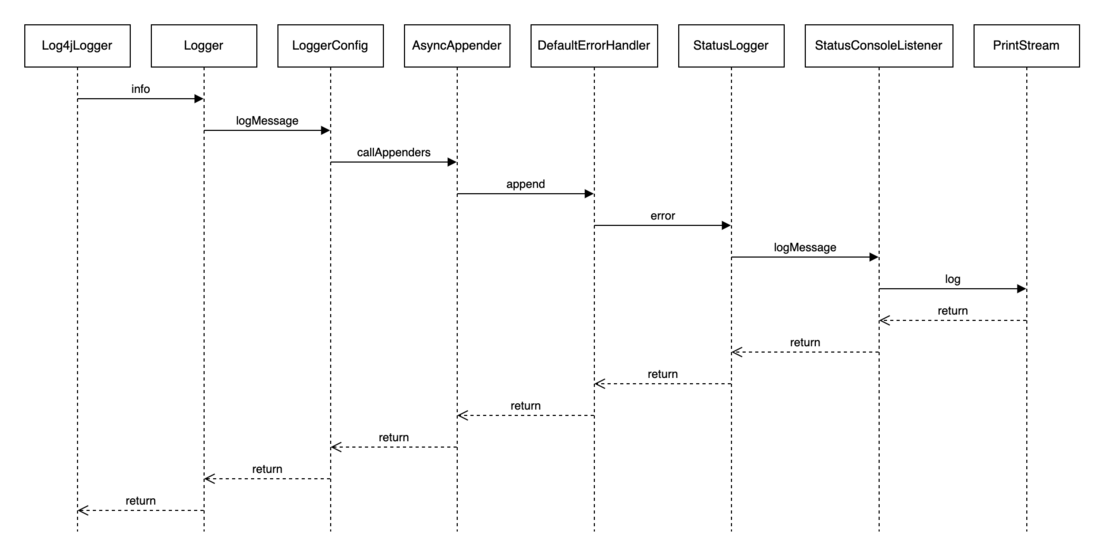

After receiving the "jvm.thread.blocked.count" alarm, check the thread monitoring indicators through the monitoring platform immediately. The thread stack at that time is shown in Figure 2 and Figure 3.

It is not difficult to see from the Blocked thread stack that this is related to log printing, and it is an INFO-level log. Then, log in to the machine to check whether the log is abnormal. It is found that the log volume is very large at that time, and a 500MB log file is filled almost every two minutes.

What is the relationship between a large number of output logs and thread blocks? Next, this chapter will deeply analyze the root cause of the thread block in combination with the call chain shown in Figure 4 below.

3.1.2 Why block thread?

Start the analysis from the Blocked thread stack, and check the relevant code snippets of PrintStream as shown in Figure 5 below. You can see that there are synchronized calls in the blocked area. Combined with the above, it is found that a 500MB log file is filled every two minutes, and the initial suspicion is that it is a log. Excessive amount caused the thread to block.

But the above speculation still has some points worth scrutinizing:

- If the thread is blocked just because the log volume is too large, the log framework is too unbearable to be reused, and it cannot be used in high concurrency and high throughput business scenarios.

- The log configuration clearly outputs the log to a file, how can it be output to the Console PrintStream?

3.1.3 Why is the output to the Console?

Continuing to analyze the call chain along the thread stack, it can be seen that an error occurred when AsyncAppender called the append method to append the log. The relevant code snippets are as follows:

// org.apache.logging.log4j.core.appender.AsyncAppender

// 内部维护的阻塞队列,队列大小默认是128

private final BlockingQueue<LogEvent> queue;

@Override

public void append(final LogEvent logEvent) {

if (!isStarted()) {

throw new IllegalStateException("AsyncAppender " + getName() + " is not active");

}

if (!Constants.FORMAT_MESSAGES_IN_BACKGROUND) { // LOG4J2-898: user may choose

logEvent.getMessage().getFormattedMessage(); // LOG4J2-763: ask message to freeze parameters

}

final Log4jLogEvent memento = Log4jLogEvent.createMemento(logEvent, includeLocation);

// 日志事件转入异步队列

if (!transfer(memento)) {

// 执行到这里说明队列满了,入队失败,根据是否blocking执行具体策略

if (blocking) {

// 阻塞模式,选取特定的策略来处理,策略可能是 "忽略日志"、"日志入队并阻塞"、"当前线程打印日志"

// delegate to the event router (which may discard, enqueue and block, or log in current thread)

final EventRoute route = asyncQueueFullPolicy.getRoute(thread.getId(), memento.getLevel());

route.logMessage(this, memento);

} else {

// 非阻塞模式,交由 ErrorHandler 处理失败日志

error("Appender " + getName() + " is unable to write primary appenders. queue is full");

logToErrorAppenderIfNecessary(false, memento);

}

}

}

private boolean transfer(final LogEvent memento) {

return queue instanceof TransferQueue

? ((TransferQueue<LogEvent>) queue).tryTransfer(memento)

: queue.offer(memento);

}

public void error(final String msg) {

handler.error(msg);

}As the name suggests, AsyncAppender is an asynchronous Appender. It processes logs asynchronously and maintains a BlockingQueue queue inside it. Every time a log is processed, it first tries to store the Log4jLogEvent event in the queue, and then hand it over to the background thread to take out the event from the queue and process it. (The log is processed by the Appender associated with AsyncAppender), but the queue length is always limited, and the default size of the queue is 128. If the log volume is too large or the log asynchronous thread processing is not timely, it is likely to cause the log queue to be full. .

When the log queue is full, the log framework provides two processing methods, as follows:

If the blocking configuration is true, the corresponding processing strategy will be selected. The default is the SYNCHRONOUS strategy. You can configure other strategies or custom strategies provided by the logging framework through the log4j2.AsyncQueueFullPolicy parameter in the log4j2.component.properties file.

- DISCARD strategy , directly ignore the log.

- SYNCHRONOUS strategy , the current thread sends logs directly to Appender.

- ENQUEUE policy , forcibly blocking into the queue.

- If blocking is configured to false, failure logs are handled by ErrorHandler and ErrorAppender. The logging framework provides a default ErrorHandler implementation, namely DefaultErrorHandler. Currently, it is not supported for businesses to customize ErrorHandler in log configuration files such as XML and JSON. The log framework does not provide ErrorAppender by default. If necessary, you can customize the error-ref configuration in XML, JSON and other log configuration files.

As you can see in the log configuration file of this project, AsyncAppender sets blocking to false, and does not configure error-ref. The following is a detailed analysis of DefaultErrorHandler.

// org.apache.logging.log4j.core.appender.DefaultErrorHandler

private static final Logger LOGGER = StatusLogger.getLogger();

private static final int MAX_EXCEPTIONS = 3;

// 5min 时间间隔

private static final long EXCEPTION_INTERVAL = TimeUnit.MINUTES.toNanos(5);

private int exceptionCount = 0;

private long lastException = System.nanoTime() - EXCEPTION_INTERVAL - 1;

public void error(final String msg) {

final long current = System.nanoTime();

// 当前时间距离上次异常处理时间间隔超过5min 或者异常处理数小于3次

if (current - lastException > EXCEPTION_INTERVAL || exceptionCount++ < MAX_EXCEPTIONS) {

// StatusLogger 负责处理

LOGGER.error(msg);

}

lastException = current;

}DefaultErrorHandler adds conditional restrictions when processing exception logs. Only when either of the following two conditions is met will it be processed, thereby avoiding performance problems caused by a large number of exception logs.

- The processing interval between two logs exceeds 5 minutes.

- The number of exception logs does not exceed 3 times.

But the default implementation of the version of the logging framework used by the project seems to have some inconsistencies:

- lastException is used to mark the timestamp of the last exception. This variable may be accessed by multiple threads, and thread safety cannot be guaranteed in the case of multiple threads.

- exceptionCount is used to count the number of exception logs. This variable may be accessed by multiple threads, and thread safety cannot be guaranteed in the case of multiple threads.

Therefore, in a multi-threaded scenario, a large number of exception logs may be processed by DefaultErrorHandler at the same time, causing thread safety issues. It is worth mentioning that this issue has been reported to the community by the related Issue: DefaultErrorHandler can not share values across threads , and it has been fixed in version 2.15.0 .

As you can see from the above DefaultErrorHandler code, StatusLogger is really responsible for processing logs. Continue to follow up the code and enter the logMessage method. The execution logic of the method is as follows:

- If a StatusListener is registered inside the StatusLogger, the corresponding StatusListener is responsible for processing logs.

- Otherwise, SimpleLogger is responsible for processing the log and directly outputs the log to the System.err output stream.

// org.apache.logging.log4j.status.StatusLogger

private static final StatusLogger STATUS_LOGGER = new StatusLogger(StatusLogger.class.getName(),

ParameterizedNoReferenceMessageFactory.INSTANCE);

// StatusListener

private final Collection<StatusListener> listeners = new CopyOnWriteArrayList<>();

private final SimpleLogger logger;

private StatusLogger(final String name, final MessageFactory messageFactory) {

super(name, messageFactory);

this.logger = new SimpleLogger("StatusLogger", Level.ERROR, false, true, false, false, Strings.EMPTY,

messageFactory, PROPS, System.err);

this.listenersLevel = Level.toLevel(DEFAULT_STATUS_LEVEL, Level.WARN).intLevel();

}

/**

* Retrieve the StatusLogger.

*

* @return The StatusLogger.

*/

public static StatusLogger getLogger() {

return STATUS_LOGGER;

}

@Override

public void logMessage(final String fqcn, final Level level, final Marker marker, final Message msg,

final Throwable t) {

StackTraceElement element = null;

if (fqcn != null) {

element = getStackTraceElement(fqcn, Thread.currentThread().getStackTrace());

}

final StatusData data = new StatusData(element, level, msg, t, null);

msgLock.lock();

try {

messages.add(data);

} finally {

msgLock.unlock();

}

if (listeners.size() > 0) {

// 如果系统注册了 listener,由 StatusConsoleListener 处理日志

for (final StatusListener listener : listeners) {

if (data.getLevel().isMoreSpecificThan(listener.getStatusLevel())) {

listener.log(data);

}

}

} else {

// 否则由 SimpleLogger 处理日志,直接输出到 System.err

logger.logMessage(fqcn, level, marker, msg, t);

}

}From the above Blocked thread stack, StatusConsoleListener is responsible for processing logs, and StatusConsoleListener is the implementation class of StatusListener interface, so how is StatusConsoleListener created?

3.1.4 How does StatusConsoleListener come from?

Generally speaking, each project will have a log configuration file (such as log4j2.xml), which corresponds to the Configuration interface in the Log4j2 log framework. Different log configuration file formats have different implementation classes:

- XmlConfiguration, the XML format log configuration

- JsonConfiguration, the JSON format log configuration

- XMDConfiguration, the log configuration (XML format) defined by the internal log component XMD-Log of Meituan

- ......

log4j2.xml sample configuration (for example only, do not use this configuration in actual projects).

<?xml version="1.0" encoding="UTF-8"?>

<Configuration status="debug" name="RoutingTest">

<Properties>

<Property name="filename">target/rolling1/rollingtest-$${sd:type}.log</Property>

</Properties>

<ThresholdFilter level="debug"/>

<Appenders>

<Console name="STDOUT">

<PatternLayout pattern="%m%n"/>

<ThresholdFilter level="debug"/>

</Console>

<Routing name="Routing">

<Routes pattern="$${sd:type}">

<Route>

<RollingFile name="Rolling-${sd:type}" fileName="${filename}"

filePattern="target/rolling1/test1-${sd:type}.%i.log.gz">

<PatternLayout>

<pattern>%d %p %c{1.} [%t] %m%n</pattern>

</PatternLayout>

<SizeBasedTriggeringPolicy size="500" />

</RollingFile>

</Route>

<Route ref="STDOUT" key="Audit"/>

</Routes>

</Routing>

</Appenders>

<Loggers>

<Logger name="EventLogger" level="info" additivity="false">

<AppenderRef ref="Routing"/>

</Logger>

<Root level="error">

<AppenderRef ref="STDOUT"/>

</Root>

</Loggers>

</Configuration>Log4j2 will load and parse the log4j2.xml configuration file at startup, and create a specific Configuration instance by the corresponding ConfigurationFactory.

// org.apache.logging.log4j.core.config.xml.XmlConfiguration

public XmlConfiguration(final LoggerContext loggerContext, final ConfigurationSource configSource) {

super(loggerContext, configSource);

final File configFile = configSource.getFile();

byte[] buffer = null;

try {

final InputStream configStream = configSource.getInputStream();

try {

buffer = toByteArray(configStream);

} finally {

Closer.closeSilently(configStream);

}

final InputSource source = new InputSource(new ByteArrayInputStream(buffer));

source.setSystemId(configSource.getLocation());

final DocumentBuilder documentBuilder = newDocumentBuilder(true);

Document document;

try {

// 解析 xml 配置文件

document = documentBuilder.parse(source);

} catch (final Exception e) {

// LOG4J2-1127

final Throwable throwable = Throwables.getRootCause(e);

if (throwable instanceof UnsupportedOperationException) {

LOGGER.warn(

"The DocumentBuilder {} does not support an operation: {}."

+ "Trying again without XInclude...",

documentBuilder, e);

document = newDocumentBuilder(false).parse(source);

} else {

throw e;

}

}

rootElement = document.getDocumentElement();

// 处理根节点属性配置,即 <Configuration></Configuration> 节点

final Map<String, String> attrs = processAttributes(rootNode, rootElement);

// 创建 StatusConfiguration

final StatusConfiguration statusConfig = new StatusConfiguration().withVerboseClasses(VERBOSE_CLASSES)

.withStatus(getDefaultStatus());

for (final Map.Entry<String, String> entry : attrs.entrySet()) {

final String key = entry.getKey();

final String value = getStrSubstitutor().replace(entry.getValue());

// 根据配置文件中的 status 属性值,来设置 StatusConfiguration 的 status level

if ("status".equalsIgnoreCase(key)) {

statusConfig.withStatus(value);

// 根据配置文件中的 dest 属性值,来设置 StatusConfiguration 的日志输出 destination

} else if ("dest".equalsIgnoreCase(key)) {

statusConfig.withDestination(value);

} else if ("shutdownHook".equalsIgnoreCase(key)) {

isShutdownHookEnabled = !"disable".equalsIgnoreCase(value);

} else if ("verbose".equalsIgnoreCase(key)) {

statusConfig.withVerbosity(value);

} else if ("packages".equalsIgnoreCase(key)) {

pluginPackages.addAll(Arrays.asList(value.split(Patterns.COMMA_SEPARATOR)));

} else if ("name".equalsIgnoreCase(key)) {

setName(value);

} else if ("strict".equalsIgnoreCase(key)) {

strict = Boolean.parseBoolean(value);

} else if ("schema".equalsIgnoreCase(key)) {

schemaResource = value;

} else if ("monitorInterval".equalsIgnoreCase(key)) {

final int intervalSeconds = Integer.parseInt(value);

if (intervalSeconds > 0) {

getWatchManager().setIntervalSeconds(intervalSeconds);

if (configFile != null) {

final FileWatcher watcher = new ConfiguratonFileWatcher(this, listeners);

getWatchManager().watchFile(configFile, watcher);

}

}

} else if ("advertiser".equalsIgnoreCase(key)) {

createAdvertiser(value, configSource, buffer, "text/xml");

}

}

// 初始化 StatusConfiguration

statusConfig.initialize();

} catch (final SAXException | IOException | ParserConfigurationException e) {

LOGGER.error("Error parsing " + configSource.getLocation(), e);

}

if (getName() == null) {

setName(configSource.getLocation());

}

// 忽略以下内容

} // org.apache.logging.log4j.core.config.status.StatusConfiguration

private static final PrintStream DEFAULT_STREAM = System.out;

private static final Level DEFAULT_STATUS = Level.ERROR;

private static final Verbosity DEFAULT_VERBOSITY = Verbosity.QUIET;

private final Collection<String> errorMessages = Collections.synchronizedCollection(new LinkedList<String>());

// StatusLogger

private final StatusLogger logger = StatusLogger.getLogger();

private volatile boolean initialized = false;

private PrintStream destination = DEFAULT_STREAM;

private Level status = DEFAULT_STATUS;

private Verbosity verbosity = DEFAULT_VERBOSITY;

public void initialize() {

if (!this.initialized) {

if (this.status == Level.OFF) {

this.initialized = true;

} else {

final boolean configured = configureExistingStatusConsoleListener();

if (!configured) {

// 注册新 StatusConsoleListener

registerNewStatusConsoleListener();

}

migrateSavedLogMessages();

}

}

}

private boolean configureExistingStatusConsoleListener() {

boolean configured = false;

for (final StatusListener statusListener : this.logger.getListeners()) {

if (statusListener instanceof StatusConsoleListener) {

final StatusConsoleListener listener = (StatusConsoleListener) statusListener;

// StatusConsoleListener 的 level 以 StatusConfiguration 的 status 为准

listener.setLevel(this.status);

this.logger.updateListenerLevel(this.status);

if (this.verbosity == Verbosity.QUIET) {

listener.setFilters(this.verboseClasses);

}

configured = true;

}

}

return configured;

}

private void registerNewStatusConsoleListener() {

// 创建 StatusConsoleListener,级别以 StatusConfiguration 为准

// 默认 status 是 DEFAULT_STATUS 即 ERROR

// 默认 destination 是 DEFAULT_STREAM 即 System.out

final StatusConsoleListener listener = new StatusConsoleListener(this.status, this.destination);

if (this.verbosity == Verbosity.QUIET) {

listener.setFilters(this.verboseClasses);

}

this.logger.registerListener(listener);

} // org.apache.logging.log4j.status.StatusConsoleListener

private Level level = Level.FATAL; // 级别

private String[] filters;

private final PrintStream stream; // 输出流

public StatusConsoleListener(final Level level, final PrintStream stream) {

if (stream == null) {

throw new IllegalArgumentException("You must provide a stream to use for this listener.");

}

this.level = level;

this.stream = stream;

}Taking XmlConfiguration as an example, analyzing the above log configuration parsing code snippet, we can see that when XmlConfiguration is created, StatusConfiguration will be created first, and then StatusConsoleListener will be created and registered to StatusLogger listeners when StatusConfiguration is initialized, and the \<Configuration\> tag in the log configuration file The attribute value of XmlConfiguration->StatusConfiguration->StatusConsoleListener ultimately affects the behavior of StatusConsoleListener.

The \<Configuration\> tag in the log configuration file can configure property fields, some of which are as follows:

- status , optional values include OFF, FATAL, ERROR, WARN, INFO, DEBUG, TRACE, ALL , this value determines the StatusConsoleListener level, the default is ERROR.

- dest , optional values include out, err, standard URI path , this value determines the output stream destination of StatusConsoleListener, the default is System.out.

In the log configuration file of this project, you can see that the dest attribute value of Configuration is not set, so the log is directly output to System.out.

3.1.5 What is StatusLogger used for?

As mentioned above, the StatusConsoleListener is registered in the StatusLogger. The StatusLogger will judge the log level before sending it to the StatusListener to process the log. If the level condition is not satisfied, the log will be ignored. The default log level of the StatusConsoleListener is ERROR.

// org.apache.logging.log4j.status.StatusLogger

@Override

public void logMessage(final String fqcn, final Level level, final Marker marker, final Message msg,

final Throwable t) {

StackTraceElement element = null;

if (fqcn != null) {

element = getStackTraceElement(fqcn, Thread.currentThread().getStackTrace());

}

final StatusData data = new StatusData(element, level, msg, t, null);

msgLock.lock();

try {

messages.add(data);

} finally {

msgLock.unlock();

}

// 系统注册了 listener,由 StatusConsoleListener 处理日志

if (listeners.size() > 0) {

for (final StatusListener listener : listeners) {

// 比较当前日志的 leve 和 listener 的 level

if (data.getLevel().isMoreSpecificThan(listener.getStatusLevel())) {

listener.log(data);

}

}

} else {

logger.logMessage(fqcn, level, marker, msg, t);

}

}Let's look back at StatusLogger. StatusLogger is implemented in a singleton mode. It outputs logs to the Console (such as System.out or System.err). From the above analysis, it is very easy to cause thread Blocks in high concurrency scenarios. What's the point of existence?

Looking at the official introduction, the general idea is that before the log initialization is completed, there is also a need to print log debugging. StatusLogger is born to solve this problem.

Troubleshooting tip for the impatient:

From log4j-2.9 onward, log4j2 will print all internal logging to the console if system property log4j2.debug is defined (with any or no value).

Prior to log4j-2.9, there are two places where internal logging can be controlled:

- Before a configuration is found, status logger level can be controlled with system property org.apache.logging.log4j.simplelog.StatusLogger.level.

- After a configuration is found, status logger level can be controlled in the configuration file with the "status" attribute, for example: \<Configuration status="trace"\>.

Just as it is desirable to be able to diagnose problems in applications, it is frequently necessary to be able to diagnose problems in the logging configuration or in the configured components. Since logging has not been configured, "normal" logging cannot be used during initialization . In addition, normal logging within appenders could create infinite recursion which Log4j will detect and cause the recursive events to be ignored. To accomodate this need, the Log4j 2 API includes a StatusLogger .

3.1.6 Problem Summary

The excessive log volume causes the AsyncAppender log queue to be full, new log events cannot be queued, and the ErrorHandler processes the logs. At the same time, due to the thread safety problem of the ErrorHandler, a large number of logs are output to the Console, and the Console is outputting logs to the PrintStream output stream When there is a synchronized synchronization code block, it leads to thread Block in high concurrency scenarios.

3.2 AsyncAppender causes thread Block

3.2.1 Problem scene

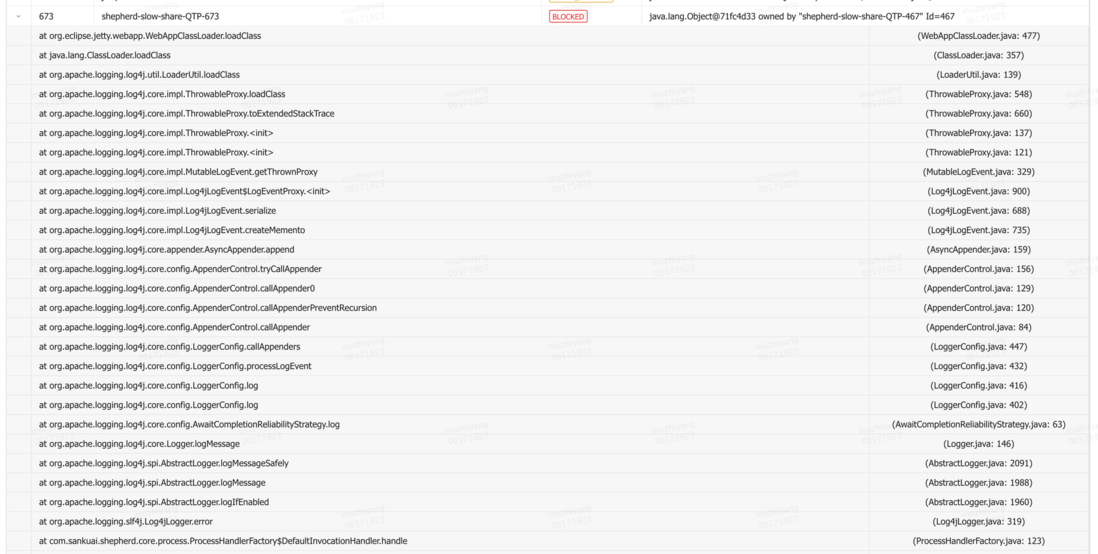

After receiving the "jvm.thread.blocked.count" alarm, check the thread monitoring indicators through the monitoring platform immediately. The thread stack at that time is shown in Figures 6 and 7 below.

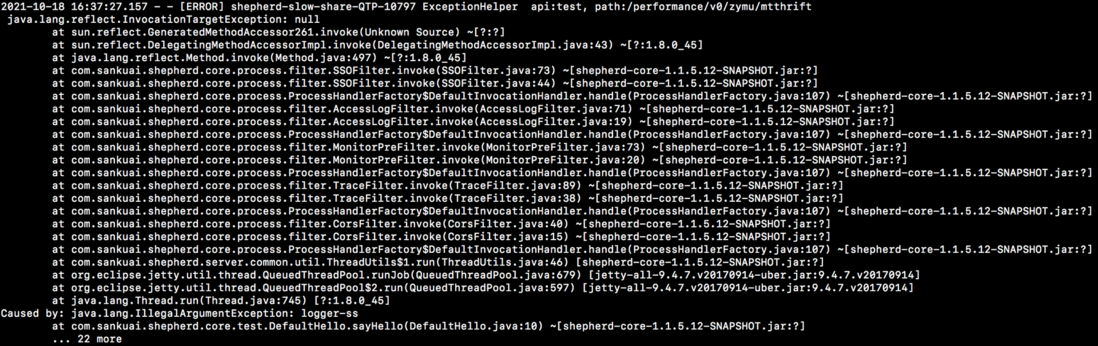

It is not difficult to see from the Blocked thread stack that it is related to log printing. Since it is an ERROR level log, check the specific error log and find that there are two business exceptions, as shown in Figure 8 and Figure 9 below:

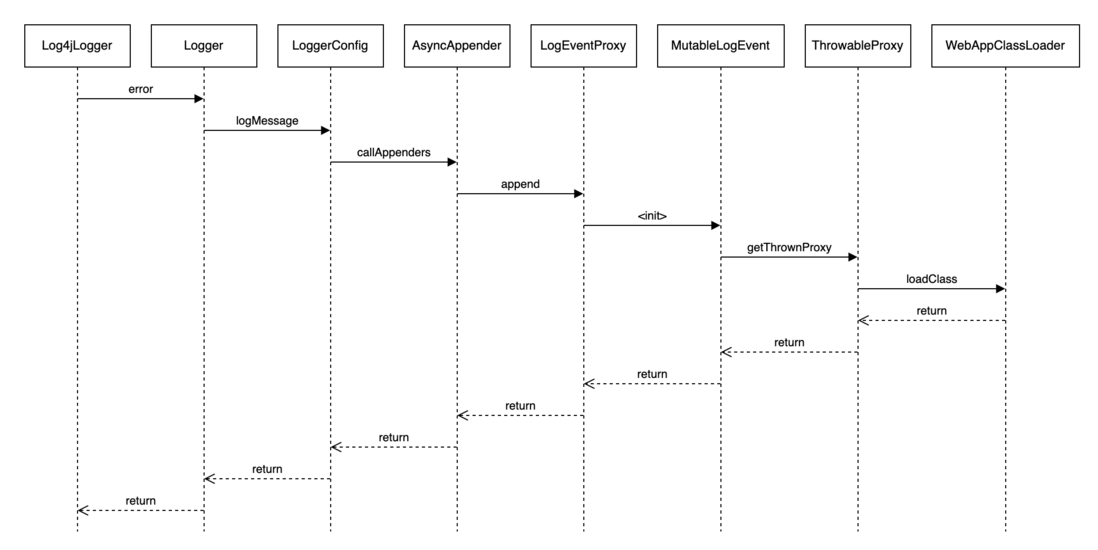

Could these business exceptions be the culprit behind the thread block? Next, this chapter will deeply analyze the root cause of the thread block in combination with the call chain shown in Figure 10 below.

3.2.2 Why block thread?

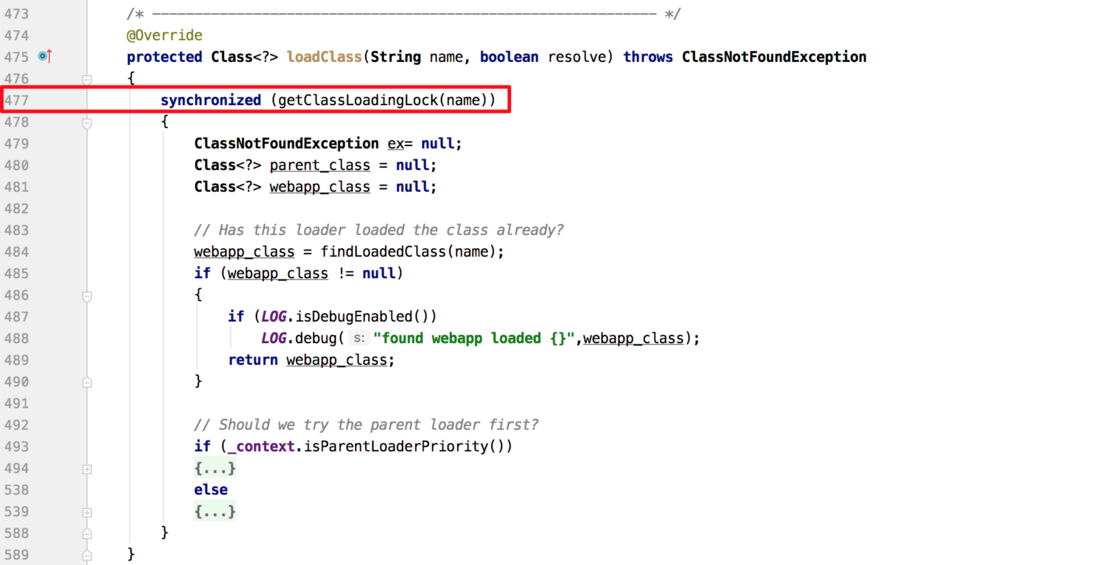

It can be seen from the Blocked thread stack that the thread is blocked on the class loading process. Check the relevant code snippet of WebAppClassLoader as shown in Figure 11 below. It is found that the synchronized block is indeed added according to the class name when loading the class. Therefore, the initial guess is that the class loading causes Thread Block.

But there are still some points worth scrutinizing the above speculation:

- The project code just outputs an ERROR log, why does it trigger class loading?

- Under normal circumstances, class loading hardly triggers thread blocks, otherwise a project needs to load thousands of classes. If blocks are caused by loading classes, the project cannot run normally.

3.2.3 Why is class loading triggered?

Continue to analyze from the Blocked thread stack, check the related code of ThrowableProxy in the stack, and find that its constructor traverses all stack elements in the entire exception stack, and finally obtains the JAR name and version information where all stack element classes are located. The specific process is as follows:

- First get the class name of the stack element.

- Then obtain the corresponding Class object by means of loadClass.

- Further obtain the JAR information of the class, obtain the JAR name from CodeSource, and obtain the JAR version from Package.

// org.apache.logging.log4j.core.impl.ThrowableProxy

private ThrowableProxy(final Throwable throwable, final Set<Throwable> visited) {

this.throwable = throwable;

this.name = throwable.getClass().getName();

this.message = throwable.getMessage();

this.localizedMessage = throwable.getLocalizedMessage();

final Map<String, CacheEntry> map = new HashMap<>();

final Stack<Class<?>> stack = ReflectionUtil.getCurrentStackTrace();

// 获取堆栈扩展信息

this.extendedStackTrace = this.toExtendedStackTrace(stack, map, null, throwable.getStackTrace());

final Throwable throwableCause = throwable.getCause();

final Set<Throwable> causeVisited = new HashSet<>(1);

this.causeProxy = throwableCause == null ? null : new ThrowableProxy(throwable, stack, map, throwableCause,

visited, causeVisited);

this.suppressedProxies = this.toSuppressedProxies(throwable, visited);

}

ExtendedStackTraceElement[] toExtendedStackTrace(final Stack<Class<?>> stack, final Map<String, CacheEntry> map,

final StackTraceElement[] rootTrace,

final StackTraceElement[] stackTrace) {

int stackLength;

if (rootTrace != null) {

int rootIndex = rootTrace.length - 1;

int stackIndex = stackTrace.length - 1;

while (rootIndex >= 0 && stackIndex >= 0 && rootTrace[rootIndex].equals(stackTrace[stackIndex])) {

--rootIndex;

--stackIndex;

}

this.commonElementCount = stackTrace.length - 1 - stackIndex;

stackLength = stackIndex + 1;

} else {

this.commonElementCount = 0;

stackLength = stackTrace.length;

}

final ExtendedStackTraceElement[] extStackTrace = new ExtendedStackTraceElement[stackLength];

Class<?> clazz = stack.isEmpty() ? null : stack.peek();

ClassLoader lastLoader = null;

for (int i = stackLength - 1; i >= 0; --i) {

// 遍历 StackTraceElement

final StackTraceElement stackTraceElement = stackTrace[i];

// 获取堆栈元素对应的类名称

final String className = stackTraceElement.getClassName();

// The stack returned from getCurrentStack may be missing entries for java.lang.reflect.Method.invoke()

// and its implementation. The Throwable might also contain stack entries that are no longer

// present as those methods have returned.

ExtendedClassInfo extClassInfo;

if (clazz != null && className.equals(clazz.getName())) {

final CacheEntry entry = this.toCacheEntry(stackTraceElement, clazz, true);

extClassInfo = entry.element;

lastLoader = entry.loader;

stack.pop();

clazz = stack.isEmpty() ? null : stack.peek();

} else {

// 对加载过的 className 进行缓存,避免重复加载

final CacheEntry cacheEntry = map.get(className);

if (cacheEntry != null) {

final CacheEntry entry = cacheEntry;

extClassInfo = entry.element;

if (entry.loader != null) {

lastLoader = entry.loader;

}

} else {

// 通过加载类来获取类的扩展信息,如 location 和 version 等

final CacheEntry entry = this.toCacheEntry(stackTraceElement,

// 获取 Class 对象

this.loadClass(lastLoader, className), false);

extClassInfo = entry.element;

map.put(stackTraceElement.toString(), entry);

if (entry.loader != null) {

lastLoader = entry.loader;

}

}

}

extStackTrace[i] = new ExtendedStackTraceElement(stackTraceElement, extClassInfo);

}

return extStackTrace;

}

/**

* Construct the CacheEntry from the Class's information.

*

* @param stackTraceElement The stack trace element

* @param callerClass The Class.

* @param exact True if the class was obtained via Reflection.getCallerClass.

* @return The CacheEntry.

*/

private CacheEntry toCacheEntry(final StackTraceElement stackTraceElement, final Class<?> callerClass,

final boolean exact) {

String location = "?";

String version = "?";

ClassLoader lastLoader = null;

if (callerClass != null) {

try {

// 获取 jar 文件信息

final CodeSource source = callerClass.getProtectionDomain().getCodeSource();

if (source != null) {

final URL locationURL = source.getLocation();

if (locationURL != null) {

final String str = locationURL.toString().replace('\\', '/');

int index = str.lastIndexOf("/");

if (index >= 0 && index == str.length() - 1) {

index = str.lastIndexOf("/", index - 1);

location = str.substring(index + 1);

} else {

location = str.substring(index + 1);

}

}

}

} catch (final Exception ex) {

// Ignore the exception.

}

// 获取类所在 jar 版本信息

final Package pkg = callerClass.getPackage();

if (pkg != null) {

final String ver = pkg.getImplementationVersion();

if (ver != null) {

version = ver;

}

}

lastLoader = callerClass.getClassLoader();

}

return new CacheEntry(new ExtendedClassInfo(exact, location, version), lastLoader);

}As can be seen from the above code, the ThrowableProxy#toExtendedStackTrace method uses Map<String, CacheEntry> to cache the CacheEntry corresponding to the current stack element class to avoid repeated parsing of the CacheEntry, but since the key used by the Map cache put operation comes from the StackTraceElement.toString method, The key used by the get operation comes from the StackTraceElement.getClassName method. Even for the same StackTraceElement, the return results corresponding to the toString and getClassName methods are different, so this map is useless.

// java.lang.StackTraceElement

public String getClassName() {

return declaringClass;

}

public String toString() {

return getClassName() + "." + methodName +

(isNativeMethod() ? "(Native Method)" :

(fileName != null && lineNumber >= 0 ?

"(" + fileName + ":" + lineNumber + ")" :

(fileName != null ? "("+fileName+")" : "(Unknown Source)")));

}This issue has been reported to the community via Issue: fix the CacheEntry map in ThrowableProxy#toExtendedStackTrace to be put and gotten with same key , and the issue has been fixed in version 2.11.1 . Although the cache validity problem is fixed by letting the get/put methods use the same key, since ThrowableProxy creates a new Map for each Throwable instead of using the global Map, its cache is only valid for a single Throwable. The range is very limited, it is tasteless to eat, and it is a pity to abandon it.

Closer to home, usually a class loader only loads a class once, and the class loader stores a class cache inside, which does not need to be loaded repeatedly, but the current phenomenon is that there are a large number of threads due to class loading, so there must be some classes. It can't be loaded, and it keeps trying to load it repeatedly. What kind of class can't be loaded?

3.2.4 What class cannot be loaded?

To find out what class cannot be loaded, in the final analysis, it is still necessary to analyze the specific stack of the business exception.

Comparing the two business exception stacks shown in Figure 12 and Figure 13, we can see that the two stacks are basically similar, and most of the classes are very common, but the only difference is:

- sun.reflect.NativeMethodAccessorImpl (see Figure 12).

- sun.reflect.GeneratedMethodAccessor261 (see Figure 13).

It is not difficult to guess from the literal information that this is related to reflection calls, but the problem is that the two stacks correspond to the same business code. Why are there two different exception stacks?

According to the relevant information, this is related to the JVM reflection call. The JVM divides the reflection call into two situations:

- By default, the native method is used for reflection operations.

- Under certain conditions, bytecode will be generated for reflection operation , that is, the sun.reflect.GeneratedMethodAccessor\<N\> class is generated, which is a wrapper class for the method of reflection call, proxying different methods, and the class suffix serial number is incremented.

The main process of the JVM reflection call is to obtain the MethodAccessor and execute the invoke call by the MethodAccessor. The relevant code is as follows:

// java.lang.reflect.Method

@CallerSensitive

public Object invoke(Object obj, Object... args)

throws IllegalAccessException, IllegalArgumentException,

InvocationTargetException

{

if (!override) {

if (!Reflection.quickCheckMemberAccess(clazz, modifiers)) {

Class<?> caller = Reflection.getCallerClass();

checkAccess(caller, clazz, obj, modifiers);

}

}

MethodAccessor ma = methodAccessor; // read volatile

if (ma == null) {

// 获取 MethodAccessor

ma = acquireMethodAccessor();

}

// 通过 MethodAccessor 调用

return ma.invoke(obj, args);

}

private MethodAccessor acquireMethodAccessor() {

MethodAccessor tmp = null;

if (root != null) tmp = root.getMethodAccessor();

if (tmp != null) {

methodAccessor = tmp;

} else {

// 通过 ReflectionFactory 创建 MethodAccessor

tmp = reflectionFactory.newMethodAccessor(this);

setMethodAccessor(tmp);

}

return tmp;

}When noInflation is false (default is false) or the class where the reflection method is located is a VM anonymous class (the class name includes a slash "/"), ReflectionFactory will return a MethodAccessor proxy class, namely DelegatingMethodAccessorImpl.

// sun.reflect.ReflectionFactory

public MethodAccessor newMethodAccessor(Method method) {

// 通过启动参数获取并解析 noInflation 和 inflationThreshold 值

// noInflation 默认为 false

// inflationThreshold 默认为15

checkInitted();

if (noInflation && !ReflectUtil.isVMAnonymousClass(method.getDeclaringClass())) {

return new MethodAccessorGenerator().

generateMethod(method.getDeclaringClass(),

method.getName(),

method.getParameterTypes(),

method.getReturnType(),

method.getExceptionTypes(),

method.getModifiers());

} else {

NativeMethodAccessorImpl acc =

new NativeMethodAccessorImpl(method);

DelegatingMethodAccessorImpl res =

new DelegatingMethodAccessorImpl(acc);

acc.setParent(res);

// 返回代理 DelegatingMethodAccessorImpl

return res;

}

}

private static void checkInitted() {

if (initted) return;

AccessController.doPrivileged(

new PrivilegedAction<Void>() {

public Void run() {

// Tests to ensure the system properties table is fully

// initialized. This is needed because reflection code is

// called very early in the initialization process (before

// command-line arguments have been parsed and therefore

// these user-settable properties installed.) We assume that

// if System.out is non-null then the System class has been

// fully initialized and that the bulk of the startup code

// has been run.

if (System.out == null) {

// java.lang.System not yet fully initialized

return null;

}

String val = System.getProperty("sun.reflect.noInflation");

if (val != null && val.equals("true")) {

noInflation = true;

}

val = System.getProperty("sun.reflect.inflationThreshold");

if (val != null) {

try {

inflationThreshold = Integer.parseInt(val);

} catch (NumberFormatException e) {

throw new RuntimeException("Unable to parse property sun.reflect.inflationThreshold", e);

}

}

initted = true;

return null;

}

});

}By default, DelegatingMethodAccessorImpl represents NativeMethodAccessorImpl, but as the number of reflection calls increases, when the number of reflection calls of a method exceeds a certain threshold (inflationThreshold, the default value is 15), NativeMethodAccessorImpl will automatically generate bytecodes through bytecode generation technology. Generate the MethodAccessorImpl implementation class, and modify the internal proxy object of DelegatingMethodAccessorImpl to point to the bytecode generation class instance, thereby changing the logic of subsequent reflection calls.

// sun.reflect.DelegatingMethodAccessorImpl

class DelegatingMethodAccessorImpl extends MethodAccessorImpl {

// 内部代理 MethodAccessorImpl

private MethodAccessorImpl delegate;

DelegatingMethodAccessorImpl(MethodAccessorImpl delegate) {

setDelegate(delegate);

}

public Object invoke(Object obj, Object[] args)

throws IllegalArgumentException, InvocationTargetException

{

return delegate.invoke(obj, args);

}

void setDelegate(MethodAccessorImpl delegate) {

this.delegate = delegate;

}

} // sun.reflect.NativeMethodAccessorImpl

class NativeMethodAccessorImpl extends MethodAccessorImpl {

private final Method method;

private DelegatingMethodAccessorImpl parent;

private int numInvocations;

NativeMethodAccessorImpl(Method method) {

this.method = method;

}

public Object invoke(Object obj, Object[] args)

throws IllegalArgumentException, InvocationTargetException

{

// We can't inflate methods belonging to vm-anonymous classes because

// that kind of class can't be referred to by name, hence can't be

// found from the generated bytecode.

// 每次调用时 numInvocations 都会自增加1,如果超过阈值(默认是15次),就会修改父类的代理对象,从而改变调用链路

if (++numInvocations > ReflectionFactory.inflationThreshold()

&& !ReflectUtil.isVMAnonymousClass(method.getDeclaringClass())) {

MethodAccessorImpl acc = (MethodAccessorImpl)

// 动态生成字节码,优化反射调用速度

new MethodAccessorGenerator().

generateMethod(method.getDeclaringClass(),

method.getName(),

method.getParameterTypes(),

method.getReturnType(),

method.getExceptionTypes(),

method.getModifiers());

// 修改父代理类的代理对象

parent.setDelegate(acc);

}

return invoke0(method, obj, args);

}

void setParent(DelegatingMethodAccessorImpl parent) {

this.parent = parent;

}

private static native Object invoke0(Method m, Object obj, Object[] args);

}As can be seen from the MethodAccessorGenerator#generateName method, the class name rule generated by the bytecode is sun.reflect.GeneratedConstructorAccessor\<N\>, where N is an increasing number starting from 0, and the generated class is defined by the DelegatingClassLoader class loader. Therefore, other class loaders cannot load the class, and thus cannot generate class cache data, which results in the need to traverse the JarFile every time a class is loaded, which greatly reduces the class search speed, and the class loading process is a synchronized call. The situation will worsen, resulting in thread Block.

// sun.reflect.MethodAccessorGenerator

public MethodAccessor generateMethod(Class<?> declaringClass,

String name,

Class<?>[] parameterTypes,

Class<?> returnType,

Class<?>[] checkedExceptions,

int modifiers)

{

return (MethodAccessor) generate(declaringClass,

name,

parameterTypes,

returnType,

checkedExceptions,

modifiers,

false,

false,

null);

}

private MagicAccessorImpl generate(final Class<?> declaringClass,

String name,

Class<?>[] parameterTypes,

Class<?> returnType,

Class<?>[] checkedExceptions,

int modifiers,

boolean isConstructor,

boolean forSerialization,

Class<?> serializationTargetClass)

{

final String generatedName = generateName(isConstructor, forSerialization);

// 忽略以上代码

return AccessController.doPrivileged(

new PrivilegedAction<MagicAccessorImpl>() {

public MagicAccessorImpl run() {

try {

return (MagicAccessorImpl)

ClassDefiner.defineClass

(generatedName,

bytes,

0,

bytes.length,

declaringClass.getClassLoader()).newInstance();

} catch (InstantiationException | IllegalAccessException e) {

throw new InternalError(e);

}

}

});

}

// 生成反射类名,看到了熟悉的 sun.reflect.GeneratedConstructorAccessor<N>

private static synchronized String generateName(boolean isConstructor, boolean forSerialization)

{

if (isConstructor) {

if (forSerialization) {

int num = ++serializationConstructorSymnum;

return "sun/reflect/GeneratedSerializationConstructorAccessor" + num;

} else {

int num = ++constructorSymnum;

return "sun/reflect/GeneratedConstructorAccessor" + num;

}

} else {

int num = ++methodSymnum;

return "sun/reflect/GeneratedMethodAccessor" + num;

}

} // sun.reflect.ClassDefiner

static Class<?> defineClass(String name, byte[] bytes, int off, int len,

final ClassLoader parentClassLoader)

{

ClassLoader newLoader = AccessController.doPrivileged(

new PrivilegedAction<ClassLoader>() {

public ClassLoader run() {

return new DelegatingClassLoader(parentClassLoader);

}

});

// 通过 DelegatingClassLoader 类加载器定义生成类

return unsafe.defineClass(name, bytes, off, len, newLoader, null);

}So, why do JVM reflection calls do this?

In fact, this is an optimization method of the JVM for reflection calls. It is explained in the documentation comments of sun.reflect.ReflectionFactory. This is an "Inflation" mechanism. The calling method of loading bytecode is called for the first time. It will be 3~4 times slower than the native call, but it will be more than 20 times faster than the native call in subsequent calls. In order to prevent the reflection call from affecting the startup speed of the application, the native method is used for the first few times of the reflection call. When the number of calls exceeds a certain number of calls, the bytecode method is used to improve the performance of the reflection call.

"Inflation" mechanism. Loading bytecodes to implement Method.invoke() and Constructor.newInstance() currently costs 3-4x more than an invocation via native code for the first invocation (though subsequent invocations have been benchmarked to be over 20x faster). Unfortunately this cost increases startup time for certain applications that use reflection intensively (but only once per class) to bootstrap themselves. To avoid this penalty we reuse the existing JVM entry points for the first few invocations of Methods and Constructors and then switch to the bytecode -based implementations.

So far, the direct cause of thread Block caused by class loading has finally been clarified, but this is not the root cause. An ERROR log is usually printed in the business code. Why does it cause the abnormal stack class to be parsed and loaded?

3.2.5 Why parse the exception stack?

The brief process of processing logs by AsyncAppender is shown in Figure 15 above. It maintains a BlockingQueue queue and an AsyncThread thread inside. When processing logs, the logs are first converted into Log4jLogEvent snapshots and then queued. At the same time, the AsyncThread thread is responsible for obtaining elements from the queue to process logs asynchronously. event.

// org.apache.logging.log4j.core.appender.AsyncAppender

@Override

public void append(final LogEvent logEvent) {

if (!isStarted()) {

throw new IllegalStateException("AsyncAppender " + getName() + " is not active");

}

if (!Constants.FORMAT_MESSAGES_IN_BACKGROUND) { // LOG4J2-898: user may choose

logEvent.getMessage().getFormattedMessage(); // LOG4J2-763: ask message to freeze parameters

}

// 创建 日志数据快照

final Log4jLogEvent memento = Log4jLogEvent.createMemento(logEvent, includeLocation);

// 放入 BlockingQueue 中

if (!transfer(memento)) {

if (blocking) {

// delegate to the event router (which may discard, enqueue and block, or log in current thread)

final EventRoute route = asyncQueueFullPolicy.getRoute(thread.getId(), memento.getLevel());

route.logMessage(this, memento);

} else {

error("Appender " + getName() + " is unable to write primary appenders. queue is full");

logToErrorAppenderIfNecessary(false, memento);

}

}

}When AsyncAppender generates a snapshot of LogEvent Log4jLogEvent, it will first convert the serialization of LogEvent to LogEventProxy. At this time, the processing of different types of LogEvent is slightly different:

- Log4jLogEvent type , first execute the Log4jLogEvent#getThrownProxy method to trigger the creation of a ThrowableProxy instance.

- MutableLogEvent type , first create a LogEventProxy instance, execute the MutableLogEvent#getThrownProxy method in the constructor, and trigger the creation of a ThrowableProxy instance.

In summary, regardless of whether the actual type of LogEvent is MutableLogEvent or Log4jLogEvent, it will eventually trigger the creation of a ThrowableProxy instance, and trigger parsing and loading of the exception stack class in the ThrowableProxy constructor.

// org.apache.logging.log4j.core.impl.Log4jLogEvent

// 生成Log4jLogEvent快照

public static Log4jLogEvent createMemento(final LogEvent event, final boolean includeLocation) {

// TODO implement Log4jLogEvent.createMemento()

return deserialize(serialize(event, includeLocation));

}

public static Serializable serialize(final LogEvent event, final boolean includeLocation) {

if (event instanceof Log4jLogEvent) {

// 确保 ThrowableProxy 已完成初始化

event.getThrownProxy(); // ensure ThrowableProxy is initialized

// 创建 LogEventProxy

return new LogEventProxy((Log4jLogEvent) event, includeLocation);

}

// 创建 LogEventProxy

return new LogEventProxy(event, includeLocation);

}

@Override

public ThrowableProxy getThrownProxy() {

if (thrownProxy == null && thrown != null) {

thrownProxy = new ThrowableProxy(thrown);

}

return thrownProxy;

}

public LogEventProxy(final LogEvent event, final boolean includeLocation) {

this.loggerFQCN = event.getLoggerFqcn();

this.marker = event.getMarker();

this.level = event.getLevel();

this.loggerName = event.getLoggerName();

final Message msg = event.getMessage();

this.message = msg instanceof ReusableMessage

? memento((ReusableMessage) msg)

: msg;

this.timeMillis = event.getTimeMillis();

this.thrown = event.getThrown();

// 创建 ThrownProxy 实例

this.thrownProxy = event.getThrownProxy();

this.contextData = memento(event.getContextData());

this.contextStack = event.getContextStack();

this.source = includeLocation ? event.getSource() : null;

this.threadId = event.getThreadId();

this.threadName = event.getThreadName();

this.threadPriority = event.getThreadPriority();

this.isLocationRequired = includeLocation;

this.isEndOfBatch = event.isEndOfBatch();

this.nanoTime = event.getNanoTime();

} // org.apache.logging.log4j.core.impl.MutableLogEvent

@Override

public ThrowableProxy getThrownProxy() {

if (thrownProxy == null && thrown != null) {

// 构造 ThrowableProxy 时打印异常堆栈

thrownProxy = new ThrowableProxy(thrown);

}

return thrownProxy;

}3.2.6 Problem Summary

When Log4j2 prints the exception log, AsyncAppender will first create a snapshot of the log event, and further trigger parsing and loading of the exception stack class. The JVM optimizes the performance of reflection calls by generating bytecodes, but the dynamically generated classes cannot be loaded by the WebAppClassLoader class loader. Therefore, when a large number of exception stacks containing reflection calls are output to the log, class loading is frequently triggered. The class loading process is synchronized and locked, and each load needs to read the file, which is slow, resulting in thread block.

3.3 Lambda expression leads to thread Block

3.3.1 Problem scene

After receiv

**粗体** _斜体_ [链接](http://example.com) `代码` - 列表 > 引用。你还可以使用@来通知其他用户。