The computing scheduling algorithm of Workflow

I've put this off for a long time, and today I finally wrote about the topic readers have asked for most: Workflow's computing scheduling, including its original scheduling algorithm and the related data structures.

PS: The original text was written in May 2022~

As an asynchronous scheduling programming paradigm, C++ Workflow breaks scheduling down into several layers:

- User code layer: provides structured concurrency at the task-flow level, including series, parallel, DAG, and composite tasks, to manage business logic and organize the dependencies between the things to be done;

- Resource management layer: coordinates and manages different resources, such as network, CPU, GPU, and file IO, to achieve the most efficient and general resource reuse at the lowest cost.

Today I will focus on Workflow's original computing scheduling algorithm, including the Executor module (only 200 lines of code) and its related modules: how they manage computing resources and coordinate different computing tasks as a whole, so that tasks are scheduled as evenly as possible no matter how long each one takes, using the most general solution available.

After reading it, you will also better understand the life cycle emphasized in the previous article, "A Logically Complete Thread Pool". Architecture design has always stressed that each module should itself be complete and self-consistent, because that makes it easier for the upper-level modules to evolve.

https://github.com/sogou/workflow/blob/master/src/kernel/Executor.cc

1. Problems faced by computing scheduling

No matter what kind of computing hardware is used, computing scheduling has to solve the following problems:

- Making full use of resources;

- Allocating resources among different categories of tasks;

- Managing priorities.

The first point is easy to understand. Taking the CPU as an example, whenever tasks arrive, we should keep as many CPU cores busy as possible.

For the second point, here is an example of different categories: each job consists of 3 steps, A->B->C, whose computing resource consumption is 1:2:3. The simple approach is to create 3 separate thread pools with a thread ratio of 1:2:3 (on a 24-core machine, we can configure the three pools with 4, 8, and 12 threads respectively).

However, the online environment is complex and changeable. If the resource consumption becomes 7:2:3, a fixed thread count is clearly inappropriate, and it is hard to adapt to such a change without modifying the code.

Another drawback is that if 100 A tasks are submitted in a batch, only the 4 threads of A's pool can work on them, so it is hard to make resources available the moment they are needed.

Is there a solution? A more complex approach is to introduce dynamic monitoring, but that is costly: any complex scheme brings new overhead of its own, which is wasteful in most cases.

Now for the third point, priority management. Take the same three tasks A->B->C: a new task D has been added, and we want D to be scheduled as soon as possible. The simple approach is usually to give every task a priority number, such as 1-32.

But this is not a long-term solution. The range of numbers is fixed, so the higher priorities always end up used up, and tasks are greedy by nature: as long as a task can claim the highest priority, everyone will eventually pile onto it in an endless race.

What we need is a solution that configures thread ratios flexibly, keeps the CPU fully scheduled, and handles priorities fairly.

2. Innovative data structure: multi-dimensional scheduling queue

Almost every solution in Workflow is general-purpose. For CPU computing, that means one global thread pool and one unified management method. When you use it, a computing task only needs to carry a queue name, and Workflow takes care of scheduling it as evenly as possible.

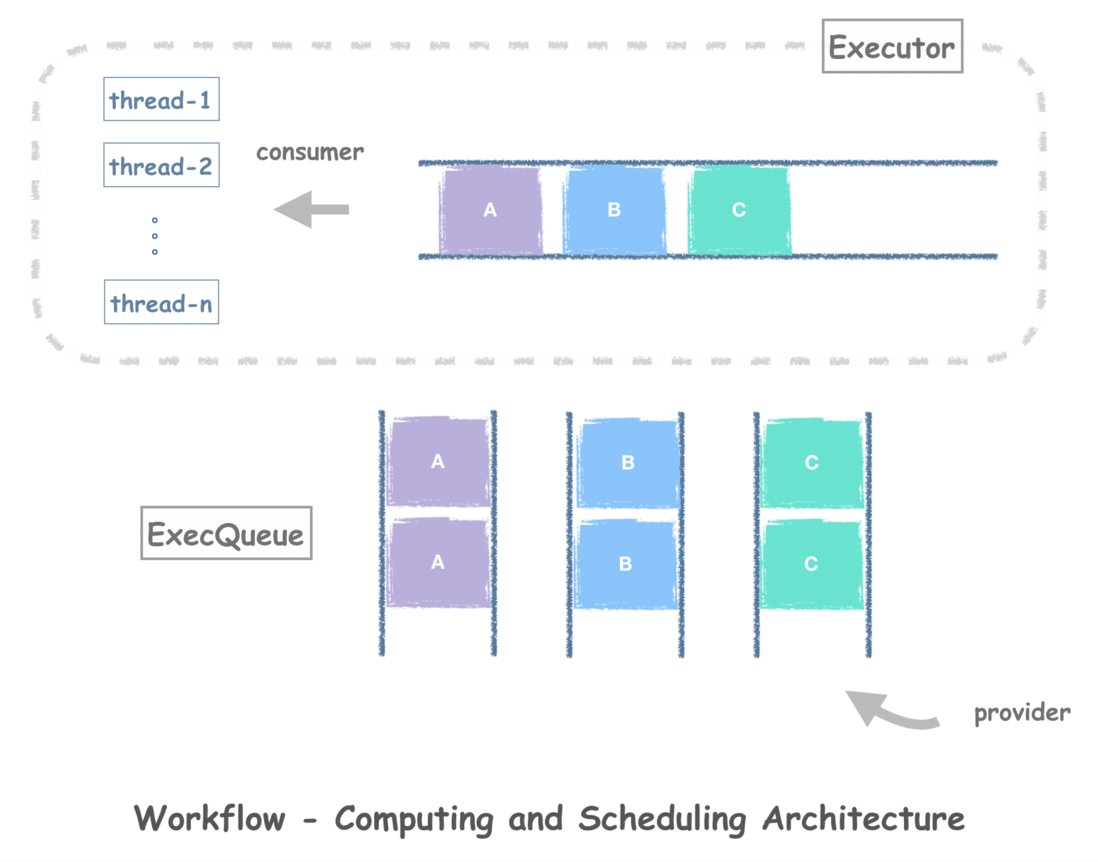

The basic schematic is as follows:

Inside the Executor there is a thread pool and a basic main queue. Each task also belongs to an ExecQueue; as the diagram shows, this structure is a group of sub-queues distinguished by name.

This combination of data structures can do the following three things:

- First, as long as there are idle computing threads, a task is scheduled immediately, and its queue name plays no role.

- Second, when the computing threads cannot pick up every task immediately, tasks with the same queue name are executed in FIFO order, and all queues are treated equally. For example, submit n tasks with queue name A back to back, then n tasks with queue name B back to back: no matter how much CPU time each task takes or how many computing threads there are, the two queues tend to finish at about the same time.

- Third, this rule extends to any number of queues and any order of submission.

Let's look at the algorithm itself.

First: the Executor's threads keep taking tasks from the Executor's internal main queue and executing them;

Second: after a thread takes a task from the main queue and before it executes that task, it also removes the task from its own sub-queue. Then, if the sub-queue still has tasks behind it, the next one is put into the main queue.

Third: when an external user submits a task to Workflow, Workflow first appends the task to the sub-queue with that name. If the task turns out to be the first one in the sub-queue (meaning the sub-queue was just empty), it is immediately submitted to the main queue as well.

The algorithm itself is quite simple. When submitting a task, you only need to give the scheduler a slight hint, namely the queue name (which corresponds to an ExecQueue of the Executor). There is no need to specify a priority, an estimated computation time, or similar information.

When we receive a sufficiently large and equal number of A, B, and C tasks, then no matter what order they arrive in, and regardless of the computation time of each task (note: each individual task, not each kind of task), the three sub-queues A, B, and C will finish computing at about the same time.

The length of the main queue never exceeds the number of sub-queues, and each sub-queue has at most one entry in the main queue at any time. This follows inevitably from the algorithm.

3. Source code analysis

We take the simplest task, WFGoTask, as an example, and trace the abstract scheduling algorithm down into the code, layer by layer from the outside in.

1) Usage example

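```cpp
#include "workflow/WFTaskFactory.h"

void func(int a);

// In use: "queue_name_test" is the computing queue name, 4 is passed to func
WFGoTask *task = WFTaskFactory::create_go_task("queue_name_test", func, 4);
task->start();
```

2) Inheritance relationship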

Readers familiar with Workflow tasks will know that every task in Workflow is built through derivation, with a layer of basic units in the middle: a task derives both from SubTask and from a concrete execution unit. That way the upper-level task can be chained into a task flow as a SubTask, and it can also do whatever the concrete execution unit does.

For computing scheduling, the concrete execution unit is naturally each computing task that a thread can schedule.

We can see that WFGoTask derives from ExecRequest, the basic unit of execution. (Looking back at the network layer, the basic unit there is CommRequest: one represents execution, the other networking. Symmetry is everywhere~)

Open src/kernel/ExecRequest.h and look only at what dispatch() does:

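```cpp
// Here we can see that the concrete execution unit is ExecSession, which deals with the Executor
class ExecRequest : public SubTask, public ExecSession
{
public:
	virtual void dispatch()
	{
		// Hand this session, together with its sub-queue, to the Executor
		if (this->executor->request(this, this->queue) < 0)
			...
	}
	...
};
```

What dispatch() does is submit the task itself, together with its own queue, to the Executor through the request() interface.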

3) The Executor's producer interface: request()

```cpp
int Executor::request(ExecSession *session, ExecQueue *queue)
{
	...
	// Append the task to the tail of its sub-queue
	list_add_tail(&entry->list, &queue->task_list);
	if (queue->task_list.next == &entry->list)
	{
		// The sub-queue was empty a moment ago, so submit this task to the Executor right away
		struct thrdpool_task task = {
			.routine	=	Executor::executor_thread_routine,
			.context	=	queue
		};
		if (thrdpool_schedule(&task, this->thrdpool) < 0) // submit
			...
	}

	return -!entry; // 0 if entry is non-null, -1 if it is null
}
```

4) The Executor's consumer interface: executor_thread_routine()

As we just saw, when a thread actually runs a task it calls executor_thread_routine(), and the context passed in is the sub-queue the task belongs to.

```cpp
void Executor::executor_thread_routine(void *context)
{
	ExecQueue *queue = (ExecQueue *)context;
	...
	entry = list_entry(queue->task_list.next, struct ExecTaskEntry, list);
	list_del(&entry->list); // remove the task about to run from its sub-queue
	...
	if (!list_empty(&queue->task_list))
	{
		// The sub-queue still has tasks (i.e. tasks with the same name): put it back into the main queue
		struct thrdpool_task task = {
			.routine	=	Executor::executor_thread_routine,
			.context	=	queue
		};
		__thrdpool_schedule(&task, entry, entry->thrdpool);
	}
	...
	session->execute(); // run the current task... running and running...
	session->handle(ES_STATE_FINISHED, 0); // finished: call handle(), which drives the rest of the task flow
}
```

4. Transformation case sharing: replacing the traditional pipeline model with task flows

Inside our company, the most classic transformation case is replacing the traditional pipeline model with Workflow's task scheduling. The pipeline model resembles the approach described at the beginning, where each module gets a number of threads according to its resource ratio; adjusting that ratio or adding another step/module is very inflexible.

With the Workflow solution, many of the drawbacks of the traditional approach can be avoided entirely.

In addition, real computing scheduling raises other problems that really test a framework's implementation details: whether error handling is done well, whether dependencies and cancellation are handled well, and whether life cycles are well managed. These are not the concern of the computing module itself, but Workflow's task-flow layer provides a good solution for them.

When the previous thread pool article was posted, some readers also asked:

Here is a brief answer to share:

5. Finally

To sum up the thoughts above:

When doing computing scheduling, we often forget that the fundamental problem to solve is A->B->C, not 1:2:3. If you constantly have to worry about other issues, it is usually because the scheduling scheme itself is not general enough. Only an architecture that is as general as possible and returns to the essence of the problem lets developers focus on improving their own modules without worrying about anything else. It also makes secondary development on the upper layers easier, opening up endless possibilities for developers.

This article also serves as a supplement to the use cases of the logically complete thread pool from the previous article. By pulling out upper-level code such as the Executor and analyzing it, you can truly see why it matters that the underlying thread pool lets a task itself schedule the next task.

There are many innovative practices in Workflow. My writing may be clumsy in places or my technical knowledge lacking, but technical articles are always written in the spirit of sharing new ideas and new practices~ I hope to hear different opinions from everyone; comments are welcome as issues on the project~~~
