2. "Graphic HTTP" - Historical Development of the HTTP Protocol (Key Point)

Key points

- The structure of request and response messages.

- The evolution of the HTTP protocol, covering the major feature changes of each HTTP version from the very beginning. (emphasis)

- A discussion of a few of the more common HTTP issues.

2.0 Introduction

This chapter is mostly my own expansion, because the book's treatment is quite shallow. The article is long, and even so it covers only part of the HTTP protocol, which is itself very complicated.

2.1 Request and Response Message Structure

The basic content of the request message:

The request is sent from the client to the server:

GET /index.htm HTTP/1.1

Host: hackr.jp

The basic content of the response message:

The server returns the result of processing the request:

The first line starts with the HTTP protocol version, followed by the status code 200 and the reason phrase.

The following lines are header fields, starting with the date and time at which the response was created.

HTTP/1.1 200 OK

Date: Tue, 10 Jul 2012 06:50:15 GMT

Content-Length: 362

Content-Type: text/html

<html> ……

2.2 HTTP Evolution History

| Protocol version | Core problem solved | Solution |
|---|---|---|
| 0.9 | HTML file transfer | Established the communication process of client request and server response |
| 1.0 | Different types of file transfers | Header fields |
| 1.1 | Creating/disconnecting TCP connections is expensive | Long (persistent) connections that are reused |
| 2 | Concurrency is limited | Binary framing |
| 3 | TCP packet loss blocking | Using the UDP protocol |
| SPDY | HTTP1.X request delay | Multiplexing |

2.2.1 Overview

Let's review the evolution of HTTP. The following is the general route of that evolution, without all the details.

Note: only representative parts of each protocol upgrade are covered here; for the complete picture, read the original RFCs.

- http0.9 : It has only the most basic HTTP connection model. It existed for a very short time and was quickly improved.

- http1.0: In version 1.0, each TCP connection can carry only one request. After the data is sent, the connection is closed, and requesting another resource means establishing a new TCP connection. (To ensure correctness and reliability, TCP needs a three-way handshake to open a connection and a four-way handshake to close it, so establishing connections is expensive.)

http1.1:

- Long connection : a new Connection field is added, with keep-alive as the default, so the TCP connection is not closed by default and can be reused by multiple requests;

- Pipeline : on the same TCP connection the client can send multiple requests without waiting, but the responses are still returned in the order of the requests, so the server can only send the next response after the previous one has been sent;

- host field : the Host field specifies the domain name of the server, so requests for different websites can be sent to the same server. This improves machine utilization and is also an important optimization;

HTTP/2:

- Binary format : 1.x is a text protocol, while 2.0 uses the binary frame as its basic unit, so it can be called a binary protocol. All transmitted information is divided into messages and frames and encoded in binary. Each frame carries data and an identifier, which makes network transmission efficient and flexible;

- Multiplexing : in version 2.0, multiple requests share one connection and can be in flight concurrently on a single TCP connection. The identifier in each binary frame is what distinguishes the requests and makes this multiplexing possible;

- Header compression : version 2.0 uses the HPACK algorithm to compress header data, reducing request size and improving efficiency. This is easy to understand: previously the same headers had to be sent with every request, which is very redundant. Sending header information as incremental updates effectively reduces the amount of header data transmitted;

- Server-side push : in version 2.0 the server may proactively send resources to the client, which can speed up loading on the client;

HTTP/3:

This version is an epoch-making change. HTTP/3 abandons TCP and uses the QUIC protocol, which is built on UDP, instead. It should be noted that QUIC was proposed by Google (as was SPDY, the basis of 2.0). QUIC originally stood for Quick UDP Internet Connections. Because UDP is used, packet loss and reduced reliability are possible at the network level. Of course, Google would not let that happen, so the QUIC they proposed provides its own reliability and also stays compatible with SSL/TLS, because HTTP/3 is deployed together with TLS 1.3.

For these reasons, the IETF, the body that develops network protocols, basically agreed with the QUIC proposal (too good to pass up for free), and so HTTP 3.0 arrived. But this was only the initial draft; in subsequent discussions the hope was that QUIC could be compatible with other transport protocols. The final layering is: IP / UDP / QUIC / HTTP. In addition, TLS has a detail optimization: the browser sends its key exchange material to the server in the very first message of the connection, which further shortens the handshake.

HTTP 3.0 has to start from the transport protocol because, although HTTP/2 solves the problem that HTTP could not be multiplexed, it does not solve the problems at the TCP level. The specific TCP problems are as follows:

- Head-of-line blocking: HTTP/2 runs multiple requests over one TCP connection. If a TCP segment with a lower sequence number is lost, then even if segments with higher sequence numbers have already been received, the application layer cannot read that data from the kernel. From the HTTP point of view, multiple requests are blocked and the page loads only part of its content;

- TCP and TLS handshake latency: the TCP and TLS handshakes together cost 3 RTTs of delay, which HTTP/3 finally compresses to 1 RTT (it is hard to imagine how fast that is);

- Connection migration requires reconnecting: when a mobile device switches from a 4G network to WiFi, the IP address or port changes. Because TCP identifies a connection by its four-tuple, the connection can only be dropped and re-established in the new network environment, which is expensive;

RTT: RTT is the abbreviation of Round Trip Time, which is simply the time one round of communication back and forth takes.

According to the official comparison of handshake round trips, the first connection ultimately needs only 1 RTT for the key exchange, and subsequent connections need 0 RTT!
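
The RTT figures above can be turned into concrete delays with a little arithmetic. The sketch below is only an illustration: the RTT counts are the ones quoted in this section, while the 50 ms round-trip time and the function name `handshake_delay_ms` are assumptions made for the example.

```python
# Handshake round trips as quoted in this section.
HANDSHAKE_RTTS = {
    "TCP + TLS handshake": 3,
    "HTTP/3 first connection": 1,
    "HTTP/3 later connections": 0,
}


def handshake_delay_ms(rtts: int, rtt_ms: float) -> float:
    """Time spent on handshakes before the first request can be sent."""
    return rtts * rtt_ms


if __name__ == "__main__":
    ASSUMED_RTT_MS = 50.0  # assumed example value, not a measurement
    for name, rtts in HANDSHAKE_RTTS.items():
        delay = handshake_delay_ms(rtts, ASSUMED_RTT_MS)
        print(f"{name}: {delay:.0f} ms before the first request")
```

On a link with a longer round-trip time, such as a congested mobile network, the absolute saving grows proportionally.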

2.2.2 HTTP 0.9

This version is little more than a rough draft of a protocol, but it already has the most primitive model of HTTP: only the GET command, no header information, and a very simple purpose. There are no multiple data formats, only plain text.

In addition, the server closes the TCP connection immediately after sending the requested content. At this stage one TCP connection can carry only one HTTP request, in a one request, one response pattern.

Of course, all of this is upgraded and improved in later versions.

2.2.3 HTTP 1.0

Original text of the protocol: https://datatracker.ietf.org/doc/html/rfc1945

Obviously HTTP 0.9 had many flaws and could not meet the needs of network transmission. Browsers by then needed to transfer more complex data such as images, scripts, audio and video.

In 1996, HTTP underwent a major upgrade. The main updates are as follows:

- More request methods were added: POST, HEAD

- Header fields were added to support more scenarios

- The concept of a protocol version number was introduced for the first time

- Transmission is no longer limited to text data

- Response status codes were added

There is a passage at the beginning of the original text of the HTTP1.0 protocol:

Original text:

Status of This Memo:

This memo provides information for the Internet community. This memo

does not specify an Internet standard of any kind. Distribution of

this memo is unlimited.

The protocol uses the word memo, meaning memorandum. In other words, although it contains many rules that read like a standard, it is an informational document and does not define a formal standard. In addition, it was drafted at a laboratory of MIT, so it can be regarded as an interim proposal reached after discussion.

Introduction to the main changes of HTTP 1.0

Bearing in mind that this is a memorandum, let's introduce some important concepts of the protocol.

HTTP 1.0 defines a stateless, connectionless application-layer protocol, and the memo defines the HTTP protocol itself.

Stateless, connectionless definition: HTTP1.0 specifies that only a short-lived connection is kept between the server and the client. Each request initiates a new TCP connection, which is closed as soon as the request completes (connectionless), and the server keeps no record of past requests (stateless).

This creates a problem: loading one page usually takes multiple HTTP requests, so establishing a TCP connection for each request is very inefficient. In addition, there are two more serious problems: head-of-line blocking and the inability to reuse connections.

Head-of-line blocking: requests are handled in a queue-like manner, so if the previous request has not arrived or has been lost, the next request has to wait until the previous one completes or is retransmitted.

Unable to reuse connections: TCP connection resources are limited. At the same time, because of TCP's own regulation (sliding window and congestion control), every new TCP connection goes through a slow-start phase to avoid network congestion.

RTT time calculation: the TCP three-way handshake takes at least 1.5 RTTs in total. Note that this is for plain HTTP connections, not HTTPS connections.

Sliding window: put simply, TCP provides a mechanism that lets the "sender" control the amount of data it sends according to the actual receiving capacity of the "receiver".
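
To get a feel for why one connection per request is inefficient, the cost can be counted in round trips. This is a rough back-of-the-envelope sketch: the 1.5 RTT handshake is the figure quoted above, while the assumption of one RTT per request/response exchange and the function names are mine. Slow start and connection teardown are ignored.

```python
TCP_HANDSHAKE_RTTS = 1.5  # figure quoted in the text above
REQUEST_RTTS = 1.0        # assumption: one round trip per request/response


def rtts_new_connection_each_time(n_requests: int) -> float:
    """HTTP/1.0 style: every request pays for its own TCP handshake."""
    return n_requests * (TCP_HANDSHAKE_RTTS + REQUEST_RTTS)


def rtts_reused_connection(n_requests: int) -> float:
    """One handshake, then all requests go sequentially over that connection."""
    return TCP_HANDSHAKE_RTTS + n_requests * REQUEST_RTTS


if __name__ == "__main__":
    for n in (1, 10, 50):
        print(n, rtts_new_connection_each_time(n), rtts_reused_connection(n))
```

Under these assumptions, a page with 10 resources costs 25 RTTs with a new connection per request and 11.5 RTTs over a single reused connection, which is the gap that HTTP1.1 long connections are meant to close.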

2.2.4 HTTP1.1

The upgrade to HTTP 1.1 changed a lot. The main changes address the cost of establishing connections and transmitting data. HTTP 1.1 introduces the following improvements:

- Long connection: that is, the Keep-alive header field, so that TCP connections are not closed by default and one TCP connection can carry multiple HTTP requests;

- Concurrent connections: multiple long connections are allowed per domain name (note that requests still block once the upper limit is exceeded);

- Pipeline mechanism: one TCP connection can send multiple requests at the same time (but the practical benefit is marginal and it increases server pressure, so it is usually disabled);

- More methods were added: PUT, DELETE, OPTIONS and others (PATCH was added later, in RFC 5789);

- HTTP1.0 already has cache-related fields (such as If-Modified-Since and Expires), while HTTP1.1 introduces more, such as the entity tag (ETag), If-Unmodified-Since, If-Match and If-None-Match, along with more cache strategies through cache headers;

- Data can be transmitted in chunks (Chunked), which is important for transferring large amounts of data;

- The Host header is mandatory, which paves the way for virtual hosting on the Internet;

- The Range field is introduced in the request header to support resumable transfers;
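
Of the features listed above, chunked transfer is the easiest to show in a few lines. The following is a minimal sketch of the chunked wire format (hexadecimal size line, CRLF, data, CRLF, terminated by a zero-size chunk). The function names are made up for this note, and chunk extensions and trailers are deliberately not handled.

```python
def encode_chunked(chunks: list[bytes]) -> bytes:
    """Encode byte chunks using HTTP/1.1 chunked transfer coding."""
    out = bytearray()
    for chunk in chunks:
        if not chunk:
            continue  # a zero-length chunk would end the body early
        out += f"{len(chunk):X}".encode("ascii") + b"\r\n" + chunk + b"\r\n"
    out += b"0\r\n\r\n"  # last chunk, no trailer fields
    return bytes(out)


def decode_chunked(data: bytes) -> bytes:
    """Decode a chunked body; chunk extensions are skipped, trailers ignored."""
    body = bytearray()
    pos = 0
    while True:
        line_end = data.index(b"\r\n", pos)
        size = int(data[pos:line_end].split(b";", 1)[0], 16)
        pos = line_end + 2
        if size == 0:
            break
        body += data[pos:pos + size]
        pos += size + 2  # skip the chunk data and its trailing CRLF
    return bytes(body)


if __name__ == "__main__":
    wire = encode_chunked([b"Hello, ", b"world"])
    print(wire)
    print(decode_chunked(wire))
```

Because each chunk announces its own size, the sender does not need to know the total Content-Length before it starts transmitting.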

The following are my notes on the second chapter of the book. The book was written when HTTP1.1 was in its heyday, so these notes largely correspond to notable features of the HTTP1.1 protocol.

stateless protocol

The HTTP protocol itself has no ability to remember previously sent requests or responses. In other words, HTTP only guarantees that protocol messages are formatted according to its own rules; transmission and network communication are left to the lower-layer protocols.

HTTP is designed to be this simple: in essence, it ignores everything outside the protocol itself in order to transfer data quickly. Because HTTP is so simple and blunt, however, it needs many auxiliary components to deliver the experience expected on the Web, such as staying logged in, saving recent browsing information and remembering passwords, all of which rely on cookies and sessions.

Although HTTP/1.1 is stateless, cookies and sessions were introduced alongside it to help HTTP store state and perform similar operations.

request resource location

Most of the time, HTTP accesses resources through the domain name in the URL. Locating the real service behind a URL requires the cooperation of DNS. What DNS is will not be repeated here.

If the request is addressed to the server itself rather than to a specific resource, an asterisk (*) can be used in place of the request URI, for example OPTIONS * HTTP/1.1.

request method

In practice, the most used methods are GET and POST.

GET : usually used for resources that can be accessed directly through the URL without server-side verification. A large number of request parameters are often carried in the URL, but they normally do not involve sensitive information, so putting them in the URL is convenient and simple.

POST : usually used for parameters submitted by a form, which the server has to intercept and verify, for example when downloading files or accessing sensitive resources. POST is often preferred over GET for such data because the parameters travel in the request body rather than in the URL, so they do not show up in the address bar or in typical access logs. Note that this is not encryption; real protection of the data still requires HTTPS.

persistent connection

The so-called persistent connection has historical reasons behind it. In the earliest days of HTTP 1.0, each request and response involved a very small resource, so after a request completed the client could simply disconnect from the server and connect again the next time it needed something.

However, as the Internet developed, resources grew larger and larger, and the demands and challenges placed on the network grew with them.

In HTTP/1.1, all connections are persistent by default, in order to reduce how often the client and server have to set up and tear down connections.

Persistent connections only work if both the client and the server support them.

Pipeline

Note that full-duplex HTTP in the true sense is only achieved in HTTP/2, and the core of its implementation is multiplexing.

Pipelining can be seen as an enhancement that lets half-duplex HTTP1.1 approximate full-duplex behavior.

Full-duplex protocol: both ends of the HTTP connection can send data to the other party without waiting for the other party's response, so multiple requests and responses can be handled at the same time.

HTTP/1.1 allows multiple HTTP requests to be written to one socket without waiting for the corresponding responses (a reminder that pipelining also requires support from both sides).

It should be noted that this essentially means sending several requests back to back over one TCP connection and handing them to the server for processing. The client can then do other things, either waiting for the server or accessing other resources.

The client sends the requests over one TCP connection in FIFO order. Although the server can process the queued requests, it must send the responses back one by one in the same FIFO order, so a slow response holds up every response behind it. In other words, pipelining does not fundamentally solve the blocking problem.

Pipelining is convenient, but its restrictions outweigh its benefits, which makes it rather pointless in practice. As a result it never became popular, few servers use it, and most clients disable pipelining so that requests are not stuck behind one that is blocked and not responding.

Pipelining Summary

- In effect, pipelining turns requests that used to be blocked on the client side into requests blocked on the server side.

- Pipelined requests are usually GET and HEAD requests; POST and PUT are generally not pipelined. Pipelining can only be used on existing keep-alive connections.

- Pipelining was an attempt under HTTP1.1 to work around the fact that servers could not handle parallel requests well, but the solution was not ideal and was eventually disabled by the major browsers.

- The ordering of the FIFO queue and the ordering of TCP apply at different levels. FIFO ordering is at the HTTP level: responses must be returned in exactly the order in which the requests were queued. TCP ordering is at the byte-stream level: segments may arrive out of order on the network and are reassembled in order before being handed to the application.

- Because pipelining shifts the burden to the server, from the client's point of view it looks like full-duplex communication. In reality this is only pseudo full-duplex communication.
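
The blocking behaviour summarized above can be illustrated with a toy model. The sketch assumes the server starts working on all queued requests at time 0 and that transfer time is negligible. Both are simplifying assumptions, and the function names are invented for this note.

```python
from itertools import accumulate


def pipelined_completion(processing_times: list[float]) -> list[float]:
    """Responses must leave in request order, so response i cannot be sent
    before every earlier response has been sent."""
    return list(accumulate(processing_times, max))


def multiplexed_completion(processing_times: list[float]) -> list[float]:
    """With independent streams, each response is sent as soon as it is ready."""
    return list(processing_times)


if __name__ == "__main__":
    times = [3.0, 0.1, 0.2]  # the first request is slow
    print("pipelined:  ", pipelined_completion(times))
    print("multiplexed:", multiplexed_completion(times))
```

With one slow request at the head of the queue, the two fast responses are held back until 3.0 in the pipelined case, while under multiplexing they finish at 0.1 and 0.2.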

cookies

Cookies are not the focus of this book. If you need to learn about them, just look them up; there is plenty of material available.

2.2.5 HTTP/2

Original text of the protocol: https://www.ietf.org/archive/id/draft-ietf-httpbis-http2bis-07.html

The protocol changes in HTTP/2 are relatively large. It grew out of the following three proposals:

- SPDY: proposed by Google. It was originally meant to be an independent protocol, but in the end the engineers behind SPDY took part in the HTTP/2 work, so Google Chrome fully supports HTTP/2 and Google gave up the idea of SPDY as a protocol of its own.

- HTTP Speed + Mobility: proposed by Microsoft to improve the speed and performance of mobile communication. It builds on Google's SPDY and on WebSocket.

- Network-Friendly HTTP Upgrade: improves HTTP performance in mobile communication.

Judging by influence, Google's proposal clearly had the most. HTTP 3.0 was also initiated by Google, so Google evidently has a very large say in how the HTTP protocol is standardized.

Next, let's look at the most important one, SPDY. Google is a geek company, and SPDY can be seen as a "toy" that Google built between the official releases of HTTP1.1 and HTTP/2 to improve the transmission efficiency of HTTP. It focuses on the request latency problem and the security problem of HTTP1.X:

- Reduce latency (multiplexing): multiplexing is used to reduce high latency. It uses Streams to let multiple requests share one TCP connection, which addresses HOL blocking (head-of-line blocking) and improves bandwidth utilization.

  - In HTTP1.1, http pipelining over keep-alive connections is essentially multiplexing too, but the concrete implementation is not ideal.

  - All major browsers disable pipelining by default, also because of the HOL blocking problem.

- Server-side push : HTTP1.X is half-duplex, with every exchange initiated by the client. In 2.0 the server can genuinely initiate transmissions, making communication full-duplex. WebSocket also shines in this full-duplex area.

- Request priority : this is a safety net for multiplexing, because with many streams in flight a single important request can easily get stuck behind the others, much like the pipelining problem described above. SPDY sets priorities so that important requests are served first. For example, the page content is delivered first, and the CSS and scripts that style the page and make it interactive are loaded afterwards, which reduces the odds that the user closes the page while waiting.

- Header compression : HTTP1.X headers are often redundant, so 2.0 selects a suitable compression algorithm and compresses headers automatically to speed up requests and responses.

- HTTPS-based encrypted protocol transmission : HTTP1.X does not add SSL encryption by itself, whereas 2.0 runs HTTP over SSL, improving the security and reliability of transmission.

Most of these contents are adopted by HTTP/2 in the follow-up. Let's take a look at the specific implementation details of HTTP/2.

HTTP/2 specific implementation (emphasis)

Of course, this part only covers some key upgrades in the protocol. For the details, see the HTTP/2 entry under "References".

Binary frame (Stream)

HTTP/2 transmits data as binary frames instead of ASCII text in order to improve transmission efficiency. Requests sent by the client are encapsulated into numbered binary frames and then sent to the server for processing.

HTTP/2 completes multiple requests over one TCP connection. The server receives the frames, uses the stream number to reassemble them into complete requests, and splits its responses into binary frames according to the same rules. With data segmented by binary framing in this way, the client and server need only one connection to communicate; that is, the multiple Streams described by SPDY are carried over a single TCP connection.

Binary framing divides data into smaller messages and frames, which are encoded in binary. The header information of HTTP1.1 is encapsulated into HEADERS frames, and the request body is encapsulated into DATA frames.

The purpose of binary framing is compatibility: a binary framing layer is added between the application layer and the transport layer, so that HTTP1.X can be upgraded more easily without conflicting with earlier protocols.

The relationship between frames, messages, and Streams

- Frame: the smallest unit within a stream.

- Message: corresponds to one request or response in HTTP1.X.

- Stream: contains one or more messages.

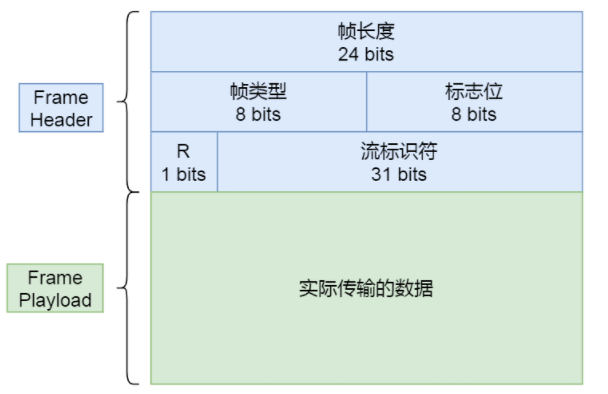

Binary Framing Structure

A binary frame consists of two parts: the frame header and the frame payload. The frame header is only 9 bytes. Note that R is a reserved bit. So the whole calculation is:

3-byte frame length + 1-byte frame type + 1-byte flags + 1 reserved bit (R) + 31-bit stream identifier = 9 bytes.

Frame length : the length of the frame payload, a 24-bit (3-byte) value. The default maximum is 2^14 (16,384); larger values up to 2^24 - 1 (16,777,215) are allowed only if the receiver has set SETTINGS_MAX_FRAME_SIZE accordingly.

Frame Type : identifies the kind of frame, distinguishing data frames from control frames.

Flags : carry simple control information specific to the frame type, for example whether the stream ends or whether priority information is present.

Stream identifier : indicates which stream the frame belongs to. The upper limit is 2^31 - 1. The receiver reassembles the message according to the stream ID, and the frames of one stream must be in order. In addition, streams initiated by the client use odd identifiers and streams initiated by the server use even ones. Concurrent streams are told apart by these identifiers, which is what makes multiplexing possible.

R : a 1-bit reserved field, not yet defined; it must be set to 0x0 when sending.

Frame payload : the actual content being transmitted; its structure is determined by the frame type.

If you want to know more details, you can refer to the official introduction in the "References" section and use it in conjunction with WireShark to capture packets. This reading note cannot be comprehensive and in-depth.

Finally, as a supplement on frame types: the frame type field defines 10 kinds of frames.
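
The 9-byte layout described above is easy to check in code. The following is a small sketch of packing and parsing a frame header. The type codes are the ten defined by the HTTP/2 specification, while the helper names `build_frame_header` and `parse_frame_header` are made up for this note.

```python
from typing import NamedTuple

# The ten frame types defined by HTTP/2 and their type codes.
FRAME_TYPES = {
    0x0: "DATA", 0x1: "HEADERS", 0x2: "PRIORITY", 0x3: "RST_STREAM",
    0x4: "SETTINGS", 0x5: "PUSH_PROMISE", 0x6: "PING", 0x7: "GOAWAY",
    0x8: "WINDOW_UPDATE", 0x9: "CONTINUATION",
}


class FrameHeader(NamedTuple):
    length: int
    type_name: str
    flags: int
    stream_id: int


def build_frame_header(length: int, frame_type: int, flags: int, stream_id: int) -> bytes:
    """Pack 24-bit length, 8-bit type, 8-bit flags, R bit (0) + 31-bit stream ID."""
    return (
        length.to_bytes(3, "big")
        + bytes([frame_type, flags])
        + (stream_id & 0x7FFFFFFF).to_bytes(4, "big")
    )


def parse_frame_header(header: bytes) -> FrameHeader:
    if len(header) != 9:
        raise ValueError("an HTTP/2 frame header is exactly 9 bytes")
    length = int.from_bytes(header[0:3], "big")
    frame_type, flags = header[3], header[4]
    stream_id = int.from_bytes(header[5:9], "big") & 0x7FFFFFFF  # drop the R bit
    name = FRAME_TYPES.get(frame_type, f"UNKNOWN(0x{frame_type:02x})")
    return FrameHeader(length, name, flags, stream_id)


if __name__ == "__main__":
    print(parse_frame_header(build_frame_header(4, 0x8, 0, 1)))
```

Comparing the parsed values with a WireShark capture of real HTTP/2 traffic is a quick way to confirm the layout.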

Multiplexing

With the binary frame structure covered, let's look at how multiplexing works. First, we need to explain the problem that existed in HTTP1.1:

Concurrent requests to the same domain name are limited, and requests beyond the limit are blocked automatically.

The traditional workaround is to spread resources across multiple domains and servers so that each resource is loaded from a specific server, improving the response speed of the whole page. One example is using CDNs on multiple domain names to accelerate access.

With HTTP/2, binary frames replace this workaround: a single HTTP/2 connection can multiplex many requests and responses, which is like packing many takeaway orders into one package and delivering them to you together.

In addition, flow control on streams means that multiple streams can run in parallel without relying too heavily on TCP: communication is broken down into frames, each frame belongs to a message, and messages can therefore be exchanged in parallel.
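
How a receiver turns interleaved frames back into separate messages can be sketched in a few lines. This is a simplified illustration: frames are modelled as `(stream_id, payload)` tuples, which is an assumption of the example, and real frames also carry a type and flags.

```python
from collections import defaultdict


def reassemble_streams(frames: list[tuple[int, bytes]]) -> dict[int, bytes]:
    """Group interleaved frames by stream ID, preserving order within each stream."""
    streams: dict[int, bytearray] = defaultdict(bytearray)
    for stream_id, payload in frames:
        streams[stream_id] += payload
    return {stream_id: bytes(buf) for stream_id, buf in streams.items()}


if __name__ == "__main__":
    # Two responses interleaved on one connection (streams 1 and 3).
    wire = [(1, b"<ht"), (3, b"bo"), (1, b"ml>"), (3, b"dy{}")]
    print(reassemble_streams(wire))
```

Frames from different streams may interleave freely, but the frames of one stream must stay in order, which is why a simple append per stream ID is enough here.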

Header Compression

HTTP1.X does not support header compression. With many requests per page, the repeated headers consume bandwidth and cause unnecessary waste.

SPDY addressed this by compressing headers with DEFLATE. The design is very effective, but it turned out to be vulnerable to the CRIME attack, which leaks information.

HTTP/2 defines HPACK. The HPACK algorithm uses static Huffman coding to encode header strings and reduce transmission size, and it requires the client and the server to each maintain a header table. The table stores key-value pairs that have already been sent, so a repeated header can be looked up in the table instead of being sent again. This reduces redundant data, and subsequent requests send only what has not been sent before.

The HPACK compression algorithm mainly consists of two modules, the index table and Huffman coding. The index table is divided into a static table and a dynamic table. The static table predefines 61 header key/value entries. The dynamic table is a first-in, first-out queue that is initially empty: each newly decoded header is added at the head of the queue, and removal starts from the tail.

Note that, to keep memory from growing without bound and crashing the endpoint, the dynamic table evicts its oldest entries once a certain size is exceeded.
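
The static/dynamic table behaviour described above can be sketched as follows. Only the first eight of the 61 static entries are listed, the class and function names are made up for this note, and the per-entry overhead of 32 bytes and the default size of 4096 follow the HPACK specification (RFC 7541). Treat it as an illustration of the indexing and eviction rules rather than a full HPACK implementation.

```python
from collections import deque

# First few entries of the 61-entry static table; index 1 is the first one.
STATIC_TABLE = [
    (":authority", ""), (":method", "GET"), (":method", "POST"),
    (":path", "/"), (":path", "/index.html"),
    (":scheme", "http"), (":scheme", "https"), (":status", "200"),
]
STATIC_TABLE_LENGTH = 61  # dynamic entries are therefore numbered from 62


class DynamicTable:
    def __init__(self, max_size: int = 4096):
        self.max_size = max_size
        self.entries: deque[tuple[str, str]] = deque()  # newest on the left
        self.size = 0

    @staticmethod
    def entry_size(name: str, value: str) -> int:
        return len(name.encode()) + len(value.encode()) + 32

    def add(self, name: str, value: str) -> None:
        self.entries.appendleft((name, value))
        self.size += self.entry_size(name, value)
        while self.size > self.max_size and self.entries:
            old = self.entries.pop()  # evict from the tail (oldest entry)
            self.size -= self.entry_size(*old)

    def index_of(self, name: str, value: str):
        for offset, entry in enumerate(self.entries):
            if entry == (name, value):
                return STATIC_TABLE_LENGTH + 1 + offset
        return None


def lookup(table: DynamicTable, name: str, value: str):
    """Return the index to send instead of the literal header, or None."""
    for index, entry in enumerate(STATIC_TABLE, start=1):
        if entry == (name, value):
            return index
    return table.index_of(name, value)
```

A header that gets an index back from `lookup` can be sent as a small integer. A header that returns `None` has to be sent literally once and is then added to the dynamic table for later requests.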

HPACK algorithm

The HPACK algorithm applies and builds on Huffman coding. A classic use of Huffman coding is ZIP compression, so although we may not know it, we probably use it every day.

The idea of HPACK is that the client and the server each maintain an index table and cache header fields in it, so that less header data has to be transmitted on the stream, which improves transmission efficiency.

The three main components of HPACK are as follows:

- Static table: a table defined by HTTP/2 for strings and fields that commonly appear in headers, containing 61 basic header entries.

- Dynamic table: the static table has only 61 entries, so the dynamic table stores fields that are not in it, with indexes starting from 62. When a field is transmitted for the first time, it is given an index and its string is Huffman-encoded into binary and sent to the peer. The second time, the index in the dynamic table can be sent instead, which avoids transmitting redundant data.

- Huffman coding: This algorithm is very important and has had a major impact on the development of the modern Internet.

Huffman coding : an entropy coding (weight coding) algorithm for lossless data compression, invented in 1952 by the American computer scientist David Albert Huffman. Huffman proposed a method for constructing the optimal binary tree, that is, a method for constructing the optimal binary prefix code, so the optimal binary tree is also called the Huffman tree, and the corresponding optimal binary prefix code is also called Huffman coding.
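
To make the idea of an optimal prefix code concrete, here is a minimal sketch of classic Huffman coding that builds a code table from symbol frequencies. Note the difference from HPACK: HPACK does not build a tree per message but uses a fixed, predefined Huffman code table. The function name `huffman_codes` is made up for this note.

```python
import heapq
from collections import Counter


def huffman_codes(text: str) -> dict[str, str]:
    """Build a prefix-free code in which frequent symbols get shorter codes."""
    freq = Counter(text)
    if not freq:
        return {}
    if len(freq) == 1:
        return {next(iter(freq)): "0"}
    # Heap items: (weight, unique tie-breaker, {symbol: code built so far}).
    heap = [(weight, i, {sym: ""}) for i, (sym, weight) in enumerate(freq.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        w1, _, left = heapq.heappop(heap)   # two lightest subtrees
        w2, _, right = heapq.heappop(heap)
        merged = {sym: "0" + code for sym, code in left.items()}
        merged.update({sym: "1" + code for sym, code in right.items()})
        heapq.heappush(heap, (w1 + w2, next_id, merged))
        next_id += 1
    return heap[0][2]


if __name__ == "__main__":
    codes = huffman_codes("aaaabbc")
    print(codes)
    print("".join(codes[ch] for ch in "aaaabbc"))
```

For the input "aaaabbc" the most frequent symbol gets a 1-bit code and the other two get 2-bit codes, so the 7 characters fit in 10 bits instead of 56.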

The following is the original paper corresponding to Huffman coding:

Huffman coding original paper:

Link: https://pan.baidu.com/s/1r_yOVytVXb-zlfZ6csUb2A?pwd=694k

Extraction code: 694k

In addition, here is a fairly popular video about Huffman coding. I strongly recommend watching it more than once; it helps you quickly understand what Huffman coding is. Of course, this assumes you are able to access YouTube.

https://www.youtube.com/watch?v=Jrje7ep5Ff8&t=29s

request priority

Request priority does not really exist in the current HTTP/2 specification. The earlier RFC7540 defined a set of priority signals, but the scheme was too complicated and never became widely used. The information in it is still worth consulting, though.

The revised HTTP/2 text drops all of the RFC7540 priority signaling; the descriptions have been removed and remain only in the original RFC.

HTTP/2 uses multiplexing, so important resources need to be prioritized and loaded first. In practice, however, prioritization was implemented unevenly, and many servers do not actually honor the client's priority requests.

Finally, there is a fundamental drawback: the TCP layer cannot be parallelized, so using priorities over a single connection may even perform worse than HTTP1.X.

flow control

Flow control deals with competition between data streams. It should be noted that HTTP/2 applies flow control only to DATA frames, and it is implemented with the WINDOW_UPDATE frame.

Note that a WINDOW_UPDATE frame whose length is not 4 octets must be treated as an error of type FRAME_SIZE_ERROR.

PS: In the design below, the payload is a reserved bit followed by a 31-bit unsigned integer, which states how many octets the sender may transmit in addition to the current flow-control window. The legal range is therefore 1 to 2^31 - 1 (2,147,483,647) octets.

WINDOW_UPDATE Frame {

Length (24) = 0x04,

Type (8) = 0x08,

Unused Flags (8),

Reserved (1),

Stream Identifier (31),

Reserved (1),

Window Size Increment (31),

}

Flow control has the following salient features:

- Flow control is hop-by-hop: it operates between the two endpoints of each connection, including any proxy servers in the middle, rather than end-to-end;

- It is credit-based: the receiver announces how many octets it is prepared to receive on each stream and on the connection as a whole. The WINDOW_UPDATE frame does not define any flags;

- Flow control is directional: the receiver is in charge and can set the window size for each stream;

- WINDOW_UPDATE can be sent by a peer that has already sent a frame with the END_STREAM flag set. This means the receiver may get a WINDOW_UPDATE frame on a stream that is in the half-closed or closed state, and it must not treat this as an error;

- The receiver must treat a WINDOW_UPDATE frame with a flow-control window increment of 0 as a stream error of type PROTOCOL_ERROR;
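
The rules above can be sketched as a small encoder, parser and credit counter for the WINDOW_UPDATE payload. The exception classes and the `SendWindow` class are invented for this note, and the default initial window of 65,535 octets is the value given in the HTTP/2 specification. The sketch only covers the length check, the zero-increment check and the basic credit mechanism.

```python
MAX_INCREMENT = 2**31 - 1


class FrameSizeError(ValueError):
    """Stands in for the FRAME_SIZE_ERROR error code."""


class ProtocolError(ValueError):
    """Stands in for the PROTOCOL_ERROR error code."""


def encode_window_update_payload(increment: int) -> bytes:
    if not 1 <= increment <= MAX_INCREMENT:
        raise ValueError("increment must be between 1 and 2^31 - 1")
    return increment.to_bytes(4, "big")  # the reserved top bit stays 0


def parse_window_update_payload(payload: bytes) -> int:
    if len(payload) != 4:
        raise FrameSizeError("WINDOW_UPDATE payload must be 4 octets")
    increment = int.from_bytes(payload, "big") & 0x7FFFFFFF  # ignore reserved bit
    if increment == 0:
        raise ProtocolError("window size increment of 0")
    return increment


class SendWindow:
    """Sender-side view of one flow-control window."""

    def __init__(self, initial: int = 65_535):
        self.available = initial

    def consume(self, n_octets: int) -> None:
        if n_octets > self.available:
            raise ValueError("sending would exceed the flow-control window")
        self.available -= n_octets

    def credit(self, payload: bytes) -> None:
        self.available += parse_window_update_payload(payload)
```

A sender calls `consume` before each DATA frame and `credit` whenever a WINDOW_UPDATE arrives. When the window reaches 0 it has to stop sending DATA on that stream until more credit is granted.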

server push

Server push is intended to solve the disadvantage that requests are always initiated from the client in HTTP1.X. The purpose of server push is to reduce the waiting and delay of the client. But in practice server push is difficult to apply because it means predicting user behavior. Server-side push consists of push request and push response parts.

push request

The push request is transmitted in a PUSH_PROMISE frame. This frame contains a field block that carries control information and a complete set of request header fields, but it cannot carry message content, because the frame structure has no place for it. A promised request is always explicitly associated with a request from the client, and the request pushed by the server is therefore also called a "promised request".

The header fields in the PUSH_PROMISE frame and any CONTINUATION frames that follow it must be a valid and complete set of request header fields, and the server must put a safe and cacheable method in the ":method" pseudo-header field. If the method received by the client is not safe, the client must respond to the PUSH_PROMISE frame with an error. This design is a bit like two secret agents exchanging code words: if the code word is wrong, you break off contact with the other party immediately.

PUSH_PROMISE can be sent on any stream initiated by the client, on the condition that the stream is in the "half-closed" or "open" state from the server's point of view. It must not be interleaved with the HEADERS and CONTINUATION frames that make up a single field block.

A PUSH_PROMISE frame can only be sent by the server, because it is designed for server push; a push from the client is "illegal".

PUSH_PROMISE frame structure:

Again, the promised stream identifier is one reserved bit plus a 31-bit unsigned integer. What is the payload? For a frame, it is everything that follows the frame header. The word also replaces the term "entity" from the HTTP1.1 protocol, where it can simply be regarded as the body of the message.

The following is the corresponding frame layout:

PUSH_PROMISE frame definition

PUSH_PROMISE Frame {

Length (24),

Type (8) = 0x05,

Unused Flags (4),

PADDED Flag (1),

END_HEADERS Flag (1),

Unused Flags (2),

Reserved (1),

Stream Identifier (31),

[Pad Length (8)],

Reserved (1),

Promised Stream ID (31),

Field Block Fragment (..),

Padding (..2040),

}

CONTINUATION frame: used to continue a field block that does not fit into the preceding frame. Note that this frame is not specific to server push.

CONTINUATION Frame {

Length (24),

Type (8) = 0x09,

Unused Flags (5),

END_HEADERS Flag (1),

Unused Flags (2),

Reserved (1),

Stream Identifier (31),

Field Block Fragment (..),

}

push response

If the client does not want to accept the pushed response, or the server takes too long to start sending it, the client can send a RST_STREAM frame with the code CANCEL or REFUSED_STREAM, referencing the pushed stream, to tell the server that it does not accept the push.

If the client does want the pushed response, it first receives the promised request through the PUSH_PROMISE frame and any CONTINUATION frames, as described above.

Other features:

- Clients can use the SETTINGS_MAX_CONCURRENT_STREAMS setting to limit the number of responses the server can push simultaneously.

- If the client does not want to receive pushed streams from the server, it can set SETTINGS_MAX_CONCURRENT_STREAMS to 0 and reset any streams that PUSH_PROMISE has reserved, or disable push entirely by setting SETTINGS_ENABLE_PUSH to 0.
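To make the PUSH_PROMISE layout shown earlier more concrete, here is a rough Python sketch that splits a PUSH_PROMISE payload into its parts. The names `PushPromise` and `parse_push_promise_payload` are hypothetical, the caller is assumed to have already read the 9-octet frame header, and the field block fragment is left as raw bytes (decoding it would need HPACK, which is out of scope here).

```python
from typing import NamedTuple

FLAG_END_HEADERS = 0x04   # END_HEADERS Flag (1) in the layout
FLAG_PADDED = 0x08        # PADDED Flag (1) in the layout


class PushPromise(NamedTuple):
    promised_stream_id: int
    field_block_fragment: bytes   # still HPACK-encoded
    end_headers: bool             # False means CONTINUATION frames follow


def parse_push_promise_payload(flags: int, payload: bytes) -> PushPromise:
    """Split the payload that follows the frame header of a PUSH_PROMISE frame."""
    offset = 0
    pad_length = 0
    if flags & FLAG_PADDED:
        if not payload:
            raise ValueError("PADDED flag set but payload is empty")
        pad_length = payload[0]           # [Pad Length (8)]
        offset = 1
    if len(payload) < offset + 4:
        raise ValueError("payload too short for Promised Stream ID")
    # Reserved (1) + Promised Stream ID (31): mask off the reserved bit.
    promised = int.from_bytes(payload[offset:offset + 4], "big") & 0x7FFFFFFF
    offset += 4
    end = len(payload) - pad_length       # trailing Padding is dropped
    if end < offset:
        raise ValueError("padding is longer than the remaining payload")
    return PushPromise(promised, payload[offset:end], bool(flags & FLAG_END_HEADERS))
```

A client that refuses the push would answer with RST_STREAM on `promised_stream_id`, using CANCEL or REFUSED_STREAM as described above.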

2.2.6 HTTP/3

Progress Tracker: RFC 9114 - HTTP/3 (ietf.org)

Why does HTTP/3 exist?

Although HTTP/2 was a qualitative leap, head-of-line blocking can still occur because of the limitations of TCP itself, and once the network is congested the result can be worse than HTTP1.X (the user just sees a blank page).

Therefore Google's follow-up research turned to QUIC, which builds on UDP to work around the problems of TCP itself. This improvement does not yet look perfect, and some vendors in China are making their own modifications to QUIC.

Why HTTP/3 chose UDP

This leads to another question: with so many protocols to choose from, why does 3.0 use UDP? The usual reasons are:

- A huge number of devices are built around TCP, so changing it compatibly is very difficult.

- TCP is a core component of operating systems such as Linux; modifying it is very troublesome, and in practice nobody dares to change it.

- UDP itself is connectionless, so there is no cost for establishing and tearing down connections.

- UDP does not guarantee delivery, so it has no blocking problem (a take-it-or-leave-it approach).

- Building on UDP costs far less than modifying other protocols.

What's New in HTTP/3

- QUIC (no head-of-line blocking): multiplexing is optimized by using the QUIC protocol instead of TCP, which solves head-of-line blocking. QUIC is also built around streams, but packet loss on one stream only triggers retransmission for that stream. TCP identifies a connection by IP address and port, which is troublesome in a constantly changing mobile network. QUIC identifies a connection by an ID; as long as the ID stays the same, the connection can continue quickly even when the network environment changes.

- 0-RTT: note that HTTP/3 still does not achieve 0-RTT when establishing a connection for the first time; at least 1 RTT is required.

RTT: RTT stands for Round Trip Time, which is simply the time for one round of communication back and forth. RTT consists of three parts:

- Round-trip propagation delay.

- Network device queuing delay.

- Application processing delays.

HTTPS usually needs both a TCP handshake and a TLS handshake to establish a connection, at least 2-3 RTTs in total, and plain HTTP also needs at least 1 RTT. The goal of QUIC is that, apart from the 1 RTT spent on the initial connection, later connections can achieve 0-RTT.

Why can't the first interaction be 0-RTT? Because on first contact the two sides know nothing about each other and still have to exchange key information. That exchange is itself data transmission, so it takes 1 RTT to complete; once this handshake has been done, later data can be sent with 0-RTT.
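The RTT counts above turn into concrete waiting times with simple arithmetic. The sketch below is only an illustration under common textbook assumptions: 1 RTT for the TCP handshake, 2 RTTs for a full TLS 1.2 handshake, 1 RTT for TLS 1.3, and no packet loss. The table `HANDSHAKE_RTTS` and the function `setup_delay_ms` are names invented for this example.

```python
from typing import Dict

# Round trips spent before the first request can be sent (assumed values).
HANDSHAKE_RTTS: Dict[str, int] = {
    "TCP + TLS 1.2": 1 + 2,            # TCP handshake + full TLS 1.2 handshake
    "TCP + TLS 1.3": 1 + 1,            # TCP handshake + TLS 1.3 handshake
    "QUIC, first connection": 1,       # transport and crypto handshake combined
    "QUIC, resumed connection": 0,     # 0-RTT with previously exchanged keys
}


def setup_delay_ms(rtt_ms: float) -> Dict[str, float]:
    """Connection setup delay for each option at a given round-trip time."""
    return {name: rtts * rtt_ms for name, rtts in HANDSHAKE_RTTS.items()}


for name, delay in setup_delay_ms(50).items():
    print(f"{name}: {delay} ms before the first request")
```

With a 50 ms round trip, the difference between 3 RTTs and 0 RTTs is 150 ms of pure waiting per new connection, which is why the handshake count matters so much on mobile networks.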

- Forward error correction: QUIC data packets are allowed to carry redundant data about other packets in addition to their own content. When a packet is lost, its content can be rebuilt from the data carried by the other packets. (This mechanism comes from Google's early QUIC experiments; the IETF version of QUIC in RFC 9000 does not include it.)

How is this done in practice? For example, if one packet out of 3 is lost, the content of the lost packet can be calculated from the XOR of the other data packets and a check packet, without waiting for a retransmission. However, XOR can only recover a single lost packet. If several packets are lost, they cannot all be computed from one XOR value, so retransmission is still required (but the retransmission cost of QUIC is much lower than that of TCP).
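The XOR idea can be shown with a small Python sketch. It is a toy under simplifying assumptions (all packets in a group have the same length, and exactly one check packet protects the group); the function names `make_parity` and `recover` are made up here and do not correspond to any real QUIC API.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equally long packets."""
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(packets: List[bytes]) -> bytes:
    """The check packet is the XOR of every data packet in the group."""
    return reduce(xor_bytes, packets)


def recover(received: List[Optional[bytes]], parity: bytes) -> List[bytes]:
    """Rebuild at most one lost packet (marked as None) from the check packet."""
    missing = [i for i, packet in enumerate(received) if packet is None]
    if len(missing) > 1:
        raise ValueError("XOR can rebuild only one lost packet; retransmission needed")
    result = list(received)
    if missing:
        present = [packet for packet in received if packet is not None]
        # XOR of the check packet with all surviving packets leaves the lost one.
        result[missing[0]] = reduce(xor_bytes, present, parity)
    return result


packets = [b"AAAA", b"BBBB", b"CCCC"]
parity = make_parity(packets)
print(recover([b"AAAA", None, b"CCCC"], parity))
```

Losing two packets of the same group raises the `ValueError`, which corresponds to the case in the text where retransmission is still required.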

- Connection migration: QUIC abandons TCP's five-tuple concept and uses a 64-bit random number as the connection ID. As long as the ID is the same, QUIC can resume the connection immediately after the network environment changes. This is very useful now that devices frequently switch between Wi-Fi and mobile data.

Terminology Explanation ⚠️:

5-tuple: a communication term (five-tuple, or 5-tuple). It usually refers to the five fields that together identify a session: source IP, source port, destination IP, destination port, and the layer 4 protocol.

This concept is also mentioned in the book "How the Network is Connected", in the step of creating a socket in Chapter 1. Creating a socket actually relies on the 5-tuple: to create a "channel", each side needs to tell the other its own IP and port, so that the channel can be created and the subsequent protocol communication can proceed.

By the way, here are the 4-tuple and 7-tuple as well.

4-tuple: uses 4 dimensions to identify a unique connection: source IP, source port, destination IP, and destination port.

7-tuple: uses 7 fields to identify network traffic: source IP, source port, destination IP, destination port, the layer 4 protocol, type of service (ToS byte), and input logical interface (ifIndex).
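The difference between identifying a connection by its 5-tuple and by a connection ID can be sketched with two lookup tables. This is a deliberately simplified model, not how a real TCP stack or QUIC library is structured: the classes `TupleKeyedTable` and `IdKeyedTable`, the session label, the addresses (taken from the documentation ranges) and the connection ID value are all invented for illustration, and real QUIC also validates the new path before using it.

```python
from typing import Dict, Optional, Tuple

# (source IP, source port, destination IP, destination port, layer 4 protocol)
FiveTuple = Tuple[str, int, str, int, str]


class TupleKeyedTable:
    """TCP-style lookup: the 5-tuple itself is the connection's identity."""

    def __init__(self) -> None:
        self._sessions: Dict[FiveTuple, str] = {}

    def open(self, five_tuple: FiveTuple, session: str) -> None:
        self._sessions[five_tuple] = session

    def lookup(self, five_tuple: FiveTuple) -> Optional[str]:
        return self._sessions.get(five_tuple)


class IdKeyedTable:
    """QUIC-style lookup: the connection ID is the identity, the address is not."""

    def __init__(self) -> None:
        self._sessions: Dict[bytes, str] = {}

    def open(self, connection_id: bytes, session: str) -> None:
        self._sessions[connection_id] = session

    def lookup(self, connection_id: bytes, five_tuple: FiveTuple) -> Optional[str]:
        # The current address is deliberately ignored when finding the session.
        return self._sessions.get(connection_id)


# The phone opens a connection on Wi-Fi, then moves to mobile data (new source IP).
tcp_table = TupleKeyedTable()
tcp_table.open(("192.0.2.10", 50000, "203.0.113.5", 443, "TCP"), "session-1")
after_switch_tcp = tcp_table.lookup(("198.51.100.7", 41000, "203.0.113.5", 443, "TCP"))

quic_table = IdKeyedTable()
conn_id = bytes.fromhex("1122334455667788")   # 64-bit ID, value made up
quic_table.open(conn_id, "session-1")
after_switch_quic = quic_table.lookup(conn_id, ("198.51.100.7", 41000, "203.0.113.5", 443, "UDP"))

print(after_switch_tcp, after_switch_quic)
```

In the tuple-keyed table the session can no longer be found after the address change, so a new connection has to be established; in the ID-keyed table the same session is found again.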

- Encrypted and authenticated packets: QUIC encrypts the packet header by default. Because the TCP header is transmitted in the clear, this improvement is very important.

- Flow control, transmission reliability: QUIC adds a layer of reliable data transmission on top of the UDP protocol, so both flow control and transmission reliability can be guaranteed.

Frame format change

Comparing the frame formats of HTTP/2 and HTTP/3, you can see that the HTTP/3 frame header has only two fields: type and length. The frame type distinguishes data frames from control frames, a division inherited from HTTP/2. HEADERS frames (HTTP headers) and DATA frames (HTTP message body) are data frames.

The header compression algorithm of 2.0 has been upgraded to QPACK.

QPACK algorithm: note that, like HPACK in HTTP/2, QPACK in HTTP/3 also uses a static table, a dynamic table and Huffman encoding.

So compared with the previous HPACK algorithm, what does QPACK improve? The HPACK static table in HTTP/2 has only 61 entries, while the QPACK static table in HTTP/3 is expanded to 99 entries. The biggest difference is that the dynamic table is optimized, because the dynamic table in HTTP2.0 has a timing problem.

The so-called timing problem is that if there is a packet loss during transmission, the dynamic table at one end has been changed, but the other end has not changed, and the encoding needs to be retransmitted, which also means that the entire request will be blocked.
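The timing problem can be illustrated with a toy model of two dynamic tables that must stay in sync. This is not real HPACK or QPACK (real indexing, eviction and wire encoding are ignored); the classes `ToyEncoder` and `ToyDecoder` are hypothetical and only show why a lost table update blocks decoding.

```python
from typing import List, Optional, Tuple

Entry = Tuple[str, str]   # (header name, header value)


class ToyEncoder:
    """Keeps a dynamic table and replaces repeated headers with an index."""

    def __init__(self) -> None:
        self.table: List[Entry] = []

    def encode(self, name: str, value: str) -> Tuple[Optional[Entry], int]:
        """Return (table insertion to send, or None if already known; index to reference)."""
        entry = (name, value)
        if entry in self.table:
            return None, self.table.index(entry)
        self.table.append(entry)
        return entry, len(self.table) - 1


class ToyDecoder:
    """Mirrors the encoder's table; it can only resolve indexes it has received."""

    def __init__(self) -> None:
        self.table: List[Entry] = []

    def insert(self, entry: Entry) -> None:
        self.table.append(entry)

    def resolve(self, index: int) -> Optional[Entry]:
        # None means "blocked": the insertion this index depends on has not arrived.
        return self.table[index] if index < len(self.table) else None


encoder, decoder = ToyEncoder(), ToyDecoder()
insertion, index = encoder.encode("user-agent", "demo-client")

blocked = decoder.resolve(index)      # the insertion was "lost": decoder cannot decode
if insertion is not None:
    decoder.insert(insertion)         # the retransmitted insertion finally arrives
resolved = decoder.resolve(index)
print(blocked, resolved)
```

Until the missing insertion arrives, every header that references it has to wait, which is the blocking described above.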

Therefore, HTTP3 takes advantage of the speed of UDP while keeping the reliability that QUIC provides and the security of TLS. In an IETF session streamed live on YouTube in 2018, QUIC was announced as the basis of HTTP3.

YouTube video: IETF103-HTTPBIS-20181108-0900. Sadly, the IETF, the team that develops the Internet's top-tier protocols, does not even have 10,000 subscribers.

2.3 Discussion of some HTTP problems

2.3.1 Head of line blocking

The problem of head-of-line blocking is not only a problem of HTTP, in fact, the lower-level protocols and network device communication also have head-of-line blocking problems.

switch

When a switch uses a FIFO queue as the buffer of an input port, first-in first-out means only the oldest network packet can be sent each time. If the output port that packet is headed for is busy, the packets behind it have to wait as well. This causes network latency.

But even without head-of-line blocking, new packets in a FIFO buffer always queue up behind older ones, so some waiting is inherent to the FIFO queue itself.

It is a bit like queuing for a nucleic acid test: the people behind cannot be tested until the people in front are done, and if the person at the front is held up, everyone behind can only wait.

Switch HOL problem solution

One solution is to use virtual output queues. The idea is that only switches with input buffering suffer from HOL blocking. With sufficient internal bandwidth, input buffering is unnecessary: all buffering happens at the outputs, which avoids HOL blocking.

Architectures without input buffering are common in small and medium-sized switches.

Head-of-line blocking demonstration

Switch: a network signal forwarding device, somewhat like a relay station in a telephone exchange. The switch looks up the destination MAC address in its MAC address table and then sends the signal to the corresponding port.

Example of head-of-line blocking: the 1st and 3rd input streams are competing to send packets to the same output interface. If the switch fabric decides to transmit the packet from the 3rd input stream, the 1st input stream cannot be processed in the same time slot.

Note that the 1st input stream is thereby blocking a packet destined for output interface 3, even though that interface is available for processing.
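The switch example can be reproduced with a small time-slot simulation. It is a rough sketch under simplifying assumptions: each output port accepts one packet per slot, each input port sends at most one packet per slot, and lower-numbered inputs win ties. The functions `drain_fifo` and `drain_voq` are invented for this illustration; a real switch scheduler is considerably more elaborate.

```python
from collections import deque
from typing import List


def drain_fifo(inputs: List[List[int]]) -> int:
    """Time slots needed when each input may only offer the packet at its head."""
    queues = [deque(q) for q in inputs]
    slots = 0
    while any(queues):
        busy_outputs = set()
        for q in queues:
            # Only the head packet is eligible; if its output is taken, the input waits.
            if q and q[0] not in busy_outputs:
                busy_outputs.add(q.popleft())
        slots += 1
    return slots


def drain_voq(inputs: List[List[int]]) -> int:
    """Time slots needed when an input may send any queued packet whose output is free."""
    pending = [list(q) for q in inputs]
    slots = 0
    while any(pending):
        busy_outputs = set()
        for q in pending:
            for i, out in enumerate(q):
                if out not in busy_outputs:
                    busy_outputs.add(out)
                    del q[i]
                    break
        slots += 1
    return slots


# Each inner list is one input port; the numbers are destination output ports.
traffic = [[0, 1], [0, 2]]
print(drain_fifo(traffic), drain_voq(traffic))
```

With this traffic the FIFO switch needs 3 slots, because the second input's packet for the idle output 2 is stuck behind its head packet for output 0, while the virtual-output-queue variant finishes in 2 slots.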

Out-of-order transfer:

Because the network underneath TCP does not guarantee that packets arrive in the order they were sent, out-of-order delivery can occur, and HOL blocking can significantly increase packet reordering.

Similarly, reliably broadcasting messages over a lossy network is hard. Atomic broadcast algorithms solve this problem, but they introduce HOL blocking of their own, which is again a common consequence of out-of-order delivery.

The Bimodal Multicast algorithm is a randomized algorithm based on a gossip protocol that avoids head-of-line blocking by allowing certain messages to be received out of order.

HTTP head-of-line blocking

HTTP 2.0 addresses this weakness of the HTTP protocol through multiplexing and truly eliminates HOL blocking at the application layer, but HOL blocking at the TCP layer, caused by lost or out-of-order segments, remains unsolved.

Therefore, in 3.0, TCP was directly replaced with QUIC to eliminate HOL blocking at the transport layer.

2.3.2 HTTP/2 full duplex support

Note that HTTP is not full-duplex in the true sense until 2.0. The claim that earlier HTTP supports full duplex confuses HTTP with TCP: it is TCP that supports full duplex, since TCP can use the network card to send and receive data at the same time.

To understand full duplex in TCP and HTTP, you should understand the two duplex modes of HTTP: half-duplex (http 1.0/1.1) and full-duplex (http 2.0).

Half-duplex: at any given moment only one side of the link sends data while the other side receives.

- http 1.0 uses short connections. Each request must establish a new tcp connection, the connection is closed as soon as the response arrives, and the next request repeats these steps.

- HTTP 1.1 uses long connections that can be reused. Once a tcp connection is established it is not closed immediately: the request for resource 1 gets its response, then resource 2, then resource 3, which removes the overhead of establishing a tcp connection for each resource.

Full duplex: both ends can send and receive data at the same time.

- In http 2.0 the client can send the request for resource 2 without waiting for the response to resource 1, so sending and receiving finally happen at the same time.

2.3.3 Disadvantages of HTTP 2.0

- It solves head-of-line blocking of HTTP requests, but not head-of-line blocking in TCP. In addition, HTTP/2 over HTTPS needs both the TCP handshake and the TLS handshake before any data can be transmitted, so connection setup is slow.

- HTTP/2 head-of-line blocking occurs when TCP loses packets. Because all requests share one TCP connection, a lost packet has to be retransmitted, and TCP blocks every request until it arrives. With HTTP1.X, at least the multiple TCP connections make this case more efficient.

- Multiplexing increases server pressure: requests no longer queue behind each other, so a burst of requests in a short period increases server load instantly.

- Multiplexing is prone to timeouts, because it has no knowledge of the available bandwidth or of how many requests the server can handle.

Worse than HTTP1.X under packet loss

When a packet is lost in HTTP 2.0, all request frames are on one TCP connection, so every request is blocked by TCP. HTTP1.X uses multiple TCP connections for the exchange: if one of them is blocked, the requests on the others still arrive normally, which is more efficient.
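The effect of a single lost packet can be sketched by comparing delivery times. This is a simplified model, not a network simulator: each request is assumed to fit in one segment, the arrival times in milliseconds are made up, and the function names `deliver_single_connection` and `deliver_independent` are hypothetical. The first function models in-order delivery on one shared TCP connection, the second models requests that travel independently (separate TCP connections in HTTP1.X, or separate streams in QUIC).

```python
from typing import Dict, List, Tuple

Segment = Tuple[str, int]   # (request name, arrival time in ms), listed in sending order


def deliver_single_connection(arrivals: List[Segment]) -> List[Segment]:
    """One TCP connection: a segment is handed to HTTP only after all earlier ones."""
    delivered = []
    latest = 0
    for request, arrival in arrivals:
        latest = max(latest, arrival)     # must wait for every earlier segment
        delivered.append((request, latest))
    return delivered


def deliver_independent(arrivals: List[Segment]) -> List[Segment]:
    """Independent connections or streams: a segment waits only for its own request."""
    latest_per_request: Dict[str, int] = {}
    delivered = []
    for request, arrival in arrivals:
        latest = max(latest_per_request.get(request, 0), arrival)
        latest_per_request[request] = latest
        delivered.append((request, latest))
    return delivered


# Request A's segment is lost and only arrives after a retransmission at 110 ms.
arrivals = [("A", 110), ("B", 11), ("C", 12)]
print(deliver_single_connection(arrivals))
print(deliver_independent(arrivals))
```

On the single connection all three requests are delivered at 110 ms, whereas with independent delivery B and C are available at 11 ms and 12 ms and only A pays for the retransmission.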

Binary Framing Purpose

The fundamental purpose is to make more effective use of the underlying TCP protocol; binary transmission further reduces the cost of converting data between communication layers.

Keep-alive disadvantages of HTTP1.X

- Requests and responses must be exchanged in order, whereas with HTTP2 multiplexing responses do not have to come back in order.

- A single TCP connection handles one request at a time, whereas HTTP2 can send multiple requests at the same time without a fixed upper limit.

2.3.4 Is the HTTP protocol really stateless?

If you carefully compare the three versions HTTP1.x, HTTP/2 and HTTP3.0, you will find that the meaning of "HTTP is stateless" has quietly changed. Why?

Before going into details, we need to clarify one concept: although cookies and sessions make HTTP "stateful", this actually has nothing to do with the HTTP protocol itself.

The fundamental purpose of Cookie and Session is to keep the session state visible. They "simulate" the state of the user's last visit by creating multiple independent pieces of state, but each HTTP request itself does not depend on the previous HTTP request. In a broad sense every service is stateful, but this does not conflict with the stateless definition of HTTP1.X itself.

In addition, a stateless HTTP protocol means that each request is completely independent. In the definition of the 1.0 memo, one HTTP request is effectively one TCP connection. In HTTP1.1, one TCP connection carries multiple HTTP requests, but each of them can still be seen as an independent HTTP request.

After all this, it is correct to say that HTTP1.X is stateless when Cookie and Session are left out. It is like comparing a game character's own stats with the bonus stats of a weapon: the weapon gives the character a certain state, but that state does not belong to the character itself; it is borrowed from an external source.

However, as the Internet developed, HTTP itself acquired "state" in HTTP/2 and HTTP3. For example, 2.0 introduced the HPACK algorithm for header compression (a static table and a dynamic table need to be maintained), and 3.0 upgraded HPACK to QPACK to make transmission more efficient.

So the conclusion is that, strictly speaking, HTTP1.X is stateless, and it preserves session state with the help of Cookie and Session.

From HTTP/2 onward, HTTP is stateful, because the protocol itself maintains state tables for the header fields that both sides transmit repeatedly, in order to reduce the amount of data sent.

2.4 Summary

This chapter should have been the core of the whole book, but the author apparently did not want to scare readers off, so it is kept relatively simple. I spent a lot of energy collecting material online and combining it with my own thinking to put together the content of this second chapter.

It is necessary to grasp the entire development history of HTTP, because stock interview questions sometimes touch on it, and without this background it is hard to hold up under in-depth questioning. HTTP is also the core application layer communication protocol, and as a web developer I personally think it is even more necessary to master.

In addition, understanding the design of HTTP itself lets us transition to understanding TCP. How the design of TCP has shaped the design of HTTP, and similar questions, are worth thinking about further.

For more, you can look at the reading notes for "How the Network is Connected". That book describes the basic development of the Internet from the perspective of the whole TCP/IP structure, while this one describes the basic history and the future direction of HTTP.
