Introduction: Author: Wang Yu (Otian). "When you step into the field of programming, code and logs will be your most important partners." Log-based troubleshooting is an important part of R&D efficiency. While supporting multiple ecosystem companies and multiple technology stacks, Alibaba Group's Local Life business has gradually built up Xlog, a cross-application and cross-domain log troubleshooting solution. This article offers a reference for readers who use or plan to use SLS, to help them put a log troubleshooting solution into practice.

1. Background

Programmers start learning every language by printing "hello world". That first exercise carries a message: "When you step into the field of programming, code and logs will be your most important partners". On the code side, increasingly powerful IDEA plug-ins and keyboard shortcuts have greatly improved coding efficiency. On the log side, teams are also innovating and experimenting with better ways to troubleshoot, which is an important part of R&D efficiency.

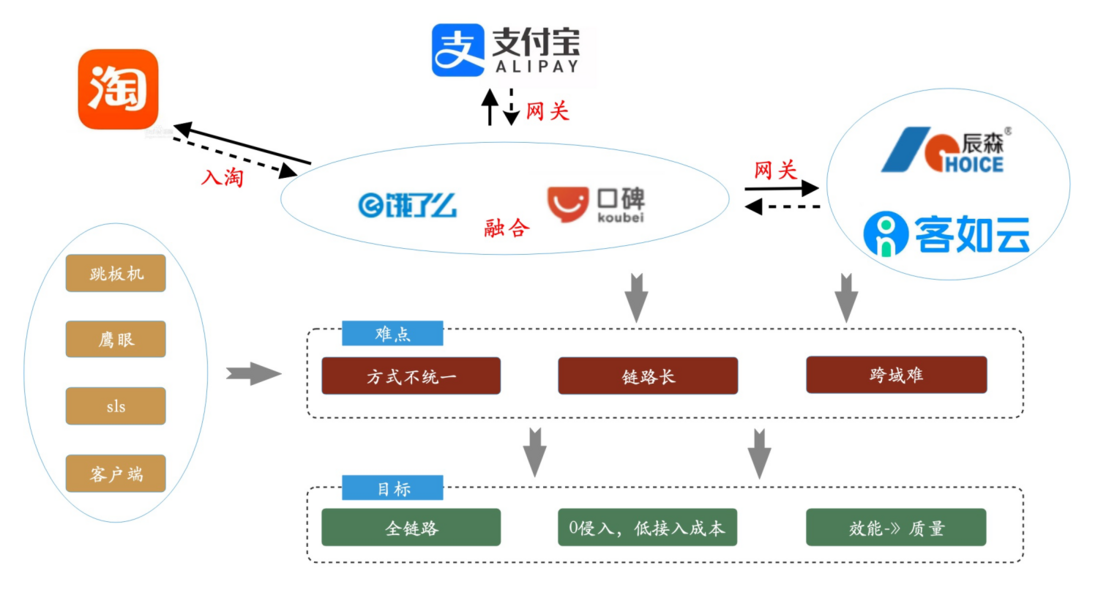

While supporting multiple ecosystem companies and multiple technology stacks, Alibaba Group's Local Life business has gradually built up Xlog, a cross-application and cross-domain log troubleshooting solution. It currently also supports ICBU, Local Life, New Retail, Hema, Ant, Alibaba CTO, Alibaba Cloud, Taote, Lingxi Interactive Entertainment and other teams, and it has been praised by the SLS development team.

I hope this article is useful to readers who are using or planning to use SLS, and helps their teams put a log troubleshooting solution in place quickly. The first part covers the challenges of log troubleshooting under a microservice architecture and how we solved them. The second part goes into the details of the design: several difficulties and how we overcame them. The third part describes Xlog's current capabilities. The fourth part describes how we built ecosystem capabilities around the core ones.

1.1 Problems solved by Xlog

When troubleshooting through logs, most people are familiar with these steps: 1. Log in to the jump server. 2. Switch to another jump server. 3. Log in to SLS on the Alibaba Cloud platform. 4. Switch between SLS projects and logstores. Then repeat the cycle.

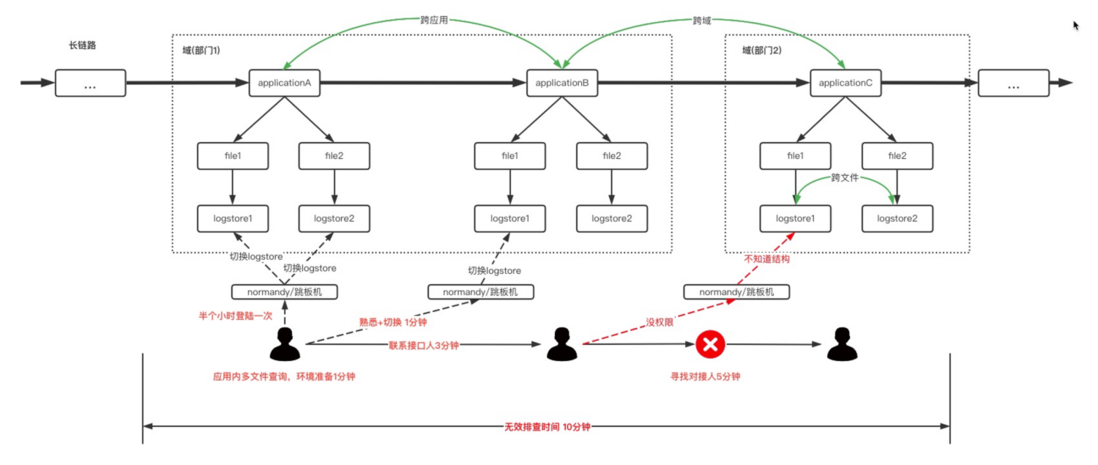

As an example, the following figure shows a fragment of a long call chain (real chains are more complicated): Application1, Application2, Application3. Application1 and Application2 belong to the same domain (roughly, a sub-team), and Application3 belongs to another domain. A query over this chain therefore involves two scenarios: cross-application query and cross-domain query.

Suppose the owner of Application1 picks up a problem and finds, through the jump server or the SLS logs, that an upstream colleague needs to help investigate. Each hop then costs 1 to 3 minutes of response time, whether that means switching jump servers or SLS logstores, or contacting the owner of Application2 for help. Going from the owner of Application2 to the owner of Application3 is harder still: they may not know the SLS information for Application3 (our BU has on the order of 100,000 logstores), may not have jump server login permission, and may not even know who owns Application3. As a result, investigation time increases greatly. The time spent preparing the environment (wasted investigation time) can even far exceed the time spent on actual investigation.

The example above only involves three applications, and real call chains are often much more complicated. Is there a platform where the required logs can be queried in one place with one click? Xlog was created for exactly this: to remove the frequent switching that cross-application and cross-domain searches over long call chains require.

1.2 Scenarios supported by Xlog

Cross-application query under the microservice framework, cross-domain query in the context of cross-domain integration.

- If your team uses SLS, or plans to collect logs into SLS;

- If you want better log retrieval and display capabilities;

- If you want to search logs across applications and across domains;

This article introduces Xlog, which is easy to use and non-invasive, to help businesses within the group build a larger ecosystem. As more and more domains connect, the dots join into lines and the lines into a network, forming a full-link log solution for the whole economy or an even larger ecosystem.

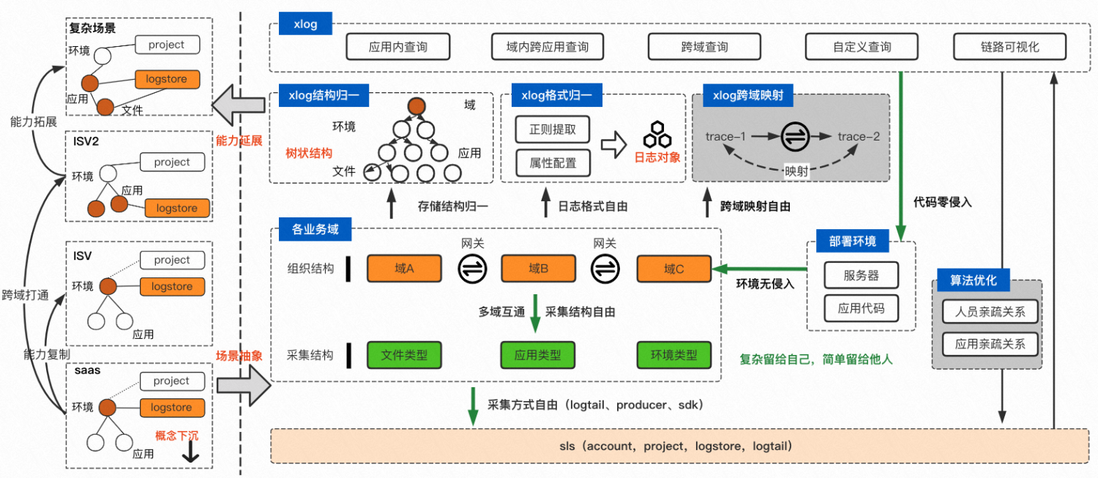

1.3 Current system construction of Xlog

For applications whose logs are already collected into SLS, access requires no code changes and no intrusion into the deployment environment, and the collection structure and collection channel can be chosen freely. Basically, anything connected to SLS can be connected to Xlog. By normalizing structure and format and adding cross-domain capabilities, Xlog supports the scenarios most commonly used in troubleshooting: cross-file search within an application, cross-application search within a domain, and cross-domain search.

Qiao Liang, the author of "Continuous Delivery 2.0", noted that consistency is the only way to improve R&D efficiency. After more than 20 years of development, it is difficult for the entire economy to achieve full consistency. Xlog takes a different approach and converts inconsistency into consistency, which is a milestone both for querying and for building other log-based technical systems.

2. Solution design

This section describes the design ideas and development history of Xlog in detail. If you have already connected to SLS, you can skip to 2.2; if you have not, 2.1 contains some ideas that may be useful.

2.1 The initial plan: innovation and isolation

The SaaS team had just been established in 2019, and much of its infrastructure still needed work. Like many teams, we mainly used two ways to query logs:

1. Querying through the jump server: follow the path TraceId -> EagleEye -> machine IP -> log in to the jump server -> grep the keyword. Disadvantages: 4-6 minutes per query, poor log retrieval and visualization, no cross-application queries, no access to historical logs.

2. Querying through the Alibaba Cloud SLS web console: log in to SLS -> query by keyword. Disadvantages: 1-2 minutes per query, poor log visualization, no cross-application or cross-domain queries.

Based on this background, we did 3 things to improve query efficiency:

- Unified log format: a single standard pattern is used in logback.

%d{yyyy-MM-dd HH:mm:ss.SSS} ${LOG\_LEVEL\_PATTERN:-%5p} ${PID:- } --- [%t] [%X{EAGLEEYE\_TRACE\_ID}] %logger-%L: %m%n

where:

%d{yyyy-MM-dd HH:mm:ss.SSS} : time, accurate to milliseconds

${LOG\_LEVEL\_PATTERN:-%5p} : log level (DEBUG, INFO, WARN, ERROR, etc.)

${PID:- } : process id

--- : separator, no special meaning

[%t] : thread name

[%X{EAGLEEYE\_TRACE\_ID}] : EagleEye trace id

%logger-%L : logger name and line number

%m%n : message body and newline

Using the same log format everywhere has proved more valuable than expected: it greatly simplifies analysis, monitoring, troubleshooting, and even future intelligent troubleshooting across the entire link.
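One practical consequence of a single pattern is that every line can be parsed by the same expression. The following is a minimal Python sketch of that idea, not part of Xlog. The regular expression, the `parse_line` helper and the sample line are illustrative assumptions about what the pattern above would render.

```python
import re

# Regex mirroring the unified logback pattern; group names are illustrative.
LOG_LINE = re.compile(
    r"^(?P<time>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{3})\s+"
    r"(?P<level>[A-Z]+)\s+"
    r"(?P<pid>\d*)\s*---\s+"
    r"\[(?P<thread>[^\]]*)\]\s+"
    r"\[(?P<trace_id>[^\]]*)\]\s+"
    r"(?P<logger>\S+)-(?P<line>\d+):\s"
    r"(?P<message>.*)$"
)


def parse_line(line):
    """Return the fields of one log line as a dict, or None if it does not match."""
    match = LOG_LINE.match(line)
    return match.groupdict() if match else None


# Made-up sample line in the unified format (all values are hypothetical).
SAMPLE = ("2021-06-01 12:00:00.123  INFO 12345 --- [main] [0a1b2c3d4e] "
          "com.example.OrderService-42: order created")

fields = parse_line(SAMPLE)
print(fields["trace_id"], fields["level"], fields["message"])
```

Because the trace id always sits in the same bracketed position, downstream tools can extract it without per-application rules.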

- SLS structure design and unification: the collection formats of all applications in the domain are unified into one structure, and fields are extracted with a regular expression to make collection and configuration easier. The logtail configuration is built into the base Docker image, so every application in the domain inherits the same log collection settings.

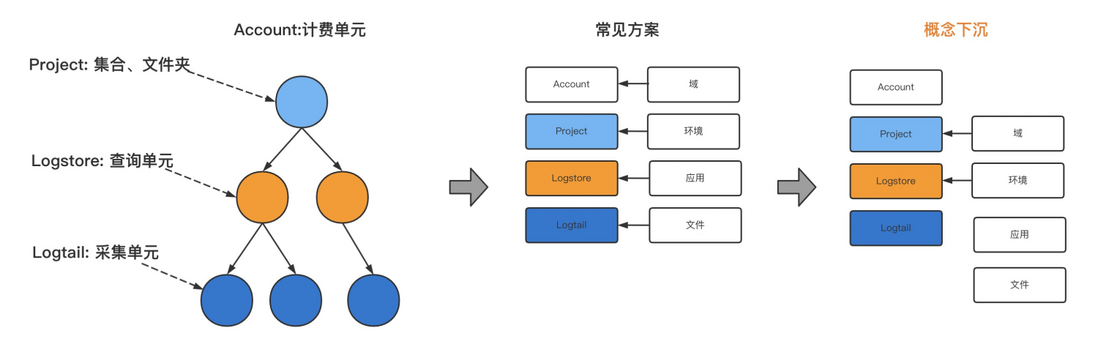

- Sinking the SLS collection structure: here we introduce the idea of "sinking" the structure, which makes cross-application queries very convenient. As shown in the figure below, the SLS structure can be understood as four layers: account, project, logstore, logtail. The logstore layer is the critical one, because it determines the dimension along which related logs can be queried together. The common approach is one logstore per application, which forces frequent logstore switching when several applications are queried. We instead sink the mapping one level and let each environment correspond to one logstore. Once the query environment is known, the logstore to query is known too, which achieves cross-application queries.

This solution works very well for single-application and cross-application queries within a domain, and needs only one API call. If your team is preparing to adopt SLS and the SLS data is used only for troubleshooting (monitoring tools such as Sunfire can read the server's local logs directly), we still recommend this solution, since it covers troubleshooting needs well. It has also been built into Xlog, so teams that meet these conditions can connect directly and use Xlog's full set of capabilities.

2.2 The current plan: innovation and helping the world

The previous solution works well for troubleshooting within one's own domain. In 2020, however, the SaaS team began to support multiple ecosystem companies. The scenarios were no longer confined to our own domain, and multiple domains had to be connected. At this point we faced two major challenges:

- Access cost: we had defined a single SLS collection method and log format, which made data collection, querying and display efficient. But when other departments tried to connect, their log formats could not be changed, because existing systems already had monitoring, reporting and other modules configured against them. And because some systems were already collecting logs into SLS, their collection structures could not be changed either. These two inconsistencies made access expensive.

- Cross-domain scenarios: as systems grow more complex, cross-domain scenarios multiply. Take Local Life as an example. As the business moves into Taobao, some systems have completed the Taoyuan migration while others are still in the Ant domain, and calls between the two domains must go through a gateway. With the deep integration of Koubei and Ele.me, calls between them also go through a gateway. We are now also connecting with acquired ISVs, again through a gateway. Because the tracing solutions used by each company are not interoperable, traceIds and similar information are inconsistent, and the full link cannot be traced. How to search logs in cross-domain scenarios became the second major problem.

Therefore, building on the previous solution, we upgraded Xlog and redefined its goals:

- Core capabilities: log troubleshooting across scenarios, from application -> cross-application -> cross-domain -> global. Moving from checking the logs of one application to checking the logs of one link within a domain opens the door to true full-link log troubleshooting.

- Access cost: access in ten minutes. Code logic reduces the need to change existing log formats and collection methods. Collection through logtail, producer, SDK and other methods is supported.

- Intrusion: zero intrusion. Xlog does not touch application code; it interacts directly with SLS and stays decoupled from applications.

- Customization: both the query interface and the result display interface can be customized.

2.2.1 Model Design

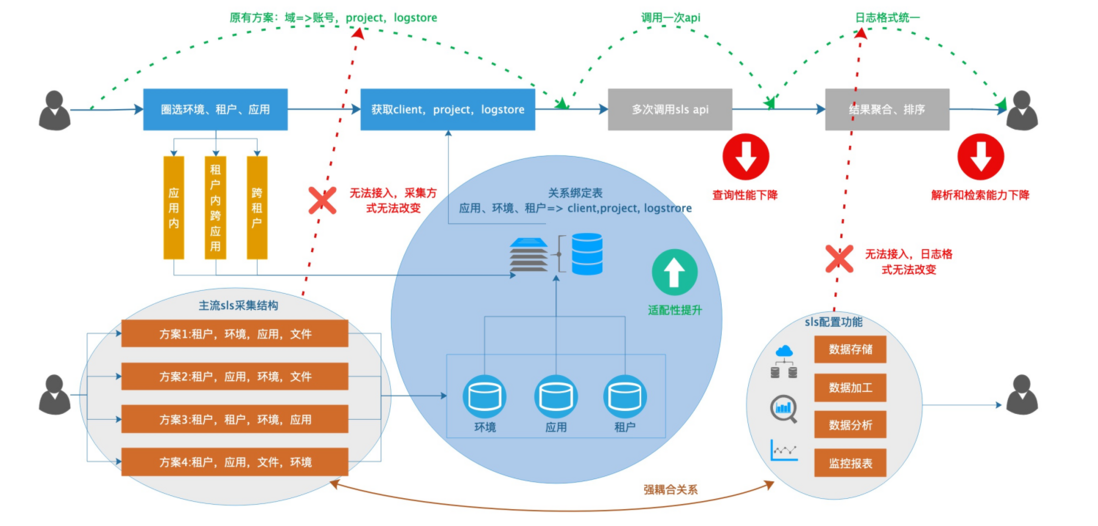

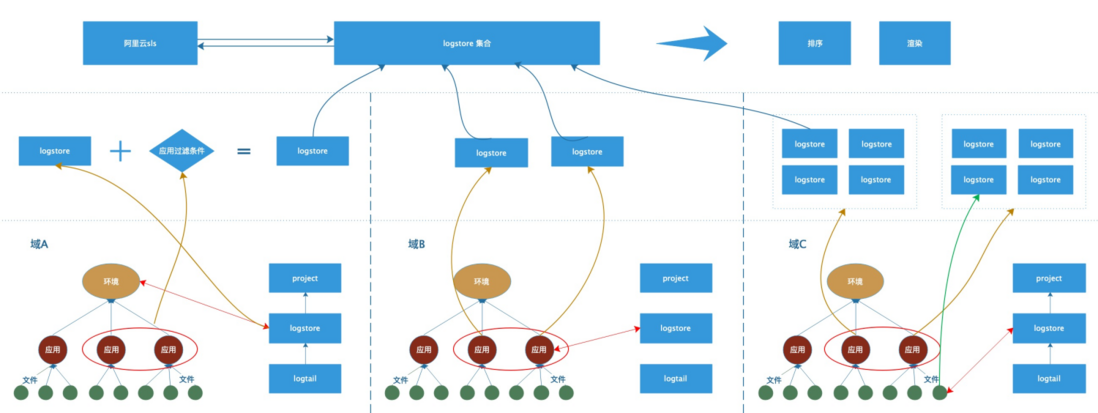

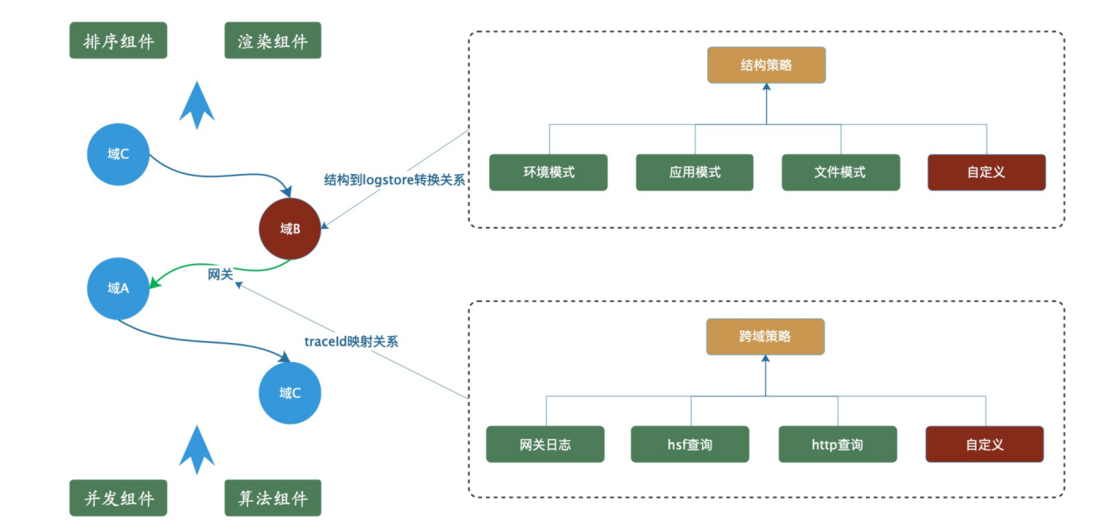

Since the logstore is the unit against which the SLS API queries logs, any collection structure can be decomposed into a combination of the following three units (in practice, most domains use just one of them).

- 1. One environment corresponds to one logstore (for example, all application logs of this domain's daily environment are in one logstore). See domain A in the figure below.

- 2. One application corresponds to one logstore (for example, the daily environment of application A corresponds to logstore1, the pre-release environment of application A corresponds to logstore2, and the daily environment of application B corresponds to logstore3). See domain B in the figure below.

- 3. One file corresponds to one logstore (for example, in the daily environment, file a of application A corresponds to logstore1 and file b of application A corresponds to logstore2). See domain C in the figure below.

With these atomic structures, configuring Xlog only requires creating a mapping of domain, environment, application, file => logstore. Queries at application granularity and file granularity can then be performed within the domain.

In cross-domain scenarios without a gateway, cross-domain queries are likewise completed by combining the logstores of the two domains. As shown in the figure above: two applications specified in domain A are converted into one logstore plus filter conditions. Two applications specified in domain B are converted into two logstores. For two applications specified in domain C, the files under those applications are looked up first, and then the set of logstores corresponding to those files. At this point we have every logstore in Alibaba Cloud SLS that needs to be queried, and the final result is obtained by combining and sorting the query results. Similarly, a cross-domain search only needs to concatenate the logstores of multiple domains and then run the query.
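The mapping from the three structures to a logstore list can be sketched in a few lines of Python. This is an illustration under assumed names, not Xlog's actual configuration model. The `DOMAINS` table, the logstore names and the `resolve` and `resolve_cross_domain` functions are all hypothetical.

```python
# Hypothetical configuration: each domain declares its structure
# ("environment", "application" or "file") and its mapping to logstores.
DOMAINS = {
    "A": {"mode": "environment",
          "logstores": {"daily": "a-daily"}},
    "B": {"mode": "application",
          "logstores": {("app1", "daily"): "b-app1-daily",
                        ("app2", "daily"): "b-app2-daily"}},
    "C": {"mode": "file",
          "logstores": {("app1", "daily", "a.log"): "c-app1-a",
                        ("app1", "daily", "b.log"): "c-app1-b",
                        ("app2", "daily", "a.log"): "c-app2-a"}},
}


def resolve(domain, env, apps):
    """Return (logstore, app_filter) pairs to query for the given applications."""
    cfg = DOMAINS[domain]
    stores = cfg["logstores"]
    if cfg["mode"] == "environment":
        # One shared logstore: the applications become a filter condition.
        return [(stores[env], tuple(sorted(apps)))]
    if cfg["mode"] == "application":
        # One logstore per application and environment: no filter needed.
        return [(stores[(app, env)], ()) for app in sorted(apps)]
    # File structure: collect the logstores of every file of each application.
    return [(store, ())
            for (app, file_env, _file), store in sorted(stores.items())
            if file_env == env and app in apps]


def resolve_cross_domain(env, apps_by_domain):
    """Concatenate the logstore lists of several domains into one query plan."""
    plan = []
    for domain in sorted(apps_by_domain):
        plan.extend(resolve(domain, env, apps_by_domain[domain]))
    return plan
```

Whatever structure a domain uses, the caller ends up with a flat list of logstores (plus optional filters), which is what makes the later concurrency and priority optimizations structure-agnostic.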

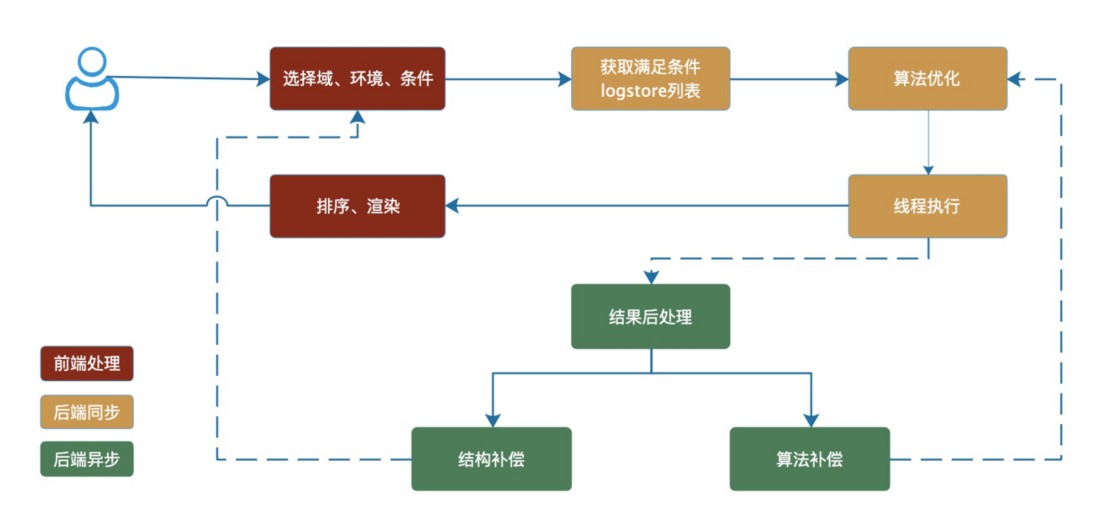

2.2.2 Performance optimization

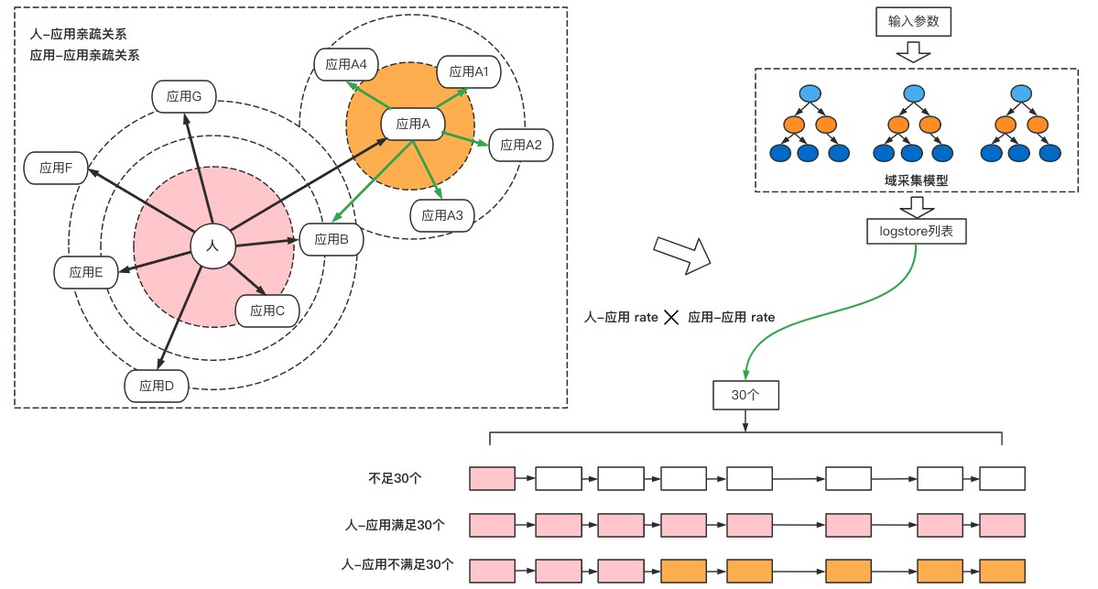

As described in 2.2.1, whether the SLS structure is environment-based, application-based or file-based, and whether the query covers one application, several applications or several domains, the query can always be converted into a set of logstores that are then traversed and queried. This introduces a new problem: how to stay efficient when there are many logstores. For example, while onboarding one team's logs we found that they had 3,000 logstores, with 1,000 applications per environment. Assuming each query takes 150 ms, querying 1,000 applications one after another takes 150 s (2.5 minutes). Searching the whole domain for a log without specifying an application would be far too costly at 2.5 minutes per search. To address this we optimized performance, mainly with the following methods, as shown in the following figure:

- Logstore connection pool preloading: deduplicate logstores and create their connections in advance, to save the time of creating connections on every query. Logstores with low activity are downgraded and lazily loaded.

- Multi-threaded concurrency: query multiple logstores concurrently to reduce query time.

- Algorithmic priority queue: use an affinity algorithm tuned to the person querying to cut down the number of logstores queried, thereby reducing overhead.

- Front-end combination and sorting: the back end only executes queries, while sorting and searching within results are done in the front end, reducing concurrency pressure on the back end.

As shown in the figure above, the user selects the domain and query conditions in the front end. The back end analyzes them to obtain the list of logstores to query (A, B, C, D, E in the figure). It then sorts and filters the list according to the applications the user has the highest affinity with, producing a priority queue (B, A, C in the figure). The priority queue is queried concurrently using the pre-created connection pool to obtain a set of log results. Finally, the front end sorts, assembles and renders them, completing one cycle. The rest of this section focuses on thread pool concurrency and algorithm optimization.

2.2.3 Thread pool concurrency

This is not very different from conventional concurrent execution on a thread pool: the logstores to be queried are inserted into the thread pool queue in order. When the number of logstores per query is small (fewer than the number of core threads), this effectively reduces query time. Scenarios with a large number of logstores are handled by algorithm optimization.

Compensation operations after the query are also processed asynchronously to reduce query time.

- Structure post-processing:

In domains with the environment structure, applications and files do not need to be configured. That data is filled in automatically by a processing step that runs after each query and adds any missing entries to the structure. Because such operations do not affect the result of the current query, they are added to an asynchronous thread pool as post-processing.

- Algorithm post-processing:

The scoring logic for person-application and application-application affinity is trained continuously. This also does not affect the result of the current query, so it is placed in the asynchronous thread pool as well.
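The split between the query path and the post-processing path can be sketched with Python's standard `concurrent.futures` module. This is a minimal illustration, not Xlog's implementation. The pool sizes and the `search` function are assumptions, and `query_one` and `post_process` stand in for the real SLS query and the structure or affinity updates.

```python
from concurrent.futures import ThreadPoolExecutor

# Pool sizes are arbitrary assumptions for this sketch.
query_pool = ThreadPoolExecutor(max_workers=8)
post_pool = ThreadPoolExecutor(max_workers=2)


def search(logstores, keyword, query_one, post_process):
    """Query every logstore concurrently and return the combined logs.

    query_one(logstore, keyword) -> list of logs   (hypothetical callable)
    post_process(logstores, logs)                  (hypothetical callable)
    """
    # Submit the logstores in order; each future holds one logstore's logs.
    futures = [query_pool.submit(query_one, ls, keyword) for ls in logstores]
    logs = [log for future in futures for log in future.result()]
    # Post-processing does not affect this result, so it is handed to a
    # separate pool and the caller does not wait for it.
    post_pool.submit(post_process, logstores, logs)
    return logs
```

Results are returned unsorted here because, as described above, sorting is left to the front end.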

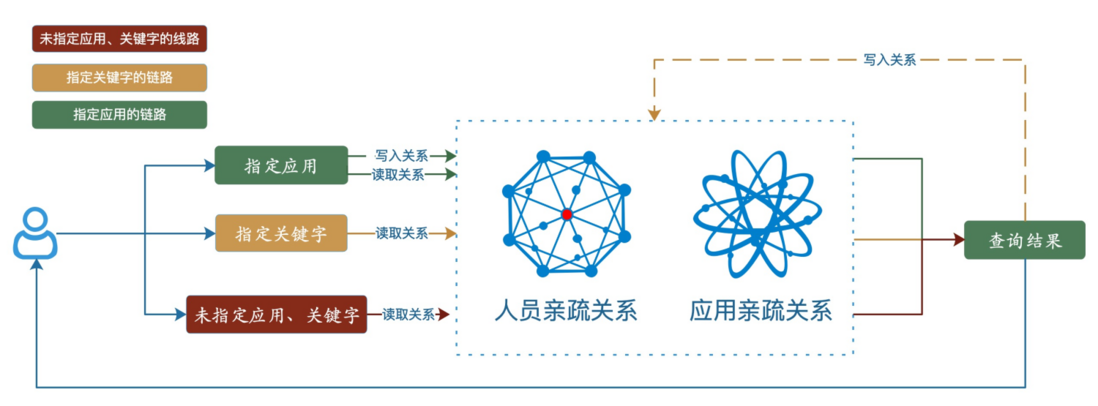

2.2.4 Algorithm optimization

When many logstores match the conditions (more than the number of core threads), concurrent querying through the thread pool cannot return results quickly. After a year of accumulating and analyzing data from the log quick-troubleshooting tool, we found that even when no application or search condition is specified, the most likely sequence of logstores can be located from the operating habits of the person querying and the applications they habitually follow.

For example, the merchant SaaS center has about 500 applications. Developer A is responsible for Application1 and most often also queries Application11 and Application12. In addition, Application2 and Application3 are closely related to Application1 as upstream and downstream systems. We can then assume that developer A cares more about Application1, Application11, Application12, Application2 and Application3 than about other applications, and query these first, reducing 500 query tasks to 5.

In day-to-day practice, the number of applications each developer follows is very likely to stay within 30.

Based on this analysis, we built two affinity networks to determine query batches and tiers.

- Personal affinity:

On each call, the query conditions, the query results and the user can be analyzed to build up an affinity relationship. The query conditions may or may not specify an application.

If an application is specified, the user is explicitly querying that application, so 5 points are added to the user's affinity with it.

If no application is specified, the results of the keyword query are analyzed instead: the application of each log in the result is extracted and given 1 point (it was not explicitly specified, only reached through the keyword).

After many such operations, the affinity between the user and each application is known. When a query spans many logstores, the 15 applications with the highest affinity for that user are selected as the first batch of query targets.
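The scoring rule above can be sketched as follows. The 5-point and 1-point increments and the batch size of 15 come from the text. Everything else is an assumption of this sketch: the in-memory `user_affinity` table, the function names, and the choice to count each application at most once per keyword query.

```python
from collections import defaultdict

EXPLICIT_POINTS = 5    # application named in the query condition
KEYWORD_POINTS = 1     # application only reached through a keyword query
FIRST_BATCH_SIZE = 15

# user -> application -> score (in-memory stand-in for the real storage)
user_affinity = defaultdict(lambda: defaultdict(int))


def record_query(user, specified_apps, result_apps):
    """Update a user's affinity after one query."""
    if specified_apps:
        for app in specified_apps:
            user_affinity[user][app] += EXPLICIT_POINTS
    else:
        # Assumption: each application in the results scores once per query.
        for app in set(result_apps):
            user_affinity[user][app] += KEYWORD_POINTS


def first_batch(user, size=FIRST_BATCH_SIZE):
    """Applications with the highest affinity for this user, best first."""
    scores = user_affinity[user]
    return sorted(scores, key=lambda app: (-scores[app], app))[:size]
```

Ties are broken alphabetically only to keep the ordering deterministic.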

- Application affinity:

Applications also have affinity with each other. The higher the affinity between two applications, the more likely one is to be searched in association with the other. For example, the applications center and prod are closely related by system design. If user A's affinity list includes center, a log query is very likely to extend to prod as well. Based on this idea, an affinity matrix can be built by analyzing the results of each log query.

After each keyword query returns its log results, the pairwise affinity of the applications involved is incremented by 1, which is equivalent to adding 1 to the affinity of the applications on one link. This keeps application-to-application affinity available for future queries, so that relying on personal affinity alone does not distort the link.
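A minimal sketch of the pairwise update, assuming the matrix is kept as a symmetric counter keyed by sorted application pairs. The `app_affinity` store and both function names are hypothetical.

```python
from collections import Counter
from itertools import combinations

# (app_a, app_b) with app_a < app_b -> number of query results shared
app_affinity = Counter()


def record_result_apps(apps):
    """Add 1 to the affinity of every pair of applications in one query result."""
    for pair in combinations(sorted(set(apps)), 2):
        app_affinity[pair] += 1


def affinity(app_a, app_b):
    """Symmetric lookup; unknown pairs score 0."""
    return app_affinity[tuple(sorted((app_a, app_b)))]
```

Sorting each pair before storing it means `affinity("center", "prod")` and `affinity("prod", "center")` read the same cell.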

That is a general overview of how the affinity matrices are trained. Next is how they are used to optimize the query. As shown in the figure below, the upper left corner holds the recorded person-application and application-application affinity matrices. For the relationship between a user and application A, application B, application C and so on, a score measures their affinity, which mainly describes how much attention the person pays to the application. Between applications, the score records how tightly they are coupled. The upper right corner is the query condition. From the query condition and the collection structure of each domain, the list of logstores to query can be calculated quickly. But not all of these logstores need to be queried: the affinity matrices are intersected with the logstore list, and the result is sorted before searching.

As shown in the figure below, the applications in the intersection are first ranked by person-application affinity, and the ones with higher scores are selected. If fewer than the threshold of 30 are selected, the list is supplemented using application-application affinity. The comparison here is based on the product of the proportional person-application score and the proportional application-application score, similar in spirit to the path weights in Huffman coding. The final result is a list of up to 30 logstores to query.
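The selection step can be sketched as below. The text does not give the exact formula, so this is one possible reading rather than Xlog's algorithm. It assumes "proportional score" means a score divided by the sum of its row, and that a supplementary application takes the best product over all directly selected applications. The `select_apps` function and the nested-dict layout of `app_scores` are hypothetical.

```python
def select_apps(candidates, person_scores, app_scores, limit=30):
    """Pick up to `limit` applications to query for one user.

    candidates:    applications whose logstores match the query
    person_scores: {app: score} for this user
    app_scores:    {app: {other_app: score}} application-application affinity
    """
    # Step 1: rank candidates the user has any affinity with.
    chosen = sorted(
        (a for a in candidates if person_scores.get(a, 0) > 0),
        key=lambda a: (-person_scores[a], a),
    )[:limit]
    if len(chosen) >= limit:
        return chosen

    # Step 2: supplement through application-application affinity.
    person_total = sum(person_scores[a] for a in chosen)
    weights = {}
    for a in chosen:
        neighbours = app_scores.get(a, {})
        app_total = sum(neighbours.values())
        if app_total == 0:
            continue
        for b, score in neighbours.items():
            if b in candidates and b not in chosen:
                # Product of the two proportional scores (assumed formula).
                weight = (person_scores[a] / person_total) * (score / app_total)
                weights[b] = max(weights.get(b, 0.0), weight)
    extra = sorted(weights, key=lambda b: (-weights[b], b))
    return chosen + extra[: limit - len(chosen)]
```

For example, with `person_scores = {"A": 10, "B": 5}` and `app_scores = {"A": {"C": 3, "D": 1}, "B": {"D": 4}}`, application C gets weight (10/15) * (3/4) = 0.5 and D gets at most (5/15) * (4/4), about 0.33, so C is added before D.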

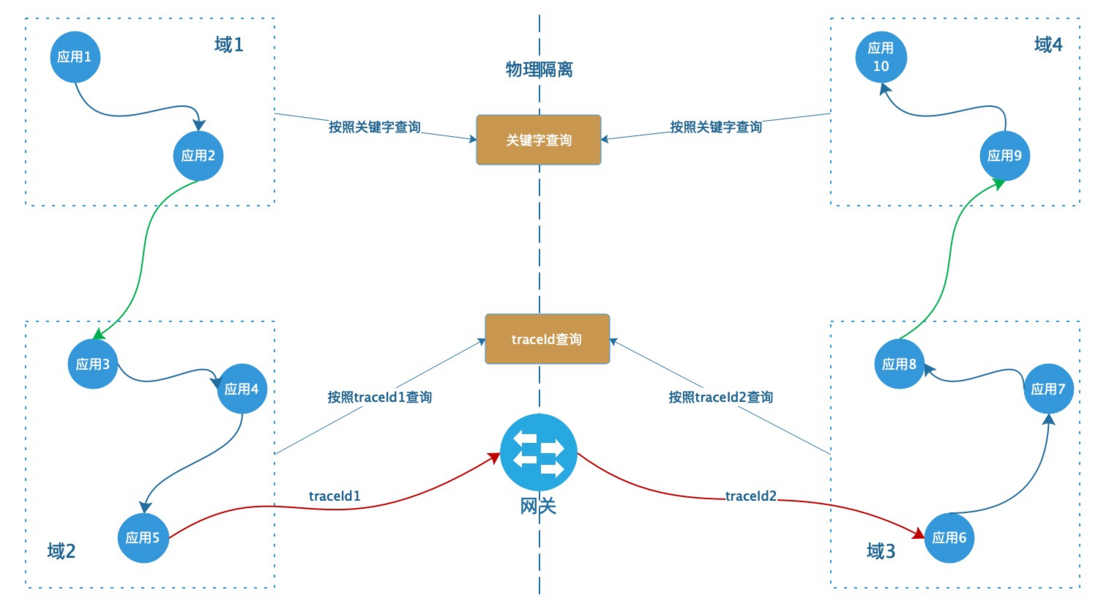

2.2.5 Cross-domain mapping

Cross-domain queries are a challenge that full-link troubleshooting must face. In terms of implementation, there are two cross-domain scenarios: through a gateway and without a gateway.

- Without a gateway, such as calls between different domains of the Tao system. This scenario is essentially no different from intra-domain search, so it is not covered here.

- Through a gateway, such as calls between the Tao system and the Ant system. Because each domain uses a different tracing solution, one traceId cannot connect the entire link. The rest of this section explains how to handle this scenario.

As shown in the figure above, the call chain covers domain 1, domain 2, domain 3 and domain 4. Domain 1 calls domain 2 and domain 3 calls domain 4 without going through a gateway, so the traceId does not change. When domain 2 calls domain 3, the call goes through a gateway and the traceId changes.

Queries fall into two types. 1. Keyword query, such as entering an order number. This is affected neither by the tracing solution nor by the gateway, so keywords can still be searched in each domain. 2. Query by traceId. This first requires obtaining the mapping from the gateway information, that is, traceId1->traceId2. The two traceIds are then used to search in their respective domains.
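The traceId case can be sketched as a small planning function. This is an illustration only. It assumes the gateway information has already been loaded into a table `gateway_map` of the form `{trace_id: (next_trace_id, domains_behind_the_gateway)}`, which is a hypothetical shape, as is the `plan_trace_queries` function.

```python
def plan_trace_queries(trace_id, domains, gateway_map):
    """Return (domain, trace_id) pairs covering the whole call chain.

    domains:     domains that share the starting trace_id
    gateway_map: {trace_id: (next_trace_id, next_domains)}, built from
                 gateway information (hypothetical structure)
    """
    plan = []
    seen = set()
    while trace_id not in seen:          # guard against cyclic mappings
        seen.add(trace_id)
        plan.extend((domain, trace_id) for domain in domains)
        if trace_id not in gateway_map:
            break
        trace_id, domains = gateway_map[trace_id]
    return plan


# Domains 1 and 2 share traceId1; the gateway maps it to traceId2,
# which is used by domains 3 and 4.
example = plan_trace_queries(
    "traceId1",
    ["domain1", "domain2"],
    {"traceId1": ("traceId2", ["domain3", "domain4"])},
)
```

Each pair in the plan is then an ordinary intra-domain search, so the logstore resolution and concurrency described earlier apply unchanged.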

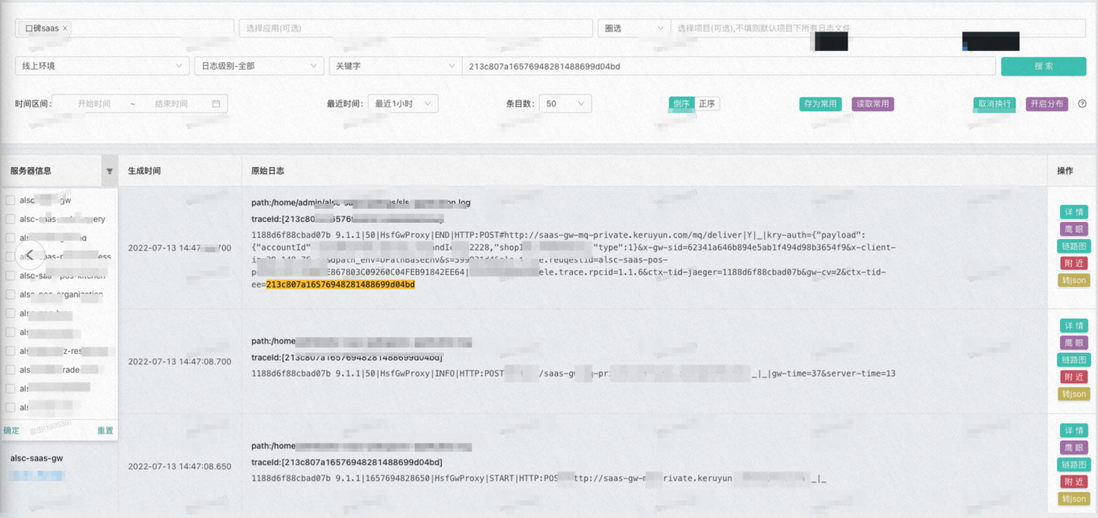

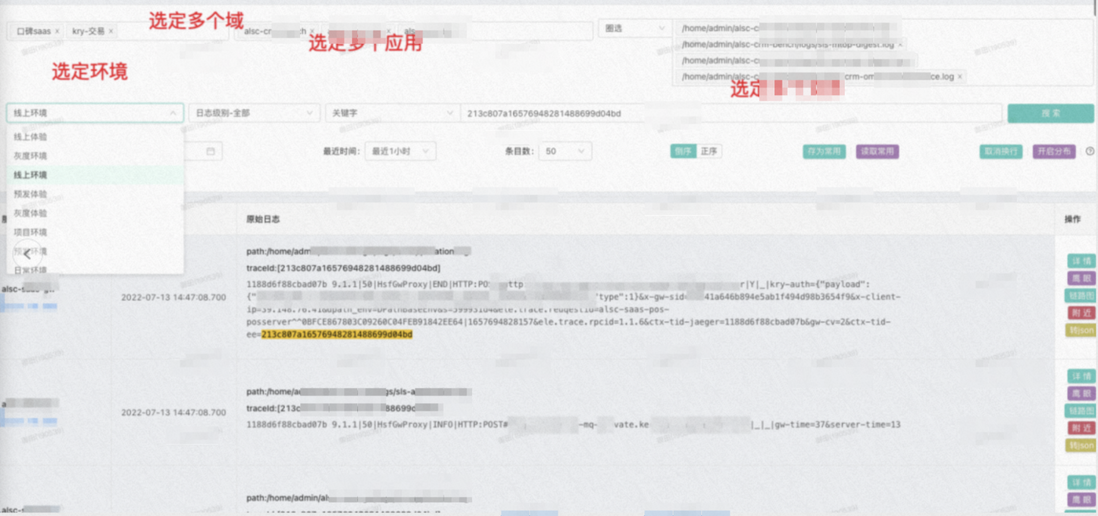

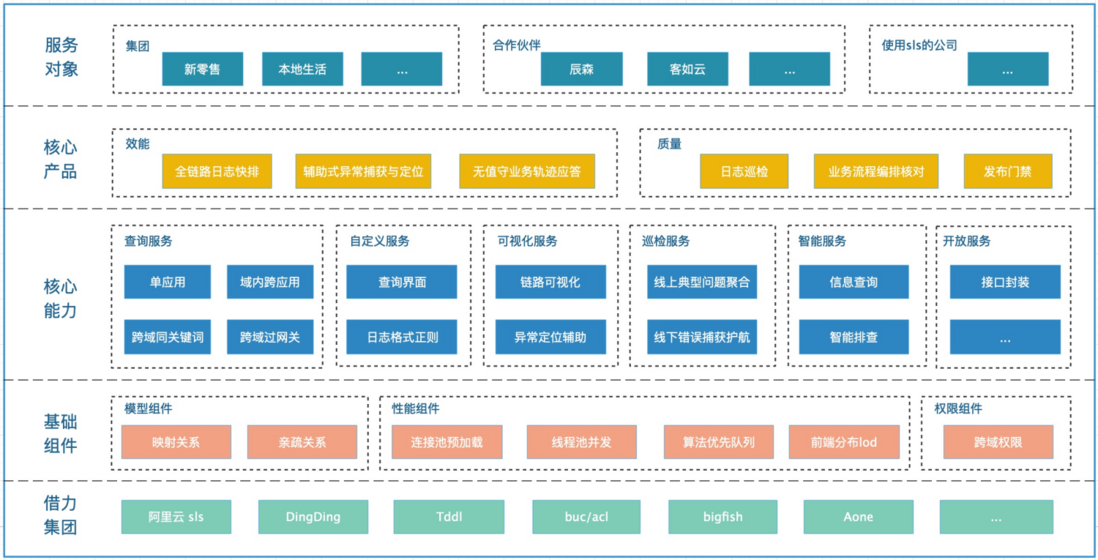

3. Existing Capabilities

By improving the original Feiyun log quick-troubleshooting feature and lowering the access cost, Xlog has completed the development and rollout of its main functions. Link: Xlog.

Cross-domain query operation:

- Multi-scenario coverage:

Based on analysis of user habits, Xlog currently supports single-application, intra-domain cross-application, and cross-domain queries, with search by file, log level, keyword, time and so on. It also remembers users' operating habits.

- Multi-mode support:

Any Alibaba Cloud SLS collection structure is supported as long as it can be decomposed into the three collection modes described above. For very special cases, contact Otian for customization.

- Zero-cost access:

Systems already connected to SLS do not need to change their SLS configuration; they only need to be configured in Xlog. Retention time, collection method and budget for SLS logs remain with each business team, which can adjust them to its own situation.

- Custom interface:

Different domains care about different key fields. For example, some use traceid, some use requestid, and games use messageid. For this, custom search boxes are supported, and key fields are highlighted when logs are displayed.

- Performance guarantee:

With the performance optimizations described above, the current figures are: 150 ms for a single-application query; 400 ms for 32 applications; and, with algorithm optimization, within 500 ms for more than 50 applications.

4. Ecological construction

This chapter records the log-related optimizations and extensions built on top of this system. Most of the ideas and strategies can be reused, and I hope they help readers with similar needs.

4.1 Cost optimization

After the Xlog system was built, reducing cost became the new challenge. With the following measures, cost was reduced by 80%. The main ones are listed here in the hope that they help others who are also using SLS.

- Uploading out-of-domain logs directly into the domain:

Alibaba Cloud gives internal accounts additional discounts compared with external accounts. If a department is deployed outside the domain, consider uploading its logs directly to an in-domain account, or applying to have its account converted into an in-domain account.

- Per-application optimization:

When logs are printed, cost is often not considered, and much is printed carelessly. We therefore set a threshold for each application according to its transaction volume, and optimize any application that exceeds it.

- Storage time optimization:

Optimizing storage time is the simplest and most direct measure. We reduced log retention to 1 day for offline environments (daily and pre-release) and to 3 to 7 days for online, and combined this with the archiving capability to optimize cost.

- Index optimization:

Index optimization is relatively complex, but it is also the most effective. Our analysis showed that most cost goes to indexing, storage and delivery, with indexing accounting for about 70%. Optimizing the index means reducing the proportion of each log that is indexed, for example by making only the first few bytes queryable and treating the rest as attached detail. Because our domain has a unified log format, ordinary logs in the domain keep only the traceid index, while summary logs keep a full index. Queries then work in two steps: first find the traceid through the summary log, then query the details by traceid.

4.2 Archiving Capability

Cost was a consideration throughout the design of the architecture, and shortening storage time was one of the ways we reduced it. But shorter storage time inevitably removes the ability to troubleshoot historical problems, so we also built an archiving capability.

Data delivery can be configured on an SLS logstore: https://help.aliyun.com/document\_detail/371924.html. This step takes the data in SLS and stores it in OSS. In plain terms, it saves the database table as files and drops the index. The data is encrypted during delivery. Currently, Xlog supports downloading archived logs from its interface and then searching them locally. In the future, OSS data can be re-imported into SLS as needed; see https://help.aliyun.com/document\_detail/147923.html .

4.3 Exception log scan

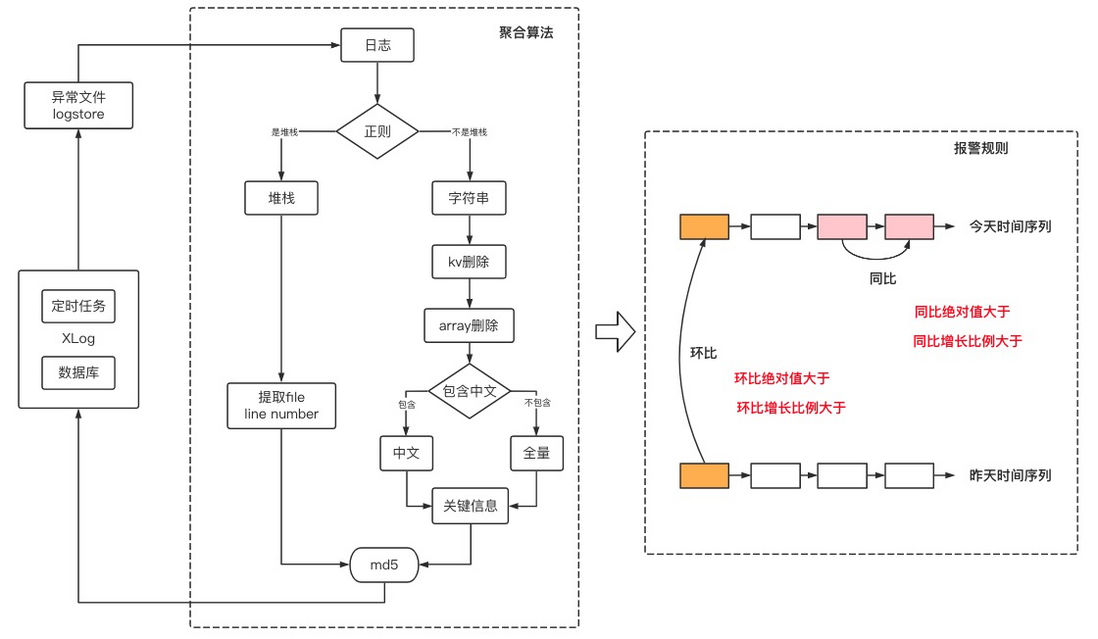

With the architecture above, we know exactly where the content of each log lives, and can precisely query the files that record error logs. An inspection therefore runs every 10 minutes and aggregates the exception logs of each application to obtain the number of exceptions in that period. Comparing with the previous period then shows whether there are new errors, sudden spikes in errors, and so on.

As shown in the figure above, after all exception logs are collected, an md5 is calculated for each according to a rule. Stack traces and plain exception logs use different algorithms, but the goal is the same: compute the md5 of the segment most likely to repeat, and then cluster on it. Once clustering is complete, the differences between periods can be compared to determine whether an error is new or has suddenly increased.
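The fingerprint-and-compare idea can be sketched as follows. The article does not specify the actual rules, so the two normalizations here are assumptions: exception class plus the top three frames for stack traces, and the first line with digits masked for plain error logs. The `surge_ratio` threshold and the function names are also hypothetical, and the input is assumed to be the exception text without the log prefix.

```python
import hashlib
import re
from collections import Counter


def fingerprint(log):
    """md5 of the part of an exception log that is most likely to repeat."""
    lines = log.strip().splitlines() or [""]
    frames = [line.strip() for line in lines if line.strip().startswith("at ")]
    if frames:
        # Stack trace: exception class plus the top frames (assumed rule).
        exception_class = lines[0].split(":", 1)[0].strip()
        key = exception_class + "|" + "|".join(frames[:3])
    else:
        # Plain error log: mask numbers such as order ids (assumed rule).
        key = re.sub(r"\d+", "#", lines[0])
    return hashlib.md5(key.encode("utf-8")).hexdigest()


def compare(previous_logs, current_logs, surge_ratio=2.0):
    """Return (new, surged) fingerprint sets for the current period."""
    previous = Counter(map(fingerprint, previous_logs))
    current = Counter(map(fingerprint, current_logs))
    new = {fp for fp in current if fp not in previous}
    surged = {fp for fp in current
              if fp in previous and current[fp] >= surge_ratio * previous[fp]}
    return new, surged
```

Running `compare` on the logs of two consecutive 10-minute windows gives the clusters that appeared for the first time and the ones whose count at least doubled.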

5. Planning

At present, the basic components and functions of Xlog are in place. As more applications and domains connect, the overall link will become more and more complete. Next, we plan to add full-link views, visual troubleshooting, intelligent troubleshooting and problem discovery.

- Exception location assistance: on the visualized link, applications with exceptions are marked in red, so that error logs can be found quickly.

- Online typical problem aggregation: log inspection.

- Offline error capture and escort: during business testing, errors in the logs are often not checked in real time. With escort mode enabled for an application, any error that occurs during offline execution is pushed as soon as it appears.

- Information query: integrate with DingTalk for quick queries. @ the robot in DingTalk with a keyword, and it returns the latest execution result.

- Intelligent troubleshooting: @ the DingTalk robot with a traceid, order number or similar, and it returns the abnormal applications detected and their exception stacks.

- Business process orchestration check: allow log flows to be orchestrated per application, so that the link logs strung together by a keyword must satisfy certain rules, and check against those rules. => Real-time requirements are low; only the process is verified.

- Release gate: no business exceptions in offline use case playback, and no business exceptions in online safe production => release allowed.

6. Use and co-build

Drawing on the collection structures, log formats, query methods and display requirements of many other teams, we have lowered the access cost and improved customizability. Teams that already meet the conditions can connect easily.

For special or customized needs, Xlog has reserved extension modules to make co-building easier.

As shown in the figure above, the green components can be reused, and only the structure and the cross-domain mapping need to be customized for your own domain, by implementing the interfaces defined with the strategy pattern.
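To make the extension point concrete, here is a minimal Python sketch of what strategy-pattern interfaces for the two customizable parts could look like. The class names, method signatures and the `register` helper are all hypothetical. Xlog's real interfaces are not shown in this article.

```python
from abc import ABC, abstractmethod


class StructureStrategy(ABC):
    """Maps a query (environment and applications) to one domain's logstores."""

    @abstractmethod
    def logstores(self, env, apps):
        ...


class CrossDomainMapping(ABC):
    """Translates a traceId when a call crosses a gateway into another domain."""

    @abstractmethod
    def map_trace_id(self, trace_id):
        ...


class PerEnvironmentStructure(StructureStrategy):
    """Example implementation: one logstore per environment."""

    def __init__(self, env_to_logstore):
        self.env_to_logstore = env_to_logstore

    def logstores(self, env, apps):
        return [self.env_to_logstore[env]]


class TableMapping(CrossDomainMapping):
    """Example implementation: traceId mapping looked up in a table."""

    def __init__(self, table):
        self.table = table

    def map_trace_id(self, trace_id):
        return self.table.get(trace_id)


# domain name -> (structure strategy, cross-domain mapping)
STRATEGIES = {}


def register(domain, structure, mapping):
    """Plug a domain's custom strategies into the shared, reusable components."""
    STRATEGIES[domain] = (structure, mapping)
```

A new domain would then only supply its own two classes and call `register`, while querying, concurrency and display stay shared.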

