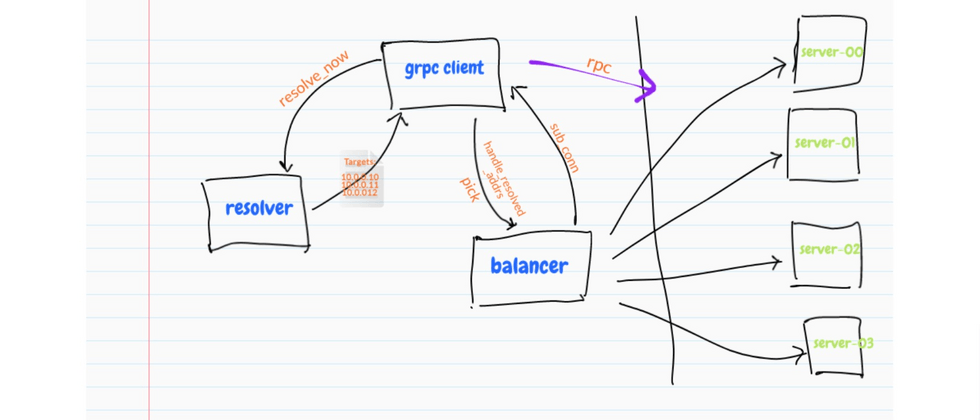

In the previous article, we covered the principles and source code of Resolver. This article continues with Balancer, which is closely tied to Resolver. The load balancing discussed here refers to load balancing inside the data center, that is, load balancing between RPC services.

Previous article: Service Discovery Principle Analysis and Source Code Interpretation

Based on go-zero v1.3.5 and grpc-go v1.47.0

Load Balancing

Each called service usually runs multiple instances, so the caller has to decide which instance of the called service a request should be sent to. This is the scenario that load balancing addresses.

The first key point of load balancing is fairness: the load balancer must distribute work fairly across the instances of the called service, so that no instance sits idle while others are overloaded.

The second key point of load balancing is correctness: for stateful services, the load balancer must take the state of the request into account and route it to a backend instance that can handle it.

Stateless Load Balancing

Stateless load balancing is the model we meet most often in daily work. The backend instances taking part in load balancing are stateless and all instances are equivalent peers: a request gets the same, correct result no matter which instance it is sent to, so a stateless load balancing strategy does not need to consider the state of the request. Two stateless load balancing algorithms are described below.

Round Robin

The round-robin strategy is very simple: it allocates requests to the instances in sequence, with no other processing. For example, it assigns the first request to the first instance, the next request to the second instance, and so on; after the last instance it goes back to the first one and continues in the same order. Round robin does not use any state information of the request when routing, so it is a stateless load balancing strategy and cannot be used as the load balancer for stateful instances, otherwise correctness problems will occur. In terms of fairness, because round robin simply distributes requests in order, it is suitable when requests differ little in workload and instances differ little in processing power.
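As a minimal sketch of the rotation described above, a round-robin selector only needs a cursor that wraps around. The `roundRobin` type and its fields are hypothetical names used for illustration; this is not code from go-zero or gRPC.

```go
package main

import "fmt"

// roundRobin hands out instances in a fixed rotation.
// It assumes a non-empty instance list and is not safe for concurrent use.
type roundRobin struct {
	instances []string
	next      int
}

// pick returns the current instance and advances the cursor,
// wrapping back to the first instance after the last one.
func (r *roundRobin) pick() string {
	inst := r.instances[r.next]
	r.next = (r.next + 1) % len(r.instances)
	return inst
}

func main() {
	rr := &roundRobin{instances: []string{"a", "b", "c"}}
	for i := 0; i < 4; i++ {
		fmt.Println(rr.pick()) // the fourth call wraps around to "a"
	}
}
```

Note that nothing about the request itself is consulted, which is why this strategy only suits stateless backends.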

Weighted Round Robin

The weighted round-robin strategy assigns a weight to each backend instance, and the number of requests allocated to an instance is proportional to its weight. For example, suppose there are two instances A and B, with the weight of A set to 20 and the weight of B set to 80. The load balancer then allocates 20% of the requests to A and 80% of the requests to B. Weighted round robin does not use any state information of the request when routing, so it is a stateless load balancing strategy and cannot be used as the load balancer for stateful instances, otherwise correctness problems will occur. In terms of fairness, because requests are allocated according to the weight ratio of the instances, it can compensate for differences in processing capacity between instances, so its fairness is better than that of plain round robin.
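The A/B example above can be sketched as follows. This uses the "smooth" weighted round-robin variant, chosen here only for illustration; the article does not say which variant it has in mind. The `weighted` type and `pickWeighted` function are hypothetical names.

```go
package main

import "fmt"

// weighted is a hypothetical backend with a static weight.
// current is the running score used by smooth weighted round robin.
type weighted struct {
	name    string
	weight  int
	current int
}

// pickWeighted raises every score by its weight, picks the highest score,
// then lowers the winner by the total weight. Over a full cycle each
// instance is picked in proportion to its weight.
// It assumes a non-empty slice with positive weights.
func pickWeighted(ws []*weighted) *weighted {
	total := 0
	var best *weighted
	for _, w := range ws {
		w.current += w.weight
		total += w.weight
		if best == nil || w.current > best.current {
			best = w
		}
	}
	best.current -= total
	return best
}

func main() {
	ws := []*weighted{{name: "A", weight: 20}, {name: "B", weight: 80}}
	counts := map[string]int{}
	for i := 0; i < 100; i++ {
		counts[pickWeighted(ws).name]++
	}
	fmt.Println(counts["A"], counts["B"]) // 20 and 80 picks out of 100
}
```

As with plain round robin, the request is never inspected; only the static weights drive the choice.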

Stateful Load Balancing

Stateful load balancing means that the load balancing strategy keeps some state about the servers and then selects an instance by applying an algorithm to that state.

P2C+EWMA

go-zero uses the P2C (Power of Two Choices) load balancing algorithm by default. The principle is simple: randomly select two nodes from all available nodes, calculate the load of both, and let the node with the lower load serve the request. To avoid the imbalance caused by a node never being selected, a node that has not been picked for longer than a certain period is forcibly selected once.
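The core choice can be sketched like this. It is a simplified illustration, not go-zero's implementation: the `node` and `p2c` types are hypothetical, the load is a fixed number rather than a measured value, and the forced selection of long-unpicked nodes is left out.

```go
package main

import (
	"fmt"
	"math/rand"
)

// node is a hypothetical backend with a precomputed load value.
type node struct {
	name string
	load int64
}

// p2c holds the candidate nodes and a random source.
// It is not safe for concurrent use; a real picker would need a lock.
type p2c struct {
	r     *rand.Rand
	nodes []*node
}

func newP2C(seed int64, nodes []*node) *p2c {
	return &p2c{r: rand.New(rand.NewSource(seed)), nodes: nodes}
}

// pick samples two distinct nodes at random and returns the less loaded one.
func (p *p2c) pick() *node {
	switch len(p.nodes) {
	case 0:
		return nil
	case 1:
		return p.nodes[0]
	}
	a := p.r.Intn(len(p.nodes))
	b := p.r.Intn(len(p.nodes) - 1)
	if b >= a {
		b++ // shift past a so the two candidates always differ
	}
	if p.nodes[b].load < p.nodes[a].load {
		return p.nodes[b]
	}
	return p.nodes[a]
}

func main() {
	p := newP2C(1, []*node{{"light", 1}, {"medium", 50}, {"heavy", 100}})
	counts := map[string]int{}
	for i := 0; i < 1000; i++ {
		counts[p.pick().name]++
	}
	// "heavy" never appears: it loses to whichever node it is paired with.
	fmt.Println(counts)
}
```

With static loads the most loaded node is never chosen, which illustrates why the real algorithm needs both a live load measurement and the forced-selection rule.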

This load balancing algorithm uses EWMA (exponentially weighted moving average), which represents the average over a recent period of time. Compared with the arithmetic mean, it is less sensitive to sudden network jitter: a brief spike is dampened rather than fully reflected in the recorded request latency, which keeps the balancing more stable.

go-zero/zrpc/internal/balancer/p2c/p2c.go:133

atomic.StoreUint64(&c.lag, uint64(float64(olag)*w+float64(lag)*(1-w)))

go-zero/zrpc/internal/balancer/p2c/p2c.go:139

atomic.StoreUint64(&c.success, uint64(float64(osucc)*w+float64(success)*(1-w)))

The coefficient w is a time decay value: the larger the interval between two requests, the smaller the coefficient w.
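To make the effect of the decay coefficient concrete, here is a small standalone sketch of the same update rule. The `ewma` helper is a hypothetical name, and the 10-second decay constant and sample values are assumed for illustration.

```go
package main

import (
	"fmt"
	"math"
	"time"
)

// decay is an assumed decay window for this sketch.
const decay = 10 * time.Second

// ewma folds a new sample into the previous average.
// w = exp(-td/decayTime): the longer the gap td since the last update,
// the smaller w becomes and the less the old value counts.
func ewma(old, sample float64, td, decayTime time.Duration) float64 {
	w := math.Exp(float64(-td) / float64(decayTime))
	return old*w + sample*(1-w)
}

func main() {
	// Old average 100, new sample 200, with increasing gaps between requests.
	for _, td := range []time.Duration{0, time.Second, 10 * time.Second} {
		fmt.Printf("td=%v avg=%.1f\n", td, ewma(100, 200, td, decay))
	}
}
```

With no gap the old average is kept unchanged, and as the gap grows the result moves toward the new sample.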

go-zero/zrpc/internal/balancer/p2c/p2c.go:124

w := math.Exp(float64(-td) / float64(decayTime))

The load of a node is computed from the connection's request latency lag and its current number of in-flight requests inflight: the code multiplies the square root of lag by inflight, adding one to each to avoid multiplying by zero. The higher the request latency or the more requests currently being processed, the higher the load of the node.

go-zero/zrpc/internal/balancer/p2c/p2c.go:199

func (c *subConn) load() int64 {

// plus one to avoid multiply zero

lag := int64(math.Sqrt(float64(atomic.LoadUint64(&c.lag) + 1)))

load := lag * (atomic.LoadInt64(&c.inflight) + 1)

if load == 0 {

return penalty

}

return load

}

Source code analysis

The code below comes from both go-zero and gRPC; the file path given before each snippet shows which project it belongs to.

In gRPC, a Balancer can be customized just like a Resolver, and it is likewise registered through a Register method.

grpc-go/balancer/balancer.go:53

func Register(b Builder) {

m[strings.ToLower(b.Name())] = b

}

The parameter Builder of Register is an interface. In the Builder interface, the first parameter of the Build method, ClientConn, is also an interface, and the return value of the Build method, Balancer, is an interface as well. They are defined as follows:

It can be seen that if you want to implement a custom Balancer, you must implement the balancer.Builder interface.

Now that we understand how gRPC registers a Balancer, let's look at where go-zero registers its Balancer:

go-zero/zrpc/internal/balancer/p2c/p2c.go:36

func init() {

balancer.Register(newBuilder())

}

go-zero does not implement the balancer.Builder interface itself. Instead, it registers base.baseBuilder, which is provided by gRPC and implements balancer.Builder. The baseBuilder is created by calling base.NewBalancerBuilder, which takes a PickerBuilder parameter. PickerBuilder is an interface, implemented in go-zero by p2c.p2cPickerBuilder.

The Build method of the PickerBuilder interface returns a balancer.Picker, which is also an interface; p2c.p2cPicker implements it.

grpc-go/balancer/base/base.go:65

func NewBalancerBuilder(name string, pb PickerBuilder, config Config) balancer.Builder {

return &baseBuilder{

name: name,

pickerBuilder: pb,

config: config,

}

}

The relationship between the structures is shown in the following figure. The packages corresponding to each module are:

- balancer: grpc-go/balancer

- base: grpc-go/balancer/base

- p2c: go-zero/zrpc/internal/balancer/p2c
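The relationships described above can be modeled with a few toy types. This is a deliberately simplified sketch: every type and method here is a hypothetical stand-in with reduced signatures, and the real gRPC interfaces carry more methods and parameters.

```go
package main

import "fmt"

// picker chooses one ready connection per call (stands in for balancer.Picker).
type picker interface{ pick() string }

// pickerBuilder creates a picker from the ready connections
// (stands in for base.PickerBuilder).
type pickerBuilder interface{ build(ready []string) picker }

// firstPicker is a trivial picker used only in this sketch; in go-zero this
// role is played by p2c.p2cPicker. It assumes a non-empty ready list.
type firstPicker struct{ ready []string }

func (p firstPicker) pick() string { return p.ready[0] }

// firstPickerBuilder plays the role of p2c.p2cPickerBuilder.
type firstPickerBuilder struct{}

func (firstPickerBuilder) build(ready []string) picker { return firstPicker{ready: ready} }

// toyBuilder mirrors the role of base.baseBuilder: it stores a name and a
// pickerBuilder and hands the pickerBuilder to the balancer it builds.
type toyBuilder struct {
	name string
	pb   pickerBuilder
}

// toyBalancer mirrors the role of base.baseBalancer: it tracks connections
// and delegates the choice of connection to the current picker.
type toyBalancer struct {
	pb     pickerBuilder
	picker picker
}

func newToyBuilder(name string, pb pickerBuilder) *toyBuilder {
	return &toyBuilder{name: name, pb: pb}
}

func (b *toyBuilder) Name() string        { return b.name }
func (b *toyBuilder) Build() *toyBalancer { return &toyBalancer{pb: b.pb} }

// onReady rebuilds the picker whenever the set of ready connections changes.
func (b *toyBalancer) onReady(ready []string) { b.picker = b.pb.build(ready) }

func main() {
	builder := newToyBuilder("p2c_ewma", firstPickerBuilder{})
	bal := builder.Build()
	bal.onReady([]string{"10.0.0.1:8080", "10.0.0.2:8080"})
	fmt.Println(builder.Name(), bal.picker.pick())
}
```

The point of the layering is that go-zero only has to supply the picker side; the builder and balancer bookkeeping come from gRPC's base package.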

Where is the registered Balancer obtained?

The steps above show how to customize a Balancer and how to register it. Something that is registered must also be looked up somewhere, so next let's see where the registered Balancer is obtained.

We know that the name of the Resolver is parsed from target, the second parameter of DialContext, and the corresponding Resolver is then obtained by that name. A Balancer is also obtained by name. Its name is passed in through a configuration item when the gRPC client is created. Here p2c.Name is p2c_ewma, the name specified when the Balancer was registered:

go-zero/zrpc/internal/client.go:50

func NewClient(target string, opts ...ClientOption) (Client, error) {

var cli client

svcCfg := fmt.Sprintf(`{"loadBalancingPolicy":"%s"}`, p2c.Name)

balancerOpt := WithDialOption(grpc.WithDefaultServiceConfig(svcCfg))

opts = append([]ClientOption{balancerOpt}, opts...)

if err := cli.dial(target, opts...); err != nil {

return nil, err

}

return &cli, nil

}

In the previous article, we saw that creating the gRPC client triggers the Build method of the custom Resolver. Inside Build, after the list of service addresses is obtained, the state is updated through cc.UpdateState; later, whenever a change in service state is detected, cc.UpdateState is called again. Here cc is the ccResolverWrapper object. If you have forgotten this part, you can revisit the Resolver article first, which will make this one easier to follow:

go-zero/zrpc/resolver/internal/kubebuilder.go:51

if err := cc.UpdateState(resolver.State{

Addresses: addrs,

}); err != nil {

logx.Error(err)

}

There are several important objects here:

- ClientConn: grpc-go/clientconn.go:464

- ccResolverWrapper: grpc-go/resolver_conn_wrapper.go:36

- ccBalancerWrapper: grpc-go/balancer_conn_wrappers.go:48

- Balancer: grpc-go/internal/balancer/gracefulswitch/gracefulswitch.go:46

- balancerWrapper: grpc-go/internal/balancer/gracefulswitch/gracefulswitch.go:247

After a change in service state is detected (at first start, or through Watch), ccResolverWrapper.UpdateState is called to trigger the state update. The call chain between the modules is as follows:

The action of getting the Balancer is triggered in the ccBalancerWrapper.handleSwitchTo method, the code is as follows:

grpc-go/balancer_conn_wrappers.go:266

builder := balancer.Get(name)

if builder == nil {

channelz.Warningf(logger, ccb.cc.channelzID, "Channel switches to new LB policy %q, since the specified LB policy %q was not registered", PickFirstBalancerName, name)

builder = newPickfirstBuilder()

} else {

channelz.Infof(logger, ccb.cc.channelzID, "Channel switches to new LB policy %q", name)

}

if err := ccb.balancer.SwitchTo(builder); err != nil {

channelz.Errorf(logger, ccb.cc.channelzID, "Channel failed to build new LB policy %q: %v", name, err)

return

}

ccb.curBalancerName = builder.Name()

Then, in the Balancer.SwitchTo method, the Build method of the custom Balancer is called:

grpc-go/internal/balancer/gracefulswitch/gracefulswitch.go:121

newBalancer := builder.Build(bw, gsb.bOpts)

As mentioned above, the first parameter of the Build method is the interface balancer.ClientConn, and a balancerWrapper is passed in here, so gracefulswitch.balancerWrapper implements this interface:

At this point we know where the custom Balancer is obtained and where the Build method of balancer.Builder is called.
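The register-then-look-up-by-name flow, including the fallback taken when a name is not registered, can be modeled with a plain map. This is a simplified sketch with hypothetical types (`builder`, `namedBuilder`, `register`, `lookup`); it is not the gRPC registry itself, only an imitation of the behavior shown in the snippets above.

```go
package main

import (
	"fmt"
	"strings"
)

// builder is a reduced stand-in for balancer.Builder: only the name matters here.
type builder interface{ Name() string }

// namedBuilder is a hypothetical builder that carries nothing but its name.
type namedBuilder string

func (n namedBuilder) Name() string { return string(n) }

// registry maps lower-cased names to builders, in the style of Register above.
var registry = map[string]builder{}

func register(b builder) { registry[strings.ToLower(b.Name())] = b }

// lookup returns the builder registered under name, or the fallback when
// nothing is registered under that name.
func lookup(name string, fallback builder) builder {
	if b, ok := registry[strings.ToLower(name)]; ok {
		return b
	}
	return fallback
}

func main() {
	register(namedBuilder("p2c_ewma"))
	pickFirst := namedBuilder("pick_first")

	fmt.Println(lookup("p2c_ewma", pickFirst).Name())   // the registered builder
	fmt.Println(lookup("not_there", pickFirst).Name())  // falls back to pick_first
}
```

This also shows why the name in the service config must match the registered name: a mismatch does not fail, it silently selects the fallback policy.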

It can be seen from the above that the balancer.Builder here is baseBuilder, so the Build method called is the Build method of baseBuilder. The definition of the Build method is as follows:

grpc-go/balancer/base/balancer.go:39

func (bb *baseBuilder) Build(cc balancer.ClientConn, opt balancer.BuildOptions) balancer.Balancer {

bal := &baseBalancer{

cc: cc,

pickerBuilder: bb.pickerBuilder,

subConns: resolver.NewAddressMap(),

scStates: make(map[balancer.SubConn]connectivity.State),

csEvltr: &balancer.ConnectivityStateEvaluator{},

config: bb.config,

}

bal.picker = NewErrPicker(balancer.ErrNoSubConnAvailable)

return bal

}

The Build method returns a baseBalancer, which tells us that baseBalancer implements the balancer.Balancer interface:

Let's review the process so far. It mainly does the following:

- Listen for service state changes in a custom resolver

- Update the state with UpdateState

- Get a custom Balancer

- Execute the Build method of the custom Balancer to obtain the Balancer

How is a connection created?

Back in the updateResolverState method of ClientConn, the balancerWrapper.updateClientConnState method is called at the end to update the connection state of the client:

grpc-go/clientconn.go:664

uccsErr := bw.updateClientConnState(&balancer.ClientConnState{ResolverState: s, BalancerConfig: balCfg})

if ret == nil {

ret = uccsErr // prefer ErrBadResolver state since any other error is

// currently meaningless to the caller.

}

The subsequent call chain is shown in the following figure:

Eventually the baseBalancer.UpdateClientConnState method will be called:

grpc-go/balancer/base/balancer.go:94

func (b *baseBalancer) UpdateClientConnState(s balancer.ClientConnState) error {

// .............

b.resolverErr = nil

addrsSet := resolver.NewAddressMap()

for _, a := range s.ResolverState.Addresses {

addrsSet.Set(a, nil)

if _, ok := b.subConns.Get(a); !ok {

sc, err := b.cc.NewSubConn([]resolver.Address{a}, balancer.NewSubConnOptions{HealthCheckEnabled: b.config.HealthCheck})

if err != nil {

logger.Warningf("base.baseBalancer: failed to create new SubConn: %v", err)

continue

}

b.subConns.Set(a, sc)

b.scStates[sc] = connectivity.Idle

b.csEvltr.RecordTransition(connectivity.Shutdown, connectivity.Idle)

sc.Connect()

}

}

for _, a := range b.subConns.Keys() {

sci, _ := b.subConns.Get(a)

sc := sci.(balancer.SubConn)

if _, ok := addrsSet.Get(a); !ok {

b.cc.RemoveSubConn(sc)

b.subConns.Delete(a)

}

}

// ................

}

When UpdateClientConnState is triggered for the first time, ok is false in the following code:

_, ok := b.subConns.Get(a)

So a new connection is created:

sc, err := b.cc.NewSubConn([]resolver.Address{a}, balancer.NewSubConnOptions{HealthCheckEnabled: b.config.HealthCheck})

Here b.cc is the balancerWrapper (scroll up for a refresher if needed), so balancerWrapper.NewSubConn is called to create the connection.
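The address bookkeeping in UpdateClientConnState boils down to a set difference: create connections for addresses that are new, and remove connections whose addresses disappeared. A minimal model of that logic follows, using plain strings for addresses; the `reconcile` function is a hypothetical helper, not part of gRPC.

```go
package main

import (
	"fmt"
	"sort"
)

// reconcile compares the tracked addresses with the latest resolver result.
// It returns the addresses that need a new connection and the addresses
// whose connection should be removed, and updates tracked in place.
func reconcile(tracked map[string]bool, latest []string) (added, removed []string) {
	seen := make(map[string]bool, len(latest))
	for _, a := range latest {
		seen[a] = true
		if !tracked[a] {
			added = append(added, a) // first time we see this address
			tracked[a] = true
		}
	}
	for a := range tracked {
		if !seen[a] {
			removed = append(removed, a) // address no longer reported
			delete(tracked, a)           // deleting while ranging is allowed in Go
		}
	}
	sort.Strings(removed) // map iteration order is random; sort for stable output
	return added, removed
}

func main() {
	tracked := map[string]bool{}
	added, removed := reconcile(tracked, []string{"10.0.0.1:8080", "10.0.0.2:8080"})
	fmt.Println(added, removed) // first update: everything is new

	added, removed = reconcile(tracked, []string{"10.0.0.2:8080", "10.0.0.3:8080"})
	fmt.Println(added, removed) // one address added, one removed
}
```

On the first update the tracked set is empty, so every address is treated as new, which matches the ok == false case discussed above.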

grpc-go/internal/balancer/gracefulswitch/gracefulswitch.go:328

func (bw *balancerWrapper) NewSubConn(addrs []resolver.Address, opts balancer.NewSubConnOptions) (balancer.SubConn, error) {

// .............

sc, err := bw.gsb.cc.NewSubConn(addrs, opts)

if err != nil {

return nil, err

}

// .............

bw.subconns[sc] = true

// .............

}

bw.gsb.cc is the ccBalancerWrapper, so ccBalancerWrapper.NewSubConn is called here to create the connection:

grpc-go/balancer_conn_wrappers.go:299

func (ccb *ccBalancerWrapper) NewSubConn(addrs []resolver.Address, opts balancer.NewSubConnOptions) (balancer.SubConn, error) {

if len(addrs) <= 0 {

return nil, fmt.Errorf("grpc: cannot create SubConn with empty address list")

}

ac, err := ccb.cc.newAddrConn(addrs, opts)

if err != nil {

channelz.Warningf(logger, ccb.cc.channelzID, "acBalancerWrapper: NewSubConn: failed to newAddrConn: %v", err)

return nil, err

}

acbw := &acBalancerWrapper{ac: ac}

acbw.ac.mu.Lock()

ac.acbw = acbw

acbw.ac.mu.Unlock()

return acbw, nil

}

What is finally returned is an acBalancerWrapper object; acBalancerWrapper implements the balancer.SubConn interface:

The call flow chart is as follows:

A newly created connection starts in the connectivity.Idle state:

grpc-go/clientconn.go:699

func (cc *ClientConn) newAddrConn(addrs []resolver.Address, opts balancer.NewSubConnOptions) (*addrConn, error) {

ac := &addrConn{

state: connectivity.Idle,

cc: cc,

addrs: addrs,

scopts: opts,

dopts: cc.dopts,

czData: new(channelzData),

resetBackoff: make(chan struct{}),

}

// ...........

}

gRPC defines five connection states:

const (

// Idle indicates the ClientConn is idle.

Idle State = iota

// Connecting indicates the ClientConn is connecting.

Connecting

// Ready indicates the ClientConn is ready for work.

Ready

// TransientFailure indicates the ClientConn has seen a failure but expects to recover.

TransientFailure

// Shutdown indicates the ClientConn has started shutting down.

Shutdown

)

The created connection is saved in b.scStates of baseBalancer, also with the initial state connectivity.Idle, and the connection is then established through sc.Connect():

grpc-go/balancer/base/balancer.go:112

b.subConns.Set(a, sc)

b.scStates[sc] = connectivity.Idle

b.csEvltr.RecordTransition(connectivity.Shutdown, connectivity.Idle)

sc.Connect()

Here sc.Connect calls the Connect method of acBalancerWrapper. As you can see, the connection is created asynchronously:

grpc-go/balancer_conn_wrappers.go:406

func (acbw *acBalancerWrapper) Connect() {

acbw.mu.Lock()

defer acbw.mu.Unlock()

go acbw.ac.connect()

}

Finally, the addrConn.connect method is called:

grpc-go/clientconn.go:786

func (ac *addrConn) connect() error {

ac.mu.Lock()

if ac.state == connectivity.Shutdown {

ac.mu.Unlock()

return errConnClosing

}

if ac.state != connectivity.Idle {

ac.mu.Unlock()

return nil

}

ac.updateConnectivityState(connectivity.Connecting, nil)

ac.mu.Unlock()

ac.resetTransport()

return nil

}

The call chain starting from connect is as follows:

At the end of the UpdateSubConnState method of baseBalancer, update the Picker to a custom Picker:

grpc-go/balancer/base/balancer.go:221

b.cc.UpdateState(balancer.State{ConnectivityState: b.state, Picker: b.picker})

At the end of the addrConn.connect method, ac.resetTransport() is called to actually create the connection:

Once the connection has been created and is in the Ready state, the baseBalancer.UpdateSubConnState method is eventually called. At that point s == connectivity.Ready is true and oldS == connectivity.Ready is false, so b.regeneratePicker() is called:

if (s == connectivity.Ready) != (oldS == connectivity.Ready) ||

b.state == connectivity.TransientFailure {

b.regeneratePicker()

}

func (b *baseBalancer) regeneratePicker() {

if b.state == connectivity.TransientFailure {

b.picker = NewErrPicker(b.mergeErrors())

return

}

readySCs := make(map[balancer.SubConn]SubConnInfo)

// Filter out all ready SCs from full subConn map.

for _, addr := range b.subConns.Keys() {

sci, _ := b.subConns.Get(addr)

sc := sci.(balancer.SubConn)

if st, ok := b.scStates[sc]; ok && st == connectivity.Ready {

readySCs[sc] = SubConnInfo{Address: addr}

}

}

b.picker = b.pickerBuilder.Build(PickerBuildInfo{ReadySCs: readySCs})

}

regeneratePicker collects the connections that are in the connectivity.Ready state and rebuilds the picker from them. Remember b.pickerBuilder? b.pickerBuilder is go-zero's custom implementation of the base.PickerBuilder interface.
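The two ideas in this step, deciding when to regenerate and filtering for ready connections, can be modeled in isolation. The sketch below uses hypothetical names (`state`, `needsRegenerate`, `readyAddrs`) and plain strings for connections; it also leaves out the extra TransientFailure condition from the original code.

```go
package main

import (
	"fmt"
	"sort"
)

// state is a local stand-in for connectivity.State.
type state int

const (
	idle state = iota
	connecting
	ready
	transientFailure
	shutdown
)

// needsRegenerate reports whether a transition from oldS to newS changes the
// set of ready connections, that is, the connection entered or left ready.
func needsRegenerate(oldS, newS state) bool {
	return (newS == ready) != (oldS == ready)
}

// readyAddrs filters the tracked connections down to those in the ready
// state, which is the input a picker builder receives.
func readyAddrs(states map[string]state) []string {
	var out []string
	for addr, s := range states {
		if s == ready {
			out = append(out, addr)
		}
	}
	sort.Strings(out) // map iteration order is random; sort for stable output
	return out
}

func main() {
	fmt.Println(needsRegenerate(connecting, ready)) // entering ready
	fmt.Println(needsRegenerate(idle, connecting))  // ready set unchanged

	states := map[string]state{
		"10.0.0.1:8080": ready,
		"10.0.0.2:8080": connecting,
		"10.0.0.3:8080": ready,
	}
	fmt.Println(readyAddrs(states))
}
```

Comparing the two booleans with != is a compact way of saying "exactly one of the old and new states is ready", so transitions between two non-ready states do not rebuild the picker.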

go-zero/zrpc/internal/balancer/p2c/p2c.go:42

func (b *p2cPickerBuilder) Build(info base.PickerBuildInfo) balancer.Picker {

readySCs := info.ReadySCs

if len(readySCs) == 0 {

return base.NewErrPicker(balancer.ErrNoSubConnAvailable)

}

var conns []*subConn

for conn, connInfo := range readySCs {

conns = append(conns, &subConn{

addr: connInfo.Address,

conn: conn,

success: initSuccess,

})

}

return &p2cPicker{

conns: conns,

r: rand.New(rand.NewSource(time.Now().UnixNano())),

stamp: syncx.NewAtomicDuration(),

}

}

Finally, the custom Picker is assigned to the ClientConn.blockingpicker.picker property.

grpc-go/balancer_conn_wrappers.go:347

func (ccb *ccBalancerWrapper) UpdateState(s balancer.State) {

ccb.cc.blockingpicker.updatePicker(s.Picker)

ccb.cc.csMgr.updateState(s.ConnectivityState)

}

How is a created connection selected?

Now we know how a connection is created and that connections are tracked in baseBalancer.scStates, which is updated whenever the state of a connection changes. Next, let's look at how gRPC selects a connection for sending a request.

When the gRPC client initiates a call, the Invoke method of ClientConn is called. You usually do not call this method yourself; the call is typically in generated code:

grpc-go/examples/helloworld/helloworld/helloworld_grpc.pb.go:39

func (c *greeterClient) SayHello(ctx context.Context, in *HelloRequest, opts ...grpc.CallOption) (*HelloReply, error) {

out := new(HelloReply)

err := c.cc.Invoke(ctx, "/helloworld.Greeter/SayHello", in, out, opts...)

if err != nil {

return nil, err

}

return out, nil

}

The following is the call chain for issuing a request. Eventually the p2cPicker.Pick method is called to obtain a connection; a custom load balancing algorithm is generally implemented in the Pick method. Once the connection is obtained, the request is sent through sendMsg.

grpc-go/stream.go:945

func (a *csAttempt) sendMsg(m interface{}, hdr, payld, data []byte) error {

cs := a.cs

if a.trInfo != nil {

a.mu.Lock()

if a.trInfo.tr != nil {

a.trInfo.tr.LazyLog(&payload{sent: true, msg: m}, true)

}

a.mu.Unlock()

}

if err := a.t.Write(a.s, hdr, payld, &transport.Options{Last: !cs.desc.ClientStreams}); err != nil {

if !cs.desc.ClientStreams {

return nil

}

return io.EOF

}

if a.statsHandler != nil {

a.statsHandler.HandleRPC(a.ctx, outPayload(true, m, data, payld, time.Now()))

}

if channelz.IsOn() {

a.t.IncrMsgSent()

}

return nil

}

This concludes the source code analysis. Because space is limited, it cannot cover everything, so this article only walks through the main paths in the source code.

Concluding Remarks

The Balancer source code is fairly complex, and the author had to read it several times to get the full picture. If it still feels unclear after one or two readings, don't worry: going through the article and the code a few more times will help it fall into place.

If you have any questions, feel free to contact me to discuss them; you can find me by searching for dawn_zhou in the community group.

I hope this article helps you. Your likes are the author's biggest motivation to keep writing.

Project Address

https://github.com/zeromicro/go-zero

You are welcome to use go-zero and star the project to support us!

WeChat Discussion Group

Follow the official account "Microservice Practice" and click on the discussion group entry to get the QR code of the community group.
