"K8S Ecological Weekly" mainly covers noteworthy news from the K8S ecosystem that I came across during the week. You are welcome to subscribe to the "k8s ecology" column on Zhihu.

Hello everyone, my name is Zhang Jintao.

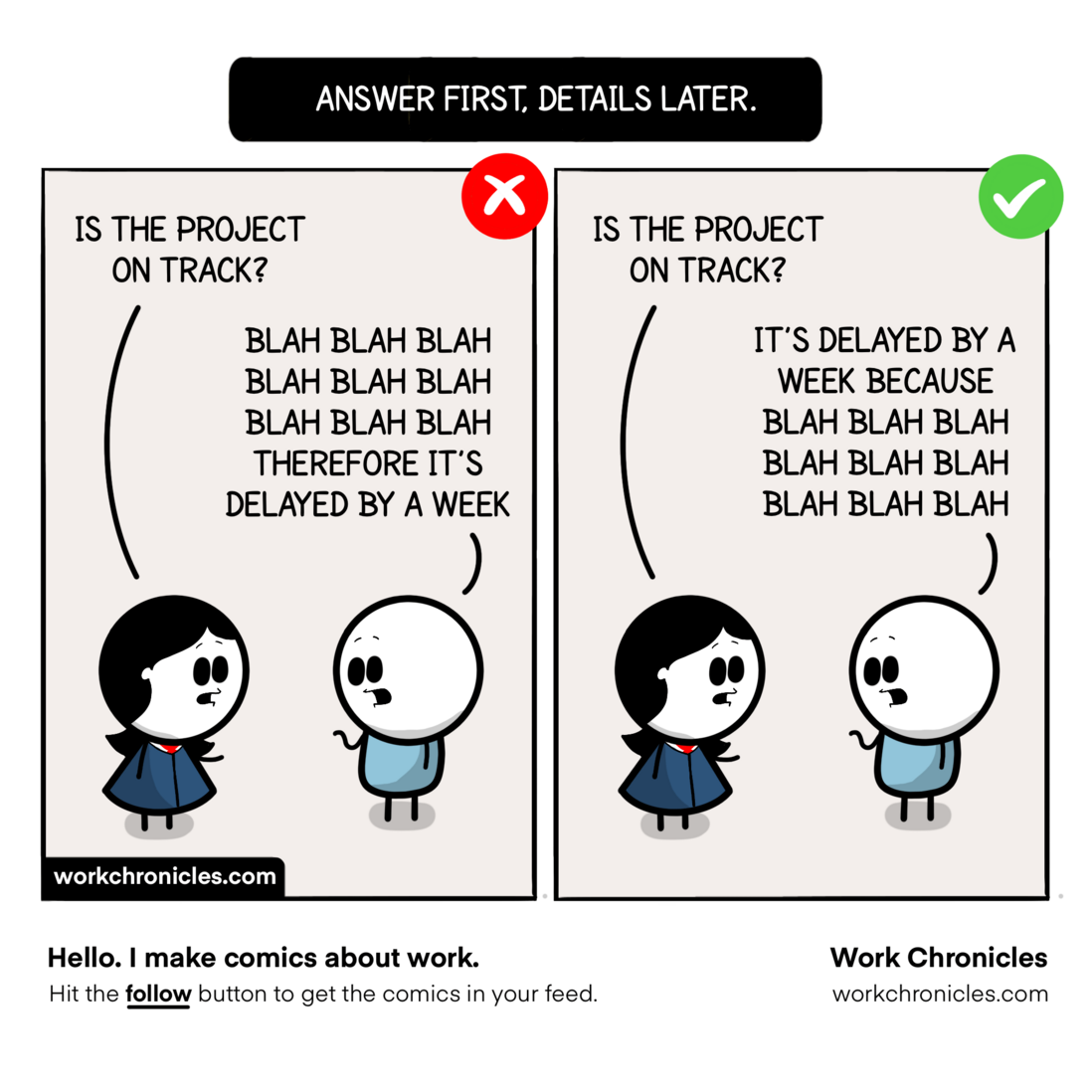

This was another busy week. One lesson I'd like to share: when answering a question, give the answer first instead of starting with the details. That is also why I usually open an article by stating what it mainly covers; it saves everyone time.

In my spare time this week I mostly followed upstream progress and had no time to tinker with anything else.

Kubernetes v1.25.0-rc.0 was released this week, and barring surprises the final release should ship later this month.

Here are some of the more noteworthy items I followed this week:

Upstream progress

- Add support for user namespaces phase 1 (KEP 127) by rata · Pull Request #111090 · kubernetes/kubernetes

This PR implements the first phase of KEP 127, which aims to add user namespace support to Pods.

If you are not familiar with user namespaces, see my earlier two-part series: "Understanding the Cornerstone of Container Technology: Namespace (Part 1)" and "Understanding the Cornerstone of Container Technology: Namespace (Part 2)".

Supporting user namespaces in Kubernetes lets processes in a Pod run with UIDs/GIDs that differ from the ones they map to on the host, so a process that is privileged inside the Pod actually runs as an ordinary, unprivileged process on the host. If such a process is compromised through a security vulnerability, the damage it can do to the host is greatly reduced.
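To make the UID remapping concrete, here is a small Python sketch that reads a mapping in the format the Linux kernel exposes in /proc/<pid>/uid_map (each line: first ID inside the namespace, first ID outside it, length of the range) and translates a UID inside the Pod to the host UID. The 100000/65536 range is a hypothetical example, not necessarily what the kubelet assigns, and none of this code is part of Kubernetes.

```python
from typing import List, NamedTuple, Optional


class IdMapping(NamedTuple):
    inside: int   # first ID inside the user namespace
    outside: int  # first ID on the host (parent namespace) it maps to
    length: int   # number of consecutive IDs in the range


def parse_id_map(text: str) -> List[IdMapping]:
    """Parse text in the /proc/<pid>/uid_map format: one 'inside outside length' triple per line."""
    mappings = []
    for line in text.splitlines():
        fields = line.split()
        if not fields:
            continue  # skip blank lines
        inside, outside, length = (int(f) for f in fields)
        mappings.append(IdMapping(inside, outside, length))
    return mappings


def to_host_id(container_id: int, mappings: List[IdMapping]) -> Optional[int]:
    """Return the host ID for an ID inside the namespace, or None if it is not mapped."""
    for m in mappings:
        if m.inside <= container_id < m.inside + m.length:
            return m.outside + (container_id - m.inside)
    return None


# Hypothetical mapping: container UIDs 0-65535 -> host UIDs 100000-165535.
uid_map = parse_id_map("         0     100000      65536\n")

print(to_host_id(0, uid_map))      # root in the Pod -> 100000, an ordinary UID on the host
print(to_host_id(1000, uid_map))   # 101000
print(to_host_id(70000, uid_map))  # None: outside the mapped range
```

The key point is the first call: UID 0 inside the Pod corresponds to UID 100000 on the host, which has no special privileges there.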

One directly related vulnerability is CVE-2019-5736, which I covered in my 2019 article "On the occasion of the release of runc 1.0-rc7".

If you're interested, see that article for details.

The implementation adds a boolean HostUsers field to the Pod spec that controls whether the Pod shares the host's user namespace. It defaults to true; setting it to false runs the Pod in its own user namespace.
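As a rough illustration of the field's semantics, the Python sketch below builds a minimal Pod manifest as a plain dict and applies the default described above (unset behaves like true). It assumes the serialized key is hostUsers; build_pod, uses_host_user_namespace, and the busybox image are hypothetical names for illustration, not a Kubernetes client API. The cluster must also have the feature enabled, which is not shown here.

```python
import json
from typing import Optional


def build_pod(name: str, image: str, host_users: Optional[bool] = None) -> dict:
    """Build a minimal Pod manifest as a plain dict (illustrative, not a client library)."""
    spec = {"containers": [{"name": name, "image": image}]}
    if host_users is not None:
        # false: ask for a separate user namespace; true or unset: share the host's.
        spec["hostUsers"] = host_users
    return {"apiVersion": "v1", "kind": "Pod", "metadata": {"name": name}, "spec": spec}


def uses_host_user_namespace(pod: dict) -> bool:
    """Apply the default described in the text: an unset hostUsers behaves like true."""
    return pod["spec"].get("hostUsers", True)


isolated = build_pod("userns-demo", "busybox", host_users=False)
default = build_pod("default-demo", "busybox")

print(json.dumps(isolated["spec"], indent=2))
print(uses_host_user_namespace(isolated))  # False
print(uses_host_user_namespace(default))   # True
```

Because the default is true, existing Pods keep their current behavior; only Pods that explicitly set the field to false opt in.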

It is also already clear that on nodes running a Linux kernel older than v5.19, using this feature may increase Pod startup time.

Looking forward to further progress.

- cleanup: Remove storageos volume plugins from k8s codebase by Jiawei0227 · Pull Request #111620 · kubernetes/kubernetes

- cleanup: Remove flocker volume plugins from k8s codebase by Jiawei0227 · Pull Request #111618 · kubernetes/kubernetes

- cleanup: Remove quobyte volume plugins from k8s codebase by Jiawei0227 · Pull Request #111619 · kubernetes/kubernetes

As I introduced in last week's "k8s Ecology", the in-tree GlusterFS plugin was deprecated in PR #111485.

The PRs above all do similar cleanup work.

They remove the in-tree StorageOS, Flocker, and Quobyte volume plugins from the Kubernetes codebase.

If you use StorageOS or Quobyte, you should migrate to their CSI plugins. Flocker is no longer maintained, so there is no migration path for it.

Although Flocker is no longer maintained, I'd still like to say a few words about it (think of it as writing down what I remember so I can safely forget it later, hahah).

If you got involved in the Docker/Kubernetes ecosystem early on, you may still remember Flocker.

It mainly addressed data volume management for containerized applications, one of the main problems the container ecosystem was tackling around 2015.

Plenty of Docker volume plugins appeared at that time doing essentially the same thing, but the company and team behind Flocker were more interesting.

The company, ClusterHQ, resembled many of today's commercial open source companies: it used the open source Flocker as its starting point and built integrations with platforms such as Docker, Kubernetes, and Mesos.

It later launched a SaaS service, FlockerHub.

Most of the team came from the well-known open source project Twisted, which few Python users have never heard of.

Naturally, Flocker was written in Python too.

Yet this seemingly healthy company abruptly announced it was shutting down not long after its SaaS service entered preview.

At the time, many people speculated that the company had bet its business on the SaaS product, which never really caught on.

This kind of thing has happened many times across the container ecosystem, and it is still happening.

The underlying reason is mostly commercial: Flocker was popular for a while, but once the company behind it closed, the project stopped being maintained.

With its removal from Kubernetes, this is probably the last time Flocker's name will appear in a popular project.

The CronJob TimeZone feature has reached Beta. However, under Kubernetes' latest feature policy, you still need to enable it manually through the CronJobTimeZone feature gate.

Note that if a CronJob does not set a time zone, its schedule is interpreted in the time zone of the kube-controller-manager process.

I have seen people waste quite some time on exactly this problem.
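To see why the default matters, here is a Python sketch that computes the next run of a daily "0 9 * * *" schedule under two time zones. It is a simplified stand-in for real cron evaluation that only handles a fixed daily time; next_daily_run is a hypothetical helper, and Asia/Shanghai is modeled as a fixed UTC+08:00 offset (the zone has no DST) so the example does not depend on a time zone database.

```python
from datetime import datetime, time, timedelta, timezone


def next_daily_run(now: datetime, at: time, tz: timezone) -> datetime:
    """Next occurrence of a once-a-day schedule evaluated in tz, returned in UTC."""
    local_now = now.astimezone(tz)
    candidate = datetime.combine(local_now.date(), at, tzinfo=tz)
    if candidate <= local_now:
        candidate += timedelta(days=1)  # today's slot has passed; use tomorrow's
    return candidate.astimezone(timezone.utc)


# Hypothetical setup: kube-controller-manager runs in UTC, while a CronJob that
# sets its time zone to "Asia/Shanghai" is modeled here as a fixed +08:00 offset.
utc = timezone.utc
shanghai = timezone(timedelta(hours=8))

now = datetime(2022, 8, 15, 0, 0, tzinfo=utc)
nine_am = time(9, 0)

print(next_daily_run(now, nine_am, utc))       # 2022-08-15 09:00:00+00:00
print(next_daily_run(now, nine_am, shanghai))  # 2022-08-15 01:00:00+00:00
```

The same schedule string fires eight hours apart depending on which time zone it is evaluated in, which is exactly the surprise you get when the field is left unset and the controller-manager's zone differs from what you expected.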

Cloud Native Security Whitepaper version 1.0 Audiobook Released

The audiobook covers the 2020 edition of the Cloud Native Security Whitepaper and is available on SoundCloud.

The text of the Cloud Native Security Whitepaper v2 has also been published and is available in the following repository:

https://github.com/cncf/tag-security/tree/main/security-whitepaper

cri-dockerd released v0.2.5

You probably still remember the much-discussed removal of the dockershim component from Kubernetes. I wrote an article at the time, "K8S Abandoned Docker? Docker doesn't work anymore? Give me a break!", to explain what happened.

Now that Kubernetes has completely removed its in-tree dockershim component, Mirantis is maintaining the Mirantis/cri-dockerd project as promised.

The project has settled into a fairly regular maintenance cadence and continues to evolve alongside upstream.

For example, v0.2.5 changed the default network plugin to CNI.

If your Kubernetes cluster still uses Docker as its container runtime and you want to upgrade the cluster, take a look at this project.

It isn't hard to use.

I'm looking forward to seeing how it develops.

That's all for this issue. I've also started a poll to find out which Kubernetes version you are currently running, and everyone is welcome to take part. Voting will close automatically after v1.25 is released, and I will share the results with you then.

https://wj.qq.com/s2/10618008/29e3/

You are welcome to subscribe to my WeChat official account [MoeLove].
