This article was compiled by community volunteer Zou Zhiye from the talk given by Li Jialin (Feng Yuan), real-time computing product manager at Alibaba Cloud, at the Flink Summit (CSDN Cloud Native Series) on July 5. It covers:

- Building a risk control system based on Flink

- Alibaba's risk control in practice

- Difficulties in large-scale risk control technology

- Alibaba Cloud FY23 Risk Control Evolution Plan


Flink now serves essentially every BU in the group. During the Double Eleven peak, processing reached 4 billion records per second, with more than 30,000 computing jobs using over 1 million cores in total. Almost every business in the group is covered, including the data middle platform, the AI middle platform, the risk control middle platform, real-time O&M, and search and recommendation.

1. Building a risk control system based on Flink

Risk control is a broad topic involving rule engines, NoSQL databases, CEP, and more. This chapter discusses some basic risk control concepts. On the big data side, we classify risk control along two dimensions, giving a 3 × 2 matrix:

- 2: risk control is either rule-based or algorithm/model-based;

- 3: there are three types of risk control: pre-event (prior), in-event, and post-event.

1.1 Three Types of Risk Control

For in-event and post-event risk control, the client perceives the result asynchronously; for pre-event risk control, the client perceives it synchronously.

A brief explanation of pre-event risk control: the trained model or precomputed data is stored in databases such as Redis and MongoDB, and requests are then served in one of two ways:

- A rule engine such as Siddhi, Groovy, or Drools runs alongside the store and reads data directly from Redis or MongoDB to return the result;

- A service based on Kubeflow KFServing returns the result for each incoming request using the trained algorithm or model.

Both approaches typically respond in about 200 milliseconds, so they can be called as synchronous RPC or HTTP requests.
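A minimal sketch of the synchronous pre-event path, assuming a plain Python dict in place of Redis or MongoDB and hypothetical feature names (`failed_logins_1h`, `risk_score`) and limits. A real rule engine such as Drools would express the same checks as rules; the point is only that each decision is a quick lookup plus a comparison, which is why it fits behind a synchronous RPC or HTTP call.

```python
from typing import Dict

# Assumption: this dict stands in for Redis/MongoDB, and an offline job is
# assumed to have already written these precomputed (hypothetical) features.
FEATURE_STORE: Dict[str, Dict[str, float]] = {
    "user:42": {"failed_logins_1h": 7, "risk_score": 0.91},
    "user:7": {"failed_logins_1h": 0, "risk_score": 0.05},
}

def decide(user_id: str, max_failed_logins: int = 5, max_score: float = 0.8) -> str:
    """Answer one synchronous request with 'reject' or 'accept'."""
    features = FEATURE_STORE.get(f"user:{user_id}")
    if features is None:
        return "accept"  # hypothetical policy: users without features pass
    if features["failed_logins_1h"] > max_failed_logins or features["risk_score"] > max_score:
        return "reject"
    return "accept"

print(decide("42"))  # reject
print(decide("7"))   # accept
```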

In Flink-based big data scenarios, risk control requests are asynchronous and the latency requirement is relatively loose, usually one or two seconds. When lower latency is pursued, this can be treated as in-event risk control, with the decision-making process handled automatically by machines.

Common patterns are: Flink SQL for computing metrics and comparing them against thresholds, Flink CEP for analyzing behavior-sequence rules, and TensorFlow on Flink, where the algorithm is described in TensorFlow and Flink executes the computation.

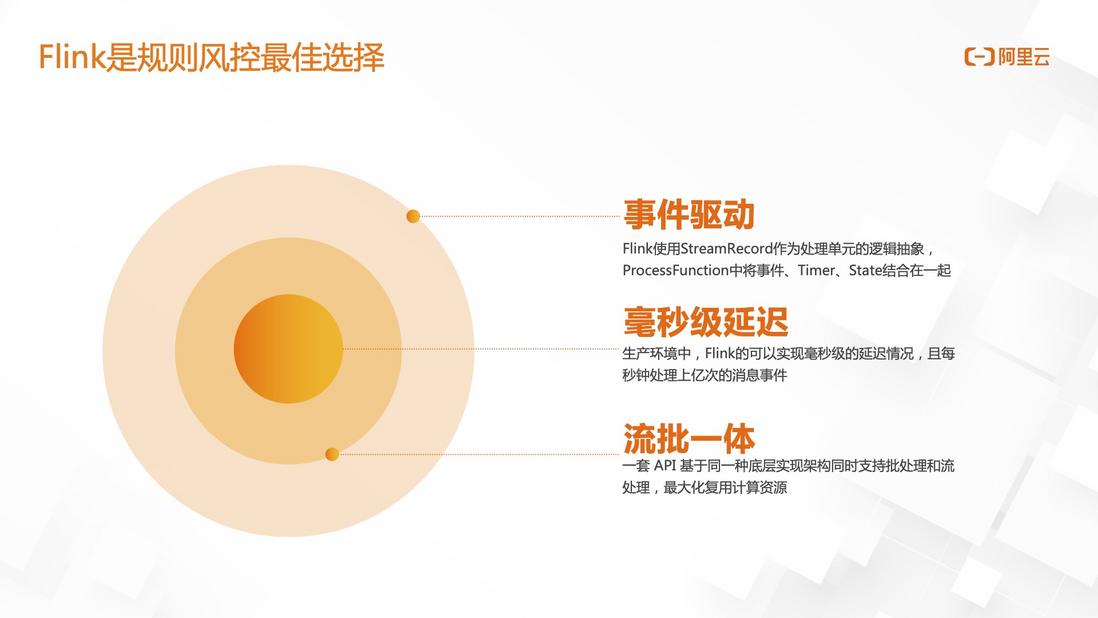

1.2 Why Flink is the best choice for risk control

At present, Flink is the best choice for risk control within the Alibaba Group, mainly for three reasons:

- event driven

- millisecond latency

- unified stream and batch processing

1.3 The three elements of rule-based risk control

Rule-based risk control has three elements, and everything described later builds on them:

- Facts: the risk control events, which come from the business side or from tracking points in logs and form the input of the entire risk control system;

- Rules: usually defined by the business side; a rule states which business goal it serves;

- Thresholds: quantify the severity that a rule describes, that is, how far a metric must go before the rule fires.
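The three elements map naturally onto a small data model. The sketch below is an illustration under assumptions (the class and field names are hypothetical, not from the talk): a `Fact` is one event, a `Rule` names a business goal and the metric that measures it, and the threshold decides when the rule fires.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class Fact:
    """One risk control event: the input to the whole system."""
    user_id: str
    event_type: str
    amount: float

@dataclass
class Rule:
    """A rule names the business goal it serves and the metric that measures
    it; the threshold sets how severe the metric must be before it fires."""
    name: str
    metric: Callable[[List[Fact]], float]
    threshold: float

    def fires(self, facts: List[Fact]) -> bool:
        return self.metric(facts) > self.threshold

def total_amount(facts: List[Fact]) -> float:
    return sum(f.amount for f in facts)

rule = Rule(name="large_spend", metric=total_amount, threshold=50.0)
facts = [Fact("u1", "pay", 30.0), Fact("u1", "pay", 25.0)]
print(rule.fires(facts))  # True: 55.0 > 50.0
```

Keeping the threshold as data rather than code is what later makes it possible to change it without redeploying the logic.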

1.4 Enhancing rule expressiveness in Flink

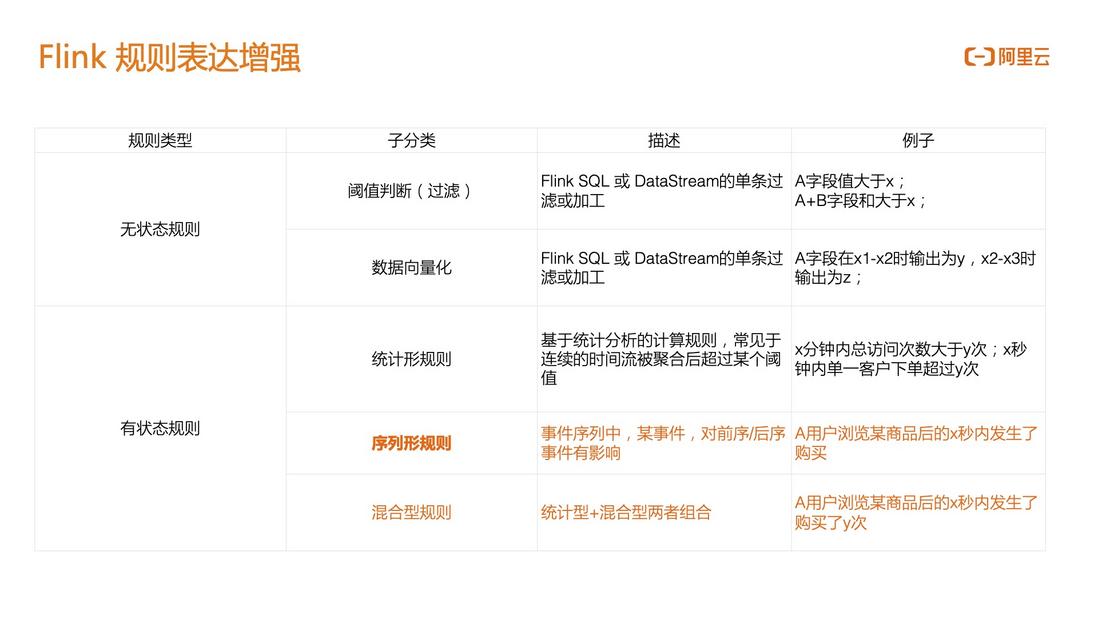

In Flink, rules fall into two categories, stateless and stateful; stateful rules are the core of Flink-based risk control:

- Stateless rules: mainly used for data ETL. In one scenario, a risk control action is triggered when a field value of an event exceeds X. In another, the downstream of the Flink job is model- or algorithm-based risk control, so no rule judgment is needed on the Flink side; instead, Flink vectorizes and normalizes the data (for example through multi-stream joins and CASE WHEN logic), turns it into 0/1 vectors, and pushes them downstream to TensorFlow for prediction.

- Stateful rules:

  - Statistical rules: rules based on statistical analysis. For example, risk control is triggered when there are more than 100 visits within 5 minutes;

  - Sequence rules: within an event sequence, an event affects how the events before and after it are interpreted. For example, click, add to cart, and delete form a continuous behavior sequence that may indicate a malicious attempt to lower the rating of a merchant's products, even though none of the three events is a risk control event on its own. Alibaba Cloud's real-time computing Flink service has strengthened its support for sequence rules, safeguarding e-commerce transaction scenarios both on the cloud and within the group;

  - Mixed rules: a combination of statistical and sequence rules.
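The rule categories above can be sketched in plain Python, assuming events arrive one at a time as dicts or (key, timestamp) pairs. `to_vector` is stateless (each output depends only on the current event), while `SlidingCount` and `SequenceRule` keep per-key state between events, which is what Flink's keyed state and CEP library manage in production. The event vocabulary, the amount cap, and all class names are hypothetical; this is not Flink's API.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, List, Tuple

EVENT_TYPES = ["click", "cart", "pay"]  # hypothetical event vocabulary

def to_vector(event: Dict, amount_cap: float = 1000.0) -> List[float]:
    """Stateless: one-hot encode the event type (CASE WHEN style) and scale
    the amount into [0, 1] for a downstream model. No state is kept."""
    one_hot = [1.0 if event["type"] == t else 0.0 for t in EVENT_TYPES]
    return one_hot + [min(event["amount"], amount_cap) / amount_cap]

class SlidingCount:
    """Statistical: fires when one key has more than `limit` events
    within the last `window_s` seconds."""
    def __init__(self, window_s: int = 300, limit: int = 100) -> None:
        self.window_s, self.limit = window_s, limit
        self.times: Dict[str, Deque[int]] = defaultdict(deque)  # per-key state

    def on_event(self, key: str, ts: int) -> bool:
        q = self.times[key]
        q.append(ts)
        while q and q[0] <= ts - self.window_s:  # evict events outside the window
            q.popleft()
        return len(q) > self.limit

class SequenceRule:
    """Sequence: fires when the events in `pattern` occur in order for one key
    within `within_s` seconds; unrelated events in between are skipped.
    Simplification: only one partial match per key is tracked."""
    def __init__(self, pattern: List[str], within_s: int) -> None:
        self.pattern, self.within_s = pattern, within_s
        self.progress: Dict[str, Tuple[int, int]] = {}  # key -> (next step, start ts)

    def on_event(self, key: str, event_type: str, ts: int) -> bool:
        step, start = self.progress.get(key, (0, ts))
        if step > 0 and ts - start > self.within_s:
            step = 0  # partial match expired; start over
        if event_type == self.pattern[step]:
            if step == 0:
                start = ts
            step += 1
        if step == len(self.pattern):
            self.progress.pop(key, None)
            return True
        self.progress[key] = (step, start)
        return False

print(to_vector({"type": "pay", "amount": 250.0}))  # [0.0, 0.0, 1.0, 0.25]

visits = SlidingCount(window_s=300, limit=100)
print([visits.on_event("u1", t) for t in range(101)][-1])  # True: 101 visits in 5 min

seq = SequenceRule(["click", "cart", "delete"], within_s=60)
steps = [("click", 0), ("view", 5), ("cart", 10), ("delete", 20)]
print([seq.on_event("u2", e, t) for e, t in steps])  # [False, False, False, True]
```

A mixed rule could simply require both checks, for example firing only when the sequence matches and the sliding count is also above its limit.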

2. Alibaba risk control in practice

This chapter introduces how Alibaba implements the three elements of risk control described above in engineering practice.

From an overall technical perspective, the system is currently divided into three modules: perception, disposal, and insight:

- Perception: the goal is to perceive all anomalies and detect problems early, for example by capturing data whose distribution differs from the usual one and outputting a list of such anomalies. Another example: after riding regulations were adjusted in a certain year, helmet sales rose, and the click-through and conversion rates of related products rose with them. Such a shift must be detected and captured in time, because it is normal behavior rather than cheating;

- Disposal: how the rules are enforced. There are now three lines of defense: hourly, real-time, and offline. Compared with the earlier approach of matching a single policy, associating and integrating signals is more accurate, for example by jointly analyzing a group of related users over a recent period;

- Insight: discovering risk control behaviors that are not yet perceived and cannot be described directly by rules. For example, samples need a highly abstract representation: they are first projected into a suitable subspace and then, combined with the time dimension, features are found in the high-dimensional space that identify new anomalies.
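To make the perception idea concrete, here is a minimal sketch of one simple way to flag a metric whose distribution shifts: a z-score test against the metric's own history. The technique, the metric names, the sample values, and the 3-sigma limit are assumptions for illustration, not Alibaba's method. The detector only surfaces the shift; deciding whether it is legitimate (as in the helmet example) or cheating is left to later stages.

```python
import statistics
from typing import Dict, List

def anomalies(history: Dict[str, List[float]], today: Dict[str, float],
              z_limit: float = 3.0) -> List[str]:
    """Flag metrics whose value today lies more than `z_limit` sample
    standard deviations away from their historical mean."""
    flagged = []
    for metric, values in history.items():
        mean = statistics.mean(values)
        std = statistics.stdev(values)
        if std > 0 and abs(today[metric] - mean) / std > z_limit:
            flagged.append(metric)
    return flagged

# Hypothetical daily click-through rates.
history = {
    "helmet_ctr": [0.020, 0.021, 0.019, 0.020, 0.022, 0.018],
    "shoe_ctr": [0.030, 0.031, 0.029, 0.030, 0.032, 0.028],
}
today = {"helmet_ctr": 0.060, "shoe_ctr": 0.031}
print(anomalies(history, today))  # ['helmet_ctr']
```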

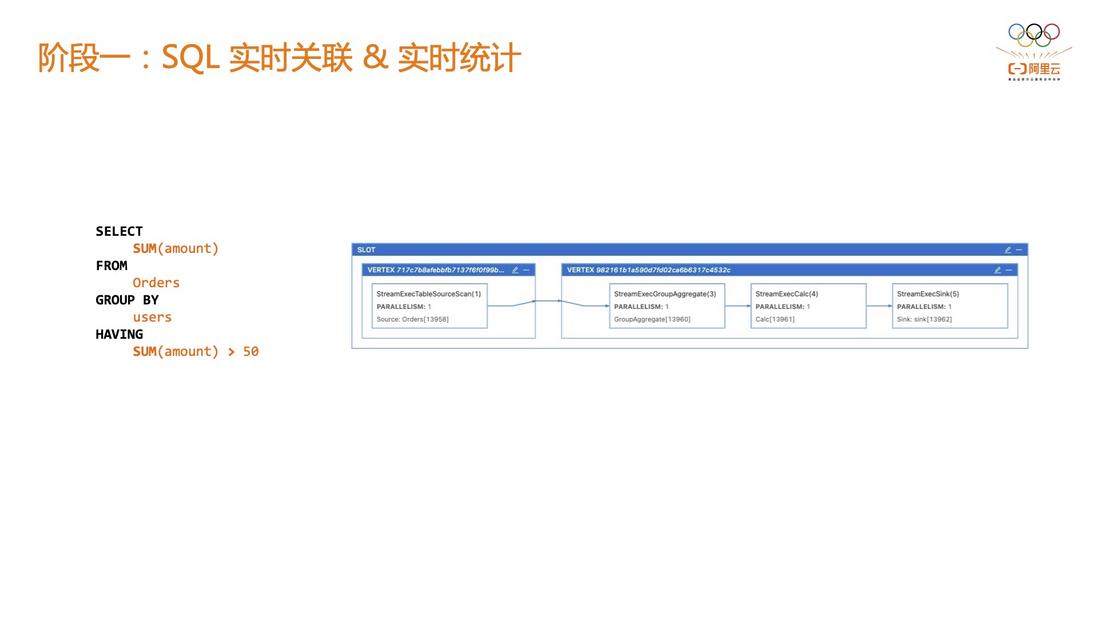

2.1 Phase 1: SQL real-time association & real-time statistics

At this stage, the risk control system is SQL-based and uses simple SQL for real-time joins and statistics. For example, in the aggregation SUM(amount) > 50, the rule is SUM(amount) and its threshold is 50. Suppose four rules with thresholds 10, 20, 50, and 100 run online at the same time. Because a single Flink SQL job can only execute one rule, four Flink jobs must be provisioned, one per threshold. The advantages are simple development logic and strong job isolation; the disadvantage is a large waste of computing resources.
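A small simulation of the phase 1 setup, assuming each Flink SQL job can be modeled as a Python function with one hard-coded threshold (roughly `SELECT user_id FROM t GROUP BY user_id HAVING SUM(amount) > 50`). The function name and sample events are hypothetical. Running four such jobs means aggregating the same input four times, which is where the resource waste comes from.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Event = Tuple[str, float]  # (user_id, amount)

def sum_amount_job(events: List[Event], threshold: float) -> Tuple[List[str], int]:
    """One 'job' = one rule with one fixed threshold.
    Returns the flagged users and how many events this job processed."""
    sums: Dict[str, float] = defaultdict(float)
    processed = 0
    for user, amount in events:
        sums[user] += amount
        processed += 1
    flagged = sorted(u for u, s in sums.items() if s > threshold)
    return flagged, processed

events = [("a", 15.0), ("b", 40.0), ("a", 20.0), ("c", 120.0)]
# Phase 1: a separate job per threshold, each re-aggregating the same stream.
results = {t: sum_amount_job(events, t) for t in (10, 20, 50, 100)}
total_work = sum(processed for _, processed in results.values())
print(results[50][0])  # ['c']
print(total_work)      # 16: the same 4 events aggregated 4 times
```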

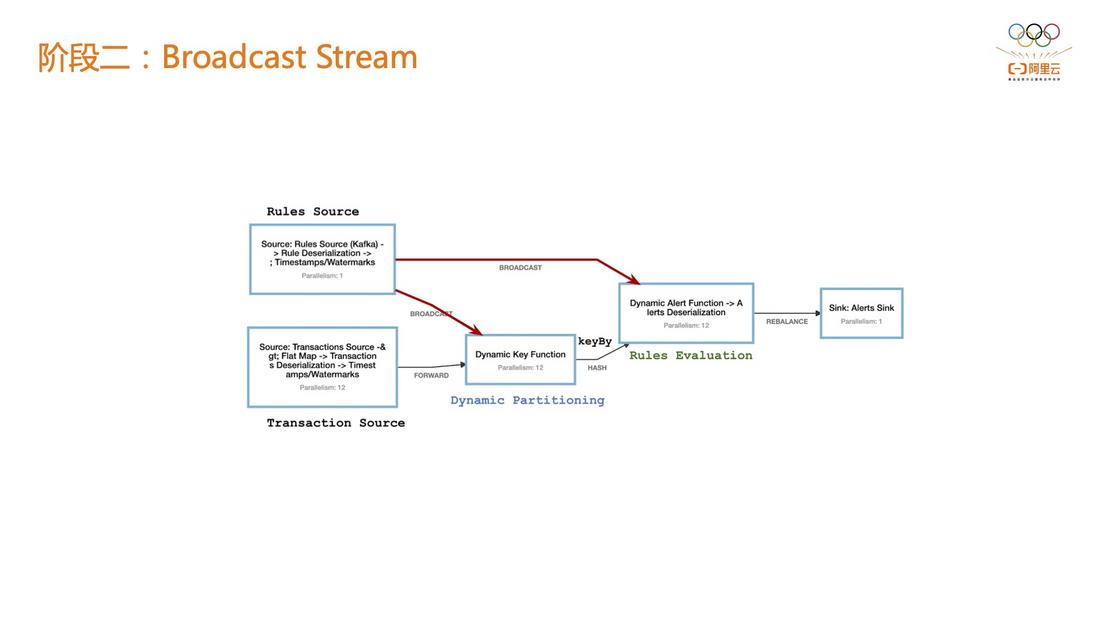

2.2 Phase 2: Broadcast Stream

The main problem with the phase 1 rules is that rules and thresholds cannot change while a job is running. The Flink community offers some solutions, such as an implementation based on BroadcastStream. In the figure below, the Transaction Source handles event ingestion and the Rule Source is a BroadcastStream; when a new threshold arrives, it is broadcast to every operator through the BroadcastStream.

For example, a rule may flag a risk control object that is accessed more than 10 times in one minute, but during the 618 or Double 11 shopping festivals the threshold may need to become 20 or 30, and the online systems downstream of risk control must pick up that change.

In phase 1 there are only two options: run jobs for every threshold online at the same time, or stop a Flink job at some moment and start a new one with the new threshold.

With BroadcastStream, new rule thresholds can be pushed into the running job, changing the online thresholds directly without restarting it.
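The broadcast pattern can be simulated in a single process to show why no restart is needed. This sketch mirrors the idea behind Flink's `BroadcastStream` and `KeyedBroadcastProcessFunction` but does not use Flink's API; the tagged-tuple stream format, the rule name `visits_per_minute`, and the routing hash are assumptions.

```python
from typing import Dict, Iterable, List, Tuple

# ("rule", rule_name, new_threshold) comes from the Rule Source;
# ("txn", user_id, visits_in_last_minute) comes from the Transaction Source.
Element = Tuple[str, str, float]

def run(elements: Iterable[Element], parallelism: int = 2) -> List[Tuple[str, float]]:
    """Rule updates reach every parallel instance (broadcast);
    transactions reach exactly one instance chosen by key."""
    # One copy of the broadcast state (rule name -> threshold) per instance;
    # the initial rule and value are hypothetical.
    states: List[Dict[str, float]] = [{"visits_per_minute": 10.0} for _ in range(parallelism)]
    alerts: List[Tuple[str, float]] = []
    for kind, name, value in elements:
        if kind == "rule":
            for state in states:  # broadcast: every instance sees the update
                state[name] = value
        else:
            state = states[sum(map(ord, name)) % parallelism]  # keyed routing
            if value > state["visits_per_minute"]:
                alerts.append((name, value))
    return alerts

stream: List[Element] = [
    ("txn", "u1", 15.0),                  # 15 > 10: alert
    ("rule", "visits_per_minute", 30.0),  # raised for Double 11, no restart
    ("txn", "u1", 15.0),                  # 15 <= 30: no alert
    ("txn", "u2", 35.0),                  # 35 > 30: alert
]
print(run(stream))  # [('u1', 15.0), ('u2', 35.0)]
```

Because every instance applies the same updates in the same order, all copies of the broadcast state stay identical, so it does not matter which instance a transaction is routed to.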

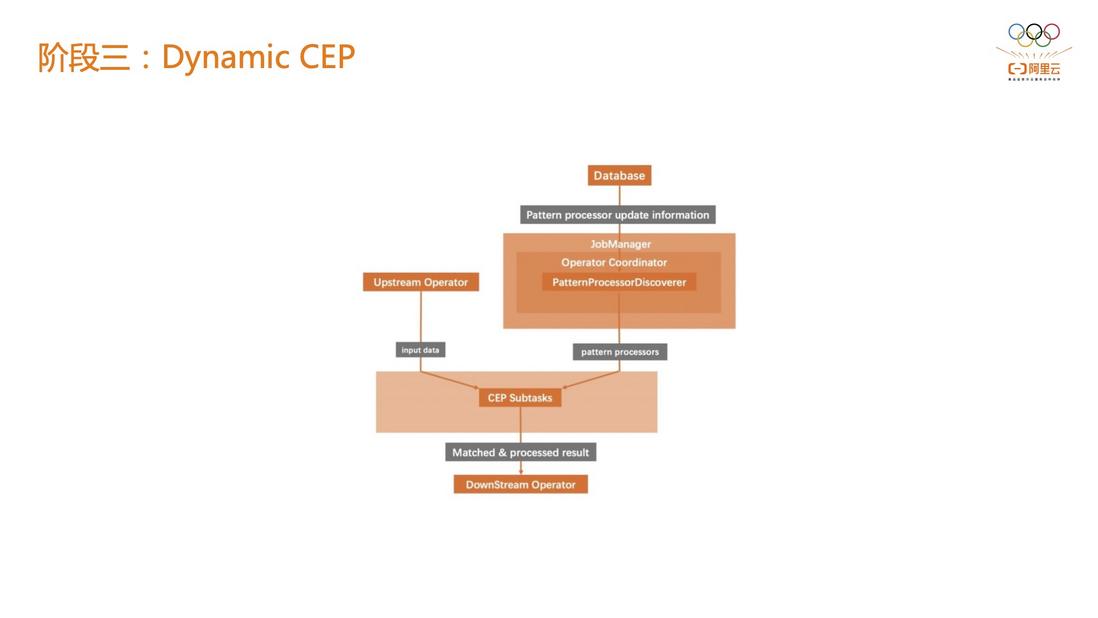

2.3 Phase 3: Dynamic CEP

The main limitation of phase 2 is that it can only update thresholds. Although this is a great convenience for the business system, it hardly satisfies upper-level business needs, which come down to two demands: using CEP to perceive behavior sequences, and, while using CEP, still being able to change the thresholds and even the rules themselves dynamically.

In phase 3, Alibaba Cloud Flink introduced a high-level abstraction for CEP that decouples CEP rules from the CEP execution nodes. Rules can be stored in external third-party storage such as RDS and Hologres; after a CEP job is deployed, it loads the rules from the database and can replace them dynamically, which greatly enhances the job's expressiveness.

Second, the job becomes more flexible. For example, to monitor certain behaviors in an app and update the threshold for such a behavior, you update the CEP rules in the third-party storage instead of in Flink itself.
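A minimal sketch of rules living outside the job, assuming an in-memory `RuleStore` with a version counter as a stand-in for an external table in RDS or Hologres. The schema (`pattern`, `within_s`), the reload-on-version-change strategy, and all names are hypothetical; this is not the API of Alibaba Cloud's dynamic CEP.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

@dataclass(frozen=True)
class RuleDef:
    pattern: Tuple[str, ...]  # event types that must occur in order
    within_s: int             # time limit from the first step

class RuleStore:
    """Stand-in for an external rule table; every write bumps the version."""
    def __init__(self) -> None:
        self.version = 0
        self.rules: Dict[str, RuleDef] = {}

    def put(self, rule_id: str, rule: RuleDef) -> None:
        self.rules[rule_id] = rule
        self.version += 1

def occurs(pattern: Tuple[str, ...], within_s: int, events: List[Tuple[str, int]]) -> bool:
    """True if `pattern` appears in order in `events` ((type, ts) pairs)
    within `within_s` seconds of its first step."""
    for i, (etype, start) in enumerate(events):
        if etype != pattern[0]:
            continue
        step = 1
        for etype2, ts in events[i + 1:]:
            if step == len(pattern) or ts - start > within_s:
                break
            if etype2 == pattern[step]:
                step += 1
        if step == len(pattern):
            return True
    return False

class DynamicRuleJob:
    """A long-running job that reloads rules whenever the store's version
    changes, so rules are replaced without redeploying the job."""
    def __init__(self, store: RuleStore) -> None:
        self.store, self.loaded_version = store, -1
        self.rules: Dict[str, RuleDef] = {}

    def refresh(self) -> bool:
        if self.store.version == self.loaded_version:
            return False
        self.rules = dict(self.store.rules)
        self.loaded_version = self.store.version
        return True

    def matching_rules(self, events: List[Tuple[str, int]]) -> List[str]:
        self.refresh()
        return [rid for rid, r in sorted(self.rules.items())
                if occurs(r.pattern, r.within_s, events)]

store = RuleStore()
store.put("cart_abuse", RuleDef(("click", "cart", "delete"), within_s=60))
job = DynamicRuleJob(store)
events = [("click", 0), ("cart", 10), ("delete", 50)]
print(job.matching_rules(events))  # ['cart_abuse']
store.put("cart_abuse", RuleDef(("click", "cart", "delete"), within_s=30))  # edited at runtime
print(job.matching_rules(events))  # []
```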

Another advantage is that the rules can be exposed to the upper-level business side, so that business teams can write risk control rules themselves and we become a true rule center. That is the benefit of dynamic CEP. Dynamic CEP has been integrated into the latest version of Alibaba Cloud's fully managed Flink service, greatly shortening the development cycle for risk control scenarios.

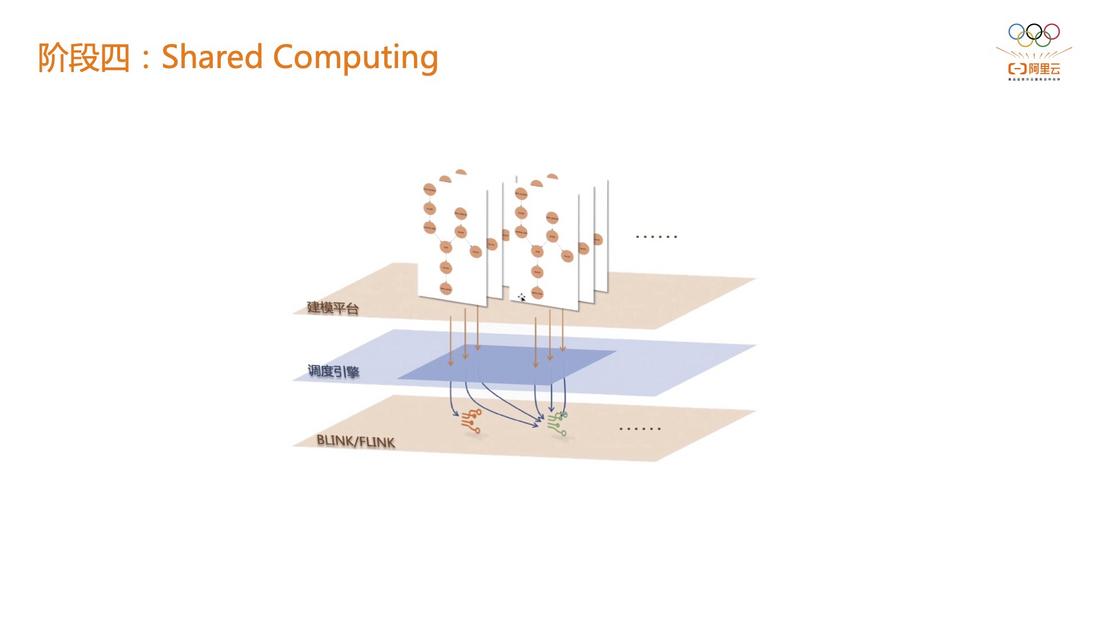

2.4 Phase 4: Shared Computing

Building on phase 3, Alibaba Cloud implemented a "shared computing" solution. In this solution, CEP rules are described entirely on a modeling platform, which exposes a friendly rule description interface to upper-level customers or business parties; they write rules there and select an event source on which to run them. For example, if two models both serve the Taobao app, they consume the same Fact and can share one Flink CEP job while remaining decoupled from it, so that the business side, the execution layer, and the engine layer are fully decoupled. Alibaba Cloud's shared computing solution is now mature, with many customer deployments.
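One way to picture shared computing, under stated assumptions: rules written by different business parties are tagged with the event source they run on, and the platform groups them so that each source is consumed by a single shared job. `RuleSpec`, `plan_jobs`, and the source names are hypothetical, and the rule bodies are treated as opaque strings.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List

@dataclass(frozen=True)
class RuleSpec:
    """A rule as a business party might describe it on a modeling platform."""
    owner: str       # business party or model that owns the rule
    source: str      # event source (Fact) the rule runs on
    expression: str  # rule body, opaque to the planner

def plan_jobs(specs: List[RuleSpec]) -> Dict[str, List[RuleSpec]]:
    """Group rules by event source: one shared CEP job per source,
    however many owners contribute rules to it."""
    jobs: Dict[str, List[RuleSpec]] = defaultdict(list)
    for spec in specs:
        jobs[spec.source].append(spec)
    return dict(jobs)

specs = [
    RuleSpec("model_a", "taobao_app_events", "click -> cart -> delete within 60s"),
    RuleSpec("model_b", "taobao_app_events", "count(login) > 10 within 60s"),
    RuleSpec("model_c", "payment_events", "sum(amount) > 50"),
]
jobs = plan_jobs(specs)
print({src: [s.owner for s in rules] for src, rules in jobs.items()})
# {'taobao_app_events': ['model_a', 'model_b'], 'payment_events': ['model_c']}
print(len(jobs))  # 2 jobs instead of 3
```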

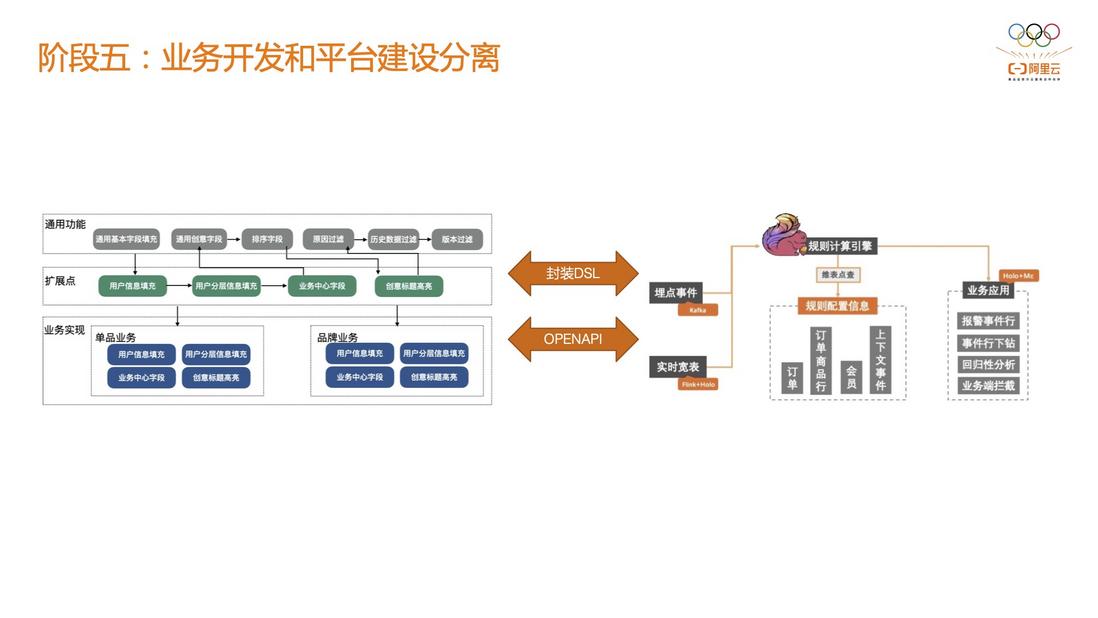

2.5 Phase 5: Separation of business development and platform construction

Of the engine side, the platform side, and the business side, phase 4 decouples the engine side from the platform side, but the platform is still tightly bound to the business side. The two still work as client and contractor: the business side owns the business rules, and the platform side takes on the business team's risk control requirements and develops the rules. However, the platform team is usually limited in headcount, while the business team grows stronger as the business develops.

At this point, the business side can abstract some basic concepts, distill common business specifications, assemble them into a friendly DSL, and submit jobs through Alibaba Cloud's fully decoupled Open API.

Because we support nearly 100 BUs in the group at the same time, we cannot provide customized support for each one. Instead, we open up the engine's capabilities as much as possible and let the business side encapsulate its logic in a DSL and submit it to the platform, so that only a single middle platform is exposed to customers.
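The article does not show the DSL itself. As a purely hypothetical illustration of what "assembling rules into a DSL" can mean, the sketch below parses a one-line grammar, `count(<event>) > <n> within <s>s`, into a structured rule that a platform could validate before submission. The grammar, `CountRule`, and `parse_rule` are invented for this example, and the Open API submission step is not shown.

```python
import re
from dataclasses import dataclass

@dataclass
class CountRule:
    event_type: str
    threshold: int
    window_s: int

# Hypothetical grammar: count(<event_type>) > <threshold> within <seconds>s
_GRAMMAR = re.compile(r"^\s*count\((\w+)\)\s*>\s*(\d+)\s+within\s+(\d+)s\s*$")

def parse_rule(text: str) -> CountRule:
    """Turn one line of the hypothetical DSL into a structured rule,
    rejecting anything the platform does not support."""
    m = _GRAMMAR.match(text)
    if m is None:
        raise ValueError(f"unsupported rule: {text!r}")
    return CountRule(m.group(1), int(m.group(2)), int(m.group(3)))

print(parse_rule("count(login) > 10 within 60s"))
# CountRule(event_type='login', threshold=10, window_s=60)
```

Validating rules at parse time keeps malformed business input from ever reaching the engine, which matters when many BUs write rules independently.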

3. Difficulties in Large-scale Risk Control Technology

This chapter introduces some technical difficulties of large-scale risk control and how Alibaba Cloud addresses them in its fully managed commercial Flink product.

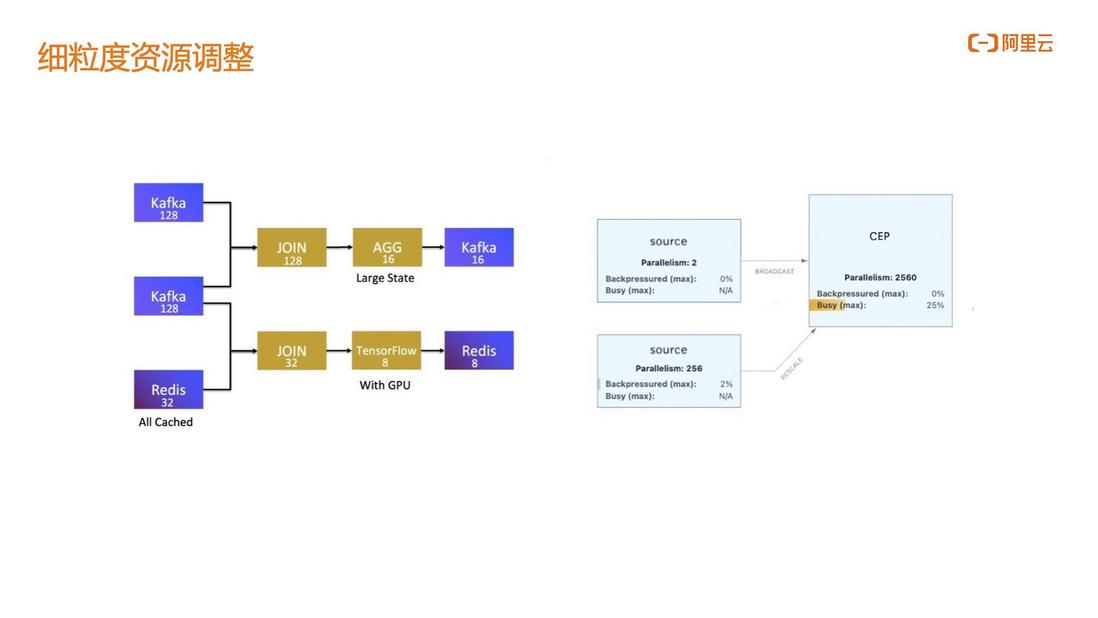

3.1 Fine-grained resource adjustment

In stream processing systems, the data source is usually not the bottleneck: the upstream reading node has no computation logic and therefore no performance problem, while the downstream processing nodes are the performance bottleneck of the whole job.

Because Flink jobs are divided into slots, the source node and the worker nodes have the same parallelism by default. We want to be able to set the parallelism of the Source node and of the CEP worker nodes separately. For example, in the figure below, the CEP worker node of a job has a parallelism of 2,000 while the Source node needs only 2, which greatly improves CEP node performance.

The other aspect is how TM memory and CPU resources are allocated where the CEP worker nodes run. In open source Flink, TMs are homogeneous as a whole, meaning the source node and the worker nodes get exactly the same specifications. In a real production environment, to save resources, the source node does not need as much memory and CPU as the CEP nodes; a smaller CPU and memory allocation is enough for data ingestion.

Alibaba Cloud's fully managed Flink lets Source nodes and CEP nodes run on heterogeneous TMs, so the TMs of CEP worker nodes can be significantly larger than those of Source nodes, making CEP execution more efficient. Taking the optimizations from fine-grained resource adjustment into account, the fully managed cloud service can save 20% of the cost compared with self-built Flink in an IDC.

3.2 Unified Stream and Batch Processing & Adaptive Batch Scheduler

If the stream engine and the batch engine do not share the same execution model, their data calibers (that is, their metric definitions) are often inconsistent. The cause is that stream rules are hard to express fully as batch rules; for example, Flink may have a special UDF that has no counterpart in the Spark engine. When data calibers diverge, deciding which one to trust becomes a major issue.

With Flink's unified stream and batch processing, CEP rules written in streaming mode can be rerun in batch mode with the same semantics and produce the same results, so there is no need to develop separate batch-mode CEP jobs.

On top of this, Alibaba implemented an adaptive Batch Scheduler. The daily output of CEP rules is not necessarily even: for example, if today's behavior sequences contain no abnormal behavior, only a small amount of data flows downstream. An elastic cluster is reserved for batch analysis, and when CEP produces few results, the downstream batch analysis needs only a small amount of resources. The parallelism of each batch analysis worker node does not even need to be specified up front; the scheduler adjusts batch-mode parallelism automatically according to the data volume, achieving truly elastic batch analysis. This is a unique advantage of Alibaba Cloud's Flink batch scheduler.
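The article does not describe the scheduler's internals. The sketch below only shows the general idea of choosing a batch stage's parallelism from the data volume its upstream actually produced, instead of fixing it before the job starts. The 1 GiB-per-task target and the 1 to 128 bounds are hypothetical parameters.

```python
import math

def decide_parallelism(input_bytes: int,
                       bytes_per_task: int = 1 << 30,  # hypothetical target: 1 GiB per task
                       min_parallelism: int = 1,
                       max_parallelism: int = 128) -> int:
    """Pick a batch stage's parallelism from the data volume its upstream
    produced, clamped to [min_parallelism, max_parallelism]."""
    wanted = math.ceil(input_bytes / bytes_per_task)
    return max(min_parallelism, min(max_parallelism, wanted))

MiB, GiB = 1 << 20, 1 << 30
print(decide_parallelism(200 * MiB))  # 1: a quiet day with few CEP hits
print(decide_parallelism(50 * GiB))   # 50
print(decide_parallelism(500 * GiB))  # 128: capped
```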

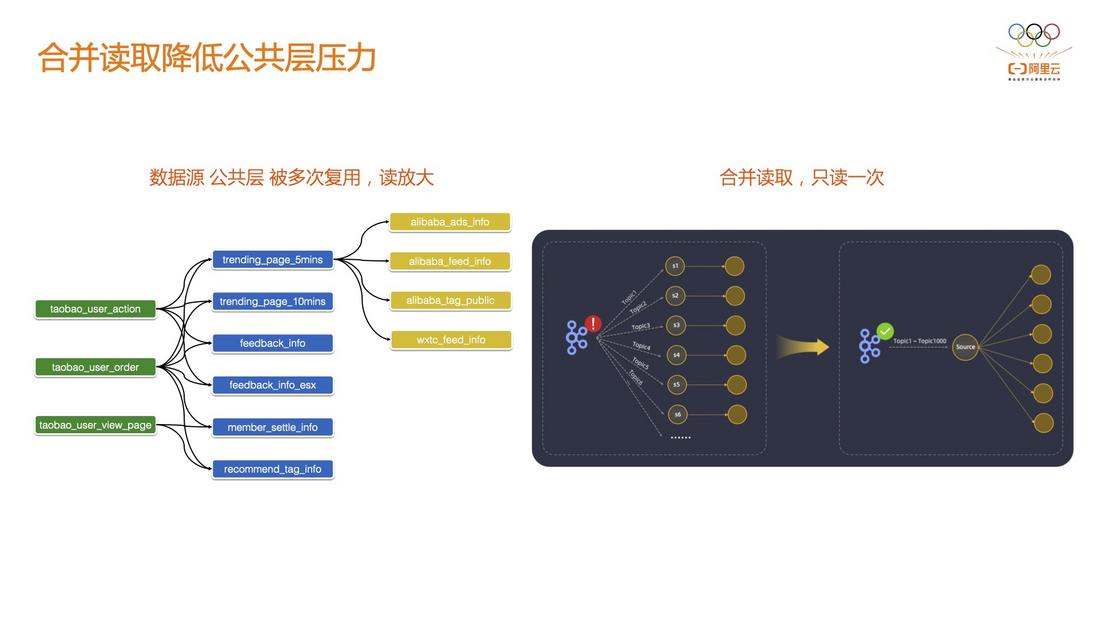

3.3 Merging reads to reduce pressure on the public layer

This is a problem encountered in practice. The current development model is mostly built on a data middle platform, such as a real-time data warehouse. In a real-time data warehouse there may not be many data sources, but the intermediate DWD layer grows large, it may in turn evolve into many DWS tables, and even into many data marts used by various departments. In this case, the read pressure on a single table becomes very high.

Multiple source tables are often joined (widened) to form the DWD layer, so a single source table feeds multiple DWD tables. The DWD layer is in turn consumed by jobs in many different business domains to produce DWS tables. For this situation, Alibaba implemented source-based read merging: DWD only needs to be read once, and Flink processes the DWS tables of multiple business domains from that single read, greatly reducing the pressure on the public layer.
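A single-process sketch of source-based read merging, assuming the DWD table is a list of dicts and each business domain's DWS job can be reduced to a group-by-sum described by a key function. `merged_read`, the domain names, and the column names are hypothetical.

```python
from collections import Counter
from typing import Callable, Dict, Iterable

def merged_read(dwd_rows: Iterable[Dict],
                domains: Dict[str, Callable[[Dict], str]]) -> Dict[str, Counter]:
    """Read the DWD table once and feed every row to all business domains'
    aggregations, instead of one full scan per downstream DWS job.
    Each domain supplies a function mapping a row to its group-by key."""
    dws: Dict[str, Counter] = {name: Counter() for name in domains}
    for row in dwd_rows:  # single pass over the shared table
        for name, key_of in domains.items():
            dws[name][key_of(row)] += row["amount"]
    return dws

rows = [
    {"shop": "s1", "city": "hz", "amount": 10},
    {"shop": "s2", "city": "hz", "amount": 5},
    {"shop": "s1", "city": "bj", "amount": 7},
]
dws = merged_read(rows, {"by_shop": lambda r: r["shop"], "by_city": lambda r: r["city"]})
print(dict(dws["by_shop"]))  # {'s1': 17, 's2': 5}
print(dict(dws["by_city"]))  # {'hz': 15, 'bj': 7}
```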

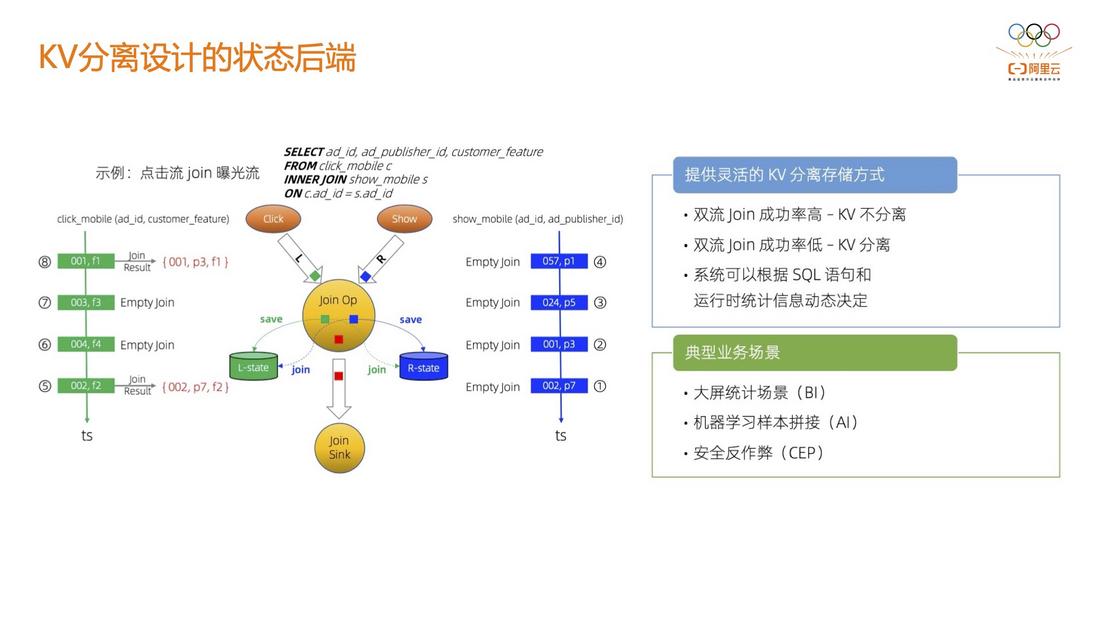

3.4 State Backend with a KV-Separation Design

Executing CEP nodes involves very large-scale local data reads, especially when computing behavior sequences, because all previous data, or the behavior sequence within a certain time window, must be cached.

This creates a large performance overhead in the state backend (such as RocksDB), which in turn hurts CEP node performance. Alibaba has implemented a state backend with a KV-separation design: Alibaba Cloud Flink uses Gemini as its default state backend, and measured performance in CEP scenarios improves by at least 100%.
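Gemini's internals are not described in the article. To show what a KV-separation design means in general, here is a toy store, assuming a Python dict as the key index and a list as the append-only value log. The general rationale is that the index holds only small (key, position) entries, so reorganizing it does not have to rewrite large values such as long cached behavior sequences. This illustrates the technique only; it is not Gemini's implementation.

```python
from typing import Dict, List, Optional

class KVSeparatedStore:
    """Toy KV separation: a small index maps key -> offset, while values
    live in an append-only log. Overwrites leave old values as garbage
    until compact() reclaims them."""
    def __init__(self) -> None:
        self.index: Dict[str, int] = {}   # key -> offset in value log
        self.value_log: List[bytes] = []  # append-only

    def put(self, key: str, value: bytes) -> None:
        self.value_log.append(value)
        self.index[key] = len(self.value_log) - 1  # old value becomes garbage

    def get(self, key: str) -> Optional[bytes]:
        offset = self.index.get(key)
        return None if offset is None else self.value_log[offset]

    def compact(self) -> int:
        """Rewrite only live values; return how many values were reclaimed."""
        live = sorted(self.index.items(), key=lambda kv: kv[1])
        new_log: List[bytes] = []
        for key, offset in live:
            self.index[key] = len(new_log)
            new_log.append(self.value_log[offset])
        reclaimed = len(self.value_log) - len(new_log)
        self.value_log = new_log
        return reclaimed

store = KVSeparatedStore()
store.put("user:1", b"click,cart")         # behavior sequence so far
store.put("user:1", b"click,cart,delete")  # sequence grows; old value is garbage
store.put("user:2", b"click")
print(store.get("user:1"))  # b'click,cart,delete'
print(store.compact())      # 1
print(store.get("user:1"))  # b'click,cart,delete'
```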

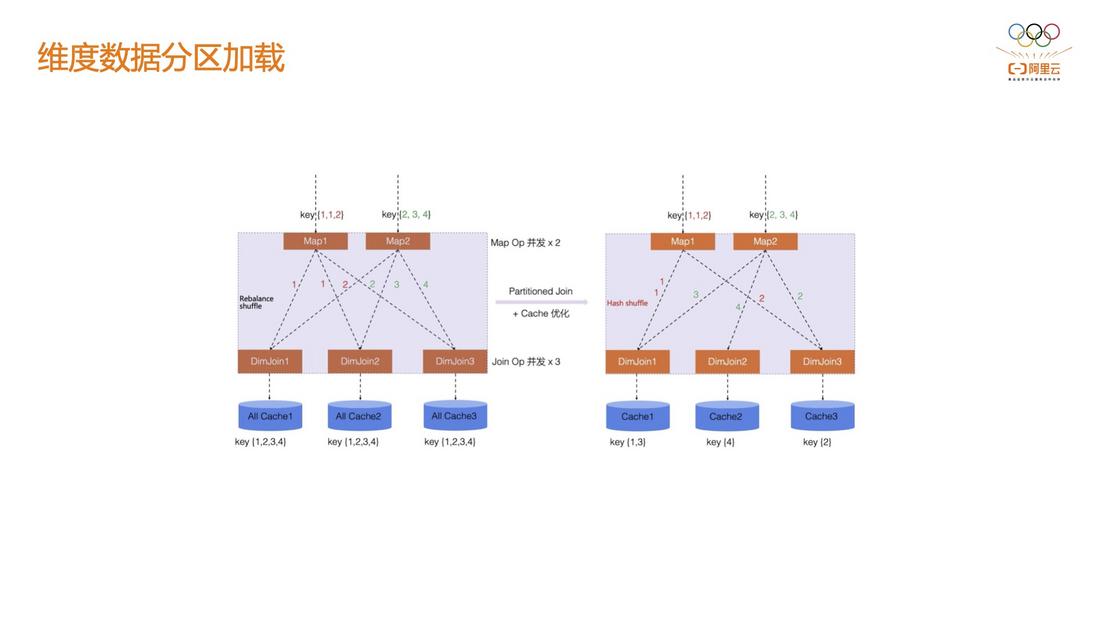

3.5 Partitioned loading of dimension table data

In many cases, risk control analysis relies on historical behavior. Historical behavior data is usually stored in Hive or ODPS tables that can reach terabytes in size. By default, open source Flink loads this entire huge dimension table on every dimension table node, which is impractical. Alibaba Cloud partitions the in-memory data based on the shuffle, so each dimension table node loads only the data belonging to its own shuffle partition.
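A minimal sketch of shuffle-aligned partitioned loading, assuming the event stream and the dimension loader use the same hash of the join key (here `zlib.crc32`, chosen because it is stable across processes). The function names and the sample history are hypothetical. Each subtask caches only its own slice, and because events are shuffled by the same key, every lookup lands on the subtask that holds the matching rows.

```python
import zlib
from typing import Dict, List, Tuple

def partition_of(key: str, parallelism: int) -> int:
    """Stable hash partitioning shared by the event shuffle and the loader."""
    return zlib.crc32(key.encode("utf-8")) % parallelism

def load_partition(dim_rows: List[Tuple[str, str]], subtask: int,
                   parallelism: int) -> Dict[str, str]:
    """Each lookup subtask keeps only the rows of its own partition."""
    return {k: v for k, v in dim_rows if partition_of(k, parallelism) == subtask}

def lookup(event_key: str, caches: List[Dict[str, str]]) -> str:
    """Route the event by the same hash, then read the local cache only."""
    subtask = partition_of(event_key, len(caches))
    return caches[subtask].get(event_key, "unknown")

history = [(f"user:{i}", "risky" if i % 10 == 0 else "normal") for i in range(1000)]
P = 4
caches = [load_partition(history, s, P) for s in range(P)]
print(sum(len(c) for c in caches))  # 1000: each row is loaded exactly once overall
print(lookup("user:30", caches))    # risky
```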

4. Alibaba Cloud Flink FY23 Risk Control Evolution Plan

For Alibaba Cloud as a whole, the FY23 evolution plan covers the following:

- Enhanced expressiveness

- Enhanced observability

- Enhanced execution capability

- Enhanced performance

You are welcome to try the cloud product, share your feedback, and help us improve together.

