Author|Xu Bangjiang, Yu Wenbing, Edited by Zhao Hongmei|Wu Chong

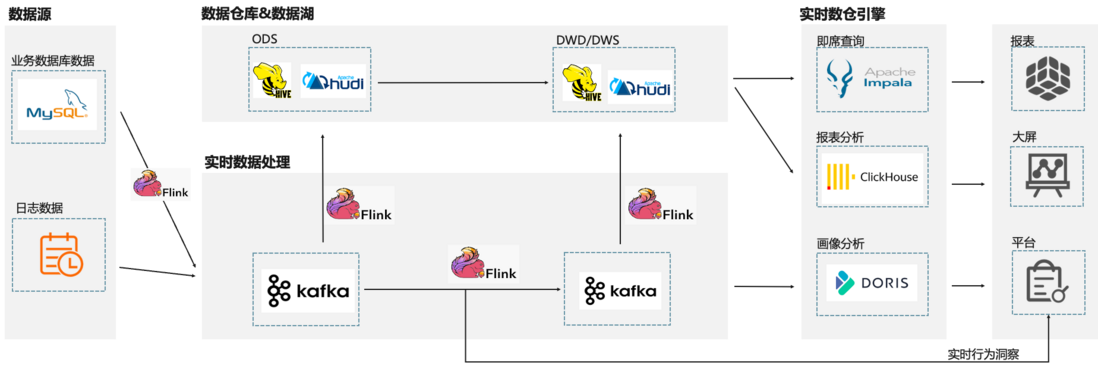

With the rapid development of big data, enterprises pay more and more attention to the value of data, which means that data needs to reach enterprise analysis and decision-making personnel as soon as possible to maximize the value of data. The most common practice of enterprises is to meet the rapid exploration of data by building real-time data warehouses. In the process of business construction, the real-time data warehouse needs to support a series of requirements such as real-time data writing and updating, agile and rapid business response, self-service data analysis, convenient operation and maintenance, and cloud-native elastic expansion and contraction, which depends on a powerful Real-time data warehouse solution. Alibaba Cloud's real-time computing Flink version (hereinafter referred to as "Alibaba Cloud Flink") provides full-incremental integrated data synchronization technology, powerful streaming ETL and other capabilities, and supports real-time warehousing of massive data into the lake. As a new generation of real-time data warehouse engine, Alibaba Cloud Hologres can simultaneously solve multiple business query scenarios such as OLAP multi-dimensional analysis, online services, and offline data acceleration. Through the strong combination of Alibaba Cloud Flink and Hologres, real-time data exploration of the whole link can be realized. , Data analysis is agile, and quickly helps businesses build an enterprise-level one-stop real-time data warehouse to achieve more timely and intelligent business decisions.

In this article, we will introduce the core capabilities of Alibaba Cloud Flink and Alibaba Cloud Hologres in building real-time data warehouses and the best solution for combining the two. Through the Alibaba Cloud Flink+Hologres real-time data warehouse solution , users can Significantly lower the threshold for data warehouse construction, let data play a greater value, and help all walks of life to achieve digital upgrades.

1. Core capabilities of Flink CDC

Apache Flink is an open source big data streaming computing engine that supports processing of various real-time data such as databases, binlogs, and online logs, provides end-to-end sub-second real-time data analysis capabilities, and lowers the threshold for real-time business development through standard SQL. With the development and deepening of the real-time wave, Flink has gradually evolved into a leading role and de facto standard for stream processing, and has been ranked as the most active project in the Apache community.

Flink CDC is a data integration framework open sourced by Alibaba Cloud Computing Platform Division in July 2020. It is deeply integrated with the Flink ecosystem and has technical advantages such as full incremental integration, lock-free reading, concurrent reading, and distributed architecture. It can not only replace the traditional DataX and Canal tools for data synchronization, but also support real-time database data into the lake and warehouse, and also has powerful data processing capabilities.

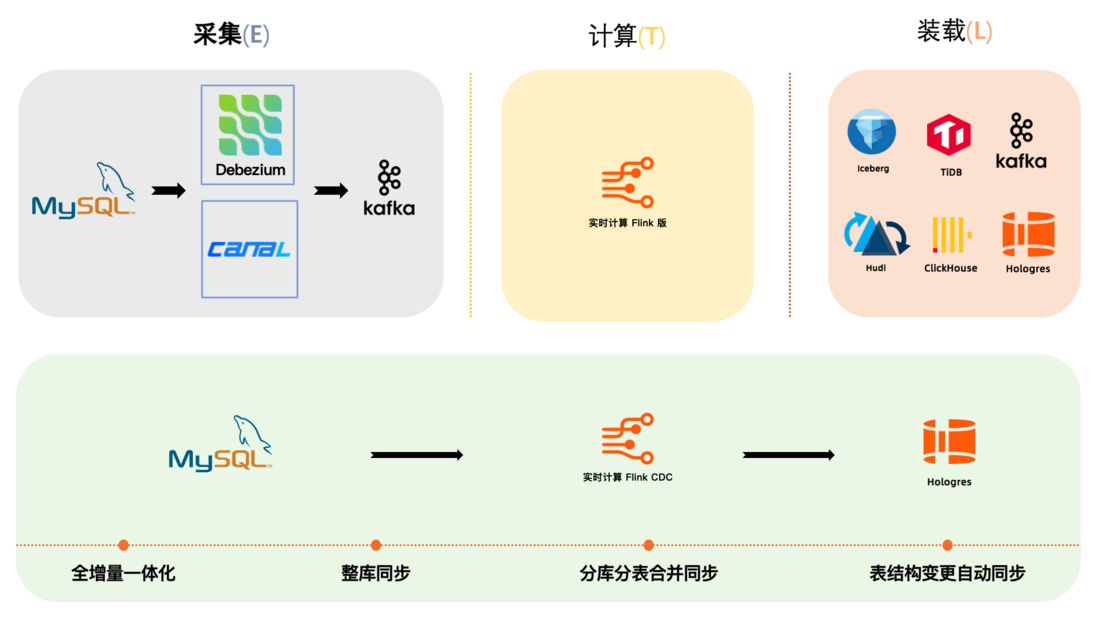

In the process of building a real-time data warehouse, data acquisition is a necessary component. In the traditional ETL architecture, foreign users at the acquisition layer usually choose Debezium, while domestic users are accustomed to using DataX and Canal. The acquisition tool is responsible for collecting the full data and incremental data of the database. The collected data will be output to the message middleware such as Kafka, and then the message middleware data will be consumed in real time through the Flink computing engine for data cleaning and data processing at the computing layer, and then written to the destination (loading layer) after the processing is completed. database, data lake and data warehouse. In traditional ETL links, data collection tools and message queues are relatively heavy components, which may be maintained in different teams. When the upstream data source has business changes or these components need to be upgraded and maintained, the maintenance cost of the entire link will be very high. big.

By using Flink CDC to replace the data acquisition components and message queues in the above figure, the acquisition layer (Extraction) and the calculation layer (Transformation) are merged, which simplifies the entire ETL analysis link, and users can use fewer components to complete the data link. The overall architecture brings lower operation and maintenance overhead and lower hardware costs, better data link stability, and lower end-to-end data latency. In addition to the improvement of stability, another advantage of Flink CDC is that users only need to write SQL scripts to complete the cleaning, processing and synchronization of CDC data, which greatly reduces the user threshold.

In addition to the full-incremental integrated synchronization capability, Alibaba Cloud Flink CDC also provides many enterprise-level features such as automatic table structure change synchronization, whole database synchronization, sub-database and sub-table merge synchronization, etc., to facilitate users to quickly open up data silos and realize business value.

1.1 Full-incremental integrated synchronization

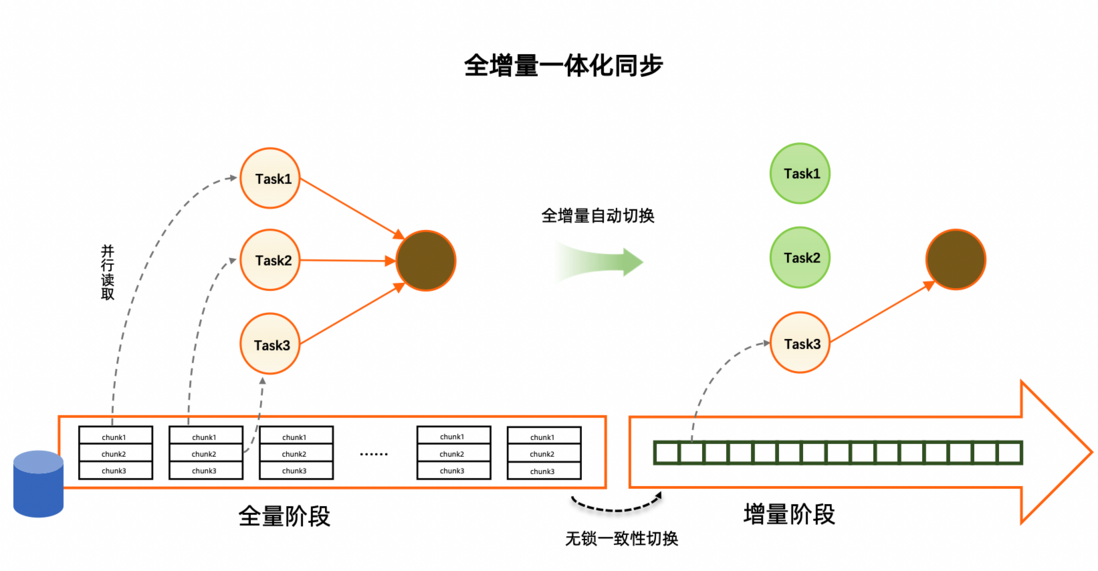

Flink CDC is the first to support four important features in the field of open source data integration through the incremental snapshot read algorithm, namely, lock-free reading, parallel reading, breakpoint resuming, and no loss or weight. Among them, the lock-free reading completely solves the deadlock risk of data synchronization on the upstream business database, and the parallel reading can well meet the needs of massive data synchronization. stability and reliability.

The core idea of the incremental snapshot reading algorithm is to divide the table into chunks for concurrent reading in the full reading phase. After entering the incremental phase, only one task is required to read Binlog logs concurrently. When switching, consistency is guaranteed through a lock-free algorithm. This design not only improves the reading efficiency, but also further saves resources and realizes the data synchronization of full incremental integration. With the automatic resource tuning feature provided by Alibaba Cloud's real-time computing products, the resources of Flink CDC jobs can be automatically scaled up and down without manual intervention.

1.2 Automatic synchronization of table structure changes

With the iteration and development of the business, the table structure change of the data source is a frequent operation. Users need to modify the data synchronization job in time to adapt to the latest table structure, which brings a large operation and maintenance cost, and also affects the stability of the synchronization pipeline and the timeliness of the data. Alibaba Cloud Flink supports automatic discovery and management of metadata through Catalog. With the CTAS (Create Table AS) syntax, users can synchronize data and automatically synchronize table structure changes through a single line of SQL.

Flink SQL> USE CATALOG holo;

Flink SQL> CREATE TABLE user AS TABLE mysql.`order_db`.`user`;The CTAS statement will be parsed into a Flink job for execution. The source of this Flink job supports reading data changes and table structure changes and synchronizing them to the downstream. The order of data and table structure changes can be guaranteed. The effect of synchronization of structure changes when the above CTAS statement is run is as follows shown.

Example If a new column age is added to the user table of upstream MySQL, and a record with an id of 27 and an age of 30 is inserted.

MySQL> ALTER TABLE `user` ADD COLUMN `age` INT;

MySQL> INSERT INTO `user` (id, name, age) VALUES (27, 'Tony', 30);The data and structure changes on the user table can be automatically synchronized to the user table of the downstream Hologres in real time. For the historical data with ids 12, 16 and 19, the newly added columns will be automatically filled with NULL values.

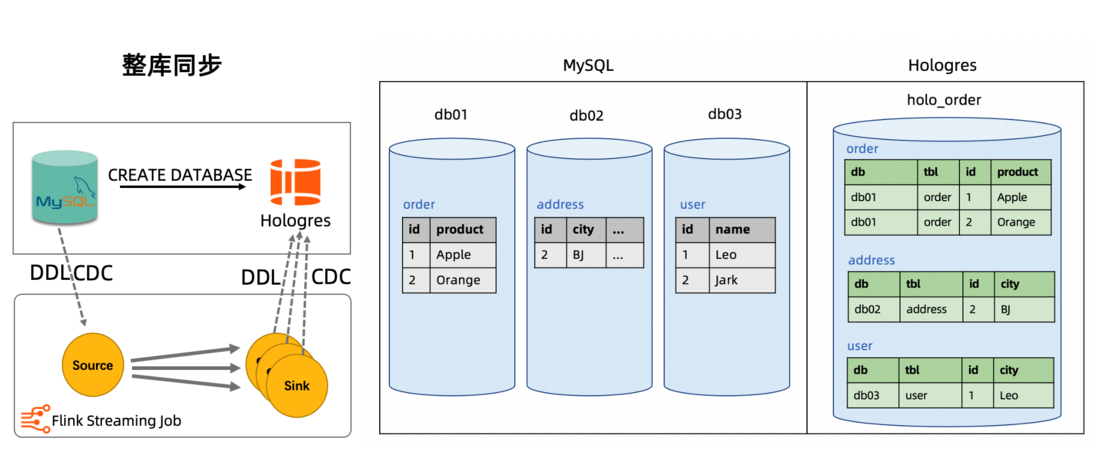

1.3 Whole library synchronization

In the construction of real-time data warehouse, users often need to synchronize the entire database to the data warehouse for further analysis. The method of one table and one synchronization job not only wastes resources, but also puts greater pressure on the upstream database. For such user pain points, Alibaba Cloud Flink CDC provides the entire database synchronization feature. The synchronization function of the whole database is realized through the CDAS (Create Database AS) syntax in conjunction with the Catalog.

Flink SQL> USE CATALOG holo;

Flink SQL> CREATE DATABASE holo_order AS DATABASE

mysql.`order_db` INCLUDING ALL TABLES;For example, MySQL Catalog and Hologres Catalog cooperate with CDAS syntax to complete the full incremental data synchronization from MySQL to Hologres. The CDAS statement will be parsed into a Flink job for execution. This Flink job automatically parses the table structure and corresponding parameters of the source table, and synchronizes the specified database or databases to the downstream Hologres data warehouse. The user does not need to write DDL statements in the whole process. Without the need for users to create tables in Hologres in advance, the entire database of data can be synchronized quickly.

By default, CDAS jobs provide table structure change synchronization capabilities. All table structure changes will be synchronized to the downstream Hologres real-time data warehouse in the order of occurrence. CDAS syntax also supports filtering of tables that do not need synchronization.

1.4 Merge synchronization of sub-database and sub-table

Sub-database and sub-table is a classic database design adopted by high-concurrency business systems. Usually, we need to aggregate the business data of sub-database and sub-table into a large table in a data warehouse to facilitate subsequent data analysis, that is, sub-database and sub-table merge and synchronization scene. For this scenario, Alibaba Cloud Flink CDC provides the feature of merging and synchronizing sub-databases and sub-tables. By supporting the regular expressions of the source database and the source table in CTAS syntax, the sub-tables of the source database can be efficiently merged and synchronized to the downstream Hologres data warehouse. .

Flink SQL> USE CATALOG holo;

Flink SQL> CREATE TABLE order AS TABLE mysql.`order_db.*`.`order_.*`;The source database name order_db. in the above CTAS statement is a regular expression, which can match the three databases order_db01, order_db02 and order_db03 under the current MySQL instance, and the source table name order_ is also a regular expression, which can match all the order_db01, order_db02 and order_db03 databases under the current MySQL instance. _ start with the table.

For sub-database and sub-table synchronization scenarios, users only need to provide the regular expression of sub-database sub-tables to merge and synchronize these sub-database sub-tables to the order table of the downstream Hologres data warehouse. Like other CDAS statements, the sub-database sub-table synchronization scenario provides automatic synchronization of table structure changes by default, and the schema of the downstream Hologres table is the widest schema after all sub-tables are merged. When the sub-database and sub-table are synchronized, the database name and table name to which each row of records belongs will be automatically written into the user table as two additional fields, the database name (the db column in the above figure), the table name (the tbl column in the above figure) and The original primary key (the id column in the above figure) will be used together as the joint primary key of the downstream Hologres user table to ensure the uniqueness of the primary key on the Hologres user table.

2. The core competence of Hologres

Alibaba Cloud Hologres is a self-developed one-stop real-time data warehouse engine that supports real-time writing, real-time updating, and real-time analysis of massive data, supports standard SQL (compatible with PostgreSQL protocol), and provides PB-level data multidimensional analysis (OLAP) and ad hoc analysis and The online data service (Serving) with high concurrency and low latency is deeply integrated with Alibaba Cloud Flink, MaxCompute, DataWorks, etc., to provide enterprises with an off-line integrated full-stack data warehouse solution.

2.1 High-performance real-time writing and updating

The timeliness of data writing is one of the important capabilities of real-time data warehouses. For latency-insensitive business queries such as BI, it may be acceptable if the write latency is several seconds or even minutes. For many production systems, such as real-time risk control, real-time large-screen and other scenarios, data is required to be written and visible. If there is a delay in writing, the latest data cannot be queried, which seriously affects online business decisions. In the entire data processing link of the real-time data warehouse, Hologres, as a one-stop real-time data warehouse engine, provides high-performance real-time writing of massive data, and data can be queried after writing without delay.

At the same time, in the data warehouse scenario, the data source is complex, and it will involve a lot of data update and correction scenarios. Hologres can provide high-performance Upsert capabilities through the primary key (PK), and the entire writing and update process ensures Exactly Once , to meet the needs of merging and updating data.

The following figure shows the test results of 10 concurrent real-time write 20-column column-stored tables under the Hologres 128C instance. The vertical axis represents the number of records written per second, and the horizontal axis represents 4 write scenarios:

- Append Only : The write table has no primary key, and the write capacity is 2.3 million+ RPS.

- INSERT : The write table has a primary key. If the primary key conflicts, the new row will be discarded. The write capacity is 2 million RPS.

- UPDATE-1 : The write table has a primary key, and the original data volume in the table is 200 million. According to the primary key Upsert, the write capacity is 800,000 RPS.

- UPDATE-2 : The write table has a primary key, and the data volume in the table is 2 billion. Upsert is performed according to the primary key, and the write capacity is 700,000 RPS.

2.2 Real-time OLAP Analysis

Hologres adopts scalable MPP full parallel computing, supports multiple storage modes such as row storage, column storage, row and column coexistence, and supports multiple index types. Through distributed processing of SQL and vectorized operators, CPU resources can be maximized to support sub-second analysis of massive data, without pre-computing, to support real-time multi-dimensional analysis, ad hoc analysis and other real-time OLAP analysis scenarios , and then directly and seamlessly connect to upper-layer applications/services to meet the WYSIWYG analysis experience.

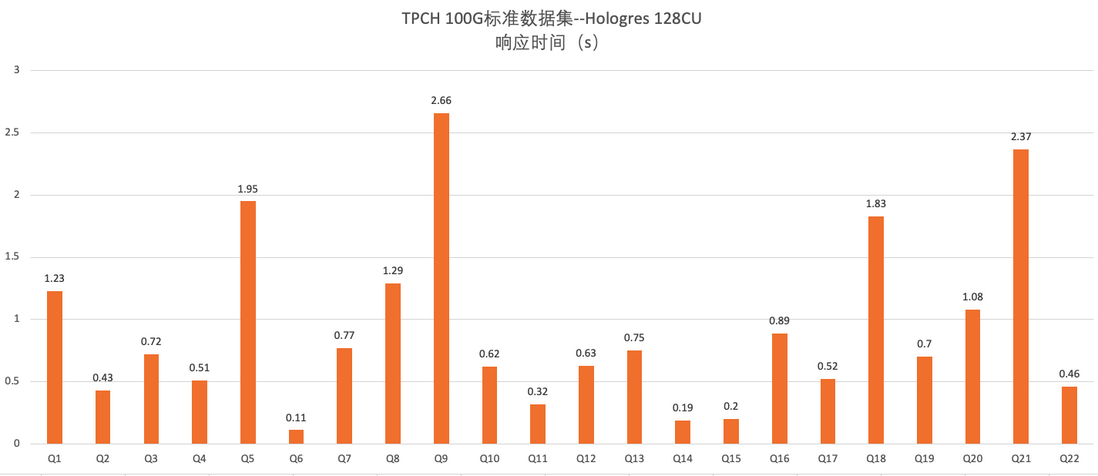

The following figure shows the test results under the TPCH 100G standard data set under the Hologres 128C instance, the horizontal axis represents the query, and the vertical axis is the response time:

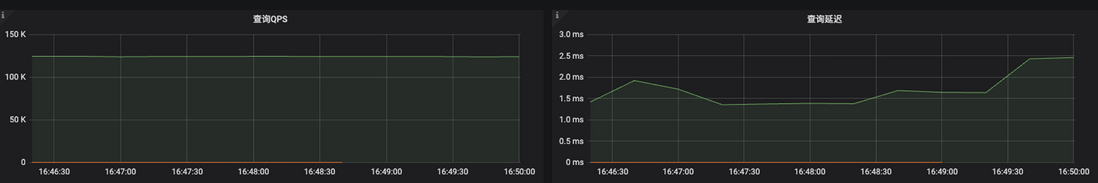

2.3 High-performance online services

With the wide application of real-time data warehouses, more and more enterprises use real-time data warehouses as online service systems to provide online queries. As the best implementation practice of HSAP (Hybrid Serving and Analytics Processing), Hologres not only has the ability to process analytical queries, but also has very powerful online service Serving capabilities (high QPS check), such as KV Click and vector search. In the KV check scenario, Holgres can support millions of QPS throughput and extremely low latency through the SQL interface. Through Hologres, one system and one data set can support two scenarios of OLAP analysis and online service at the same time, simplifying the data architecture.

The following figure shows the point-check test performance with CPU consumption of 25% under the Hologres 128C instance:

2.4 High Availability of Read-Write Separation

The real-time data warehouse Hologres provides high-QPS and low-latency writing capabilities, supports online service query scenarios, and supports complex multi-dimensional analysis OLAP queries. When different types and complex tasks are requested to the Hologres instance, Hologres not only needs to ensure the normal operation of the task, but also ensure the stability of the system. Currently, Hologres supports the high-availability architecture of one master and multiple slave sub-instances through shared storage, which realizes the complete read-write separation function and guarantees the SLA of different business scenarios.

- Read-write separation: The complete read-write separation function is implemented to ensure the SLA of different business scenarios. In scenarios such as high-throughput data writing and complex ETL jobs, OLAP queries, AdHoc queries, and online services, the system load is physically complete. Isolation, there will be no query task jitter due to write tasks.

- Isolation of multiple types of load resources: One master instance can be configured with four read-only instances, and the instances can be configured with different specifications according to business conditions. The system load is physically completely isolated to avoid jitter caused by mutual influence.

- Data millisecond-level asynchronous synchronization delay between instances: P99 within 5ms.

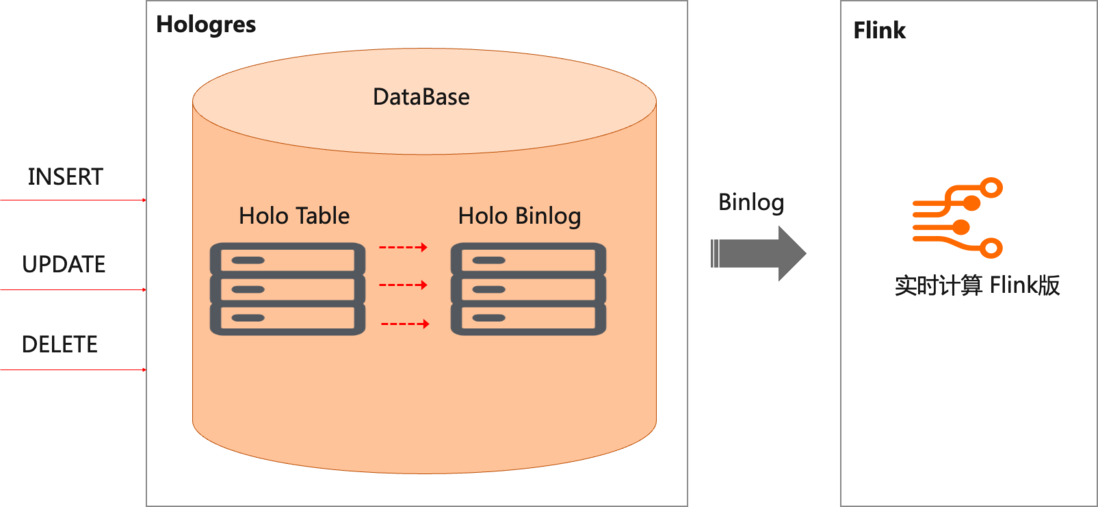

2.5 Binlog subscription

Similar to the Binlog concept in the traditional database MySQL, Binlog is used to record the modification records of table data in the database, such as Insert/Delete/Update operations. In Hologres, the Binlog of a table is data in a strong schema format. The sequence number (BigInt) recorded in the Binlog increases monotonically within a single shard, similar to the concept of Offset in Kafka. By consuming Hologres Binlog through Alibaba Cloud Flink, real-time development of the full link between data warehouse layers can be realized. Under the premise of layered governance, the end-to-end delay of data processing can be shortened, and the development efficiency of real-time data warehouse layers can be improved at the same time.

3. Alibaba Cloud Flink x Hologres one-stop enterprise-level real-time data warehouse solution

3.1 Real-time warehouse ETL

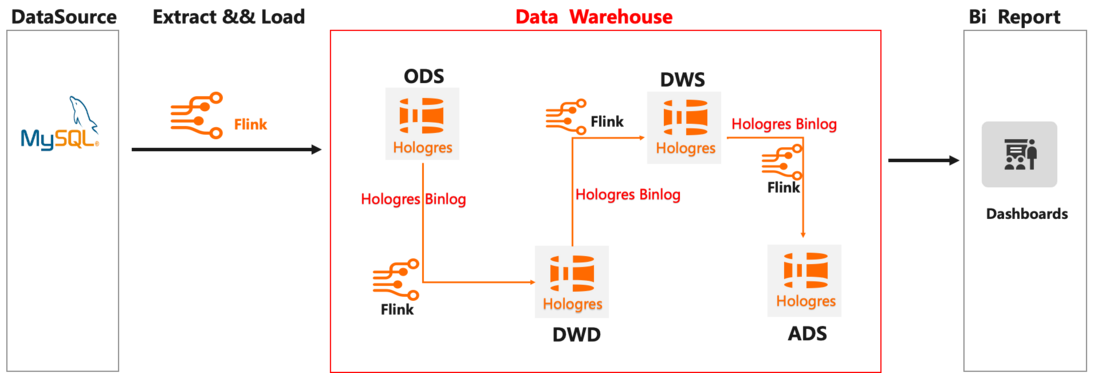

ETL (Extract-Transform-Load) is a relatively traditional data warehouse construction method. After the data Binlog of the business library is processed by Alibaba Cloud Flink's ETL, the data is written to the real-time data warehouse Hologres, and then various data query and analysis are performed. The core of the ETL method is to have a complete data warehouse model layering in the data warehouse, usually according to ODS (Operational Data Source) > DWD (Data Warehouse Detail) > DWS (Data Warehouse Summary) > ADS (Application Data Service) layering , the entire data warehouse link is relatively complete.

In this link, it is necessary to synchronize the data source such as MySQL's Binlog data to the message queue Kafka through Alibaba Cloud Flink CDC, and then filter, clean, and logically transform the ODS data through Alibaba Cloud Flink to form different services. The DWD data detail layer of the topic model, at the same time, sends the data to the Kafka cluster, and then the DWD data is lightly aggregated through Alibaba Cloud Flink to form the DWS light summary layer data that is more convenient for business queries, and then the data is aggregated. Write to the Kafka cluster. Finally, to meet the specific application layer requirements of the business, on the basis of the DWS layer, the ADS data application layer is formed through real-time processing by Alibaba Cloud Flink, which is written into the real-time data warehouse Hologres for storage and analysis, and supports various types of business reports, portraits, etc. Business scene.

The advantages of real-time data warehouse ETL processing are that the various levels of the data warehouse are relatively complete and the responsibilities are clear, but the disadvantage is that Flink combined with Kafka cluster maintenance is complex, the processing link is relatively long, the correction of historical data is complex, and the real-time data of the ADS application layer will be weak. Secondly, it is not convenient to query the data in each Kafka, it is not convenient to check the data quality, and it is not convenient to realize the dynamic change of the schema.

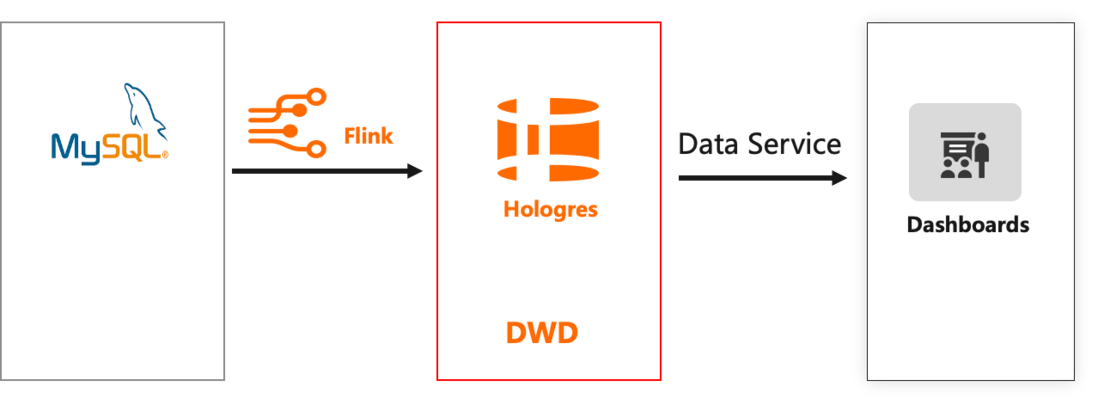

3.2 Real-time warehouse ELT

As business requirements for data timeliness are getting higher and higher, compared to ETL's complex processing links, businesses need to store data in real time faster, so ELT has become a more popular processing method. ELT is the abbreviation of English Extract-Load-Transform, we can understand ELT as a process of data migration and integration. In this process, we can collect data such as logs from data source relational databases such as MySQL, PostgresSQL, non-relational databases such as HBase, Cassandra and other business libraries, and message queues such as Datahub, buried points in Kafka, etc. Flink extracts in real time, and then loads it into Hologres for related OLAP analysis and online services.

In this link, Alibaba Cloud Flink is responsible for real-time data warehousing and data cleaning and association. The cleaned data is written to Hologres in real time, and Hologres directly stores detailed data. In Hologres, stratification can be simplified, focusing on the detail layer, with other summary layers as needed, and directly connecting to upper-layer query services such as reports and applications through the powerful data processing capabilities of Hologres. The upper-layer analysis SQL cannot be solidified. Usually, the SQL logic is encapsulated in the logical view (View) at the ADS layer, and the upper-layer application directly queries the encapsulated View to realize ad hoc query.

The construction of real-time data warehouses by means of ELT will bring relatively large benefits to data and business. The details are as follows:

- Flexibility: The original business data is directly put into the warehouse to form the data of the ODS layer. In the data warehouse, the View can flexibly transform the data, and the View can be adjusted according to the business at any time.

- Low cost: The structure of the data warehouse is relatively clear, the link is relatively short, and the operation and maintenance cost is relatively low.

- The index correction is simple: the upper layers are all View logic encapsulation, only need to update the data of the bottom table, no need to correct the data layer by layer.

However, this solution also has some shortcomings. When the logic of the View is complex and the amount of data is large, the query performance is low. Therefore, it is more suitable for scenarios where the data comes from the database and the buried point system, the QPS requirements are not high, the flexibility requirements are relatively high, and the computing resources are relatively sufficient.

3.3 Real-time data warehouse layering (Streaming Warehouse solution)

According to the traditional data warehouse development methodology, the ODS>DWD>DWS>ADS development method is adopted, and the combination of Alibaba Cloud Flink and Hologres Binlog supports real-time driving of stateful full-link events between layers. In this solution, data is stored in Hologres in real time through Alibaba Cloud Flink CDC, and then subscribed to Hologres Binlog through Alibaba Cloud Flink to realize continuous processing of data between different levels, and finally write to Hologres for docking application query.

Through this solution, Hologres can achieve the same capabilities as message queues such as Kafka and Datahub, and increase the capability of data reuse. The data of a Table can be provided to downstream Alibaba Cloud Flink task consumption, and can also be connected to upstream OLAP/online service query. It not only saves costs, but also simplifies the data warehouse architecture. At the same time, each layer in the data warehouse can be constructed and queried in real time to improve the efficiency of data flow.

3.4 Streaming batch data warehouse

In the real-time data warehouse, both stream computing tasks and batch tasks are developed in two workflows, which is the Kappa architecture mode. In this data warehouse architecture, there will be some problems such as high labor costs, redundant data links, inconsistent data calibers, and low development efficiency.

In order to solve these problems, Alibaba Cloud Flink+Hologres provides the capability of stream-batch integration. In this scenario, the input layer is unified into Hologres, and the capability of streaming and batch processing is achieved through a set of business logic codes. The Stream task of Flink SQL consumes Hologres Binlog to provide streaming processing, and the Batch task of Flink SQL reads the Hologres table. The raw data reaches the batch processing capability. After the unified calculation and processing by Flink, it is unifiedly written and stored to Hologres.

Alibaba Cloud Flink combines the streaming-batch integration technology of Hologres to unify the data input layer, real-time offline computing layer, and data analysis storage layer, which greatly improves the efficiency of data development and ensures data quality.

4. Typical application scenarios

Alibaba Cloud Flink and Hologres are deeply integrated to help enterprises quickly build a one-stop real-time data warehouse:

- Hologres can be written to Hologres in real time through Alibaba Cloud Flink, high-performance writing and updating, and data writing can be seen immediately without delay, meeting the high-performance and low-latency writing requirements of real-time data warehouses;

- Hologres source table data can be read through Alibaba Cloud Flink's full-scale reading, Binlog reading, CDC reading, and full-incremental integration, without the need for additional components, unified computing and storage, and speeding up data flow efficiency;

- The Hologres dimension table can be read through Alibaba Cloud Flink to facilitate high-performance dimension table association, data widening and other application scenarios;

- Alibaba Cloud Flink is connected with Hologres metadata, and through the Hologres Catalog, metadata is automatically discovered, which greatly improves the efficiency and correctness of job development.

The real-time data warehouse standard solutions of Alibaba Cloud Flink and Hologres can support a variety of real-time data warehouse application scenarios, such as real-time recommendation, real-time risk control, etc., to meet the real-time analysis needs of enterprises. Below we will introduce the typical application scenarios of Alibaba Cloud Flink + Hologres to help businesses build real-time data warehouses more efficiently.

4.1 Real-time warehousing of massive data

The first step in building a real-time data warehouse is the real-time warehousing of massive data. Based on Alibaba Cloud Flink CDC, massive data can be synchronized to the real-time data warehouse simply and efficiently, and incremental data and table structure changes can be synchronized to the data warehouse in real time. in the warehouse. The whole process only needs to define a SQL of CREATE DATABASE AS DATABASE on Alibaba Cloud Flink (for detailed steps, please refer to the Quick Start of Real-time Warehousing ). After testing, for the TPC-DS 1T data set in MySQL, using Alibaba Cloud Flink 64 concurrency, it only takes 5 hours to fully synchronize to Hologres, and the TPS is about 300,000 per second. In the incremental Binlog synchronization stage, Alibaba Cloud Flink is used for single concurrency, and the synchronization performance reaches 100,000 records per second.

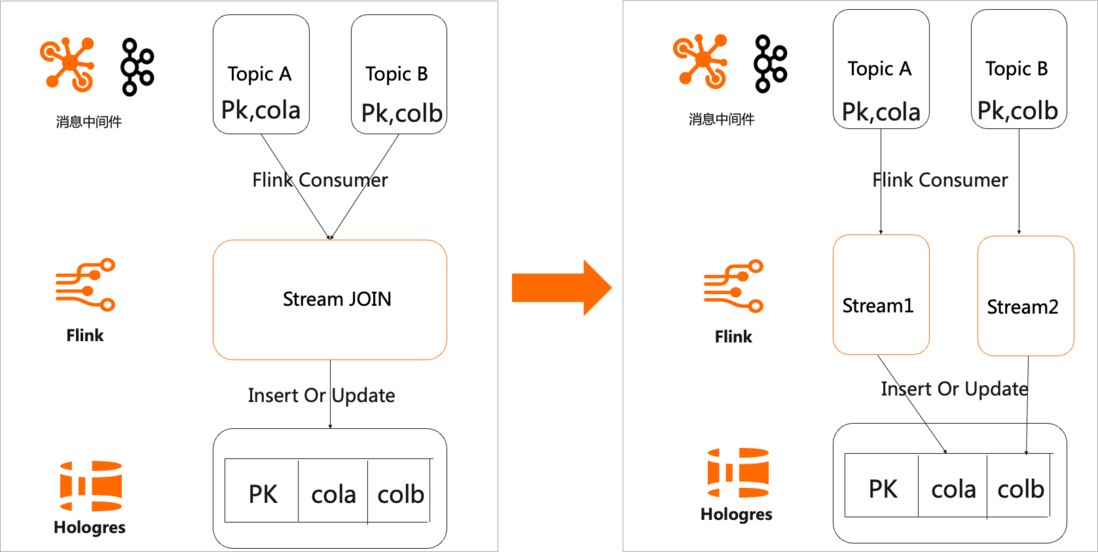

4.2 Dual Stream Join

After the data is stored in real time to form the data of the ODS layer, it is usually necessary to flatten the fact data and dimensional data into a wide table in real time by using the capabilities of Flink multi-stream join. Combined with the excellent multi-dimensional analysis performance of Hologres wide table, it helps to speed up upper-layer business queries. . Alibaba Cloud Flink supports reading Hologres tables in a fully incremental and integrated mode, that is, reading the full amount of data first and then smoothly switching to reading CDC data. The whole process ensures that the data is not duplicated or lost. Therefore, based on Alibaba Cloud Flink, it is very convenient to process and widen the ODS layer data of Hologres in real time, and complete the wide table model construction of the DWD layer.

4.3 Wide table Merge

In data warehouses, we usually need to care about modeling. Data models are usually divided into four types: wide table model, star model, snowflake model, and constellation model (both supported by Hologres). Here we focus on the wide table model. Model construction. A wide table model usually refers to a model table that associates indicators, dimension tables, and attributes related to business entities, and can also generally refer to a wide table formed by correlating multiple fact tables and multiple dimension tables.

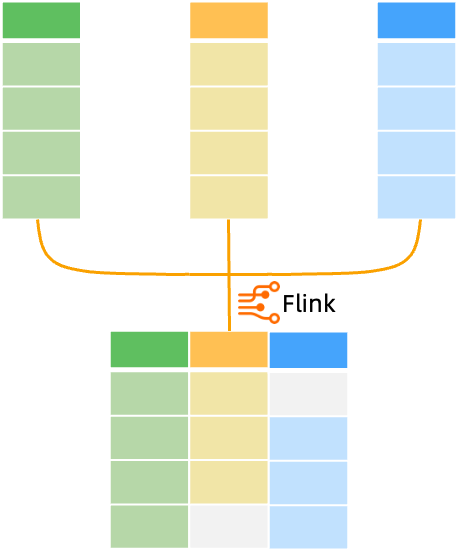

The usual practice of wide table construction is to use Alibaba Cloud Flink's dual-stream Join, including Regular Join, Interval Join, and Temporal Join. For the scenario of primary key association (that is, the Join condition is the primary key of the two streams), we can sink the work of Join to Hologres, and realize the wide table Merge through the local update function of Hologres, thus eliminating the need for Flink Join. Condition maintenance costs. For example, in the advertising scenario, a Flink task processes the advertising exposure data stream, counts the exposure of each product, and uses the product ID as the primary key to update the product index wide table. At the same time, another Flink task processes the ad click data stream, counts the clicks of each product, and also uses the product ID as the primary key to update the product index wide table. The entire process does not require dual-stream Join, and finally Hologres will complete the assembly of the entire row of data by itself. Based on the obtained wide table of product indicators, users can easily analyze advertising marketing in Hologres, such as calculating the CTR=number of clicks/number of impressions of a product. The following diagram and code example show how to change from a two-stream Join to a wide-table Merge.

CREATE TABLE ods_ad_click (

product_id INT,

click_id BIGINT,

click_time TIMESTAMP

) WITH ('connector'='datahub', 'topic'='..');

CREATE TABLE ods_ad_impressions (

product_id INT,

imp_id BIGINT,

imp_time TIMESTAMP

) WITH ('connector'='datahub', 'topic'='..');

CREATE TABLE dws_ad_product (

product_id INT,

click_cnt BIGINT,

imp_cnt BIGINT,

PRIMARY KEY (product_id) NOT ENFORCED

) WITH ('connector'='hologres','insertOrUpdate'='true');

INSERT INTO dws_ad_product (product_id, click_cnt)

SELECT product_id, COUNT(click_id) as click_cnt

FROM ods_ad_click

GROUP BY product_id;

INSERT INTO dws_ad_product (product_id, imp_cnt)

SELECT product_id, COUNT(imp_id) AS imp_cnt

FROM ods_ad_impressions

GROUP BY product_id;Using the Merge capability of the Hologres wide table can not only improve the development efficiency of stream jobs, but also reduce the resource consumption required by stream jobs, and make it easier to maintain each stream job so that jobs will not affect each other. However, it should be noted that the wide table Merge is only used in the primary key association scenario, and is not suitable for the common star model and snowflake model in the data warehouse. Therefore, in most scenarios, Flink's dual-stream Join is still required to complete the wide table. modeling.

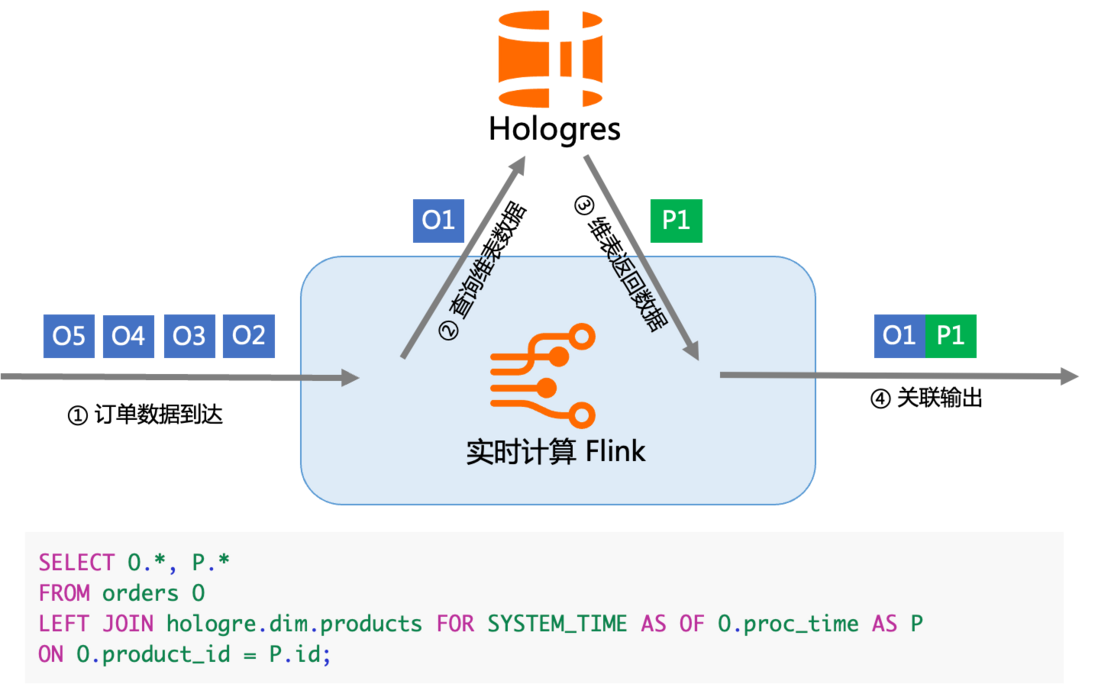

4.4 Real-time dimension table Lookup

In the real-time data warehouse, in the process of constructing the data of the DWD layer, Alibaba Cloud Flink is generally used to read the ODS data on message queues such as Datahub, and at the same time, it is necessary to associate dimension tables to form the DWD layer. In the calculation process of Alibaba Cloud Flink, the ability to efficiently read dimension tables is required. Hologres can meet the needs of such scenarios through the high-QPS and low-latency query capabilities. For example, when we need to join dimension table through ODS data to form DWD layer, we can use the lookup capability provided by Hologres. In this mode, the primary key lookup mode of row-stored table is usually used to improve the Lookup efficiency of dimension table. The specific implementation is similar to the following:

V. Typical User Cases

Relying on Alibaba Cloud's Flink+Hologres solution, enterprises can quickly build a one-stop real-time data warehouse to help real-time recommendation, real-time risk control, real-time large-screen and other business scenarios, realize fast data processing, and speedy exploration and query. At present, the solution has been implemented in Alibaba and many cloud enterprises, and has become one of the best solutions for real-time data warehouses.

Taking the business of a well-known global TOP20 game company as an example, it replaced the open-source Flink+Presto+HBase+ClickHouse architecture through Alibaba Cloud's Flink+Hologres real-time data warehouse solution, simplifying data processing links, unified data warehouse architecture, unified storage, and query performance The increase of 100% or more can perfectly support multiple scenarios such as data analysis, advertisement placement, real-time decision-making, etc., and help the rapid business growth.

5.1 Business difficulties: complex ETL links and slow OLAP queries

The customer's original data warehouse architecture uses a full set of open source components. The architecture diagram is as follows. Among them, the open source Flink does ETL processing, and after processing, it is written to OLAP engines such as ClickHouse and StarRocks.

The main pain points encountered by this architecture are:

1. The ETL link is complex

- In order to solve the real-time ETL of the data, the customer uses Flink CDC + Hudi to do a stream-batch integration. However, because the upstream business data often changes the table structure, and the open source Flink CDC lacks the ability of Schema Evolution, each time the table structure changes, the task needs to be restarted, which is very troublesome and wastes a lot of development time.

- The query performance of Hudi does not meet the business requirements, and a Presto needs to be added to accelerate the query, resulting in link redundancy.

2. The OLAP architecture is redundant and the query is slow

Clients mainly rely on purchase volume distribution as an important means of game promotion. In order to solve the need for query acceleration in real-time decision-making scenarios of advertising attribution, multiple clusters such as open source Presto, ClickHouse, and HBase are deployed to build a hybrid OLAP platform. The problems that arise are:

- The platform needs to maintain multiple clusters, which makes operation and maintenance very complicated.

- Development requires switching between various SQLs, causing a lot of trouble for the development team.

- Since ClickHouse lacks a primary key, the Last Click model is required for attribution analysis, which brings a lot of extra work.

- At the same time, the query performance of the OLAP engine cannot meet business needs well, and there is no way to make real-time decisions based on data.

- Data needs to be stored in multiple OLAP systems, resulting in storage redundancy and a sharp increase in cost pressure.

Based on the above pain points, the customer started to re-select the technology and used Alibaba Cloud Flink+Hologres to replace the existing open source data warehouse architecture.

5.2 Architecture upgrade: Alibaba Cloud Flink+Hologres unified data storage and service

The data link replaced by Alibaba Cloud Flink+Hologres is as follows:

- Data source data is written to Kafka through Flink CDC capability for pre-cleaning, and after cleaning, ETL processing is performed through Alibaba Cloud Flink.

- Alibaba Cloud Flink writes the data after ETL to Hologres in real time, and replaces Kafka as the intermediate data layer of the real-time data warehouse through Hologres, unifying the stream batch storage.

- Processes are aggregated in Hologres according to the ODS > DWD > DWS layers. At the ODS layer, Alibaba Cloud Flink subscribes to Hologres Binlog, and writes it to the Hologres DWD layer after calculation. The DWD layer is aggregated into the DWS layer in Hologres, and finally the DWS is connected to the upper-layer report and data services.

- In order to unify storage, the original offline Hive data is also replaced with Alibaba Cloud MaxCompute, with MaxCompute as the main offline link. Due to the efficient interoperability between Hologres and MaxCompute, Hologres can query MaxCompute offline through external tables, and archive historical data to MaxCompute on a regular basis.

5.3 Business benefits: unified architecture and 100% performance improvement

After the architecture upgrade, the customer's significant business benefits are as follows:

- Relying on Alibaba Cloud Flink+Hologres, data can be written to Hologres in real time, and the data can be seen immediately after writing. Hologres has a primary key, which can support high-performance write and update capabilities, with a millisecond-level delay for millions of updates.

- Alibaba Cloud Flink provides the capability of Schema Evolution, which automatically senses upstream table structure changes and synchronizes Hologres. The transformed real-time ETL link is completed by subscribing to Hologres Binlog logs, reducing link maintenance costs.

- The data query export is unified through Hologres. According to the actual measurement by customers, Hologres can achieve millisecond-level delay, which is 100% or more improved compared to the open source ClickHouse, and the JOIN query performance is 10 times faster.

- After the upgrade, the data warehouse architecture has become more flexible and concise, and the storage is unified. Only one system is needed to meet business needs, reducing operation and maintenance pressure and operation and maintenance costs.

understand more:

[1] Aliyun real-time computing Flink: https://www.aliyun.com/product/bigdata/sc

[2] Aliyun real-time data warehouse Hologres: https://www.aliyun.com/product/bigdata/hologram

[3] Flink X Hologres joint solution: https://developer.aliyun.com/article/786306

[4] Quick start for real-time data warehousing and lake entry: https://help.aliyun.com/document_detail/374270.html

2022 4th Real-time Computing FLINK Challenge

490,000 bonuses are waiting for you!

Continue the "Encouraging Teacher Program" and win generous gifts!

Click to enter the official website of the competition to register for the competition

For more technical issues related to Flink, you can scan the code to join the community DingTalk exchange group to get the latest technical articles and community dynamics as soon as possible. Please pay attention to the public number~

Recommended activities

Alibaba Cloud's enterprise-level product based on Apache Flink - real-time computing Flink version is now open:

99 yuan to try out the Flink version of real-time computing (yearly and monthly, 10CU), and you will have the opportunity to get Flink's exclusive custom sweater; another package of 3 months and above will have a 15% discount!

Learn more about the event: https://www.aliyun.com/product/bigdata/en

**粗体** _斜体_ [链接](http://example.com) `代码` - 列表 > 引用。你还可以使用@来通知其他用户。