1. Background

In Flutter 3.0, officially released by Google in May 2022, the Impeller renderer was merged from its standalone repository into the Flutter Engine trunk for further iteration. This was the first time, since the Flutter team began pushing a reimplementation of Flutter's rendering backend in 2021, that Impeller's role was made clear: in the future it will replace Skia as Flutter's primary rendering solution. Impeller is the Flutter team's major attempt to thoroughly solve the jank caused by runtime compilation of shaders generated from SkSL (Skia Shading Language). So what is the shader compilation jank problem?

As is well known, Flutter uses Skia as its underlying 2D graphics rendering library, and Skia defines its own shading language, SkSL (Skia Shading Language), which is a variant of GLSL. What does that mean? In plain terms, SkSL is designed around a fixed version of GLSL syntax. Its purpose is to smooth over the differences in shader syntax between GPU driver APIs so that it can be translated into the target shading language of each backend. SkSL can therefore be thought of as an intermediate, pre-compilation language for shaders.

In Flutter's rasterization phase, the first time a shader is needed Skia generates SkSL from the drawing commands and device parameters, translates the SkSL into the shader language of the specific backend (GLSL, GLSL ES, or Metal SL), and then compiles it into a shader program on the device. Compiling shaders can take up to hundreds of milliseconds, which causes tens of frames to be dropped. To locate shader compilation jank, check the trace for calls to GrGLProgramBuilder::finalize.

The Flutter team first took note of Flutter's jank problem in 2015. The most important optimization introduced at that time was AOT compilation, which improved the execution efficiency of Dart code, but new problems came with it. Jank can be divided into early-onset jank (jank on first run) and non-early-onset jank. The essence of early-onset jank is that compiling shaders at runtime blocks the Flutter Raster thread from submitting rendering instructions. In native development, developers usually build applications on system-level UI frameworks such as UIKit and seldom use custom shaders, so native applications rarely suffer performance problems caused by shader compilation; more commonly, the cause is user logic keeping the UI thread too busy.

To mitigate early-onset jank, the Flutter team introduced the SkSL warm-up scheme. Its principle is to move the generation of some performance-sensitive SkSL forward to build time; the SkSL still has to be converted to MSL at runtime before it can execute on the GPU. The warm-up scheme requires capturing SkSL on a real device during development and exporting it to a configuration file, and the captured SkSL can then be bundled into the application through build parameters at packaging time. In addition, because SkSL generation captures specific parameters of the user's device, warm-up configuration files cannot be shared between different devices, and the performance improvement this scheme brings is very limited.
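The difference between compiling on first use and warming up can be illustrated with a small simulation. This is only a sketch: `ShaderCache`, `WarmUp`, and the string keys are hypothetical names invented for illustration, not Skia or Flutter APIs, and a cache miss merely stands in for an expensive runtime compile.

```cpp
#include <cstddef>
#include <string>
#include <unordered_map>
#include <vector>

// Hypothetical stand-in for a compiled GPU program.
struct CompiledShader {
  std::string key;
};

// Simulated shader cache: every miss represents an expensive runtime compile.
class ShaderCache {
 public:
  // Returns the compiled shader, compiling it on first use.
  const CompiledShader& GetOrCompile(const std::string& key) {
    auto it = cache_.find(key);
    if (it == cache_.end()) {
      ++compile_count_;  // In a real engine, this is where a frame would stall.
      it = cache_.emplace(key, CompiledShader{key}).first;
    }
    return it->second;
  }

  // Warm-up: compile a previously recorded list of keys before the first frame.
  void WarmUp(const std::vector<std::string>& recorded_keys) {
    for (const auto& key : recorded_keys) {
      GetOrCompile(key);
    }
  }

  std::size_t compile_count() const { return compile_count_; }

 private:
  std::unordered_map<std::string, CompiledShader> cache_;
  std::size_t compile_count_ = 0;
};

// Counts how many compiles happen while "drawing" the given shader keys.
inline std::size_t CompilesDuringFrames(ShaderCache& cache,
                                        const std::vector<std::string>& draws) {
  const std::size_t before = cache.compile_count();
  for (const auto& key : draws) {
    cache.GetOrCompile(key);
  }
  return cache.compile_count() - before;
}
```

In this model, a shader that was not part of the recorded warm-up list still compiles during a frame, which mirrors why a warm-up file captured on one device or one code path gives only partial coverage.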

The warm-up scheme faced another challenge: Apple announced in 2018 that it would deprecate OpenGL across its ecosystem in favor of the new Metal renderer. In response, Flutter completed its adaptation to the Metal renderer in version 2.5. Contrary to expectations, however, the switch to Metal made early-onset jank worse. The warm-up scheme depends on the Skia team supporting precompilation for Metal, and because of scheduling issues on the Skia team, the warm-up scheme was for a time unavailable on the Metal backend. Meanwhile, community feedback about jank on iOS grew louder, and at one point Flutter Engine builds that disabled Metal and fell back to the GL backend circulated in the community. In contrast, because Android can cache shader machine code, which iOS cannot, jank is much less likely to appear on Android than on iOS.

Before Impeller, Flutter's rendering performance optimizations mostly stayed at the layers above Skia, such as raising the priority of the rendering thread or switching to CPU drawing when shader compilation took too long. To solve jank once and for all, the Flutter team decided to rethink how shaders are used and rewrite the rendering backend; the result is the new Impeller rendering backend.

2. Metal evolution

Generally speaking, different rendering backends use different shader languages, but the execution process is essentially the same. As with common scripting languages such as JavaScript, a shader program written in any of these languages must go through [lexical analysis] -> [syntax analysis] -> [Abstract Syntax Tree (hereinafter AST) construction] -> [IR optimization] -> [binary generation] before it can execute on GPU hardware.

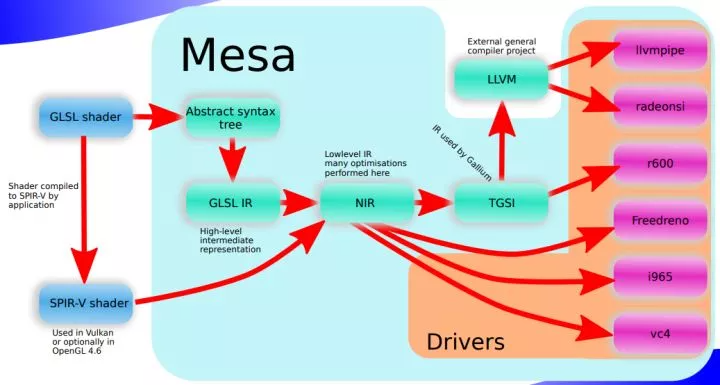

Usually the shader compilation process is implemented in the vendor-provided driver, and its details are invisible to upper-level developers. Mesa is an open-source 3D graphics library under the MIT license that provides an open-source implementation of the OpenGL API. The following figure shows how Mesa processes GLSL, from which the complete shader processing pipeline can be observed.

After Mesa parses the GLSL source provided by the upper layer into an AST, it first compiles the AST into GLSL IR, which is a high-level IR. After optimization, a low-level IR called NIR is generated, and NIR is then combined with the hardware information of the current GPU to produce the actual executable. Different IRs serve optimizations of different granularity: the low-level IR is geared toward generating executables, while the high-level IR supports coarse-grained optimizations such as dead code elimination. Compilers for common high-level languages follow the same pattern; Swift, for example, lowers a high-level IR (Swift SIL) to a low-level IR (LLVM IR).

With the development of Vulkan, OpenGL 4.6 also introduced support for the SPIR-V format. Note that both Vulkan and OpenGL are cross-platform graphics APIs, and both are Khronos standards. SPIR-V (Standard Portable Intermediate Representation) is a standardized IR that unifies graphics shader languages and parallel computing (GPGPU applications). It allows different shader languages to be converted into a standard intermediate representation, which can then be optimized, translated into other high-level languages, or passed directly to Vulkan, OpenGL, or OpenCL drivers for execution. SPIR-V removes the need for a high-level language front-end compiler in the device driver, which greatly reduces driver complexity and lets a wide range of language and framework front ends run on different hardware architectures. In Mesa, shader programs in SPIR-V format can be fed directly into the NIR layer at compile time, which reduces the overhead of compiling shader machine code and improves rendering performance.

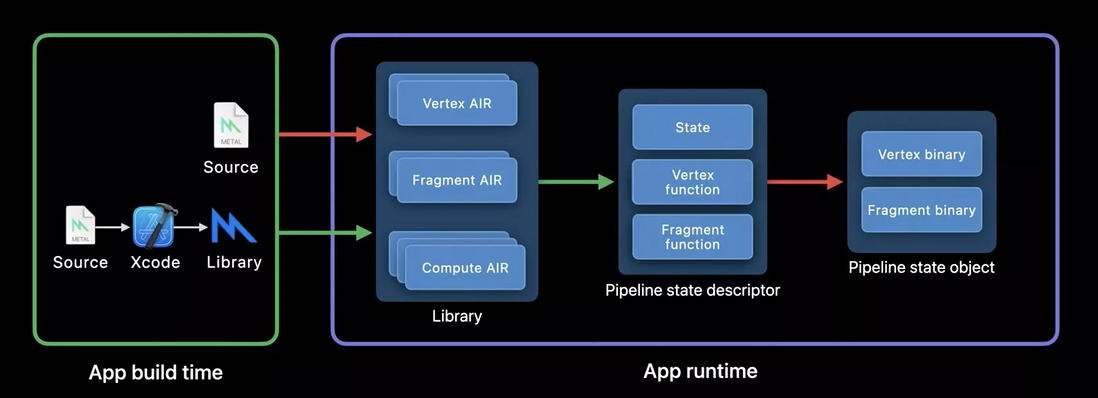

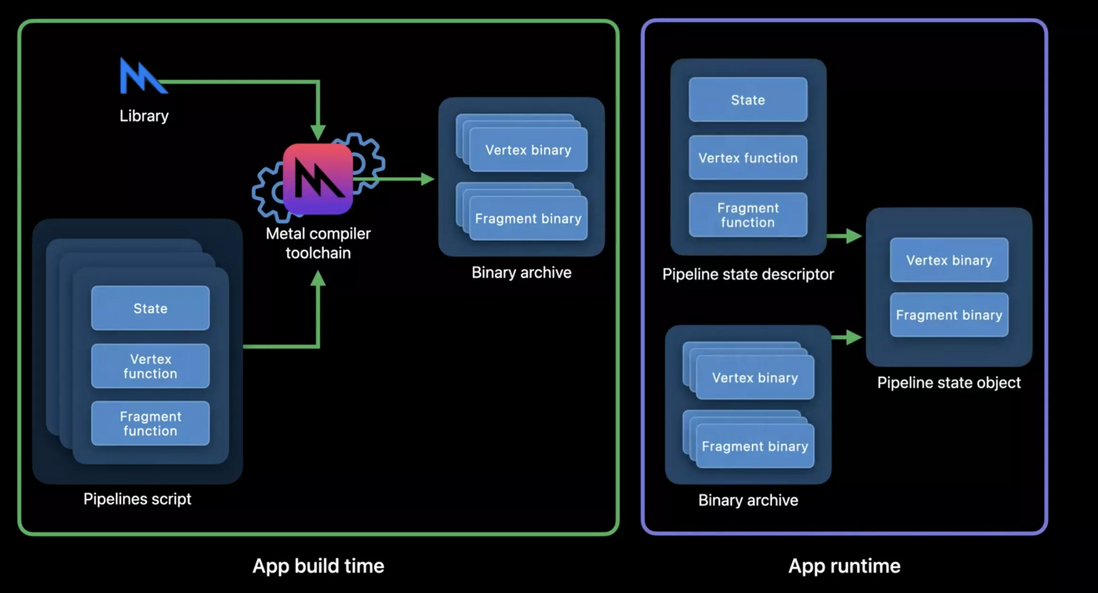

In Metal applications, shader source code written in Metal Shading Language (hereinafter MSL) is first compiled into an intermediate product in AIR (Apple IR) format. If the shader source is referenced in the project as a string, this step is performed on the user's device at runtime. If the shader is added as a target of the project, the shader source is compiled at build time along with the project in Xcode to produce a MetalLib, a container format designed to hold AIR. At runtime, the Metal Compiler Service then JIT-compiles the AIR into executable machine code according to the hardware information of the current device's GPU.

Compared with shipping source, packaging shaders as a MetalLib reduces the overhead of generating shader machine code at runtime. Shader machine code is compiled every time a pipeline state object (hereinafter PSO) is created. A PSO holds all the state associated with the current rendering pipeline, including the shader machine code for each stage, the color blending state, depth information, stencil mask state, multisampling information, and more. A PSO is usually designed to be immutable: to change any state in a PSO, you create a new PSO as a modified copy.
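The immutability rule can be modeled in a few lines. The sketch below is not Metal's API: `PipelineDescriptor`, `PipelineState`, and `PipelineLibrary` are hypothetical types with a heavily reduced set of fields, used only to show that changing one piece of state yields a new PSO while the original stays untouched.

```cpp
#include <cstddef>
#include <map>
#include <memory>
#include <string>
#include <tuple>
#include <utility>

// Hypothetical, heavily reduced pipeline descriptor.
struct PipelineDescriptor {
  std::string vertex_function;
  std::string fragment_function;
  bool blending_enabled = false;
  int sample_count = 1;

  // Ordering so the descriptor can be used as a map key.
  bool operator<(const PipelineDescriptor& o) const {
    return std::tie(vertex_function, fragment_function, blending_enabled,
                    sample_count) <
           std::tie(o.vertex_function, o.fragment_function, o.blending_enabled,
                    o.sample_count);
  }
};

// Immutable once built: the descriptor it was created from never changes.
class PipelineState {
 public:
  explicit PipelineState(PipelineDescriptor desc) : desc_(std::move(desc)) {}
  const PipelineDescriptor& descriptor() const { return desc_; }

 private:
  const PipelineDescriptor desc_;
};

// Builds one PSO per distinct descriptor and reuses it afterwards.
class PipelineLibrary {
 public:
  std::shared_ptr<const PipelineState> Get(const PipelineDescriptor& desc) {
    auto it = pipelines_.find(desc);
    if (it != pipelines_.end()) {
      return it->second;
    }
    ++build_count_;  // Stands for the expensive shader compile and link step.
    std::shared_ptr<const PipelineState> pso =
        std::make_shared<PipelineState>(desc);
    pipelines_.emplace(desc, pso);
    return pso;
  }

  std::size_t build_count() const { return build_count_; }

 private:
  std::map<PipelineDescriptor, std::shared_ptr<const PipelineState>> pipelines_;
  std::size_t build_count_ = 0;
};
```

Requesting the same descriptor twice returns the same object; toggling `blending_enabled` on a copy of the descriptor produces a second, independent PSO.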

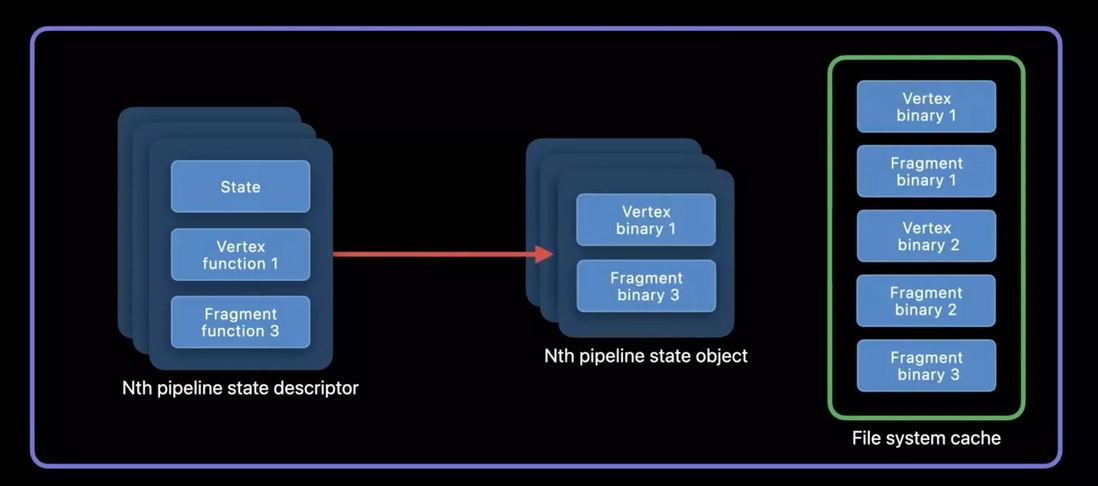

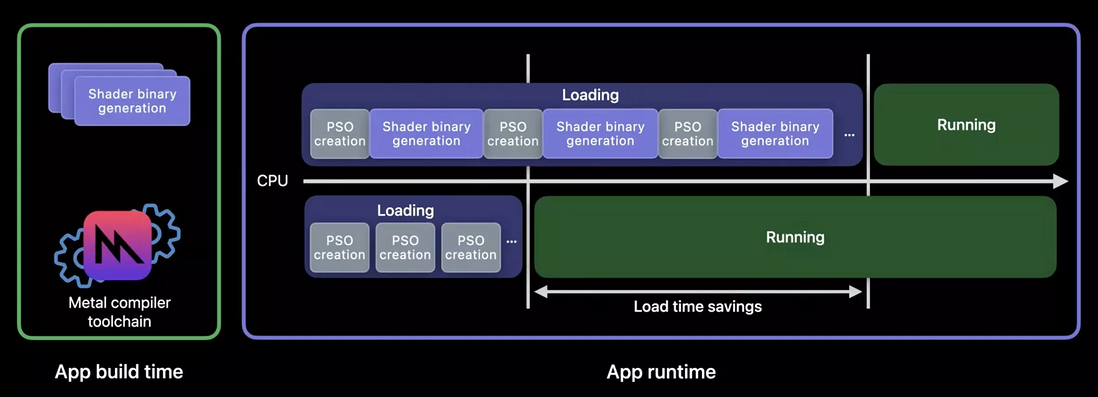

Since PSOs may be created many times during the application life cycle, Metal caches all compiled shader machine code to avoid repeated compilation and to speed up subsequent PSO creation. This cache is called the Metal Shader Cache; it is managed entirely inside Metal and is not under the developer's control. Applications usually create a large number of PSOs at once during startup. Because Metal has no cached shader compilations at that point, creating the PSOs forces every shader through the full compilation from AIR to machine code, and this concentrated compilation phase is a CPU-intensive operation. Games usually use a loading screen to prepare the PSOs needed for the next scene before the player enters a new level. In conventional apps, however, users expect to tap and go. Once shader compilation takes longer than 16 ms, the user perceives noticeable stuttering and dropped frames.

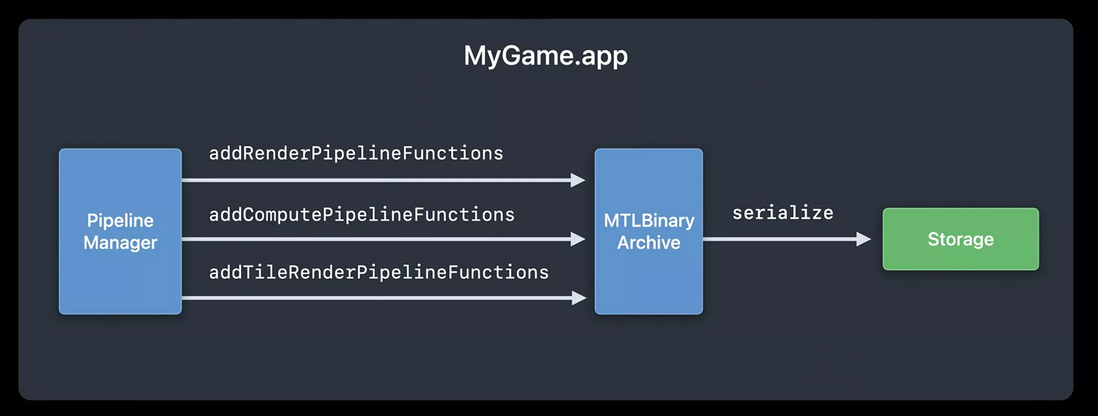

With Metal 2, Apple first gave developers a way to control shader caching manually: the Metal Binary Archive. The Metal Binary Archive sits above the Metal Shader Cache in the cache hierarchy, which means that the Metal Binary Archive is consulted first when a PSO is created. At runtime, developers can manually add performance-sensitive shader functions to a Metal Binary Archive object and serialize it to disk through the Metal Pipeline Manager. On the next cold start, creating the same PSO becomes a lightweight operation with no shader compilation overhead.

The cached Binary Archive can even be redistributed to other users with the same device. If the machine code cached in a local Binary Archive does not match the hardware of the current device, Metal falls back to the full compilation pipeline so that the application still runs correctly. The game "Fortnite" needs to create up to 1,700 PSOs during startup. By using the Metal Binary Archive to accelerate PSO creation, its startup time dropped from 1m26s to 3s, a speedup of about 28 times.
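The lookup order and the fallback described above can be sketched as a simulation. Nothing here is Metal's real API: `PipelineLoader`, `ArchiveEntry`, and the architecture strings are hypothetical, and the "compile" step is just a map insertion.

```cpp
#include <string>
#include <unordered_map>
#include <utility>

// Where the machine code for a pipeline came from in this simulation.
enum class PipelineSource { kBinaryArchive, kShaderCache, kFullCompile };

// Hypothetical archive entry: machine code built for one GPU architecture.
struct ArchiveEntry {
  std::string gpu_arch;
  std::string machine_code;
};

class PipelineLoader {
 public:
  explicit PipelineLoader(std::string device_arch)
      : device_arch_(std::move(device_arch)) {}

  void AddArchiveEntry(const std::string& function_name, ArchiveEntry entry) {
    archive_[function_name] = std::move(entry);
  }

  // Consults the binary archive first, then the implicit shader cache, and
  // finally falls back to a full compile, which also fills the shader cache.
  PipelineSource Load(const std::string& function_name) {
    const auto archived = archive_.find(function_name);
    if (archived != archive_.end() &&
        archived->second.gpu_arch == device_arch_) {
      return PipelineSource::kBinaryArchive;
    }
    if (shader_cache_.count(function_name) > 0) {
      return PipelineSource::kShaderCache;
    }
    shader_cache_[function_name] = "compiled:" + function_name;
    return PipelineSource::kFullCompile;
  }

 private:
  std::string device_arch_;
  std::unordered_map<std::string, ArchiveEntry> archive_;
  std::unordered_map<std::string, std::string> shader_cache_;
};
```

An archive entry built for a different architecture is simply skipped, so the first load pays the full compile and later loads hit the implicit cache.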

The Metal Binary Archive lets the GPU access the shader cache in the file system directly through memory mapping, so opening a Metal Binary Archive consumes some of the device's precious virtual address space. Rather than caching every shader function in one archive, it is wiser to partition the cache by business scenario and to close the corresponding archive promptly after a page exits, releasing virtual memory that is no longer needed.

Moreover, the black-box management of the Metal Shader Cache cannot guarantee that a shader will not be recompiled when it is used, whereas the Metal Binary Archive guarantees that the cached shader functions remain available throughout the application life cycle. Although the Metal Binary Archive lets developers manage the shader cache manually, it still has to be built by collecting machine code at runtime, so it cannot guarantee a smooth experience the first time the application is installed. Fortunately, at WWDC 2022, Metal 3 finally closed this gap by giving developers the ability to build a Metal Binary Archive offline.

Building a Metal Binary Archive offline requires a new configuration file, the Pipeline Script. A Pipeline Script is essentially a JSON representation of a Pipeline State Descriptor, which configures the state needed to create a PSO. Developers can write it by hand or generate it by capturing PSOs at runtime. Given a Pipeline Script and a MetalLib, a Metal Binary Archive containing shader machine code can be built offline with the metal command from the Metal toolchain. The machine code in a Metal Binary Archive may cover multiple GPU architectures. Because the archive is bundled into the application and submitted to the store, developers can weigh package size and drop support for architectures they do not need.

With an offline-built Metal Binary Archive, the cost of shader compilation is paid only at build time, and the cost of PSO creation during application startup drops sharply. The benefit is not limited to first-screen performance. In real business scenarios, some PSOs are not created until a specific page is opened, which triggers a new round of shader compilation; if that compilation takes too long, it delays the submission of Metal drawing commands in the current RunLoop. The Metal Binary Archive ensures that the shader cache for the core interaction paths is always available during the application life cycle, so the CPU time saved can be spent on logic closely tied to user interaction, which greatly improves responsiveness and user experience.

3. Impeller architecture and rendering process

3.1 Impeller Architecture

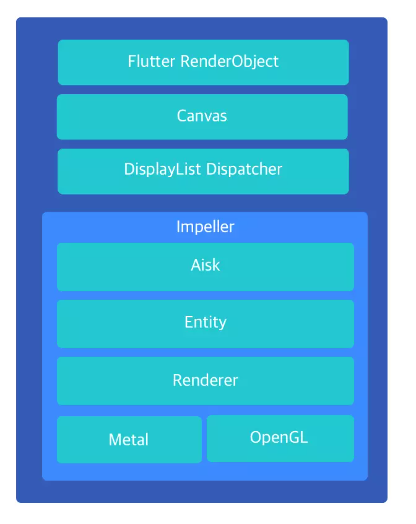

Impeller is a renderer tailored for Flutter. It is still in an early, experimental stage of development; so far it implements only the Metal backend and supports only iOS and macOS. On the engineering side, it depends on Flutter's fml and display list libraries and implements the display list dispatcher interface, which makes it straightforward to swap in for Skia.

Impeller core goals:

- Predictable performance: All shaders are compiled offline at compile time, and pipeline state objects are pre-built from shaders.

- Instrumentable: All graphics resources (textures, buffers, pipeline state objects, etc.) are tagged and labeled. Animations can be captured and persisted to disk without affecting rendering performance.

- Portable: Not tied to a specific rendering API, shaders are written once and converted when needed.

- Use modern graphics APIs: Make heavy use of (but not rely on) features of modern graphics APIs such as Metal and Vulkan.

- Efficient use of concurrency: Single-frame workloads can be distributed across multiple threads.

In general, Impeller's goal is to give Flutter rendering with predictable performance. Skia's rendering mechanism requires the application to generate SkSL dynamically during startup; those shaders must be converted to MSL at runtime before they can be compiled into executable machine code, and the whole compilation process blocks the Raster thread. Impeller abandons SkSL and uses GLSL 4.6 as its upper-level shader language. With Impeller's built-in ImpellerC compiler, all shaders are converted to Metal Shading Language at build time, packaged as AIR bytecode in MetalLib format, and built into the app. Another advantage of Impeller is its extensive use of modern graphics APIs. Metal's design can take full advantage of multi-core CPUs to submit rendering commands in parallel, and it greatly reduces the amount of PSO state validation done by the driver. Compared with the GL backend, merely switching the upper-level rendering calls to Metal can give an app roughly a 10% rendering performance boost.

Impeller can be roughly divided into the modules Compiler, Renderer, Entity, and Aiks, plus the base libraries Geometry and Base. The overall architecture is as follows.

- Compiler: Host-side tooling, including the shader Compiler and the Reflector. The Compiler compiles GLSL 4.60 shader source code offline into the shader of a specific backend (e.g. MSL). The Reflector generates C++ shader bindings offline from the shaders so that pipeline state objects (PSOs) can be built quickly at runtime.

- Renderer: Creates buffers, generates pipeline state objects from shader bindings, sets up RenderPasses, manages uniform buffers, subdivides surfaces, performs rendering tasks, and so on.

- Entity: Used to build 2D renderers; contains shader bindings and pipeline state objects.

- Aiks: Encapsulates Entity to provide Skia-like APIs for easy connection to Flutter.

3.2 Impeller drawing process

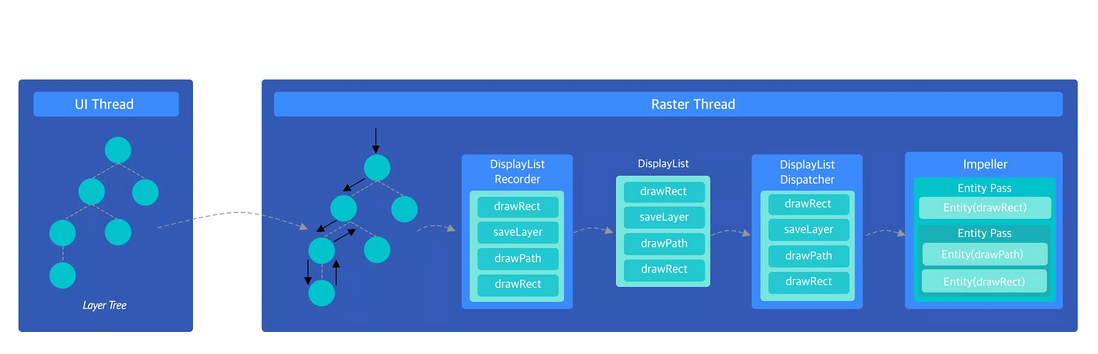

The overall drawing process of Impeller is shown in the figure below.

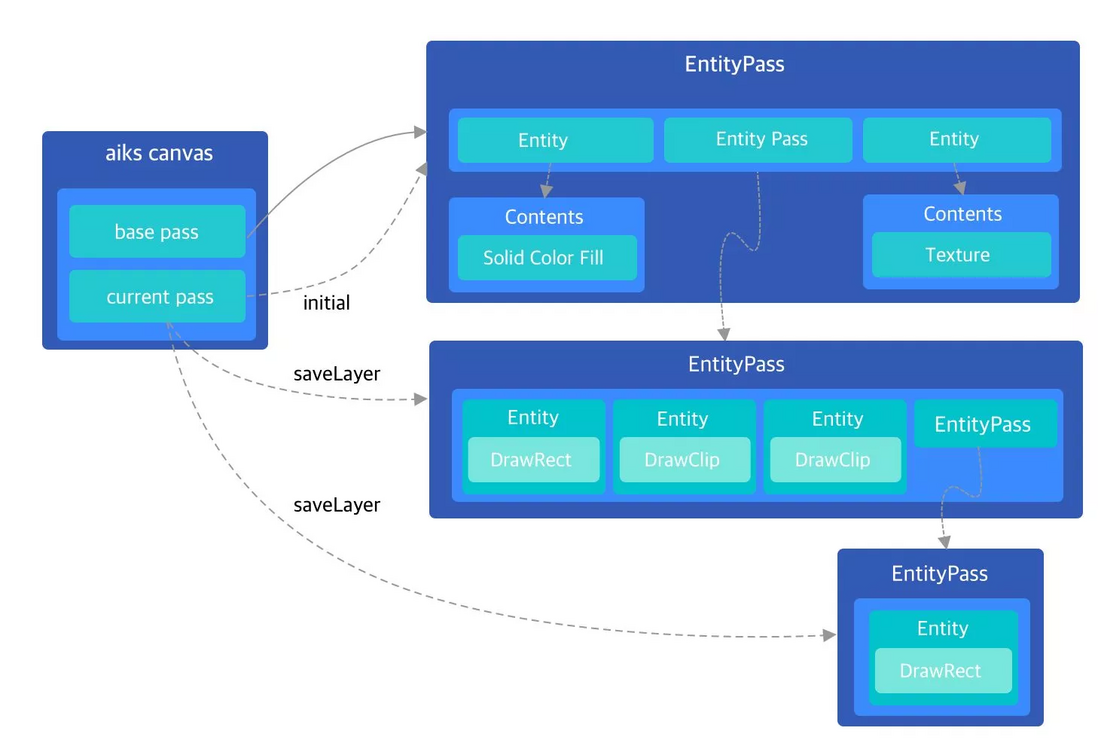

In general, the LayerTree from the Flutter Engine layer must be converted into an EntityPassTree before Impeller can draw it. After receiving the v-sync signal, the UI thread submits the LayerTree to the Raster thread. The Raster thread traverses every node of the LayerTree and records each node's drawing information and saveLayer operations through the DisplayListRecorder. Subtrees of the LayerTree that are eligible for the Raster Cache have their drawing results cached as bitmaps, and the DisplayListRecorder converts the drawing operations of such a subtree into a single drawImage operation to speed up subsequent rendering.

Once the DisplayListRecorder has finished recording commands, the current frame can be submitted. The commands buffered in the DisplayListRecorder are used to create a DisplayList object, and the DisplayList is consumed by an implementer of DisplayListDispatcher (Skia or Impeller). Playing back all the operations recorded in the DisplayList converts the drawing operations into an EntityPassTree.

After the EntityPassTree has been built, the commands in it need to be parsed and executed. Drawing in the EntityPassTree operates on Entity objects, and Impeller uses a vector to manage the Entity objects that belong to one drawing context. When performing complex drawing operations, Canvas usually calls SaveLayer to open a new drawing context, which is known as off-screen rendering on iOS. In Impeller, a SaveLayer operation is marked as creating a new EntityPass that records the Entities in that independent context, and the new EntityPass is recorded in the EntityPass list of its parent node. The creation process of EntityPass is shown in the following figure.

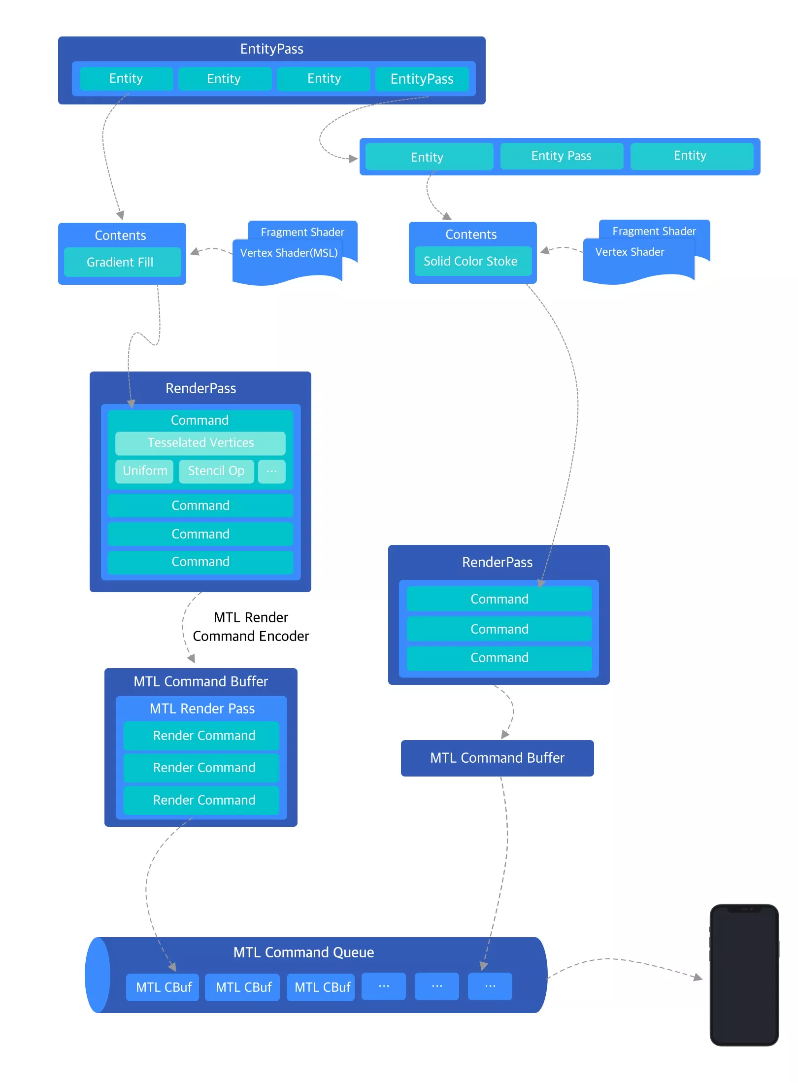
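The way SaveLayer grows the pass tree can be modeled with a small, self-contained sketch. The class names echo the ones used in the text, but the members and methods below (`AddSubpass`, `Draw`, `Restore`, and so on) are simplified assumptions for illustration and do not reproduce Impeller's actual interfaces.

```cpp
#include <cstddef>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical drawable: only a label is kept in this sketch.
struct Entity {
  std::string label;
};

// One drawing context: a vector of entities plus a list of child passes.
class EntityPass {
 public:
  void AddEntity(Entity entity) { entities_.push_back(std::move(entity)); }

  // Creates a child pass and records it in this pass's subpass list.
  EntityPass* AddSubpass() {
    subpasses_.push_back(std::make_unique<EntityPass>());
    return subpasses_.back().get();
  }

  const std::vector<Entity>& entities() const { return entities_; }
  const std::vector<std::unique_ptr<EntityPass>>& subpasses() const {
    return subpasses_;
  }

  // Counts the entities in this pass and in all passes below it.
  std::size_t TotalEntityCount() const {
    std::size_t total = entities_.size();
    for (const auto& subpass : subpasses_) {
      total += subpass->TotalEntityCount();
    }
    return total;
  }

 private:
  std::vector<Entity> entities_;
  std::vector<std::unique_ptr<EntityPass>> subpasses_;
};

// Minimal canvas: SaveLayer opens a child pass, Restore returns to the parent.
class Canvas {
 public:
  Canvas() : current_(&root_) {}
  Canvas(const Canvas&) = delete;
  Canvas& operator=(const Canvas&) = delete;

  void Draw(const std::string& label) { current_->AddEntity(Entity{label}); }

  void SaveLayer() {
    parents_.push_back(current_);
    current_ = current_->AddSubpass();
  }

  void Restore() {
    if (!parents_.empty()) {
      current_ = parents_.back();
      parents_.pop_back();
    }
  }

  const EntityPass& root() const { return root_; }

 private:
  EntityPass root_;
  EntityPass* current_;
  std::vector<EntityPass*> parents_;
};
```

Drawing a background, opening a layer for two entities, restoring, and drawing once more leaves two entities in the root pass and two in its single subpass.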

At the upper layer, Metal abstracts the device's GPU hardware as a CommandQueue. Each CommandQueue corresponds to one GPU and can contain one or more CommandBuffers. A CommandBuffer is the queue in which the actual drawing commands (RenderCommands) are stored. A simple application may use only one CommandBuffer, while different threads can speed up the submission of RenderCommands by each holding their own CommandBuffer. RenderCommands are produced by the encode operations of a RenderCommandEncoder. The RenderCommandEncoder defines how the drawing result is saved, the pixel format of the drawing result, and the operations (clear / store) to perform on the Framebuffer attachments when drawing starts or ends. A RenderCommand contains the actual draw call that is finally delivered to Metal.

When a Command in an Entity is converted into a real MTLRenderCommand, it also carries an important piece of information: the PSO. The drawing state that the Entity inherits from the DisplayList eventually becomes the PSO associated with the MTLRenderCommand. When the MTLRenderCommand is consumed, the Metal driver first reads the PSO to set up the rendering pipeline state and then executes the shaders to complete the current drawing operation. The process is as follows.

3.3 ImpellerC Compiler Design

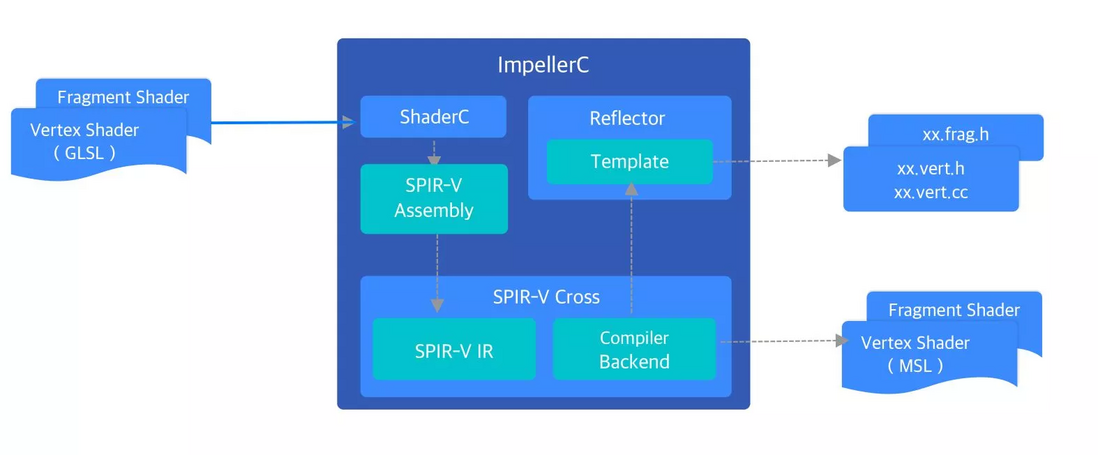

ImpellerC is Impeller's built-in shader compilation solution; its source code lives in Impeller's compiler directory. At build time it converts the GLSL source files written for Impeller's upper layer into two products: (1) a shader file for the target platform, and (2) a reflection file generated from the shader's uniform information, which contains details such as the struct layout of the shader uniforms.

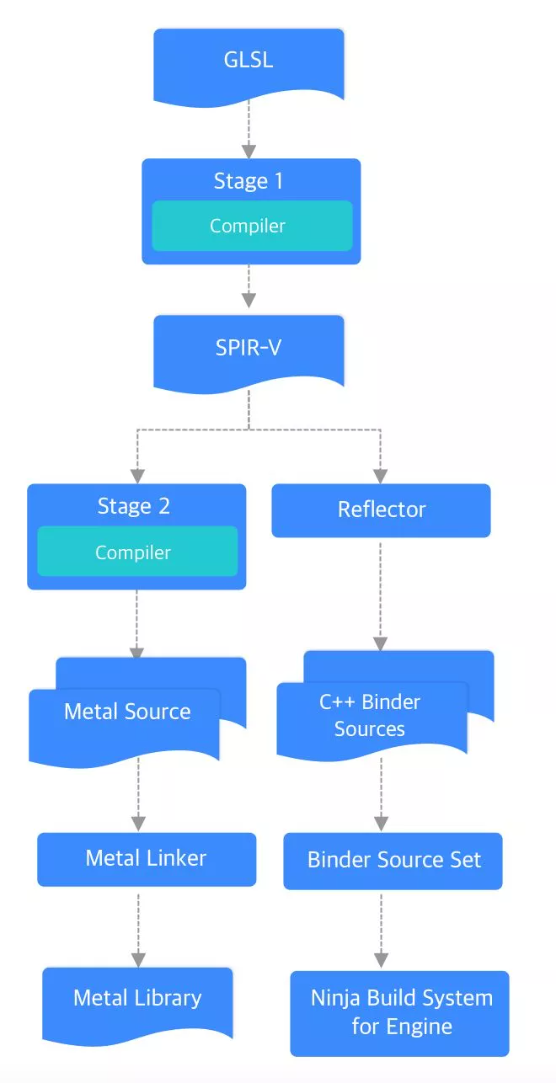

The struct types in the reflection file serve as a model layer, letting the upper layer assign uniforms without caring about the specific backend. This greatly strengthens Impeller's cross-platform properties and makes it easier to write shader code for different platforms. When the Impeller part of the Flutter Engine project is built, gn first compiles the files in the compiler directory into the ImpellerC executable and then uses ImpellerC to preprocess all shaders in the entity/content/shaders directory. For the GL backend, the shader source is converted to hex and embedded in a header file; for the Metal backend, the GLSL is first translated to MSL and then further processed into a MetalLib.

When ImpellerC processes a GLSL source file, it calls shaderc to compile it. shaderc is a shader compiler maintained by Google that compiles GLSL source code to SPIR-V. Its compilation process relies on two open-source tools, glslang and SPIRV-Tools. glslang is the GLSL front end: it parses GLSL into an AST and lowers it to SPIR-V, while SPIRV-Tools takes over the remaining work of validating and optimizing the SPIR-V. In this compilation step, ImpellerC restricts shaderc's optimization level so that correct reflection information can be obtained.

ImpellerC then calls SPIR-V Cross to parse the SPIR-V obtained in the previous step into SPIR-V IR, a data structure used internally by SPIR-V Cross, on which SPIR-V Cross performs further optimization. Next, ImpellerC calls SPIR-V Cross to create the compiler backend for the target platform (MSLCompiler / GLSLCompiler / SKSLCompiler); the compiler backend encapsulates the translation logic for the target platform's shader language. At the same time, SPIR-V Cross extracts reflection information such as the number of uniforms, variable types, and offsets from the SPIR-V IR, which is recorded in structures such as the following.

```cpp
struct ShaderStructMemberMetadata {
  ShaderType type;   // the data type (bool, int, float, etc.)
  std::string name;  // the uniform member name, e.g. "frame_info.mvp"
  size_t offset;     // offset of the member within the uniform struct
  size_t size;       // size of the member
};
```

After the Reflector obtains this information, it fills in built-in .h and .cc templates to produce .h and .cc files that Impeller can reference. Using the types in the reflected files, the upper layer can easily build the data, memcpy it into the corresponding buffer, and communicate with the shaders. Metal and GLES3 support UBOs natively, so values are ultimately passed through the UBO interface of the corresponding backend. GLES2 does not support UBOs, so an assignment to a UBO has to be converted into individual assignments of each uniform field through the glUniform* family of APIs. After the shader program is linked, Impeller obtains the location of every field at runtime through glGetUniformLocation and, combined with the offsets extracted from the reflection file, knows where each uniform field's data lives in the buffer. This information is generated once when the Impeller Context is created, and Impeller maintains it afterwards. For the upper layer, whether the backend is GLES2 or something else, the process of communicating with shaders through the Reflector is the same.
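How offset and size metadata supports both upload paths can be sketched without any graphics API. In the sketch below, `FrameInfo`, `MemberMetadata`, `PackForUniformBuffer`, and `SplitIntoFieldUploads` are hypothetical names chosen for illustration; the per-field path only slices bytes and does not call glUniform* itself.

```cpp
#include <cstddef>
#include <cstring>
#include <string>
#include <vector>

// Hypothetical uniform struct, standing in for a reflected shader struct.
struct FrameInfo {
  float mvp[16];
  float alpha;
};

// Reduced reflection record: member name, byte offset, and byte size.
struct MemberMetadata {
  std::string name;
  std::size_t offset;
  std::size_t size;
};

// Metadata a reflector could emit for FrameInfo (assumed layout).
inline std::vector<MemberMetadata> FrameInfoMembers() {
  return {
      {"frame_info.mvp", offsetof(FrameInfo, mvp), sizeof(FrameInfo::mvp)},
      {"frame_info.alpha", offsetof(FrameInfo, alpha), sizeof(FrameInfo::alpha)},
  };
}

// UBO-style path: the whole struct is copied into one buffer.
inline std::vector<unsigned char> PackForUniformBuffer(const FrameInfo& info) {
  std::vector<unsigned char> bytes(sizeof(FrameInfo));
  std::memcpy(bytes.data(), &info, sizeof(FrameInfo));
  return bytes;
}

// One pending per-field upload for a backend without uniform buffers.
struct FieldUpload {
  std::string name;
  std::vector<unsigned char> bytes;
};

// Per-field path: slice the packed buffer using each member's offset and size.
inline std::vector<FieldUpload> SplitIntoFieldUploads(
    const std::vector<unsigned char>& buffer,
    const std::vector<MemberMetadata>& members) {
  std::vector<FieldUpload> uploads;
  for (const auto& member : members) {
    if (member.offset + member.size > buffer.size()) {
      continue;  // Skip metadata that does not fit the buffer.
    }
    const auto first = buffer.begin() + static_cast<std::ptrdiff_t>(member.offset);
    const auto last = first + static_cast<std::ptrdiff_t>(member.size);
    uploads.push_back({member.name, std::vector<unsigned char>(first, last)});
  }
  return uploads;
}
```

The caller fills in one struct either way; only the last step differs, which is the property the paragraph above attributes to the Reflector.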

Once shader translation and reflection file extraction are complete, the uniform data can actually be bound. When an Entity triggers a drawing operation, the Render function of its Content is called first. It creates a Command object for Metal to consume, and the Command is submitted to the RenderPass to await scheduling; the binding of uniform data happens during Command creation. In the code below, VS::FrameInfo and FS::GradientInfo are two struct types generated by reflection. After instances of VS::FrameInfo and FS::GradientInfo are initialized and assigned, the VS::BindFrameInfo and FS::BindGradientInfo functions bind the data to the uniforms.

```cpp
// Fill in the uniform structs generated by reflection.
VS::FrameInfo frame_info;
frame_info.mvp = Matrix::MakeOrthographic(pass.GetRenderTargetSize()) * entity.GetTransformation();

FS::GradientInfo gradient_info;
gradient_info.start_point = start_point_;
gradient_info.end_point = end_point_;
gradient_info.start_color = colors_[0].Premultiply();
gradient_info.end_color = colors_[1].Premultiply();

// Build the Command that the render pass will consume.
Command cmd;
cmd.label = "LinearGradientFill";
cmd.pipeline = renderer.GetGradientFillPipeline(OptionsFromPassAndEntity(pass, entity));
cmd.stencil_reference = entity.GetStencilDepth();
cmd.BindVertices(vertices_builder.CreateVertexBuffer(pass.GetTransientsBuffer()));
cmd.primitive_type = PrimitiveType::kTriangle;

// Place the uniform data in the transient buffer and bind it to the shaders.
FS::BindGradientInfo(cmd, pass.GetTransientsBuffer().EmplaceUniform(gradient_info));
VS::BindFrameInfo(cmd, pass.GetTransientsBuffer().EmplaceUniform(frame_info));

return pass.AddCommand(std::move(cmd));
```

Impeller's complete shader processing pipeline is shown below.

4. The relationship between CommandQueue, CommandList, and CommandAllocator

Articles about rendering often use the terms CommandQueue, CommandList, and CommandAllocator.

CommandQueue (command queue): Lives on the GPU. It is a ring buffer that serves as the GPU's command execution queue, and each GPU maintains at least one CommandQueue. If the queue is empty, the GPU idles until a command arrives; if the queue is full, the CPU is blocked.

CommandList (command list): Lives on the CPU. It records the commands the GPU is to execute; the tasks we want the GPU to perform are recorded through it.

CommandAllocator (command allocator): Lives on the CPU. It allocates the space for the commands recorded in a CommandList. This space stores commands on the CPU side; it does not store resources.

DX12 submits the commands recorded in a CommandList to the GPU's CommandQueue through the ExecuteCommandLists function. The CPU and the GPU are two processors that run in parallel on two independent tracks, and the CommandQueue is the GPU's track. When functions such as SetViewPort, ClearRenderTarget, and DrawIndex are called on a CommandList, the operations are not actually performed; they are only recorded. The commands are sent from the CPU to the GPU's CommandQueue only when ExecuteCommandLists is executed, and even then the transfer from CPU to GPU does not necessarily happen immediately. Likewise, the GPU does not execute them immediately; it executes commands in the order in which they sit in the CommandQueue.
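Deferred recording and in-order consumption can be modeled with a toy ring buffer. This is a simplified model rather than the Direct3D 12 API: the `CommandList` and `CommandQueue` classes below, their method names, and the fixed capacity are all assumptions made for illustration, and a failed `Push` stands in for the CPU being blocked.

```cpp
#include <array>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// CPU-side recorder: each call only appends a command; nothing is executed.
class CommandList {
 public:
  void SetViewport() { commands_.push_back("SetViewport"); }
  void ClearRenderTarget() { commands_.push_back("ClearRenderTarget"); }
  void Draw() { commands_.push_back("Draw"); }
  const std::vector<std::string>& commands() const { return commands_; }

 private:
  std::vector<std::string> commands_;
};

// Fixed-capacity ring buffer standing in for the GPU's command queue.
template <std::size_t Capacity>
class CommandQueue {
 public:
  // Returns false when the queue is full (a real CPU would block here).
  bool Push(std::string command) {
    if (count_ == Capacity) {
      return false;
    }
    slots_[(head_ + count_) % Capacity] = std::move(command);
    ++count_;
    return true;
  }

  // GPU side: takes the oldest command; returns false when the queue is empty.
  bool Pop(std::string& out) {
    if (count_ == 0) {
      return false;
    }
    out = std::move(slots_[head_]);
    head_ = (head_ + 1) % Capacity;
    --count_;
    return true;
  }

  // Submits recorded commands in order; returns how many fit in the queue.
  std::size_t ExecuteCommandList(const CommandList& list) {
    std::size_t submitted = 0;
    for (const auto& command : list.commands()) {
      if (!Push(command)) {
        break;
      }
      ++submitted;
    }
    return submitted;
  }

  std::size_t size() const { return count_; }

 private:
  std::array<std::string, Capacity> slots_;
  std::size_t head_ = 0;
  std::size_t count_ = 0;
};
```

Recording three commands leaves the queue empty until `ExecuteCommandList` is called, after which `Pop` returns them in the order they were recorded.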

How are the three related? A GPU maintains at least one CommandQueue. CommandLists record commands on the CPU side, and there can be many of them. Creating a CommandList requires specifying a CommandAllocator, and there can also be many CommandAllocators. One CommandAllocator can be associated with multiple CommandLists, but CommandLists associated with the same CommandAllocator cannot record commands at the same time. The memory for the commands a CommandList records is allocated by the CommandAllocator, and the memory of the recorded commands must be contiguous so that it can be sent to the CommandQueue; concurrent recording would break that contiguity.

The CommandQueue lives on the GPU as the GPU's execution track and cannot be reset. A CommandList can be reset once its recorded commands have been submitted, and then reused to record new commands, because the CommandAllocator still holds the memory of the submitted commands. A CommandAllocator, however, cannot be reset until the GPU has finished executing the commands stored in it: the underlying implementation may start a job that feeds commands to the GPU a little at a time, and the function that submits the commands returns immediately.
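These reset rules can be captured in a small state model. The class below is a hypothetical sketch, not the Direct3D 12 interface: the fence value is an assumed monotonically increasing counter that the GPU advances as it finishes work, and `MarkSubmitted` and `TryReset` are invented names.

```cpp
#include <cstdint>

// Toy model of an allocator that tracks who is recording and what the GPU
// still has to finish before the memory may be recycled.
class CommandAllocator {
 public:
  // Only one command list may record into this allocator at a time.
  bool BeginRecording() {
    if (recording_) {
      return false;
    }
    recording_ = true;
    return true;
  }

  void EndRecording() { recording_ = false; }

  // Called on submission: remember the fence value the GPU must reach
  // before the commands stored in this allocator are no longer in use.
  void MarkSubmitted(std::uint64_t fence_value) {
    if (fence_value > pending_fence_) {
      pending_fence_ = fence_value;
    }
  }

  // Resetting is allowed only when nothing is recording and the GPU has
  // completed everything that was submitted from this allocator.
  bool TryReset(std::uint64_t completed_fence) {
    if (recording_ || completed_fence < pending_fence_) {
      return false;
    }
    pending_fence_ = 0;
    return true;
  }

 private:
  bool recording_ = false;
  std::uint64_t pending_fence_ = 0;
};
```

In this model a second list cannot start recording while the first is open, a list can record again right after submission, and the allocator reset succeeds only once the completed fence value catches up with the submitted one.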
