Guest speaker: Sun Fangbin, Software Development Engineer, China Mobile Cloud Competence Center

Edited by: Hoh Xil

Production platform: DataFunTalk

Introduction: In the era of cloud native and big data, with the explosive growth of business data volume and increasingly strict timeliness requirements, cloud-native big data analysis technology has evolved from the traditional data warehouse to the data lake, and then to the integration of lake and warehouse. This article introduces the overall architecture, core functions, and key technical points of the Mobile Cloud cloud-native big data analysis LakeHouse, along with its application scenarios in public and private clouds.

The main contents include:

- Overview of Lake and Warehouse Integration

- Mobile Cloud LakeHouse

- Practical application scenarios

01 Overview of Lake and Warehouse Integration

- About Lake and Warehouse Integration

"Integration of lake and warehouse" is a relatively popular concept recently. The concept of "integration of lake and warehouse" originated from the Lakehouse architecture proposed by Databricks. It is not a product, but an open technical architecture paradigm in the field of data management. . With the development and integration of big data and cloud-native technologies, the integration of lakes and warehouses can bring out the flexibility and ecological richness of data lakes, as well as the growth of data warehouses. The growth here includes: server cost, business performance, enterprise-level security, governance and other features.

As you can see (picture on the left), before a specific business scale, the flexibility of the data lake has certain advantages. With the growth of the business scale, the growth of the data warehouse is more advantageous.

Two key points of the integration of lake and warehouse:

- The data/metadata of lakes and warehouses can be seamlessly opened up and flow freely and smoothly without the need for manual intervention by users (including: entering the lake from the outside to the inside, leaving the lake from the inside out, and surrounding the lake around the periphery);

- The system automatically caches and moves data between lakes and warehouses according to specific rules, and can connect with advanced functions related to data science to further realize agile analysis and deep intelligence.

- Main idea

With the explosive growth of business data volume and the high timeliness requirements of business, big data analysis technology has undergone an evolution from traditional data warehouses to data lakes, and then to the integration of lakes and warehouses. The traditional Hadoop-based big data platform architecture is also a data lake architecture. The core concept of the integration of lakes and warehouses and the differences from the current Hadoop cluster architecture are roughly as follows:

- Store raw data in multiple formats: The current Hadoop cluster has a single underlying storage, mainly HDFS. For the integration of lakes and warehouses, it will gradually evolve into a unified storage system that supports multiple media and multiple types of data

- Unified storage system: Currently, it is divided into multiple clusters according to the business, and a large amount of data is transmitted between them. It gradually evolves into a unified storage system to reduce the transmission consumption between clusters.

- Support multiple upper-layer computing frameworks: The current computing framework of Hadoop architecture is dominated by MR/Spark, and the future evolution will be to build more computing frameworks and application scenarios directly on the data lake

There are roughly two types of product forms of lake and warehouse integration:

- Products and solutions based on the data lake architecture on the public cloud (for example: Alibaba Cloud MaxCompute Lake and Warehouse Integration, Huawei Cloud FusionInsight Intelligent Data Lake)

- Components based on the open source Hadoop ecosystem (DeltaLake, Hudi, Iceberg) as the data storage middle layer (for example: Amazon Smart Lake Warehouse Architecture, Azure Synapse Analytics)

02 Practice of Mobile Cloud LakeHouse

The following introduces the overall architecture of Mobile Cloud LakeHouse and our exploration and practice of lake and warehouse integration:

- Overall structure

The above picture is our overall architecture diagram; the product is called LakeHouse for cloud-native big data analysis. It adopts an architecture that separates computing from storage. Based on mobile cloud object storage EOS and built-in HDFS, it provides an integrated lake and warehouse solution that supports the Hudi storage mechanism. Interactive queries through the built-in Spark engine let users quickly gain insight into business data changes.

Our architecture specifically includes:

- Data sources: including RDB, Kafka, HDFS, EOS, and FTP, with one-click lake ingestion through FlinkX

- Data storage (data lake): We have built-in HDFS and mobile cloud EOS, and use Hudi to provide Upsert capability for near-real-time incremental updates. Where appropriate, we also introduce Alluxio to cache data and accelerate SQL queries for data analysis.

- Computing engine: Our computing engines are serverless and run in Kubernetes. We introduced YuniKorn, a unified resource access/scheduling component that plays a role similar to YARN resource scheduling in the traditional Hadoop ecosystem. It provides common scheduling algorithms, such as first-in-first-out.

- Smart metadata: Smart metadata discovery is to convert the data directory of our data source into a Hive table in the built-in storage, and manage metadata in a unified manner

- Data development: SQLConsole, users can directly write SQL on the page for interactive query; there are also SDK methods and JDBC/ODBC interfaces; in the future, we will support DevIDE and support SQL development on the page

- Core functions

The core functions mainly include the following four aspects:

① Separation of storage and computing:

- The storage layer and the computing layer are deployed separately, and the storage and computing support independent elastic expansion and contraction without affecting each other.

- Storage supports object storage and HDFS: HDFS stores structured data and provides high-performance storage, while object storage holds unstructured, raw, and cold data and provides cost-effective storage

- Computing supports multiple engines: Spark, Presto, and Flink are all serverless and ready to use, to meet different query scenarios

② One-click lake ingestion:

- Supports connection to various databases, storage systems, and message queues both on and off the mobile cloud

- The process of entering the lake is automated, reducing the configuration cost of users

- Reduce the additional load on the data source to within 10%, and support automatic adjustment of the number of connections according to the instance specifications of the data source (for example, when MySQL synchronizes data, the number of connections will be automatically adjusted if the MySQL load allows)

- Incremental update support (incremental update via Hudi)
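The automatic adjustment of the connection count can be sketched as a small feedback rule. This is only an illustration: the talk does not describe the actual algorithm, and the function name `next_connection_count`, the load metric, and the half-budget headroom threshold are all assumptions made here.

```python
def next_connection_count(current, baseline_load, current_load,
                          max_connections, budget=0.10):
    """Pick the connection count for the next sync interval.

    baseline_load: source load measured before synchronization started.
    current_load:  source load measured while synchronizing.
    budget:        allowed extra load on the source (10% in the talk).
    """
    if baseline_load <= 0:
        return current  # no usable baseline, leave the setting alone
    extra = (current_load - baseline_load) / baseline_load
    if extra > budget:
        # Over budget: back off, but keep at least one connection.
        return max(1, current - 1)
    if extra < budget / 2:
        # Hypothetical headroom rule: well under budget, so add a connection.
        return min(max_connections, current + 1)
    return current


print(next_connection_count(4, baseline_load=100, current_load=115,
                            max_connections=8))
```

Here `max_connections` stands in for the limit derived from the instance specification of the data source.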

③ Intelligent metadata discovery:

- Based on specific rules, intelligently identifies the metadata of structured and semi-structured files and builds a data catalog

- Automatically sense metadata changes

- Unified metadata, providing HiveMeta-like API to access underlying data for different computing engines

- Intelligent data routing and unified control of permissions (achieved by mobile cloud account system and Ranger)

④ Billing by usage:

- Storage resources are billed according to usage

- Computing resources support multiple billing models

- Supports flexible adjustment of tenant cluster resource specifications and rapid expansion and contraction

- RBF-based logical view

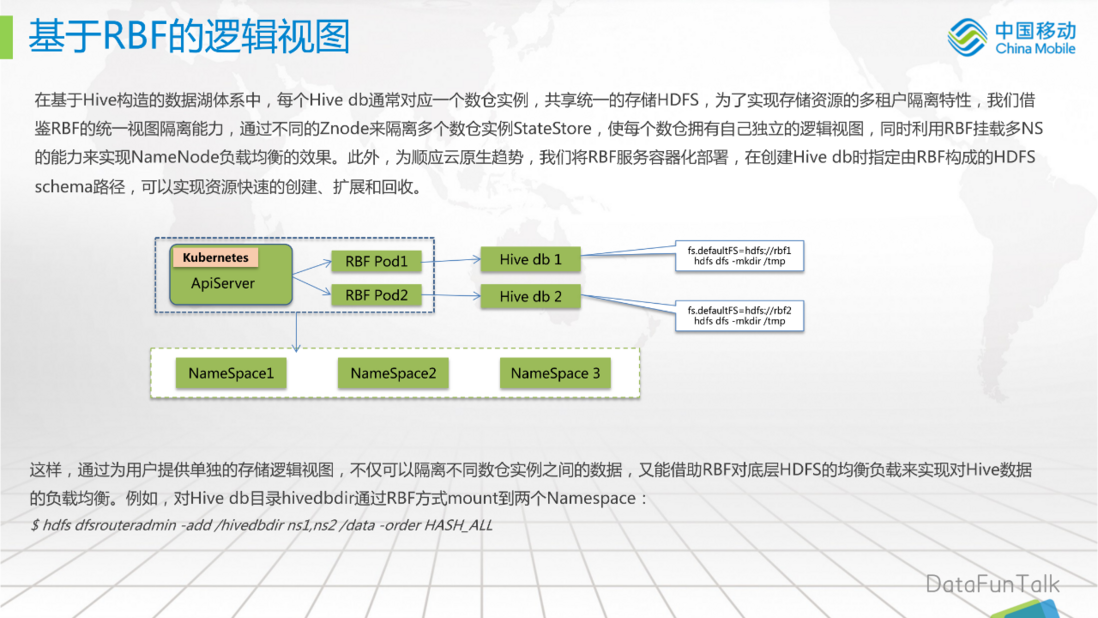

In a data lake system built on Hive, each Hive db usually corresponds to one data warehouse instance, and all instances share a unified HDFS storage. To achieve multi-tenant isolation of storage resources, we draw on the unified view isolation capability of RBF (Router-Based Federation) and use different Znodes on ZooKeeper to isolate the StateStore of each data warehouse instance, so that each data warehouse has its own independent logical view. At the same time, RBF's ability to mount multiple NameSpaces gives us NameNode load balancing. In addition, to follow the cloud-native trend, we deploy the RBF service in containers and specify the HDFS schema path provided by RBF when creating the Hive db, which allows resources to be created, expanded, and reclaimed quickly.

The above picture is a simplified architecture diagram. RBF is deployed in Kubernetes as Pods, and each Hive db is mapped to an RBF schema path. Below that, load balancing is achieved with the help of multiple NameSpaces.

In this way, by providing users with a separate logical view of storage, not only can data between different data warehouse instances be isolated, but also the load balancing capability of Hive data can be achieved with the help of RBF's load balancing of the underlying HDFS.
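The isolation and load-balancing idea can be illustrated with a toy model. This is not RBF's implementation: the class `LogicalView`, its methods, and the hash rule are hypothetical and only show how a per-instance mount table keeps tenants apart while spreading paths over several namespaces.

```python
import hashlib


class LogicalView:
    """Toy per-warehouse mount table (hypothetical, not the RBF code)."""

    def __init__(self, znode):
        self.znode = znode   # assumed ZooKeeper path holding this view's state
        self.mounts = {}     # mount point -> (namespaces, target path)

    def add_mount(self, mount_point, namespaces, target):
        self.mounts[mount_point.rstrip("/")] = (list(namespaces),
                                                target.rstrip("/"))

    def resolve(self, path):
        # Longest-prefix match against this view's own mount table only.
        matches = [m for m in self.mounts
                   if path == m or path.startswith(m + "/")]
        if not matches:
            raise KeyError(f"{path} is not visible in view {self.znode}")
        mount = max(matches, key=len)
        namespaces, target = self.mounts[mount]
        # Spread paths across namespaces with a stable hash of the path.
        digest = hashlib.sha256(path.encode("utf-8")).digest()
        index = int.from_bytes(digest[:4], "big") % len(namespaces)
        return namespaces[index], target + path[len(mount):]


view_a = LogicalView("/rbf/warehouse-a")
view_a.add_mount("/hivedbdir", ["ns1", "ns2"], "/data")
print(view_a.resolve("/hivedbdir/t1/part-0"))
```

A second `LogicalView` with an empty mount table cannot resolve `/hivedbdir` at all, which is the isolation effect described above.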

For example, to mount the Hive db directory hivedbdir to two Namespaces through RBF, the mount command is as follows:

$ hdfs dfsrouteradmin -add /hivedbdir ns1,ns2 /data -order HASH_ALL

- Multi-tenancy implementation of Hive in object storage

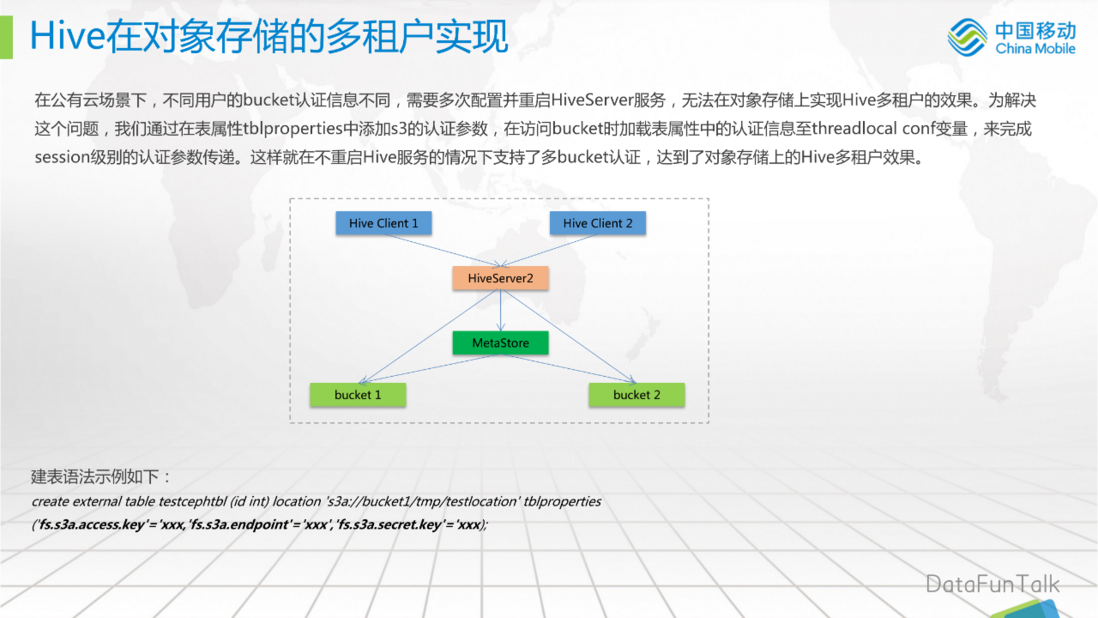

In the public cloud scenario, the bucket authentication information of different users is different, and the HiveServer service needs to be configured and restarted multiple times, and the Hive multi-tenancy effect cannot be achieved on the object storage. To solve this problem, we modify the Hive source code to add the s3 authentication parameter in the table attribute tblproperties, and load the authentication information in the table attribute to the threadlocal conf variable when accessing the bucket to complete the session-level authentication parameter transfer. In this way, multi-bucket authentication is supported without restarting the Hive service, and the Hive multi-tenancy effect on object storage is achieved.
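The session-level parameter transfer can be sketched in Python. The real change lives in Hive's Java source, which the talk does not show, so the helper names `load_table_credentials` and `current_conf` are hypothetical; only the `fs.s3a.*` keys come from the text.

```python
import threading

# One configuration object per handler thread, so credentials loaded for one
# session never leak into another.
_session_conf = threading.local()

S3_KEYS = ("fs.s3a.access.key", "fs.s3a.secret.key", "fs.s3a.endpoint")


def load_table_credentials(tblproperties):
    """Copy the S3 authentication parameters of a table into thread-local conf."""
    conf = {key: tblproperties[key] for key in S3_KEYS if key in tblproperties}
    _session_conf.values = conf
    return conf


def current_conf():
    """Return the credentials visible to the calling thread (empty if none)."""
    return getattr(_session_conf, "values", {})


def handle_query(name, tblproperties, seen):
    load_table_credentials(tblproperties)
    seen[name] = current_conf()["fs.s3a.access.key"]


seen = {}
threads = [
    threading.Thread(target=handle_query,
                     args=("tenant-a", {"fs.s3a.access.key": "ak-a"}, seen)),
    threading.Thread(target=handle_query,
                     args=("tenant-b", {"fs.s3a.access.key": "ak-b"}, seen)),
]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(seen)
```

Because each thread reads only its own copy, two tenants with different buckets can be served by the same process without a restart.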

As shown in the figure, if you configure different parameters for the user on the server side, you need to restart the service, which is not acceptable at this time. After our transformation, the table creation syntax has become the following format:

create external table testcephtbl(id int) location 's3a://bucket1/tmp/testlocation' tblproperties('fs.s3a.access.key'='xxx','fs.s3a.endpoint'='xxx','fs.s3a.secret.key'='xxx');

- Optimizing engine access to object storage

In the big data ecosystem, various computing engines can access data in Hive through the Metastore service. For example, to access Hive data stored in object storage from SparkSQL, the Driver module that submits the job needs to load the corresponding bucket authentication information according to the location of the table. The SQL submit command is as follows:

$SPARK_HOME/bin/beeline -u "jdbc:hive2://host:port/default?fs.s3a.access.key=xxx;fs.s3a.endpoint=xxx;fs.s3a.secret.key=xxx" -e "select a.id from test1 a join test2 b on a.id=b.id"

In other words, users need to be aware that the data is stored in object storage, and it is difficult to determine which buckets the tables in a SQL statement belong to, which seriously slows business development. To address this, building on the Hive table attributes described earlier, we implemented a plugin that fetches the object storage authentication parameters. Users do not need to know which bucket a table in the SQL comes from, nor do they need to specify authentication parameters when submitting SQL. As shown in the orange box in the above figure, Spark SQL matches the authentication parameters for each table in the Driver, which gives a unified access view through the MetaStore.
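One way such a plugin could map table attributes to per-bucket settings is sketched below. The talk does not show the plugin's code, so the function `bucket_conf_for_tables` and the shape of the `tables` input are assumptions. The `fs.s3a.bucket.<bucket>.<option>` key pattern is Hadoop S3A's per-bucket configuration.

```python
from urllib.parse import urlparse

S3A_OPTIONS = ("access.key", "secret.key", "endpoint")


def bucket_conf_for_tables(tables):
    """Build per-bucket S3A settings from table metadata.

    tables: mapping of table name -> {"location": str, "tblproperties": dict},
            a hypothetical stand-in for what the Metastore returns.
    """
    conf = {}
    for meta in tables.values():
        parsed = urlparse(meta["location"])
        if parsed.scheme != "s3a":
            continue  # tables on HDFS need no bucket credentials
        bucket = parsed.netloc
        for option in S3A_OPTIONS:
            value = meta["tblproperties"].get("fs.s3a." + option)
            if value is not None:
                conf[f"fs.s3a.bucket.{bucket}.{option}"] = value
    return conf


tables = {
    "test1": {"location": "s3a://bucket1/tmp/test1",
              "tblproperties": {"fs.s3a.access.key": "ak1",
                                "fs.s3a.secret.key": "sk1"}},
    "test2": {"location": "s3a://bucket2/tmp/test2",
              "tblproperties": {"fs.s3a.access.key": "ak2",
                                "fs.s3a.secret.key": "sk2"}},
}
for key, value in sorted(bucket_conf_for_tables(tables).items()):
    print(key, "=", value)
```

With settings keyed by bucket, a join across tables in different buckets can run in one job without the user passing any credentials.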

The final command to submit the SQL job is as follows:

$SPARK_HOME/bin/beeline -u "jdbc:hive2://host:port/default" -e "select a.id from test1 a join test2 b on a.id=b.id"

- Serverless implementation

Taking Spark as an example, through the multi-tenancy implementation of RBF, the Spark process runs in a securely isolated K8S Namespace, and each Namespace corresponds to a number of computing units according to its resource specification (for example: 1 CU = 1 core * 4 GB). For the micro-batch scenario, every time a task is submitted through the SQL Console, the engine module starts a Spark cluster, requests computing resources for the Driver and Executors according to a specific algorithm, and reclaims the resources immediately after the task ends. For the ad-hoc scenario, tasks can be submitted over JDBC: the engine module starts a session-configurable Spark cluster through the Kyuubi service and reclaims the resources after a longer period of time. All SQL tasks are billed according to the actual resource specifications only after they run successfully, and there is no charge when the service is not used.
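The computing-unit arithmetic can be made concrete with a short sketch. Only the definition 1 CU = 1 core * 4 GB and the "charge only on success" rule come from the text. The rounding rule, the per-hour price, and the function names are assumptions for illustration.

```python
import math

CU_CORES = 1       # from the talk: 1 CU = 1 core * 4 GB
CU_MEMORY_GB = 4


def compute_units(cores, memory_gb):
    """CUs needed to cover both the CPU and the memory request (assumed rule)."""
    return max(math.ceil(cores / CU_CORES), math.ceil(memory_gb / CU_MEMORY_GB))


def task_charge(cores, memory_gb, runtime_seconds, succeeded, price_per_cu_hour):
    """Charge for one SQL task; failed tasks are free."""
    if not succeeded:
        return 0.0
    hours = runtime_seconds / 3600
    return compute_units(cores, memory_gb) * hours * price_per_cu_hour


# A 2-core, 8 GB task that ran for half an hour at a hypothetical price of 1.0.
print(compute_units(2, 8))
print(task_charge(2, 8, 1800, succeeded=True, price_per_cu_hour=1.0))
```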

The logical view is shown above. Kubernetes isolates resources per Namespace. Above it, YuniKorn provides unified scheduling for capacity management and job scheduling. Further up is the SQL Parser component, which makes SparkSQL and HiveSQL syntax compatible. At the top, we also provide the Spark JAR method, which supports the analysis of structured and semi-structured data in HBase or other media.

Through the serverless implementation, we have greatly simplified the user's workflow.

Process without Serverless:

① Purchase servers and build a cluster

② Deploy a set of open source big data basic components: HDFS, Zookeeper, Yarn, Ranger, Hive, etc.

③ Use different tools to import data

④ Write query SQL, run the calculation, and output the results

⑤ Carry out various tedious operation and maintenance tasks

Process after using Serverless:

① Register a mobile cloud account and order a LakeHouse instance

② Create a data synchronization task

③ Write query SQL, run the calculation, and output the results

④ The service is fully managed, with no operation and maintenance required

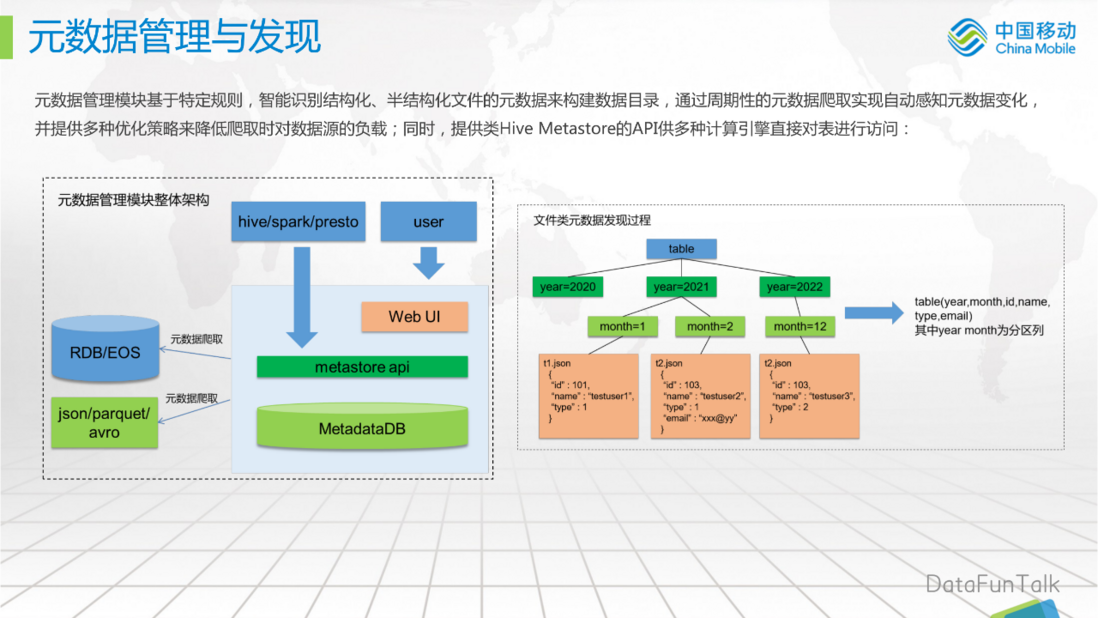

- Metadata management and discovery

The metadata management module intelligently recognizes the metadata of structured and semi-structured files based on specific rules to build a data catalog, detects metadata changes automatically through periodic metadata crawling, and provides various optimization strategies to reduce the load on data sources during crawling. It also provides a Hive Metastore-like API so that multiple computing engines can access tables directly.

The overall architecture of the metadata management module is shown in the figure on the left: metadata is crawled from RDB/EOS data, covering common semi-structured formats such as json/parquet/avro. Above that is the unified access layer of Hive MetaStore, through which computing engines such as hive/spark/presto access the data stored in the lake via the Metastore-like API. Users can set up directory mappings through the Web UI.

The file metadata discovery process is shown in the figure on the right: a table has several directories under it, for example one per year, and a specific directory in turn contains two subdirectories. During metadata discovery, the data with the three fields id, name and type is mapped to the same table, and the different directory levels become partitions on different fields.
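The directory-to-partition mapping can be sketched as follows. The actual discovery rules are not given in the talk, so the function `infer_partitions`, the example paths, and the partition names `year` and `type` are hypothetical.

```python
def infer_partitions(table_root, file_paths, partition_names):
    """Map each directory level under the table root to a partition field.

    Files whose directory depth does not match partition_names are skipped
    (an assumed rule; a real crawler may handle them differently).
    """
    root = table_root.rstrip("/") + "/"
    partitions = set()
    for path in file_paths:
        if not path.startswith(root):
            continue
        directories = path[len(root):].split("/")[:-1]  # drop the file name
        if len(directories) != len(partition_names):
            continue
        partitions.add(tuple(zip(partition_names, directories)))
    return sorted(partitions)


files = [
    "/lake/orders/2021/online/part-0.json",
    "/lake/orders/2021/offline/part-0.json",
    "/lake/orders/2022/online/part-0.json",
]
for partition in infer_partitions("/lake/orders", files, ["year", "type"]):
    print(dict(partition))
```

All three files land in the same table, and each distinct directory combination becomes one partition.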

- Serverless one-click lake ingestion

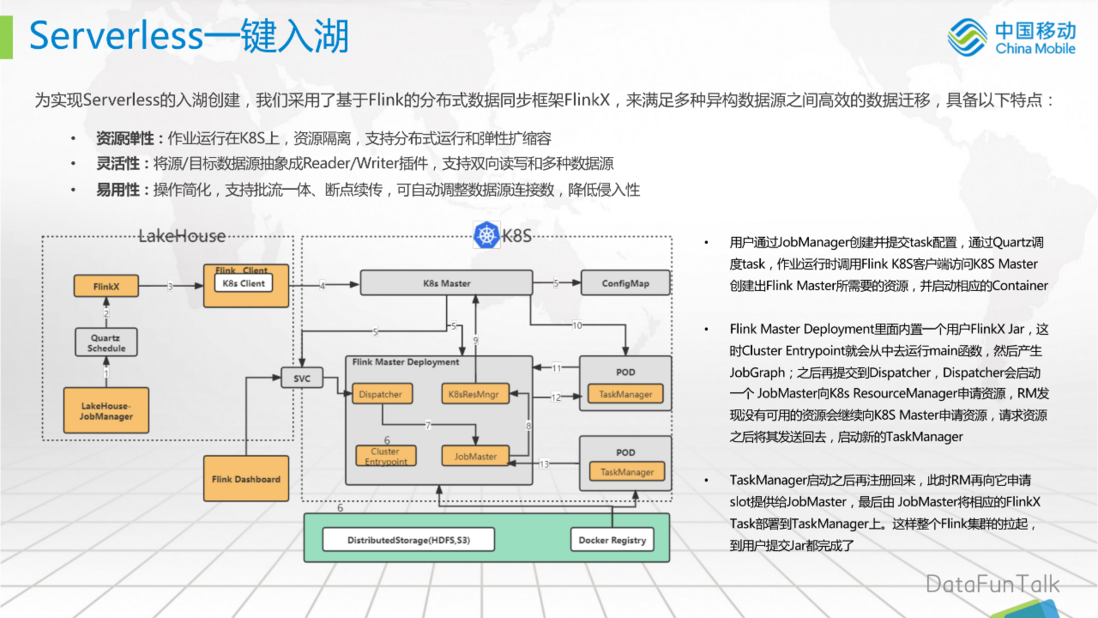

To make lake ingestion serverless, we adopted FlinkX, a distributed data synchronization framework based on Flink, to migrate data efficiently between heterogeneous data sources. It has the following characteristics:

- Resource elasticity: jobs run on Kubernetes, resource isolation, support for distributed operation and elastic scaling

- Flexibility: abstract source/target data sources into Reader/Writer plug-ins, support bidirectional reading and writing and multiple data sources

- Ease of use: simplified operation, unified batch and stream processing, resuming from breakpoints, and automatic adjustment of the number of data source connections to reduce intrusiveness
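The Reader/Writer abstraction and breakpoint resuming listed above can be illustrated with a minimal sketch. It is not FlinkX's plug-in API (FlinkX is written in Java): the classes `Reader`, `Writer`, `ListReader`, `ListWriter` and the function `sync` are hypothetical names used only to show the pattern.

```python
from abc import ABC, abstractmethod


class Reader(ABC):
    @abstractmethod
    def read(self, start_offset):
        """Yield (offset, record) pairs starting at start_offset."""


class Writer(ABC):
    @abstractmethod
    def write(self, record):
        """Persist one record to the target."""


class ListReader(Reader):
    """In-memory source standing in for a real database or queue."""

    def __init__(self, rows):
        self.rows = rows

    def read(self, start_offset=0):
        for offset in range(start_offset, len(self.rows)):
            yield offset, self.rows[offset]


class ListWriter(Writer):
    """In-memory target standing in for the lake."""

    def __init__(self):
        self.rows = []

    def write(self, record):
        self.rows.append(record)


def sync(reader, writer, checkpoint=0):
    """Copy records and return the offset to resume from after a failure."""
    for offset, record in reader.read(checkpoint):
        writer.write(record)
        checkpoint = offset + 1
    return checkpoint


source = ListReader(["r1", "r2", "r3"])
target = ListWriter()
print(sync(source, target), target.rows)
```

Because the job only depends on the two interfaces, any source can be paired with any target, and a restarted job passes the saved checkpoint to skip records that were already written.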

The above figure is the process of scheduling tasks through FlinkX:

- The user creates and submits the task configuration through the JobManager, and the task is scheduled by Quartz. When the job runs, the Flink Kubernetes client is called to access the Kubernetes Master, which creates the resources required by the Flink Master and starts the corresponding Container;

- The Flink Master Deployment has the user's FlinkX Jar built in. The Cluster Entrypoint runs its main function and generates the JobGraph, then submits it to the Dispatcher. The Dispatcher starts a JobMaster, which requests resources from the KubernetesResourceManager. When the RM finds that no resources are available, it in turn requests them from the Kubernetes Master and, once they are granted, starts a new TaskManager;

- After the TaskManager starts, it registers with the RM. The RM then requests a slot from it and provides the slot to the JobMaster, and the JobMaster finally deploys the corresponding FlinkX Task to the TaskManager. This completes the whole process, from bringing up the Flink cluster to running the user's Jar.

Our Flink cluster is actually a serverless implementation.

- JDBC support

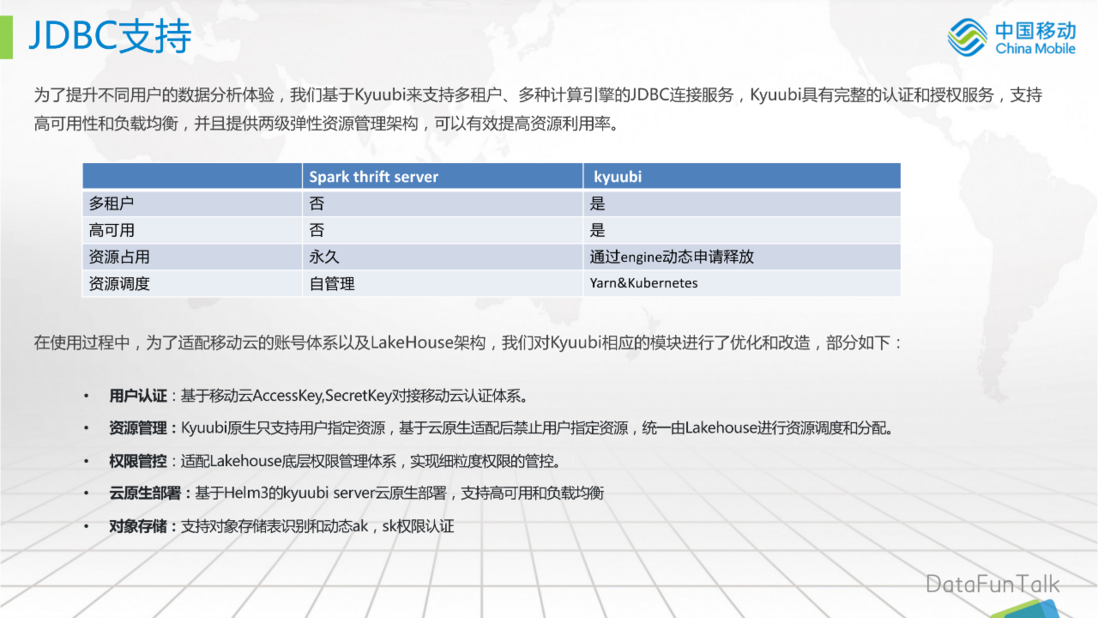

In order to improve the data analysis experience of different users, we support multi-tenant, multi-computing engine JDBC connection services based on Apache Kyuubi. Kyuubi has complete authentication and authorization services, supports high availability and load balancing, and provides two-level elastic resource management architecture, which can effectively improve resource utilization.

Before adopting Kyuubi, we tried the native Spark Thrift Server, but it has certain limitations: it does not support multi-tenancy, it is a single point without high availability, its resources are long-running, and resource scheduling has to be managed by ourselves. By introducing Kyuubi we gained multi-tenancy and high availability, engines request and release resources dynamically, and Kyuubi supports both YARN and Kubernetes resource scheduling.

In the process of use, in order to adapt to the mobile cloud account system and LakeHouse architecture, we have optimized and transformed the corresponding modules of Kyuubi, as follows:

- User authentication: based on the Mobile Cloud AccessKey and SecretKey, connected to the mobile cloud authentication system.

- Resource management: Kyuubi natively only supports user-specified resources. Based on cloud-native adaptation, user-specified resources are prohibited, and Lakehouse performs resource scheduling and allocation in a unified manner.

- Permission management and control: Adapt to the underlying permission management system of Lakehouse to realize fine-grained permission management and control.

- Cloud-native deployment: Helm3-based kyuubi server cloud-native deployment, supporting high availability and load balancing

- Object storage: support object storage table identification and dynamic ak, sk authorization authentication

- Incremental update

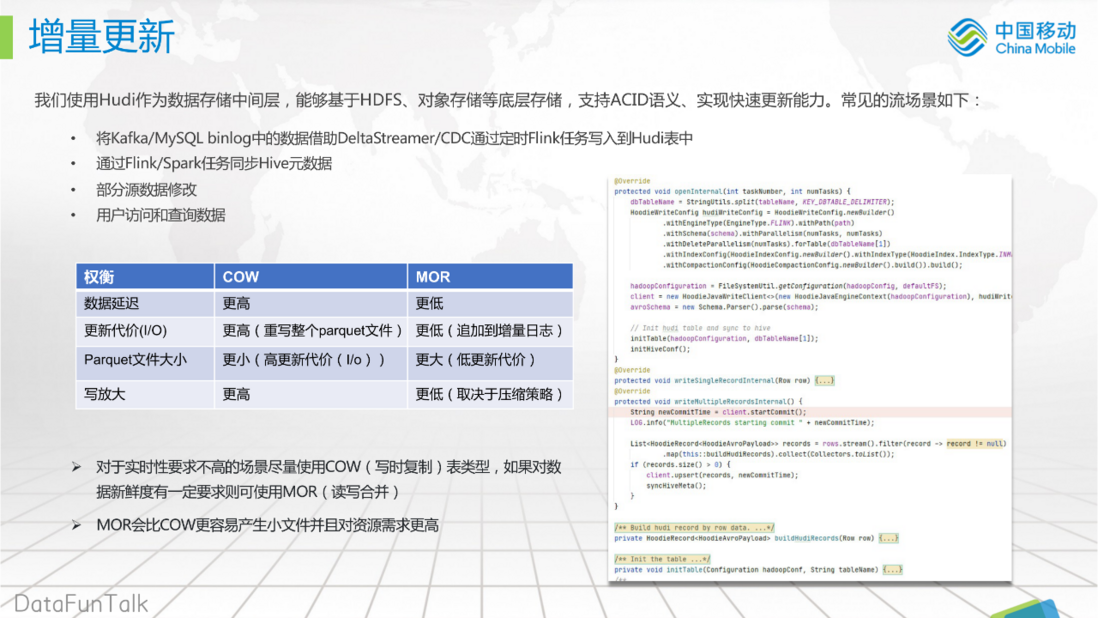

We use Hudi as the data storage middle layer, which can be based on HDFS, object storage and other underlying storage, support ACID semantics, and achieve rapid update capabilities. Common streaming scenarios are as follows:

- Write the data in the Kafka/MySQL binlog to the Hudi table with the help of DeltaStreamer/CDC through scheduled Flink tasks

- Sync Hive metadata with Flink/Spark tasks

- Partial source data modification

- User access and query data

As shown in the figure on the right, we encapsulate the DeltaStreamer / CDC that comes with Hudi, and customize the Reader / Writer features of FlinkX to achieve serverless access to the lake and data synchronization.

As shown on the left, we compare the two table types:

- For scenarios with low real-time requirements, prefer the COW (copy-on-write) table type. If you have certain requirements for data freshness, you can use MOR (merge-on-read)

- MOR tends to generate more small files and has higher resource requirements than COW
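The table-type choice above maps onto Hudi's Spark write options. The sketch below builds such an option set. The `hoodie.*` keys are Hudi configuration names, while the helper `hudi_write_options`, its `near_real_time` flag, and the example table and field names are assumptions.

```python
def hudi_write_options(table_name, record_key, precombine_field, near_real_time):
    """Return Hudi write options, choosing MOR only when freshness matters."""
    table_type = "MERGE_ON_READ" if near_real_time else "COPY_ON_WRITE"
    return {
        "hoodie.table.name": table_name,
        "hoodie.datasource.write.table.type": table_type,
        "hoodie.datasource.write.operation": "upsert",
        "hoodie.datasource.write.recordkey.field": record_key,
        "hoodie.datasource.write.precombine.field": precombine_field,
    }


options = hudi_write_options("orders", "id", "updated_at", near_real_time=False)
for key, value in options.items():
    print(key, "=", value)
```

In a Spark job these options would typically be passed as `df.write.format("hudi").options(**options).mode("append").save(path)`, where `df` and `path` are placeholders.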

The above are the details of the implementation of Mobile Cloud Lakehouse.

03 Application scenarios

The most important scenario is building a cloud-native big data analysis platform: LakeHouse supports a variety of data sources, including but not limited to data generated by the application itself, collected log data, and data extracted from databases, and provides offline batch processing, real-time computing, interactive query and other capabilities. This saves much of the software and hardware resources, research and development costs, and operation and maintenance costs required to build a traditional big data platform.

In addition, in the private cloud scenario, Lakehouse capability is introduced as new components while making full use of the existing cluster architecture. Data warehouse capability is introduced to support unified storage and management of various data, and the metadata is unified to form an integrated lake and warehouse metadata view:

- Hadoop platform view: Lakehouse, as a component on the Hadoop platform, can provide SQL query capabilities and support multiple data sources

- Lake warehouse view: provides a data lake warehouse platform based on LakeHouse, in which HDFS/OceanStor provides storage, computing is cloud native, and the metadata of the various services is managed in a unified manner.

That's all for today's sharing, thank you all.

