The edge of the sky, the sincerity of the vastness, the beauty of the splendor; the understanding of the heart and the understanding, the good beginning and the end, the only good is the Tao! (Chaojin, "The New Year of Chaojin")

Preface

Regarding locks in Java, I believe every Java developer has had the same feeling since first encountering the language: whether you look at how Java implements locks or how they are applied, it really is a "gathering of heroes", and each lock has its own strengths.

With so many locks, application scenarios, and definitions, it is easy to feel at a loss and hard to know how to use these locks well.

In concurrent programming, knowing how to use locks is usually enough to meet most of our needs. But for anyone with a passion for technical research, in-depth exploration and analysis is where the joy of technology lies.

As Java developers, if we want to explore, analyze, and correctly understand the mechanisms and principles behind these locks, we should start from practical problems: only by exploring and analyzing them, and by studying how they are applied in practice, can we truly understand and master them.

Broadly speaking, what problems do the locks provided for different scenarios solve? And given the characteristics of these locks, in both implementation and usage, how should we recognize and understand them?

Today we will discuss concurrency locks in Java: we take an inventory of the relevant locks and analyze them from three angles, namely basic design ideas, design and implementation, and practical application.

Key terms

Some key words and common terms used in this article are as follows:

- Process: An execution of a program on a data set in a computer. It is the basic unit of resource allocation and scheduling in the system and the foundation of the operating system's structure. In early process-oriented computer architectures, the process was the basic execution entity of a program; in modern thread-oriented architectures, the process is the container for threads.

- Thread: The smallest unit of execution that the operating system can schedule. A thread is contained in a process and is the actual unit of execution within it. In Unix System V and SunOS it is also called a lightweight process (LWP), although the term lightweight process more often refers to kernel threads, while user threads are simply called threads.

Basic overview

In Java, based on how Java implements them, locks can be roughly divided into locks based on Java syntax (keywords) and locks provided by the JDK.

These two categories give us a basic framework for understanding locks in Java and point us in the right direction when studying them.

Locks are the most basic and widely used technique in concurrent programming, and the JDK itself uses them extensively internally.

Next, let's explore the various locks in Java.

1. The basic theory of locks

The basic theory of locks is a general model built from a lock's basic definition, characteristics, and meaning. It is a simple way of thinking that helps us understand locks.

Usually, before we study something, we look at its basic definition, characteristics, and meaning. In the computing world, as in real life, locks are a common thing with many application scenarios.

For example, operating systems define various kinds of locks, database systems use locks, and locks even appear in CPU architectures.

This raises a problem: since locks are used everywhere, it is hard to give a precise definition of what a lock is. This may be the underlying reason why many of us know locks exist but remain in the dark about what they really are.

In essence, a lock in software development is a control mechanism that coordinates access by multiple processes or threads to a resource. It acts both on the resource and on the processes or threads that access it, where:

- Process: The basic unit of resource allocation and scheduling in the operating system. It is a running instance of a computer program, where a program is a description of instructions, data, and their organization.

- Thread: The smallest unit of execution that the operating system can schedule. A thread is a single sequential flow of control within a process; a process can run multiple threads concurrently, each executing a different task.

Generally speaking, threads fall into two types: kernel threads, which live in the kernel space of the system, and user threads, which live in the user space of the application.

From the Java side, the threads we usually talk about are user-level objects, while kernel threads are managed by the operating system and exposed through its function libraries and APIs.

Importantly, the Java threads and the JVM we talk about every day live in user space. From the Java layer down to the operating system's thread scheduling, the chain is roughly: java.lang.Thread (target thread) -> JavaThread -> OSThread -> pthread -> kernel thread.

Simply put, in Java a lock is a tool for controlling access by multiple threads to a shared resource. Most locks provide exclusive access: only one thread can hold the lock at a time, and every access to the shared resource must acquire the lock first. Some locks, however, allow concurrent access to a shared resource (the read lock of a ReadWriteLock, for example).

Concurrent access to shared resources arises mainly because most operating systems schedule threads preemptively, so a thread can be interrupted in the middle of an update. Locking therefore exists to keep data consistent and intact, which is to say, to ensure data safety.

With this basic conceptual model in place, we will now go through the main kinds of locks one by one.
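To make the data-safety problem concrete, here is a minimal sketch; the class SharedCounter and its methods are hypothetical names for illustration. Several threads increment two counters. count++ is a read, an add, and a write, so without a lock two threads can read the same old value and one increment is lost. The counter guarded by synchronized always reaches the expected total, while the unguarded one usually falls short (how often depends on the machine and scheduler).

```java
import java.util.ArrayList;
import java.util.List;

class SharedCounter {
    private int unsafeCount = 0;
    private int safeCount = 0;

    // No lock: the read-modify-write steps of different threads may interleave.
    void unsafeIncrement() { unsafeCount++; }

    // Built-in lock on `this`: only one thread at a time runs the increment.
    synchronized void safeIncrement() { safeCount++; }

    int getUnsafeCount() { return unsafeCount; }
    synchronized int getSafeCount() { return safeCount; }

    // Starts `threads` threads that each increment both counters `perThread` times.
    static SharedCounter run(int threads, int perThread) {
        SharedCounter counter = new SharedCounter();
        List<Thread> workers = new ArrayList<>();
        for (int t = 0; t < threads; t++) {
            Thread w = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    counter.unsafeIncrement();
                    counter.safeIncrement();
                }
            });
            workers.add(w);
            w.start();
        }
        for (Thread w : workers) {
            try {
                w.join(); // join() also makes the workers' writes visible here
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return counter;
    }

    public static void main(String[] args) {
        SharedCounter c = run(4, 100_000);
        System.out.println("without lock: " + c.getUnsafeCount()); // often below 400000
        System.out.println("with lock:    " + c.getSafeCount());   // always 400000
    }
}
```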

2. Basic classification of locks

From the perspective of how Java implements them, locks can roughly be divided into locks implemented at the Java syntax level (keywords) and locks implemented at the JDK level:

- Java built-in locks: locks implemented at the Java syntax level (keywords), following the semantics of the Java language. The most typical example is synchronized.

- Java explicit locks: locks implemented at the JDK level, mainly based on the Lock and ReadWriteLock interfaces and built on the common AbstractQueuedSynchronizer (AQS) framework. The most typical example is ReentrantLock.

Note that JDK-level locks rely on CAS operations to guarantee atomicity, and, like synchronized, they also guarantee visibility: the Lock contract requires the same memory synchronization semantics as the built-in monitor lock.

In addition, Java's concurrent containers have used segmented locking: ConcurrentHashMap in JDK 7 and earlier split its data into an array of Segment objects, each acting as its own lock.
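A minimal side-by-side sketch of the two families, assuming only the standard java.util.concurrent.locks API (the class name TwoLockStyles is hypothetical). The built-in lock is acquired and released by the JVM around the synchronized block; the explicit lock must be acquired by hand and released in a finally block so that an exception cannot leave it held.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

class TwoLockStyles {
    private final Object monitor = new Object();   // any object can serve as a built-in lock
    private final Lock lock = new ReentrantLock(); // explicit JDK lock built on AQS
    private long builtInTotal = 0;
    private long explicitTotal = 0;

    void addBuiltIn(long delta) {
        synchronized (monitor) {  // the JVM acquires and releases the monitor for us
            builtInTotal += delta;
        }
    }

    void addExplicit(long delta) {
        lock.lock();              // acquired explicitly...
        try {
            explicitTotal += delta;
        } finally {
            lock.unlock();        // ...and released explicitly, even if an exception is thrown
        }
    }

    long getBuiltInTotal() {
        synchronized (monitor) { return builtInTotal; }
    }

    long getExplicitTotal() {
        lock.lock();
        try { return explicitTotal; } finally { lock.unlock(); }
    }

    // Runs `threads` threads, each adding 1 through both styles `perThread` times.
    static TwoLockStyles hammer(int threads, int perThread) {
        TwoLockStyles s = new TwoLockStyles();
        List<Thread> workers = new ArrayList<>();
        for (int t = 0; t < threads; t++) {
            Thread w = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    s.addBuiltIn(1);
                    s.addExplicit(1);
                }
            });
            workers.add(w);
            w.start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return s;
    }
}
```

Both totals end up identical; the difference is in who is responsible for releasing the lock.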

Looking at how locks behave toward Java threads and shared resources, we can also classify them by whether they have certain characteristics, mainly along the following dimensions:

- Does the thread lock the synchronized resource? If a thread locks the resource before using it, the lock is generally called a pessimistic lock; if it does not lock the resource (and instead checks for conflicting updates when it writes), it is called an optimistic lock.

- When the resource is already locked, does a thread trying to acquire the lock go to sleep or block? If it does, the lock is generally called a mutex (blocking lock); if it does not, and instead keeps retrying, it is a non-blocking lock, that is, a spin lock.
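The optimistic and spinning behaviours described above can be sketched with the JDK's atomic classes. This is an illustrative sketch, not how the JVM implements its own locks; the class SpinAndOptimistic, its nested SpinLock, and the helper methods are hypothetical names. The optimistic update reads, computes, and publishes with compareAndSet, retrying if another thread got there first; the spin lock keeps retrying a CAS instead of putting the waiting thread to sleep. Thread.onSpinWait() requires Java 9 or later.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicReference;

class SpinAndOptimistic {
    // Optimistic, lock-free update: read, compute, CAS; retry if someone else won.
    static int optimisticIncrement(AtomicInteger value) {
        while (true) {
            int current = value.get();
            int next = current + 1;
            if (value.compareAndSet(current, next)) {
                return next;
            }
            // CAS failed: another thread changed the value first, so try again
        }
    }

    // Non-blocking (spin) lock: a waiting thread keeps retrying CAS instead of sleeping.
    static final class SpinLock {
        private final AtomicReference<Thread> owner = new AtomicReference<>();

        void lock() {
            Thread me = Thread.currentThread();
            while (!owner.compareAndSet(null, me)) {
                Thread.onSpinWait(); // busy-wait hint to the CPU (Java 9+)
            }
        }

        void unlock() {
            // only the owning thread may release the lock
            if (!owner.compareAndSet(Thread.currentThread(), null)) {
                throw new IllegalMonitorStateException("current thread does not own the lock");
            }
        }
    }

    // Uses the spin lock to protect a plain counter shared by several threads.
    static int countWithSpinLock(int threads, int perThread) {
        SpinLock lock = new SpinLock();
        int[] count = {0};
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    lock.lock();
                    try { count[0]++; } finally { lock.unlock(); }
                }
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return count[0];
    }
}
```

Spinning avoids the cost of suspending and resuming a thread, but burns CPU while waiting, so it only pays off when locks are held briefly.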

From the perspective of how the lock's state changes, how does competition for the resource among multiple threads differ in detail?

- First, if the resource is not locked at all, every thread may try to modify it but only one succeeds at a time, and the others retry as appropriate; since no thread ever waits for a lock, this is called lock-free.

- Second, if the same thread keeps executing the synchronized code, it acquires the lock automatically; this is generally a biased lock.

- Third, when multiple threads compete for the resource and the threads that fail to get the lock spin while waiting for it to be released, this is generally a lightweight lock.

- Finally, when multiple threads compete for the resource and the threads that fail to get the lock block until they are woken up, this is generally a heavyweight lock.

- Do threads have to queue when competing for the lock? If they must wait in line, the lock is generally a fair lock; if a thread first tries to jump the queue and only joins it when that fails, the lock is an unfair lock.

- Can the same thread acquire the same lock more than once? If it can lock repeatedly, the lock is generally a reentrant lock; otherwise it is a non-reentrant lock.

- Can multiple threads hold the lock at the same time? If the lock can be shared, it is generally a shared lock; if only one thread can hold it, it is an exclusive lock.

With these categories in mind, let's look in detail at how each kind of lock appears in Java.
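The last three dimensions (fairness, reentrancy, sharing) map directly onto JDK classes. A minimal sketch using ReentrantLock and ReentrantReadWriteLock; the wrapper class LockTraits and its methods are hypothetical names, while getHoldCount(), isFair(), and tryLock() are real methods of those JDK classes.

```java
import java.util.concurrent.locks.ReentrantLock;
import java.util.concurrent.locks.ReentrantReadWriteLock;

class LockTraits {
    // Reentrant: the owning thread can lock again without deadlocking itself.
    static int reentrantHoldCount() {
        ReentrantLock lock = new ReentrantLock();
        lock.lock();
        lock.lock();                       // same thread, same lock: succeeds
        int holds = lock.getHoldCount();   // number of times this thread holds it
        lock.unlock();
        lock.unlock();                     // unlock once per lock()
        return holds;
    }

    // Fair vs unfair: ReentrantLock is unfair by default; `true` requests a fair lock.
    static boolean isDefaultFair() { return new ReentrantLock().isFair(); }
    static boolean isExplicitlyFair() { return new ReentrantLock(true).isFair(); }

    // Shared vs exclusive: while this thread holds the read lock, another thread
    // can also take the read lock but cannot take the write lock.
    // Returns {readAcquiredByOther, writeAcquiredByOther}.
    static boolean[] probeWhileReading() {
        ReentrantReadWriteLock rw = new ReentrantReadWriteLock();
        boolean[] result = new boolean[2];
        rw.readLock().lock();
        try {
            Thread other = new Thread(() -> {
                if (rw.readLock().tryLock()) {   // shared: succeeds alongside our reader
                    result[0] = true;
                    rw.readLock().unlock();
                }
                if (rw.writeLock().tryLock()) {  // exclusive: fails while a reader exists
                    result[1] = true;
                    rw.writeLock().unlock();
                }
            });
            other.start();
            other.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            rw.readLock().unlock();
        }
        return result;
    }
}
```

Here reentrantHoldCount() returns 2, the default lock reports itself unfair, and the probe shows the read lock being shared while the write lock stays exclusive.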

3. Java built-in locks

In Java, built-in locks are the locks implemented at the Java syntax level (keywords), the typical example being the synchronized keyword.

The most direct way to describe the synchronized keyword is as a synchronization tool that the Java language provides to developers; it can be regarded as a kind of "syntactic sugar". Its main purpose is to solve data synchronization problems when multiple threads execute concurrently.

Unlike languages such as C++, where you must acquire and release locks yourself when dealing with synchronization, synchronized is simple: you just declare it.

In a Java program, the synchronized keyword locks a section of code, and its synchronization semantics are mutual exclusion. It can declare a synchronized code block or directly mark a static method or an instance method.

Mutual exclusion is originally a term from probability theory: if events A and B never occur together in any trial, A and B are said to be mutually exclusive.

A mutex (mutual exclusion lock) can therefore be understood as follows: for a given lock, only one thread can hold it at any time, and other threads that want it must wait or block.

1. How to use

In Java, the synchronized mutex can be applied in four ways: to instance methods, to static methods, to code blocks in instance methods, and to code blocks in static methods:

Applied to instance methods:

- Marks an instance method as synchronized. The lock is the object on which the method is called (this), so the whole object acts as the lock.

- Note that a class can have many instances. Each instance is a separate lock, and each lock covers only its own object.

Applied to static methods:

- Marks a static method of a class as synchronized. The lock belongs to the class rather than to any instance, so the whole class acts as the lock.

- Note that a class is itself represented by an object, its Class object (for example Xxx.class). All instances share that single Class object, so the lock covers the whole class.

Applied to code blocks in instance methods:

- Marks a block of code inside a method as synchronized.

- Note that we must specify a lock object: for example, synchronized(this){xxx} locks the current object, or we can create a dedicated object to serve as the lock.

Applied to code blocks in static methods:

- Marks a block of code inside a static method as synchronized.

- Note that we must specify the lock object here as well. Since this is not available in a static method, synchronized(Xxx.class){xxx} uses the current class's Class object as the lock, or we can create a dedicated static object to serve as the lock.
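The four forms can be put side by side in one hypothetical class, SyncForms. Thread.holdsLock(Object), a real static method of java.lang.Thread, is used to confirm which monitor each synchronized method actually holds.

```java
class SyncForms {
    private static int staticCount = 0;
    private int instanceCount = 0;
    private int otherCount = 0;
    private final Object privateLock = new Object(); // dedicated lock object

    // 1. Instance method: the lock is `this`.
    synchronized void incInstanceMethod() { instanceCount++; }

    // 2. Static method: the lock is SyncForms.class.
    static synchronized void incStaticMethod() { staticCount++; }

    // 3. Block in an instance method: lock on `this` (same lock as form 1)...
    void incInstanceBlock() {
        synchronized (this) { instanceCount++; }
    }

    // ...or on a dedicated object, which other code cannot lock by accident.
    void incWithPrivateLock() {
        synchronized (privateLock) { otherCount++; }
    }

    // 4. Block in a static method: `this` does not exist, so lock the Class object.
    static void incStaticBlock() {
        synchronized (SyncForms.class) { staticCount++; }
    }

    synchronized int getInstanceCount() { return instanceCount; }
    static synchronized int getStaticCount() { return staticCount; }

    // Checks showing which monitor each kind of synchronized method holds.
    synchronized boolean instanceMethodHoldsThis() { return Thread.holdsLock(this); }
    static synchronized boolean staticMethodHoldsClass() { return Thread.holdsLock(SyncForms.class); }
}
```

Because forms 1 and 3 (with this) share one monitor, and forms 2 and 4 share the Class object's monitor, each counter is consistently guarded by a single lock.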

If we compile a synchronized code block and inspect the class file with javap -c xxx.class, we find two extra kinds of instructions in the bytecode: monitorenter and monitorexit. Synchronized methods are compiled differently: they carry the ACC_SYNCHRONIZED flag (visible with javap -v), and the JVM acquires and releases the monitor implicitly when the method is invoked and returns.

2. Basic idea

In Java, the synchronized mutex is built on a blocking queue and a waiting queue, and it works much like a "wait-notify" mechanism.

The "wait-notify" mechanism requires a thread to acquire the mutex first; then:

- When the condition the thread needs is not met, it releases the mutex and enters the waiting state.

- When the condition is met, the waiting thread is notified and tries to reacquire the mutex.

In Java, the built-in synchronized keyword, together with the three methods wait(), notify(), and notifyAll() defined by java.lang.Object, makes it easy to implement the wait-notify mechanism:

- wait(): thread A, which holds the object lock, releases the lock, gives up the CPU, and enters the waiting state.

- notify(): thread A, which holds the object lock, asks the JVM to wake up one thread X that is waiting on the object. A does not release the lock at this point; only after A leaves its synchronized code can X reacquire the lock, and it must compete for it like any other thread. The other threads still waiting on the object keep waiting, even after X has released the lock, until notify() or notifyAll() is called again.

- notifyAll(): thread A, which holds the object lock, asks the JVM to wake up all threads waiting on the object. After A leaves its synchronized code, the JVM gives the lock to one of them, X, and the other awakened threads no longer wait for a notification. Once X leaves its synchronized code, any of the previously awakened threads may obtain the lock, as decided by the JVM.

Once a thread calls wait() on an object, it stays inactive until another thread calls notify() or notifyAll() on the same object (or the thread is interrupted, its timeout expires, or it wakes up spuriously).

For a thread to call wait() or notify() on an object, it must first hold that object's lock; in other words, it must call them inside a synchronized block (or method) on that object.

In the waiting-queue mechanism, only one thread at a time may enter the critical section protected by synchronized. While one thread is inside, other threads can only wait in the waiting queue on the left side of the figure. This queue and the mutex have a one-to-one relationship: each mutex has its own independent queue. In a concurrent program:

- After a thread enters the critical section, it may need to wait because some condition is not met; the wait() method of a Java object serves exactly this purpose.

- When wait() is called, the current thread blocks and enters the waiting queue on the right, which also belongs to the mutex.

- On entering the waiting queue, the thread releases the mutex it holds, so other threads get a chance to acquire the lock and enter the critical section.

For the notification mechanism: when the condition a thread is waiting for becomes true, how is the waiting thread notified? Quite simply, through the notify() and notifyAll() methods of Java objects. Calling notify() when the condition is met tells the threads in the waiting queue (the mutex's waiting queue) that the condition has been met. Why say it "has been" met rather than that it "is" met? Because:

- notify() only guarantees that the condition holds at the moment of notification.

- The notified thread almost never runs at the exact moment of notification, so by the time it runs, the condition may no longer hold (another thread may have cut in ahead of it).

- In addition, the notified thread must reacquire the mutex before it can continue, because it released the lock when it called wait().
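Because the condition may no longer hold when a notified thread finally runs, the usual pattern is to re-check it in a while loop around wait(). Below is a minimal one-slot mailbox sketch; Mailbox, put, take, and transfer are hypothetical names, and notifyAll() is used rather than notify() for the reason discussed below.

```java
import java.util.ArrayList;
import java.util.List;

class Mailbox {
    private String message; // null means "empty"

    // Consumer side: wait until a message is available.
    synchronized String take() throws InterruptedException {
        while (message == null) { // re-check: the condition may be false again on wake-up
            wait();               // releases this object's lock while waiting
        }
        String m = message;
        message = null;
        notifyAll();              // wake producers waiting for an empty slot
        return m;
    }

    // Producer side: wait until the slot is empty.
    synchronized void put(String m) throws InterruptedException {
        while (message != null) {
            wait();
        }
        message = m;
        notifyAll();              // wake consumers waiting for a message
    }

    // Passes every item through the mailbox from a producer thread to the caller.
    static List<String> transfer(List<String> items) {
        Mailbox box = new Mailbox();
        List<String> received = new ArrayList<>();
        Thread producer = new Thread(() -> {
            try {
                for (String s : items) box.put(s);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        try {
            for (int i = 0; i < items.size(); i++) received.add(box.take());
            producer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return received;
    }
}
```

Replacing while with if would break as soon as another thread empties or fills the slot between the notification and the wake-up.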

As emphasized above, the queue that wait(), notify(), and notifyAll() operate on is the waiting queue of the mutex, so:

- If synchronized locks this, the calls must be this.wait(), this.notify(), and this.notifyAll();

- If synchronized locks target, the calls must be target.wait(), target.notify(), and target.notifyAll().

Moreover, wait(), notify(), and notifyAll() can only be called once the corresponding mutex has been acquired, which is why they always appear inside synchronized{}.

If they are called outside synchronized{}, or if synchronized locks this but the code calls target.wait(), the JVM throws a runtime exception: java.lang.IllegalMonitorStateException.
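A small sketch that triggers both failure cases; the class MonitorOwnership and its methods are hypothetical names. notify() is used instead of wait() so the example never blocks, but the ownership rule is the same for all three methods.

```java
class MonitorOwnership {
    // Calls notify() on `target` while holding the lock on `held`;
    // returns true if the JVM rejected the call.
    static boolean notifyThrows(Object held, Object target) {
        synchronized (held) {
            try {
                target.notify();
                return false;
            } catch (IllegalMonitorStateException e) {
                return true;  // we hold `held`, not `target`
            }
        }
    }

    // Calls notify() without any synchronized block at all.
    static boolean notifyOutsideSyncThrows(Object target) {
        try {
            target.notify();
            return false;
        } catch (IllegalMonitorStateException e) {
            return true;      // no monitor held
        }
    }
}
```

Holding a and notifying a succeeds; holding a and notifying b, or notifying with no lock held, throws.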

When implementing notification with notify() and notifyAll(), note the difference between them:

- notify(): wakes one arbitrarily chosen thread in the waiting queue.

- notifyAll(): wakes all threads in the waiting queue.

In one sense notify() seems better: even if all threads are notified, only one of them can enter the critical section. In practice, however, notify() is risky, mainly because some threads may never be notified.

Unless you have thought it through carefully, it is generally recommended to use notifyAll().

3. Basic implementation

In Java, the synchronized mutex is implemented in the Java HotSpot(TM) VM through a monitor, which backs the monitorenter and monitorexit instructions. The monitor generally contains a blocking queue and a waiting queue:

- Blocking queue: holds threads that failed to acquire the lock; they are in the blocked state.

- Waiting queue: holds threads that have called wait() inside a synchronized block.

Note that calling wait() releases the lock, which gives threads in the blocking queue a chance to acquire it.

In the Java HotSpot(TM) VM, the monitor is implemented by ObjectMonitor:

- Each object has its own monitor; a thread that cannot obtain the signal (permission) blocks.

- The monitor acts like the key to an object: only a thread that has obtained the object's monitor may execute that object's synchronized code. Threads that have not obtained it must block until the thread holding the monitor releases it.

For the monitorenter instruction:

- Each object is associated with a monitor. While a monitor is occupied it is locked, and other threads cannot acquire it. When a thread executes monitorenter, it tries to acquire ownership of the monitor associated with the lock object.

- The lock object of synchronized is associated with a monitor that we do not create ourselves: when a thread executes the synchronized block and the lock object has no monitor yet, the JVM creates one. The monitor has two important fields: owner, which records the thread that holds the lock, and recursions, which records how many times that thread has acquired it. While one thread owns the monitor, other threads can only wait.

The main workflow is as follows:

- If the monitor's entry count is 0, the thread enters the monitor, sets the entry count to 1, and becomes the monitor's owner.

- If the thread already owns the monitor, it may re-enter it, and the entry count is incremented by 1.

- If another thread already owns the monitor, the thread trying to acquire it blocks until the entry count drops to 0, and then tries again to acquire ownership.

For the monitorexit instruction:
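The re-entry rule is what allows a synchronized method to call itself (or another synchronized method on the same object) without deadlocking. A minimal sketch with a hypothetical class ReentrantMonitor; the depth field is an ordinary Java counter that mirrors, but is not, the JVM's internal entry count.

```java
class ReentrantMonitor {
    private int depth = 0;    // how many nested synchronized calls are active
    private int maxDepth = 0;

    // Re-enters the same monitor recursively. If synchronized were not reentrant,
    // the nested call would block forever waiting for a lock its own thread holds.
    synchronized void enter(int levels) {
        depth++;                          // mirrors the monitor's entry count going up
        maxDepth = Math.max(maxDepth, depth);
        if (levels > 1) {
            enter(levels - 1);            // same thread, same monitor
        }
        depth--;                          // mirrors the entry count going down on exit
    }

    synchronized int getMaxDepth() { return maxDepth; }
}
```

Calling enter(3) reaches a nesting depth of 3 and, once it returns, the thread no longer holds the monitor.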

- The thread that can execute the monitorexit instruction must be the thread that owns the monitor of the current object.

- When monitorexit is executed, the number of monitor entries is decremented by 1. When the number of monitor entries is reduced to 0, the current thread exits the monitor and no longer has the ownership of the monitor. At this time, other threads blocked by the monitor can try to obtain the ownership of the monitor.

The main workflow is as follows:

- In the compiled bytecode of a synchronized block, monitorexit appears twice: once on the normal exit path and once in an exception handler, so the lock is released even if the block throws.

- The compiler inserts monitorexit both at the end of the block and on the exception path, and the JVM ensures that every monitorenter has a matching monitorexit.

In summary, the monitorenter and monitorexit instructions are ultimately implemented by the JVM on top of the operating system's mutex primitive. A blocked thread is suspended until it is rescheduled, which causes switches between user mode and kernel mode and has a significant impact on performance.

4. Concrete implementation

In Java, every object in the JVM can be associated with a monitor. The monitor is like a special room that controls which threads may enter: its job is to ensure that only one thread at a time can execute the protected critical section.
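The exception-path monitorexit can be observed from Java: a thread that throws out of a synchronized block no longer holds the lock, and another thread can acquire it. A minimal sketch with a hypothetical class ReleaseOnException; compiling it and running javap -c on the class file should let you see the exception-handler monitorexit, though the exact bytecode layout depends on the compiler.

```java
class ReleaseOnException {
    private static final Object LOCK = new Object();

    // Throws from inside a synchronized block; the compiler-generated
    // exception-path monitorexit still releases LOCK.
    static boolean lockReleasedAfterThrow() {
        try {
            synchronized (LOCK) {
                throw new IllegalStateException("failure inside the critical section");
            }
        } catch (IllegalStateException expected) {
            // back outside the synchronized block
        }
        return !Thread.holdsLock(LOCK);
    }

    // Another thread can acquire LOCK afterwards, so it was not leaked.
    static boolean otherThreadCanAcquire() {
        boolean[] acquired = new boolean[1];
        Thread t = new Thread(() -> {
            synchronized (LOCK) { acquired[0] = true; }
        });
        t.start();
        try {
            t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return acquired[0];
    }
}
```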

Essentially, a monitor is a synchronization tool, or a synchronization mechanism, and its main features are:

- Synchronization: critical section code protected by the monitor executes mutually exclusively. The monitor is a permission to run: any thread entering the critical section must obtain it and must return it when leaving.

- Cooperation: the monitor provides a signal mechanism that lets the thread holding the permission give it up temporarily and block, waiting for another thread to send a signal; another thread that holds the permission can send a signal to wake the waiting thread, which then reacquires the permission and resumes execution.

In the HotSpot virtual machine, the monitor is implemented by the C++ class ObjectMonitor, defined in the file objectMonitor.hpp. Its most important fields are the owner (_owner), WaitSet (_WaitSet), Cxq (_cxq), and EntryList (_EntryList).

The WaitSet, Cxq, and EntryList queues of ObjectMonitor hold threads contending for or waiting on the heavyweight lock, and the thread pointed to by the owner field is the one that currently holds the lock:

- Cxq (contention queue): every thread requesting the lock is first placed in this queue.

- EntryList: threads in Cxq that qualify as candidates for the lock are moved to EntryList.

- WaitSet: a thread that owns the ObjectMonitor and calls Object.wait() blocks and is placed in the WaitSet.

Cxq is not a real queue but a virtual one: it is just a chain of Nodes linked by their next pointers, with no separate queue data structure. A new Node is always added at the head of Cxq: its next pointer is set to the current first node, and the head pointer is switched to the new node through CAS. Elements are taken from the tail. Because insertion relies only on CAS, Cxq is a lock-free structure.
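The head-insertion step can be illustrated with a Java analogue of a CAS-linked list. This is only a sketch of the technique under the assumption that AtomicReference stands in for HotSpot's C++ pointer CAS; it is not HotSpot's code, and LockFreeStack, push, size, and pushConcurrently are hypothetical names. Taking elements from the tail, as Cxq does, is omitted.

```java
import java.util.concurrent.atomic.AtomicReference;

class LockFreeStack<T> {
    private static final class Node<T> {
        final T value;
        Node<T> next;
        Node(T value) { this.value = value; }
    }

    private final AtomicReference<Node<T>> head = new AtomicReference<>();

    // Lock-free head insertion: link the new node to the current head, then CAS
    // the head pointer; if another thread pushed first, re-read and retry.
    void push(T value) {
        Node<T> node = new Node<>(value);
        Node<T> current;
        do {
            current = head.get();
            node.next = current;
        } while (!head.compareAndSet(current, node));
    }

    int size() {
        int n = 0;
        for (Node<T> p = head.get(); p != null; p = p.next) n++;
        return n;
    }

    // Pushes from several threads at once and returns the final size.
    static int pushConcurrently(int threads, int perThread) {
        LockFreeStack<Integer> stack = new LockFreeStack<>();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            final int base = t * perThread;
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) stack.push(base + i);
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return stack.size();
    }
}
```

No push is lost even though no lock is ever taken: a thread whose CAS fails simply links against the new head and tries again.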

Before entering Cxq, a thread trying to grab the lock first tries to acquire it by CAS spinning; only if that fails does it enter Cxq. This is clearly unfair to threads already in Cxq, so the heavyweight lock used by synchronized is an unfair lock.

Both EntryList and Cxq are logically waiting queues. Cxq is accessed concurrently by many threads, so EntryList was introduced to reduce contention on the tail of Cxq. When the owner thread releases the lock, the JVM migrates threads from Cxq to EntryList and designates one thread in EntryList (usually the head) as the OnDeck thread (ready thread). Threads in EntryList serve as candidates for the lock.

The JVM does not hand the lock directly to the next owner; instead it gives the OnDeck thread the right to compete for the lock, and the OnDeck thread must still compete for it. This sacrifices some fairness but can greatly improve system throughput. In the JVM this selection behavior is called "competitive switching".

Once the OnDeck thread acquires the lock, it becomes the owner thread. If it fails, it stays in EntryList, and for fairness its position does not change (it remains at the head of the queue).

There is one more source of unfairness while the OnDeck thread is trying to become the owner: a newly arriving thread may grab the lock directly through CAS spinning and become the owner instead.

If the owner thread blocks by calling Object.wait(), it is moved to WaitSet until it is woken by Object.notify() or Object.notifyAll(), at which point it re-enters EntryList.

Threads in the ContentionList (Cxq), EntryList, and WaitSet are all blocked. Blocking and waking threads requires help from the operating system: under Linux this relies on pthread primitives such as pthread_mutex_lock, and the thread has to switch from user mode to kernel mode.

5. Basic classification

In Java, the synchronized mutex has four built-in lock states: no lock, biased lock, lightweight lock, and heavyweight lock. A lock escalates through these states as contention increases.

In Java, an ordinary object (an Object instance) consists of three parts: the object header, the object body (instance data), and alignment padding:

- Object Header: contains up to three fields, the Mark Word, the Klass Pointer (type pointer), and the Array Length (present only for array objects).

- Object body (Object Data): holds the object's instance variables (member fields), including those inherited from parent classes. This part of memory is aligned by 4 bytes.

- Padding: also called alignment padding, it ensures that the number of bytes a Java object occupies is a multiple of 8, because HotSpot's memory management requires every object's starting address to be a multiple of 8 bytes.

When the header plus the instance data does not add up to a multiple of 8 bytes, padding is added to restore 8-byte alignment.
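The padding rule is simple round-up arithmetic. The sketch below assumes a 12-byte header, which is common on a 64-bit HotSpot JVM with compressed class pointers (8-byte Mark Word plus 4-byte Klass Pointer); it ignores per-field alignment rules, so treat it as an estimate. ObjectSizeEstimate, alignUp, and estimate are hypothetical names.

```java
class ObjectSizeEstimate {
    static final int ALIGNMENT = 8; // HotSpot's default object alignment in bytes

    // Round a raw byte count up to the next multiple of 8.
    static long alignUp(long rawBytes) {
        return (rawBytes + ALIGNMENT - 1) / ALIGNMENT * ALIGNMENT;
    }

    // Header bytes + instance data bytes, then padded to the alignment.
    static long estimate(int headerBytes, int fieldBytes) {
        return alignUp((long) headerBytes + fieldBytes);
    }

    public static void main(String[] args) {
        // Assumed 12-byte header: one int field gives 16 bytes, no padding needed.
        System.out.println(estimate(12, 4));
        // One long field: 12 + 8 = 20 bytes, padded up to 24 (4 bytes of padding).
        System.out.println(estimate(12, 8));
    }
}
```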

As the pessimistic/optimistic classification above implies, synchronized is a pessimistic lock: taking the HotSpot virtual machine as an example, the synchronized resource must be locked before it is operated on, and the lock state is stored in the Java object header:

- Mark Word: by default stores the object's hash code, GC generation age, and lock flag bits. This information is unrelated to the object's own fields, so to fit as much as possible into very little memory, the Mark Word is designed as a variable data structure that reuses its bits according to the object's state; in other words, what the Mark Word stores changes as the lock flag changes at run time.

- Klass Pointer: a pointer to the object's class metadata, which the virtual machine uses to determine which class the object is an instance of.

synchronized achieves thread synchronization through a monitor. The monitor relies on the operating system's underlying mutex lock, and the JVM uses it to implement the monitorenter and monitorexit instructions. A monitor can be understood as a synchronization tool or mechanism and is usually described as an object. Every Java object has an invisible lock, called its intrinsic lock or monitor lock.

A monitor record is a thread-private data structure: each thread has a list of available monitor records, and there is also a global list of available records. Each locked object is associated with a monitor, whose Owner field stores the unique identifier of the thread that holds the lock, indicating that the lock is occupied by that thread.

Before JDK 1.6, every Java built-in lock was a heavyweight lock. Heavyweight locks make the CPU switch frequently between user mode and kernel mode, so they are costly and inefficient.

To reduce the cost of acquiring and releasing locks, JDK 1.6 introduced biased locks and lightweight locks. (Biased locking was later deprecated and disabled by default in JDK 15 by JEP 374.)

In JDK 1.6, built-in locks therefore have four states: no lock, biased lock, lightweight lock, and heavyweight lock. A lock escalates through these states as contention increases:

- No-lock state: when a Java object has just been created and no thread is competing for it, the object is in the no-lock state. Its biased-lock flag is 0 and its lock flag bits are 01.

- Biased lock state: if a piece of synchronized code is always accessed by the same thread, that thread acquires the lock automatically, reducing the cost of locking. When a thread first acquires a built-in lock in the biased state, the lock becomes biased toward that thread, and afterwards the thread needs no further checks or switching to execute the synchronized code guarded by that lock. Biased locks are very efficient when there is no contention.

- Lightweight lock state: once a second thread starts competing for the lock object, the lock is no longer biased (exclusive) to one thread; it is upgraded to a lightweight lock and the two threads compete on equal terms. Whichever thread acquires the lock object first has the lock object's Mark Word point to the lock record in that thread's stack frame.

- Heavyweight lock state: a heavyweight lock blocks other requesting threads and reduces performance; it is also called a synchronization lock. The lock object's Mark Word changes again and now points to a monitor object, which registers and manages the queued threads as a collection.

Accordingly, based on these states, Java built-in locks can be divided into four types: no lock, biased lock, lightweight lock, and heavyweight lock. Among them:

- Lock-free: The Java object instance has just been created and there is no contention for the lock. The resource is not locked, so all threads can access and modify it, but only one thread can successfully modify it at a time.

- Biased lock: A biased lock mainly addresses lock performance when there is no contention. "Biased" means the lock favors the thread that currently owns it: if a piece of synchronized code keeps being accessed by one thread, that thread acquires the lock automatically, reducing the cost of acquiring it.

- Lightweight lock: Lightweight locks mainly come in two forms: ordinary spin locks and adaptive spin locks. When a biased lock is accessed by another thread, it is upgraded to a lightweight lock, and other threads try to acquire it by spinning instead of blocking, which improves performance. Because the JVM lightweight lock grabs the lock with spinning CAS operations that all run in user mode, the process does not switch between user mode and kernel mode, so the overhead of the JVM lightweight lock is small.

- Heavyweight lock: The JVM heavyweight lock relies on the operating system's mutex (on Linux, a kernel-level mutex). Once the lock is upgraded to a heavyweight lock, threads waiting for it enter the blocked state, which is expensive.

In terms of the order of lock escalation, the only path is no lock -> biased lock -> lightweight lock -> heavyweight lock, and the sequence is irreversible, meaning a lock is not downgraded.

To sum up, among the Java built-in locks, the biased lock handles locking by comparing against the Mark Word, which avoids CAS operations. The lightweight lock uses CAS operations and spinning to avoid the performance cost of blocking and waking threads. The heavyweight lock blocks all threads except the one that owns the lock.

6. Application Analysis

In Java, built-in locks are used mainly through the synchronized keyword, but their locking granularity is relatively coarse and they do not support timeouts.

The execution process of synchronized is roughly as follows:

- When a thread tries to grab the lock, the JVM first checks whether biased_lock (the biased lock flag) in the lock object's Mark Word is 1 and whether lock (the lock flag) is 01. If both hold, the built-in lock object is in the biased state.

- Once the object is confirmed to be biased, the JVM checks whether the thread ID in the Mark Word is that of the grabbing thread. If it is, the grabbing thread already holds the biased lock, so it acquires the lock immediately and starts executing the critical section code.

- If the thread ID in the Mark Word does not match the grabbing thread, a CAS operation is used to compete for the lock. If it succeeds, the thread ID in the Mark Word is set to the grabbing thread, the bias flag is set to 1, the lock flag is set to 01, and the critical section code is executed. The built-in lock object is now in the biased lock state.

- If the CAS competition fails, contention has occurred: the biased lock is revoked and upgraded to a lightweight lock.

- The JVM uses CAS to replace the lock object's Mark Word with a pointer to the grabbing thread's lock record. If this succeeds, the grabbing thread acquires the lock. If it fails, other threads are competing for the lock, and the JVM keeps retrying the CAS by spinning. If the spin succeeds (the lock is grabbed), the lock object remains in the lightweight lock state.

- If the JVM's spinning CAS to install the lock record pointer still fails, the lightweight lock inflates into a heavyweight lock, and threads waiting for the lock enter the blocked state.

In general, biased locks are used when there is no lock contention; once a second thread contends for the lock, the biased lock is upgraded to a lightweight lock; and if contention is intense, the lightweight lock is upgraded to a heavyweight lock after its CAS spinning reaches a threshold.
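Lock escalation happens inside the JVM and is invisible to ordinary Java code; what a program observes is only the mutual exclusion that synchronized provides. The following minimal sketch (the `SyncCounter` class and its `runDemo` helper are hypothetical) guards a counter with its own built-in lock, once through a synchronized method and once through a synchronized block on the same object:

```java
// Hypothetical example: a counter guarded by its own built-in (monitor) lock.
class SyncCounter {
    private int count;

    // A synchronized instance method: the JVM enters the monitor of `this`
    // implicitly (ACC_SYNCHRONIZED flag) for the whole call.
    public synchronized void increment() {
        count++;
    }

    // A synchronized block on the same object: javac compiles it to
    // monitorenter/monitorexit instructions.
    public int get() {
        synchronized (this) {
            return count;
        }
    }

    // Starts `threads` threads that each call increment() `perThread` times.
    static int runDemo(int threads, int perThread) throws InterruptedException {
        SyncCounter counter = new SyncCounter();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    counter.increment();
                }
            });
            workers[i].start();
        }
        for (Thread w : workers) {
            w.join();
        }
        return counter.get();
    }

    public static void main(String[] args) throws InterruptedException {
        // Without synchronized, lost updates could make this smaller than 40000.
        System.out.println(runDemo(4, 10_000)); // prints 40000
    }
}
```

Whichever internal state the lock passes through (biased, lightweight or heavyweight), the result is the same; only the cost of acquiring the lock changes.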

4. Java explicit locks

In Java, explicit locks mainly refer to locks implemented at the JDK level.

The locks implemented at the JDK level are found mainly in the java.util.concurrent.locks package (with synchronizers such as CountDownLatch and Semaphore in java.util.concurrent itself), and can be roughly divided into:

- Locks based on the Lock interface

- Locks based on the ReadWriteLock interface

- Locks based on the AQS basic synchronizer

- Locks based on custom API operations

There have long been two core issues in concurrent programming: mutual exclusion, meaning only one thread may access a shared resource at a time, and synchronization, meaning how threads communicate and cooperate.

The Java SDK concurrency package implements the monitor model through the Lock and Condition interfaces: Lock solves the mutual exclusion problem, and Condition solves the synchronization problem.
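To illustrate how the two interfaces divide the work, here is a minimal bounded-buffer sketch, adapted from the pattern shown in the `Condition` Javadoc (the class name `BoundedBuffer` and its capacity handling are illustrative). The `ReentrantLock` provides mutual exclusion, and two `Condition` objects let producers wait for "not full" and consumers wait for "not empty":

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

// Illustrative bounded buffer: Lock handles mutual exclusion,
// Condition handles waiting and signalling between threads.
class BoundedBuffer<T> {
    private final Deque<T> items = new ArrayDeque<>();
    private final int capacity;
    private final Lock lock = new ReentrantLock();
    private final Condition notFull = lock.newCondition();
    private final Condition notEmpty = lock.newCondition();

    BoundedBuffer(int capacity) {
        this.capacity = capacity;
    }

    public void put(T item) throws InterruptedException {
        lock.lock();
        try {
            // Re-check in a loop: a woken thread must confirm the condition still holds.
            while (items.size() == capacity) {
                notFull.await(); // releases the lock while waiting
            }
            items.addLast(item);
            notEmpty.signal(); // wake one waiting consumer
        } finally {
            lock.unlock();
        }
    }

    public T take() throws InterruptedException {
        lock.lock();
        try {
            while (items.isEmpty()) {
                notEmpty.await();
            }
            T item = items.removeFirst();
            notFull.signal(); // wake one waiting producer
            return item;
        } finally {
            lock.unlock();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        BoundedBuffer<Integer> buffer = new BoundedBuffer<>(2);
        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= 5; i++) {
                    buffer.put(i);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        int sum = 0;
        for (int i = 0; i < 5; i++) {
            sum += buffer.take();
        }
        producer.join();
        System.out.println(sum); // prints 15
    }
}
```

Using two separate conditions means a producer only wakes consumers and vice versa, which a single built-in monitor with `notifyAll()` cannot express.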

1. JDK source code

Judging from the JDK source code, Java explicit locks fall mainly into the following categories:

- Locks based on the Lock interface: mainly ReentrantLock.

- Locks based on the ReadWriteLock interface: mainly ReentrantReadWriteLock.

- Locks based on the AQS basic synchronizer: mainly CountDownLatch, Semaphore, ReentrantLock, ReentrantReadWriteLock, etc.

- Locks implemented through custom API operations: locks encapsulated directly without relying on the three approaches above; the most typical is StampedLock, provided in JDK 1.8.

Apart from StampedLock, Java explicit locks are largely built on the AQS basic synchronizer. StampedLock, provided in JDK 1.8, is an improvement on the ReentrantReadWriteLock read-write lock.
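The main practical addition StampedLock makes over ReentrantReadWriteLock is an optimistic read mode. A minimal sketch, modelled on the usage pattern in the StampedLock Javadoc (the `Point` class is hypothetical): the reader takes a stamp without locking, reads the fields, and falls back to a real read lock only if `validate()` reports that a write happened in between. Note that StampedLock is not reentrant.

```java
import java.util.concurrent.locks.StampedLock;

// Hypothetical 2D point guarded by a StampedLock.
class Point {
    private double x;
    private double y;
    private final StampedLock sl = new StampedLock();

    void move(double dx, double dy) {
        long stamp = sl.writeLock(); // exclusive write mode
        try {
            x += dx;
            y += dy;
        } finally {
            sl.unlockWrite(stamp);
        }
    }

    double distanceFromOrigin() {
        long stamp = sl.tryOptimisticRead(); // no lock taken, just a version stamp
        double curX = x;
        double curY = y;
        if (!sl.validate(stamp)) {
            // A writer intervened: re-read under a real (pessimistic) read lock.
            stamp = sl.readLock();
            try {
                curX = x;
                curY = y;
            } finally {
                sl.unlockRead(stamp);
            }
        }
        return Math.sqrt(curX * curX + curY * curY);
    }

    public static void main(String[] args) {
        Point p = new Point();
        p.move(3, 4);
        System.out.println(p.distanceFromOrigin()); // prints 5.0
    }
}
```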

To sum up, understanding and mastering Java explicit locks requires understanding the design and implementation of the AQS basic synchronizer, which is the foundation and core of Java explicit locks.

2. Basic idea

The basic idea behind Java explicit locks comes from Doug Lea, author of the JDK concurrency package JUC, who published the paper "The java.util.concurrent Synchronizer Framework".

In Java, a synchronizer is a synchronization mechanism designed specifically for multi-threaded concurrency. Under this mechanism, concurrently executing threads are coordinated through some shared state, and a thread can proceed only when certain conditions are met.

Different application scenarios place different requirements on synchronizers. The JDK abstracts the parts common to these synchronizers into a unified basic synchronizer, which then serves as a template from which different synchronization mechanisms are implemented through inheritance. This unified basic synchronizer is what we call the AQS synchronizer.

The JDK's java.util.concurrent package provides various synchronization tools, most of which are implemented on top of the AbstractQueuedSynchronizer class, that is, the AQS synchronizer. AQS provides a basic framework for implementing locks and synchronization mechanisms for different scenarios, including a general mechanism for atomically managing synchronization state, blocking and releasing threads, and managing queues.

The theoretical basis of AQS is Doug Lea's paper "The java.util.concurrent Synchronizer Framework" [AQS Framework Paper], which covers the framework's basic principles, requirements, design, implementation, usage, and performance analysis.
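To make the template-method idea concrete, here is a minimal sketch of a non-reentrant mutex built on AbstractQueuedSynchronizer, following the pattern in the AQS class documentation (the names `SimpleMutex` and `Sync` are illustrative). The subclass only decides what `state` means (0 = free, 1 = held) and how to change it with CAS; queueing, parking and waking waiting threads are inherited from AQS.

```java
import java.util.concurrent.locks.AbstractQueuedSynchronizer;

// Illustrative non-reentrant mutex built on AQS: state 0 = free, 1 = held.
class SimpleMutex {
    private static final class Sync extends AbstractQueuedSynchronizer {
        @Override
        protected boolean tryAcquire(int arg) {
            // CAS the state from 0 to 1; on success record the owner thread.
            if (compareAndSetState(0, 1)) {
                setExclusiveOwnerThread(Thread.currentThread());
                return true;
            }
            return false; // acquire() will then enqueue and park the caller
        }

        @Override
        protected boolean tryRelease(int arg) {
            if (!isHeldExclusively()) {
                throw new IllegalMonitorStateException();
            }
            setExclusiveOwnerThread(null);
            setState(0); // volatile write makes the release visible
            return true; // release() will then unpark the next queued thread
        }

        @Override
        protected boolean isHeldExclusively() {
            return getState() == 1 && getExclusiveOwnerThread() == Thread.currentThread();
        }
    }

    private final Sync sync = new Sync();

    public void lock()       { sync.acquire(1); }
    public boolean tryLock() { return sync.tryAcquire(1); }
    public void unlock()     { sync.release(1); }

    // Two threads each increment a shared counter 10_000 times under the mutex.
    static int runDemo() throws InterruptedException {
        SimpleMutex mutex = new SimpleMutex();
        int[] counter = {0};
        Runnable task = () -> {
            for (int i = 0; i < 10_000; i++) {
                mutex.lock();
                try {
                    counter[0]++;
                } finally {
                    mutex.unlock();
                }
            }
        };
        Thread a = new Thread(task);
        Thread b = new Thread(task);
        a.start();
        b.start();
        a.join();
        b.join();
        return counter[0];
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(runDemo()); // prints 20000
    }
}
```

Because `tryAcquire` only succeeds on a 0 -> 1 transition, a second `tryLock()` by the owning thread fails: this mutex is deliberately non-reentrant, unlike ReentrantLock, whose Sync counts holds in `state`.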

3. Basic implementation

In Java, explicit locks are, for the most part, implemented on top of the AQS basic synchronizer.

In the JDK 1.8 source code, AbstractQueuedSynchronizer extends the abstract class AbstractOwnableSynchronizer, which mainly encapsulates two methods, setExclusiveOwnerThread() and getExclusiveOwnerThread(). Specifically:

- setExclusiveOwnerThread(): sets the thread that currently owns exclusive access; its parameter is a java.lang.Thread object.

- getExclusiveOwnerThread(): returns the thread last set as the exclusive owner, as a java.lang.Thread object.

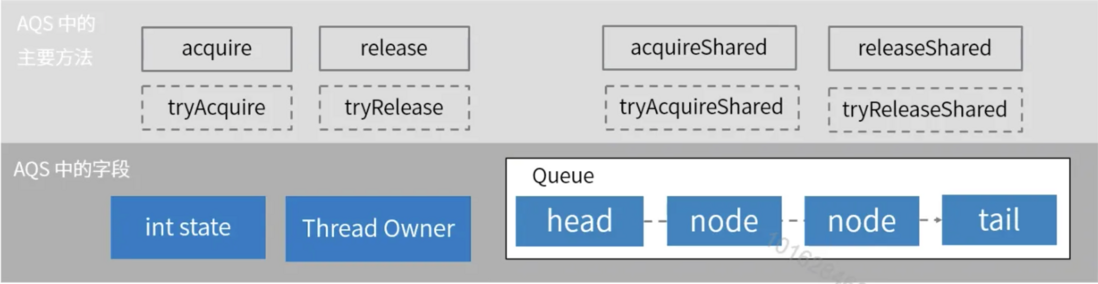

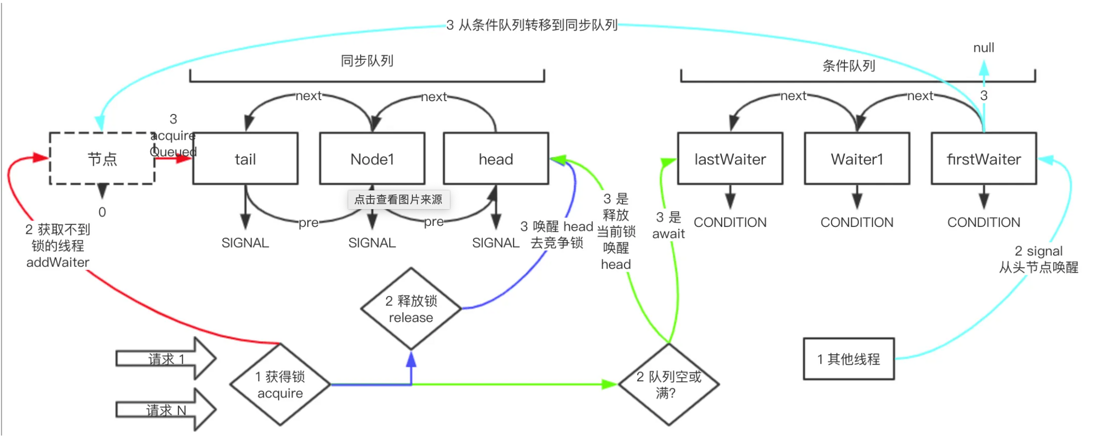

Internally, an AbstractQueuedSynchronizer (AQS synchronizer) has five core elements: the synchronization state, the wait queue, exclusive mode, shared mode, and the condition queue. Specifically:

- Synchronization State: used to implement the locking mechanism

- Wait Queue: stores the threads waiting for the lock

- Exclusive Mode: implements exclusive locks

- Shared Mode: implements shared locks

- Condition Queue: provides a condition-queue mode that can replace the wait/notify mechanism

In terms of data structures, AQS wraps each waiting thread in a Node and maintains a FIFO queue of these nodes based on the CLH lock queue. Under concurrency, inserting nodes into and removing them from this queue does not block: CAS plus spinning is used to make node insertion and removal atomic, which keeps enqueueing fast.

In the JDK 1.8 source code, AbstractQueuedSynchronizer is structured mainly as follows:

- Wait queue: two Node fields, head and tail, which mark the two ends of the wait queue.

- Synchronization state: the state field, a 32-bit int that must be updated atomically.

- CAS handles: the offsets stateOffset, headOffset, tailOffset, waitStatusOffset and nextOffset, used to perform CAS operations. In JDK 1.8, CAS is mainly implemented by the sun.misc.Unsafe class.

- Threshold: spinForTimeoutThreshold, a long with a default value of 1000L nanoseconds, decides whether a timed wait spins or blocks: if the remaining timeout is greater than 1000 ns, the thread blocks (parks); otherwise it spins, because parking for such a short time would cost more than it saves.

- Condition queue: a ConditionObject class that implements the Condition interface.

Note, however, that starting with JDK 9 the source structure of AbstractQueuedSynchronizer changes:

- Wait queue: two Node fields, head and tail, which mark the two ends of the wait queue.

- Synchronization state: the state field, a 32-bit int that must be updated atomically.

- CAS handles: VarHandle objects for state, head, and tail, used to perform CAS operations. From JDK 9, VarHandle (in the java.lang.invoke package) replaces the Unsafe class here.

- Threshold: the same 1000 ns spin-versus-block threshold for timed waits as in JDK 1.8.

- Condition queue: a ConditionObject class that implements the Condition interface.

The biggest difference is that VarHandle replaces the Unsafe class. A VarHandle is a dynamically strongly typed reference to a variable, or to a parametrically defined family of variables, including static fields, non-static fields, array elements, and components of off-heap data structures. Access to these variables is supported in various access modes, including plain read/write access, volatile read/write access, and compare-and-set (CAS) access. Simply put, a VarHandle binds to such a variable so that it can be manipulated directly through the handle.
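A minimal sketch of what this looks like in user code (JDK 9 or later; the `VarHandleCounter` class is hypothetical): a VarHandle is looked up once for an instance field and then used in a CAS retry loop, in the same spirit as the handles AQS keeps for its own fields.

```java
import java.lang.invoke.MethodHandles;
import java.lang.invoke.VarHandle;

// Hypothetical counter whose field is updated through a VarHandle (JDK 9+).
class VarHandleCounter {
    private volatile int value;

    // One handle per field, created once when the class is initialized.
    private static final VarHandle VALUE;

    static {
        try {
            VALUE = MethodHandles.lookup()
                    .findVarHandle(VarHandleCounter.class, "value", int.class);
        } catch (ReflectiveOperationException e) {
            throw new ExceptionInInitializerError(e);
        }
    }

    // CAS retry loop: read, compute, compareAndSet, and retry if another thread won.
    int incrementAndGet() {
        int current;
        int next;
        do {
            current = (int) VALUE.getVolatile(this);
            next = current + 1;
        } while (!VALUE.compareAndSet(this, current, next));
        return next;
    }

    int get() {
        return value;
    }

    static int runDemo(int threads, int perThread) throws InterruptedException {
        VarHandleCounter counter = new VarHandleCounter();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    counter.incrementAndGet();
                }
            });
            workers[i].start();
        }
        for (Thread w : workers) {
            w.join();
        }
        return counter.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(runDemo(4, 10_000)); // prints 40000
    }
}
```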

4. Concrete implementation

In Java, the explicit locks built on the AQS basic synchronizer are mainly implemented with spin locks (CLH locks) plus CAS operations.

When introducing built-in locks, we mentioned that lightweight locks mainly come as ordinary spin locks and adaptive spin locks. In terms of implementation, however, spin locks can be divided into four types: ordinary spin locks, adaptive spin locks, CLH locks, and MCS locks. Among them:

- Ordinary spin lock: multiple threads keep spinning and trying to acquire the lock. It is not fair, and its overhead is high because every attempt requires keeping data consistent between the CPUs, their caches, and main memory.

- Adaptive spin lock: introduces a queuing mechanism mainly to solve the fairness problem of ordinary spin locks, and is generally also called an exclusive spin lock. It is fair, but it does not solve the problem of keeping data consistent between the CPUs, caches, and main memory, so its overhead is still relatively high.

- CLH lock: turns the threads' polling competition on one shared variable into a queue of threads, in which each thread polls only the state of its predecessor's node rather than the shared variable.

- MCS lock: mainly proposed to solve a problem of the CLH lock, and also based on a FIFO queue. Unlike the CLH lock, each thread spins only on a field of its own node, and the predecessor node is responsible for notifying the thread when it can stop spinning.

A spin lock is one way to achieve synchronization and is a kind of non-blocking lock. It differs from a conventional lock mainly in what happens when acquiring the lock fails:

- With a conventional lock, a thread that fails to acquire the lock is blocked and woken up again at an appropriate time.

- With a spin lock, spinning replaces blocking: the thread keeps looping to check whether the lock has been released, and acquires it as soon as it is.

Spinning is a busy-wait that keeps consuming CPU time. In general, conventional mutex locks suit locks held for a long time, while spin locks suit locks held only briefly.
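The difference between spinning and blocking can be seen in a deliberately simple test-and-set spin lock (a hypothetical `SimpleSpinLock`, not a JDK class). A thread that fails to acquire it does not park; it loops on a CAS until the owner field is free. `Thread.onSpinWait()` (JDK 9+) only hints to the CPU that this is a busy-wait loop. Like the ordinary spin lock described earlier, it is unfair, and every waiter hammers the same shared variable.

```java
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical ordinary (test-and-set) spin lock: unfair and non-reentrant.
class SimpleSpinLock {
    private final AtomicReference<Thread> owner = new AtomicReference<>();

    public void lock() {
        Thread current = Thread.currentThread();
        // Busy-wait instead of blocking: retry the CAS until the lock is free.
        while (!owner.compareAndSet(null, current)) {
            Thread.onSpinWait(); // JDK 9+ hint that we are in a spin loop
        }
    }

    public void unlock() {
        // Only the owning thread may release the lock.
        if (!owner.compareAndSet(Thread.currentThread(), null)) {
            throw new IllegalMonitorStateException("current thread does not hold the lock");
        }
    }

    // Two threads each increment a shared counter 1_000 times under the spin lock.
    static int runDemo() throws InterruptedException {
        SimpleSpinLock lock = new SimpleSpinLock();
        int[] counter = {0};
        Runnable task = () -> {
            for (int i = 0; i < 1_000; i++) {
                lock.lock();
                try {
                    counter[0]++;
                } finally {
                    lock.unlock();
                }
            }
        };
        Thread a = new Thread(task);
        Thread b = new Thread(task);
        a.start();
        b.start();
        a.join();
        b.join();
        return counter[0];
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(runDemo()); // prints 2000
    }
}
```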

For the CLH lock, the core goal is to reduce the cost of synchronization. It was invented by Craig, Landin, and Hagersten, and works as follows:

- Build a FIFO (first-in, first-out) queue by moving the tail node: each thread that wants the lock creates a new node and atomically swaps it into tail with a CAS-style operation, then polls the state of its predecessor node.

- After finishing its critical section, a thread only needs to set its own node's state to unlocked; it also checks whether its node is still the tail node and, if so, simply sets tail to empty. Because the successor node keeps polling, it then acquires the lock.

The CLH lock takes many threads that would otherwise compete for a resource for a long time and, by ordering them in a queue, turns the competition into each thread checking only a local variable. The only point of contention is the swap on the tail node when joining the queue. This reduces how often each thread competes for the shared resource, which saves CPU cache-synchronization traffic and improves system performance.
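The CLH steps can be sketched in Java as follows. This is a teaching version under stated assumptions (the classes `ClhLock` and `Node` are hypothetical, the lock is non-reentrant, JDK 9+ for `Thread.onSpinWait()`), not the AQS source: AQS uses a CLH variant in which waiting threads are parked rather than spinning indefinitely. Each thread atomically swaps its node into `tail` and then spins only on its predecessor's `locked` flag. Instead of resetting `tail` to empty, this common textbook form recycles the predecessor's node on unlock.

```java
import java.util.concurrent.atomic.AtomicReference;

// Teaching sketch of a CLH queue lock (hypothetical names, non-reentrant, JDK 9+).
class ClhLock {
    private static final class Node {
        volatile boolean locked; // true: the owner holds or is waiting for the lock
    }

    // Start with a released dummy node so the first thread does not wait.
    private final AtomicReference<Node> tail = new AtomicReference<>(new Node());
    private final ThreadLocal<Node> myNode = ThreadLocal.withInitial(Node::new);
    private final ThreadLocal<Node> myPred = new ThreadLocal<>();

    public void lock() {
        Node node = myNode.get();
        node.locked = true;               // announce that this thread wants the lock
        Node pred = tail.getAndSet(node); // atomically append our node at the tail
        myPred.set(pred);
        while (pred.locked) {             // poll only the predecessor's flag
            Thread.onSpinWait();
        }
    }

    public void unlock() {
        Node node = myNode.get();
        node.locked = false;      // the successor spinning on our node proceeds
        // The successor may still read our node, so reuse the predecessor's
        // (now idle) node for this thread's next lock() call.
        myNode.set(myPred.get());
    }

    // Two threads each increment a shared counter 1_000 times under the lock.
    static int runDemo() throws InterruptedException {
        ClhLock lock = new ClhLock();
        int[] counter = {0};
        Runnable task = () -> {
            for (int i = 0; i < 1_000; i++) {
                lock.lock();
                try {
                    counter[0]++;
                } finally {
                    lock.unlock();
                }
            }
        };
        Thread a = new Thread(task);
        Thread b = new Thread(task);
        a.start();
        b.start();
        a.join();
        b.join();
        return counter[0];
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(runDemo()); // prints 2000
    }
}
```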

However, the CLH lock has a problem of its own. Although it removes the overhead of many threads operating on the same variable at once, if the predecessor's node is not in the current processor's local memory (for example, on a NUMA system), accessing it takes longer, which also causes performance problems. The MCS lock, invented by John Mellor-Crummey and Michael Scott, was proposed to solve this problem.
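For comparison, a minimal MCS sketch under similar assumptions (hypothetical class names, non-reentrant, JDK 9+ for `Thread.onSpinWait()`). The key difference from CLH is that each thread spins on the `locked` flag of its own node, and the releasing thread explicitly hands the lock to its successor through the `next` link.

```java
import java.util.concurrent.atomic.AtomicReference;

// Teaching sketch of an MCS queue lock (hypothetical names, non-reentrant, JDK 9+).
class McsLock {
    private static final class QNode {
        volatile boolean locked; // true while this thread must keep waiting
        volatile QNode next;     // successor in the queue, set by the successor
    }

    private final AtomicReference<QNode> tail = new AtomicReference<>();
    private final ThreadLocal<QNode> myNode = ThreadLocal.withInitial(QNode::new);

    public void lock() {
        QNode node = myNode.get();
        node.next = null;
        node.locked = true;
        QNode pred = tail.getAndSet(node); // atomically join the queue
        if (pred != null) {
            pred.next = node;              // let the predecessor find us
            while (node.locked) {          // spin on our own node only
                Thread.onSpinWait();
            }
        }
    }

    public void unlock() {
        QNode node = myNode.get();
        if (node.next == null) {
            // No visible successor: try to empty the queue.
            if (tail.compareAndSet(node, null)) {
                return;
            }
            // A successor swapped itself into tail but has not linked in yet.
            while (node.next == null) {
                Thread.onSpinWait();
            }
        }
        node.next.locked = false; // notify the successor that it may stop spinning
    }

    // Two threads each increment a shared counter 1_000 times under the lock.
    static int runDemo() throws InterruptedException {
        McsLock lock = new McsLock();
        int[] counter = {0};
        Runnable task = () -> {
            for (int i = 0; i < 1_000; i++) {
                lock.lock();
                try {
                    counter[0]++;
                } finally {
                    lock.unlock();
                }
            }
        };
        Thread a = new Thread(task);
        Thread b = new Thread(task);
        a.start();
        b.start();
        a.join();
        b.join();
        return counter[0];
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(runDemo()); // prints 2000
    }
}
```

Because each waiter polls a flag in its own node, that node can live in memory local to the waiting processor, which is exactly the NUMA concern raised above.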

CAS (Compare And Swap) is an optimistic locking strategy involving three operands: the memory value, the expected value, and the new value. The memory value is changed to the new value if and only if the expected value equals the memory value.

The logic of a CAS operation can be divided into three steps:

- First, check whether the memory value is the same as the value the thread read earlier.

- Second, if it is not the same, another thread has modified the memory value, and this operation is abandoned.

- Finally, if it is the same, no other thread has changed it in the meantime, so the memory value is updated to the new value.
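In Java code these three steps usually appear as a retry loop around `compareAndSet`. A minimal sketch using `java.util.concurrent.atomic.AtomicInteger` (the `CasDoubler` class and the doubling operation are illustrative): two threads each double a shared value five times, and because no update is lost the result is always 2^10.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Illustrative optimistic update: doubles a shared value with a CAS retry loop.
class CasDoubler {
    private final AtomicInteger value = new AtomicInteger(1);

    int doubleIt() {
        while (true) {
            int expected = value.get(); // the value this thread read earlier
            int updated = expected * 2; // new value computed from it
            // Steps 1 and 3: if memory still equals `expected`, write `updated` atomically.
            if (value.compareAndSet(expected, updated)) {
                return updated;
            }
            // Step 2: another thread changed the value in between; discard and retry.
        }
    }

    int get() {
        return value.get();
    }

    // Two threads double the value 5 times each: 1 * 2^10 = 1024 if no update is lost.
    static int runDemo() throws InterruptedException {
        CasDoubler d = new CasDoubler();
        Runnable task = () -> {
            for (int i = 0; i < 5; i++) {
                d.doubleIt();
            }
        };
        Thread a = new Thread(task);
        Thread b = new Thread(task);
        a.start();
        b.start();
        a.join();
        b.join();
        return d.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(runDemo()); // prints 1024
    }
}
```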

Note that a CAS operation is atomic. Its atomicity is guaranteed by CPU hardware instructions (such as cmpxchg on x86), which Java invokes through native methods (the Java Native Interface, JNI) rather than plain Java code.

CAS avoids the exclusive locking of objects used by the pessimistic strategy and improves concurrent performance, but it has the following three problems:

- The optimistic strategy can only guarantee atomic operations on a single shared variable. For multiple variables, CAS falls short of a mutex because of the inherent limitations of CAS operations.

- When a CAS keeps failing, long spinning loops can cause excessive CPU overhead.

- The classic ABA problem: CAS only checks whether a memory value is the same as the value the thread read earlier. This check is not rigorous, because the value may have changed from A to B and back to A in the meantime. The core solution is to introduce a version number that is updated every time the variable is updated.
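The JDK ships this version-number fix as `java.util.concurrent.atomic.AtomicStampedReference`, which pairs a reference with an int stamp. The sketch below (single-threaded for clarity; the `AbaDemo` class and its values are illustrative) simulates an A -> B -> A change: a plain value comparison would still see "A", but the stale stamp makes the CAS fail.

```java
import java.util.concurrent.atomic.AtomicStampedReference;

// Illustrative ABA scenario detected by a version stamp.
class AbaDemo {
    static boolean staleCasSucceeds() {
        String a = "A";
        String b = "B";
        AtomicStampedReference<String> ref = new AtomicStampedReference<>(a, 0);

        // "Thread 1" reads the value together with its stamp (version).
        int[] stampHolder = new int[1];
        String seen = ref.get(stampHolder);
        int seenStamp = stampHolder[0]; // 0

        // Meanwhile "another thread" changes A -> B -> A, bumping the stamp each time.
        ref.compareAndSet(a, b, 0, 1);
        ref.compareAndSet(b, a, 1, 2);

        // The value is A again, but the stamp is now 2, so the stale CAS fails.
        return ref.compareAndSet(seen, "C", seenStamp, seenStamp + 1);
    }

    public static void main(String[] args) {
        System.out.println(staleCasSucceeds()); // prints false
    }
}
```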

In Java, CAS operations are provided as follows:

- Up to JDK 1.8, CAS operations mainly used the Unsafe class; see the source code for details.

- From JDK 9 onward, CAS operations mainly use the VarHandle class; see the source code for details.

To sum up, this explains why the Java explicit locks built on the AQS basic synchronizer are mainly implemented with spin locks (CLH locks) plus CAS operations.

5. Basic classification

In Java, explicit locks can be classified from several different perspectives: reentrant and non-reentrant locks, pessimistic and optimistic locks, fair and unfair locks, shared and exclusive locks, and interruptible and non-interruptible locks.

Depending on whether the same thread can acquire the same lock object repeatedly, explicit locks can be divided into reentrant locks and non-reentrant locks. Among them:

- A reentrant lock, also called a recursive lock, can be acquired by the same thread multiple times. JUC's ReentrantLock class is the standard implementation of a reentrant lock.

- A non-reentrant lock is the opposite: a thread can acquire the same lock only once and must release it before acquiring it again.
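Reentrancy can be observed directly with ReentrantLock, whose `getHoldCount()` reports how many times the current thread holds the lock. In this sketch (the class and method names are hypothetical), `outer()` calls `inner()`, which acquires the same lock again without deadlocking; each `lock()` must be matched by one `unlock()`.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical demo of a thread re-acquiring a lock it already holds.
class ReentrancyDemo {
    private final ReentrantLock lock = new ReentrantLock();

    int outer() {
        lock.lock();
        try {
            return inner(); // re-acquires the lock this thread already holds
        } finally {
            lock.unlock();
        }
    }

    private int inner() {
        lock.lock(); // a non-reentrant lock would deadlock here
        try {
            return lock.getHoldCount(); // 2: held once by outer(), once by inner()
        } finally {
            lock.unlock();
        }
    }

    boolean isLockedAfterwards() {
        return lock.isLocked();
    }

    public static void main(String[] args) {
        ReentrancyDemo demo = new ReentrancyDemo();
        System.out.println(demo.outer()); // prints 2
    }
}
```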

Depending on whether a thread locks the synchronized resource before entering the critical section, explicit locks can be divided into pessimistic locks and optimistic locks. Among them:

- Pessimistic lock: follows a pessimistic mindset. Every time a thread enters the critical section to operate on data, it assumes other threads will modify it, so it locks the synchronized resource on every read and write, and other threads that want to read or write the data block until they obtain the lock. In general, pessimistic locks suit scenarios with more writes than reads, and they still perform well under highly concurrent writes. Java's synchronized heavyweight lock is a pessimistic lock.

- Optimistic lock: follows an optimistic mindset. Every time a thread reads data, it assumes other threads will not modify it, so it does not lock; when updating, however, it checks whether anyone else updated the data in the meantime. On a write, it first reads the current version number and then performs a compare-and-update (compare with the version number read earlier and update only if they match); if that fails, it repeats the read-compare-write cycle. In general, optimistic locks suit scenarios with more reads than writes, and their performance drops under highly concurrent writes. In Java, optimistic locking is basically implemented through CAS spinning. CAS is an atomic update operation: it compares the current value with the expected value passed in, updates it if they match, and fails otherwise. Under intense contention, CAS spinning produces many empty spins, which greatly reduces the performance of optimistic locking. Java's synchronized lightweight lock is an optimistic lock. In addition, the explicit locks in JUC implemented on AbstractQueuedSynchronizer (AQS), such as ReentrantLock, are optimistic locks.

In terms of fairness in acquiring the lock, explicit locks can be divided into fair locks and unfair locks, among which:

- A fair lock gives different threads a fair and equal chance to acquire the lock: the thread that requests the lock first is served first, so the lock is granted in FIFO (first in, first out) order. Simply put, a fair lock ensures that threads acquire the lock in order of arrival.

- An unfair lock gives different threads unequal chances to acquire the lock: the thread that requests the lock first is not necessarily served first, so the order of successful acquisition does not follow FIFO (first in, first out) order.

By default, a ReentrantLock instance is an unfair lock; passing true to the constructor creates a fair lock. ReentrantLock's tryLock() method is a special case: as soon as the lock is released, a thread calling tryLock() can acquire it immediately, even if other threads are waiting in the queue and even when the lock is fair.
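A minimal sketch of both points (the `FairnessDemo` class and its helper are hypothetical): fairness is chosen once in the constructor and reported by `isFair()`, and `tryLock()` returns immediately instead of queueing. According to the ReentrantLock documentation, the timed overload `tryLock(long, TimeUnit)` does honor the fairness setting, unlike the untimed `tryLock()`.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical demo of fair vs. non-fair construction and non-blocking tryLock().
class FairnessDemo {
    // Runs lock.tryLock() on another thread and reports whether it succeeded.
    static boolean tryLockFromAnotherThread(ReentrantLock lock) throws InterruptedException {
        boolean[] acquired = new boolean[1];
        Thread t = new Thread(() -> {
            acquired[0] = lock.tryLock(); // returns at once, never queues
            if (acquired[0]) {
                lock.unlock();
            }
        });
        t.start();
        t.join();
        return acquired[0];
    }

    public static void main(String[] args) throws InterruptedException {
        ReentrantLock unfair = new ReentrantLock();   // default: non-fair
        ReentrantLock fair = new ReentrantLock(true); // fair: favors the longest-waiting thread
        System.out.println(unfair.isFair() + " " + fair.isFair()); // prints "false true"

        fair.lock(); // the main thread holds the lock
        try {
            System.out.println(tryLockFromAnotherThread(fair)); // prints false
        } finally {
            fair.unlock();
        }
        System.out.println(tryLockFromAnotherThread(fair)); // prints true
    }
}
```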

Depending on whether the lock acquisition process can be terminated partway through, explicit locks can be divided into interruptible locks and non-interruptible locks, among which:

- Interruptible lock: suppose thread A holds the lock and is executing the critical section, while thread B is blocked trying to acquire it. If B has waited too long and wants to do something else first, we can let it interrupt its own blocked wait. A lock that allows this is interruptible.

- Non-interruptible lock: once the lock is held by another thread, a thread that still wants it can only wait or block until the other thread releases it. If the other thread never releases the lock, the waiting thread waits forever, with no way to stop waiting.

Simply put, if the acquisition process can be terminated partway through, the lock is interruptible; otherwise it is non-interruptible.

Java's synchronized built-in lock is non-interruptible, while JUC's explicit locks (such as ReentrantLock) support interruptible acquisition through lockInterruptibly().
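A minimal sketch of interruptible acquisition, following the thread A / thread B description above (the `InterruptibleDemo` class is hypothetical and the sleep duration is arbitrary): B blocks in `lockInterruptibly()` while A holds the lock, and `interrupt()` makes B give up with an InterruptedException instead of waiting forever. A plain `lock()` call, like `synchronized`, would ignore the interrupt and keep waiting.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical demo: thread B abandons a blocked acquisition when interrupted.
class InterruptibleDemo {
    // Thread A (the caller) holds the lock; thread B waits with lockInterruptibly()
    // and is interrupted. Returns true if B gave up waiting.
    static boolean waiterGivesUp() throws InterruptedException {
        ReentrantLock lock = new ReentrantLock();
        boolean[] gaveUp = new boolean[1];

        lock.lock(); // thread A holds the lock for the whole demo
        try {
            Thread b = new Thread(() -> {
                try {
                    lock.lockInterruptibly(); // blocks, but responds to interrupt()
                    try {
                        System.out.println("B acquired the lock");
                    } finally {
                        lock.unlock();
                    }
                } catch (InterruptedException e) {
                    gaveUp[0] = true; // B stops waiting and can do other work
                }
            });
            b.start();
            // Let B start waiting. Not required for correctness: lockInterruptibly()
            // also throws if the interrupt arrives before it is called.
            Thread.sleep(100);
            b.interrupt();
            b.join();
        } finally {
            lock.unlock();
        }
        return gaveUp[0];
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(waiterGivesUp()); // prints true
    }
}
```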

Depending on whether multiple threads can hold the lock at the same time, explicit locks can be divided into exclusive locks and shared locks, among which:

- An exclusive lock can be held by only one thread at a time. Exclusive locking is a pessimistic, conservative strategy that needlessly restricts read/read concurrency: if a read-only thread acquires the lock, other reader threads must wait, which limits the concurrency of reads even though reads do not affect data consistency. JUC's ReentrantLock class is the standard implementation of an exclusive lock.

- A shared lock allows multiple threads to hold it at the same time and enter the critical section concurrently. Unlike an exclusive lock, a shared lock is an optimistic lock: it relaxes the locking policy and does not restrict read/read concurrency, so multiple threads performing reads can access the shared resource at the same time. JUC's ReentrantReadWriteLock (read-write lock) class is an implementation of a shared lock: many threads can read together, but only one thread can write, and other threads cannot read while a write is in progress. Replacing ReentrantReadWriteLock with ReentrantLock would still be thread-safe, but it wastes resources, because concurrent reads have no thread-safety problem. Using the read lock for reads and the write lock for writes therefore improves program efficiency.
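A minimal sketch of "read lock for reads, write lock for writes" (the `RwCache` class and its helper are hypothetical): concurrent `get()` calls can proceed together under the shared read lock, while `put()` takes the exclusive write lock. The helper shows that while one thread holds the read lock, another thread can still take the read lock but not the write lock.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Hypothetical cache guarded by a read-write lock.
class RwCache<K, V> {
    private final Map<K, V> map = new HashMap<>();
    private final ReentrantReadWriteLock rw = new ReentrantReadWriteLock();

    public V get(K key) {
        rw.readLock().lock(); // shared: many readers at once
        try {
            return map.get(key);
        } finally {
            rw.readLock().unlock();
        }
    }

    public void put(K key, V value) {
        rw.writeLock().lock(); // exclusive: no readers or other writers
        try {
            map.put(key, value);
        } finally {
            rw.writeLock().unlock();
        }
    }

    // Runs tryLock() on another thread and reports whether it succeeded.
    static boolean tryFromOtherThread(Lock l) throws InterruptedException {
        boolean[] ok = new boolean[1];
        Thread t = new Thread(() -> {
            ok[0] = l.tryLock();
            if (ok[0]) {
                l.unlock();
            }
        });
        t.start();
        t.join();
        return ok[0];
    }

    public static void main(String[] args) throws InterruptedException {
        ReentrantReadWriteLock lock = new ReentrantReadWriteLock();
        lock.readLock().lock(); // main thread holds a read lock
        try {
            System.out.println(tryFromOtherThread(lock.readLock()));  // prints true
            System.out.println(tryFromOtherThread(lock.writeLock())); // prints false
        } finally {
            lock.readLock().unlock();
        }
    }
}
```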

In summary, this is roughly how Java explicit locks can be classified.

6. Application Analysis

In Java, explicit locks are more fine-grained than built-in locks and support timeouts, which makes them more controllable and more flexible to use.

By contrast, with Java's built-in locks, simple "wait-notify" inter-thread communication is implemented by using the wait() and notify() methods of Object as signals between a notifier thread and a waiter thread.
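For comparison with the Lock/Condition approach, here is a minimal wait-notify sketch on a built-in lock (the single-slot `Mailbox` class is hypothetical). `wait()` and `notifyAll()` must be called while holding the object's monitor, otherwise they throw IllegalMonitorStateException, and the waiter re-checks its condition in a loop because wake-ups can be spurious.

```java
// Hypothetical single-slot mailbox using the built-in monitor of `this`.
class Mailbox {
    private String message; // null means empty

    public synchronized void send(String msg) {
        message = msg; // overwrites any unread message (kept simple on purpose)
        notifyAll();   // notifier: wake threads waiting on this monitor
    }

    public synchronized String receive() throws InterruptedException {
        while (message == null) { // guard against spurious wake-ups
            wait();               // waiter: releases the monitor while waiting
        }
        String m = message;
        message = null;
        return m;
    }

    public static void main(String[] args) throws InterruptedException {
        Mailbox box = new Mailbox();
        Thread notifier = new Thread(() -> box.send("hello"));
        notifier.start();
        System.out.println(box.receive()); // prints hello
        notifier.join();
    }
}
```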

In the "wait-notify" inter-thread communication mechanism, specifically, thread A calls the wait() method of the synchronization object to enter the waiting state, while thread B calls the notify() or notifyAll() method of the same synchronization object to wake up the waiting thread; when thread A receives the wake-up notification from th
