Background introduction

Why do you need to learn Java concurrency?

From the point of view of improving performance

- Improve CPU utilization: Most servers produced today are multi-core, and an 8-core/16 GB machine is a common standard configuration. The operating system schedules different threads onto different cores, so in theory as many threads can run in parallel as there are cores. Without concurrent programming, most of that CPU capacity is wasted. For example, while a single thread is blocked on a time-consuming I/O operation, the CPU sits idle, and subsequent instructions must wait for the I/O to complete before continuing.

- Reduce service response time (RT): Large Internet services can easily receive more than 10,000 requests per second. Without concurrent processing, all user requests would be queued. Can you imagine the user experience? How could such a service retain customers? With concurrent programming, the CPU's computing power is fully used, the operating system lets each request use the CPU in turn, and every customer gets a fast response.

- High fault tolerance: threads execute independently of each other, so when one thread exits because of an uncaught exception, the other threads are not affected.
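To make the first point concrete, here is a minimal sketch that compares running several blocking calls one after another with running them on a thread pool. The `slowCall` method is a hypothetical stand-in for a real I/O operation, and the 100 ms sleep is an arbitrary assumption.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class BlockingIoDemo {

    // Hypothetical stand-in for a blocking I/O call (RPC, database query, ...).
    static String slowCall(int id) throws InterruptedException {
        Thread.sleep(100);
        return "result-" + id;
    }

    // Runs the calls one after another on the calling thread.
    static List<String> runSerially(int n) throws Exception {
        List<String> results = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            results.add(slowCall(i));
        }
        return results;
    }

    // Submits all calls to a pool so that their waiting time overlaps (n must be > 0).
    static List<String> runConcurrently(int n) throws Exception {
        ExecutorService pool = Executors.newFixedThreadPool(n);
        try {
            List<Callable<String>> calls = new ArrayList<>();
            for (int i = 0; i < n; i++) {
                final int id = i;
                calls.add(() -> slowCall(id));
            }
            List<String> results = new ArrayList<>();
            // invokeAll returns the futures in the same order as the submitted calls.
            for (Future<String> future : pool.invokeAll(calls)) {
                results.add(future.get());
            }
            return results;
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        long t0 = System.nanoTime();
        runSerially(4);
        long t1 = System.nanoTime();
        runConcurrently(4);
        long t2 = System.nanoTime();
        System.out.printf("serial: %d ms, concurrent: %d ms%n",
                (t1 - t0) / 1_000_000, (t2 - t1) / 1_000_000);
    }
}
```

Both methods return the same results; the difference is only in how long the caller waits, since the concurrent version lets the blocked periods overlap.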

From a developer's point of view

- Java interview fundamentals: Java concurrency questions come up in almost every interview. Candidates with experience in large-scale projects who have dealt with many concurrency problems are often favored, because the more complex the system, the more concurrent requests it handles. Simple business logic plus concurrency is no longer simple.

- Concurrency is inseparable from daily work: multithreading lets us make full use of the CPU's computing power, which means we have to understand how concurrency works in order to avoid thread safety problems that cause losses in production. Commonly used middleware such as MQ and RPC frameworks relies heavily on concurrency. If you are not familiar with the principles, how can you tune that middleware?

Concurrent programming in business practice

Practice 1: Risk Control Rule Engine - Policy Execution

The risk control and security departments of Internet companies constantly have to fight the black and gray market (organized fraud and abuse) to protect the business from unnecessary economic losses. In the course of this fight, the risk control strategy team has developed a series of risk identification policies that detect whether the current business request contains a high-risk operation.

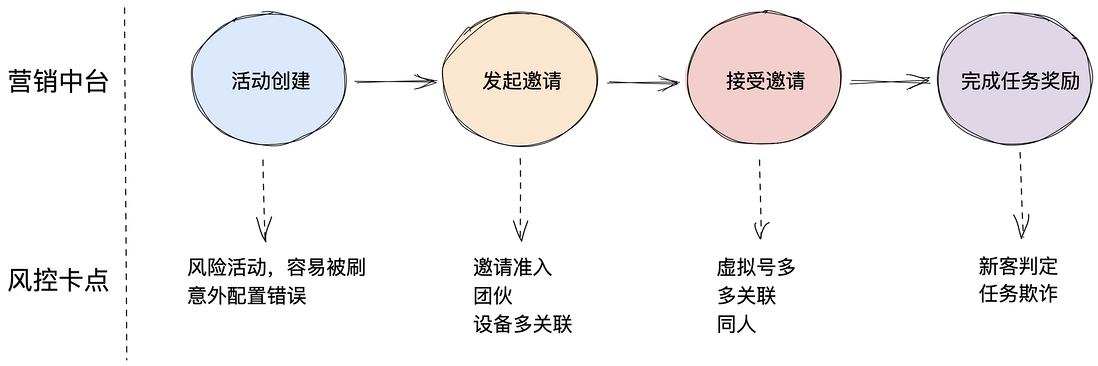

The risk control and security team needs to evaluate where in a business process the black market could profit, that is, the "risk checkpoints". After the evaluation, the business passes its request information through to the risk control service at each risk checkpoint in the process, and the risk control service returns a decision (for example, manual review when further information is needed to confirm, or REJECT for a high-risk operation). The figure shows the risk checkpoints of one kind of marketing activity, the fission campaign.

Risk checkpoints in the marketing fission process (diagram)

A service request generally takes between 300 and 500 ms. If it takes longer than that, you may need to find out which node is slow and tune it. The system architecture of large Internet companies is relatively complex: a complete business flow may involve dozens or even hundreds of services, and a request you trigger may pass through more services than you can imagine.

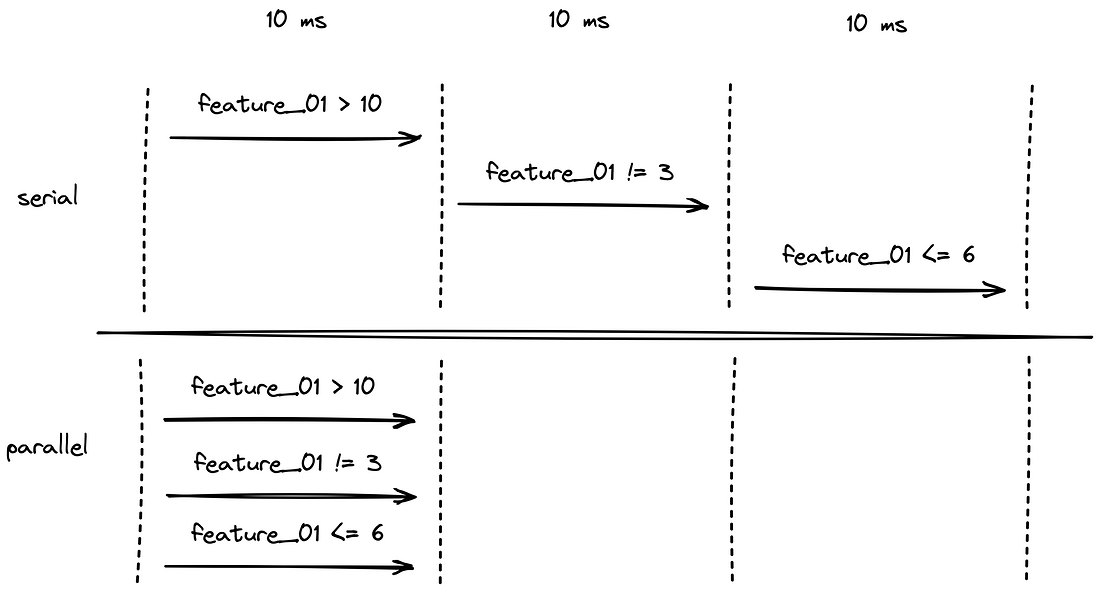

As mentioned above, the business has strict performance requirements for the risk control service, whose response time is generally kept within 100 ms. However, checking a request inside risk control involves a large number of policies and rules. How can they all be executed in such a short time without dropping any policies? The answer is concurrency. Concurrency is widely used in risk control to cope with massive request volumes and computing needs. I will use the execution of policy rules as an example of how to write the code. The following is the general execution flow of a request in the risk control service:

Risk control process execution diagram

It can be seen that the judgment of a risk control request involves a large number of rule judgments. If there is no concurrency at this time, what will happen?

Serial vs. parallel rule execution diagram

If all the policies and rules were executed serially, the evaluation might not finish even within a few seconds. This is where Java concurrency is needed to meet the performance requirements.

The core code is as follows:

    import java.util.List;
    import java.util.concurrent.*;

    public class RuleSessionExecutor {

        // Thread pool shared by all rule executions
        private final static ExecutorService executor = new ThreadPoolExecutor(
                Runtime.getRuntime().availableProcessors() * 8,
                Runtime.getRuntime().availableProcessors() * 8,
                0L, TimeUnit.MILLISECONDS,
                new LinkedBlockingQueue<Runnable>(),
                new CustomerThreadFactory("rule-executor"),
                new ThreadPoolExecutor.AbortPolicy());

        /**
         * Rule execution
         *
         * @param rules the rule sessions to execute
         */
        public void execute(List<CustomerSession> rules) {
            final CountDownLatch lock = new CountDownLatch(rules.size());
            for (CustomerSession session : rules) {
                // Submit each rule to the pool so that the rules run in parallel
                executor.execute(() -> {
                    try {
                        session.exec();
                    } catch (Throwable e) {
                        log.error("rule exec error", e);
                    } finally {
                        lock.countDown();
                    }
                });
            }
            try {
                // Wait at most 50 ms for all rules to finish
                lock.await(50, TimeUnit.MILLISECONDS);
            } catch (InterruptedException e) {
                log.error("CountDownLatch error", e);
            }
        }
    }

The CountDownLatch concurrency utility is used here; it is introduced later.
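For readers who want something they can run directly, here is a self-contained sketch of the same idea. It is not the production code: the `Rule` class, the rule names and the order-amount checks are hypothetical, chosen only to illustrate submitting each rule to a pool and waiting on a latch with a deadline.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.function.Predicate;

class ParallelRuleDemo {

    // Hypothetical rule: a name plus a check against the order amount.
    static final class Rule {
        final String name;
        final Predicate<Integer> check;

        Rule(String name, Predicate<Integer> check) {
            this.name = name;
            this.check = check;
        }
    }

    // Evaluates every rule on the pool and waits up to timeoutMs for all of them.
    // Rules that miss the deadline are simply absent from the returned map.
    static Map<String, Boolean> evaluate(List<Rule> rules, int amount,
                                         ExecutorService pool, long timeoutMs)
            throws InterruptedException {
        Map<String, Boolean> hits = new ConcurrentHashMap<>();
        CountDownLatch latch = new CountDownLatch(rules.size());
        for (Rule rule : rules) {
            pool.execute(() -> {
                try {
                    hits.put(rule.name, rule.check.test(amount));
                } catch (Throwable t) {
                    // A failing rule must not block the others; treat it as "no hit" here.
                    hits.put(rule.name, false);
                } finally {
                    latch.countDown();
                }
            });
        }
        latch.await(timeoutMs, TimeUnit.MILLISECONDS);
        return hits;
    }

    public static void main(String[] args) throws InterruptedException {
        ExecutorService pool = Executors.newFixedThreadPool(4);
        List<Rule> rules = Arrays.asList(
                new Rule("large-amount", amount -> amount > 1000),
                new Rule("negative-amount", amount -> amount < 0));
        System.out.println(evaluate(rules, 5000, pool, 50));
        pool.shutdown();
    }
}
```

The important detail is that `countDown()` sits in a `finally` block, so a rule that throws still releases its count and cannot keep the caller waiting until the timeout.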

Practice 2: Feature Loading of Risk Control Feature Platform

As in Practice 1, a large number of features must be available before a large number of rules can be executed. Fetching the features inside each rule is feasible, but features get fetched repeatedly, which wastes performance. For example, when the policy team runs an A/B test, the two policy packages share many features; if each rule fetches its own features, the shared features are fetched twice, which is unnecessary. Instead, before executing the rules we first collect the deduplicated set of features used by the current policy package, fetch them all, and only then execute the rules.
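The deduplication step itself is simple. A minimal sketch, assuming each rule just declares the names of the features it needs (the feature names below are made up for illustration):

```java
import java.util.Arrays;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

class FeatureDedupDemo {

    // Collects the distinct feature names required by all rules, keeping first-seen order.
    static Set<String> distinctFeatures(List<List<String>> featuresPerRule) {
        Set<String> distinct = new LinkedHashSet<>();
        for (List<String> features : featuresPerRule) {
            distinct.addAll(features);
        }
        return distinct;
    }

    public static void main(String[] args) {
        List<List<String>> featuresPerRule = Arrays.asList(
                Arrays.asList("orderAmount", "deviceId"),      // rule in policy package A
                Arrays.asList("orderAmount", "ipReputation")); // rule in policy package B
        System.out.println(distinctFeatures(featuresPerRule));
    }
}
```

Each feature in the resulting set then needs to be fetched only once, no matter how many rules use it.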

So the question now is: how do we fetch the features in batches?

Features come in several types:

- Input features: not time-consuming; carried by the request context, such as the order amount

- Derived features: basically not time-consuming; derived from input features, such as calculating a distance from latitude and longitude

- Real-time statistical features: basically not time-consuming; if you are interested, see my article "Flink implements real-time features in risk control scenarios" for a detailed introduction

- Query features: time-consuming; for example, fetching order details by order number through a business RPC interface costs both communication time and the business service's own processing time

- External features: time-consuming; for example, products of third-party risk control companies such as Tongdun and IPIP

Feature Synchronous Acquisition & Asynchronous Acquisition Comparison Chart

Obviously, we need concurrency to support performance. The core code is as follows:

    public class DataSourceExecutor {

        private final static ExecutorService executor = new ThreadPoolExecutor(
                Runtime.getRuntime().availableProcessors() * 8,
                Runtime.getRuntime().availableProcessors() * 8,
                0L, TimeUnit.MILLISECONDS,
                new LinkedBlockingQueue<Runnable>(),
                new HamThreadFactory("ds-executor"),
                new ThreadPoolExecutor.AbortPolicy());

        /**
         * Feature acquisition
         *
         * @param dataSources the data sources to query
         */
        public void execute(List<DataSource> dataSources) {
            List<CompletableFuture<Void>> futures = Lists.newArrayList();
            // Default overall timeout in ms; a data source may ask for a longer one
            long timeout = ApolloConfig.getLongProperty(ApolloConfigKey.DS_TIMEOUT_OUTER_KEY, 150L);
            for (DataSource ds : dataSources) {
                timeout = Math.max(timeout, ds.getExecutionTimeout());
                CompletableFuture<Void> future = CompletableFuture.runAsync(() -> {
                    ds.execute();
                }, executor);
                futures.add(future);
            }
            // Completes once every data source has finished
            CompletableFuture<Void> summaryFuture = CompletableFuture.allOf(futures.toArray(new CompletableFuture<?>[0]));
            try {
                summaryFuture.get(timeout, TimeUnit.MILLISECONDS);
            } catch (InterruptedException | ExecutionException | TimeoutException e) {
                log.error("DataSource exec error", e);
            }
        }
    }

Similar to the batch execution of rules, the CompletableFuture concurrency utility introduced in Java 8 is used here. It offers richer functionality and is introduced below.
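As a runnable illustration of the same pattern, the sketch below loads several features concurrently and keeps whatever finished before the deadline. The `fetch` method and the latency values are hypothetical stand-ins for real data sources; only `CompletableFuture` and the executor classes are real JDK APIs.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

class FeatureLoadDemo {

    // Hypothetical data source: sleeps for the given latency, then returns a value.
    static String fetch(String name, long latencyMs) {
        try {
            Thread.sleep(latencyMs);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while loading " + name, e);
        }
        return name + "-value";
    }

    // Starts all loads on the pool, waits up to timeoutMs, and returns the loaded features.
    static Map<String, String> loadAll(Map<String, Long> latencyMs, long timeoutMs,
                                       ExecutorService pool) {
        Map<String, CompletableFuture<String>> futures = new LinkedHashMap<>();
        latencyMs.forEach((name, latency) ->
                futures.put(name, CompletableFuture.supplyAsync(() -> fetch(name, latency), pool)));
        try {
            CompletableFuture.allOf(futures.values().toArray(new CompletableFuture<?>[0]))
                    .get(timeoutMs, TimeUnit.MILLISECONDS);
        } catch (TimeoutException | ExecutionException e) {
            // Some sources were too slow or failed; keep whatever finished in time.
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        Map<String, String> loaded = new LinkedHashMap<>();
        futures.forEach((name, future) -> {
            if (future.isDone() && !future.isCompletedExceptionally()) {
                loaded.put(name, future.join());
            }
        });
        return loaded;
    }

    public static void main(String[] args) {
        ExecutorService pool = Executors.newFixedThreadPool(4);
        Map<String, Long> latencyMs = new LinkedHashMap<>();
        latencyMs.put("orderAmount", 0L);
        latencyMs.put("orderDetail", 20L);
        latencyMs.put("thirdPartyScore", 2000L);
        System.out.println(loadAll(latencyMs, 500, pool).keySet());
        pool.shutdownNow(); // interrupt the load that is still running
    }
}
```

Returning partial results on timeout is a design choice made for this sketch; whether a missing feature should fail the request or fall back to a default depends on the business.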

Practice 3: Running batches of distributed tasks

Scheduled tasks are a very common requirement at work, for example order status flow checks and reconciliation. Such tasks are generally run in batches, that is, each task contains multiple subtasks. This scenario is well suited to concurrent execution with a thread pool and its task queue.

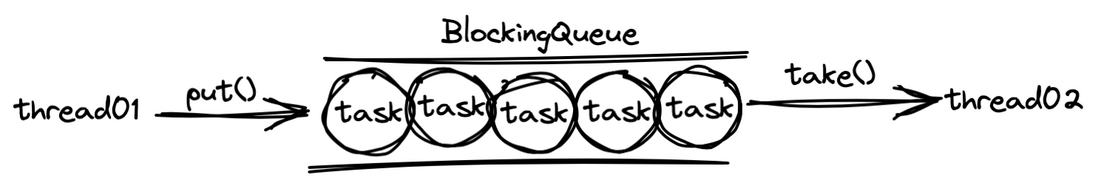

Task queue thread pool diagram

The core code is as follows:

    public void execute(List<Task> tasks) {
        tasks.forEach(t -> {
            executor.execute(() -> {
                try {
                    log.info("task id: {} begin exec", t.getId());
                    t.execute();
                } catch (Throwable e) {
                    log.error(String.format("task execute error, uid: %s", t.getId()), e);
                } finally {
                    log.info("task id: {} end exec", t.getId());
                }
            });
        });
    }

Common tools for concurrent programming

Thread Pool

Thread pool: a pattern for using threads. Too many threads introduce scheduling overhead, which in turn hurts cache locality and overall performance. A thread pool maintains multiple threads that wait for a supervising manager to assign them tasks that can be executed concurrently. This avoids the cost of creating and destroying threads for short-lived tasks. A thread pool can not only keep the cores fully utilized but also prevent over-scheduling. The number of available threads should depend on the number of available processors, processor cores, memory, network sockets, and so on [1].

Thread pool provided by JUC: the ThreadPoolExecutor class in java.util.concurrent (JUC) helps developers manage threads and execute tasks in parallel with little effort. Understanding thread pools and using them sensibly is a basic skill every developer must learn.
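As a small illustration, the sketch below builds a ThreadPoolExecutor with an explicit bounded queue, named threads and an explicit rejection policy. The helper name `newPool` and the concrete sizes are assumptions for the example, not recommended values.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.Callable;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class PoolFactoryDemo {

    // Builds a pool whose threads are named "<name>-1", "<name>-2", ...
    static ThreadPoolExecutor newPool(String name, int core, int max, int queueCapacity) {
        AtomicInteger sequence = new AtomicInteger();
        ThreadFactory factory = runnable ->
                new Thread(runnable, name + "-" + sequence.incrementAndGet());
        return new ThreadPoolExecutor(
                core, max,
                60L, TimeUnit.SECONDS,                    // idle time before extra threads are reclaimed
                new ArrayBlockingQueue<>(queueCapacity),  // bounded queue, so overload becomes visible
                factory,
                new ThreadPoolExecutor.AbortPolicy());    // reject loudly when saturated
    }

    public static void main(String[] args) throws Exception {
        ThreadPoolExecutor pool = newPool("rule-executor", 4, 8, 100);
        Callable<String> whoAmI = () -> Thread.currentThread().getName();
        System.out.println(pool.submit(whoAmI).get());
        pool.shutdown();
    }
}
```

Naming the threads pays off later: thread dumps and logs then show directly which pool a thread belongs to.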

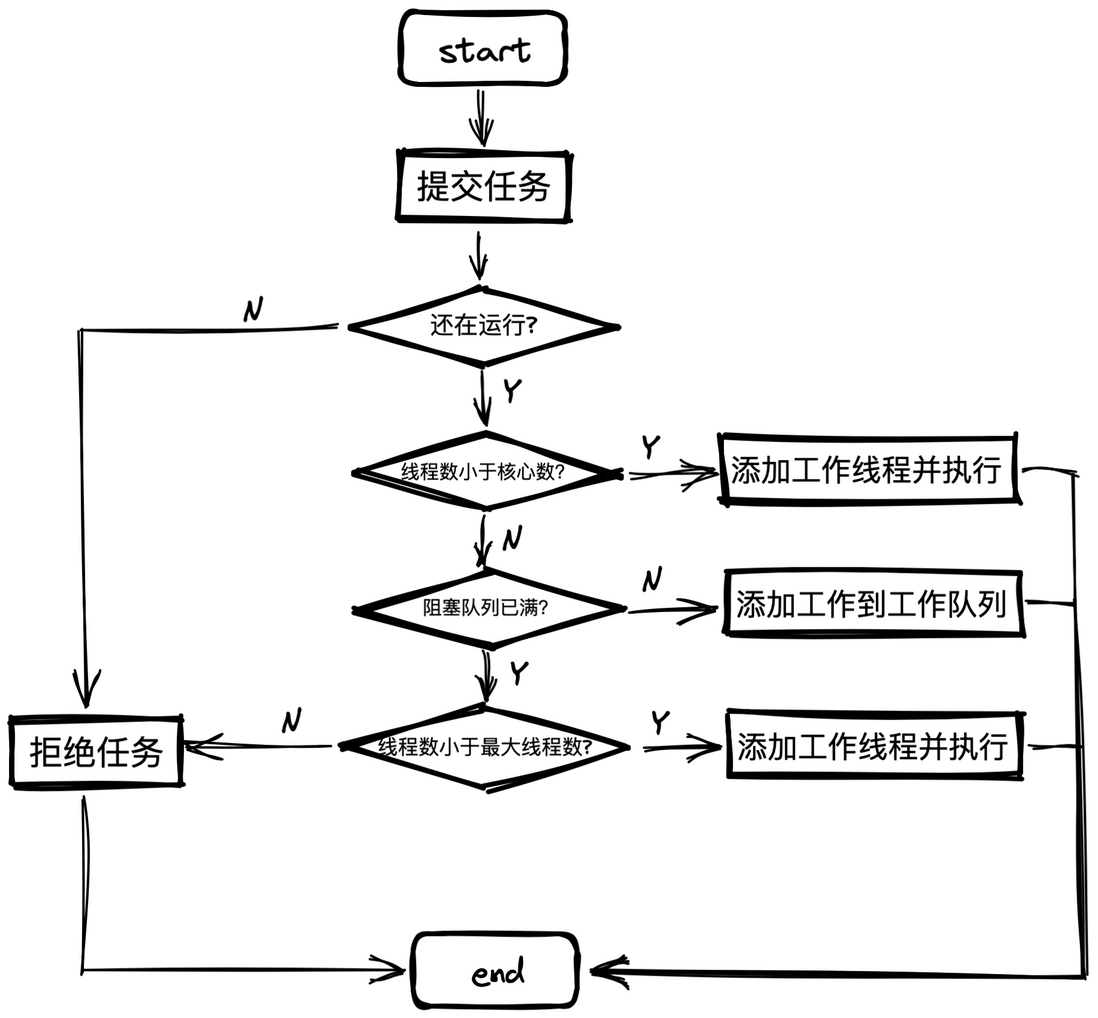

Task scheduling

When a user submits a task, the task's life cycle is controlled by the thread pool. Internally, the thread pool is built as a producer/consumer model: threads and tasks are decoupled, with no strong association between them, which makes it easy to buffer tasks and reuse threads. The first step in understanding a thread pool is to know how tasks are executed.

Task execution diagram

Task queue

The essence of a thread pool is the management of tasks and threads, and the key is to decouple the two so that they are not directly tied together, which allows work to be allocated sensibly later on. The thread pool implements the producer-consumer pattern through a blocking queue: the blocking queue buffers tasks, and worker threads take tasks from it.

A BlockingQueue adds two capabilities to a plain queue:

- When the queue is empty, a thread taking an element waits for the queue to become non-empty

- When the queue is full, a thread storing an element waits for space to become available

Blocking queues are often used in producer-consumer scenarios. The producer is the thread that adds elements to the queue, and the consumer is the thread that takes elements from it. The blocking queue is the container in which producers store elements and from which consumers take them.

Blocking queue diagram
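The two blocking behaviors described above can be seen in a minimal producer-consumer sketch. The small capacity and the `-1` end marker (a "poison pill") are choices made for this example only.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

class BlockingQueueDemo {

    private static final int END = -1; // marker telling the consumer to stop

    // Produces 1..items on one thread, consumes on the calling thread, returns the sum.
    static int produceAndConsume(int items, int capacity) throws InterruptedException {
        BlockingQueue<Integer> queue = new ArrayBlockingQueue<>(capacity);
        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= items; i++) {
                    queue.put(i);   // blocks while the queue is full
                }
                queue.put(END);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();

        int sum = 0;
        while (true) {
            int value = queue.take(); // blocks while the queue is empty
            if (value == END) {
                break;
            }
            sum += value;
        }
        producer.join();
        return sum;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(produceAndConsume(100, 2));
    }
}
```

With a capacity of 2 the producer is regularly forced to wait for the consumer, which is exactly the back-pressure a bounded queue provides inside a thread pool.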

The blocking queue can be selected from the following table:

| Queue | Characteristics |
|---|---|
| ArrayBlockingQueue | Bounded; array implementation; FIFO |
| LinkedBlockingQueue | Optionally bounded (the default capacity is Integer.MAX_VALUE, so an unchecked backlog can easily cause an out-of-memory error); linked list implementation; FIFO |
| PriorityBlockingQueue | Unbounded; binary heap implementation; elements are ordered by priority |
| DelayQueue | Similar to PriorityBlockingQueue (unbounded, ordered by expiry time); an element can only be taken from the queue once its delay has expired |
| SynchronousQueue | Stores no elements; each put operation must wait for a corresponding take operation |
| LinkedTransferQueue | Unbounded; linked list implementation; lock-free (CAS-based), so it usually performs better than LinkedBlockingQueue under contention |
| LinkedBlockingDeque | Double-ended counterpart of LinkedBlockingQueue; optionally bounded; linked list implementation; elements can be inserted and removed at both ends |

Task rejection

Task rejection is the self-protection (circuit-breaking) part of the thread pool. When the bounded task queue is full and the pool has reached its maximum number of threads, the thread pool is overloaded and cannot take on more work. New incoming tasks must then be rejected according to the configured rejection policy in order to protect the thread pool.

Users can choose one of the four rejection policies provided by the JDK, or implement the RejectedExecutionHandler interface themselves.

| Policy | Behavior |
|---|---|
| ThreadPoolExecutor.AbortPolicy() | Rejects the task and throws RejectedExecutionException; the default rejection policy; recommended for critical business, because the exception reveals the health of the thread pool |
| ThreadPoolExecutor.CallerRunsPolicy() | The thread that submitted the task runs it itself |
| ThreadPoolExecutor.DiscardOldestPolicy() | Discards the oldest queued task and resubmits the new task; risky and not recommended in production |
| ThreadPoolExecutor.DiscardPolicy() | Silently discards the task without throwing an exception; not recommended in production, because problems are hard to notice |
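To see a rejection policy in action, the following sketch saturates a deliberately tiny pool (one thread, one queue slot, both assumptions for the demo) and counts how many submissions AbortPolicy rejects.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class RejectionDemo {

    // Submits `tasks` blocking tasks to a pool with 1 thread and 1 queue slot
    // and returns how many of them were rejected by AbortPolicy.
    static int countRejections(int tasks) throws InterruptedException {
        CountDownLatch release = new CountDownLatch(1);
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1, 0L, TimeUnit.MILLISECONDS,
                new ArrayBlockingQueue<>(1),
                new ThreadPoolExecutor.AbortPolicy());
        int rejected = 0;
        try {
            for (int i = 0; i < tasks; i++) {
                try {
                    pool.execute(() -> {
                        try {
                            release.await(); // keep the worker busy until released
                        } catch (InterruptedException e) {
                            Thread.currentThread().interrupt();
                        }
                    });
                } catch (RejectedExecutionException e) {
                    rejected++; // thread busy and queue full: the pool protects itself
                }
            }
        } finally {
            release.countDown();
            pool.shutdown();
        }
        pool.awaitTermination(1, TimeUnit.SECONDS);
        return rejected;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("rejected: " + countRejections(5));
    }
}
```

The first task occupies the only thread, the second fills the only queue slot, and every further submission is rejected until the worker is released.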

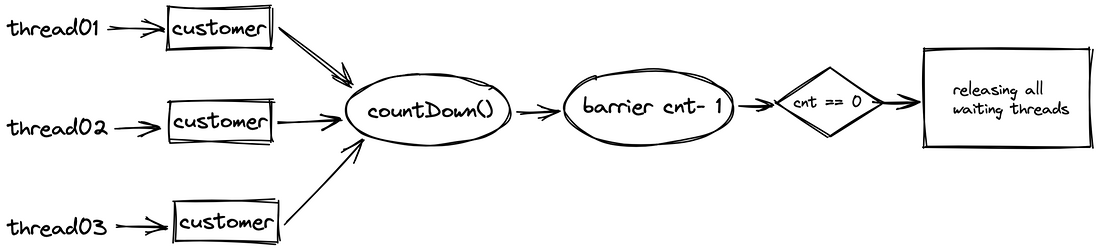

CountDownLatch - Synchronized Counter

CountDownLatch is implemented internally with a counter. The counter is initialized to the number of threads (tasks) to wait for. Each thread decrements the counter by one when it finishes. When the counter reaches 0, all the work has finished, and the waiting main thread is woken up to continue with the main-line task.

CountDownLatch flow chart

Common scenarios:

- One waits for N: combined with a thread pool to make use of the CPU's computing power. For example, a complex home-page feed can be split into modules whose data is loaded in parallel; once the count reaches 0, the assembled structured data is returned to the main thread

- First to finish: whichever thread finishes first immediately brings the count down to 0 and notifies the main thread to continue
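One possible reading of the second scenario is a latch with a count of 1: several sources race, and the first one to produce a result releases the waiting thread. The sketch below follows that reading; the method name `firstResult` and the use of `AtomicReference` to hold the winner are assumptions for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicReference;

class FirstResultDemo {

    // Runs all sources concurrently and returns the first result, or null on timeout.
    static String firstResult(List<Callable<String>> sources, long timeoutMs)
            throws InterruptedException {
        ExecutorService pool = Executors.newFixedThreadPool(sources.size());
        CountDownLatch done = new CountDownLatch(1); // a single count: the first finisher opens the latch
        AtomicReference<String> winner = new AtomicReference<>();
        try {
            for (Callable<String> source : sources) {
                pool.execute(() -> {
                    try {
                        String result = source.call();
                        if (winner.compareAndSet(null, result)) {
                            done.countDown();
                        }
                    } catch (Exception ignored) {
                        // A failed or interrupted source simply does not win.
                    }
                });
            }
            done.await(timeoutMs, TimeUnit.MILLISECONDS);
            return winner.get();
        } finally {
            pool.shutdownNow(); // interrupt the sources that lost the race
        }
    }

    public static void main(String[] args) throws InterruptedException {
        List<Callable<String>> sources = new ArrayList<>();
        sources.add(() -> { Thread.sleep(2000); return "slow"; });
        sources.add(() -> "fast");
        System.out.println(firstResult(sources, 1000));
    }
}
```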

Core code:

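    public void demo() {
        List<Task> tasks = ...
        // One count per task, so that await() can return as soon as every task has finished
        final CountDownLatch countDownLatch = new CountDownLatch(tasks.size());
        // executor is assumed to be a thread pool like the ones shown earlier
        tasks.forEach(task -> executor.execute(() -> {
            try {
                // Your own sub-thread logic
            } catch (Throwable e) {
                // Some failures are not caught by Exception, so catching Throwable is recommended
            } finally {
                countDownLatch.countDown();
            }
        }));
        try {
            countDownLatch.await(100, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            log.error("countDownLatch InterruptedException", e);
        }
    }

CompletableFuture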

Java 8 (1.8) introduced CompletableFuture to support asynchronous programming. CompletableFuture is remarkably powerful, and it is also one of the most complex classes I have ever seen.

Let's look at an example to get an intuitive feel for the power of CompletableFuture.

CompletableFuture example: scrambled eggs with tomatoes

    // Step 1: prepare the tomatoes
    CompletableFuture<String> f1 =
            CompletableFuture.supplyAsync(() -> {
                System.out.println("Wash the tomatoes");
                System.out.println("Cut them");
                return "sliced tomatoes";
            });

    // Step 2: prepare the eggs
    CompletableFuture<String> f2 =
            CompletableFuture.supplyAsync(() -> {
                System.out.println("Wash the eggs");
                System.out.println("Fry the eggs");
                return "fried eggs";
            });

    // Step 3: stir-fry once both ingredients are ready
    CompletableFuture<String> f3 =
            f1.thenCombine(f2, (__, tf) -> {
                System.out.println("Stir-fry");
                return "scrambled eggs with tomatoes";
            });

    // Wait for the result of task 3
    System.out.println(f3.join());

CompletableFuture aims to simplify the complex coordination logic between multiple threads. As shown above, the code contains only the business logic; the concurrency plumbing is neatly hidden behind lambda expressions. It is ideal for doing the hardest things with the least amount of code.

CompletableFuture is not described in detail here, because its implementation could not be covered fully even in a dedicated article. If you are interested, you can follow me.

Common concurrency problems and solutions

Deadlocks and how to locate them

Deadlock: when two or more computing units each wait for another to stop executing and release system resources, and none of them gives up first, the situation is called a deadlock [1].

The four conditions for deadlock are:

- No preemption: system resources cannot be forcibly taken away from a process.

- Hold and wait: a process can hold system resources while waiting for others.

- Mutual exclusion: a resource can be allocated to only one process at a time and cannot be shared by multiple processes.

- Circular wait: a chain of processes exists in which each process holds a resource needed by the next.
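A common way to prevent deadlock is to break the circular wait condition by always acquiring locks in the same global order. The sketch below shows this for a money transfer between two accounts; the `Account` class and the rule "lock the lower id first" are assumptions chosen for the example.

```java
class LockOrderingDemo {

    static final class Account {
        final int id;
        long balance;

        Account(int id, long balance) {
            this.id = id;
            this.balance = balance;
        }
    }

    // Always locks the account with the lower id first, so two opposite transfers
    // can never hold one lock each while waiting for the other (ids must differ).
    static void transfer(Account from, Account to, long amount) {
        Account first = from.id < to.id ? from : to;
        Account second = first == from ? to : from;
        synchronized (first) {
            synchronized (second) {
                from.balance -= amount;
                to.balance += amount;
            }
        }
    }

    // Runs opposite transfers on two threads and returns the final balances.
    static long[] run(int rounds) throws InterruptedException {
        Account a = new Account(1, 1000);
        Account b = new Account(2, 1000);
        Thread t1 = new Thread(() -> {
            for (int i = 0; i < rounds; i++) transfer(a, b, 1);
        });
        Thread t2 = new Thread(() -> {
            for (int i = 0; i < rounds; i++) transfer(b, a, 1);
        });
        t1.start();
        t2.start();
        t1.join();
        t2.join();
        return new long[] {a.balance, b.balance};
    }

    public static void main(String[] args) throws InterruptedException {
        long[] balances = run(100_000);
        System.out.println(balances[0] + " " + balances[1]);
    }
}
```

If `transfer` locked `from` and then `to` instead, the two threads could each grab one account and wait for the other forever.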

Locating a deadlock

    jps
    jstack pid

    // Excerpt from the output above
    Found one Java-level deadlock:
    =============================
    "Thread-1":
      waiting to lock monitor 0x00007fcc68023f58 (object 0x0000000795ea0c00, a java.lang.Object),
      which is held by "Thread-0"
    "Thread-0":
      waiting to lock monitor 0x00007fcc68022ab8 (object 0x0000000795ea0c10, a java.lang.Object),
      which is held by "Thread-1"

jps lists the running Java processes, and jstack pid then prints the thread information of the chosen process. A deadlock report clearly appears at the bottom of the output. Using the thread names and the monitor addresses in the report (such as 0x00007fcc68023f58), you can find the corresponding threads in the dump and analyze which piece of code caused the problem.
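Deadlocks can also be detected from inside the JVM with the standard `ThreadMXBean` management API. The sketch below deliberately creates a deadlock between two daemon threads and then polls for it; the thread names and the polling interval are arbitrary choices for the demo, and the two threads stay deadlocked until the JVM exits.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadMXBean;
import java.util.concurrent.CountDownLatch;

class DeadlockDetectDemo {

    // Starts a daemon thread that takes `first`, waits until both threads hold
    // their first lock, and then tries to take `second`.
    static void startDaemon(String name, Object first, Object second, CountDownLatch bothHoldFirst) {
        Thread thread = new Thread(() -> {
            synchronized (first) {
                bothHoldFirst.countDown();
                try {
                    bothHoldFirst.await();
                } catch (InterruptedException e) {
                    return;
                }
                synchronized (second) {
                    // Never reached: the other thread holds `second`.
                }
            }
        }, name);
        thread.setDaemon(true); // do not keep the JVM alive
        thread.start();
    }

    // Creates a deadlock on purpose and returns how many deadlocked threads were found.
    static int createAndDetect() throws InterruptedException {
        Object lockA = new Object();
        Object lockB = new Object();
        CountDownLatch bothHoldFirst = new CountDownLatch(2);
        startDaemon("demo-deadlock-0", lockA, lockB, bothHoldFirst);
        startDaemon("demo-deadlock-1", lockB, lockA, bothHoldFirst);

        ThreadMXBean threads = ManagementFactory.getThreadMXBean();
        for (int attempt = 0; attempt < 100; attempt++) {
            long[] ids = threads.findDeadlockedThreads(); // null when no deadlock is found
            if (ids != null) {
                return ids.length;
            }
            Thread.sleep(50);
        }
        return 0;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("deadlocked threads: " + createAndDetect());
    }
}
```

A check like this could run periodically in a monitoring thread and raise an alert, complementing the manual jstack analysis.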

Performance tuning

For concurrent, multithreaded programming in Java, our tool of choice is the thread pool. The core problem with thread pools is that their parameters are hard to configure!

Have you ever estimated the core and maximum thread counts of a thread pool from experience, only to find the estimate inaccurate or simply wrong once the service was online? In fact, there is no general formula for sizing a thread pool, because your service is not the only one on the machine, and a service usually has more than one thread pool. Estimating purely from whether the workload is I/O-intensive or CPU-intensive inevitably leads to painful rounds of repeated tuning.

So can we reduce the cost of modifying thread pool parameters, so that at least when a failure occurs we can adjust quickly and shorten the recovery time?

This article does not go into the architecture of dynamic thread pools. If you are interested, you can follow me; I will publish articles on it later. Only the general idea is given here:

- Dynamic parameter adjustment: support changing the core thread pool parameters (core and maximum pool size) at runtime

- Task monitoring: mainly monitor the backlog in the blocking queue and the P95-P99 latency of individual task executions

- Alerting: warn in time when pressure builds up so that it can be corrected

- Operation notification and authorization: operations on production are dangerous and require caution
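The first item builds on the fact that ThreadPoolExecutor allows its sizes to be changed at runtime through `setCorePoolSize` and `setMaximumPoolSize`. Below is a minimal sketch of a resize helper; the helper itself and the way new values would arrive (for example from a configuration center) are assumptions, not part of any particular framework.

```java
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class DynamicPoolDemo {

    // Applies new sizes to a running pool. The setters validate core <= max
    // (at least in recent JDKs), so the order of the two calls matters.
    static void resize(ThreadPoolExecutor pool, int core, int max) {
        if (core < 0 || max <= 0 || core > max) {
            throw new IllegalArgumentException("invalid sizes: core=" + core + ", max=" + max);
        }
        if (max >= pool.getMaximumPoolSize()) {
            // Growing: raise the ceiling first, then the core size.
            pool.setMaximumPoolSize(max);
            pool.setCorePoolSize(core);
        } else {
            // Shrinking: lower the core size first, then the ceiling.
            pool.setCorePoolSize(core);
            pool.setMaximumPoolSize(max);
        }
    }

    public static void main(String[] args) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                2, 4, 60L, TimeUnit.SECONDS, new LinkedBlockingQueue<>(100));
        resize(pool, 8, 16);
        System.out.println(pool.getCorePoolSize() + "/" + pool.getMaximumPoolSize());
        resize(pool, 1, 2);
        System.out.println(pool.getCorePoolSize() + "/" + pool.getMaximumPoolSize());
        pool.shutdown();
    }
}
```

In a real system the call to `resize` would sit behind the notification and authorization steps listed above, since it changes production behavior immediately.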

Comprehensive monitoring

No system can run without monitoring; the only question is the granularity. In concurrent scenarios we especially need to monitor the state of the service's threads, in particular important indicators such as the number of active threads, the queue backlog length, the average task duration, and the throughput. When an alert fires, the responsible developers can be notified in time to degrade the service, or a circuit breaker can trip automatically to protect the main service.
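Most of these indicators can be read directly from a ThreadPoolExecutor. The sketch below takes a snapshot of a pool and checks the backlog against a threshold; the output format and the threshold check are assumptions for illustration, and a real setup would export the values to a metrics system instead of printing them.

```java
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class PoolMetricsDemo {

    // Formats the pool's current state; the counts are approximate snapshots.
    static String snapshot(ThreadPoolExecutor pool) {
        return String.format("active=%d poolSize=%d queued=%d completed=%d",
                pool.getActiveCount(), pool.getPoolSize(),
                pool.getQueue().size(), pool.getCompletedTaskCount());
    }

    // True when the number of waiting tasks has reached the alert threshold.
    static boolean backlogExceeds(ThreadPoolExecutor pool, int threshold) {
        return pool.getQueue().size() >= threshold;
    }

    static void sleepQuietly(long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                2, 2, 0L, TimeUnit.MILLISECONDS, new LinkedBlockingQueue<>(100));
        for (int i = 0; i < 10; i++) {
            pool.execute(() -> sleepQuietly(50));
        }
        System.out.println(snapshot(pool));
        pool.shutdown();
        pool.awaitTermination(5, TimeUnit.SECONDS);
        System.out.println(snapshot(pool));
    }
}
```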

Previous articles

- Performance tuning - a small log is a big pit

- Performance Optimization Essentials - Flame Graph

- Flink implements real-time features in risk control scenarios

You are welcome to follow the official account: Gugu Chicken Technical Column. Personal technical blog: https://jifuwei.github.io/

