Abstract: Based on a survey of existing deep-learning-based anomaly detection algorithms, this article introduces the main families of methods and offers a rough forecast of how deep anomaly detection is likely to develop.

This article is shared from the Huawei Cloud Community post "[Paper Reading] Anomaly Detection Algorithm and Development Trend Analysis"; original author: MUR11.

Anomaly detection is an important problem in many practical application scenarios. The sections below cover where it is applied, the common types of anomaly, the existing deep anomaly detection algorithms, and the likely direction of future work.

1. Anomaly detection application scenarios

Anomaly detection has many applications in production and daily life. For example: finding potential fraud or cash-out records in credit card transaction records, finding traffic violators in traffic surveillance video, finding diseased tissue in medical images, finding intruders in a network, and finding abnormal transmissions in signals sent by Internet of Things devices.

At present, most anomaly detection scenarios depend heavily on manual work and require a large amount of labor. As the population ages and the working population shrinks, replacing manual anomaly detection with algorithms is a general trend.

2. Common anomaly types

Common anomaly types can be divided into three categories:

- Point anomaly: a single sample/sampling point clearly deviates from the distribution of all other samples/sampling points. For example: in credit card transaction records, if a user's daily transactions are all small, a sudden large transaction is a point anomaly.

- Conditional anomaly: the joint distribution of a single sample/sampling point and some other condition deviates significantly from that of the other samples/sampling points. For example: the temperature of a certain place ranges from -10 degrees to 40 degrees over a year, and normally the daily temperature falls within this range. If the temperature reaches -5 degrees on a summer day, it is still within the yearly range, but it is clearly abnormal given the season. This is a conditional anomaly.

- Group anomaly: each sample/sampling point is normal on its own, but a set of samples/sampling points is abnormal as a whole. For example: taking credit card transaction records again, suppose a user's daily transactions are all small, and on a certain day 10 small transactions suddenly appear, each for the same amount. Every single transaction matches the user's habits, but the 10 transactions taken together are abnormal. This is a group anomaly.
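
As a minimal sketch of the credit card example above, the following code checks each transaction on its own and then checks one day's transactions as a group. The amount limit, the per-day limit on identical amounts, and the function names are hypothetical values chosen only for illustration.

```python
from collections import Counter

# Hypothetical limits, chosen only for illustration.
MAX_NORMAL_AMOUNT = 100.0    # a single transaction above this is a point anomaly
MAX_IDENTICAL_PER_DAY = 3    # more identical amounts than this in one day is suspicious


def is_point_anomaly(amount):
    """Check one transaction in isolation."""
    return amount > MAX_NORMAL_AMOUNT


def is_group_anomaly(amounts):
    """Check one day's transactions as a whole: too many identical amounts."""
    if not amounts:
        return False
    most_common_count = Counter(amounts).most_common(1)[0][1]
    return most_common_count > MAX_IDENTICAL_PER_DAY


# Ten small, identical transactions on the same day.
day = [20.0] * 10
any_point = any(is_point_anomaly(a) for a in day)  # no single transaction stands out
group = is_group_anomaly(day)                      # but the day as a whole does
```

Here no individual transaction is flagged, while the group check flags the day, which is the pattern the group anomaly definition describes.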

Of these three types, a conditional anomaly is equivalent to a point anomaly after a suitable transformation, so in practice the common anomaly types reduce to point anomalies and group anomalies. Current research and industrial applications focus mainly on point anomaly detection, and the rest of this article also focuses on point anomaly detection algorithms.
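
One way to picture this conversion, using the temperature example, is to subtract a per-season reference value so that the condition is removed and a plain point check can be applied to the residual. The sketch below uses made-up temperatures, the per-season median as the reference, and a hypothetical threshold; it is an illustration, not a prescribed method.

```python
import numpy as np

# Made-up daily temperatures (degrees) with a season label for each day.
seasons = np.array(["winter"] * 5 + ["summer"] * 6)
temps = np.array([-8.0, -5.0, -2.0, -6.0, -4.0,
                  30.0, 33.0, 35.0, 31.0, 34.0, -5.0])


def seasonal_residuals(temps, seasons):
    """Subtract the per-season median so each value is relative to its season."""
    residuals = np.empty_like(temps)
    for season in np.unique(seasons):
        mask = seasons == season
        residuals[mask] = temps[mask] - np.median(temps[mask])
    return residuals


# A global range check on the raw values sees nothing wrong.
in_global_range = (temps >= -10.0) & (temps <= 40.0)

# After conditioning on the season, a simple point check is enough.
THRESHOLD = 15.0  # hypothetical, in degrees
flags = np.abs(seasonal_residuals(temps, seasons)) > THRESHOLD
```

Every value passes the global range check, but only the -5 degree summer day is flagged after the seasonal transformation.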

3. Anomaly detection algorithms

Current mainstream anomaly detection algorithms are usually based on deep learning. According to the supervision information they use, they can be divided into supervised, unsupervised, and semi-supervised methods. There are also anomaly detection algorithms that combine deep learning with traditional non-deep techniques. These methods are introduced in turn below.

3.1 Deep supervised methods

Deep supervised learning is currently the most thoroughly researched and most widely used form of deep learning. Using it for anomaly detection involves three stages: data collection, model training, and model inference:

- Data collection: collect normal samples and abnormal samples, and label them;

- Model training: train the model on the labeled samples. Taking image-based anomaly detection as an example, common models include image classification models (judge whether a sample is abnormal), object detection models (roughly locate the anomaly in the image), and semantic segmentation models (precisely locate the abnormal region in the image);

- Model inference: feed the sample to be analyzed to the model, which computes and outputs the result.

This type of method is simple to implement and highly accurate, but it requires collecting and labeling a large number of both normal and abnormal samples. In practice, abnormal samples are often scarce and it is usually hard to collect enough of them to train the model, which makes deep supervised anomaly detection difficult to apply.
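
The three stages can be sketched on synthetic data as follows. To keep the example short and self-contained, a logistic regression trained by gradient descent stands in for the deep classification model; the data, learning rate, and step count are all assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Data collection: labeled synthetic samples (0 = normal, 1 = abnormal).
# Abnormal samples are deliberately scarce, as is common in practice.
normal = rng.normal(loc=0.0, scale=1.0, size=(200, 2))
abnormal = rng.normal(loc=4.0, scale=1.0, size=(20, 2))
X = np.vstack([normal, abnormal])
y = np.concatenate([np.zeros(200), np.ones(20)])


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-np.clip(z, -30.0, 30.0)))


def train(X, y, lr=0.1, steps=500):
    """Model training: logistic regression as a stand-in for a deep classifier."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(steps):
        grad = sigmoid(X @ w + b) - y        # gradient of the cross-entropy loss
        w -= lr * (X.T @ grad) / len(y)
        b -= lr * grad.mean()
    return w, b


def predict(X, w, b):
    """Model inference: 1 means the sample is judged abnormal."""
    return (sigmoid(X @ w + b) >= 0.5).astype(int)


w, b = train(X, y)
accuracy = (predict(X, w, b) == y).mean()
```

With only 20 abnormal samples against 200 normal ones, a model that always answers "normal" would already be right about 91% of the time, which is one reason accuracy alone can be misleading when abnormal samples are scarce.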

3.2 Deep unsupervised methods

To cope with the difficulty of collecting enough abnormal samples in practice, deep unsupervised anomaly detection methods detect anomalies indirectly by modeling normal samples. Specifically, the steps are as follows:

- Data collection: collect a large number of normal samples;

- Model training: train a model to capture the distribution of the normal samples. Commonly used models include autoencoders and generative adversarial networks;

- Model inference: feed the sample to be analyzed to the model and obtain the model's output;

- Comparison: compute the difference between the sample to be analyzed and the model's output, and judge whether there is an anomaly based on a preset threshold.

This is currently the mainstream way of applying deep learning to anomaly detection; the two representative models are autoencoders and generative adversarial networks.

3.2.1 Autoencoder

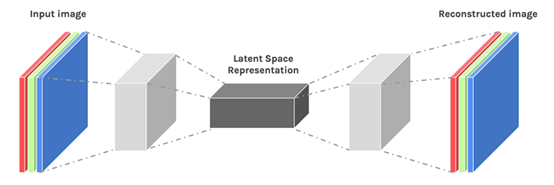

Taking image anomaly detection as the example again, detecting anomalies in an image with an autoencoder works as follows:

- Data collection: Collect a large number of normal pictures;

- Model training: The model structure is shown in the figure above [1]. During training, the input of the model is a normal picture, and the goal is to make the output of the model as similar as possible to the input. Commonly used loss functions include pixel-level L2 loss, L1 loss, and SSIM loss. The assumption behind this type of method is that, because the model has only seen normal pictures during training, it tends to reconstruct any input into a normal picture, so the reconstruction of an abnormal picture will differ noticeably from the input picture;

- Model inference: The picture to be analyzed is fed to the model, which outputs a reconstructed image;

- Comparison: Compute the pixel-level reconstruction error between the input image and the output image (usually with L2 loss or SSIM loss) and compare it with a preset threshold. If the reconstruction error is less than the threshold, the image is judged to have no anomaly; otherwise it is judged to contain an anomaly.
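
The reconstruct-and-compare procedure above can be sketched without a deep learning framework. In the example below, a linear autoencoder fitted by PCA stands in for the trained autoencoder, the "pictures" are synthetic 64-pixel vectors, and the threshold rule (three times the largest training error) is a hypothetical choice rather than a recommended one.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic "normal pictures": 64-pixel vectors close to a 4-dimensional subspace.
BASIS = rng.normal(size=(4, 64))


def make_normal(n):
    codes = rng.normal(size=(n, 4))
    return codes @ BASIS + 0.01 * rng.normal(size=(n, 64))


def fit_linear_autoencoder(X, k):
    """Fit a k-dimensional linear encoder/decoder (PCA) on normal samples only."""
    mean = X.mean(axis=0)
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, vt[:k]


def reconstruction_error(x, mean, components):
    """Pixel-level L2 (mean squared) error between the input and its reconstruction."""
    code = (x - mean) @ components.T      # encode
    recon = code @ components + mean      # decode
    return np.mean((x - recon) ** 2, axis=-1)


train = make_normal(500)
mean, components = fit_linear_autoencoder(train, k=4)

# Hypothetical threshold rule: a multiple of the largest error seen on training data.
threshold = 3.0 * reconstruction_error(train, mean, components).max()


def is_anomalous(x):
    return reconstruction_error(x, mean, components) > threshold


normal_test = make_normal(20)
defect = normal_test[0].copy()
defect[10:15] += 3.0                      # simulate a local defect in five pixels
```

Calling `is_anomalous(normal_test)` and `is_anomalous(defect)` applies the comparison step: the model cannot reproduce the defect because it never saw anything like it during fitting, so the error for `defect` is far larger than for unseen normal samples.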

3.2.2 Generative adversarial network

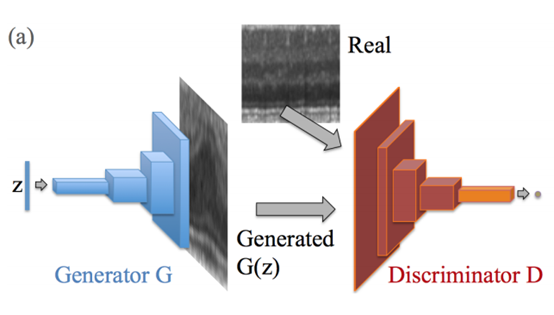

When using the generative adversarial network model to detect anomalies in an image, the process is as follows:

- Data collection: Collect a large number of normal pictures;

- Model training: The model structure is shown in the figure above [2]. During training, the input of the model is a normal picture, and the goal is for the pictures generated by the model to follow the same distribution as real normal pictures. Training involves two sub-models, the generator and the discriminator. The discriminator tries to tell generated pictures from real ones as accurately as possible, while the generator tries to produce pictures realistic enough that the discriminator cannot tell them apart;

- Model inference: Through a specific procedure, the generator produces a picture that is as similar as possible to the picture to be analyzed. For example: use gradient backpropagation to iteratively update the generator's input variable z until the similarity between the generator's output and the image to be analyzed is maximized;

- Comparison: Compute the pixel-level difference between the image to be analyzed and the generated image (usually with L2 loss or SSIM loss) and compare it with a preset threshold. If the difference is less than the threshold, the image is judged to have no anomaly; otherwise it is judged to contain an anomaly.
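
The inference step, searching for the latent variable z whose generated output best matches the image, can be sketched with a toy generator. Below, a fixed linear map `generator(z) = W @ z + b` stands in for a trained GAN generator so that the gradient can be written by hand; `W`, `b`, the step size, and the step count are assumptions for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for a trained generator: a fixed linear map from a 2-d latent to 16 "pixels".
W = rng.normal(size=(16, 2))
b = rng.normal(size=16)


def generator(z):
    return W @ z + b


def search_latent(x, steps=200):
    """Iteratively update z by gradient descent on ||generator(z) - x||^2."""
    z = np.zeros(W.shape[1])
    lr = 0.5 / np.linalg.norm(W, 2) ** 2      # step size from the largest singular value
    for _ in range(steps):
        residual = generator(z) - x
        z -= lr * 2.0 * (W.T @ residual)      # gradient of the squared error w.r.t. z
    return z


def anomaly_score(x):
    """Pixel-level mean squared difference between x and its closest generated image."""
    z = search_latent(x)
    return np.mean((generator(z) - x) ** 2)


x_normal = generator(np.array([0.5, -1.0]))   # an image the generator can produce
x_abnormal = x_normal.copy()
x_abnormal[3] += 5.0                          # a change the generator cannot reproduce

score_normal = anomaly_score(x_normal)
score_abnormal = anomaly_score(x_abnormal)
```

With a real generator the gradient would come from automatic differentiation rather than a closed form, but the loop has the same shape: the generator's weights stay fixed and only z is updated.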

3.2.3 Summary

The two subsections above briefly introduced the implementation steps of two typical deep unsupervised anomaly detection algorithms. As these steps show, an unsupervised anomaly detection algorithm usually judges whether there is a fault by computing the difference between the reconstructed or generated image and the actual image. This approach needs no abnormal samples and therefore fits real scenarios better, but it has an obvious weakness: poor robustness to noise. Pixel-level differences are not necessarily caused by actual faults; they may also be caused by harmless interference such as stains, and deep unsupervised anomaly detection algorithms cannot tell the two apart. As a result, these algorithms usually produce more false positives in practice.

3.3 Deep semi-supervised methods

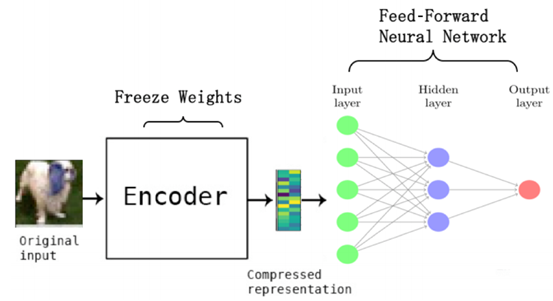

In practice, a small number of abnormal samples can sometimes be collected. To make full use of these samples and improve detection accuracy, researchers have proposed deep semi-supervised anomaly detection algorithms. The main steps of this type of algorithm are as follows:

- Data collection: Collect a large number of pictures, including both normal pictures and abnormal pictures;

- Model training: The model structure is shown in the figure above [3]. During training, the model is not told which pictures are normal and which are abnormal, so it is not trained in a supervised way; instead, the loss of the traditional non-deep One-class SVM is carried over to the deep learning model;

- Model inference: Feed the image to be analyzed to the model, which computes a single value as output. Compare the output value with a preset threshold: if it is greater than the threshold, the image is judged abnormal; otherwise it is judged normal.

The advantages of this type of method are simple data collection and end-to-end learning of both the representation and the classifier. However, training often requires trying a large number of parameter settings and takes a long time.
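
To give a feel for what carrying the One-class SVM loss over to a network can look like, the sketch below computes a hinge-style one-class objective on a batch of network outputs. It is written in the spirit of [3] but is not taken from it: only the data term is shown, weight regularization is omitted, and the names `r` (the learned margin) and `nu` (roughly the fraction of training samples allowed to fall below the margin) are assumptions. The score is defined so that larger means more abnormal, matching the inference step above.

```python
import numpy as np


def one_class_loss(outputs, r, nu):
    """Hinge-style one-class objective on network outputs (data term only).

    outputs: network outputs for a training batch, shape (N,)
    r:       scalar margin, learned together with the network
    nu:      value in (0, 1], controls how many samples may fall below r
    """
    hinge = np.maximum(0.0, r - outputs)
    return hinge.mean() / nu - r


def anomaly_score(outputs, r):
    """How far each output falls below the margin; larger means more abnormal."""
    return r - outputs


# Hypothetical outputs for four samples; the last one falls below the margin.
outputs = np.array([2.0, 1.5, 1.8, 0.2])
r = 1.0

loss = one_class_loss(outputs, r, nu=0.5)
is_abnormal = anomaly_score(outputs, r) > 0.0   # threshold of 0 on the score
```

In an actual model the outputs would come from the network and the loss would be minimized over both the network weights and `r`, which is where the long training time and the sensitivity to settings such as `nu` come in.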

3.4 Deep/non-deep hybrid methods

In addition to the anomaly detection algorithms above, which are based entirely on deep learning, recent years have also seen methods that combine deep learning with traditional non-deep anomaly detection methods, as shown in the figure below. In this type of method, the deep learning model serves only as a feature extractor, and the core task of identifying anomalies is carried out by a traditional anomaly detection algorithm.

The advantage of this type of method is that different deep learning models and non-deep anomaly detection algorithms can be combined flexibly. The disadvantage is that feature representation learning and the anomaly decision are separate processes, so the decision step cannot feed back into representation learning. The features extracted by the deep learning model may not characterize anomalies well, and may not suit the downstream anomaly detection algorithm.
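
The two-stage structure can be sketched as follows. A fixed random projection followed by a ReLU is a hypothetical stand-in for a frozen, pretrained deep feature extractor, and a k-nearest-neighbor distance is used as the traditional detector; the data and all parameter values are made up for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in weights for a frozen feature extractor (not a real pretrained network).
PROJECTION = rng.normal(size=(32, 8))


def extract_features(x):
    """Stage 1: map raw inputs to features. The extractor is never updated."""
    return np.maximum(0.0, x @ PROJECTION)


def knn_score(train_feats, query_feats, k=5):
    """Stage 2: traditional detector, mean distance to the k nearest normal features."""
    diffs = query_feats[:, None, :] - train_feats[None, :, :]
    dists = np.linalg.norm(diffs, axis=-1)            # shape (n_query, n_train)
    return np.sort(dists, axis=1)[:, :k].mean(axis=1)


# Fit the detector on features of normal samples only.
normal_train = rng.normal(size=(300, 32))
train_feats = extract_features(normal_train)

normal_query = rng.normal(size=(50, 32))
abnormal_query = 10.0 * rng.normal(size=(50, 32))     # much larger amplitude than normal

scores_normal = knn_score(train_feats, extract_features(normal_query))
scores_abnormal = knn_score(train_feats, extract_features(abnormal_query))
```

Because `extract_features` is fixed, swapping in another detector only changes stage 2. The same separation is the weakness described above: nothing learned in stage 2 can improve the features.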

4. Development trends

This section draws on the previous content to summarize several valuable research directions in anomaly detection and gives a rough forecast of future research trends:

- Deep supervised methods: Current deep supervised anomaly detection algorithms are highly accurate, but they depend heavily on large numbers of labeled normal and abnormal samples, so it is hard to find scenarios where they apply in practice. To widen their applicability, the key challenge is obtaining models that generalize well from a small number of samples.

- Deep unsupervised methods: Current deep unsupervised anomaly detection algorithms place low demands on data and apply to a wide range of scenarios. However, because they are easily disturbed by noise, they tend to raise many false alarms in practice, which hurts the user experience. Modeling or suppressing noise interference is therefore the focus of future research on this type of method.

- Group anomaly detection: Group anomaly detection is a research direction that has only emerged in recent years and is still in its infancy. Its future directions and methods remain highly uncertain, but it is expected to become one of the active research directions.

References

[1] Paul Bergmann, Sindy Loewe, Michael Fauser, David Sattlegger, Carsten Steger. Improving Unsupervised Defect Segmentation by Applying Structural Similarity To Autoencoders. arXiv 2019.

[2] Thomas Schlegl, Philipp Seeböck, Sebastian M. Waldstein, Ursula Schmidt-Erfurth, Georg Langs. Unsupervised Anomaly Detection with Generative Adversarial Networks to Guide Marker Discovery. arXiv 2017.

[3] Raghavendra Chalapathy. Anomaly Detection Using One-Class Neural Networks. arXiv 2019.
