Instance segmentation is one of the basic problems in computer vision. Although there have been many researches on instance segmentation in static images, there are relatively few researches on video instance segmentation (VIS). Whatever the real-world camera receives, regardless of the surrounding scenes perceived by the vehicle in real-time under the background of autonomous driving, or long and short videos in network media, most of them are video stream information rather than pure image information. Therefore, the research is of great significance to the video modeling model. This article is an interpretation of a paper published by the unmanned distribution team of Meituan in CVPR 2021.

Preface

Instance segmentation is one of the basic problems in computer vision. At present, the industry of instance segmentation in static images has conducted a lot of research, but there are relatively few researches on video instance segmentation (VIS for short). However, the real-world cameras receive, whether it is the surrounding scenes real-time perceived by the vehicle under the background of autonomous driving, or the long and short videos in network media, most of them are video stream information rather than pure image information. Therefore, the research is of great significance to the video modeling model. This article is an interpretation of an Oral paper published by the Meituan unmanned distribution team in CVPR2021: " End-to-End Video Instance Segmentation with Transformers ". This year's CVPR conference received a total of 7015 valid submissions, and finally a total of 1663 papers were accepted. The acceptance rate of papers was 23.7%, and the acceptance rate of Oral was only 4%.

background

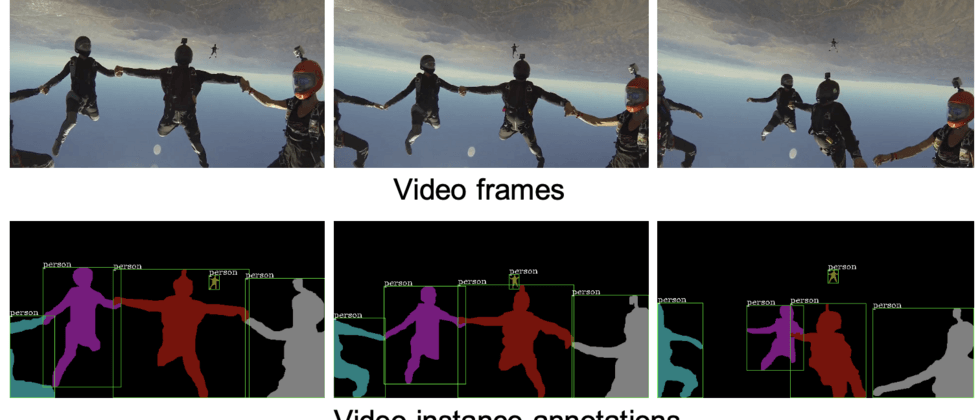

Image instance segmentation refers to the task of detecting and segmenting objects of interest in static images. Video is an information carrier that contains multiple frames of images. Compared with static images, video has richer information, so modeling is more complicated. Unlike static images that only contain spatial information, videos also contain time-dimensional information, which is closer to the portrayal of the real world. Among them, video instance segmentation refers to the task of detecting, segmenting and tracking objects of interest in the video. As shown in Figure 1, the first row is a multi-frame image sequence of a given video, and the second row is the result of segmentation of video instances, where the same color corresponds to the same instance. Video instance segmentation not only needs to detect and segment objects in a single frame of images, but also find the corresponding relationship of each object in the dimensions of multiple frames, that is, to associate and track them.

Related work

Existing video instance segmentation algorithms are usually complex processes that include multiple modules and multiple stages. The earliest Mask Track R-CNN[1] algorithm contains two modules of instance segmentation and tracking at the same time. It is implemented by adding a tracking branch to the network of the image instance segmentation algorithm Mask R-CNN[2]. This branch is mainly used for Instance feature extraction. In the prediction stage, the method uses an external Memory module to store the features of multiple frames of instances, and uses the feature as an element of the instance correlation for tracking. The essence of this method is still the segmentation of a single frame and the traditional method for tracking correlation. Maskprop[3] adds the Mask Propagation module on the basis of Mask Track R-CNN to improve the quality of segmentation mask generation and correlation. This module can realize the propagation of the mask extracted from the current frame to the surrounding frames, but due to the propagation of the frame. It is based on the pre-calculated segmentation mask of a single frame, so to obtain the final segmentation mask, a multi-step Refinement is required. The essence of this method is still the extraction of a single frame plus the propagation between frames, and because it relies on the combination of multiple models, the method is more complicated and the speed is slower.

Stem-seg [4] divides video instance segmentation into two modules: instance distinction and category prediction. In order to realize the distinction of instances, the model constructs the multi-frame Clip of the video as a 3D Volume, and realizes the segmentation of different objects by clustering the Embedding features of the pixels. Since the above clustering process does not include the prediction of instance categories, an additional semantic segmentation module is required to provide pixel category information. According to the above description, most of the existing algorithms follow the idea of single-frame image instance segmentation, and divide the video instance segmentation task into single-frame extraction and multi-frame association multiple modules, supervise and learn for a single task, and the processing speed is slow And it is not conducive to taking advantage of the continuity of video timing. This article aims to propose an end-to-end model that unifies the detection, segmentation and tracking of instances into one framework, which helps to better mine the overall spatial and timing information of the video, and can solve the video instances at a faster speed. The problem of segmentation.

Introduction to VisTR algorithm

Redefine the problem

First, we rethink the task of video instance segmentation. Compared with a single frame image, the video contains more complete and rich information about each instance, such as the trajectory and motion mode of different instances. This information can help overcome some of the more difficult problems in single frame instance segmentation tasks, such as appearance Similarity, proximity of objects, or occlusion, etc. On the other hand, the better feature representation of a single instance provided by multiple frames also helps the model to better track the object. Therefore, our method aims to achieve an end-to-end framework for modeling video instance targets. In order to achieve this goal, our first thought is: video itself is sequence-level data, can it be directly modeled as a sequence prediction task? For example, drawing on the idea of natural language processing (NLP) tasks, the segmentation of video instances is modeled as a sequence-to-sequence (Seq2Seq) task, that is, given a multi-frame image as input, directly output a multi-frame segmentation mask sequence. A model that can model multiple frames at the same time.

The second thought is: The instance segmentation of video actually includes two tasks of instance segmentation and target tracking at the same time. Can it be realized under one framework? Our idea for this is: segmentation itself is the learning of similarity between pixel features, and tracking is essentially the learning of similarity between instance features, so theoretically they can be unified under the same similarity learning framework.

Based on the above thinking, we selected a model that can simultaneously perform sequence modeling and similarity learning, that is, the Transformer [5] model in natural language processing. Transformer itself can be used for the task of Seq2Seq, that is, given a sequence, you can input a sequence. And this model is very good at modeling long sequences, so it is very suitable for modeling the timing information of multi-frame sequences in the video field. Secondly, the core mechanism of Transformer, the Self-Attention module, can learn and update features based on the similarity between the two, so that the similarity between pixel features and the similarity between instance features are unified. Realization is possible within a framework. The above characteristics make Transformer an appropriate choice for VIS tasks. In addition, Transformer has been applied to the practice DETR [6] of target detection in computer vision. Therefore, we designed the video instance segmentation (VIS) model VisTR based on the transformer.

VisTR algorithm flow

Following the above-mentioned ideas, the overall framework of VisTR is shown in Figure 2. The leftmost part of the figure represents the input multi-frame original image sequence (take three frames as an example), and the right represents the output instance prediction sequence, where the same shape corresponds to the output of the same frame of image, and the same color corresponds to the output of the same object instance. Given a multi-frame image sequence, first use convolutional neural network (CNN) to extract the initial image features, and then combine the multi-frame features as a feature sequence into Transformer for modeling to realize the input and output of the sequence.

It is not difficult to see that, first of all, VisTR is an end-to-end model, that is, modeling multiple frames of data at the same time. The way of modeling is: turn it into a Seq2Seq task, input a multi-frame image sequence, and the model can directly output the predicted instance sequence. Although the input and output of multiple frames in the timing dimension are ordered, the sequence of instances of a single frame input is disordered in the initial state, which still cannot achieve the tracking and correlation of the instances, so we force the output of each frame of image The order of the instances is the same (indicated by the symbols of the same shape in the figure with the same color change order), so as long as the output of the corresponding position is found, the association of the same instance can be realized naturally without any post-processing operations. In order to achieve this goal, it is necessary to model the sequence dimensions of the features that belong to the same instance position. Pertinently, in order to achieve sequence-level supervision, we proposed the Instance Sequence Matching module. At the same time, in order to achieve sequence level segmentation, we proposed the Instance Sequence Segmentation module. End-to-end modeling regards the spatial and temporal characteristics of the video as a whole, and can learn the information of the entire video from a global perspective, while the dense feature sequence modeled by Transformer can better retain detailed information.

VisTR network structure

The detailed network structure of VisTR is shown in Figure 3. The following is an introduction to the various components of the network:

- Backbone : Mainly used for the extraction of initial image features. For each frame of the input image of the sequence, the Backbone of CNN is used to extract the initial image features, and the extracted multi-frame image features are serialized into a multi-frame feature sequence along the time sequence and spatial dimensions. Because the process of serialization loses the original spatial and temporal information of pixels, and the task of detection and segmentation is very sensitive to position information, we encode its original spatial and horizontal position as Positional Encoding and superimpose it on the extracted sequence features To keep the original location information. The method of Positional Encoding follows the method of Image Transformer[7], but only changes the original two-dimensional position information into three-dimensional position information, which is explained in detail in the paper.

- Encoder : Mainly used to model and update multi-frame feature sequences as a whole. Input the previous multi-frame feature sequence, Transformer's Encoder module uses the Self-Attention module to perform the fusion and update of all the features in the sequence through the learning of the similarity between points. This module can better learn and enhance the features belonging to the same instance by modeling the overall time series and spatial features.

- Decoder : Mainly used to decode and output the predicted instance feature sequence. Since the Encoder input Decoder is a dense pixel feature sequence, in order to decode sparse instance features, we refer to the DETR method and introduce Instance Query to decode representative instance features. Instance Query is an Embedding parameter learned by the network itself, which is used to perform Attention operations with a dense input feature sequence to select features that can represent each instance. Taking the processing of 3 frames of images and predicting 4 objects per frame as an example, the model requires a total of 12 Instance Query to decode 12 instance predictions. Consistent with the previous representation, the same shape represents the prediction corresponding to the same frame of image, and the same color represents the prediction of the same object instance in different frames. In this way, we can construct the prediction sequence of each instance, corresponding to Instance 1...Instance 4 in Figure 3. In the subsequent process, the model treats the sequence of a single object instance as a whole for processing.

- Instance Sequence Matching : Mainly used for sequence-level matching and supervision of input prediction results. The process from the image input of the sequence to the instance prediction of the sequence has been introduced above. However, the order of the prediction sequence is actually based on an assumption, that is, the input order of the frame is maintained in the dimension of the frame, and in the prediction of each frame, the output order of different instances remains the same. The order of frames is easier to maintain, as long as the order of control input and output is the same, but the order of instances within different frames is actually not guaranteed, so we need to design a special supervision module to maintain this order. In general target detection, there is a corresponding Anchor at each location point, so the Ground Truth supervision corresponding to each location point is assigned, but in our model, there is actually no Anchor and location display. Therefore, for each input point, we do not have ready-made supervision information about which instance it belongs to. In order to find this supervision and directly supervise in the sequence dimension, we proposed the Instance Sequence Matching module. This module performs binary matching between the predicted sequence of each instance and the Ground Truth sequence of each instance in the labeled data, using Hungarian matching. Ways to find the latest labeled data for each prediction, as its Groud Truth to supervise, and perform the following Loss calculation and learning.

- Instance Sequence Segmentation : Mainly used to obtain the final segmentation result sequence. The sequence prediction process of Seq2Seq has been introduced earlier, and our model has been able to complete sequence prediction and tracking correlation. But so far, what we have found for each instance is only a representative feature vector, and the final task to be solved is the task of segmentation. How to turn this feature vector into the final Mask sequence is what the Instance Sequence Segmentation module wants to solve problem. As mentioned earlier, the essence of instance segmentation is the learning of pixel similarity, so our initial calculation of Mask is to use the prediction of the instance and the feature map after Encode to calculate the Self-Attention similarity, and use the obtained similarity map as this instance. The initial Attention Mask characteristics of the frame. In order to make better use of the timing information, we input the Attention Mask of multiple frames belonging to the same instance as the Mask sequence into the 3D convolution module for segmentation, and directly obtain the final segmentation sequence. In this way, the segmentation result of a single frame is enhanced by using the characteristics of the same instance of multiple frames, which can maximize the advantage of timing.

VisTR loss function

According to the previous description, there are two main areas for calculating the loss in network learning, one is the matching process in the Instance Sequence Matching stage, and the other is the final loss function calculation process of the entire network after the supervision is found.

The calculation formula of the Instance Sequence Matching process is shown in Equation 1: Since the Matching stage is only used to find supervision, and the calculation of the distance between the Masks is more intensive, we only consider the Box and the predicted category c at this stage. The yi in the first row represents the Ground Truth sequence corresponding to the i-th instance, where c represents the category, b represents the Bounding box, and T represents the number of frames, that is, the category and Bounding Box sequence corresponding to this instance of the T frame. The second and third rows respectively represent the results of the prediction sequence, where p represents the predicted probability of the ci category, and b represents the predicted Bounding Box. The calculation of the distance between the sequences is obtained by calculating the loss function between the values of the corresponding positions of the two sequences. The figure is represented by Lmatch. For each predicted sequence, find the Ground Truth sequence with the lowest Lmatch as its supervision. According to the corresponding supervision information, the loss function of the entire network can be calculated.

Since our method is to implement classification, detection, segmentation and tracking into an end-to-end network, the final loss function also includes three aspects of category, Bounding Box and Mask, and tracking is reflected by directly calculating the loss function of the sequence. Formula 2 represents the loss function of the segmentation. After obtaining the corresponding supervision result, we calculate the Dice Loss and Focal Loss between the corresponding sequences as the loss function of the Mask.

The final loss function is shown in formula 3, which is the sum of the sequence loss functions including classification (class probability), detection (Bounding Box), and segmentation (Mask).

Experimental results

In order to verify the effectiveness of the method, we conducted experiments on the widely used video instance segmentation dataset YouTube-VIS, which contains 2238 training videos, 302 verification videos and 343 test videos, as well as 40 object categories. The evaluation criteria of the model include AP and AR, and the IOU between the multi-frame masks of the video dimension is used as the threshold.

The importance of timing information

Compared with the existing methods, the biggest difference of VisTR is to directly model the video. The main difference between video and image is that video contains rich timing information. If effective mining and learning of timing information is the key to video understanding, We first explored the importance of timing information. Timing consists of two dimensions: the number of timings (number of frames) and the comparison between order and disorder.

Table 1 shows the final test results of our clip training model with different frame numbers. It is not difficult to see that as the number of frames increases from 18 to 36, the accuracy of the model is also improving, proving that multiple frames provide more abundant The timing information is helpful to the learning of the model.

Table 2 shows the comparison of the effect of using Clips that disrupt physical timing and Clips that follow physical timing for model training. It can be seen that the model trained in chronological order has a little improvement, which proves that VisTR has learned objects in physical time. Variations and rules, and modeling the video according to the physical time sequence also helps the understanding of the video.

Query exploration

The second experiment is the exploration of Query. Since our model directly models 36 frames of images and predicts 10 objects for each frame of image, 360 Query is needed, corresponding to the result (Prediction Level) in the last row of Table 3. We want to explore whether there is a certain association between Query belonging to the same frame or the same instance, that is, whether it can be shared. In response to this goal, we designed Frame Level experiments: one frame only uses one Query feature for prediction, and Instance level experiments: one instance uses only one Query feature for prediction.

It can be seen that the result of Instance Level is only one point less than the result of Prediction Level, while the result of Frame Level is 20 points lower, which proves that Query of different frames belonging to the same Instance can be shared, but Query information of different Instances of the same frame cannot be shared. shared. Prediction Level Query is proportional to the number of input image frames, while Instance Level Query can implement a model that does not depend on the number of input frames. Only the number of instances to be predicted by the model is limited, and the number of input frames is not limited. This is also a direction that can be continued in the future. In addition, we also designed a Video Level experiment, that is, the entire video is predicted with only one Embedding feature of Query, and this model can achieve an AP of 8.4.

Other designs

The following are other designs that we found effective during the experiment.

Since the original spatial and temporal information will be lost in the process of feature serialization, we provide the original Positional Encoding feature to retain the original location information. In Table 5, a comparison is made with or without this module. The position information provided by Positional Encoding can bring about 5 points of improvement.

In the process of segmentation, we obtain the initial Attention Mask by calculating the Prediction of the instance and the Self-Attention of the features after Encoded. In Table 6, we compare the effect of segmentation using the features of CNN-Encoded and the features of Transformer-Encoded. Using the features of Transformer can improve a point. The effectiveness of Transformer's global feature update is proved.

Table 6 shows the comparison of the effect of 3D convolution in the segmentation module. The use of 3D convolution can bring a point of improvement, which proves the effectiveness of using timing information to directly segment multi-frame masks.

Visualize the results

The visualization effect of VisTR in YouTube-VIS is shown in Figure 4, where each row represents the same video sequence, and the same color corresponds to the segmentation result of the same instance. It can be seen that whether it is in (a). The instance is occluded (b). There is a relative change in the position of the instance (c). The instance is close to the same type and (d). The instance is in different poses, the model can achieve better The segmentation and tracking of VisTR proves that VisTR still has a good effect in challenging situations.

Method comparison

Table 7 is a comparison between our method and other methods on the YoutubeVIS dataset. Our method achieves the best effect of a single model (where MaskProp contains a combination of multiple models), and achieves an AP of 40.1 at a speed of 57.7FPS. The first 27.7 refers to the speed of the sequential Data Loading part (this part can be optimized using parallelism), and 57.7 refers to the speed of the pure model Inference. Since our method directly models 36 frames of images at the same time, compared to the single frame processing of the same model, it can ideally bring about 36 times the speed increase, which is more conducive to the wide application of video models.

Summary and outlook

Video instance segmentation refers to the task of classifying, segmenting and tracking objects of interest in the video at the same time. Existing methods usually design complex processes to solve this problem. This paper proposes a new framework for video instance segmentation based on Transformer, VisTR, which treats the task of video instance segmentation as a direct end-to-end parallel sequence decoding and prediction problem. Given a video containing multiple frames of images as input, VisTR directly outputs the mask sequence of each instance in the video in sequence. The core of this method is a new strategy of instance sequence matching and segmentation, which can supervise and segment instances at the entire sequence level. VisTR unifies instance segmentation and tracking under the framework of similarity learning, which greatly simplifies the process. Without any tricks, VisTR achieved the best results among all methods using a single model, and achieved the fastest speed on the YouTube-VIS dataset.

As far as we know, this is the first method to apply Transformers to the field of video segmentation. We hope that our method can inspire more research on video instance segmentation, and we also hope that this framework can be applied to more video understanding tasks in the future. For more details on the paper, please refer to the original text: "End-to-End Video Instance Segmentation with Transformers" , and the code has also been open on GitHub: 160b9f2aca3347 https://github.com/Epiphqny/VisTR , welcome Everybody comes to understand or use.

Author

- Meituan Autonomous Vehicle Distribution Center: Yuqing, Zhaoliang, Baoshan, Shenhao, etc.

- University of Adelaide: Wang Xinlong, Shen Chunhua.

references

- Video Instance Segmentation, https://arxiv.org/abs/1905.04804.

- Mask R-CNN, https://arxiv.org/abs/1703.06870.

- Classifying, Segmenting, and Tracking Object Instances in Video with Mask Propagation, https://arxiv.org/abs/1912.04573.

- STEm-Seg: Spatio-temporal Embeddings for Instance Segmentation in Videos, https://arxiv.org/abs/2003.08429.

- Attention Is All You Need, https://arxiv.org/abs/1706.03762.

- End-to-End Object Detection with Transformers, https://arxiv.org/abs/2005.12872.

- Image Transformer, https://arxiv.org/abs/1802.05751.

Job Offers

Meituan Autonomous Vehicle Distribution Center is continuously recruiting a large number of positions, and we are looking for algorithm/system/hardware development engineers and experts. Interested students are welcome to send their resumes to: ai.hr@meituan.com (the subject of the email indicates: Meituan Autonomous Vehicle Team).

Read more technical articles from the

the front | algorithm | backend | data | security | operation and maintenance | iOS | Android | test

160b9f2aca34e9 | . You can view the collection of technical articles from the Meituan technical team over the years.

| This article is produced by the Meituan technical team, and the copyright belongs to Meituan. Welcome to reprint or use the content of this article for non-commercial purposes such as sharing and communication, please indicate "the content is reproduced from the Meituan technical team". This article may not be reproduced or used commercially without permission. For any commercial activity, please send an email to tech@meituan.com to apply for authorization.

**粗体** _斜体_ [链接](http://example.com) `代码` - 列表 > 引用。你还可以使用@来通知其他用户。