Background introduction

Why choose RocketMQ

When we decided to introduce MQ a few years ago, there were already many mature solutions on the market, such as RabbitMQ, ActiveMQ, NSQ, and Kafka. Taking into account stability, maintenance costs, and the company's technology stack, we chose RocketMQ:

- Written in pure Java with no external dependencies; it is easy to use, and we can handle problems ourselves when they occur;

- Battle-tested by Alibaba's Double Eleven shopping festival, so its performance and stability are proven;

- Practical features. On the producer side: synchronous, asynchronous, one-way, and delayed sending; on the consumer side: message reset, retry queues, and dead-letter queues;

- The community is active, so problems can be discussed and resolved promptly.

Usage

- Mainly used for peak shaving, decoupling, and asynchronous processing;

- Widely used in core businesses such as train tickets, air tickets, and hotels, where it absorbs the huge traffic coming in through the WeChat entry point;

- Widely used in core processes such as payment, order, ticket issuance, and data synchronization;

- More than 100 billion messages flow through it every day.

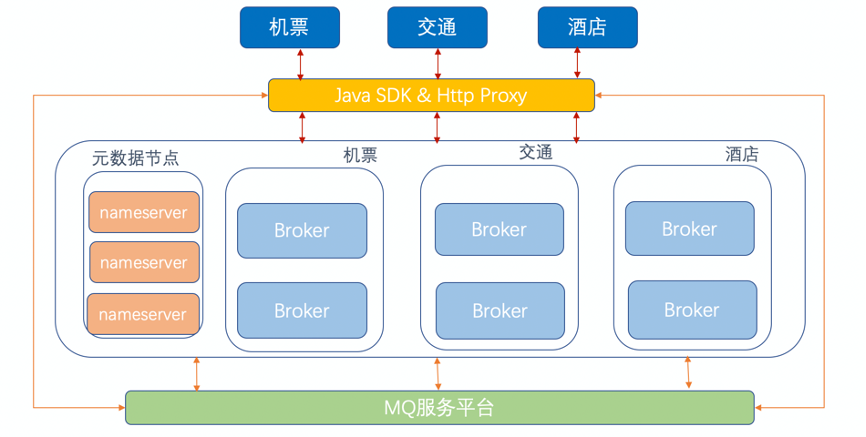

The figure shows the MQ access architecture.

Because of the company's technology stack, the client SDK is provided in Java. Other languages go through an HTTP proxy, which hides language-specific details and saves maintenance costs. Back-end storage nodes are isolated by major business line so that they do not affect each other.

MQ dual-center transformation

A network failure in a single data center once had a great impact on the business. To ensure high availability, a same-city dual-center transformation was put on the agenda.

Why dual centers

- The service remains available when a single data center fails;

- Data reliability: if all data lives in one data center, a failure of that data center risks data loss;

- Horizontal scaling: the capacity of a single data center is limited, and multiple data centers can share the traffic.

Dual center plan

Before building the dual centers, we surveyed same-city dual-center solutions, mainly cold (hot) standby and active-active. (At that time the community DLedger version had not yet been released; DLedger can now serve as an alternative dual-center solution.)

1) Same-city cold (hot) standby

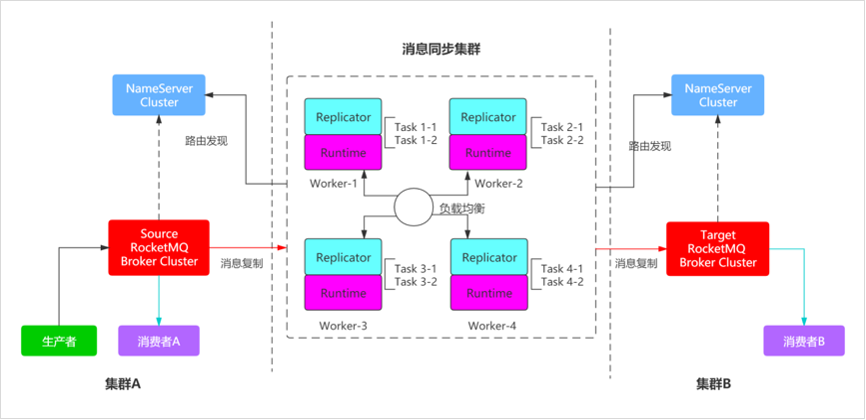

Two independent MQ clusters: user traffic is written to the primary cluster, and data is synchronized to the standby cluster in real time. The community offers a mature solution for this, RocketMQ Replicator. Metadata such as topics, consumer groups, and consumption progress also has to be synchronized periodically.

2) Same-city active-active

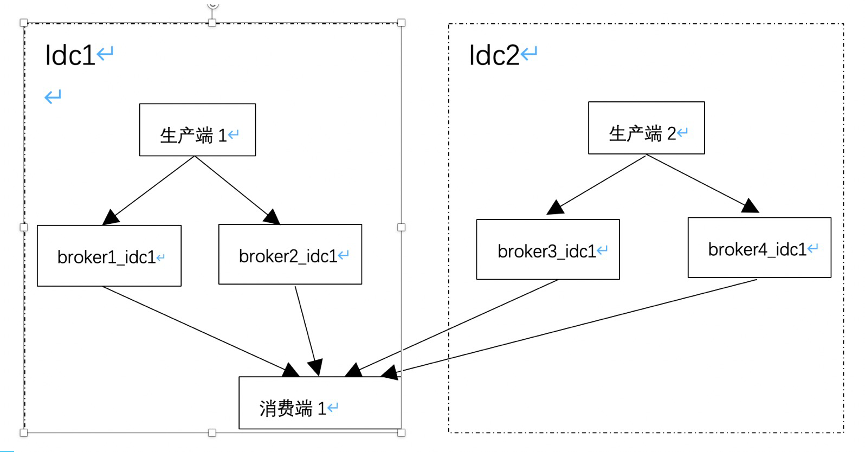

Two independent MQ clusters: user traffic is written to the MQ cluster in its own data center, and data is not synchronized between the two.

Normally each business writes to the MQ cluster in its own data center. If one data center goes down, all user traffic can be switched to the other data center, and messages are then produced there as well.

The active-active solution has to settle how MQ cluster domain names are handled.

1) If the two clusters share one domain name, the name can be dynamically resolved to the cluster in each data center. This requires production and consumption to be in the same data center. If the producer is in idc1 and the consumer is in idc2, they connect to different clusters and the messages can never be consumed.

2) If each cluster has its own domain name, the business side has to change a lot. Our externally facing cluster used to be deployed in a single center and a large number of business services already access it, so this solution would be hard to roll out.

To minimize changes on the business side, the existing domain name has to stay. In the end we adopted a single global MQ cluster that spans both data centers. Whether a business is deployed in one center or two, it is not affected; it only needs to upgrade the client, with no code changes.

Dual-center requirements

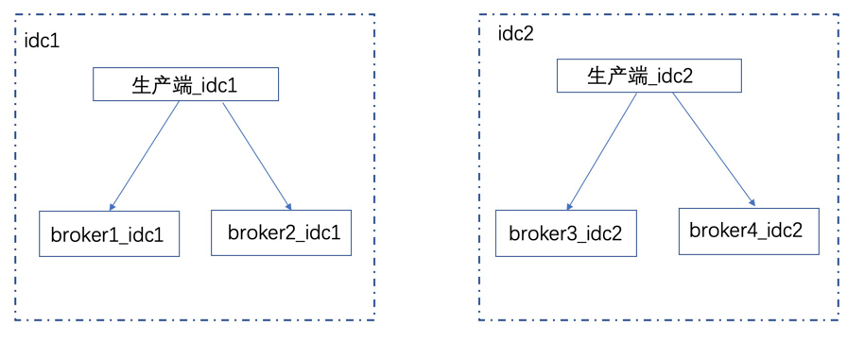

- Proximity principle: a producer in data center A stores the messages it produces on brokers in data center A; a consumer in data center A consumes messages from brokers in data center A.

- Single data center failure: production continues normally and no messages are lost.

- Broker master node failure: a new master is elected automatically.

The principle of proximity

Simply put, two things need to be determined:

- How a node (client node or server node) determines which IDC it is in;

- How a client node determines which IDC a server node is in.

How does a node determine which IDC it is in?

1) IP lookup

When the node starts, it obtains its own IP and queries an internal company component for the data center that the IP belongs to.

2) Environment awareness

This requires cooperation from the operations team. When a node is provisioned, some of its metadata, such as data center information, is written to a local configuration file, which the node can read directly at startup.

We adopted the second approach because it has no component dependency. The value of logicIdcUK in the configuration file identifies the data center.
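A minimal sketch of the environment-awareness approach, assuming the local configuration file uses a `key=value` (Java properties) layout. Only the key `logicIdcUK` comes from the text above; the class name `IdcResolver`, the file format, and the file location are assumptions made for illustration.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Optional;
import java.util.Properties;

/** Resolves the local IDC identifier from node-local configuration (format assumed). */
final class IdcResolver {
    /** Key named in the article; everything else in this class is illustrative. */
    static final String IDC_KEY = "logicIdcUK";

    /** Parses key=value text and returns the IDC identifier if present and non-blank. */
    static Optional<String> parse(String content) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(content));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        String idc = props.getProperty(IDC_KEY);
        if (idc == null || idc.trim().isEmpty()) {
            return Optional.empty();
        }
        return Optional.of(idc.trim());
    }

    /** Reads the configuration file at a caller-supplied (hypothetical) path. */
    static Optional<String> fromFile(Path configFile) {
        try {
            byte[] bytes = Files.readAllBytes(configFile);
            return parse(new String(bytes, StandardCharsets.UTF_8));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Returning an empty `Optional` when the key is missing lets the caller decide whether to fail fast or fall back to IDC-agnostic behavior.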

How does a client node identify server nodes in the same data center?

A client node can get the IP of a server node and the broker name, so the options are:

- IP lookup: query an internal company component for the data center that the IP belongs to;

- Data center information in the broker name: include the data center identifier in the broker name in the configuration file;

- Data center identifier at the protocol layer: when a server node registers with the metadata system, it registers its data center information as well.

The third option is slightly more complicated to implement than the first two because it changes the protocol layer. We use a combination of the second and third.
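The combination of the second and third options can be sketched as follows: prefer the data center identifier registered through the protocol layer, and fall back to the one embedded in the broker name. The naming convention `idc@brokerName`, the `@` separator, and the class `BrokerIdc` are hypothetical; the text does not say how the identifier is encoded in the broker name.

```java
import java.util.Optional;

/** Determines which IDC a broker belongs to (naming convention is an assumption). */
final class BrokerIdc {
    /** Hypothetical separator between the IDC identifier and the rest of the broker name. */
    static final char SEPARATOR = '@';

    /** Extracts the IDC prefix from a broker name such as "idc1@broker-a". */
    static Optional<String> fromBrokerName(String brokerName) {
        int idx = brokerName.indexOf(SEPARATOR);
        return idx > 0 ? Optional.of(brokerName.substring(0, idx)) : Optional.empty();
    }

    /** Prefers the IDC registered via the protocol layer, then falls back to the name. */
    static Optional<String> resolve(String registeredIdc, String brokerName) {
        if (registeredIdc != null && !registeredIdc.isEmpty()) {
            return Optional.of(registeredIdc);
        }
        return fromBrokerName(brokerName);
    }
}
```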

Nearby production

Based on the analysis above, the approach to nearby production is clear: by default, messages are produced to the local data center first.

If no server node in the local data center is available, the producer can try cross-data-center production. Each business can configure this according to its actual needs.
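A minimal sketch of this selection rule: pick an available queue in the local IDC first, and only fall back to another IDC when cross-data-center production is allowed. `QueueInfo` is a stand-in type for this example, not RocketMQ's `MessageQueue`, and the round-robin choice among candidates is an assumption.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Optional;
import java.util.concurrent.atomic.AtomicInteger;

/** Chooses a target queue, preferring the producer's own IDC (illustrative only). */
final class NearbyQueueSelector {
    /** Stand-in for a writable queue tagged with the IDC of its broker. */
    static final class QueueInfo {
        final String idc;
        final String brokerName;
        final int queueId;
        final boolean available;

        QueueInfo(String idc, String brokerName, int queueId, boolean available) {
            this.idc = idc;
            this.brokerName = brokerName;
            this.queueId = queueId;
            this.available = available;
        }
    }

    private final String localIdc;
    private final boolean allowCrossIdc; // business-level switch mentioned in the text
    private final AtomicInteger counter = new AtomicInteger();

    NearbyQueueSelector(String localIdc, boolean allowCrossIdc) {
        this.localIdc = localIdc;
        this.allowCrossIdc = allowCrossIdc;
    }

    /** Returns a local queue if any is available, otherwise a remote one if allowed. */
    Optional<QueueInfo> select(List<QueueInfo> queues) {
        List<QueueInfo> local = new ArrayList<>();
        List<QueueInfo> remote = new ArrayList<>();
        for (QueueInfo q : queues) {
            if (!q.available) {
                continue;
            }
            if (localIdc.equals(q.idc)) {
                local.add(q);
            } else {
                remote.add(q);
            }
        }
        List<QueueInfo> candidates = local;
        if (local.isEmpty() && allowCrossIdc) {
            candidates = remote;
        }
        if (candidates.isEmpty()) {
            return Optional.empty();
        }
        // Round-robin across the candidates; floorMod keeps the index valid after overflow.
        int index = Math.floorMod(counter.getAndIncrement(), candidates.size());
        return Optional.of(candidates.get(index));
    }
}
```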

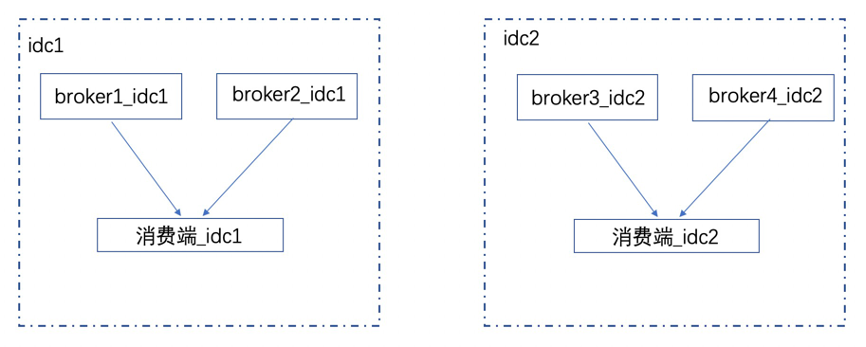

Nearby consumption

Consume from the local data center first. By default, every message must still be guaranteed to be consumed.

The queue allocation algorithm allocates queues by data center:

- Messages from each data center are evenly distributed among the consumers in that data center;

- If a data center has no consumers, its messages are evenly distributed among the consumers in the other data centers.

The pseudo code is as follows:

    Map<String, Set<MessageQueue>> mqs = classifyMQByIdc(mqAll);
    Map<String, Set<String>> cids = classifyCidByIdc(cidAll);
    Set<MessageQueue> result = new HashSet<>();
    for (Map.Entry<String, Set<MessageQueue>> element : mqs.entrySet()) {
        // cid is the current client
        result.addAll(allocateMQAveragely(element, cids, cid));
    }
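The pseudo code above can be fleshed out into a runnable sketch. This is an illustration under assumptions, not the actual implementation: `Mq` is a stand-in for a message queue, consumers are given as a hypothetical map from client id to IDC, and the "average" step is a simple round-robin split, which may differ from the averaging the real algorithm uses.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Allocates queues to consumers data center by data center (illustrative sketch). */
final class IdcAwareAllocator {
    /** Stand-in for a message queue tagged with the IDC of its broker. */
    static final class Mq implements Comparable<Mq> {
        final String idc;
        final String broker;
        final int queueId;

        Mq(String idc, String broker, int queueId) {
            this.idc = idc;
            this.broker = broker;
            this.queueId = queueId;
        }

        @Override
        public int compareTo(Mq o) {
            int c = idc.compareTo(o.idc);
            if (c == 0) c = broker.compareTo(o.broker);
            if (c == 0) c = Integer.compare(queueId, o.queueId);
            return c;
        }

        @Override
        public String toString() {
            return idc + ":" + broker + ":" + queueId;
        }
    }

    /**
     * @param mqAll  all queues of the topic
     * @param cidIdc client id -> IDC for every consumer in the group
     * @param cid    the current client
     */
    static List<Mq> allocate(List<Mq> mqAll, Map<String, String> cidIdc, String cid) {
        // Sort everything so that every client computes the same assignment.
        List<Mq> sortedMqs = new ArrayList<>(mqAll);
        Collections.sort(sortedMqs);
        List<String> allCids = new ArrayList<>(cidIdc.keySet());
        Collections.sort(allCids);

        Map<String, List<Mq>> mqsByIdc = new TreeMap<>();
        for (Mq mq : sortedMqs) {
            mqsByIdc.computeIfAbsent(mq.idc, k -> new ArrayList<>()).add(mq);
        }
        Map<String, List<String>> cidsByIdc = new TreeMap<>();
        for (String c : allCids) {
            cidsByIdc.computeIfAbsent(cidIdc.get(c), k -> new ArrayList<>()).add(c);
        }

        List<Mq> result = new ArrayList<>();
        for (Map.Entry<String, List<Mq>> e : mqsByIdc.entrySet()) {
            // Consumers in the same IDC own the queues; if there are none, all consumers share them.
            List<String> owners = cidsByIdc.getOrDefault(e.getKey(), allCids);
            int me = owners.indexOf(cid);
            if (me < 0) {
                continue; // the current client does not take part in this IDC's queues
            }
            List<Mq> mqs = e.getValue();
            for (int i = me; i < mqs.size(); i += owners.size()) {
                result.add(mqs.get(i));
            }
        }
        return result;
    }
}
```

With four queues in each of two IDCs and consumers in both, each consumer only receives queues from its own IDC; with consumers in one IDC only, those consumers split the queues of both IDCs.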

There are two main consumption scenarios: consumers deployed in only one data center (single-sided) or in both data centers (dual-sided).

With single-sided deployment, consumers pull all messages from every data center by default.

With dual-sided deployment, consumers only consume messages from their own data center. Keep an eye on the actual production volume in each data center and on the number of consumers there, so that no data center ends up with too few consumers.

Single data center failure

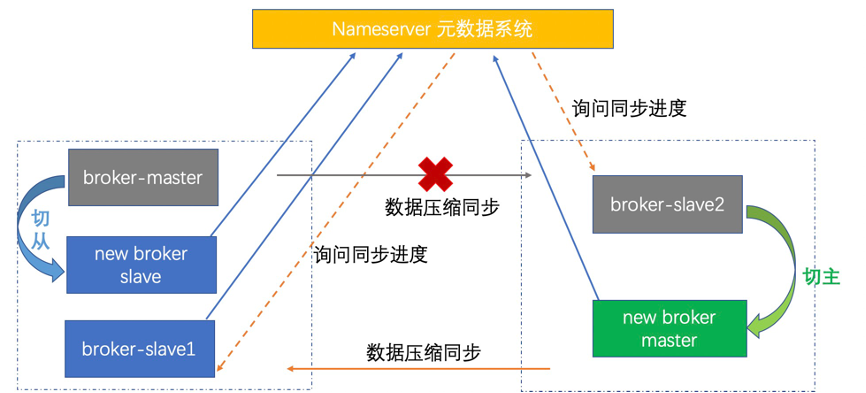

- Configuration of each broker group

One master and two slaves: the master and one slave are in one data center, and the other slave is in the other data center. A message is considered sent successfully once one slave has synchronized it.

- Single data center failure

Message production crosses data centers, and unconsumed messages continue to be consumed in the other data center.

Failover

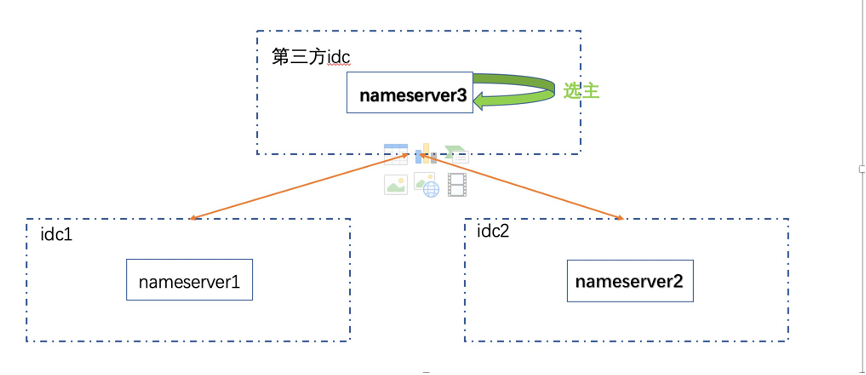

When the master node of a broker group fails, a new master must be elected from the slaves and switched in to keep the whole cluster available. This first requires an arbitration system for broker master failures, namely the nameserver (hereinafter ns) metadata system, which plays a role similar to Sentinel in Redis.

The nodes of the ns metadata system are located in three data centers (one of them is a third-party cloud data center where ns nodes are deployed; the amount of metadata is small, so the latency is acceptable). The ns nodes in the three data centers elect a leader through the Raft protocol. Broker nodes synchronize their metadata to the leader, and the leader then synchronizes it to the followers.

When a client node fetches metadata, it can read from either the leader or a follower.

Master switchover process

- When the nameserver leader detects that a broker master node is abnormal, it asks the followers to confirm. If half of the followers agree that the broker node is abnormal, the leader notifies the broker group to elect a new master from its slave nodes, and the slave with the greatest synchronization progress is chosen;

- The newly elected broker master node performs the switchover and registers with the metadata system;

- Producers can no longer send messages to the old broker master node.
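The first step of the switchover can be sketched as two small decisions: whether enough followers confirm the failure, and which slave to promote. The types and field names here are hypothetical, and "half of the followers" is read as "at least half"; with one leader and two followers, one confirming follower plus the leader already forms a majority of the three ns nodes.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

/** Decision logic for promoting a broker slave to master (illustrative sketch). */
final class MasterElection {
    /** Stand-in for a broker slave as seen by the metadata system. */
    static final class Slave {
        final String address;
        final long syncedOffset; // how far this slave has replicated from the old master
        final boolean alive;

        Slave(String address, long syncedOffset, boolean alive) {
            this.address = address;
            this.syncedOffset = syncedOffset;
            this.alive = alive;
        }
    }

    /** True when at least half of the followers agree that the master is abnormal. */
    static boolean failureConfirmed(int followerCount, int followersReportingDown) {
        return followerCount > 0 && followersReportingDown * 2 >= followerCount;
    }

    /** Picks the live slave with the greatest synchronization progress. */
    static Optional<Slave> pickNewMaster(List<Slave> slaves) {
        return slaves.stream()
                .filter(s -> s.alive)
                .max(Comparator.comparingLong((Slave s) -> s.syncedOffset));
    }
}
```

Choosing the slave with the largest synced offset minimizes the number of messages that were acknowledged by the old master but are missing on the new one.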

The flow chart is as follows

Data center switchover drill

User requests are load-balanced across both centers. The drill first switches all traffic to the second center, then returns to dual-center operation, and then switches all traffic to the first center. This verifies that each center can carry the full volume of user requests.

First, all user traffic is switched to the second center

Traffic returns to both centers and is then switched to the first center

Review

- Global cluster

- The principle of proximity

- One master and two slaves; a write succeeds once more than half of the replicas have written the message

- Raft leader election in the metadata system

- When a broker master node fails, a new master is elected automatically

MQ platform governance

Even a high-performance, highly available system will cause all kinds of problems and add unnecessary maintenance costs if it is used carelessly or without conventions. Governance measures are therefore indispensable.

Purpose

Make the system more stable

- Timely alerts

- Quick fault location and damage control

What to govern

Topic/consumer group governance

- Apply before use

In the production MQ clusters, we have turned off automatic creation of topics and consumer groups. Before use, a topic or consumer group must be applied for, and its project identifier and owner are recorded. When a problem occurs, we can immediately find the person responsible for the topic or consumer group and learn about the situation. If there are multiple environments such as test, grayscale, and production, one application can take effect on multiple clusters at once, avoiding the trouble of applying cluster by cluster.

- Production rate

To keep a business from sending a large number of useless messages by mistake, the production rate of each topic has to be limited on the server side, so that one topic does not squeeze the processing resources of other topics.
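One common way to limit a per-topic production rate is a token bucket. The sketch below is an assumption about how such a limit could look, not a description of the actual server-side mechanism; the class name, the single shared rate for all topics, and the caller-supplied clock are all illustrative.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/** Per-topic token-bucket rate limiter (illustrative sketch). */
final class TopicRateLimiter {
    private static final class Bucket {
        double tokens;
        long lastNanos;

        Bucket(double tokens, long lastNanos) {
            this.tokens = tokens;
            this.lastNanos = lastNanos;
        }
    }

    private final double permitsPerSecond;
    private final double burst;
    private final Map<String, Bucket> buckets = new ConcurrentHashMap<>();

    TopicRateLimiter(double permitsPerSecond, double burst) {
        this.permitsPerSecond = permitsPerSecond;
        this.burst = burst;
    }

    /** Returns true if one message for the topic may be accepted at time nowNanos. */
    boolean tryAcquire(String topic, long nowNanos) {
        Bucket b = buckets.computeIfAbsent(topic, t -> new Bucket(burst, nowNanos));
        synchronized (b) {
            // Refill in proportion to elapsed time, capped at the burst size.
            double elapsedSec = Math.max(0L, nowNanos - b.lastNanos) / 1_000_000_000.0;
            b.tokens = Math.min(burst, b.tokens + elapsedSec * permitsPerSecond);
            b.lastNanos = nowNanos;
            if (b.tokens >= 1.0) {
                b.tokens -= 1.0;
                return true;
            }
            return false;
        }
    }
}
```

In production code the caller would pass `System.nanoTime()`; taking the time as a parameter keeps the sketch deterministic. Because each topic has its own bucket, a topic that exhausts its tokens does not affect the others.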

- Message backlog

For consumer groups that are sensitive to message backlog, users can set a backlog threshold and an alert method; once the threshold is exceeded, the user is notified immediately. A threshold on backlog time can also be set, so that the user is notified immediately if messages have gone unconsumed for that long.

- Consumer node offline

If a consumer node goes offline or stops responding for a period of time, the user needs to be notified.
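The two thresholds can be expressed as a small rule object. This is a sketch with hypothetical names and units (message count and milliseconds); how the backlog and the age of the oldest unconsumed message are measured is outside its scope.

```java
/** Backlog alert rule for one consumer group (illustrative sketch). */
final class BacklogAlertRule {
    private final long maxBacklogMessages;
    private final long maxUnconsumedMillis;

    BacklogAlertRule(long maxBacklogMessages, long maxUnconsumedMillis) {
        this.maxBacklogMessages = maxBacklogMessages;
        this.maxUnconsumedMillis = maxUnconsumedMillis;
    }

    /**
     * Alerts when the backlog exceeds the count threshold, or when there is a backlog
     * whose oldest message has waited longer than the time threshold.
     */
    boolean shouldAlert(long backlogMessages, long oldestUnconsumedAgeMillis) {
        boolean tooMany = backlogMessages > maxBacklogMessages;
        boolean tooOld = backlogMessages > 0 && oldestUnconsumedAgeMillis > maxUnconsumedMillis;
        return tooMany || tooOld;
    }
}
```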

Client governance

- Send and consume latency detection

Monitor the time taken to send and to consume a message, detect poorly performing applications, and notify their owners to start refactoring to improve performance. At the same time, monitor message body size. For projects whose average message body exceeds 10 KB, push them to enable compression or refactor their messages so that the body stays within 10 KB.

- Message tracing

Which IP sent a message and when, and which IP consumed it and when, together with server-side statistics on message reception and message push, make up a simple message trace that links together the life cycle of a message. Users can look up a message and troubleshoot by querying its msgId or a preset key.

- Detection of outdated or risky versions

As features keep iterating, SDK versions are upgraded and may introduce risks. Clients report their SDK version periodically so that users can be urged to upgrade problematic or outdated versions.

Server governance

- Cluster health inspection

How do we judge whether a cluster is healthy? Periodically check the number of nodes in the cluster and the cluster's write TPS and consume TPS, and simulate a user producing and consuming messages.

- Cluster performance inspection

Performance ultimately shows up in the time taken to process message production and consumption. The server records the processing time of every produce and consume request. If, within a statistics period, a certain percentage of requests take too long, the node is considered to have a performance problem. The main cause of performance problems is a physical bottleneck, such as high disk I/O utilization or high CPU load. These hardware metrics are alerted on automatically by the Nighthawk monitoring system.
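The "certain percentage of requests take too long" check can be sketched as a ratio over one statistics period. The threshold and ratio values are left to the caller because the text does not state them; the class and parameter names are hypothetical.

```java
/** Flags a node whose share of slow requests in one period is too high (sketch). */
final class SlowRequestDetector {
    /**
     * @param latenciesMillis     processing time of each request in the statistics period
     * @param slowThresholdMillis a request slower than this counts as slow
     * @param maxSlowRatio        tolerated fraction of slow requests, between 0 and 1
     */
    static boolean isUnhealthy(long[] latenciesMillis, long slowThresholdMillis, double maxSlowRatio) {
        if (latenciesMillis.length == 0) {
            return false; // no traffic in this period, nothing to judge
        }
        int slow = 0;
        for (long latency : latenciesMillis) {
            if (latency > slowThresholdMillis) {
                slow++;
            }
        }
        return (double) slow / latenciesMillis.length > maxSlowRatio;
    }
}
```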

- Cluster high availability

High availability mainly addresses the case where a broker master node can no longer work because of a software or hardware failure: a slave node is automatically promoted to master. This suits scenarios that require message ordering and cluster integrity.

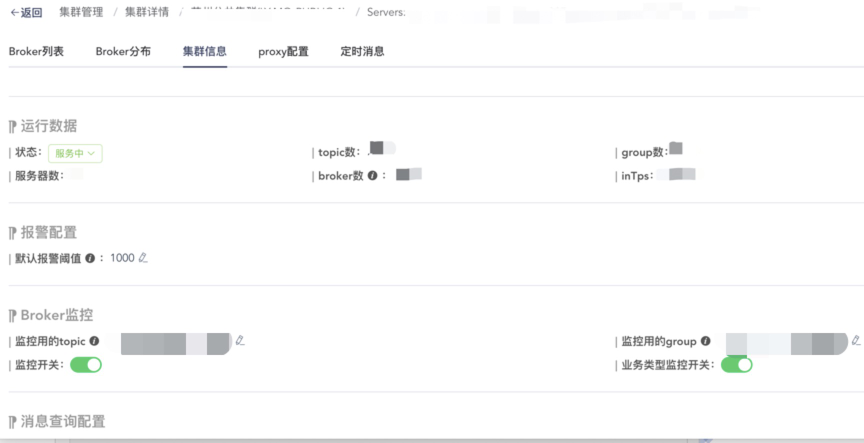

Some screens from the management console

Topic and consumer group application

Real-time statistics of production, consumption, and accumulation

Cluster monitoring

Pitfalls we hit

The community's MQ system has gone through a long period of improvement and refinement, yet we still ran into some problems while using it. This requires us to understand the source code in depth, so that we do not panic when problems occur and can limit the damage quickly.

- When old and new consumers coexisted, the queue allocation algorithm we implemented was incompatible between them; making it compatible fixed the problem;

- With a large number of topics and consumer groups, registration took too long and caused out-of-memory (OOM) errors; compression shortened the registration time, and the community has since fixed the issue;

- Inconsistent topic length checks caused messages to be lost after a restart; the community has fixed this;

- On CentOS 6.6 the broker process hung while still appearing alive; upgrading the OS version resolved it.

MQ Future Outlook

Messages are currently retained only for a short time, which is inconvenient for troubleshooting and data prediction. Next, we will archive historical messages and build data predictions on top of them.

- Historical data archiving

- Decouple the underlying storage, separating computing from storage

- Based on historical data, complete more data predictions

- Upgrade the servers to DLedger to guarantee strict message consistency

To learn more about RocketMQ, you can join the community exchange group. Below is the DingTalk group. Welcome everyone to leave a message.
