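Background:

Upgrading is an ongoing task. Earlier posts in this series covered Kubernetes 1.16.15 to 1.17.17 and Kubernetes 1.17.17 to 1.18.20. This time the cluster moves from 1.18.20 to 1.19.12.

Cluster configuration: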

| Hostname | OS | IP |
|---|---|---|
| k8s-vip | slb | 10.0.0.37 |
| k8s-master-01 | centos7 | 10.0.0.41 |
| k8s-master-02 | centos7 | 10.0.0.34 |
| k8s-master-03 | centos7 | 10.0.0.26 |
| k8s-node-01 | centos7 | 10.0.0.36 |
| k8s-node-02 | centos7 | 10.0.0.83 |
| k8s-node-03 | centos7 | 10.0.0.40 |
| k8s-node-04 | centos7 | 10.0.0.49 |
| k8s-node-05 | centos7 | 10.0.0.45 |
| k8s-node-06 | centos7 | 10.0.0.18 |

1. Consult the official documentation

Reference: https://kubernetes.io/zh/docs/tasks/administer-cluster/kubeadm/kubeadm-upgrade/

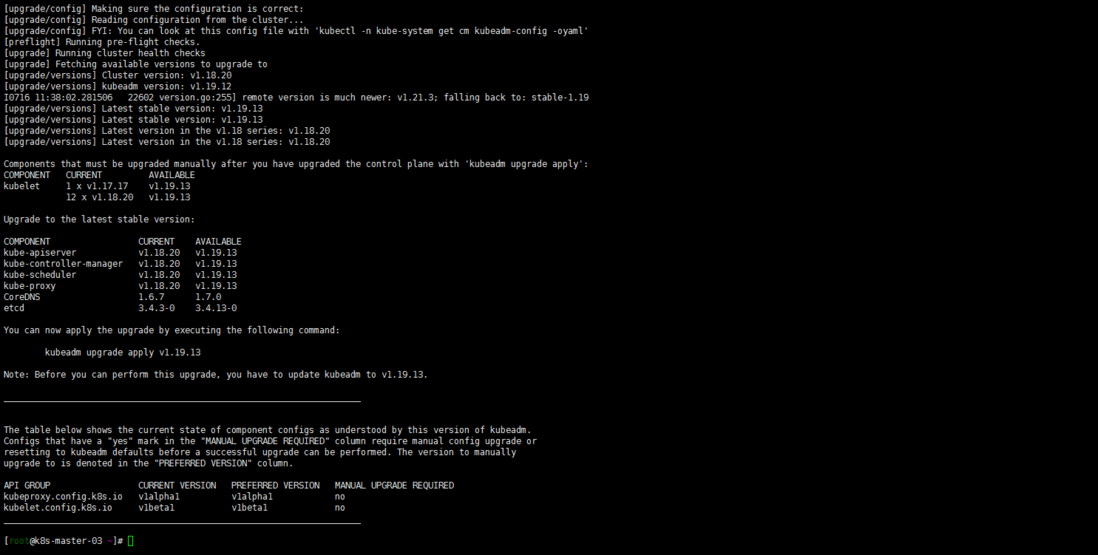

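2. Confirm the available versions and the upgrade plan

yum list --showduplicates kubeadm --disableexcludes=kubernetes

The command above shows that the latest 1.19 release at the time of writing is 1.19.12-0. There are three master nodes; following my usual habit, I upgrade k8s-master-03 first.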
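Picking the newest patch release out of a long `yum list` listing can be scripted. The following is a minimal sketch, not part of the original procedure: `latest_patch` is a hypothetical helper name, and it assumes the usual three-column `yum list` layout (package, version, repo) and a GNU `sort` that supports `-V`.

```shell
# Read `yum list --showduplicates kubeadm` output on stdin and print the
# highest package version that belongs to the given minor release.
# latest_patch is a hypothetical helper, not a yum or kubeadm command.
latest_patch() {
  # $1 is the minor release, e.g. "1.19"
  awk -v m="$1" '$1 ~ /^kubeadm\./ && index($2, m ".") == 1 { print $2 }' \
    | sort -V | tail -n 1
}

# Usage against a live repository (assumes the "kubernetes" yum repo is configured):
#   yum list --showduplicates kubeadm --disableexcludes=kubernetes | latest_patch 1.19
```

Version sort is used rather than plain lexical sort so that 1.19.12-0 ranks above 1.19.9-0.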

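3. Upgrade the control plane on k8s-master-03

As before, run the following on k8s-master-03:

1. Upgrade the Kubernetes packages with yum

yum install kubeadm-1.19.12-0 kubelet-1.19.12-0 kubectl-1.19.12-0 --disableexcludes=kubernetes

2. Drain the node and check whether the cluster can be upgraded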

This is also a good chance to review the drain command:

kubectl drain k8s-master-03 --ignore-daemonsets

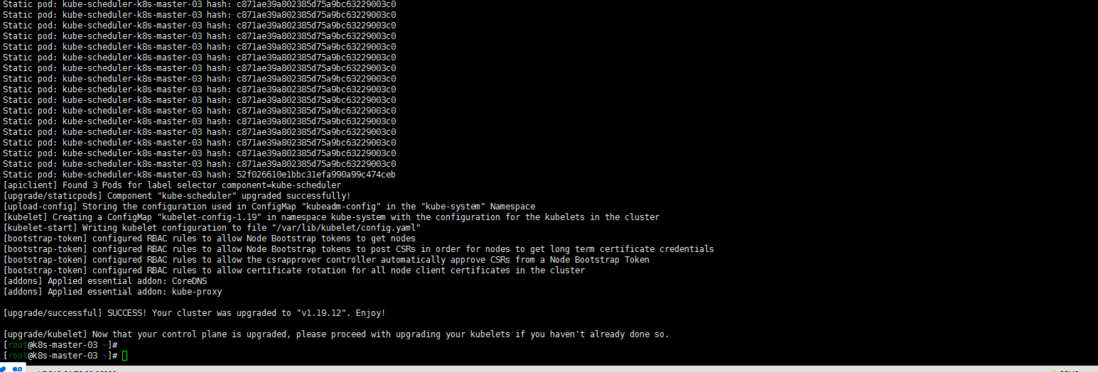

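sudo kubeadm upgrade plan

3. Upgrade to 1.19.12

kubeadm upgrade apply 1.19.12

Note: it is worth stressing that the worker nodes are all at 1.18.20 by now, so no component lags by more than one minor version this time.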
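The minor-version gap mentioned in the note can be computed before running the upgrade. This is a small sketch under my own assumptions: `minor_of` and `minor_skew` are hypothetical helper names, and the two version strings are the ones from this post.

```shell
# Extract the minor number from a Kubernetes version string.
# A leading "v" is stripped; a package suffix such as "-0" does not matter
# because only the second dot-separated field is used.
minor_of() {
  echo "$1" | sed 's/^v//' | cut -d. -f2
}

# Print how many minor versions the first version is ahead of the second.
minor_skew() {
  echo $(( $(minor_of "$1") - $(minor_of "$2") ))
}

# kubeadm does not support skipping minor versions, so a gap of 1 is the expected case.
skew=$(minor_skew 1.19.12 1.18.20)
if [ "$skew" -eq 1 ]; then
  echo "ok: single minor-version step"
else
  echo "unexpected gap: $skew minor versions"
fi
```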

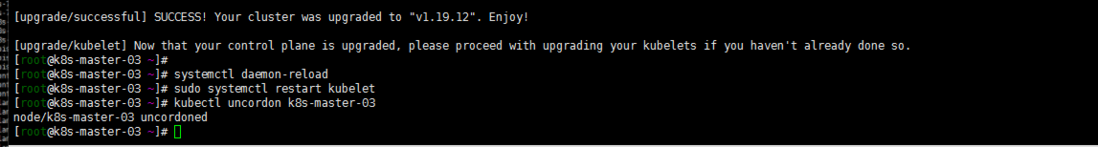

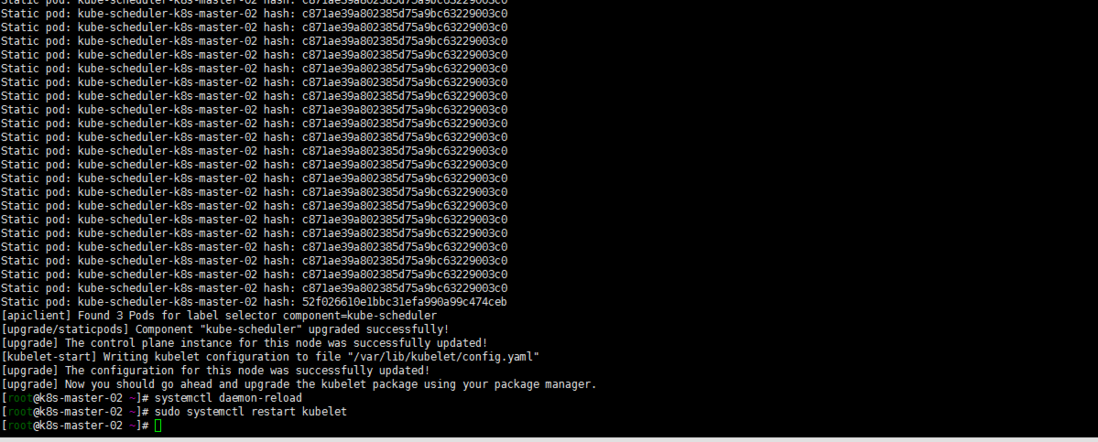

[root@k8s-master-03 ~]# sudo systemctl daemon-reload

[root@k8s-master-03 ~]# sudo systemctl restart kubelet

[root@k8s-master-03 ~]# kubectl uncordon k8s-master-03

node/k8s-master-03 uncordoned

4. Upgrade the other control plane nodes (k8s-master-01, k8s-master-02)

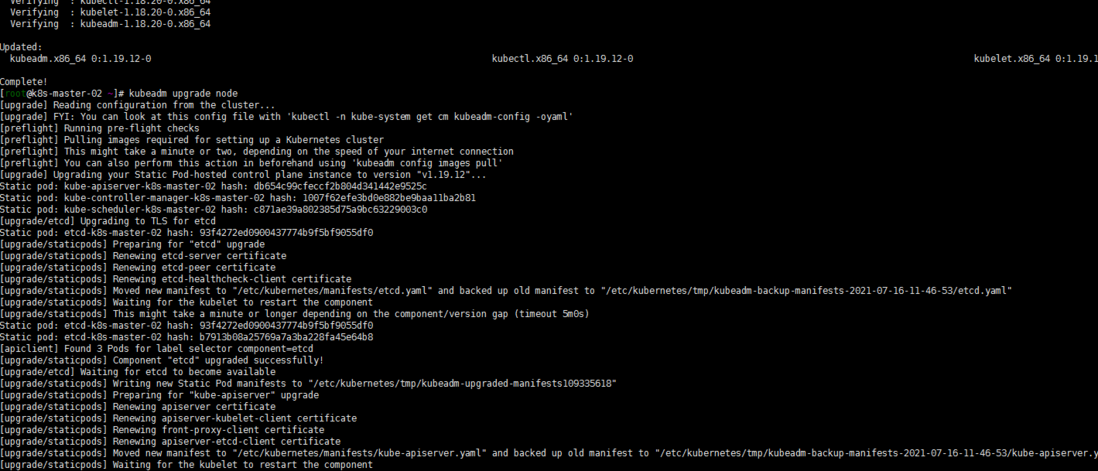

sudo yum install kubeadm-1.19.12-0 kubelet-1.19.12-0 kubectl-1.19.12-0 --disableexcludes=kubernetes

sudo kubeadm upgrade node

sudo systemctl daemon-reload

sudo systemctl restart kubelet

5. Upgrade the worker nodes

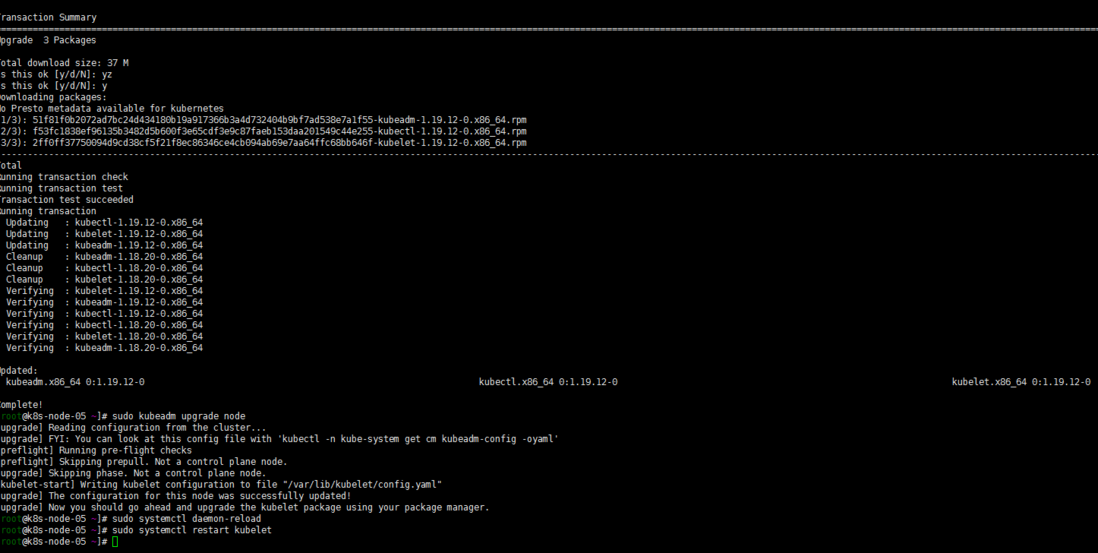

sudo yum install kubeadm-1.19.12-0 kubelet-1.19.12-0 kubectl-1.19.12-0 --disableexcludes=kubernetes

sudo kubeadm upgrade node

sudo systemctl daemon-reload

sudo systemctl restart kubelet

6. Verify the upgrade
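With ten nodes, checking the VERSION column by eye is easy to get wrong, so the output of `kubectl get nodes` can be filtered instead. A minimal sketch, assuming the default column layout (NAME, STATUS, ROLES, AGE, VERSION); `nodes_not_at` is a hypothetical helper name.

```shell
# Read `kubectl get nodes --no-headers` output on stdin and print every node
# whose VERSION column (the 5th) differs from the expected version.
# The exit status is non-zero if at least one node does not match.
nodes_not_at() {
  awk -v want="$1" '$5 != want { print $1, $5; bad = 1 } END { exit bad + 0 }'
}

# Usage against a live cluster:
#   kubectl get nodes --no-headers | nodes_not_at v1.19.12 && echo "all nodes upgraded"
```

An empty result with exit status 0 means every node reports the target version.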

kubectl get nodes

7. Other

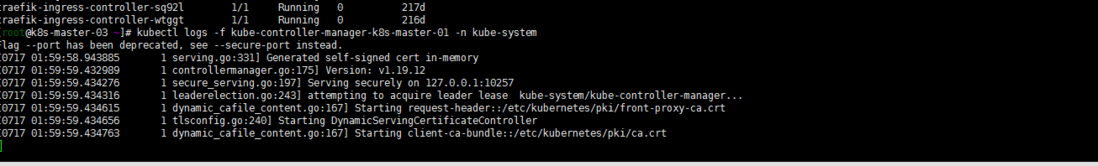

Take a quick look at the logs of the components in kube-system to confirm they are running normally.

kubectl logs -f kube-controller-manager-k8s-master-01 -n kube-system

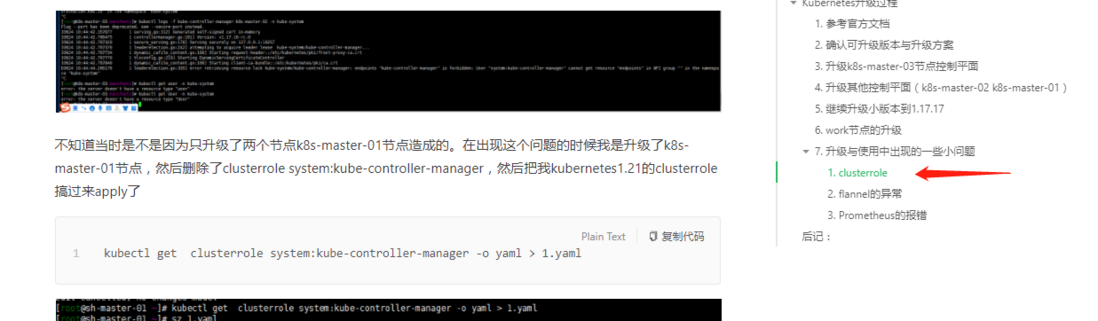
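Besides reading individual logs, a quick pass over pod status in kube-system can flag anything that did not come back after the upgrade. This is a sketch under the assumption of the default `kubectl get pods` columns (NAME, READY, STATUS, RESTARTS, AGE); `unhealthy_pods` is a hypothetical helper name, and it would also flag pods in the Completed state.

```shell
# Read `kubectl get pods -n kube-system --no-headers` output on stdin and
# print pods that are not Running or whose READY count is incomplete.
unhealthy_pods() {
  awk '{
    split($2, r, "/")   # READY column, e.g. "1/1"
    if ($3 != "Running" || r[1] != r[2]) print $1, $2, $3
  }'
}

# Usage against a live cluster:
#   kubectl get pods -n kube-system --no-headers | unhealthy_pods
```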

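At a glance nothing looks wrong, so I left it at that. The Prometheus issue is still open, though; I was already planning to install the mainline release and uninstall the old one. If you run into ClusterRole problems, see the earlier post: Kubernetes 1.16.15 to 1.17.17.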
