Recently, after Hongxing Erke donated 50 million yuan worth of supplies to Henan, I saw a lot of netizens moved to tears. An ordinary company donating 50 million might not stir this much empathy, but once you read up on Hongxing Erke's background, it is genuinely heartbreaking. Its revenue in 2020 was 2.8 billion yuan, yet it posted a loss of 200 million, and it was even reluctant to pay for a membership on its official Weibo account. Under those circumstances it still donated 50 million without hesitation, which really got to people.

Netizens also said that Hongxing Erke is like the older generation, who saved up every penny and dime and stored it carefully in an iron box. The moment they hear the motherland needs it, they bring out the box: here, take it all. And when asked for its most expensive shoes, it brings out a pair priced at 249.

Then I went to Hongxing Erke's official website to look at its shoes.

Well, after waiting 55 seconds, the website finally opened... (It seems to have gone unmaintained for a long time, which is sad. As a front-end developer, seeing this drove me crazy...)

On the weekend, I went to the nearest Hongxing Erke store and bought a pair of shoes for 136 yuan (really cheap and, most importantly, comfortable).

After bringing them home, I thought: Adidas and Nike shoes on the Poizon app have an online 360° view, so could I make one for Hongxing Erke? Consider it a technician's modest contribution.

Action

With this idea in mind, I got started right away. I roughly broke the work into the following steps:

1. Modeling

2. Use Three.js to create a scene

3. Import the model

4. Add the Three.js controls

Because I had learned some Three.js before, I was fairly confident about displaying the model once I had one. The most troublesome part is therefore the modeling, because we need to get a 3-dimensional object into the computer. For a 2-dimensional object this is simple: just take a picture with a camera. Viewing a 3-dimensional object on a computer is different. The extra dimension multiplies the amount of work, so I started reading up on how to build a model of a real object.

I went through a lot of material on building a shoe model. In summary, there are two approaches.

1. Photogrammetry: photos of the object are converted into a 3D model purely by algorithm. In graphics this is sometimes also referred to as monocular reconstruction.

2. Lidar scan: the object is scanned with lidar. A recent video also showed this method being used to scan out a point cloud.

Here is an outline I put together; most of the entries are foreign websites/tools.

At first, most search results mentioned 123D Catch, and I watched a lot of videos saying that it builds models quickly and realistically. After digging further, I found that it seems to have been merged into another product in 2017, and the resulting ReMake requires payment. For cost reasons I did not pursue it. (After all, this is just a demo.)

Later, I found an app called Polycam, whose finished results look very good.

But when I went to use it, I found that it requires a LiDAR scanner, which means an iPhone 12 Pro or a later model.

In the end I chose Reality Capture to create the model. It can synthesize a model from multiple photos. I watched some videos on Bilibili and its results looked good, but it only supports Windows and needs 8 GB of memory to run. So I dug out my 7-year-old Windows computer... and to my pleasant surprise, it still worked.

Modeling

Now for the main content. The protagonist is the pair of shoes I bought this time (the pair shown at the beginning).

Then we started shooting. First, I casually took a set of photos around the shoes, but the resulting model was far from satisfactory...

Next I tried shooting in front of a white screen to give the photos a clean background, but it still did not work: the application more often picked up the numbers in the background behind the shoes.

Finally... with Nanxi's help, the background of each photo was retouched to white.

The hard work paid off, and the final result was not bad. The basic point cloud model came out. (It looks pretty good, like the sci-fi tech in a movie.)

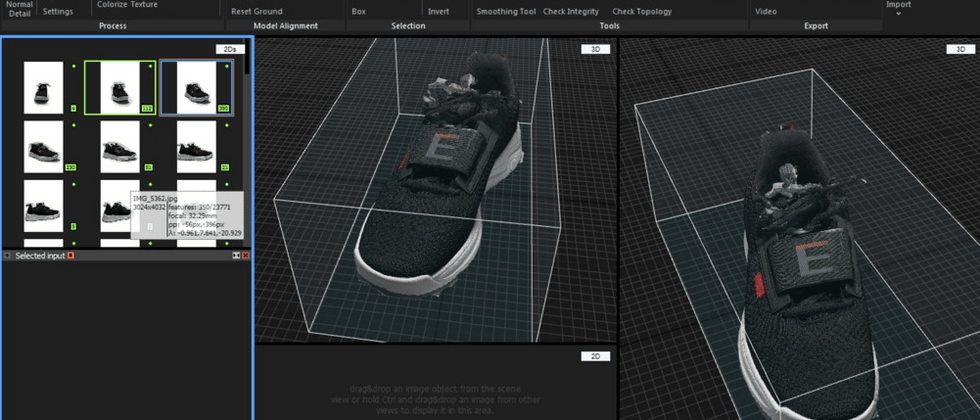

Here is what the model looks like. It is the best model I managed to produce in a day (though it is still slightly rough).

To get the model as close to perfect as possible, I spent a whole day testing. Because the shooting angle strongly affects model generation, I took about 500 photos in total, roughly 1 GB. (Early on I did not understand how to tune the model, so I tried a lot of approaches.)

Once we have the model, we can display it on the web. Three.js is used here. (Many readers may not be familiar with this field, so I will keep things fairly basic; experts, please bear with me.)

Build the app

The app mainly consists of three parts (building the scene, loading the model, adding the controls).

1. Build a 3D scene

First, we load Three.js.

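<script type="module">

import * as THREE from 'https://cdn.jsdelivr.net/npm/three@0.129.0/build/three.module.js';

import { GLTFLoader } from 'https://cdn.jsdelivr.net/npm/three@0.129.0/examples/jsm/loaders/GLTFLoader.js';

import { DRACOLoader } from 'https://cdn.jsdelivr.net/npm/three@0.129.0/examples/jsm/loaders/DRACOLoader.js';

import { OrbitControls } from 'https://cdn.jsdelivr.net/npm/three@0.129.0/examples/jsm/controls/OrbitControls.js';

</script>

Then create a WebGL renderer.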

const container = document.createElement( 'div' );

document.body.appendChild( container );

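let renderer = new THREE.WebGLRenderer( { antialias: true } );

renderer.setSize( window.innerWidth, window.innerHeight ); // Size the canvas to the window, matching the camera aspect ratio

container.appendChild( renderer.domElement );

Next, add a scene and a camera.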

let scene = new THREE.Scene();

The camera syntax is PerspectiveCamera(fov, aspect, near, far).
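To get a feel for the fov and aspect arguments, here is a small sketch in plain JavaScript (no Three.js needed) that estimates how much of the scene a perspective camera can see at a given distance. The helper name visibleSize is made up for illustration and is not part of Three.js; it assumes fov is the vertical field of view in degrees, which is how PerspectiveCamera interprets it, and the 16:9 viewport is also just an assumption.

```javascript
// Hypothetical helper (not a Three.js API): size of the visible area at a
// given distance in front of a perspective camera.
// fovDeg is the vertical field of view in degrees; aspect is width / height.
function visibleSize(fovDeg, aspect, distance) {
  const fovRad = (fovDeg * Math.PI) / 180;
  const height = 2 * distance * Math.tan(fovRad / 2);
  return { width: height * aspect, height };
}

// With a 45 degree fov and an assumed 16:9 viewport, a camera 10 units
// away sees a slice about 8.28 units tall and 14.73 units wide.
const size = visibleSize(45, 16 / 9, 10);
console.log(size.height.toFixed(2), size.width.toFixed(2));
```

A larger fov or a greater camera distance makes the visible slice bigger, so the model appears smaller on screen.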

// Set up a perspective camera

const camera = new THREE.PerspectiveCamera( 45, window.innerWidth / window.innerHeight, 0.25, 1000 );

// Set the camera position

camera.position.set( 0, 1.5, -30.0 );

Then render the scene through the camera with the WebGL renderer.

const render = () => renderer.render( scene, camera ); // Reused later by the loader callback and the controls

render();

2. Model loading

Since the exported model is in OBJ format and very large, I compressed it into gltf and glb formats. Three.js already provides a GLTF loader, so we can use it directly.

// Load the model

const gltfloader = new GLTFLoader();

const draco = new DRACOLoader();

draco.setDecoderPath('https://www.gstatic.com/draco/v1/decoders/');

gltfloader.setDRACOLoader(draco);

gltfloader.setPath('assets/obj4/');

gltfloader.load('er4-1.glb', function (gltf) {

  gltf.scene.scale.set(0.2, 0.2, 0.2); // Set the scale

  gltf.scene.rotation.set(-Math.PI / 2, 0, 0); // Set the orientation

  const Orbit = new THREE.Object3D();

  Orbit.add(gltf.scene);

  Orbit.rotation.set(0, Math.PI / 2, 0);

  scene.add(Orbit);

  render();

});

But if we open the page with only the code above, it will be completely dark, because we have not added any light yet. So let's add a light to illuminate our shoes.

// Set up the light

// AmbientLight lights every surface evenly and has no direction, so it needs no position

const ambientLight = new THREE.AmbientLight(0xffffff, 4);

scene.add(ambientLight);

Now we can see our shoes clearly, like seeing light in the dark. But at this point we cannot control the view with the mouse or touch gestures. We need the Three.js controls to change the angle from which we view the model.

3. Add controller

const controls = new OrbitControls( camera, renderer.domElement );

controls.addEventListener('change', render );

controls.minDistance = 2; // Limit zooming

controls.maxDistance = 10;

controls.target.set( 0, 0, 0 ); // Center of rotation

controls.update();

At this point we can look at our shoes from every angle.
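As a rough picture of what the controls are doing, the camera can be thought of as sitting on a sphere around the target: dragging changes two angles, and zooming changes the radius, which is kept between minDistance and maxDistance. The sketch below is plain JavaScript written under that assumption. It is not the OrbitControls source, and orbitPosition and its parameter names are made up for illustration.

```javascript
// Simplified orbit-camera math (an illustration, not the OrbitControls source).
// azimuth: angle around the vertical (Y) axis, in radians.
// polar: angle measured down from the +Y axis, in radians.
function orbitPosition(target, distance, azimuth, polar, minDistance, maxDistance) {
  // Keep the radius inside the allowed zoom range
  const r = Math.min(maxDistance, Math.max(minDistance, distance));
  const sinPolar = Math.sin(polar);
  return {
    x: target.x + r * sinPolar * Math.sin(azimuth),
    y: target.y + r * Math.cos(polar),
    z: target.z + r * sinPolar * Math.cos(azimuth),
  };
}

const target = { x: 0, y: 0, z: 0 };
// A requested distance of 30 is clamped to maxDistance = 10
const pos = orbitPosition(target, 30, 0, Math.PI / 2, 2, 10);
console.log(pos);
```

Under this model, a camera that starts about 30 units from the target, as in the position set earlier, would be expected to end up 10 units away once the distance limits are applied.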

That's it!

Online experience address: https://resume.mdedit.online/erke/

Open source address (including tools, running steps and actual demo): https://github.com/hua1995116/360-sneakers-viewer

Follow-up planning

Due to limited time (it took a whole weekend day), I still did not get a perfect model. I will keep exploring this area, and then look into whether the whole path from shooting to model display can be automated. In fact, once we have the model, AR shoe try-on is not far off. If you are interested or have better ideas and suggestions, feel free to get in touch with me.

Finally, many thanks to Nanxi, who put aside other plans to help with the shooting and post-processing and spent a whole day working on the model with me. (Shooting under limited conditions is really hard.)

And I hope Hongxing Erke becomes a long-lasting company, keeps innovating, makes more and better sportswear, and keeps the nationwide goodwill it enjoys at this moment.

Appendix

Here are several shooting tips I picked up; they are also provided officially.

1. Don't limit the number of images; RealityCapture can process any number of them.

2. Use high-resolution pictures.

3. Each point on the surface of the scene should be clearly visible in at least two high-quality images.

4. When taking pictures, move around the object in a circular manner.

5. The angle between consecutive shots should not exceed 30 degrees.

6. Start with photos of the entire subject, then move around it and focus on the details, keeping the scale similar between shots.

7. Complete the full circle. (Don't stop after going only halfway around.)
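Tips 4, 5, and 7 together amount to a simple plan: walk a full circle around the subject and take a shot at least every 30 degrees. A minimal sketch of that arithmetic in plain JavaScript follows. The function name shotAngles and the choice of evenly spaced shots are assumptions made for illustration, not something RealityCapture prescribes.

```javascript
// Hypothetical planning helper: evenly spaced shooting angles (in degrees)
// for one full circle, with consecutive shots at most maxStepDeg apart.
function shotAngles(maxStepDeg) {
  const count = Math.ceil(360 / maxStepDeg);
  const step = 360 / count;
  return Array.from({ length: count }, (_, i) => i * step);
}

const angles = shotAngles(30);
console.log(angles.length); // 12
console.log(angles.slice(0, 3)); // [ 0, 30, 60 ]
```

Twelve shots per ring is only the lower bound implied by the 30 degree rule. A smaller step, and additional rings at different heights, should give the software more overlap to work with.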

Looking back at the author's highly praised past articles, you may be able to gain more!

- 2021 front-end learning path book list: the road to self-growth

- Talk about the front-end watermark from cracking a design website (detailed tutorial)

- Takes you to unlock the mystery of "File Download"

- 10 kinds of cross-domain solutions (with ultimate big move)

Concluding remarks

Like, favorite, and comment: original writing is not easy, and your support encourages the author to create better articles.

Follow "Notes of the Autumn Wind", a front-end public account that focuses on front-end interviews, engineering, and open source.
