Author | Cao Guanglei (Fang Qiu)

Background

It is very common for workloads to be distributed across different zones, different hardware types, and even different clusters and cloud vendors. In the past, this generally meant splitting an application into multiple workloads (such as Deployments), which were then managed manually by the SRE team or through deep customization at the PaaS layer to support fine-grained management of multiple workloads per application.

Furthermore, application deployment comes with a variety of topology-spreading and elasticity requirements. The most common one is spreading Pods across one or more topology dimensions, for example:

- Spreading across nodes to avoid stacking Pods on the same node (improving disaster tolerance).

- Spreading across AZs (availability zones) (improving disaster tolerance).

- When spreading across zones, specifying the proportion of Pods deployed in each zone.

With the rapid adoption of cloud native technology in China and abroad, applications demand more and more elasticity. Public cloud vendors have launched serverless container services to support elastic deployment, such as Alibaba Cloud's Elastic Container Instance (ECI) and AWS Fargate. Taking ECI as an example, ECI can be connected to a Kubernetes cluster through Virtual Kubelet, and with the appropriate configuration, Pods can be scheduled to the ECI cluster behind the virtual node. Common elasticity requirements include:

- The application is first deployed to its own cluster and then to the elastic cluster when resources are insufficient. When scaling in, Pods on elastic nodes are removed first to save costs.

- Users plan a basic node pool and an elastic node pool themselves. The application requires a fixed number or proportion of Pods to be deployed in the basic node pool, and the rest are scaled out to the elastic node pool.

In response to these needs, OpenKruise added the WorkloadSpread feature in v0.10.0. It currently supports Deployment, ReplicaSet, and CloneSet workloads, managing the partitioned deployment and elastic scaling of the Pods they own. The following sections introduce WorkloadSpread's application scenarios and implementation principles in depth to help users better understand this feature.

Introduction to WorkloadSpread

Official documentation: see Link 1 at the end of the article.

In short, WorkloadSpread distributes a workload's Pods across different types of nodes according to user-defined rules, covering both the spreading and the elasticity scenarios described above.

Comparison of existing solutions

Below is a brief comparison with some existing solutions in the community.

Pod Topology Spread Constraints (see Link 2 at the end of the article)

Pod Topology Spread Constraints is a solution provided by the Kubernetes community that spreads Pods across the domains defined by a topology key. Once the user defines the constraints, the scheduler selects nodes that satisfy the distribution conditions.

Because PodTopologySpread aims for an even spread, it does not support configuring a custom number or ratio of Pods per domain, and scaling in can break the distribution. WorkloadSpread lets users customize the number of Pods in each subset and manages the scale-in order, so it avoids these shortcomings of PodTopologySpread in some scenarios.

UnitedDeployment (see Link 3 at the end of the article)

UnitedDeployment is a solution provided by the Kruise community that manages Pods in multiple regions by creating and managing multiple workloads.

UnitedDeployment supports spreading and elasticity requirements very well, but it is a brand-new workload type, so adoption and migration costs for users are relatively high. WorkloadSpread, by contrast, is a lightweight solution that only requires a simple configuration attached to an existing workload.

Application scenarios

Below are some application scenarios of WorkloadSpread with the corresponding configurations, to help you quickly understand what WorkloadSpread can do.

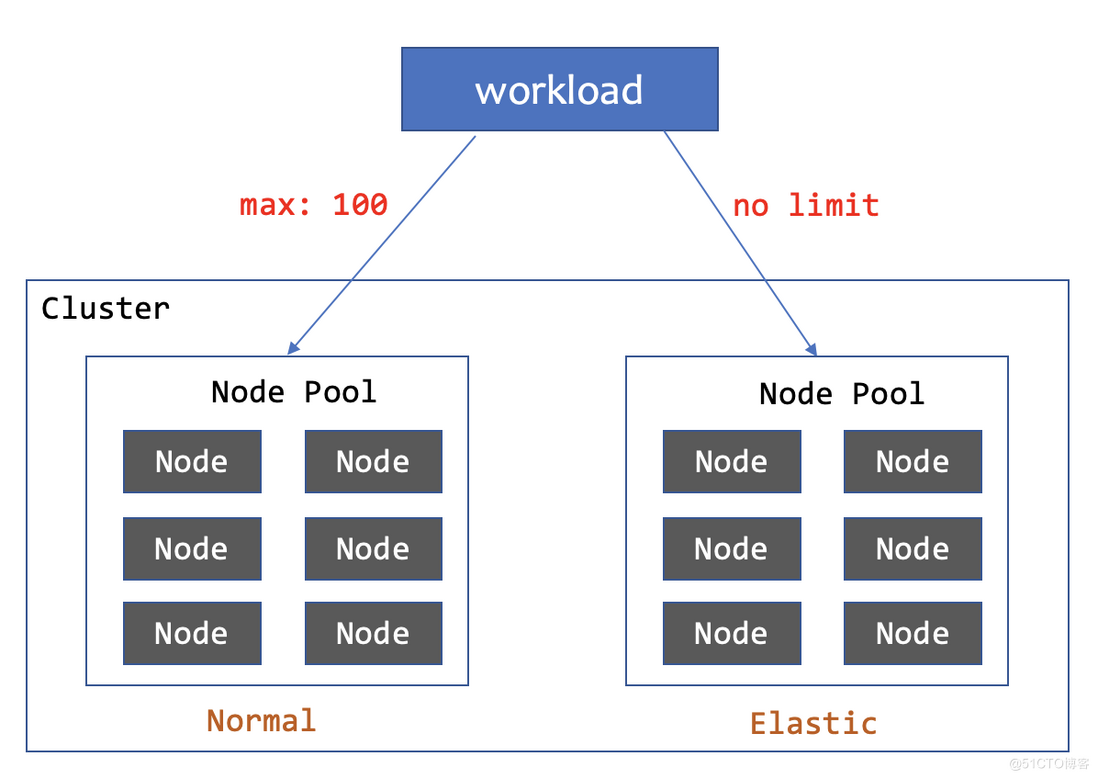

1. Deploy up to 100 replicas to the basic node pool and the rest to the elastic node pool

```yaml
subsets:
- name: subset-normal
  maxReplicas: 100
  requiredNodeSelectorTerm:
    matchExpressions:
    - key: app.deploy/zone
      operator: In
      values:
      - normal
- name: subset-elastic # unlimited replicas
  requiredNodeSelectorTerm:
    matchExpressions:
    - key: app.deploy/zone
      operator: In
      values:
      - elastic
```

When the workload has no more than 100 replicas, all Pods are deployed to the normal node pool; replicas beyond 100 are deployed to the elastic node pool. When scaling in, Pods on elastic nodes are deleted first.

Because WorkloadSpread does not intrude into the workload and only constrains how its Pods are distributed, it can be combined with HPA to adjust the replica count dynamically based on resource load. When traffic peaks, the extra Pods are automatically scheduled to elastic nodes; when traffic drops, resources in the elastic node pool are released first.
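The interplay between HPA and this WorkloadSpread can be sketched in a few lines of Python. This is a simplified model, not Kruise or HPA code: it uses the scaling rule from the Kubernetes HPA documentation, desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), ignoring HPA's tolerance and stabilization window, and the helper names `hpa_desired_replicas` and `split_replicas` are hypothetical.

```python
import math


def hpa_desired_replicas(current_replicas: int, current_metric: float,
                         target_metric: float) -> int:
    """HPA scaling rule: ceil(currentReplicas * currentMetric / targetMetric)."""
    return math.ceil(current_replicas * current_metric / target_metric)


def split_replicas(total: int, normal_max: int = 100) -> dict:
    """Hypothetical helper: subset-normal is filled first, overflow goes to subset-elastic."""
    normal = min(total, normal_max)
    return {"subset-normal": normal, "subset-elastic": total - normal}


# Off-peak: 80 replicas fit entirely in the normal node pool.
print(split_replicas(80))          # {'subset-normal': 80, 'subset-elastic': 0}

# Peak: average CPU at 90% against a 60% target scales 100 replicas to 150.
peak = hpa_desired_replicas(100, 90, 60)
print(peak, split_replicas(peak))  # 150 {'subset-normal': 100, 'subset-elastic': 50}
```

When load drops and HPA scales back to 100 replicas, the 50 Pods in subset-elastic are the ones removed, since WorkloadSpread gives Pods in the lower-priority subset a lower deletion cost.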

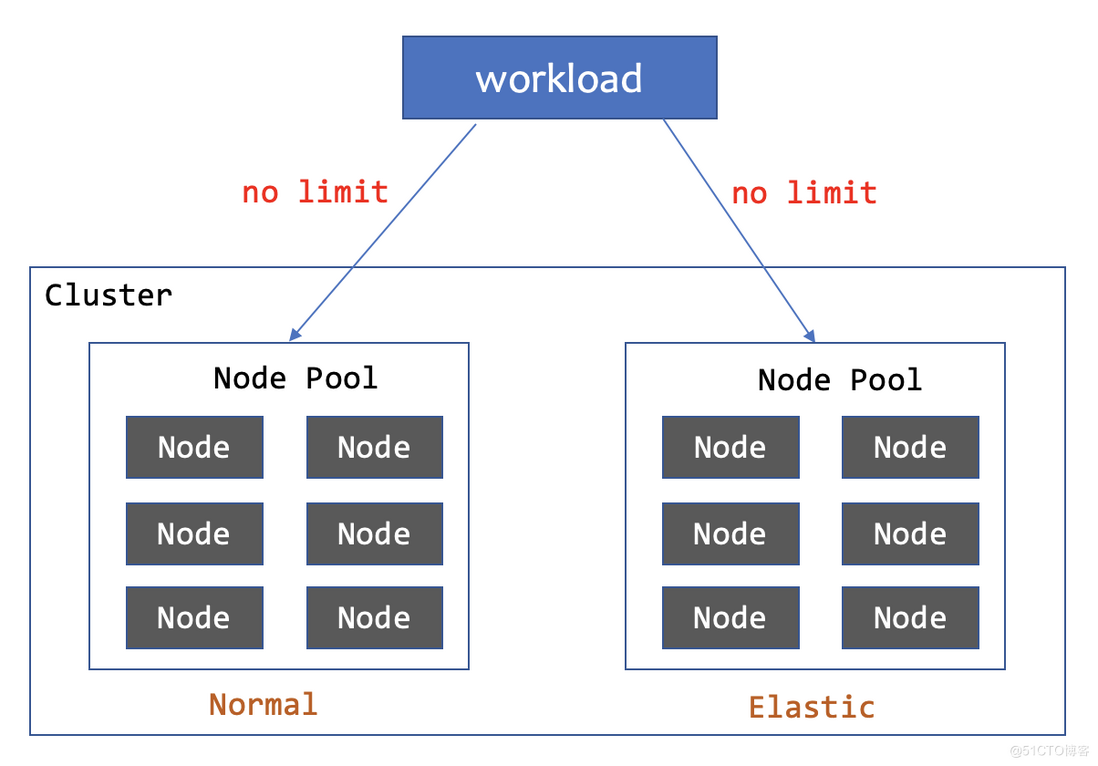

2. Deploy to the basic node pool first, and then deploy to the elastic resource pool if resources are insufficient

```yaml
scheduleStrategy:
  type: Adaptive
  adaptive:
    rescheduleCriticalSeconds: 30
    disableSimulationSchedule: false
subsets:
- name: subset-normal # unlimited replicas
  requiredNodeSelectorTerm:
    matchExpressions:
    - key: app.deploy/zone
      operator: In
      values:
      - normal
- name: subset-elastic # unlimited replicas
  requiredNodeSelectorTerm:
    matchExpressions:
    - key: app.deploy/zone
      operator: In
      values:
      - elastic
```

Neither subset limits the number of replicas, and the simulated scheduling and rescheduling capabilities of the Adaptive scheduling strategy are enabled. As a result, Pods are preferentially deployed to the normal node pool. When normal resources are insufficient, the webhook selects elastic nodes through simulated scheduling. When a Pod in the normal node pool stays Pending for longer than the 30s threshold, the WorkloadSpread controller deletes it to trigger recreation, and the new Pod is scheduled to the elastic node pool. When scaling in, Pods on elastic nodes are removed first, reducing costs for users.

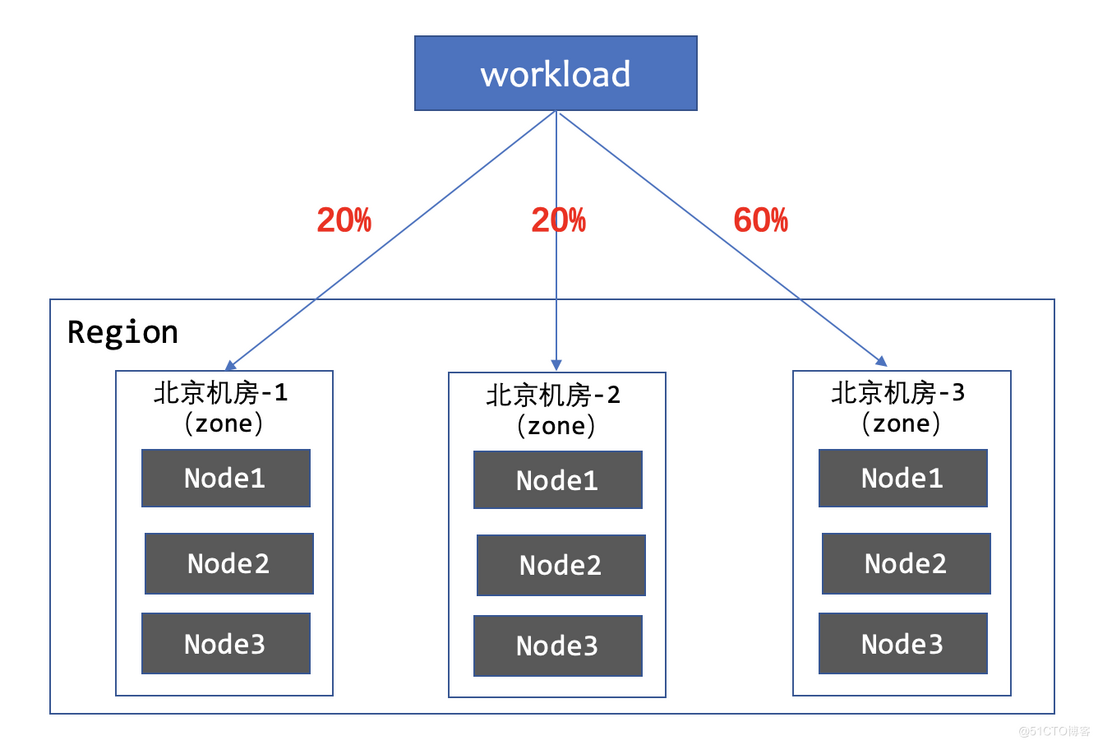

3. Spread across 3 zones in a 1:1:3 ratio

```yaml
subsets:
- name: subset-a
  maxReplicas: 20%
  requiredNodeSelectorTerm:
    matchExpressions:
    - key: topology.kubernetes.io/zone
      operator: In
      values:
      - zone-a
- name: subset-b
  maxReplicas: 20%
  requiredNodeSelectorTerm:
    matchExpressions:
    - key: topology.kubernetes.io/zone
      operator: In
      values:
      - zone-b
- name: subset-c
  maxReplicas: 60%
  requiredNodeSelectorTerm:
    matchExpressions:
    - key: topology.kubernetes.io/zone
      operator: In
      values:
      - zone-c
```

Based on the actual situation of each zone, the workload is spread in a 1:1:3 ratio. WorkloadSpread ensures that the workload stays distributed according to the defined ratio as it scales out and in.
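Percentage limits have to be resolved against the current replica count before subsets can be filled. The Python sketch below shows one way to do that; the round-up rule and the helper names `resolve_max_replicas` and `distribute` are assumptions for illustration, not Kruise's actual code, so small replica counts may be split differently in practice.

```python
import math


def resolve_max_replicas(max_replicas, total_replicas: int) -> int:
    """Turn an int or an 'NN%' string into an absolute limit.

    Assumption: percentages are rounded up; the real rounding rule may differ.
    """
    if isinstance(max_replicas, str) and max_replicas.endswith("%"):
        return math.ceil(total_replicas * int(max_replicas[:-1]) / 100)
    return int(max_replicas)


def distribute(subsets, total_replicas: int) -> dict:
    """Fill subsets in definition order, each up to its resolved limit."""
    result, remaining = {}, total_replicas
    for name, max_replicas in subsets:
        placed = min(resolve_max_replicas(max_replicas, total_replicas), remaining)
        result[name] = placed
        remaining -= placed
    return result


SUBSETS = [("subset-a", "20%"), ("subset-b", "20%"), ("subset-c", "60%")]
print(distribute(SUBSETS, 10))  # {'subset-a': 2, 'subset-b': 2, 'subset-c': 6}
print(distribute(SUBSETS, 7))   # {'subset-a': 2, 'subset-b': 2, 'subset-c': 3}
```

With 10 replicas the 1:1:3 ratio is exact; with 7, rounding up gives the earlier subsets a slightly larger share, which is why the ratio is best read as an upper bound per subset.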

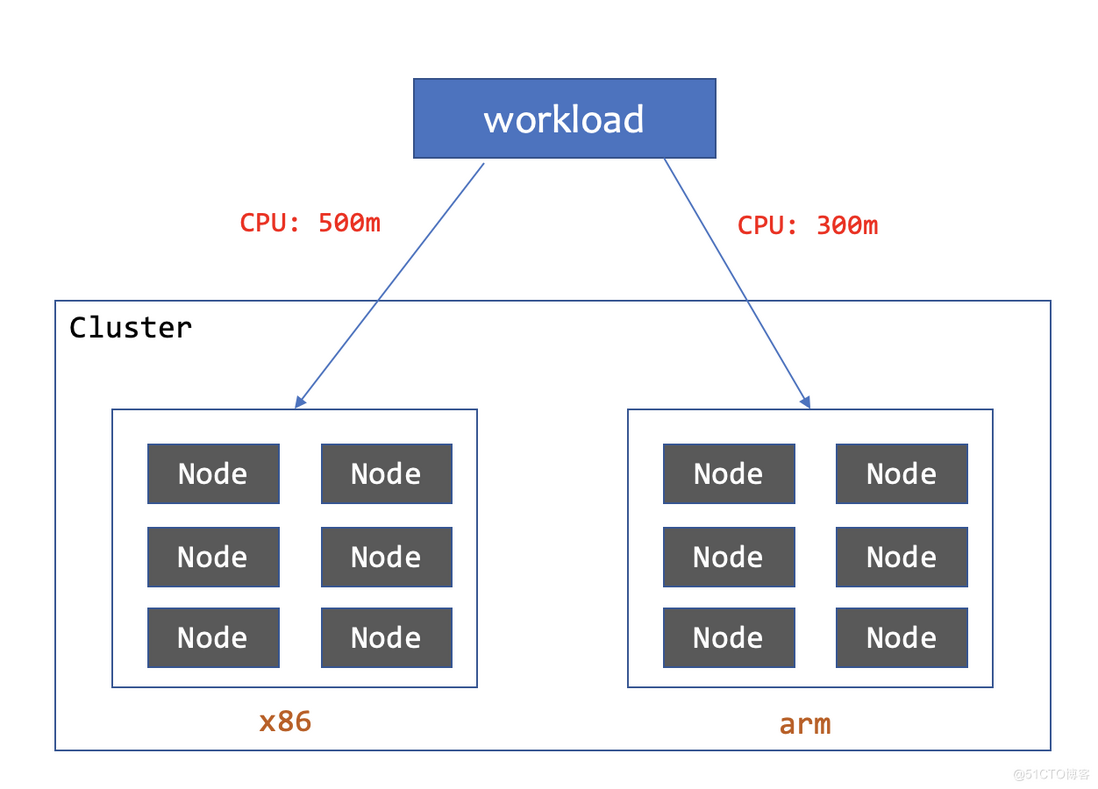

4. Configure different resource quotas for the workload on different CPU architectures

The nodes across which the workload is distributed may have different hardware configurations, CPU architectures, etc., which may require separate Pod configurations for different subsets. These configurations can be metadata such as labels and annotations, or the resource quotas, environment variables, etc. of the Pod's containers.

```yaml
subsets:
- name: subset-x86-arch
  # maxReplicas...
  # requiredNodeSelectorTerm...
  patch:
    metadata:
      labels:
        resource.cpu/arch: x86
    spec:
      containers:
      - name: main
        resources:
          limits:
            cpu: "500m"
            memory: "800Mi"
- name: subset-arm-arch
  # maxReplicas...
  # requiredNodeSelectorTerm...
  patch:
    metadata:
      labels:
        resource.cpu/arch: arm
    spec:
      containers:
      - name: main
        resources:
          limits:
            cpu: "300m"
            memory: "600Mi"
```

In the example above, different labels and container resources are patched onto the Pods of the two subsets, which allows more fine-grained management of the Pods. When workload Pods are distributed on nodes with different CPU architectures, configuring different resource quotas makes better use of the hardware.
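How a subset `patch` combines with the Pod template can be approximated with a simplified merge. The Python sketch below assumes strategic-merge-style semantics (maps merged key by key, the `containers` list merged by container `name`); `merge_patch` is a hypothetical helper, not the Kubernetes strategic merge patch implementation, which supports many more list-merge rules.

```python
import copy


def merge_patch(base: dict, patch: dict) -> dict:
    """Simplified strategic-merge-style patch; returns a new dict, base is untouched."""
    result = copy.deepcopy(base)
    for key, value in patch.items():
        if key == "containers" and isinstance(value, list):
            merged = result.get(key, [])
            for container in value:
                # Containers are matched by name; unmatched ones are appended.
                idx = next((i for i, c in enumerate(merged)
                            if c["name"] == container["name"]), None)
                if idx is None:
                    merged.append(copy.deepcopy(container))
                else:
                    merged[idx] = merge_patch(merged[idx], container)
            result[key] = merged
        elif isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = merge_patch(result[key], value)  # merge nested maps
        else:
            result[key] = copy.deepcopy(value)  # scalars and other lists replace
    return result


pod = {
    "metadata": {"labels": {"app": "demo"}},
    "spec": {"containers": [{"name": "main", "image": "demo:v1",
                             "resources": {"limits": {"cpu": "1", "memory": "1Gi"}}}]},
}
arm_patch = {
    "metadata": {"labels": {"resource.cpu/arch": "arm"}},
    "spec": {"containers": [{"name": "main",
                             "resources": {"limits": {"cpu": "300m", "memory": "600Mi"}}}]},
}
patched = merge_patch(pod, arm_patch)
print(patched["metadata"]["labels"])  # {'app': 'demo', 'resource.cpu/arch': 'arm'}
print(patched["spec"]["containers"][0]["resources"])  # {'limits': {'cpu': '300m', 'memory': '600Mi'}}
```

Fields the patch does not mention, such as the container image, are preserved, which is what makes a per-subset patch safe to apply on top of a shared Pod template.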

Implementation principles

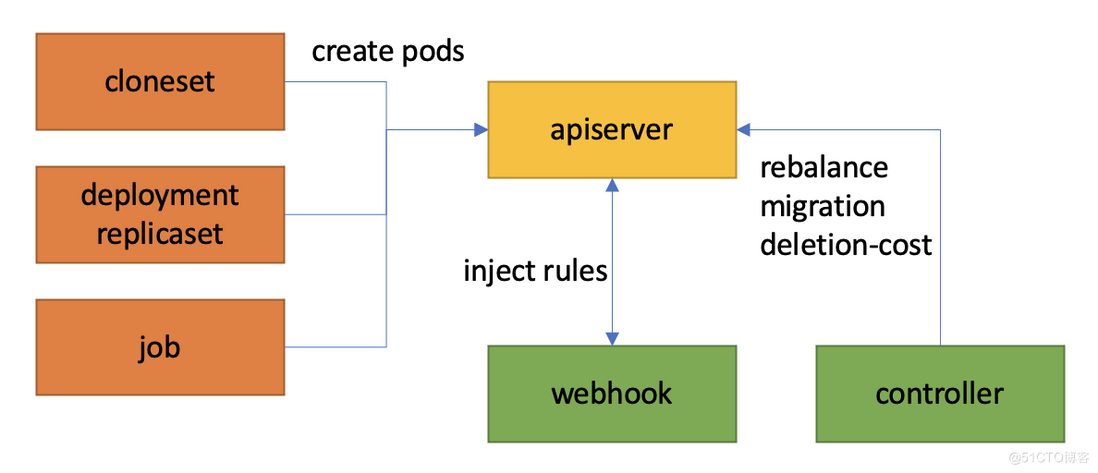

WorkloadSpread is a purely bypass (non-intrusive) elasticity and topology control solution. Users only need to create a corresponding WorkloadSpread for their Deployment/CloneSet/Job objects; no changes to the workload are required, and using the workload incurs no additional cost.

1. Subset priority and replica count control

Multiple subsets are defined in a WorkloadSpread, and each subset represents a logical domain. Users can freely divide subsets by node configuration, hardware type, zone, etc. In particular, subsets have priorities:

- Priority runs from high to low in the order the subsets are defined.

- Higher-priority subsets are scaled out first; lower-priority subsets are scaled in first.
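The two priority rules can be expressed as a small simulation. This is a hypothetical Python sketch, not Kruise code: `None` stands for a subset without maxReplicas, and in the real system the scale-in order is enforced indirectly through deletion cost, as described in the next part.

```python
from typing import Dict, List, Optional


def pick_subset_for_new_pod(order: List[str], limits: Dict[str, Optional[int]],
                            counts: Dict[str, int]) -> Optional[str]:
    """Scale out: the highest-priority subset that still has room (None = unlimited)."""
    for name in order:
        limit = limits[name]
        if limit is None or counts[name] < limit:
            return name
    return None


def pick_subset_for_removal(order: List[str], counts: Dict[str, int]) -> Optional[str]:
    """Scale in: the lowest-priority subset that still has Pods."""
    for name in reversed(order):
        if counts[name] > 0:
            return name
    return None


order = ["subset-a", "subset-b"]           # definition order = priority order
limits = {"subset-a": 2, "subset-b": None}
counts = {"subset-a": 0, "subset-b": 0}

for _ in range(5):                         # scale out to 5 replicas
    counts[pick_subset_for_new_pod(order, limits, counts)] += 1
print(counts)  # {'subset-a': 2, 'subset-b': 3}

for _ in range(4):                         # scale in by 4 replicas
    counts[pick_subset_for_removal(order, counts)] -= 1
print(counts)  # {'subset-a': 1, 'subset-b': 0}
```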

2. How the scale-in priority is controlled

In principle, a bypass solution like WorkloadSpread cannot interfere with the scale-in order logic inside the workload controller.

However, this problem has recently been solved: after persistent feedback from many users, Kubernetes 1.21 added support in ReplicaSet (and therefore Deployment) for specifying a Pod's "deletion cost" via the `controller.kubernetes.io/pod-deletion-cost` annotation. The higher a Pod's deletion cost, the lower its deletion priority.

Kruise has supported the deletion-cost feature in CloneSet since version v0.9.0.

Therefore, the WorkloadSpread controller controls the workload's scale-in order by adjusting the deletion cost of the Pods in each subset.
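The rule that a higher deletion cost means a later deletion can be illustrated with a simplified ranking function. This sketch keeps only three of the tie-breakers listed in the Kubernetes ReplicaSet documentation (pending Pods first, then lower `controller.kubernetes.io/pod-deletion-cost` first, then newer Pods first); the real controller applies additional criteria such as readiness and the number of replicas per node. The `Pod` dataclass and its fields are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List

DELETION_COST = "controller.kubernetes.io/pod-deletion-cost"


@dataclass
class Pod:
    name: str
    pending: bool
    created_at: int  # hypothetical creation timestamp in seconds
    annotations: Dict[str, str] = field(default_factory=dict)


def deletion_cost(pod: Pod) -> int:
    # The annotation holds an integer as a string; a missing annotation counts as 0.
    return int(pod.annotations.get(DELETION_COST, "0"))


def scale_in_order(pods: List[Pod]) -> List[str]:
    """Pod names in the order a ReplicaSet would remove them (simplified)."""
    ranked = sorted(pods, key=lambda p: (
        not p.pending,      # pending Pods go first (False sorts before True)
        deletion_cost(p),   # then the lowest deletion cost
        -p.created_at,      # then the newest Pod
    ))
    return [p.name for p in ranked]


pods = [
    Pod("a-1", pending=False, created_at=100, annotations={DELETION_COST: "200"}),
    Pod("b-1", pending=False, created_at=300, annotations={DELETION_COST: "100"}),
    Pod("a-2", pending=False, created_at=200, annotations={DELETION_COST: "200"}),
    Pod("b-2", pending=True, created_at=400, annotations={DELETION_COST: "100"}),
]
print(scale_in_order(pods))  # ['b-2', 'b-1', 'a-2', 'a-1']
```

Because WorkloadSpread only writes annotations, the workload controller keeps full ownership of the actual deletion; the annotation just biases its ranking.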

For example, suppose the CloneSet associated with the following WorkloadSpread has 10 replicas:

```yaml
subsets:
- name: subset-a
  maxReplicas: 8
- name: subset-b # unlimited replicas
```

The deletion costs and the deletion order are then:

- 2 Pods in subset-b, with a deletion cost of 100 (scaled in first)

- 8 Pods in subset-a, with a deletion cost of 200 (scaled in last)

If the user then modifies the WorkloadSpread to:

```yaml
subsets:
- name: subset-a
  maxReplicas: 5 # 8 - 3
- name: subset-b
```

the WorkloadSpread controller changes the deletion cost of three Pods in subset-a from 200 to -100:

- 3 Pods in subset-a, with a deletion cost of -100 (scaled in first)

- 2 Pods in subset-b, with a deletion cost of 100 (scaled in second)

- 5 Pods in subset-a, with a deletion cost of 200 (scaled in last)

This way, Pods that exceed their subset's replica limit are scaled in first. Beyond that, scale-in still proceeds from the last subset to the first, in the order the subsets are defined.
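The cost values in this example can be reproduced with a short function. The formula below (100 × (number of subsets − subset index) for Pods within the limit, −100 for Pods above it) is an assumption chosen to match the numbers in the text, not a quote of Kruise's controller, and the rule that the newest Pods count as the excess is likewise hypothetical.

```python
from collections import Counter
from typing import Dict, List, Optional, Tuple

POSITIVE_COST = 100   # assumed cost unit; reproduces the 200/100 values in the example
NEGATIVE_COST = -100  # assumed cost for Pods above their subset's maxReplicas


def assign_deletion_costs(subsets: List[Tuple[str, Optional[int]]],
                          pods_by_subset: Dict[str, List[str]]) -> Dict[str, int]:
    """Return a Pod name -> deletion-cost mapping.

    subsets: (name, maxReplicas) in definition order; None means unlimited.
    pods_by_subset: Pod names per subset, oldest first. Hypothetical rule: the
    newest Pods beyond maxReplicas are the ones marked as excess.
    """
    costs = {}
    for index, (name, max_replicas) in enumerate(subsets):
        pods = pods_by_subset.get(name, [])
        keep = len(pods) if max_replicas is None else max_replicas
        priority_cost = POSITIVE_COST * (len(subsets) - index)  # earlier subset, higher cost
        for position, pod in enumerate(pods):
            costs[pod] = priority_cost if position < keep else NEGATIVE_COST
    return costs


pods = {"subset-a": [f"a-{i}" for i in range(8)], "subset-b": ["b-0", "b-1"]}
before = assign_deletion_costs([("subset-a", 8), ("subset-b", None)], pods)
after = assign_deletion_costs([("subset-a", 5), ("subset-b", None)], pods)
print(dict(Counter(before.values())))  # {200: 8, 100: 2}
print(dict(Counter(after.values())))   # {200: 5, -100: 3, 100: 2}
```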

3. Replica count control

A key problem in implementing WorkloadSpread is ensuring that the webhook injects subset rules into Pods strictly according to subset priority and each subset's maxReplicas.

3.1 Solving concurrency consistency

The WorkloadSpread status contains an entry for each subset. Its missingReplicas field indicates how many more Pods the subset still needs, and -1 means there is no limit (the subset has no maxReplicas configured).

```yaml
spec:
  subsets:
  - name: subset-a
    maxReplicas: 1
  - name: subset-b
    # ...
status:
  subsetStatuses:
  - name: subset-a
    missingReplicas: 1
  - name: subset-b
    missingReplicas: -1
  # ...
```

When the webhook receives a Pod create request:

- Following the order of subsetStatuses, find the first suitable subset whose missingReplicas is greater than 0 or equal to -1.

- Once a suitable subset is found, if its missingReplicas is greater than 0, decrement it by 1 and try to update the WorkloadSpread status.

- If the update succeeds, inject the rules defined by the subset into the Pod.

- If the update fails, re-fetch the WorkloadSpread to obtain the latest status and return to the first step (up to a certain number of retries).

Similarly, when the webhook receives a Pod delete/eviction request, it increments the corresponding subset's missingReplicas by 1 and updates the status.
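The create-request steps above amount to a compare-and-swap retry loop. The Python sketch below models it against an in-memory store; `FakeAPIServer`, `RacyAPIServer`, and `allocate_subset` are hypothetical stand-ins (the real webhook is written in Go and talks to the apiserver), and the creatingPods bookkeeping described later is omitted.

```python
import copy
from dataclasses import dataclass, field
from typing import List, Optional


class ConflictError(Exception):
    """Raised when an update carries a stale resourceVersion."""


@dataclass
class SubsetStatus:
    name: str
    missing_replicas: int  # -1 means unlimited (no maxReplicas)


@dataclass
class WorkloadSpreadStatus:
    resource_version: int
    subsets: List[SubsetStatus] = field(default_factory=list)


class FakeAPIServer:
    """Hypothetical in-memory apiserver with compare-and-swap updates."""

    def __init__(self, status: WorkloadSpreadStatus):
        self._status = copy.deepcopy(status)

    def get(self) -> WorkloadSpreadStatus:
        return copy.deepcopy(self._status)

    def update(self, new: WorkloadSpreadStatus) -> None:
        if new.resource_version != self._status.resource_version:
            raise ConflictError()
        self._status = copy.deepcopy(new)
        self._status.resource_version += 1


class RacyAPIServer(FakeAPIServer):
    """Simulates another webhook replica winning the race on the first update."""

    def __init__(self, status: WorkloadSpreadStatus):
        super().__init__(status)
        self._raced = False

    def update(self, new: WorkloadSpreadStatus) -> None:
        if not self._raced:
            self._raced = True
            other = self.get()
            other.subsets[0].missing_replicas -= 1
            super().update(other)  # the competing write lands first
        super().update(new)


def allocate_subset(api: FakeAPIServer, max_retries: int = 5) -> Optional[str]:
    """Choose a subset for a new Pod with optimistic locking and bounded retries."""
    for _ in range(max_retries):
        ws = api.get()  # every attempt starts from the latest status
        chosen = next((s for s in ws.subsets
                       if s.missing_replicas > 0 or s.missing_replicas == -1), None)
        if chosen is None:
            return None  # every limited subset is full and none is unlimited
        if chosen.missing_replicas == -1:
            return chosen.name  # unlimited: nothing to decrement in this sketch
        chosen.missing_replicas -= 1
        try:
            api.update(ws)
            return chosen.name  # success: inject this subset's rules into the Pod
        except ConflictError:
            continue  # stale read: fetch again and retry
    raise RuntimeError("gave up after repeated conflicts")


api = RacyAPIServer(WorkloadSpreadStatus(
    resource_version=1,
    subsets=[SubsetStatus("subset-a", 2), SubsetStatus("subset-b", -1)],
))
print(allocate_subset(api))                   # subset-a (after one conflict and a retry)
print(api.get().subsets[0].missing_replicas)  # 0
print(allocate_subset(api))                   # subset-b
```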

We use optimistic locking to resolve update conflicts. However, optimistic locking alone is not appropriate here: when a workload scales out, it creates a large number of Pods in parallel, so the apiserver sends a burst of Pod create requests to the webhook, and processing them in parallel causes many conflicts. Optimistic locking is a poor fit when conflicts are frequent, because the retries needed to resolve them are expensive. We therefore also added a per-WorkloadSpread mutex that turns parallel processing into serial processing.

The mutex introduces a new problem: after the current goroutine acquires the lock, the WorkloadSpread it reads from the informer is very likely stale, which still leads to conflicts. Therefore, after a goroutine updates the WorkloadSpread, it caches the latest object before releasing the lock, so the next goroutine to acquire the lock can read the latest WorkloadSpread directly from the cache. When multiple webhook instances are running, optimistic locking is still needed to resolve conflicts between them.
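The mutex-plus-cache idea can be sketched with Python threads standing in for goroutines. `Store`, `Webhook`, and `admit_pod` are hypothetical names, and the store's compare-and-swap on `rv` stands in for the apiserver's resourceVersion check; the point is that, with one webhook instance, serializing requests and reusing the object returned by the last successful update avoids conflicts entirely.

```python
import copy
import threading


class ConflictError(Exception):
    """Raised when an update carries a stale resource version."""


class Store:
    """Hypothetical apiserver stand-in with compare-and-swap on 'rv'."""

    def __init__(self, missing: int):
        self._obj = {"rv": 1, "missing": missing}
        self._lock = threading.Lock()
        self.conflicts = 0

    def get(self) -> dict:
        with self._lock:
            return copy.deepcopy(self._obj)

    def update(self, obj: dict) -> dict:
        with self._lock:
            if obj["rv"] != self._obj["rv"]:
                self.conflicts += 1
                raise ConflictError()
            self._obj = {"rv": obj["rv"] + 1, "missing": obj["missing"]}
            return copy.deepcopy(self._obj)


class Webhook:
    """Serializes admissions per WorkloadSpread and caches its own latest write."""

    def __init__(self, store: Store, informer_view: dict):
        self.store = store
        self.informer_view = informer_view  # may lag behind, like an informer cache
        self._locks = {}                    # one mutex per WorkloadSpread key
        self._locks_guard = threading.Lock()
        self._latest = {}                   # key -> object returned by our last update

    def _lock_for(self, key: str) -> threading.Lock:
        with self._locks_guard:
            return self._locks.setdefault(key, threading.Lock())

    def admit_pod(self, key: str, max_retries: int = 5) -> bool:
        with self._lock_for(key):  # parallel requests become serial
            for _ in range(max_retries):
                # Prefer the object cached after the previous update over the informer.
                obj = copy.deepcopy(self._latest.get(key, self.informer_view))
                if obj["missing"] <= 0:
                    return False
                obj["missing"] -= 1
                try:
                    self._latest[key] = self.store.update(obj)
                    return True
                except ConflictError:
                    # Another webhook instance wrote first: optimistic locking still applies.
                    self._latest[key] = self.store.get()
            return False


store = Store(missing=10)
webhook = Webhook(store, informer_view=store.get())
results = []
threads = [threading.Thread(target=lambda: results.append(webhook.admit_pod("default/ws-demo")))
           for _ in range(20)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(results.count(True), store.conflicts)  # 10 0
```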

3.2 Solving data consistency

So, can missingReplicas be maintained by the webhook alone? The answer is no, because:

- A Pod create request received by the webhook may not ultimately succeed (for example, the Pod is invalid, or a later validation step such as quota fails).

- A Pod delete/eviction request received by the webhook may not ultimately succeed (for example, it is later blocked by a PDB, PUB, etc.).

- In Kubernetes, there are always ways for a Pod to terminate or disappear without passing through the webhook (for example, its phase becomes Succeeded/Failed, or etcd data is lost).

- In addition, it would not conform to the final-state-oriented (declarative) design philosophy.

Therefore, the WorkloadSpread status is maintained jointly by the webhook and the controller:

- The webhook intercepts Pod create/delete/eviction requests and modifies missingReplicas.

- Meanwhile, the controller's reconcile loop fetches all Pods of the current workload, classifies them by subset, and updates missingReplicas to the number actually missing.

- Because the Pods the controller reads from the informer are likely to lag behind, we also added a creatingPods map to the status. When the webhook injects a Pod, it records an entry in the map whose key is pod.name and whose value is a timestamp, and the controller combines this map with the informer data to maintain the true missingReplicas. Similarly, a deletingPods map records Pod delete/eviction events.
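How the controller might combine informer data with the two maps can be sketched as follows. This is an illustration under explicit assumptions, not Kruise's reconcile logic: the 60-second grace period, the clamp at 0, and the function name `reconcile_missing_replicas` are all hypothetical.

```python
from typing import Dict, List, Optional

# Hypothetical grace period: older records are assumed to have either shown up
# in (or vanished from) the informer by now, so they no longer adjust the count.
GRACE_SECONDS = 60


def reconcile_missing_replicas(max_replicas: Optional[int],
                               informer_pods: List[str],
                               creating_pods: Dict[str, float],
                               deleting_pods: Dict[str, float],
                               now: float) -> int:
    """Recompute one subset's missingReplicas from observed Pods plus in-flight records."""
    if max_replicas is None:
        return -1  # no maxReplicas configured: unlimited
    active = set(informer_pods)
    # Injected by the webhook but not yet visible in the informer: still occupies a slot.
    for name, ts in creating_pods.items():
        if name not in active and now - ts < GRACE_SECONDS:
            active.add(name)
    # Delete/eviction recorded by the webhook but Pod still visible: slot is being freed.
    for name, ts in deleting_pods.items():
        if name in active and now - ts < GRACE_SECONDS:
            active.discard(name)
    # Assumption: clamp at 0 so that -1 keeps its "unlimited" meaning.
    return max(max_replicas - len(active), 0)


now = 1000.0
print(reconcile_missing_replicas(
    max_replicas=5,
    informer_pods=["p1", "p2", "p3"],
    creating_pods={"p4": now - 2, "p9": now - 300},  # p9 is a stale record
    deleting_pods={"p1": now - 1},
    now=now,
))  # 2, because the subset currently holds p2, p3 and p4
```

Expiring old entries is what keeps a failed create (or a delete blocked by a PDB) from distorting the count forever: once the grace period passes, only what the informer actually sees matters.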

4. Adaptive scheduling capability

WorkloadSpread supports configuring a scheduleStrategy. The default type is Fixed, which means Pods are scheduled to subsets strictly according to subset order and each subset's maxReplicas limit.

However, in real scenarios, a subset's partition or topology often does not have enough resources to hold maxReplicas Pods, and users need Pods to scale out into whichever partition actually has resources available. This is what the Adaptive scheduling strategy is for.

The Adaptive capability provided by WorkloadSpread is logically divided into two parts:

- SimulationSchedule: in the Kruise webhook, a scheduling ledger is assembled from the node/Pod data in the informer to simulate Pod scheduling, i.e., simple filtering by nodeSelector/affinity, tolerations, and basic resources. (Not applicable to virtual-kubelet nodes.)

- Reschedule: after a Pod is assigned to a subset, if it stays unschedulable for longer than rescheduleCriticalSeconds, the subset is temporarily marked as unschedulable and the Pod is deleted to trigger recreation. By default, the unschedulable mark is kept for 5 minutes, so Pods created within those 5 minutes skip this subset.
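Both Adaptive mechanisms can be sketched together in Python. The simulated scheduling here is deliberately reduced to label matching plus a CPU check (the real webhook also considers affinity, tolerations, and other resources), and `Node`, `Subset`, `choose_subset`, and `maybe_reschedule` are hypothetical names; the 30-second and 5-minute values come from the text above.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

RESCHEDULE_CRITICAL_SECONDS = 30    # value used in scenario 2
UNSCHEDULABLE_TTL_SECONDS = 5 * 60  # default retention described above


@dataclass
class Node:
    labels: Dict[str, str]
    free_cpu_millis: int


@dataclass
class Subset:
    name: str
    node_selector: Dict[str, str]
    unschedulable_until: float = 0.0


def fits(subset: Subset, nodes: List[Node], cpu_request: int) -> bool:
    """Simulated scheduling reduced to label matching plus a CPU check."""
    return any(
        all(node.labels.get(k) == v for k, v in subset.node_selector.items())
        and node.free_cpu_millis >= cpu_request
        for node in nodes
    )


def choose_subset(subsets: List[Subset], nodes: List[Node],
                  cpu_request: int, now: float) -> Optional[str]:
    """Walk subsets in priority order, skipping those currently marked unschedulable."""
    for subset in subsets:
        if now < subset.unschedulable_until:
            continue
        if fits(subset, nodes, cpu_request):
            return subset.name
    return None


def maybe_reschedule(subset: Subset, pending_since: float, now: float) -> bool:
    """True if a Pod pending in this subset should be deleted and recreated."""
    if now - pending_since > RESCHEDULE_CRITICAL_SECONDS:
        subset.unschedulable_until = now + UNSCHEDULABLE_TTL_SECONDS
        return True
    return False


nodes = [Node({"app.deploy/zone": "normal"}, 200),
         Node({"app.deploy/zone": "elastic"}, 4000)]
subsets = [Subset("subset-normal", {"app.deploy/zone": "normal"}),
           Subset("subset-elastic", {"app.deploy/zone": "elastic"})]
print(choose_subset(subsets, nodes, cpu_request=100, now=0))    # subset-normal
print(choose_subset(subsets, nodes, cpu_request=500, now=0))    # subset-elastic
print(maybe_reschedule(subsets[0], pending_since=0, now=45))    # True: pending 45s > 30s
print(choose_subset(subsets, nodes, cpu_request=100, now=60))   # subset-elastic
print(choose_subset(subsets, nodes, cpu_request=100, now=400))  # subset-normal (mark expired)
```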

Summary

WorkloadSpread combines existing Kubernetes features to give workloads elastic and multi-domain deployment capabilities in a bypass form. We hope WorkloadSpread reduces the complexity of workload deployment for users and that its elastic scaling helps them reduce costs effectively.

Alibaba Cloud is currently rolling it out internally, and adjustments made during the rollout will be fed back to the community promptly. WorkloadSpread also has new capabilities planned, such as taking over a workload's existing Pods, supporting constraints on batches of workloads, and even matching Pods across workloads via labels. Some of these capabilities need to take the actual requirements of community users into account. We hope you will participate in the Kruise community, raise issues and PRs, help solve more cloud-native deployment problems, and build a better community.

- GitHub: https://github.com/openkruise/kruise

- Official website: https://openkruise.io/

- Slack: Channel in Kubernetes Slack

- DingTalk group:

Related links:

Link 1: WorkloadSpread official documentation: https://openkruise.io/zh-cn/docs/workloadspread.html

Link 2: Pod Topology Spread Constraints: https://kubernetes.io/docs/concepts/workloads/pods/pod-topology-spread-constraints/

Link 3: UnitedDeployment: https://openkruise.io/zh-cn/docs/uniteddeployment.html

