In our previous work, we built a technology platform based on microservices, containerization, and service orchestration. To improve the R&D efficiency of the development team, we also provided a CI/CD platform that quickly deploys code to an OpenShift (enterprise Kubernetes) cluster.

The first step of deployment is containerizing the application. The deliverables of continuous integration have changed from jar packages and webpack bundles to container images. Containerization packages the software code together with all the components it needs (libraries, frameworks, runtime environments), so that it runs consistently on any infrastructure, in any environment, "isolated" from other applications.

Our code has to go from source, through compilation, to a final runnable image and even to deployment, all within the CI/CD pipeline. Initially, we added three files to every code repository, injecting them into new projects through a project generator (similar to Spring Initializr):

- Jenkinsfile.groovy: defines the Jenkins pipeline; there are several versions for different languages

- Manifest YAML: defines the Kubernetes resources, that is, the description of the workload and how it runs

- Dockerfile: used to build the container image

These three files also evolve constantly. At first, when there were only a dozen or so projects, our platform team could still go into each repository to maintain and upgrade them. As the number of projects exploded, maintenance costs kept rising. We iterated on the CI/CD platform, moved "Jenkinsfile.groovy" and the "manifest YAML" out of the projects, and left only the Dockerfile, which changes less often, in the repositories.

As the platform evolves, we need to decouple the Dockerfile, the last "holdout", from the code as well, so that it can be upgraded centrally when necessary. That led us to research buildpacks, and to this article.

What is a Dockerfile

Docker builds an image automatically by reading the instructions in a Dockerfile, a text file containing the instructions Docker executes to build the image. Take the Dockerfile of the Java project we previously used for testing Tekton as an example:

```dockerfile
FROM openjdk:8-jdk-alpine
RUN mkdir /app
WORKDIR /app
COPY target/*.jar /app/app.jar
ENTRYPOINT ["sh", "-c", "java -Xmx128m -Xms64m -jar app.jar"]
```

Image layering

You may have heard that a Docker image consists of multiple layers. Only certain instructions, namely RUN, COPY, and ADD, create a new layer. During an image build, layers that have not changed are taken from the cache instead of being rebuilt.
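To make the caching rule concrete, here is a minimal bash sketch of a simplified cache model, an assumption for illustration rather than Docker's actual implementation: each build step's key is a hash of the previous step's key plus the instruction text, so as soon as one instruction changes, every later step misses the cache too. Real Docker also compares file checksums for COPY and ADD, which this sketch ignores. The functions `layer_keys` and `count_reused` are hypothetical, and GNU coreutils' `sha256sum` is assumed to be installed.

```shell
#!/usr/bin/env bash
# Simplified model of Docker's build cache (illustration only, not Docker's
# real algorithm): a step's key = hash(parent step's key + instruction text).

# layer_keys INSTRUCTION... : print one short cache key per build step.
layer_keys() {
  local parent="scratch" instr key
  for instr in "$@"; do
    key=$(printf '%s\n%s' "$parent" "$instr" | sha256sum | cut -c1-12)
    echo "$key"
    parent="$key"   # every later step depends on this key
  done
}

# count_reused OLD_KEYS NEW_KEYS : print how many leading steps have the same
# key, i.e. how many steps a rebuild could take from the cache in this model.
count_reused() {
  local -a old=($1) new=($2)
  local i=0
  while (( i < ${#old[@]} && i < ${#new[@]} )) && [[ ${old[i]} == "${new[i]}" ]]; do
    i=$((i + 1))
  done
  echo "$i"
}

# Usage: edit the second instruction of the example Dockerfile.
v1=$(layer_keys "FROM openjdk:8-jdk-alpine" "RUN mkdir /app" \
  "WORKDIR /app" "COPY target/*.jar /app/app.jar")
v2=$(layer_keys "FROM openjdk:8-jdk-alpine" "RUN mkdir -p /app" \
  "WORKDIR /app" "COPY target/*.jar /app/app.jar")
echo "steps reused from cache: $(count_reused "$v1" "$v2")"   # prints "steps reused from cache: 1"
```

Because each key chains through its parent, changing `RUN mkdir /app` leaves only the `FROM` step reusable. This is why instructions that change often are usually placed near the end of a Dockerfile.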

Buildpacks, discussed below, also rely on image layering and caching to speed up image builds.

What is a buildpack

A buildpack is a program that converts source code into a container image that can run in any cloud environment. A buildpack usually encapsulates the toolchain of a single language ecosystem, such as Java, Ruby, Go, Node.js, or Python.

What is a builder?

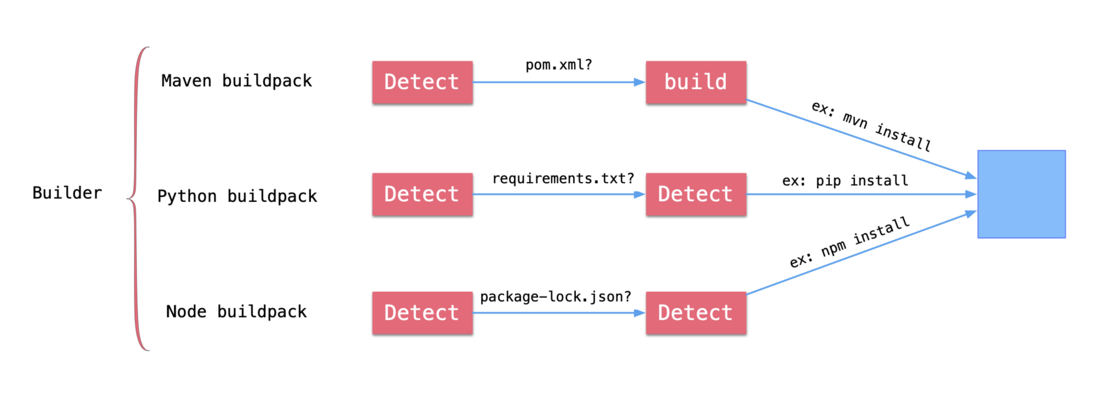

Combining several buildpacks in a defined order gives a builder. Besides the buildpacks, a builder also contains the lifecycle and the stack images.

The stack consists of two images: the build image, in which the buildpacks run, and the run image, which serves as the base image of the application image. As the figure above shows, together these form the runtime environment of the builder.
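The order matters during detection: in a builder, buildpacks are arranged into groups (the `[[order]]` and `[[order.group]]` tables in builder.toml), and the lifecycle tries the groups one by one, using the first group whose buildpacks all pass detection. The bash sketch below models only that selection rule under simplifying assumptions: detection results are given up front, and optional buildpacks and build-plan checks are left out. `passes`, `select_group`, the space-separated input format, and the `examples/node` buildpack are all hypothetical.

```shell
#!/usr/bin/env bash
# Simplified model of group selection during detection (illustration only):
# the first group whose buildpacks ALL passed detection wins.

# passes ID PASSING_ID... : succeed if ID is among the buildpacks that passed.
passes() {
  local id=$1 p
  shift
  for p in "$@"; do
    [[ $p == "$id" ]] && return 0
  done
  return 1
}

# select_group PASSING GROUP... : PASSING and each GROUP are space-separated
# buildpack ids. Prints the first fully passing group, or fails if none.
select_group() {
  local -a passing=($1)
  shift
  local group bp ok
  for group in "$@"; do
    ok=1
    for bp in $group; do
      passes "$bp" "${passing[@]}" || { ok=0; break; }
    done
    if (( ok )); then
      echo "$group"
      return 0
    fi
  done
  return 1   # no group passed, so detection fails for the whole build
}

# Usage: only the Maven buildpack passed detection for a Java project.
select_group "examples/maven" "examples/node" "examples/maven"   # prints "examples/maven"
```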

How buildpacks work

Each buildpack run consists of two phases:

1. Detection phase

The buildpack decides whether it applies by checking for specific files or data in the source code. If it applies, the build moves on to the build phase; otherwise, the buildpack exits. For example:

- The Java Maven buildpack checks whether there is a `pom.xml`.

- The Python buildpack checks whether the source code contains a `requirements.txt` or `setup.py` file.

- The Node buildpack looks for a `package-lock.json` file.
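The three checks above can be sketched as one bash function. The name `detect` is hypothetical; it borrows the exit-code convention of the bin/detect script later in this article, where exiting normally means the buildpack applies and exiting with 100 means it does not.

```shell
#!/usr/bin/env bash
# Hypothetical detect checks for the three example buildpacks.
# Returns 0 if the buildpack applies to SRC_DIR, 100 if it does not,
# and 1 for an unknown buildpack name.

# detect BUILDPACK SRC_DIR
detect() {
  local buildpack=$1 src=$2
  case $buildpack in
    maven)  [[ -f $src/pom.xml ]] ;;
    python) [[ -f $src/requirements.txt || -f $src/setup.py ]] ;;
    node)   [[ -f $src/package-lock.json ]] ;;
    *)      echo "unknown buildpack: $buildpack" >&2; return 1 ;;
  esac || return 100
}

# Usage: run every check against a project directory (default: current dir).
for bp in maven python node; do
  if detect "$bp" "${1:-.}"; then
    echo "$bp: pass"
  else
    echo "$bp: fail (exit $?)"
  fi
done
```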

2. Build phase

The build phase performs the following operations:

- Set up the build environment and runtime environment

- Download dependencies and compile the source code (if needed)

- Set the correct entrypoint and startup script.

For example:

- The Java Maven buildpack runs `mvn clean install -DskipTests` after finding a `pom.xml`.

- The Python buildpack runs `pip install -r requirements.txt` after finding a `requirements.txt`.

- The Node buildpack runs `npm install` after finding a `package-lock.json`.
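A dry-run sketch of how the build commands listed above follow from the marker files. It only prints the command instead of running it, and skips environment setup and the start command; `build_command` is a hypothetical name, and its 100 return code simply mirrors the detection convention.

```shell
#!/usr/bin/env bash
# Dry-run sketch of the build phase for the three example buildpacks:
# print (do not run) the build command of the first one whose marker file
# exists in SRC_DIR.

# build_command SRC_DIR
build_command() {
  local src=$1
  if [[ -f $src/pom.xml ]]; then
    echo "mvn clean install -DskipTests"
  elif [[ -f $src/requirements.txt ]]; then
    echo "pip install -r requirements.txt"
  elif [[ -f $src/package-lock.json ]]; then
    echo "npm install"
  else
    return 100   # no example buildpack applies to this directory
  fi
}

# Usage: a throwaway project directory that only contains a pom.xml.
demo=$(mktemp -d)
touch "$demo/pom.xml"
build_command "$demo"   # prints "mvn clean install -DskipTests"
rm -rf "$demo"
```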

Getting started with buildpacks

So how do you build an image with buildpacks, without a Dockerfile? From the above, it should be clear that the core of the work lies in writing and using buildpacks.

In fact, many open source buildpacks are available today, including ones open sourced and maintained by major vendors, so unless you need specific customization there is no need to write your own.

Before introducing the open source buildpacks in detail, however, we will create a buildpack ourselves to gain a deeper understanding of how buildpacks work. As the test project, we again use the Java project we used for testing Tekton.

All of the following code has been pushed to GitHub; you can get it at https://github.com/addozhang/buildpacks-sample.

The final structure of the buildpacks-sample directory is as follows:

```
├── builders
│   └── builder.toml
├── buildpacks
│   └── buildpack-maven
│       ├── bin
│       │   ├── build
│       │   └── detect
│       └── buildpack.toml
└── stacks
    ├── build
    │   └── Dockerfile
    ├── build.sh
    └── run
        └── Dockerfile
```

Create buildpack

```shell
pack buildpack new examples/maven \
  --api 0.5 \
  --path buildpack-maven \
  --version 0.0.1 \
  --stacks io.buildpacks.samples.stacks.bionic
```

Take a look at the generated buildpack-maven directory:

```
buildpack-maven
├── bin
│   ├── build
│   └── detect
└── buildpack.toml
```

The placeholder content generated in each file is of no use here, so we need to fill in our own:

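bin/detect:

```shell
#!/usr/bin/env bash

# Detection passes only if the project contains a pom.xml;
# exit code 100 tells the lifecycle that this buildpack does not apply.
if [[ ! -f pom.xml ]]; then
  exit 100
fi

# $2 is the path of the build plan file (buildpack API 0.5).
plan_path=$2

# Declare in the build plan that this buildpack provides and requires "jdk".
cat >> "${plan_path}" <<EOL
[[provides]]
name = "jdk"

[[requires]]
name = "jdk"
EOL
```

bin/build: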

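```shell
#!/usr/bin/env bash
set -euo pipefail

# Arguments passed by the lifecycle (buildpack API 0.5):
# $1 = layers directory, $2 = platform directory, $3 = build plan
layers_dir="$1"
env_dir="$2/env"
plan_path="$3"

# Keep the local Maven repository in a cached layer so that
# dependencies are not downloaded again on the next build.
m2_layer_dir="${layers_dir}/maven_m2"
if [[ ! -d ${m2_layer_dir} ]]; then
  mkdir -p "${m2_layer_dir}"
  echo "cache = true" > "${m2_layer_dir}.toml"
fi
ln -s "${m2_layer_dir}" "$HOME/.m2"

echo "---> Running Maven"
mvn clean install -B -DskipTests

# Register the first jar found in target/ as the default "web" process.
target_dir="target"
for jar_file in $(find "$target_dir" -maxdepth 1 -name "*.jar" -type f); do
  cat >> "${layers_dir}/launch.toml" <<EOL
[[processes]]
type = "web"
command = "java -jar ${jar_file}"
EOL
  break;
done
```

buildpack.toml: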

```toml
api = "0.5"

[buildpack]
id = "examples/maven"
version = "0.0.1"

[[stacks]]
id = "com.atbug.buildpacks.example.stacks.maven"
```

Create stack

Building a Maven project requires an environment with Java and Maven, so we use maven:3.5.4-jdk-8-slim as the base image of the build image. The application needs a Java environment at runtime, so we use openjdk:8-jdk-slim as the base image of the run image.

Create two directories, build and run, under stacks:

build/Dockerfile

```dockerfile
FROM maven:3.5.4-jdk-8-slim

ARG cnb_uid=1000
ARG cnb_gid=1000
ARG stack_id

ENV CNB_STACK_ID=${stack_id}
LABEL io.buildpacks.stack.id=${stack_id}
ENV CNB_USER_ID=${cnb_uid}
ENV CNB_GROUP_ID=${cnb_gid}

# Install packages that we want to make available at both build and run time
RUN apt-get update && \
    apt-get install -y xz-utils ca-certificates && \
    rm -rf /var/lib/apt/lists/*

# Create user and group
RUN groupadd cnb --gid ${cnb_gid} && \
    useradd --uid ${cnb_uid} --gid ${cnb_gid} -m -s /bin/bash cnb

USER ${CNB_USER_ID}:${CNB_GROUP_ID}
```

run/Dockerfile

```dockerfile
FROM openjdk:8-jdk-slim

ARG stack_id
ARG cnb_uid=1000
ARG cnb_gid=1000

LABEL io.buildpacks.stack.id="${stack_id}"

USER ${cnb_uid}:${cnb_gid}
```

Then use the following commands to build the two images:

```shell
export STACK_ID=com.atbug.buildpacks.example.stacks.maven
docker build --build-arg stack_id=${STACK_ID} -t addozhang/samples-buildpacks-stack-build:latest ./build
docker build --build-arg stack_id=${STACK_ID} -t addozhang/samples-buildpacks-stack-run:latest ./run
```

Create Builder

With the buildpack and the stack in place, we can create the builder. First, create the builder.toml file with the following content:

```toml
[[buildpacks]]
id = "examples/maven"
version = "0.0.1"
uri = "../buildpacks/buildpack-maven"

[[order]]
[[order.group]]
id = "examples/maven"
version = "0.0.1"

[stack]
id = "com.atbug.buildpacks.example.stacks.maven"
run-image = "addozhang/samples-buildpacks-stack-run:latest"
build-image = "addozhang/samples-buildpacks-stack-build:latest"
```

Then run the following command. Note that we use the --pull-policy if-not-present flag here, so there is no need to push the two stack images to an image registry:

```shell
pack builder create example-builder:latest --config ./builder.toml --pull-policy if-not-present
```

Test

With the builder in place, we can use it to build the image.

The --pull-policy if-not-present flag is added here as well, so that the local builder image is used:

```shell
# The buildpacks-sample and tekton-test directories are siblings; run this command inside buildpacks-sample
pack build addozhang/tekton-test --builder example-builder:latest --pull-policy if-not-present --path ../tekton-test
```

If you see output similar to the following, the image was built successfully. The first build may take a long time because the Maven dependencies need to be downloaded; subsequent builds are much faster, which you can verify by building twice:

```
...
===> EXPORTING
[exporter] Adding 1/1 app layer(s)
[exporter] Reusing layer 'launcher'
[exporter] Reusing layer 'config'
[exporter] Reusing layer 'process-types'
[exporter] Adding label 'io.buildpacks.lifecycle.metadata'
[exporter] Adding label 'io.buildpacks.build.metadata'
[exporter] Adding label 'io.buildpacks.project.metadata'
[exporter] Setting default process type 'web'
[exporter] Saving addozhang/tekton-test...
[exporter] *** Images (0d5ac1158bc0):
[exporter]       addozhang/tekton-test
[exporter] Adding cache layer 'examples/maven:maven_m2'
Successfully built image addozhang/tekton-test
```

Start the container and you will see that the Spring Boot application starts normally:

```shell
docker run --rm addozhang/tekton-test:latest
```

```
  .   ____          _            __ _ _
 /\\ / ___'_ __ _ _(_)_ __  __ _ \ \ \ \
( ( )\___ | '_ | '_| | '_ \/ _` | \ \ \ \
 \\/  ___)| |_)| | | | | || (_| |  ) ) ) )
  '  |____| .__|_| |_|_| |_\__, | / / / /
 =========|_|==============|___/=/_/_/_/
 :: Spring Boot ::        (v2.2.3.RELEASE)
...
```

Summary

In fact, many open source buildpacks are available today, so unless you need specific customization there is no need to write your own. Examples include the buildpacks open sourced and maintained by Heroku and by the Paketo project.

These buildpack libraries are fairly comprehensive, though their implementations differ. For example, Heroku's buildpacks use shell scripts in the execution phase, while Paketo's use Go. Paketo is more extensible, is supported by the Cloud Foundry Foundation, and has a full-time core development team sponsored by VMware. Its small, modular buildpacks can be combined to cover different scenarios.

Of course, as said before, writing a buildpack yourself is the easiest way to understand how buildpacks work.

This article is also published on the WeChat public account "Cloud Native Guide North".
