The past year has seen events unlike any in a century, and it has also seen a surge in all kinds of video applications. The resulting explosive growth in video data has intensified the demand for hard-core underlying technologies such as high-efficiency codecs.

Soon after the new video codec standard VVC was finalized, Alibaba's video team began devoting its full effort to developing a VVC software codec.

At the LiveVideoStackCon 2021 Beijing summit, Ye Yan, Alibaba researcher and head of the video standards and implementation team at Alibaba Cloud Intelligence, shared the current state of the video industry, the technical evolution and business outlook of Ali266, Alibaba's self-developed VVC codec, and the opportunities and challenges the video industry will face in the future.

Text | Ye Yan

Edited | LiveVideoStack

Hello everyone, I am Ye Yan, head of the video standards and implementation team at Alibaba Cloud Intelligence. My topic today is the evolution of the codec: Ali266 and next-generation video technology.

The talk has four parts: first, the current state of the video industry; then the technical evolution of Ali266, our self-developed VVC codec, and its business outlook; and finally the opportunities and challenges the video industry faces in the future.

1. The status quo of the video industry

It is no exaggeration to say that the COVID-19 pandemic of the past year is a major event of a kind humanity has not seen in a century. It interrupted the normal pace of life and the habit of face-to-face communication, changed many rules of the game, and triggered a surge in advanced video technology products.

The situation differs around the world. China has controlled the epidemic very well, so daily life there is basically normal. In countries and regions hit harder, however, people's lives and work have changed radically.

These changes take several forms. First, work interaction has moved from offline to online, and cloud conferencing is used extensively. Take DingTalk video conferencing: as of today, cumulative daily usage exceeds 100 million minutes. In addition, in the countries and regions more severely affected by the pandemic, more than half of employees work from home and collaborate remotely, which is very different from the face-to-face communication they were used to.

Entertainment, like work, has shifted from offline to online. Take the United States as an example: cinemas there were closed for more than a year, and although they reopened this summer, few people went to see films. Entertainment now relies mainly on home theater, and celebrities have also switched from offline to online performances, interacting with their fans online.

From the perspective of the video industry, the past year saw a very important milestone: the finalization of H.266/VVC, the new generation of international video standard. Standardization of VVC officially began in April 2018, and after a little more than two years it reached Final Draft International Standard status in the summer of 2020, which is the final version of the first edition.

Throughout those two-plus years, and especially in the final half year, VVC was affected by the pandemic. Nearly 300 video experts from around the world held their technical discussions in online meetings and completed the first version of the new H.266/VVC standard on schedule.

Like each previous generation of international video standards, VVC halves the bandwidth cost compared with the previous-generation standard, HEVC.

The above figure shows the subjective performance test results of VVC. What is shown here is the bandwidth savings that VVC can achieve compared to the HEVC reference platform under the premise of the same subjective quality.

The video content is divided into five categories. The first two columns are UHD and HD, that is, ultra-high-definition and high-definition video. Here VTM, the VVC reference software, achieves bandwidth savings of 43% to 49% compared with HM, the HEVC reference software.

For the two newer video formats, HDR and 360 panoramic video, VVC achieves even higher bandwidth savings, reaching 51% to 53%.

The last column is a test for low-latency applications, using the temporal prediction structure found in video conferencing. Because that prediction structure is more constrained, the bandwidth savings VVC achieves are slightly smaller, but they still reach 37%, which is quite impressive.

Space is limited, so only highly summarized figures are shown here. Readers interested in the details can consult the subjective test reports from three JVET meetings (documents T2020, V2020 and W2020), which contain a great deal of detail for reference.

Against the backdrop of the video boom and the finalization of the latest standard, VVC, Alibaba began developing Ali266. Let us first look at how the Ali266 technology has evolved.

2. History of Ali266 Technology Evolution

What is Ali266? What do we want it to do?

Ali266 is our codec implementation of the latest standard, VVC. Our first goal is high compression performance, so that we capture the bandwidth savings VVC brings. The second is real-time encoding speed for HD video: VVC has more coding tools than HEVC, so maintaining real-time encoding speed matters a great deal for real commercial use. The third is for Ali266 to provide complete, self-contained encoding and decoding capability, which makes it easier to open up the end-to-end ecosystem.

With Ali266 we hope to deliver on these three very challenging technical points, achieve technical leadership, turn it into product competitiveness, and help expand our business.

The figure above shows many of the VVC coding tools. I have grouped them by the main functional modules of the traditional video codec framework: block partitioning, intra prediction, inter prediction, residual coding, transform and quantization, loop filtering, and other coding tools.

The upper blue circles are HEVC coding tools and the lower purple circles are VVC coding tools. For each functional module, HEVC has only three or four coding tools, while VVC supports a much richer set, which is the main reason it achieves such strong compression and the resulting bandwidth savings.

Every coding tool has a certain complexity, so each additional tool increases both complexity and performance.

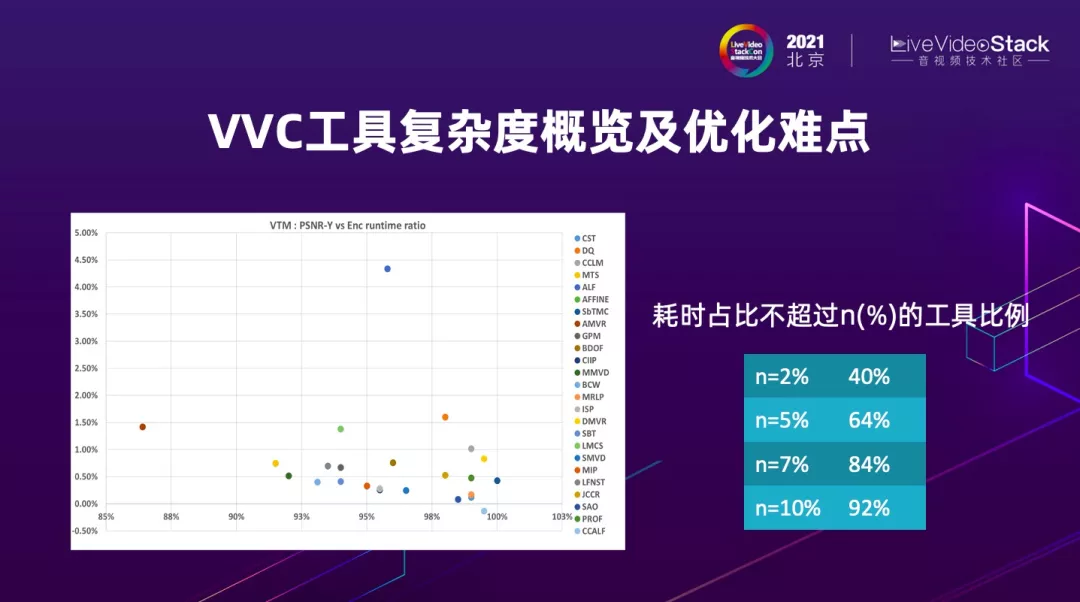

The figure above is a comprehensive overview of the complexity and coding performance of each coding tool tracked by the JVET Standards Committee during the development of the VVC standard.

In this figure, the horizontal axis is time and the vertical axis is the coding performance gain. Points of different colors correspond to different VVC coding tools. The further right a tool sits on the horizontal axis, the lower its complexity; the higher it sits on the vertical axis, the greater its performance gain.

We would therefore like coding tools to fall in the upper right corner, but the figure shows that this corner is basically empty for VVC. Most coding tools bring a gain of 1% or 1.5%, and even those small gains come with some increase in complexity.

This poses a challenge for encoder optimization: you cannot just pick a few major coding tools to optimize. Instead, you have to choose, quickly and accurately, which tools from a rich set should be used for the current input video. This is the main difficulty in optimizing an H.266 encoder.

The table on the right of the figure profiles the share of encoding time taken by different coding tools in our software encoder. In line with the figure on the left, it confirms that 40% of the coding tools take very little time, only about 2%, yet every one of them contributes performance, so we have to decide how to choose among them. In addition, 92% of the coding tools take less than 10% of the time, which is a challenge for engineering and algorithm optimization as a whole.

The figure above shows a challenge faced not only in H.266 encoder optimization but by any real-time encoder.

Video encoding is a tug-of-war between higher compression performance and lower encoding speed, and our job is to overcome it.

Comparing the VVC reference platform VTM with the HEVC reference platform HM, the bandwidth is halved, but VTM encodes at only one-eighth the speed of HM, which is unacceptable for real-time encoding. So I will mainly talk about the optimizations in Ali266.

I will introduce the optimization work along two dimensions. The first is encoding quality (encoding performance).

Encoding quality (encoding performance) optimization

We have done a great deal of work on coding quality and performance. With limited space I will give only one example: the joint optimization of pre-analysis, pre-processing, and a core coding tool.

For pre-analysis I chose scene change detection; anyone who works on encoders knows that every commercial encoder must detect scene changes accurately. For pre-processing I chose MCTF, which I will introduce briefly below. For the core coding tool I chose LMCS, a coding tool new in VVC.

This is an introduction to the MCTF pre-processing process.

MCTF stands for motion compensated temporal filtering. It filters the input video signal in the temporal domain by means of hierarchical motion search and motion compensation. A bilateral filter is applied along the temporal dimension, which denoises the video effectively, and denoising in the temporal domain also has a denoising effect in the spatial domain.

MCTF can effectively improve coding efficiency. Because of this, both VTM and VVEnc (VVC's open source encoder) platforms support the MCTF pre-processing process.
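To make the idea of bilateral filtering along the temporal dimension concrete, here is a minimal sketch. It is not the filter used in VTM, VVEnc or Ali266: it assumes the reference frames have already been motion compensated, treats a frame as a flat list of luma samples, and uses a hypothetical `sigma` parameter to control filter strength.

```python
import math

def temporal_bilateral_filter(current, compensated_refs, sigma=10.0):
    """Blend each sample with the co-located samples of aligned reference frames.

    current: list of luma samples of the frame being filtered.
    compensated_refs: list of frames (same length as `current`) that are assumed
        to be motion compensated already; motion search is out of scope here.
    sigma: hypothetical strength parameter; larger values filter more strongly.
    """
    filtered = []
    for i, cur in enumerate(current):
        weighted_sum = float(cur)   # the current sample always has weight 1
        weight_total = 1.0
        for ref in compensated_refs:
            diff = ref[i] - cur
            # Bilateral idea: a reference sample that differs a lot from the
            # current one gets a tiny weight, so real changes are not blurred.
            weight = math.exp(-(diff * diff) / (2.0 * sigma * sigma))
            weighted_sum += weight * ref[i]
            weight_total += weight
        filtered.append(weighted_sum / weight_total)
    return filtered
```

With references that differ only by noise, the output is pulled toward their average; a reference that differs strongly, for example across an occlusion, contributes almost nothing.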

So let's take a look at how scene switching and MCTF are combined.

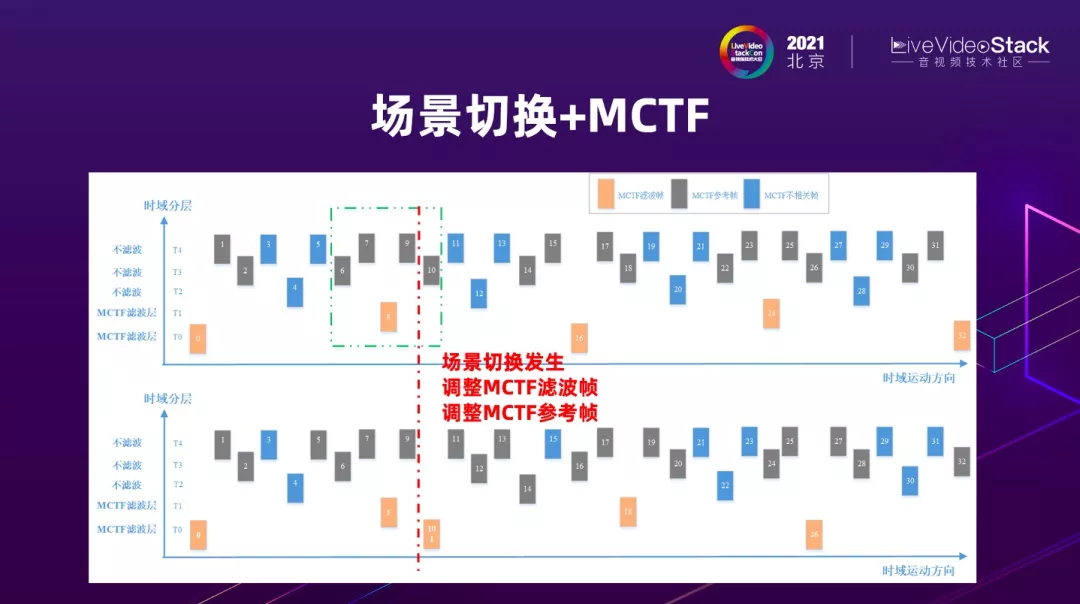

The figure above shows that the encoder applies MCTF to the light yellow frames, which are in low temporal layers. Because MCTF uses temporal motion search and compensation, each light yellow frame has corresponding light gray frames that serve as its MCTF reference frames. The light blue frames are not involved in MCTF. Because of these temporal reference relationships, MCTF has to be adjusted when a scene change occurs.

Under normal circumstances, the eighth frame is an MCTF frame, and the four frames before and after it are its MCTF reference frames. Now suppose a scene change occurs at the tenth frame. The tenth frame was originally an MCTF reference frame, but because of the scene change it becomes a new I frame and its temporal layer drops accordingly. The MCTF filtered frames and reference frames, that is, the light yellow and light gray frames, must then be adjusted. Comparing the top and bottom rows of the figure, the MCTF reference frames of the eighth frame become the three frames before it and the one frame after it, while the tenth frame becomes an MCTF filtered frame whose reference frames are the four frames that follow it.
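One way to read this adjustment is that a filtered frame keeps the same number of references but may only take them from its own scene. The sketch below works under that assumption: four references in total, chosen as the nearest frames that do not cross a scene boundary. The function name, the frame numbering and the tie-breaking rule are illustrative and are not taken from Ali266.

```python
def mctf_reference_frames(frame, scene_first, scene_last, num_refs=4):
    """Pick the nearest frames inside the same scene as MCTF references.

    frame: index of the frame to be filtered.
    scene_first, scene_last: first and last frame index of the scene that
        contains `frame` (assumed to come from scene change detection).
    num_refs: total number of references, an assumption of this sketch.
    """
    candidates = [f for f in range(scene_first, scene_last + 1) if f != frame]
    # Nearest frames first; on equal distance prefer the earlier frame.
    candidates.sort(key=lambda f: (abs(f - frame), f))
    return sorted(candidates[:num_refs])

# Scene change at frame 10: frame 8 belongs to the old scene (frames 0..9),
# frame 10 starts the new scene (assumed here to run to frame 25).
print(mctf_reference_frames(8, 0, 9))     # three frames before, one after
print(mctf_reference_frames(10, 10, 25))  # the four frames that follow
```

Under these assumptions the selection reproduces the behavior described for the figure: frame 8 uses frames 5, 6, 7 and 9, and frame 10 uses frames 11 to 14.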

Take a look at how scene switching and LMCS are combined.

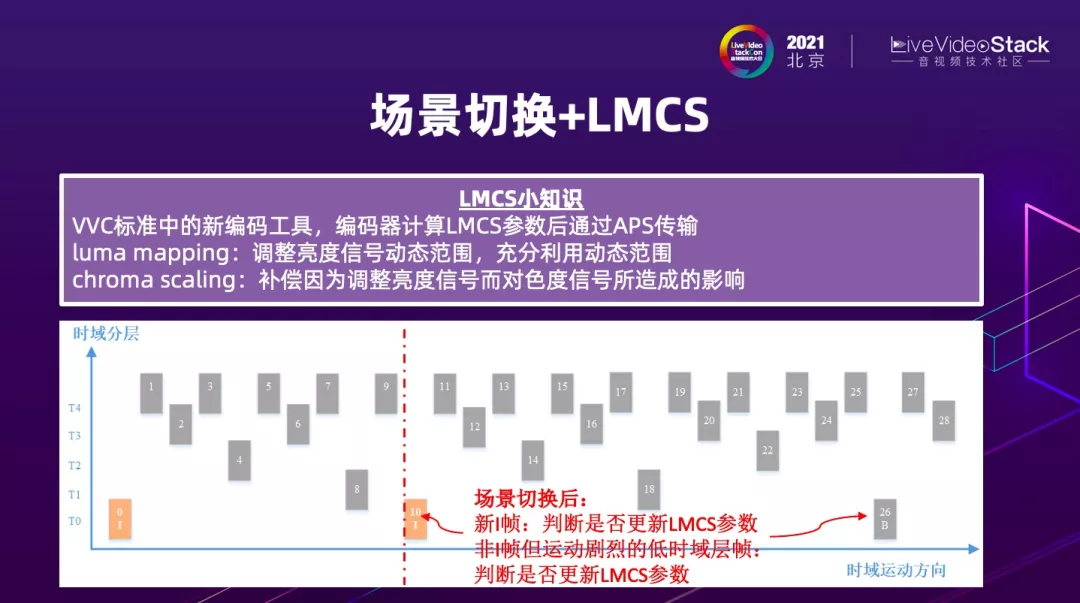

LMCS is a new coding tool in VVC. It requires the encoder to compute the corresponding parameters and transmit them in an APS. LM stands for luma mapping, which adjusts the luma signal so that it makes fuller use of the available dynamic range, for example 0 to 255 for 8-bit video and 0 to 1023 for 10-bit video.

Because the LM process adjusts the luma signal, a CS process, that is, chroma scaling, is needed to adjust the chroma signal in the same block and compensate for the effect of the luma adjustment on chroma.
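The luma mapping step can be pictured as a piecewise-linear lookup table that gives some input ranges more output codewords than others. The sketch below is a simplified illustration, not the normative LMCS process: it assumes equal-width input bins (sixteen in the usage example), a codeword budget that exactly fills the range, and it leaves out chroma scaling and the inverse mapping. The function name and the example codeword allocation are hypothetical.

```python
def build_forward_luma_lut(codewords_per_bin, bit_depth=10):
    """Build a forward mapping table from a piecewise-linear model.

    codewords_per_bin: number of output codewords assigned to each equal-width
        input bin. In this sketch the values must sum to 2**bit_depth.
    """
    num_values = 1 << bit_depth
    num_bins = len(codewords_per_bin)
    if num_values % num_bins != 0:
        raise ValueError("bins must divide the sample range evenly")
    if sum(codewords_per_bin) != num_values:
        raise ValueError("codewords must add up to the full sample range")
    bin_width = num_values // num_bins
    lut = []
    out_start = 0
    for codewords in codewords_per_bin:
        for offset in range(bin_width):
            # Linear interpolation inside the bin, using integer arithmetic.
            lut.append(out_start + (codewords * offset) // bin_width)
        out_start += codewords
    return lut

# Hypothetical allocation: give the mid-range three times as many codewords
# as the darkest and brightest bins, so mid-tones are represented more finely.
lut = build_forward_luma_lut([32] * 4 + [96] * 8 + [32] * 4)
print(lut[256], lut[768])
```

An allocation of 64 codewords per bin yields the identity mapping for 10-bit video, which is a convenient sanity check.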

How to combine this tool and scene switching?

In the example just given, a scene change is detected at the tenth frame, which becomes a new I frame. The dynamic range of the new scene may be completely different, so on the new I frame we decide whether the LMCS parameters need to be updated. Once the GOP prediction structure changes accordingly, other frames become new low temporal layer frames; with GOP16, for example, the 26th frame becomes one. For such a frame we check how intense the motion is, and if it is intense, the LMCS parameters are updated on that frame as well.

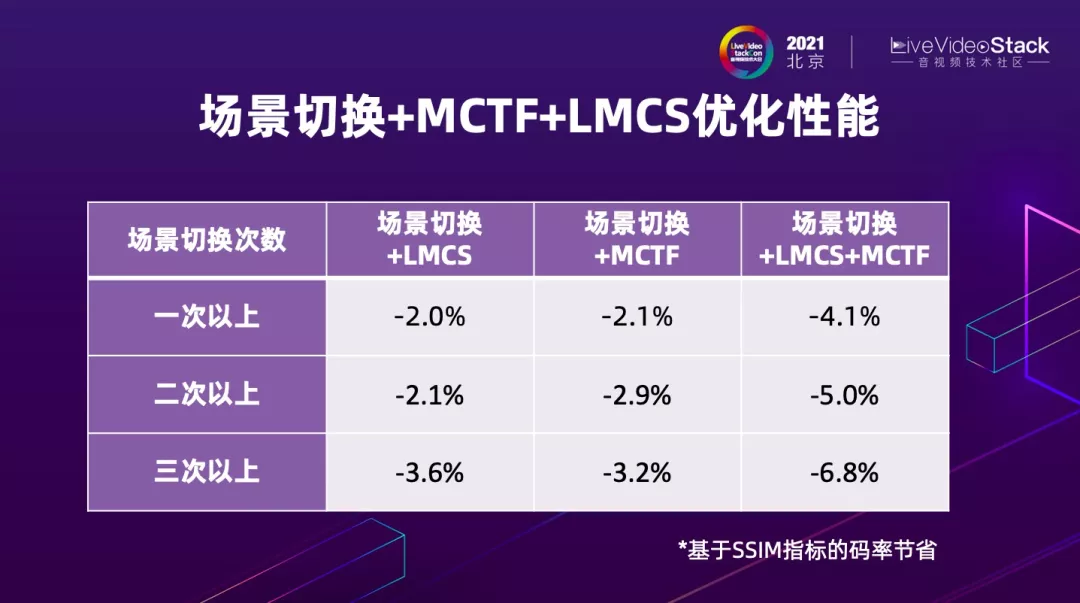

What performance does this joint optimization of scene change detection, MCTF pre-processing and LMCS achieve?

If the video is fairly long and contains more than one scene change, joint optimization with LMCS alone saves 2% of bandwidth and joint optimization with MCTF alone saves 2.1%; optimizing all three together adds the gains up perfectly, for a 4.1% performance gain.

If scene changes are more frequent, with more than two in a video, the table shows a further improvement: from 2.1% and 2.9% for the individual optimizations to a 5% gain when all three are optimized together.

If scene changes are more frequent still, the benefit of joint optimization is larger: 3.6% with LMCS, 3.2% with MCTF, and 6.8% when all three are optimized together.
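The claim that the gains add up can be checked directly from the quoted figures. The short sketch below only restates the numbers given in the text; the scenario labels and the rounding tolerance are illustrative.

```python
# (scenario, gains of the two individual optimizations in %, joint gain in %)
reported_gains = [
    ("more than one scene change", (2.0, 2.1), 4.1),
    ("more than two scene changes", (2.1, 2.9), 5.0),
    ("even more frequent scene changes", (3.6, 3.2), 6.8),
]

def is_additive(single_gains, joint_gain, tolerance=0.05):
    """True if the joint gain equals the sum of the single gains, within rounding."""
    return abs(sum(single_gains) - joint_gain) <= tolerance

for scenario, singles, joint in reported_gains:
    print(scenario, is_additive(singles, joint))
```

In all three scenarios the joint gain matches the sum of the two individual gains, which is what superimposing perfectly means here.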

Everyone who works on encoders knows that a 6.8% gain is quite considerable, and we get it through the joint optimization of pre-analysis, pre-processing and a core coding tool.

That covers the optimization of encoding quality and performance. Next, let us look at the second very important dimension: encoding speed optimization.

Encoding speed optimization

First look at an example:

A very representative new tool for VVC is a flexible block partition structure. The above figure compares the division of VVC and HEVC for the same scene. VVC is on the left and HEVC is on the right. In the same scene, VVC can better describe the contours of objects through more flexible block division.

Let's take a look at the enlarged picture. In the case of HEVC, since only quadtree division is supported, each block is square.

VVC allows more flexible horizontal and vertical splits using binary trees (BT) or ternary trees (TT), which together are called the MTT (multi-type tree). Comparing the enlarged images on the left and right, VVC describes the fingers more accurately thanks to its rectangular partitions.

VVC gets better descriptions of object contours from its additional partition types, but the cost to the encoder is that it has to try more options. Speeding up MTT partition decisions is therefore very important for encoding speed.

Here we use a gradient-based approach to MTT acceleration. The observation is that if the texture of a block changes drastically in the horizontal direction, the block is less likely to be split in the horizontal direction, and the same holds for the vertical direction.

Based on this observation, before making the partition decision for each block we compute gradients in four directions: horizontal, vertical, and the two diagonals.

Taking the horizontal direction as an example, if the horizontal gradient is larger than the gradients in the other three directions and exceeds a certain threshold, the texture of the current block changes drastically in the horizontal direction, so the encoder skips the horizontal BT and TT decisions, which shortens the encoding time.
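The decision rule described above can be sketched as follows. This is an illustration under stated assumptions, not the Ali266 implementation: the gradient is taken as the mean absolute difference between neighboring samples per unit distance (so diagonal differences are divided by the square root of two), and the threshold value and split names are hypothetical.

```python
import math

def directional_gradients(block):
    """Mean absolute sample difference per unit distance in four directions."""
    height, width = len(block), len(block[0])
    sums = {"horizontal": 0.0, "vertical": 0.0,
            "diagonal_down": 0.0, "diagonal_up": 0.0}
    counts = dict.fromkeys(sums, 0)
    for y in range(height):
        for x in range(width):
            if x + 1 < width:
                sums["horizontal"] += abs(block[y][x + 1] - block[y][x])
                counts["horizontal"] += 1
            if y + 1 < height:
                sums["vertical"] += abs(block[y + 1][x] - block[y][x])
                counts["vertical"] += 1
            if x + 1 < width and y + 1 < height:
                # Diagonal neighbours are sqrt(2) apart, so normalise by distance.
                sums["diagonal_down"] += abs(block[y + 1][x + 1] - block[y][x]) / math.sqrt(2)
                counts["diagonal_down"] += 1
                sums["diagonal_up"] += abs(block[y][x + 1] - block[y + 1][x]) / math.sqrt(2)
                counts["diagonal_up"] += 1
    return {name: (sums[name] / counts[name] if counts[name] else 0.0)
            for name in sums}

def splits_to_skip(block, threshold):
    """Return the BT/TT split decisions the encoder would skip for this block."""
    gradients = directional_gradients(block)
    skipped = set()
    for direction in ("horizontal", "vertical"):
        others = [v for name, v in gradients.items() if name != direction]
        # Skip only when this direction dominates all others and is strong enough.
        if gradients[direction] > threshold and gradients[direction] > max(others):
            skipped.update({"BT_" + direction, "TT_" + direction})
    return skipped

# A block whose samples ramp from left to right changes only horizontally.
ramp = [[0, 10, 20, 30] for _ in range(4)]
print(sorted(splits_to_skip(ramp, threshold=5.0)))
```

A flat block, or one whose dominant gradient stays below the threshold, skips nothing, so the encoder still evaluates every split type for it.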

This technique speeds up the encoder by 14.8% in terms of absolute frame rate, which is a considerable percentage.

Skipping some partition decisions does cost some compression performance, but the loss is only 0.4%, so weighing speed-up against performance this is a very cost-effective fast algorithm.

We have done a lot of other optimization work, but with limited space I will not go through it item by item. Let me summarize the Ali266 encoder.

Ali266 currently supports two main presets.

The Slow preset is mainly for offline applications and is benchmarked against the x265 veryslow preset. It encodes at the same speed as x265 veryslow while saving 50% of the bit rate, which means bandwidth is cut in half.

Ali266 also supports the Fast preset, which is very important for commercialization. For commercial applications that require strict real-time encoding, it reaches real-time encoding speed at 720p30, leading the industry in VVC encoder speed. Benchmarked against the x265 medium preset for real-time applications, it saves 40% of the bit rate, which is a very large bandwidth saving.

In terms of encoding speed, we have not stopped at 720p30; we are still developing real-time encoding for 2K, 4K and 8K ultra-high-definition video.

In fact, while I was preparing this talk, Ali266 reached 2K, that is, 1080p at 30 frames per second, which has increased our confidence in taking on real-time ultra-high-definition encoding.

Our main goal going forward is to keep Ali266 ahead in VVC performance and to accelerate the commercial deployment of VVC.

Having covered the encoder, let me turn to the decoder, because as mentioned earlier one of the main goals of Ali266 is to provide complete VVC encoding and decoding capability.

From a commercial point of view, the design goals of the decoder are as follows. First, decoding must run in real time, or even faster. Second, the decoder must be very stable and robust. Third, we want a thin decoder, that is, one that is as lightweight as possible.

To reach these design goals we optimized along four dimensions, and one very important dimension is that we started from scratch.

This means we set aside the architecture and data structure designs of all earlier open source projects and reference platforms and designed completely new data structures and a new framework directly from the VVC standard document. During the design we also used familiar acceleration methods, including multi-threading, assembly optimization, and memory and cache efficiency optimization. These four dimensions together improve the performance of the Ali266 decoder.

The figure above lists Ali266 decoding performance from four dimensions.

On speed, we focus on low-end devices, with the idea of making VVC broadly accessible. In tests on low-end devices, Ali266 needs only three threads to decode 720p in real time. Because so few threads are occupied, CPU usage and phone power consumption drop effectively, which is a very favorable indicator for real commercial use.

On stability, we have tested on a wide range of Apple and Android phones, covering the two major mobile operating systems as well as high-end, mid-range and low-end devices.

On robustness, we have hit the Ali266 decoder with tens of thousands of corrupted bitstreams to make sure it has a sound, fast error recovery mechanism whether the error occurs above or below the slice level.

Finally, it is precisely because we started from scratch that we can deliver a satisfying answer on the thin decoder. The Ali266 decoder package is smaller than 1 MB, and decoding 720p HD video takes only 33 MB of memory.

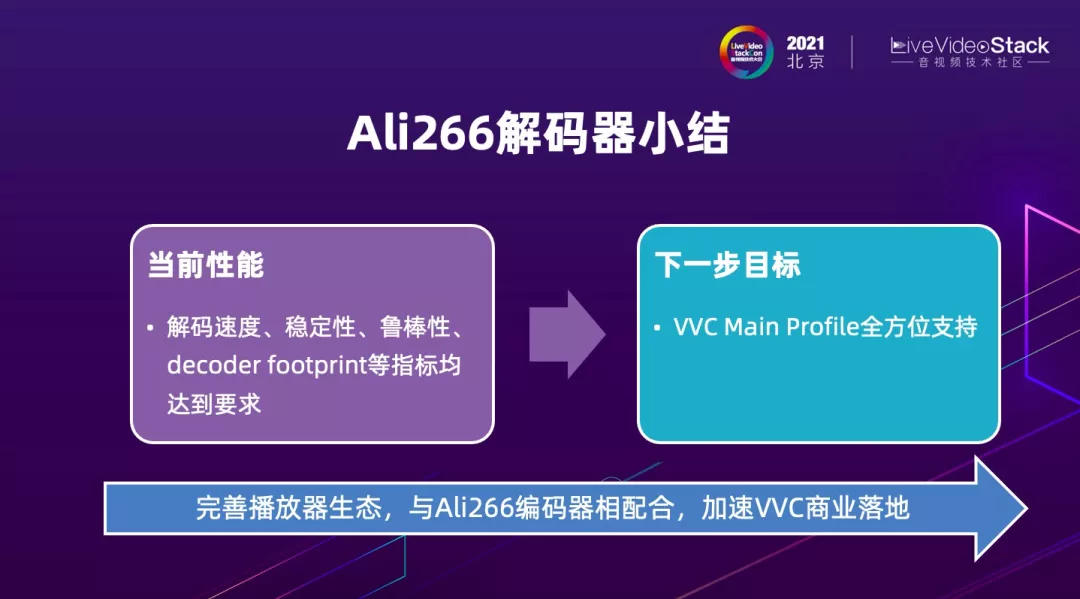

Let me make a summary of the Ali266 decoder.

Judging by current performance, Ali266 meets its design goals and commercial requirements for decoding speed, stability, robustness, decoder footprint and other indicators. As a next step we want to support the VVC Main Profile completely, which mainly means full support for 10-bit decoding.

We will also work hard to improve the player ecosystem and, together with the Ali266 encoder, accelerate the commercial deployment of VVC.

Since commercial deployment has come up several times, let us look at the business outlook for Ali266.

3. Ali266 Business Outlook

First, let us look at the deployment outlook for the VVC standard itself over the next two to three years.

Like HEVC and H.264 before it, VVC is a general-purpose standard, so it covers a wide range of existing video applications, including video on demand, video conferencing, live streaming, and IoT video surveillance.

Many emerging video applications are also on the rise, including panoramic video, AR, VR, and the recently popular metaverse. They too need video coding as a technical foundation, so the VVC standard applies to them as well.

Then let's take a look at the application prospect of Ali266.

We start within Alibaba Group. Four examples are listed here: Youku, DingTalk video conferencing, Alibaba Cloud video cloud, and Taobao.

My personal view on how to roll out Ali266 is that it should move from closed-loop applications to open applications. Why this order?

The reason is that a closed-loop business offers stronger end-to-end control. While the ecosystem for a new standard is still immature, a closed loop lets us connect the whole chain ourselves. Youku and DingTalk video conferencing are good examples of closed-loop businesses.

After polishing Ali266 in closed-loop settings and connecting the entire chain from content to playback, we will be better prepared and more mature when we take on open applications. By the time we push large-scale open applications, VVC will have more comprehensive hardware support on mobile and other terminal devices, and that will be the moment when the compression power of the VVC standard is truly shown at scale.

Having mentioned Youku, I would like to introduce Youku Frame Enjoy, an ultra-high-definition audiovisual experience created jointly by artists and scientists.

It relies on several very important ultra-high-definition technical indicators. It supports high frame rates, from 60 to 120 frames per second. In spatial resolution, 4K to 8K falls within the scope of Frame Enjoy. In dynamic range, Frame Enjoy fully supports HDR (high dynamic range) contrast and wide color gamut. And since films need audio, Youku Frame Enjoy also supports 3D surround sound.

Another very new Youku application is Youku Free View, which mainly supports Free Viewpoint Video (FVV). FVV gives users a very nice feature: because the delivered video format is panoramic, users can swipe on the screen to pick their viewing angle and freely watch the content from different angles. Youku Free View has been supported at major CBA games and in the large variety show "This is Street Dance".

Let us look at what value Ali266 can bring to Youku, and how it helps Frame Enjoy raise resolution, frame rate, and dynamic range.

The bandwidth saving that the VVC standard brings on HDR video exceeds 50%, which is very good technical support for an 8K, 120-frame HDR ultra-high-definition experience.

For free-viewpoint panoramic video, VVC natively supports 360 panoramic video, so it can improve subjective quality and help Youku incubate new businesses in this area.

In addition, although I did not mention it earlier, VVC, like HEVC, has a still picture profile, so it also saves bandwidth and storage for still images. Static content on Youku such as thumbnails and cover images can therefore make full use of Ali266's strong compression. Our team is currently working closely with Youku, and we hope to report the results of deploying Ali266 at Youku in the near future.

I just talked about what happened in the past year. Next, let’s take a look at the opportunities and challenges that the video industry has seen in the post-VVC era.

4. Opportunities and challenges in the post-VVC era

This part covers both technology and applications. On the technology side, every generation of standards pursues a higher compression ratio, so VVC is not the end point.

The search for higher compression includes work within the traditional codec framework as well as work on codec frameworks and tool sets built on AI technology. On the application side, we will look briefly at the opportunities and challenges that emerging applications such as AR, VR, MR, cloud gaming, and the metaverse bring in the post-VVC era.

Higher compression: the framework debate

In the pursuit of higher compression, it is time to ask whether the framework used by today's video codec standards will carry over to the next generation.

On the left is the hand-built video codec framework for several generations of video standards, including different functional modules, block segmentation, intra-frame and inter-frame coding, loop filtering, etc.

On the right is the new Learning based framework, which is learned entirely through AI methods, and the encoder and decoder are implemented through a full neural network.

Under the traditional framework, the JVET Standards Committee recently established a reference platform for ECM (enhanced compression model) to explore the next generation of coding technologies.

The current ECM version is 2.0. The table compares the compression performance of ECM 2.0 and VTM-11.0: ECM 2.0 achieves a 14.8% performance gain on the luma signal and an even higher gain on the chroma signal. Encoder and decoder complexity have also gone up, but the main goal for now is to push compression, and complexity is not the primary concern at this stage.

ECM is based on the traditional framework, and most of its tools were already seen during the development of VVC. After further algorithm iteration and polishing, they deliver the 14.8% performance gain.

The state of AI coding is divided into two parts: end-to-end AI and tool set AI.

The example diagram just now shows that the end-to-end AI is completely different from the traditional framework and adopts a new framework.

With today's end-to-end AI, the coding performance on single pictures can slightly exceed VVC. For real video coding, where the temporal dimension comes into play, end-to-end AI performance is still only roughly on par with HEVC, so there is room for improvement.

AI technology can also be used in the form of AI tools. Without changing the traditional framework, AI coding tools are developed for particular functional modules, where they replace existing traditional coding tools or are added on top of them to improve performance.

Most examples of this kind are intra coding tools and in-loop filtering tools. As of today, as far as we know, neural-network-based loop filtering (NNLF) built on multiple neural network models can achieve a performance gain of 10% over VVC.

AI video coding has its own challenges, which are divided into three dimensions.

The first challenge is computational complexity. Here we mainly look at the relationship between parameter count and performance gain. A recent Google paper offers quantitative guidance: if an AI tool delivers a single-digit percentage gain, its parameter count should be kept on the order of 50K. Many AI tools today have between 500K and 1M parameters, an order of magnitude or more above that target, so they need to be simplified. Computational complexity also covers whether the parameters are fixed-point and the amount of computation, especially the number of multiplications.

The second challenge is the volume of data exchange. Tool-level AI in particular may exchange a lot of pixel-level data with the other functional modules of a traditional encoder, and whether this happens at frame level or block level, it is a major challenge for codec throughput. The better-performing tools we see today rely on multiple neural network models, which requires switching between models. When the models have many parameters, the data exchanged while switching models also challenges throughput.

The third challenge is the mobile side. Watching video on a phone is everyday behavior, so how do we decode well on mobile devices? Personally, given the data exchange volume just mentioned, I do not think a decoder plus an external NPU is feasible. And if you want to build a unified decoder, you have to consider hardware cost.

The same Google paper says that the cost of a traditional decoder is roughly equivalent to the cost of implementing a MobileNet model with 2M parameters. MobileNet is a relatively lightweight neural network, and if a single NNLF filter needs 1M parameters, that is already half the cost of a decoder, so much more work on cost reduction is needed. The main challenge for AI coding is therefore to reach a more reasonable cost-performance ratio. This will take substantial R&D investment from many companies, and we still have to wait and see when that ratio becomes reasonable enough for AI video coding to realize its potential.
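The budget arithmetic behind these statements is simple enough to write down. The sketch below uses only the figures quoted in the talk (a 50K parameter target, and a traditional decoder costing about as much as a 2M-parameter MobileNet); treating hardware cost as proportional to parameter count is a simplifying assumption of this sketch, and the function names are hypothetical.

```python
TARGET_PARAMS = 50_000              # guidance for a tool with single-digit gain
DECODER_COST_IN_PARAMS = 2_000_000  # traditional decoder ~ 2M-parameter MobileNet

def over_budget_factor(num_params):
    """How many times larger than the 50K target a tool is."""
    return num_params / TARGET_PARAMS

def relative_decoder_cost(num_params):
    """Tool cost as a fraction of a traditional decoder (assumes linear scaling)."""
    return num_params / DECODER_COST_IN_PARAMS

print(over_budget_factor(500_000))       # a 500K-parameter tool vs. the target
print(relative_decoder_cost(1_000_000))  # a 1M-parameter NNLF filter vs. a decoder
```

A tool with 500K parameters is ten times over the target, and a 1M-parameter filter costs as much as half a traditional decoder under this linear assumption.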

Finally, I want to say a personal opinion.

One reason AI coding faces this cost-performance challenge is that AI technology is inherently data driven. A data-driven design is easier for a specific scenario, and necessarily more challenging for a general-purpose technology that must cover all scenarios.

So I think it is worth looking at AI coding for specific scenarios, which may offer technical and business breakthroughs sooner. You may have noticed that Facebook and Nvidia have applied end-to-end AI coding to face video. In this specific scenario, at ultra-low bit rates, AI coding restores facial detail far better than traditional methods, a sizable breakthrough that shows the potential of AI coding.

Emerging applications

Finally, I will talk about three examples of emerging applications: AR/VR/MR, cloud gaming, and the metaverse. The first two are part of the metaverse, so let's focus on the metaverse.

First, let's take a look at what the metaverse is.

When the term "metaverse" came up recently, I didn't know exactly what it meant myself, so I looked it up. This slide is taken from a New York Times article, which defines the metaverse as a hybrid of virtual experiences, environments, and property.

Here are five examples of how the metaverse manifests itself. Going counterclockwise from the top: if you like games in which you can build your own world and interact with others, that is one manifestation of the metaverse; if you recently attended a meeting or a party as a digital avatar rather than in person, that is another; if you put on a headset or glasses to experience the virtual environments provided by AR and VR, that is also a manifestation; and if you own virtual assets such as NFTs or cryptocurrency, that is one as well. Finally, and I find this the most interesting point, the New York Times considers most social networks to be manifestations of the metaverse too, because the online you is not exactly the same as the offline you. The online you may have a certain virtual component.

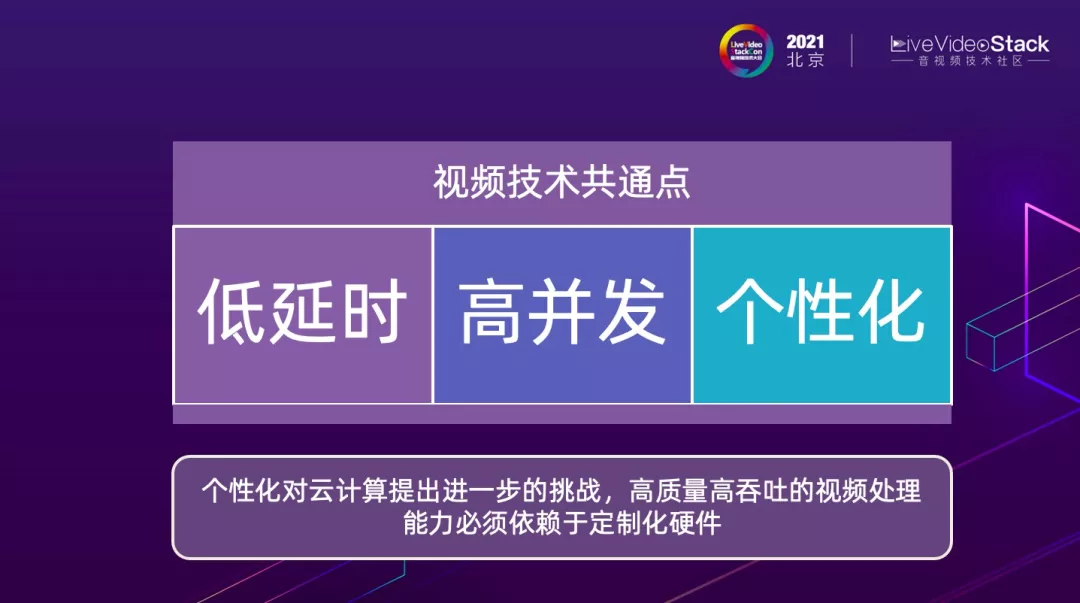

From a video technology point of view, the metaverse and the various AR/VR experiences have several requirements in common: low latency, high concurrency, and personalization.

The first two are similar to the requirements of existing applications; live streaming, for example, also needs low latency and high concurrency. The third, personalization, is a brand-new requirement that calls for completely different technical support.

In these virtual scenes, every user is pursuing their own experience and personalized choices. From the perspective of Alibaba Cloud Intelligence, personalization poses further challenges and higher requirements for cloud computing. Today we support tens of thousands or even millions of concurrent viewers in a single live broadcast, serving many customers at once.

However, if each customer has personalized requirements, and each output can serve only a dozen or a few dozen customers with similar requirements, then higher demands are placed on both the quality and the throughput of cloud video processing, requiring an order-of-magnitude improvement in processing capacity.
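The scaling argument above can be sketched as a simple count of how many distinct outputs the cloud must produce. The viewer numbers and group size below are hypothetical, chosen only for illustration, and the sketch ignores any processing that could be shared between outputs.

```python
import math


def distinct_outputs_needed(viewers: int, viewers_per_output: int) -> int:
    """Number of separately processed outputs needed to serve all viewers."""
    return math.ceil(viewers / viewers_per_output)


if __name__ == "__main__":
    viewers = 1_000_000  # hypothetical audience size

    # Traditional live broadcast: one output is shared by everyone.
    broadcast = distinct_outputs_needed(viewers, viewers)

    # Personalized experience: assume each output serves about 20 similar viewers.
    personalized = distinct_outputs_needed(viewers, 20)

    print(broadcast, personalized)
```

How much of this growth turns into real processing load depends on how much work can be reused across outputs, which the sketch does not model.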

So I think that, to provide technical support for video processing and delivery in the future, customized hardware in the cloud is an inevitable technology trend.

5. Summary

Finally, let's summarize today's talk.

First, we introduced Ali266, a VVC codec developed in-house by Alibaba Cloud. Ali266 provides complete encoding and decoding capabilities for VVC, the latest video standard, and it can run at real-time HD speed: at present, our fastest encoding speed reaches 1080p at 30 frames per second.

Ali266 has excellent compression performance, achieving 50% bandwidth savings at the Slow preset and 40% bandwidth savings at the real-time Fast preset. Ali266 can therefore cover the needs of different services, from quality-first to speed-first. We are also very pleased to report that we are cooperating closely with Youku, hoping to deploy Ali266 at Youku to help it reduce costs, improve quality, and provide technical support for new business.

Looking to the future, technically speaking, the next-generation codec standard still needs to deliver a better compression rate, but we are still exploring which framework to choose, and there is no conclusion today. ECM, under the traditional framework, achieves a performance gain of 15% over VVC, which is still far from the required 40% to 50%. AI coding shows very good performance potential, but it does not yet meet the requirements in terms of price/performance and needs to make great progress.

From an application perspective, the metaverse will bring a richer virtual experience and can support the growth of many new applications. To make the metaverse a reality, cloud computing needs to deliver high-quality, high-throughput personalized processing capabilities as soon as possible to meet the challenges posed by emerging applications.

Finally, although I have not mentioned it before, the experience of the virtual world also needs to become friendlier: we need lighter and more accessible AR/VR devices to arrive as soon as possible.

This is the end of my talk. Thank you very much. I also want to thank the organizer, LVS, for giving me this opportunity to share. Because of the epidemic, I regret that I cannot talk with you face to face. If you have any questions about the content I shared, or if you want to discuss it further, you are welcome to leave a message in the backstage of the official account.
