title: Audio/Video Series 5: Pushing an RTMP Stream with FFmpeg, Relaying It Through Alibaba Cloud, and Playing It in VLC

categories:[ffmpeg]

tags:[audio/video programming]

date: 2021/11/30

<div align = 'right'>Author: hackett</div>

<div align = 'right'>WeChat official account: 加班猿</div>

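In the earlier article on setting up an Nginx + RTMP streaming server on an Alibaba Cloud server, we configured the RTMP push service on Alibaba Cloud and used the PC application OBS to push a stream to it. This time we walk through the whole pipeline in code: 雷神 (Lei Shen)'s "simplest FFmpeg-based streamer" pushes the stream over RTMP, the Alibaba Cloud server relays it, and VLC pulls and plays it.

Link: The Simplest FFmpeg-Based Streamer (Pushing RTMP as an Example) https://blog.csdn.net/leixiao...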

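1. Introduction to RTMP

RTMP stands for Real Time Messaging Protocol. It runs on top of TCP and is really a protocol family: the base RTMP protocol plus variants such as RTMPT, RTMPS, and RTMPE. RTMP is a network protocol designed for real-time data communication, mainly for carrying audio, video, and data between the Flash/AIR platform and streaming/interactive servers that support RTMP. Software that supports the protocol includes Adobe Media Server, Ultrant Media Server, and red5. Like HTTP, RTMP belongs to the application layer of the four-layer TCP/IP model. (Source: Baidu Baike)

The role of an RTMP streamer in a streaming media system is as follows. The streamer first sends video data over RTMP to a streaming media server (for example FMS, Red5, or Wowza); clients (typically Flash Player) then watch the live stream by connecting to that server.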

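2. Program Flow

RTMP carries its payload in the FLV container format, so when opening an RTMP output the container format must be set to "flv". The same applies to other streaming protocols: when pushing a stream over UDP, for example, the container format can be set to "mpegts".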
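The protocol-to-container rule above can be sketched as a small lookup. This is only an illustration: `muxer_for_url` is a hypothetical helper, not an FFmpeg function, and it assumes the returned name would be passed as the `format_name` argument of `avformat_alloc_output_context2()`.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper (not part of FFmpeg): pick the muxer short name for an
// output URL based on its scheme. Returns an empty string for schemes this
// sketch does not know; a caller could then pass NULL and let FFmpeg guess.
std::string muxer_for_url(const std::string& url) {
    assert(!url.empty());
    const std::string::size_type pos = url.find("://");
    if (pos == std::string::npos) {
        return "";  // plain file name, no protocol prefix
    }
    const std::string scheme = url.substr(0, pos);
    // RTMP and its variants (rtmps, rtmpt, rtmpe, ...) all carry FLV.
    if (scheme.compare(0, 4, "rtmp") == 0) {
        return "flv";
    }
    // Pushing over UDP: MPEG-TS is the usual container.
    if (scheme == "udp") {
        return "mpegts";
    }
    return "";
}
```

For instance, `muxer_for_url("rtmp://example.com/live/stream")` yields `"flv"`, which matches the format name hard-coded in the program in section 3.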

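av_register_all(): register all FFmpeg muxers, demuxers, and codecs (deprecated in newer FFmpeg releases).

avformat_network_init(): initialize the network layer.

avformat_open_input(): open the input.

avformat_find_stream_info(): probe the input for stream information.

avformat_alloc_output_context2(): allocate the output AVFormatContext.

avcodec_copy_context(): copy the AVCodecContext settings from the input stream to the output stream.

avformat_write_header(): write the file header.

av_read_frame(): read one AVPacket from the input.

av_rescale_q_rnd(): convert PTS/DTS from the input time base to the output time base.

av_interleaved_write_frame(): write one AVPacket to the output, interleaving packets from different streams correctly.

av_write_trailer(): write the file trailer.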
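The PTS/DTS conversion step is the least obvious one in the list, so here is a minimal sketch of the arithmetic behind it. It does not call FFmpeg: `Rational` and `rescale_ts` are hypothetical stand-ins for `AVRational` and `av_rescale_q_rnd()` with `AV_ROUND_NEAR_INF | AV_ROUND_PASS_MINMAX`, and unlike the real function this version does not protect against overflow in the intermediate product.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for AVRational: a time base of num/den seconds per tick.
struct Rational {
    int num;
    int den;
};

// Convert timestamp `ts` from time base `from` to time base `to`:
//   result = ts * (from.num / from.den) / (to.num / to.den)
// rounded to nearest, with halves rounded away from zero.
// INT64_MIN and INT64_MAX are passed through unchanged, so a "no timestamp"
// marker such as AV_NOPTS_VALUE (INT64_MIN) survives the conversion.
int64_t rescale_ts(int64_t ts, Rational from, Rational to) {
    assert(from.num > 0 && from.den > 0 && to.num > 0 && to.den > 0);
    if (ts == INT64_MIN || ts == INT64_MAX) {
        return ts;
    }
    const int64_t b = static_cast<int64_t>(from.num) * to.den;
    const int64_t c = static_cast<int64_t>(from.den) * to.num;
    const int64_t half = c / 2;
    // Sketch only: ts * b can overflow for very large timestamps.
    if (ts >= 0) {
        return (ts * b + half) / c;
    }
    return -((-ts * b + half) / c);
}
```

With a 1/90000 input time base and the 1/1000 time base used by FLV, one second of input (`ts = 90000`) becomes `1000`, that is, 1000 milliseconds.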

3. Code

#include <cstdio>

extern "C" { // FFmpeg is a C library, so its headers need C linkage
#include "libavformat/avformat.h"
#include "libavutil/time.h"
}

// Note: this program uses the older FFmpeg API (av_register_all, AVStream::codec,
// avcodec_copy_context, av_free_packet), which is deprecated in newer releases.

int main(int argc, char* argv[]) {
    AVOutputFormat* ofmt = NULL;
    // One AVFormatContext for the input and one for the output
    AVFormatContext* ifmt_ctx = NULL, * ofmt_ctx = NULL;
    AVPacket pkt;
    const char* in_filename, * out_filename;
    int ret;
    unsigned int i;
    int videoindex = -1;
    int frame_index = 0;
    int64_t start_time = 0;

    //in_filename = "cuc_ieschool.mov";
    //in_filename = "cuc_ieschool.mkv";
    //in_filename = "cuc_ieschool.ts";
    //in_filename = "cuc_ieschool.mp4";
    //in_filename = "cuc_ieschool.h264";
    in_filename = "./ouput_1min.flv"; // Input file URL
    //in_filename = "shanghai03_p.h264";

    //out_filename = "rtmp://localhost/publishlive/livestream"; // Output URL [RTMP]
    out_filename = "rtmp://<Alibaba-Cloud-server-IP>:1935/live"; // Output URL [RTMP]
    //out_filename = "rtp://233.233.233.233:6666"; // Output URL [UDP]

    // Register all FFmpeg muxers, demuxers, and codecs
    av_register_all();
    // Initialize the network layer
    avformat_network_init();

    // Open the input
    if ((ret = avformat_open_input(&ifmt_ctx, in_filename, 0, 0)) < 0) {
        printf("Could not open input file.\n");
        goto end;
    }
    if ((ret = avformat_find_stream_info(ifmt_ctx, 0)) < 0) {
        printf("Failed to retrieve input stream information\n");
        goto end;
    }

    // Remember the index of the first video stream
    for (i = 0; i < ifmt_ctx->nb_streams; i++)
        if (ifmt_ctx->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO) {
            videoindex = (int)i;
            break;
        }

    av_dump_format(ifmt_ctx, 0, in_filename, 0);

    // Allocate the output context
    avformat_alloc_output_context2(&ofmt_ctx, NULL, "flv", out_filename); // RTMP
    //avformat_alloc_output_context2(&ofmt_ctx, NULL, "mpegts", out_filename); // UDP
    if (!ofmt_ctx) {
        printf("Could not create output context\n");
        ret = AVERROR_UNKNOWN;
        goto end;
    }
    ofmt = ofmt_ctx->oformat;

    for (i = 0; i < ifmt_ctx->nb_streams; i++) {
        // Create an output AVStream for each input AVStream
        AVStream* in_stream = ifmt_ctx->streams[i];
        AVStream* out_stream = avformat_new_stream(ofmt_ctx, in_stream->codec->codec);
        if (!out_stream) {
            printf("Failed allocating output stream\n");
            ret = AVERROR_UNKNOWN;
            goto end;
        }
        // Copy the AVCodecContext settings from input to output
        ret = avcodec_copy_context(out_stream->codec, in_stream->codec);
        if (ret < 0) {
            printf("Failed to copy context from input to output stream codec context\n");
            goto end;
        }
        out_stream->codec->codec_tag = 0;
        if (ofmt_ctx->oformat->flags & AVFMT_GLOBALHEADER)
            out_stream->codec->flags |= AV_CODEC_FLAG_GLOBAL_HEADER;
    }

    // Dump the output format
    av_dump_format(ofmt_ctx, 0, out_filename, 1);

    // Open the output URL
    if (!(ofmt->flags & AVFMT_NOFILE)) {
        ret = avio_open(&ofmt_ctx->pb, out_filename, AVIO_FLAG_WRITE);
        if (ret < 0) {
            printf("Could not open output URL '%s'\n", out_filename);
            goto end;
        }
    }

    // Write the file header
    ret = avformat_write_header(ofmt_ctx, NULL);
    if (ret < 0) {
        printf("Error occurred when opening output URL\n");
        goto end;
    }

    start_time = av_gettime();
    while (1) {
        AVStream* in_stream, * out_stream;
        // Read one AVPacket from the input
        ret = av_read_frame(ifmt_ctx, &pkt);
        if (ret < 0)
            break;

        // Fix for inputs without PTS (for example raw H.264): synthesize a
        // PTS from the frame rate. Needs a video stream to take the rate from.
        if (pkt.pts == AV_NOPTS_VALUE && videoindex >= 0) {
            AVRational time_base1 = ifmt_ctx->streams[videoindex]->time_base;
            // Duration between two frames, in microseconds
            int64_t calc_duration = (double)AV_TIME_BASE / av_q2d(ifmt_ctx->streams[videoindex]->r_frame_rate);
            // Express PTS, DTS, and duration in the stream's time base
            pkt.pts = (double)(frame_index * calc_duration) / (double)(av_q2d(time_base1) * AV_TIME_BASE);
            pkt.dts = pkt.pts;
            pkt.duration = (double)calc_duration / (double)(av_q2d(time_base1) * AV_TIME_BASE);
        }

        // Important: delay so that packets are sent at playback speed
        // instead of as fast as the file can be read
        if (pkt.stream_index == videoindex) {
            AVRational time_base = ifmt_ctx->streams[videoindex]->time_base;
            AVRational time_base_q = { 1, AV_TIME_BASE };
            int64_t pts_time = av_rescale_q(pkt.dts, time_base, time_base_q);
            int64_t now_time = av_gettime() - start_time;
            if (pts_time > now_time)
                av_usleep(pts_time - now_time);
        }

        in_stream = ifmt_ctx->streams[pkt.stream_index];
        out_stream = ofmt_ctx->streams[pkt.stream_index];

        /* copy packet */
        // Convert PTS/DTS from the input time base to the output time base
        pkt.pts = av_rescale_q_rnd(pkt.pts, in_stream->time_base, out_stream->time_base, (AVRounding)(AV_ROUND_NEAR_INF | AV_ROUND_PASS_MINMAX));
        pkt.dts = av_rescale_q_rnd(pkt.dts, in_stream->time_base, out_stream->time_base, (AVRounding)(AV_ROUND_NEAR_INF | AV_ROUND_PASS_MINMAX));
        pkt.duration = av_rescale_q(pkt.duration, in_stream->time_base, out_stream->time_base);
        pkt.pos = -1;

        // Print progress
        if (pkt.stream_index == videoindex) {
            printf("Send %8d video frames to output URL\n", frame_index);
            frame_index++;
        }

        //ret = av_write_frame(ofmt_ctx, &pkt);
        ret = av_interleaved_write_frame(ofmt_ctx, &pkt);
        if (ret < 0) {
            printf("Error muxing packet\n");
            break;
        }
        av_free_packet(&pkt);
    }

    // Write the file trailer
    av_write_trailer(ofmt_ctx);

end:
    avformat_close_input(&ifmt_ctx);
    /* close output */
    if (ofmt_ctx && !(ofmt->flags & AVFMT_NOFILE))
        avio_close(ofmt_ctx->pb);
    avformat_free_context(ofmt_ctx);
    if (ret < 0 && ret != AVERROR_EOF) {
        printf("Error occurred.\n");
        return -1;
    }
    return 0;

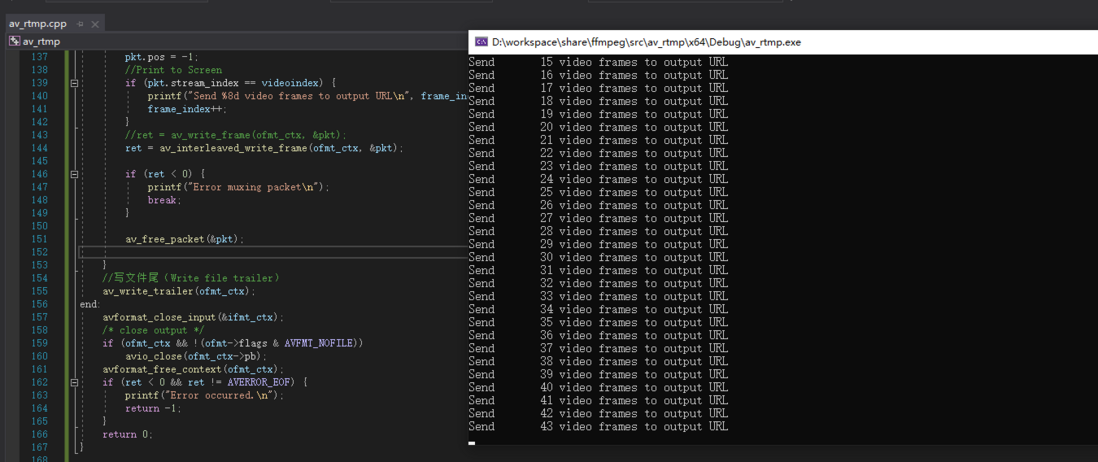

}四、运行结果

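If you enjoyed this article, please like, bookmark, and share it. Articles are also published on my WeChat official account [加班猿].

I'm hackett. See you next time.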
