Source of inspiration

I saw an interesting video on Bilibili (B站) a while ago:

Everyone can take a look, it's very interesting.

The uploader (UP主) uses code to read the barrage (the scrolling viewer comments) of the live room in real time, then translates the barrage into instructions that control the game "Cyberpunk 2077" on his computer.

The audience kept growing, and in the end even the live room crashed (of course, that was actually because all of Bilibili went down that day).

I was very curious how it was done.

Laymen watch the spectacle, insiders look at the technique. As a half-expert, I will imitate the uploader's idea and build one myself.

So my goal today is to reproduce code that lets the barrage control the live room, and then run it in my own live room.

Let me first show you the final video of my finished product:

[Bilibili] Imitating the uploader and building a barrage-controlled live room!

Does it look decent?

First version design ideas

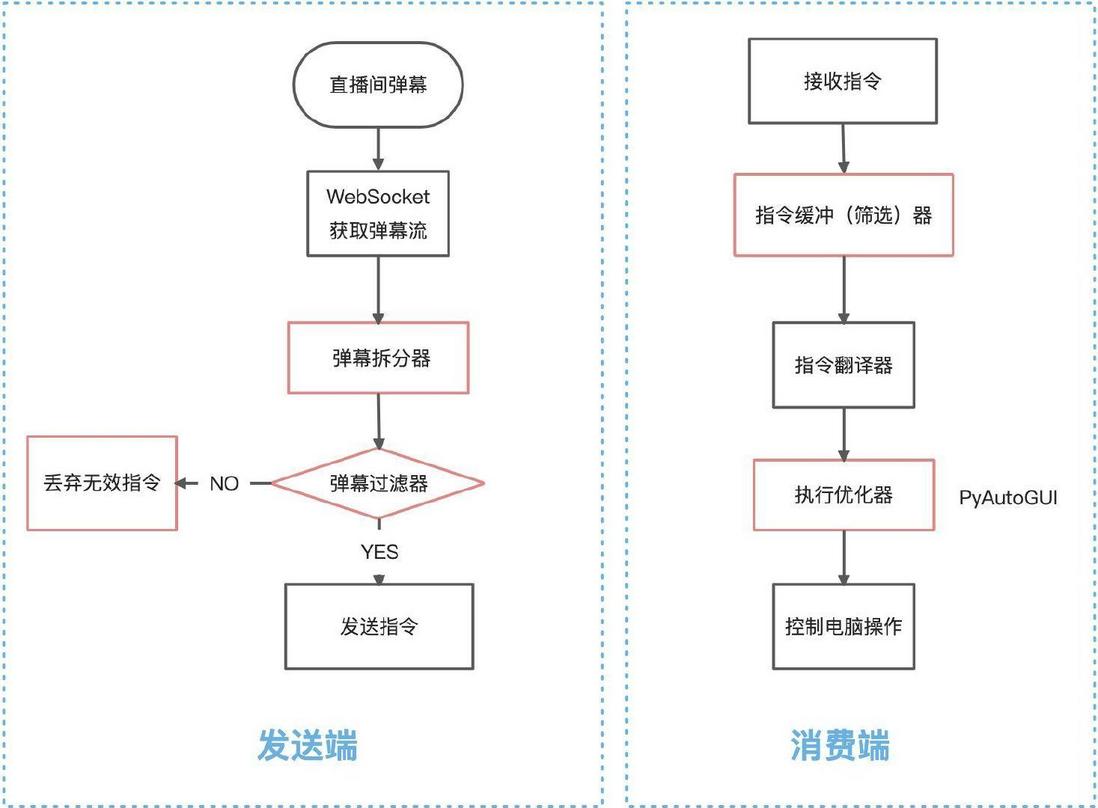

First, plan a general idea in your mind, as shown in the figure below:

The idea looks simple, but it still needs some explanation. First of all, we have to figure out how to capture the barrage content.

On most common live streaming platforms, the browser client receives the barrage through a WebSocket push. Mobile phones, tablets, and other non-web clients may use more complicated TCP-based pushes.

On TCP message delivery, there is a very good article about the evolution of the terminal message delivery service Pike.

In the final analysis, the barrage is delivered over a long-lived connection between the client and the server.

So all we need to do is have our code act as the client and open a long-lived connection to the live streaming platform. That gives us the barrage.

Our goal is the whole barrage control pipeline, so capturing the barrage is not the focus of this article. Let's look for a ready-made wheel! After searching GitHub, I found a very good open source library that can fetch the barrage of many live streaming platforms:

https://github.com/wbt5/real-url

Get the real streaming addresses (live sources) and barrage of 58 live streaming platforms such as Douyu, Huya, Bilibili, Douyin, and Kuaishou. The live sources can be played in players such as PotPlayer and flv.js.

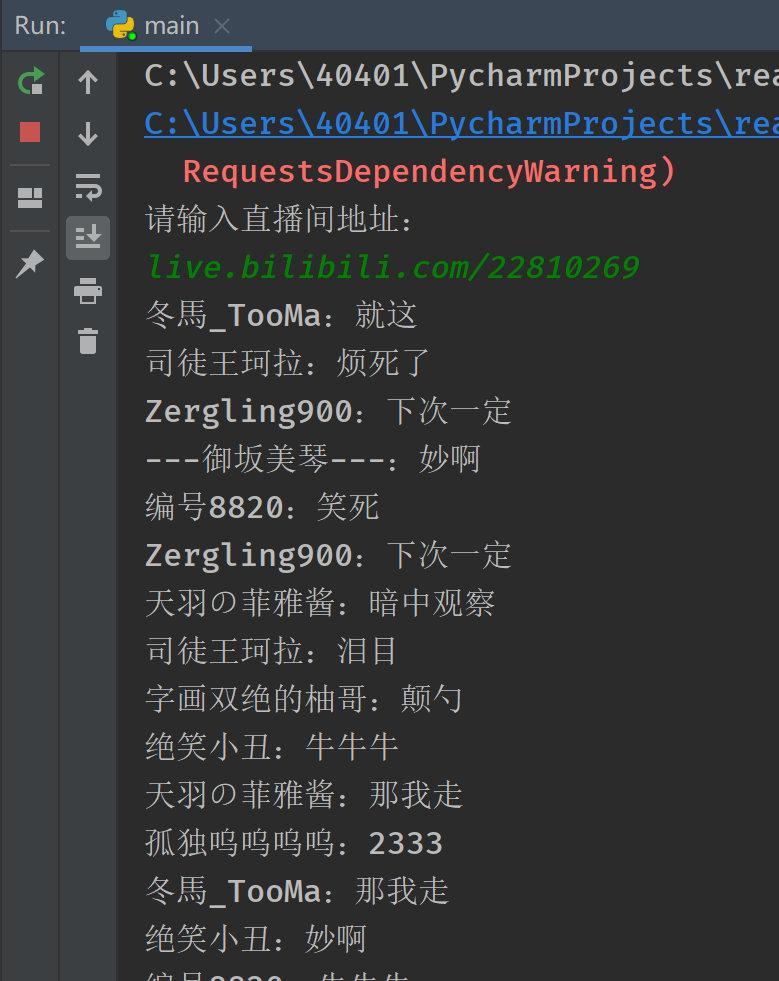

We clone the code, run the main function, and simply enter a Bilibili live room address to get the real-time barrage stream of the live room:

The barrage (including the user name) obtained in the code is directly printed on the console.

How did he do it? The core Python code is as follows (not familiar with Python? It doesn't matter, just treat it as pseudo code, it's easy to understand):

    import json
    import random
    from struct import pack

    import aiohttp


    class Bilibili:
        wss_url = 'wss://broadcastlv.chat.bilibili.com/sub'
        # Heartbeat packet: a 16-byte header followed by the body "[object Object]"
        heartbeat = b'\x00\x00\x00\x1f\x00\x10\x00\x01\x00\x00\x00\x02\x00\x00\x00\x01\x5b\x6f\x62\x6a\x65\x63\x74\x20' \
                    b'\x4f\x62\x6a\x65\x63\x74\x5d'
        heartbeatInterval = 60

        @staticmethod
        async def get_ws_info(url):
            # Look up the real room id from the id at the end of the live room URL
            url = 'https://api.live.bilibili.com/room/v1/Room/room_init?id=' + url.split('/')[-1]
            reg_datas = []
            async with aiohttp.ClientSession() as session:
                async with session.get(url) as resp:
                    room_json = json.loads(await resp.text())
                    room_id = room_json['data']['room_id']
                    data = json.dumps({
                        'roomid': room_id,
                        'uid': int(1e14 + 2e14 * random.random()),
                        'protover': 1
                    }, separators=(',', ':')).encode('ascii')
                    # Prefix the JSON body with a 16-byte header (total length first)
                    data = (pack('>i', len(data) + 16) + b'\x00\x10\x00\x01' +
                            pack('>i', 7) + pack('>i', 1) + data)
                    reg_datas.append(data)
            return Bilibili.wss_url, reg_datas

It connects to Bilibili's live barrage WSS address, that is, the WebSocket address, and then pretends to be a client to receive the barrage pushes.
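To make the packet layout in the snippet above easier to see, here is a minimal sketch that builds and parses the same 16-byte header with `struct`. The field names (`total_len`, `header_len`, `version`, `operation`, `sequence`) and the constants `OP_HEARTBEAT` and `OP_JOIN_ROOM` are my own reading of the byte constants in that code, not an official specification.

```python
from struct import Struct

# Assumed header layout, inferred from the constants in the snippet above:
# total length (4 bytes), header length (2), version (2), operation (4), sequence (4)
HEADER = Struct('>IHHII')

OP_HEARTBEAT = 2  # operation value found in the heartbeat constant
OP_JOIN_ROOM = 7  # operation value used when joining a room


def build_packet(operation, body, version=1, sequence=1):
    """Prefix body with a 16-byte big-endian header."""
    header = HEADER.pack(HEADER.size + len(body), HEADER.size,
                         version, operation, sequence)
    return header + body


def parse_header(packet):
    """Decode the first 16 bytes of a packet into named fields."""
    total_len, header_len, version, operation, sequence = HEADER.unpack_from(packet)
    return {
        'total_len': total_len,
        'header_len': header_len,
        'version': version,
        'operation': operation,
        'sequence': sequence,
    }


if __name__ == '__main__':
    heartbeat = build_packet(OP_HEARTBEAT, b'[object Object]')
    print(heartbeat)
    print(parse_header(heartbeat))
```

Building a heartbeat this way yields the same bytes as the hard-coded `heartbeat` constant, which suggests the reading of the header is at least consistent with the library's code.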

OK, the first step is done. The next step is to send the barrage through a message queue, with a separate consumer that receives it.

To keep things as simple as possible, we will skip the professional message queues. Here we use a Redis list as the queue and push the barrage content into it.
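As a rough illustration of why a list works as a queue: pushing on the right with `rpush` and popping on the left with `lpop` gives first-in, first-out order. The sketch below assumes hypothetical helper names (`send_command`, `next_command`) and uses a small in-memory stand-in, `InMemoryListStore`, so it runs without a Redis server; a real `redis.Redis` client exposes `rpush` and `lpop` methods with the same names.

```python
from collections import deque


class InMemoryListStore:
    """Hypothetical stand-in mimicking two Redis list commands (offline demo only)."""

    def __init__(self):
        self._lists = {}

    def rpush(self, name, *values):
        # Append to the tail of the list and return the new length
        self._lists.setdefault(name, deque()).extend(values)
        return len(self._lists[name])

    def lpop(self, name):
        # Remove and return the head of the list, or None if it is empty
        items = self._lists.get(name)
        return items.popleft() if items else None


def send_command(store, list_name, command):
    """Producer side: append one command to the tail of the queue."""
    store.rpush(list_name, command)


def next_command(store, list_name):
    """Consumer side: take the oldest command, or None when the queue is empty."""
    return store.lpop(list_name)


if __name__ == '__main__':
    store = InMemoryListStore()
    for key in ('w', 'a', 'd'):
        send_command(store, 'commands', key)
    print(next_command(store, 'commands'))
```

With a running Redis server, the same two helpers should work unchanged when `store` is `redis.Redis(host='localhost', port=6379, decode_responses=True)`.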

The sender's core code is as follows:

    import redis

    # key_list (the allowed keys) and list_name (the Redis list used as the queue)
    # are module-level settings not shown in this excerpt.


    # Connect to Redis
    def init_redis():
        r = redis.Redis(host='localhost', port=6379, decode_responses=True)
        return r


    # Message sender
    # Note: the `redis` parameter is the client returned by init_redis(), not the module
    async def printer(q, redis):
        while True:
            m = await q.get()
            if m['msg_type'] == 'danmaku':
                print(f'{m["name"]}:{m["content"]}')
                list_str = list(m["content"])
                print("Barrage split:", list_str)
                for char in list_str:
                    if char.lower() in key_list:
                        print('Pushing to queue:', char.lower())
                        redis.rpush(list_name, char.lower())

After the barrage content has been sent, we need to write a consumer that consumes the barrage and extracts all the commands in it.

So how does the consumer act on the barrage it receives? We need a way to control the computer from code.

Sticking to the principle of not reinventing the wheel, we found PyAutoGUI, a Python automation library.

PyAutoGUI is a cross-platform GUI automation Python module for human beings. Used to programmatically control the mouse & keyboard.

After installing the library and importing it, we can control the mouse and keyboard through its API. Perfect!
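A minimal sketch of the press-and-hold idea, using PyAutoGUI's `keyDown` and `keyUp`. The helper name `hold_key`, the optional `keyboard` argument, and the `DryRunKeyboard` class are assumptions added for illustration; the dry-run class lets you check the logic without pressing real keys or needing a display.

```python
import time


class DryRunKeyboard:
    """Hypothetical stand-in that records calls instead of pressing real keys."""

    def __init__(self):
        self.events = []

    def keyDown(self, key):
        self.events.append(('down', key))

    def keyUp(self, key):
        self.events.append(('up', key))


def hold_key(key_name, press_sec, keyboard=None):
    """Press key_name, hold it for press_sec seconds, then release it."""
    if keyboard is None:
        # Imported lazily so the sketch can be exercised without a display
        import pyautogui
        keyboard = pyautogui
    keyboard.keyDown(key_name)
    try:
        time.sleep(press_sec)
    finally:
        # Release the key even if the sleep is interrupted
        keyboard.keyUp(key_name)


if __name__ == '__main__':
    kb = DryRunKeyboard()
    hold_key('w', 0.1, keyboard=kb)
    print(kb.events)
```

Calling `hold_key('w', 0.5)` without the third argument would go through the real PyAutoGUI and press the key on your machine, so try the dry-run keyboard first.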

The core Python code of the consumer (controlling the computer) is as follows:

    import time

    import pyautogui
    import redis

    # key_list, list_name, press_sec and loop_sec are module-level settings
    # not shown in this excerpt.


    # Connect to Redis
    def init_redis():
        r = redis.Redis(host='localhost', port=6379, decode_responses=True)
        return r


    # Consumer
    def control(key_name):
        print("key_name =", key_name)
        if key_name is None:
            print("No command issued this round")
            return
        key_name = key_name.lower()
        # Send the command to the computer
        if key_name in key_list:
            print("Command start:", key_name)
            pyautogui.keyDown(key_name)
            time.sleep(press_sec)
            pyautogui.keyUp(key_name)
            print("Command end:", key_name)


    if __name__ == '__main__':
        r = init_redis()
        print("Listening for barrage messages, loop_sec =", loop_sec)
        while True:
            key_name = r.lpop(list_name)
            control(key_name)
            time.sleep(loop_sec)

OK, that's it. We start the barrage sender and the consumer, and the consume loop begins to run. Whenever a barrage contains keys commonly used to control games, such as w, s, a, and d, the computer sends those key presses to itself.

Problems running the first version

I excitedly opened my Bilibili live room and started debugging, only to find that I had been too naive. The first version of the code exposed a lot of problems. Let's go through them one by one, along with how I solved them.

Commands are not user-friendly

Viewers actually like to send repeated characters such as www or dddd, but the first version only recognized a barrage made of a single character. Viewers found that their commands did nothing, and then they left the live room.

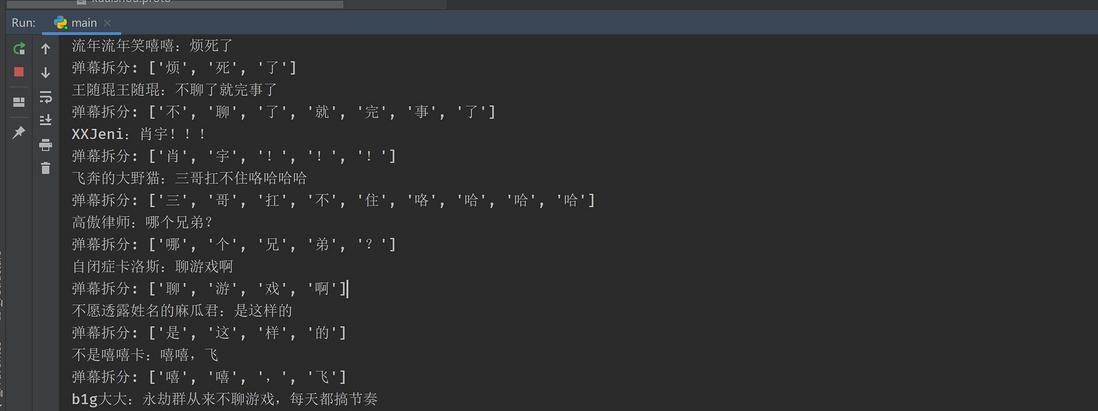

This is easy to solve: split the barrage content into individual characters and push each one to the queue.

Solution: split the barrage, turning DDD into D, D, D, and send each one to the consumer.

Dangerous commands

The next problem is that viewers' commands can go beyond what they are supposed to do.

When I opened Cyberpunk 2077 and let the barrage audience control the driving, a mysterious viewer entered the live room and silently sent an "F"...

Then V (the protagonist) got out of the car. Damn, I asked you to drive, not to fight the police...

Solution: add a barrage filter.
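The splitting and filtering steps can be sketched as one pure function, which makes them easy to test away from the live room. The function name `extract_commands` and the `DRIVING_KEYS` whitelist are hypothetical; the whitelist simply assumes a driving scene where only w, a, s, and d should get through.

```python
# Hypothetical whitelist for a driving scene: only steering keys are allowed
DRIVING_KEYS = frozenset('wasd')


def extract_commands(content, allowed_keys):
    """Split a barrage into lowercase characters and keep only the allowed keys."""
    return [char for char in content.lower() if char in allowed_keys]


if __name__ == '__main__':
    print(extract_commands('DDD', DRIVING_KEYS))   # repeated keys are kept one by one
    print(extract_commands('wFd', DRIVING_KEYS))   # 'f' is not in the whitelist
```

Keeping the whitelist as a parameter means the allowed keys can change with the scene, without touching the splitting logic.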

    # Split the barrage and send only the allowed commands to the consumer
    key_list = ('w', 's', 'a', 'd', 'j', 'k', 'u', 'i', 'z', 'x', 'f', 'enter', 'shift', 'backspace')
    list_str = list(m["content"])
    print("Barrage split:", list_str)
    for char in list_str:
        if char.lower() in key_list:
            print('Pushing to queue:', char.lower())
            redis.rpush(list_name, char.lower())

After the above two problems are solved, the sender runs as follows:

Barrage command pile-up

This is a big problem. If we process every command sent by every viewer, commands pile up faster than the consumer can execute them.

Solution: process one command per fixed time interval and discard the rest.
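One possible way to express "take one, drop the rest" is to send both Redis commands through a single redis-py pipeline, so they execute together in one round trip. This is a sketch under assumptions: `take_one_and_clear` is a hypothetical helper, and `InMemoryClient` is a small stand-in that mimics only the calls used here so the example runs without a Redis server.

```python
def take_one_and_clear(client, list_name):
    """Pop the oldest queued command and delete whatever else is in the list."""
    pipe = client.pipeline()  # redis-py pipelines are transactional by default
    pipe.lpop(list_name)
    pipe.delete(list_name)
    first, _removed = pipe.execute()
    return first


class InMemoryClient:
    """Hypothetical stand-in for redis.Redis (offline demo only)."""

    def __init__(self):
        self.lists = {}

    def rpush(self, name, *values):
        self.lists.setdefault(name, []).extend(values)
        return len(self.lists[name])

    def pipeline(self):
        return _InMemoryPipeline(self)


class _InMemoryPipeline:
    """Queues commands and runs them in order when execute() is called."""

    def __init__(self, client):
        self.client = client
        self.queued = []

    def lpop(self, name):
        self.queued.append(('lpop', name))
        return self

    def delete(self, name):
        self.queued.append(('delete', name))
        return self

    def execute(self):
        results = []
        for op, name in self.queued:
            if op == 'lpop':
                items = self.client.lists.get(name, [])
                results.append(items.pop(0) if items else None)
            else:
                removed = self.client.lists.pop(name, None)
                results.append(1 if removed else 0)
        self.queued = []
        return results


if __name__ == '__main__':
    client = InMemoryClient()
    client.rpush('commands', 'w', 'a', 'd')
    print(take_one_and_clear(client, 'commands'))
```

The behavior is meant to match the two separate calls in the article's loop; the pipeline only groups them.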

    if __name__ == '__main__':
        r = init_redis()
        print("Listening for barrage messages, loop_sec =", loop_sec)
        while True:
            key_name = r.lpop(list_name)
            # Take only one command each round, then clear the list,
            # so every other barrage in this time window is dropped!
            r.delete(list_name)
            control(key_name)
            time.sleep(loop_sec)

Delay between sending a barrage and seeing the result

In the first video you can also tell that about 5 seconds pass between a viewer sending a command and the viewer seeing its effect. At least 3 of those seconds are the latency of the live stream itself, which is hard to optimize.

Reworked version

After a round of tuning and rework, our version can be considered to have gone from V0.1 to V0.2. The tiger cried.

The following is the structure diagram after reconstruction:

Postscript

After finishing this project, I tried it many times in my live room, and the experience came very close to the uploader's video. I streamed for a long time, but at peak popularity there were only a few dozen viewers and a dozen or so barrage messages, many of them posted by me. I had hoped the audience would bring more people in to play together, but it did not turn out that way.

The conclusion is that I need fans before I can play this game, sob. If you don't mind, you can follow my Bilibili account, also called Mansandaojiang. I occasionally post interesting technical videos.

All the code in this article is open source on GitHub; you can try it in your own live room:

https://github.com/qqxx6661/live_comment_control_stream

I am an engineer toiling away at Alibaba, @蛮三刀酱.

Keeping the quality articles coming depends on your likes, reposts, and shares!

My only technical public account on the whole web: talk about back-end technology
