aircv is a small open-source project released by NetEase, and it is probably the most frequently referenced project for simple image matching. The previous article described how to use aircv's find_template. However, it is not a mature project: it has quite a few pitfalls and needs improvement. Let's start today by analyzing the code logic.

Core functions find_template and find_all_template

The find_template function returns the single best match (which is not necessarily the topmost one on the page), while find_all_template returns every match whose confidence is at or above the specified threshold.

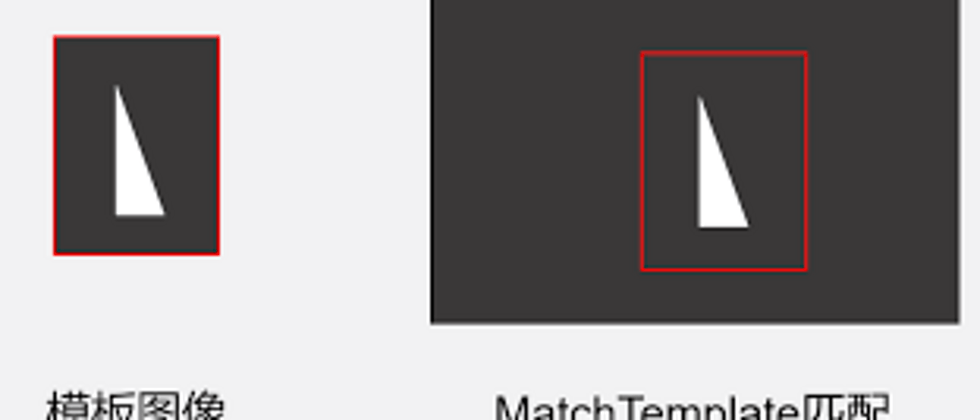

For example, suppose you need to find a template image in a screenshot of the Sif page.

The result is as shown in the figure below:

If we go in and look at the code, we will find that find_template is written like this:

    def find_template(im_source, im_search, threshold=0.5, rgb=False, bgremove=False):
        '''
        @return find location
        if not found; return None
        '''
        result = find_all_template(im_source, im_search, threshold, 1, rgb, bgremove)
        return result[0] if result else None

Well, look at that! It calls find_all_template directly and then takes the first return value...

So find_all_template is the real core. Let's exclude irrelevant code and look at the most critical part:

    import cv2

    def find_all_template(im_source, im_search, threshold=0.5, maxcnt=0, rgb=False, bgremove=False):
        # Matching method: aircv hard-codes CCOEFF_NORMED, and in most tests
        # this method does indeed give the better results
        method = cv2.TM_CCOEFF_NORMED
        # Compute the match matrix
        res = cv2.matchTemplate(im_source, im_search, method)
        w, h = im_search.shape[1], im_search.shape[0]
        result = []
        while True:
            # Find the minimum and maximum match values and their locations
            min_val, max_val, min_loc, max_loc = cv2.minMaxLoc(res)
            top_left = max_loc
            if max_val < threshold:
                break
            # Compute the center point
            middle_point = (top_left[0]+w/2, top_left[1]+h/2)
            # Compute the four corner points and store them in the result list
            result.append(dict(
                result=middle_point,
                rectangle=(top_left, (top_left[0], top_left[1] + h), (top_left[0] + w, top_left[1]), (top_left[0] + w, top_left[1] + h)),
                confidence=max_val
            ))
            # Stop once maxcnt results have been collected (0 means no limit)
            if maxcnt and len(result) >= maxcnt:
                break
            # Fill in the best-matching region, then keep looking for the next one
            cv2.floodFill(res, None, max_loc, (-1000,), max_val-threshold+0.1, 1, flags=cv2.FLOODFILL_FIXED_RANGE)
        return result

Here, cv2.matchTemplate is an OpenCV function whose return value is a matrix. It is equivalent to sliding the small image over the large image, starting from the upper-left corner and moving one pixel at a time, computing a match score at each position; together these scores form the result matrix.

The resulting matrix size should be: (W-w + 1) x (H-h + 1), where W, H are the width and height of the large image, and w and h are the width and height of the small image.

The point with the maximum value in this matrix indicates that when the upper left corner of the small image is at this position, the matching degree is the highest, and the first matching result is obtained.
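To make the sliding-window idea concrete, here is a minimal sketch in plain NumPy. It is a slow stand-in for what cv2.matchTemplate does with TM_CCOEFF_NORMED on single-channel images, not OpenCV's actual implementation; the function name match_template_ccoeff_normed and the random test image are made up for illustration. Note that NumPy reports the shape as rows first, i.e. (H - h + 1, W - w + 1).

```python
import numpy as np

def match_template_ccoeff_normed(source, template):
    """Naive sliding-window matcher for single-channel images (illustrative only)."""
    H, W = source.shape
    h, w = template.shape
    # Zero-mean template and its norm are the same for every window position
    t = template.astype(np.float64) - template.mean()
    t_norm = np.sqrt((t * t).sum())
    res = np.zeros((H - h + 1, W - w + 1), dtype=np.float64)
    for y in range(H - h + 1):
        for x in range(W - w + 1):
            patch = source[y:y + h, x:x + w].astype(np.float64)
            p = patch - patch.mean()
            denom = t_norm * np.sqrt((p * p).sum())
            # Flat patches have zero variance; treat them as "no match"
            res[y, x] = (t * p).sum() / denom if denom > 0 else 0.0
    return res

rng = np.random.default_rng(0)
source = rng.integers(0, 256, size=(20, 30)).astype(np.uint8)  # H=20, W=30
template = source[5:9, 12:18].copy()                           # h=4, w=6, cut at x=12, y=5

res = match_template_ccoeff_normed(source, template)
print(res.shape)  # (17, 25), i.e. (H - h + 1, W - w + 1)

# The position of the maximum is the top-left corner of the best match
y, x = np.unravel_index(np.argmax(res), res.shape)
top_left = (int(x), int(y))
print(top_left)  # (12, 5)
```

The double loop is far too slow for real screenshots; it is only meant to show where the result matrix and its maximum come from.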

Finally, cv2.floodFill overwrites the region around the maximum in the result matrix with another value so that the next maximum can be found. This also avoids overlapping regions (otherwise the next maximum might fall inside the area just found).
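The same "mask the found area, then look again" loop can also be sketched with a plain NumPy slice instead of floodFill. This is an assumption-laden illustration, not aircv's code: find_peaks is a hypothetical helper, and a fixed rectangle does not behave exactly like floodFill, which fills a connected region of similar values. Here every top-left corner whose rectangle would overlap the one just found is overwritten.

```python
import numpy as np

def find_peaks(res, w, h, threshold=0.5, maxcnt=0):
    """Collect peaks from a match-score matrix, masking each found region with a slice."""
    res = res.copy()  # keep the caller's matrix intact
    found = []
    while True:
        y, x = np.unravel_index(np.argmax(res), res.shape)
        score = float(res[y, x])
        if score < threshold:
            break
        found.append(((int(x), int(y)), score))
        if maxcnt and len(found) >= maxcnt:
            break
        # Any top-left corner closer than (w, h) to this peak would give an
        # overlapping rectangle, so overwrite that whole neighbourhood
        y0, y1 = max(y - h + 1, 0), y + h
        x0, x1 = max(x - w + 1, 0), x + w
        res[y0:y1, x0:x1] = -1000.0
    return found

# Toy score matrix: two nearby peaks (overlapping matches) and one far away
scores = np.zeros((10, 10))
scores[2, 3] = 0.95
scores[2, 4] = 0.90  # overlaps the first peak, so it should be suppressed
scores[7, 8] = 0.80

peaks = find_peaks(scores, w=3, h=3)
print(peaks)  # [((3, 2), 0.95), ((8, 7), 0.8)]
```

Whether a fixed rectangle or a flood fill gives better results on real screenshots is something to test rather than assume.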

A few small issues

- Does not support grayscale images or images with a transparent channel

OpenCV's matchTemplate is usually applied to grayscale (single-channel) images, but in most cases we are searching color images, so aircv's wrapper splits the three BGR channels, calls matchTemplate on each one separately, and then merges the results.

However, this wrapper is not compatible with images that are already grayscale and fails on them, and it also raises an error if the source image has a transparent (alpha) channel. I submitted a PR for this, but it has not been handled. It seems the project is no longer maintained.
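One way to work around this on the caller's side is to normalize every input to three channels before handing it to the matcher. The sketch below is only an assumption about how that could look: to_bgr is a hypothetical helper, not part of aircv and not the content of the PR mentioned above, and simply dropping the alpha channel is just one possible policy.

```python
import numpy as np

def to_bgr(img):
    """Return a 3-channel image for grayscale, BGR or BGRA input (illustrative helper)."""
    if img.ndim == 2:
        # Grayscale H x W: repeat the single channel three times
        return np.stack([img, img, img], axis=-1)
    if img.ndim == 3 and img.shape[2] == 1:
        return np.repeat(img, 3, axis=2)
    if img.ndim == 3 and img.shape[2] == 3:
        return img
    if img.ndim == 3 and img.shape[2] == 4:
        # BGRA: drop the alpha channel and keep the colour channels
        return img[:, :, :3]
    raise ValueError("unsupported image shape: %r" % (img.shape,))

gray = np.zeros((4, 5), dtype=np.uint8)
bgra = np.zeros((4, 5, 4), dtype=np.uint8)
print(to_bgr(gray).shape)  # (4, 5, 3)
print(to_bgr(bgra).shape)  # (4, 5, 3)
```

Both the source image and the template would need to go through the same conversion so that their channel counts agree.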

- floodFill seems a bit heavy-handed; NumPy slicing should be able to achieve the same thing

I will write a small piece of code to verify this.

- Cannot handle image scaling

The content in the template image and in the source image may not be exactly the same size, so you need to try several scales and repeat the match.

- Matching quality is not ideal

If the images are exactly identical, find_template works very well. But when fuzzy matching is required, images that a person would recognize at a glance as the same may go unrecognized, and sometimes there are false matches.

Improvements:

- Adjust the algorithm: feature point detection algorithms such as SIFT can handle matching between images of differing size and angle; commercial software such as Halcon uses shape-based matching for better results.

- Add information: besides the template image itself, we sometimes know more, such as the range of positions where the image may appear or the style of the area surrounding it. This can help improve recognition accuracy and reduce both false matches and missed matches.
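As an illustration of the "add information" idea, the sketch below restricts the search to a known region and maps the hit back to full-image coordinates. Everything here is hypothetical: best_match is a naive NumPy matcher used only to keep the example self-contained, find_in_region and its region=(x, y, width, height) convention are made up for this sketch, and with aircv you would presumably crop the source image in the same way before calling find_template.

```python
import numpy as np

def best_match(source, template):
    """Naive normalized correlation; returns ((x, y), score) of the best position."""
    H, W = source.shape
    h, w = template.shape
    t = template.astype(np.float64) - template.mean()
    t_norm = np.sqrt((t * t).sum())
    best_pos, best_score = (0, 0), -1.0
    for y in range(H - h + 1):
        for x in range(W - w + 1):
            p = source[y:y + h, x:x + w].astype(np.float64)
            p = p - p.mean()
            denom = t_norm * np.sqrt((p * p).sum())
            score = (t * p).sum() / denom if denom > 0 else 0.0
            if score > best_score:
                best_pos, best_score = (x, y), score
    return best_pos, best_score

def find_in_region(source, template, region):
    """Search only inside region = (x, y, width, height) of the source image."""
    rx, ry, rw, rh = region
    crop = source[ry:ry + rh, rx:rx + rw]
    (x, y), score = best_match(crop, template)
    # Shift the hit from crop coordinates back to full-image coordinates
    return (x + rx, y + ry), score

rng = np.random.default_rng(1)
source = rng.integers(0, 256, size=(30, 40)).astype(np.uint8)
template = rng.integers(0, 256, size=(5, 5)).astype(np.uint8)
source[3:8, 4:9] = template      # first copy at x=4, y=3
source[20:25, 28:33] = template  # second copy at x=28, y=20

# We "know" the target sits in the lower-right part of the image
pos, score = find_in_region(source, template, region=(20, 15, 20, 15))
print(pos)  # (28, 20)
```

Besides removing the ambiguity between the two identical copies, searching a smaller region also means fewer window positions to evaluate.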
