Summary: How to build a PXE server, and a practical application of Ubuntu's autoinstall feature.

Note: Some knowledge of bash programming is needed to follow this article.

Postscript: This article was meant as a New Year's Eve piece and was originally planned for a year ago, but it kept being put off until the last couple of days, so here it is now.

Preface

In a cloud environment, providers offer host templates (and server images) to speed up the provisioning of server nodes. This kind of feature can be built on several different architectures (Vultr and the various VPS providers included), but generally it consists of a KVM virtualization layer underneath and a management layer such as Cobbler on top.

PXE

To trigger a fully automated process the moment a customer places an order, the PXE mechanism is used, so that from power-on the new node goes through the following steps:

- Look for a DHCP server and obtain an IP address from the PXE server, along with additional BOOTP parameters

- Use the BOOTP parameters (usually a file name such as "pxelinux.0") to fetch the boot file pxelinux.0 from the PXE server's TFTP service

The pxelinux.0 boot file then starts a complete Linux boot sequence:

- Locate the GRUB configuration and display the GRUB menu

- When the user selects a menu entry, or the default entry is chosen when the countdown ends, load the corresponding vmlinuz and initrd and boot the Linux kernel

- The kernel is always booted with installation parameters, so it goes straight into the standard Linux installer

- The cloud-config data used by autoinstall (i.e. the user-data file) supplies the answers during installation, so the installer advances on its own

- cloud-init interprets the meta-data

- cloud-init then hands off to later scripts that complete any other tasks the service provider requires

Through these mechanisms, a cloud provider can offer a complete network-boot provisioning service.

Of course, there are many more details involved, but those are simply a matter of pouring in enough engineering effort.

For other operating systems, pxelinux.0 may be replaced by another bootloader, and the file name need not be pxelinux.0 at all.

cloud-init

cloud-init is a complete mechanism for bootstrapping host nodes from scratch. Developed by Canonical, it is the de facto initialization standard among today's mainstream cloud providers. By integrating with and adapting this mechanism, different operating systems can handle their boot-time setup unattended.

In Ubuntu, the autoinstall mechanism is now what interfaces with cloud-init. Since both come from the same developers, you may as well regard autoinstall as Ubuntu's concrete implementation of cloud-init for installation.

Earlier Ubuntu and Debian systems used the preseed mechanism for unattended installation; in Ubuntu this has now been taken over by cloud-init and autoinstall.

Red Hat systems used the Kickstart mechanism for unattended installation, but in the cloud this is now done by cloud-init, through a mechanism that conforms to the OpenStack specification. Other operating systems have similar schemes.

All of this is far beyond the scope of this article.

Main text

Scope

We only walk through one example that simulates this scenario locally, and provide a set of basic scripts that demonstrate the basic process.

The aim is not only to prepare a PXE server, but also to offer a reusable, easily adjustable DevOps pattern for operations work, without relying on any of the well-known high-level wrappers.

In this article, we build a pxe-server and then use it to let new virtual machines install their operating system and configure their working environment, fully automatically and unattended.

Overview

Our environment is built in VMware. Every VM has a single network adapter attached to the NAT network, which simulates the cloud provider's network.

A PXE server runs on the NAT segment and provides DHCP+BOOTP, TFTP, and web services. Together, these three give NewNode everything it needs for an unattended installation.

We create several new VM hosts on the same segment and set their firmware to UEFI instead of legacy BIOS. Then we simply power them on: each one searches for DHCP to obtain an IP address, enters the installation sequence, and, once all installation tasks are done, is left booted and ready. That completes the simulation.

Prepare PXE server

PXE (Preboot eXecution Environment) provides a network interface for a pre-boot execution environment. It allows a computer to boot without relying on local storage devices (such as hard drives) or a locally installed operating system.

In our design, a new host node on the LAN starts from bare-metal power-on, first obtains a boot environment through PXE, and is then given a suitable installation system. The bare-metal machine thus enters an automated installation process and finally becomes an OS-ready working node that joins the current production environment as part of the cloud infrastructure.

So we need to run a PXE server on the LAN that provides DHCP+BOOTP. The DHCP service waits for the bare-metal network card to request a new IP address over UDP. The BOOTP parameters are attached to the DHCP reply sent back to that network card, and a network card that supports BOOTP can then retrieve the named boot file, load it, and perform the first-stage boot. The PXE server's DHCP+BOOTP service usually answers with the boot file name pxelinux.0, which can be customized.

The client network card uses this file name to request the file from the PXE server's TFTP service, loads it into a specific location in memory, hands CPU control to its entry point, and the corresponding boot process begins.

The typical flow of pxelinux.0:

- Generally, it fetches the /grub folder from the TFTP server, reads grub.cfg, and shows the user a GRUB boot menu with a countdown.

For automated installation, the default menu entry points to a specific location on our web server and pulls the installation image from there, entering the typical Linux installation process. Because we also provide an unattended answer file, the installer runs through every step without manual intervention.

With that background, let's look at how to prepare this PXE server in practice.

| Host | IP |
|---|---|
| PXE-server | 172.16.207.90 |
| NewNode | assigned by DHCP |

Basic system

First, we install the base system. This article covers Ubuntu 20.04 LTS only, so PXE-server runs the same system.

That is not strictly necessary, though: all you really need is a server that can provide DHCP, TFTP, and web services.

As a personal preference, we also install zsh and some plugins to save keystrokes.

Main entry point

We use a single script, vms-builder, to build the whole PXE server.

Notes on some helper functions

Some helpers come from bash.sh (postscript: it is not covered in this article), so for now you can fill them in yourself. For example, install_packages is equivalent to sudo apt-get install -y, headline is equivalent to echo with highlighted text, and fn_name returns the name of the current bash/zsh function. Some variables are used before they are introduced; refer to the script source for those.
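If you want to try the functions below before reading bash.sh, here is a minimal sketch of stand-ins for those helpers. Only the names match; the bodies are assumptions that mimic the behavior described above, not the real bash.sh code:

```shell
#!/usr/bin/env bash
# Hypothetical stand-ins for the bash.sh helpers used by vms-builder.
# They only mimic the described behavior; the real bash.sh may differ.

SUDO=sudo
[ "$(id -u)" = "0" ] && SUDO=

# install_packages: roughly "sudo apt-get install -y <packages...>"
install_packages() {
  $SUDO apt-get install -y "$@"
}

# headline: echo the arguments as bold (highlighted) text
headline() {
  printf '\033[1m%s\033[0m\n' "$*"
}

# line: print a separator line
line() {
  printf '%s\n' '----------------------------------------'
}

# fn_name: print the name of the calling function
# (bash keeps the call stack in FUNCNAME, zsh in funcstack)
fn_name() {
  if [ -n "${BASH_VERSION:-}" ]; then
    printf '%s\n' "${FUNCNAME[1]}"
  else
    printf '%s\n' "${funcstack[2]}"
  fi
}
```

With these in place, a function such as v_install_tftp_server prints its own name through headline "$(fn_name)".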

The script's starting point, i.e. the entry code, is this:

```bash
vms_entry() {
  headline "vms-builder is running"

  local ubuntu_iso_url="https://${ubuntu_mirrors[0]}/ubuntu-releases/${ubuntu_codename}/${ubuntu_iso}"
  local alternate_ubuntu_iso_url=${alternate_ubuntu_iso_url:-$ubuntu_iso_url}
  local tftp_dir=/srv/tftp
  local full_nginx=-full

  v_install # install software packages: tftp, dhcp, nginx, etc.
  v_config  # and configure them
  v_end     #
}
```

This is what sets this article apart from others on the same topic: we provide a best-practice example of structuring bash scripts, which you can easily adjust and easily reuse as a template for other purposes.

In addition, this way of configuring a system is idempotent: you can run the script multiple times without worrying about getting unexpected results.
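That idempotence comes from a simple pattern used throughout vms-builder: check first (grep -q, [ -f ... ]), change only if needed. Here is a minimal sketch of the pattern as a helper. ensure_line is a hypothetical name, and the sketch writes to a temporary file rather than a system file:

```shell
#!/usr/bin/env bash
# Sketch of the "check first, change only if needed" pattern behind
# vms-builder's idempotence. ensure_line is a hypothetical helper.

# Append LINE to FILE unless FILE already contains exactly that line.
ensure_line() {
  local file=$1 line=$2
  # -q quiet, -x whole-line match, -F fixed string (no regex)
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >>"$file"
}

demo=$(mktemp)
ensure_line "$demo" "/srv/tftp/iso/ubuntu.iso /srv/tftp/cdrom iso9660 ro,loop 0 0"
ensure_line "$demo" "/srv/tftp/iso/ubuntu.iso /srv/tftp/cdrom iso9660 ro,loop 0 0"  # no-op on re-run
wc -l <"$demo"    # still a single line
rm -f "$demo"
```

For files under /etc, the append would go through | $SUDO tee -a instead, as the real functions do.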

So we will explain the programming techniques along the way.

v_install and v_config are the key entry points for building the PXE server; their meaning is self-evident.

Package installation entry point

The system needs the following packages:

| Package | Usage |
|---|---|
| tftpd-hpa | TFTP service; serves the boot files such as pxelinux.0 and the GRUB files |
| isc-dhcp-server | DHCP+BOOTP service |
| nginx (nginx-full) | Serves the Ubuntu 20.04 installation image |

The PXE protocol combines DHCP and TFTP. DHCP is used to find a suitable boot server, and TFTP is used to download the network boot program (NBP) and additional files.

Since the Ubuntu installer is fed from the ISO image (the most convenient method for us), a web server is also needed to serve it for download.

v_install installs them:

```bash
v_install() {
  echo && headline "$(fn_name)" && line
  v_install_tftp_server
  v_install_dhcp_server
  v_install_web_server
}

v_install_tftp_server() {
  headline "$(fn_name)"
  install_packages tftpd-hpa
}

v_install_dhcp_server() {
  headline "$(fn_name)"
  install_packages isc-dhcp-server
}

v_install_web_server() {
  headline "$(fn_name)"
  install_packages nginx$full_nginx
}
```

No further explanation is needed.

Configuration entry point

v_config handles all configuration actions.

```bash
v_config() {
  echo && headline "$(fn_name)" && line
  v_config_dirs
  v_download_iso
  v_config_boot
  v_config_grub
  v_config_bash_skel
  v_config_tftp
  v_config_dhcp
  v_config_nginx
  v_config_aif # autoinstall files
}
```

The goal is to build a TFTP folder layout that holds everything a new node needs. In addition, DHCP, the web server, and so on must be configured.

The following sections follow the order given in v_config.

v_config_dirs

We will eventually build a complete tftp folder structure, so we first create its basic skeleton:

```bash
v_config_dirs() {
  $SUDO mkdir -pv $tftp_dir/{autoinstall,bash,boot/live-server,cdrom,grub,iso,priv}
}
```

SUDO is a defensive measure. It is defined like this:

```bash
SUDO=sudo
[ "$(id -u)" = "0" ] && SUDO=
```

So for the root user it expands to nothing, and for other users it is the sudo command.
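To see what v_config_dirs produces, here is a sketch that resolves SUDO as above and builds the same skeleton under a temporary directory. The temporary directory stands in for /srv/tftp, so no root is needed:

```shell
#!/usr/bin/env bash
# Sketch: the SUDO idiom plus the v_config_dirs skeleton, built under a
# temporary directory that stands in for /srv/tftp (so no root is needed).
SUDO=sudo
[ "$(id -u)" = "0" ] && SUDO=
echo "SUDO resolves to: '${SUDO}'"

tftp_dir=$(mktemp -d)

# Brace expansion turns one word into seven paths before mkdir runs.
# Against the real /srv/tftp you would prefix mkdir with $SUDO.
mkdir -pv "$tftp_dir"/{autoinstall,bash,boot/live-server,cdrom,grub,iso,priv}
```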

v_download_iso

Then download the Ubuntu 20.04 live server iso file.

```bash
v_download_iso() {
  headline "$(fn_name)"
  local tgt=$tftp_dir/iso/$ubuntu_iso
  [ -f $tgt ] || {
    $SUDO wget "$alternate_ubuntu_iso_url" -O $tgt
  }
  grep -qE "$tftp_dir/iso/" /etc/fstab || {
    # fstab fields: <file system> <mount point> <type> <options> <dump> <pass>
    echo "$tftp_dir/iso/$ubuntu_iso $tftp_dir/cdrom iso9660 ro,loop 0 0" | $SUDO tee -a /etc/fstab
    $SUDO mount -a && ls -la --color $tftp_dir/cdrom
  }
}
```

A series of predefined variables is involved here:

```bash
ubuntu_codename=focal
ubuntu_version=20.04.3
ubuntu_iso=ubuntu-${ubuntu_version}-live-server-amd64.iso
ubuntu_mirrors=("mirrors.cqu.edu.cn" "mirrors.ustc.edu.cn" "mirrors.tuna.tsinghua.edu.cn" "mirrors.163.com" "mirrors.aliyun.com")
```

ubuntu_mirrors is a bash array, but in practice we only use its first element:

```bash
# in vms_entry():
local ubuntu_iso_url="https://${ubuntu_mirrors[0]}/ubuntu-releases/${ubuntu_codename}/${ubuntu_iso}"
local alternate_ubuntu_iso_url=${alternate_ubuntu_iso_url:-$ubuntu_iso_url}
```

The function first checks whether the file already exists and downloads the ISO only if needed.

At the end of v_download_iso, grep checks that fstab has not already been modified before an entry is appended; the entry mounts the downloaded ISO at /srv/tftp/cdrom.

Auto-mounting costs nothing, and it lets us easily extract files from the ISO later.
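The URL and the fstab line can be checked without downloading or mounting anything. This sketch reuses the variables above (with a shortened mirror list) and prints both. iso_url and fstab_entry are hypothetical helpers. Keep in mind that an fstab entry must have exactly six fields: file system, mount point, type, options, dump, and pass.

```shell
#!/usr/bin/env bash
# Sketch: derive the ISO URL and the fstab entry from the article's
# variables, without downloading or mounting anything.
# iso_url and fstab_entry are hypothetical helpers.
ubuntu_codename=focal
ubuntu_version=20.04.3
ubuntu_iso=ubuntu-${ubuntu_version}-live-server-amd64.iso
ubuntu_mirrors=("mirrors.cqu.edu.cn" "mirrors.ustc.edu.cn")
tftp_dir=/srv/tftp

# Build the download URL for a given mirror host (defaults to the first one).
iso_url() {
  local mirror=${1:-${ubuntu_mirrors[0]}}
  echo "https://${mirror}/ubuntu-releases/${ubuntu_codename}/${ubuntu_iso}"
}

# Build the fstab line: <file system> <mount point> <type> <options> <dump> <pass>
fstab_entry() {
  echo "$tftp_dir/iso/$ubuntu_iso $tftp_dir/cdrom iso9660 ro,loop 0 0"
}

iso_url
fstab_entry
```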

v_config_boot

In the previous section, we mounted the ISO at cdrom/, so the boot files can be copied straight from it:

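```bash
v_config_boot() {
  # boot files
  local tgt=$tftp_dir/boot/live-server
  [ -f $tgt/vmlinuz ] || {
    $SUDO cp $tftp_dir/cdrom/casper/vmlinuz $tgt/
    $SUDO cp $tftp_dir/cdrom/casper/initrd $tgt/
  }
}
```

It simply copies the kernel (vmlinuz) and the initial RAM disk (initrd) from the mounted ISO into boot/live-server/, so it needs no further explanation.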

v_config_grub

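Within v_config_grub, tgt is first set to the GRUB folder:

```bash
local tgt=$tftp_dir/grub
```

This part of the code first downloads and prepares the pxelinux.0 file. Despite its name, this file is GRUB's signed UEFI network boot image (grubnetx64.efi.signed), saved under the file name that the DHCP server announces:

```bash
[ -f $tftp_dir/pxelinux.0 ] || {
  $SUDO wget http://archive.ubuntu.com/ubuntu/dists/${ubuntu_codename}/main/uefi/grub2-amd64/current/grubnetx64.efi.signed -O $tftp_dir/pxelinux.0
}
```

Then copy the GRUB font file from cdrom/: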


```bash
[ -f $tgt/font.pf2 ] || $SUDO cp $tftp_dir/cdrom/boot/grub/font.pf2 $tgt/
```

Then generate the grub.cfg file:

```bash
[ -f $tgt/grub.cfg ] || {
  cat <<-EOF | $SUDO tee $tgt/grub.cfg
if loadfont /boot/grub/font.pf2 ; then
  set gfxmode=auto
  insmod efi_gop
  insmod efi_uga
  insmod gfxterm
  terminal_output gfxterm
fi

set menu_color_normal=white/black
set menu_color_highlight=black/light-gray
set timeout=3

menuentry "Ubuntu server 20.04 autoinstall" --id=autoinstall {
  echo "Loading Kernel..."
  # make sure to escape the ';' or surround argument in quotes
  linux /boot/live-server/vmlinuz ramdisk_size=1500000 ip=dhcp url="http://${PXE_IP}:3001/iso/ubuntu-${ubuntu_version}-live-server-amd64.iso" autoinstall ds="nocloud-net;s=http://${PXE_IP}:3001/autoinstall/" root=/dev/ram0 cloud-config-url=/dev/null
  echo "Loading Ram Disk..."
  initrd /boot/live-server/initrd
}

menuentry "Install Ubuntu Server [NEVER USED]" {
  set gfxpayload=keep
  linux /casper/vmlinuz quiet ---
  initrd /casper/initrd
}

grub_platform
# END OF grub.cfg
EOF
}
```

Look at the source file rather than copying and pasting code from the page: heredoc indentation with <<- only strips leading tab characters, and those tabs may not have survived on the page.

For more advanced heredoc techniques, see: Know Here Document

In this GRUB menu, url="http://${PXE_IP}:3001/iso/ubuntu-${ubuntu_version}-live-server-amd64.iso" points to the installation ISO, which is fetched through the web server. Later, in v_config_nginx, we expose the tftp folder as a browsable directory listing.

ds="nocloud-net;s=http://${PXE_IP}:3001/autoinstall/" points cloud-init's NoCloud data source at the autoinstall folder, from which the meta-data and user-data files defined by the autoinstall specification are fetched. These drive the unattended installation.
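To see exactly what the installer will request, here is a sketch that derives the seed URLs from the ds= value. cloud-init's NoCloud source appends the file names meta-data and user-data directly to the s= prefix, which is why the trailing slash in .../autoinstall/ matters. seed_urls is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Sketch: which URLs the nocloud-net data source derives from the ds=
# kernel parameter. NoCloud appends "meta-data"/"user-data" to the s=
# prefix as-is, so the prefix must end with "/".
# seed_urls is a hypothetical helper.
PXE_IP=172.16.207.90
ds="nocloud-net;s=http://${PXE_IP}:3001/autoinstall/"

seed_urls() {
  local seed=${1#*";s="}          # strip everything up to and including ";s="
  printf '%s\n' "${seed}meta-data" "${seed}user-data"
}

seed_urls "$ds"
```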

The Ubuntu live server ISO is about 1 GB, so ramdisk_size=1500000 (the kernel reads this value in KiB) sets the RAM disk to roughly 1.5 GB, enough to hold the ISO with some room left for the installer. Each new host node therefore needs at least 2 GB of memory; otherwise the automated installation may not complete.
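Here is the arithmetic behind that sizing as a small sketch. The 1 GB ISO figure is the rough size quoted above, not a measured value, and ramdisk_fits is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Worked arithmetic for ramdisk_size, which the kernel reads in KiB.
# ramdisk_fits is a hypothetical helper; the 1 GB ISO size is the
# article's rough figure, not a measured value.

ramdisk_size=1500000                        # KiB, from grub.cfg
ramdisk_bytes=$(( ramdisk_size * 1024 ))    # 1,536,000,000 bytes
echo "ramdisk: ${ramdisk_bytes} bytes (~$(( ramdisk_bytes / 1000000 )) MB)"

# Succeeds (exit 0) if an ISO of ISO_BYTES fits in a RAM disk of RAMDISK_KIB.
ramdisk_fits() {
  local iso_bytes=$1 ramdisk_kib=$2
  [ "$iso_bytes" -le $(( ramdisk_kib * 1024 )) ]
}

# Room left for the installer when the ISO is ~1 GB (10^9 bytes):
echo "headroom: $(( (ramdisk_bytes - 1000000000) / 1000000 )) MB"
```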

Privileges and piped output

In bash, when a non-privileged user needs to write a file through a heredoc, the following idiom is needed:

```bash
cat <<-EOF | sudo tee filename
...
EOF
```

A common variant appends to filename instead:

```bash
cat <<-EOF | sudo tee -a filename
...
EOF
```

This idiom works around the fact that sudo cannot elevate an output redirection:

```bash
echo "dsjkdjs" > filename
```

If filename is protected, this redirection fails, and prefixing echo with sudo does not help, because the redirection is set up by your own unprivileged shell. The fix is to use the cat heredoc | sudo tee filename form instead.

Since bash supports multi-line strings, you can also do without a heredoc:

```bash
echo "djask
djska
daskl
dajskldjsakl" | sudo tee filename
```

This works for simple content without variable expansion. If the text is long, involves complex variable expansion, or is full of single and double quotes, cat heredoc is the right tool.
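Another heredoc detail matters in vms-builder: delimiter quoting. With an unquoted EOF, variables in the body are expanded when the file is written. With "EOF" quoted (as in v_config_bash_skel), the body is copied literally. Here is a sketch that shows both, using plain tee in place of sudo tee so it runs without root:

```shell
#!/usr/bin/env bash
# Sketch: unquoted vs quoted heredoc delimiters, written through tee the
# same way vms-builder does (plain tee stands in for "sudo tee").
name=pxe-server
out=$(mktemp -d)

# Unquoted EOF: $name is expanded when the file is written.
cat <<EOF | tee "$out/expanded.txt" >/dev/null
host=$name
EOF

# Quoted "EOF": the body is copied literally, which is what a generated
# script like boot.sh needs so its own $variables survive.
cat <<"EOF" | tee "$out/literal.txt" >/dev/null
host=$name
EOF

cat "$out/expanded.txt" "$out/literal.txt"
```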

v_config_bash_skel

The purpose of v_config_bash_skel is to generate a minimal post-installation script boot.sh:

```bash
v_config_bash_skel() {
  [ -f $tftp_dir/bash/boot.sh ] || {
    cat <<-"EOF" | $SUDO tee $tftp_dir/bash/boot.sh
#!/bin/bash
# -*- mode: bash; c-basic-offset: 2; tab-width: 2; indent-tabs-mode: t-*-
# vi: set ft=bash noet ci pi sts=0 sw=2 ts=2:
# st:
#
echo "booted."
[ -f custom.sh ] && bash custom.sh
EOF
  }
  #
  $SUDO touch $tftp_dir/priv/gpg.key
  $SUDO touch $tftp_dir/priv/custom.sh
}
```

boot.sh runs automatically on the first boot after Ubuntu has been installed.

We also create empty placeholder files, gpg.key and custom.sh.

If you want a dedicated key injected automatically, for example to sign DevOps artifacts before deployment, provide a valid gpg.key file; otherwise leave it empty.

If you need additional post-processing scripts, you can provide a valid custom.sh script file.

We also have a more complete boot.sh, which we may introduce next time.

v_config_tftp

```bash
v_config_tftp() {
  cat /etc/default/tftpd-hpa
}
```

It does nothing except print the current settings!

The default tftpd-hpa configuration already points to the /srv/tftp folder, so we use it without any further changes.
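If you want to confirm that before relying on it, a small sketch can read TFTP_DIRECTORY from the defaults file. The sample content below is what Ubuntu typically ships in /etc/default/tftpd-hpa. Treat it as an assumption and check your own system. tftp_root_of is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Sketch: confirm which folder tftpd-hpa serves by reading TFTP_DIRECTORY
# from its defaults file. The sample mirrors the usual Ubuntu default
# (an assumption). tftp_root_of is a hypothetical helper.
tftp_root_of() {
  # Source the file in a subshell so its variables don't leak into ours.
  ( . "$1" && printf '%s\n' "$TFTP_DIRECTORY" )
}

sample=$(mktemp)
cat >"$sample" <<'EOF'
# /etc/default/tftpd-hpa
TFTP_USERNAME="tftp"
TFTP_DIRECTORY="/srv/tftp"
TFTP_ADDRESS=":69"
TFTP_OPTIONS="--secure"
EOF

tftp_root_of "$sample"    # on a real server: tftp_root_of /etc/default/tftpd-hpa
```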

v_config_dhcp

Its main job is to configure the DHCP address pool:

```bash
v_config_dhcp() {
  local f=/etc/dhcp/dhcpd.conf
  $SUDO sed -i -r "s/option domain-name .+\$/option domain-name \"$LOCAL_DOMAIN\";/" $f
  $SUDO sed -i -r "s/option domain-name-servers .+\$/option domain-name-servers ns1.$LOCAL_DOMAIN, ns2.$LOCAL_DOMAIN;/" $f
  grep -qE "^subnet $DHCP_SUBNET netmask" $f || {
    cat <<-EOF | $SUDO tee -a $f
# https://kb.isc.org/v1/docs/isc-dhcp-44-manual-pages-dhcpdconf
subnet $DHCP_SUBNET netmask $DHCP_MASK {
  option routers $DHCP_DHCP_ROUTER;
  option domain-name-servers 114.114.114.114;
  option subnet-mask $DHCP_MASK;
  range dynamic-bootp $DHCP_RANGE;
  default-lease-time 21600;
  max-lease-time 43200;
  next-server $DHCP_DHCP_SERVER;
  filename "pxelinux.0";
  # filename "grubx64.efi";
}
EOF
  }
  $SUDO systemctl restart isc-dhcp-server.service
}
```

The line filename "pxelinux.0"; names the BOOTP boot file, and next-server tells the client which host to fetch it from over TFTP.

The variables involved are mainly these:

```bash
LOCAL_DOMAIN="ops.local"
DHCP_PRE=172.16.207
DHCP_SUBNET=$DHCP_PRE.0
DHCP_MASK=255.255.255.0
DHCP_DHCP_ROUTER=$DHCP_PRE.2                 # it should be a router ip in most cases
DHCP_DHCP_SERVER=$DHCP_PRE.90                # IP address of 'pxe-server'
DHCP_RANGE="${DHCP_PRE}.100 ${DHCP_PRE}.220" # the pool
PXE_IP=$DHCP_DHCP_SERVER
PXE_HOSTNAME="pxe-server"                    # host name of the PXE server (must resolve on new nodes), or its IP address
```

Since the simulation runs in local VMware virtual machines, a small network plan is used.
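Before touching /etc/dhcp/dhcpd.conf, it can help to preview the subnet stanza and check the address plan. The sketch below renders a trimmed version of the stanza to stdout and checks that the PXE server and router addresses fall outside the dynamic pool. render_subnet and outside_pool are hypothetical helpers that assume a /24 network, as here:

```shell
#!/usr/bin/env bash
# Sketch: preview a trimmed subnet stanza (stdout, not dhcpd.conf) and
# check that static addresses sit outside the dynamic pool.
# render_subnet and outside_pool are hypothetical helpers (assume a /24).
DHCP_PRE=172.16.207
DHCP_SUBNET=$DHCP_PRE.0
DHCP_MASK=255.255.255.0
DHCP_DHCP_ROUTER=$DHCP_PRE.2
DHCP_DHCP_SERVER=$DHCP_PRE.90
DHCP_RANGE="${DHCP_PRE}.100 ${DHCP_PRE}.220"

render_subnet() {
  cat <<EOF
subnet $DHCP_SUBNET netmask $DHCP_MASK {
  option routers $DHCP_DHCP_ROUTER;
  option subnet-mask $DHCP_MASK;
  range dynamic-bootp $DHCP_RANGE;
  next-server $DHCP_DHCP_SERVER;
  filename "pxelinux.0";
}
EOF
}

# Succeeds if the last octet of IP lies outside "A.B.C.lo A.B.C.hi".
outside_pool() {
  local ip=$1 lo hi
  read -r lo hi <<<"$DHCP_RANGE"
  local o=${ip##*.}
  [ "$o" -lt "${lo##*.}" ] || [ "$o" -gt "${hi##*.}" ]
}

render_subnet
outside_pool "$DHCP_DHCP_SERVER" && outside_pool "$DHCP_DHCP_ROUTER" \
  && echo "static addresses are outside the pool"
```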

v_config_nginx

Simply append the nginx configuration and restart nginx:

```bash
v_config_nginx() {
  local f=/etc/nginx/sites-available/default
  grep -qE 'listen 3001' $f || {
    cat <<-EOF | $SUDO tee -a $f
server {
  listen 3001 default_server;
  listen [::]:3001 default_server;
  root $tftp_dir;
  autoindex on;
  autoindex_exact_size on;
  autoindex_localtime on;
  charset utf-8;
  server_name _;
}
EOF
    $SUDO systemctl restart nginx.service
  }
}
```

This configuration serves the tftp folder over HTTP on pxe-server port 3001, the address used in grub.cfg.
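Once nginx is up, you can check from any machine on the LAN that every file a new node will fetch is reachable. The list below is taken from grub.cfg and from the runcmd section of user-data in the next section; runcmd uses $PXE_HOSTNAME, so that name must also resolve on new nodes, while this sketch simply uses PXE_IP. pxe_urls and check_urls are hypothetical helpers:

```shell
#!/usr/bin/env bash
# Sketch: list the HTTP URLs a new node fetches from pxe-server:3001 and
# optionally probe them with curl. pxe_urls and check_urls are
# hypothetical helpers.
PXE_IP=172.16.207.90
ubuntu_version=20.04.3

pxe_urls() {
  local base="http://${PXE_IP}:3001"
  printf '%s\n' \
    "$base/iso/ubuntu-${ubuntu_version}-live-server-amd64.iso" \
    "$base/autoinstall/meta-data" \
    "$base/autoinstall/user-data" \
    "$base/bash/boot.sh" \
    "$base/priv/gpg.key" \
    "$base/priv/custom.sh"
}

# Run on the LAN once nginx is up: HEAD each URL and report failures.
check_urls() {
  local url rc=0
  while read -r url; do
    curl -fsSI -o /dev/null "$url" || { echo "FAILED: $url"; rc=1; }
  done < <(pxe_urls)
  return $rc
}

pxe_urls
```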

v_config_aif

v_config_aif is the highlight: it generates the files that autoinstall needs.

According to the Ubuntu autoinstall specification, meta-data can provide key: value pairs such as instance-id, but it can also be empty.

The user-data file answers the installer's questions automatically. It has more content but is not hard to understand; the difficulty is mostly knowing what can be adjusted, which is sometimes poorly documented. Here it is generated by a function with the placeholders already in place, so you can adapt it largely by changing bash variable values.

Here is the whole function, slightly trimmed:

```bash
v_config_aif() {
  # autoinstall files
  $SUDO touch $tftp_dir/autoinstall/meta-data

  declare -a na
  local network_str="" str="" n=1 i
  na=($(ifconfig -s -a | tail -n +2 | grep -v '^lo' | awk '{print $1}'))
  for i in ${na[@]}; do
    [[ $n -gt 1 ]] && str=", " || str=""
    str="${str}${i}: {dhcp4: yes,dhcp6: yes}"
    network_str="${network_str}${str}"
    let n++
  done

  grep -qE '^#cloud-config' $tftp_dir/autoinstall/user-data || {
    cat <<-EOF | $SUDO tee $tftp_dir/autoinstall/user-data
#cloud-config
autoinstall:
  version: 1
  interactive-sections: []
  # https://ubuntu.com/server/docs/install/autoinstall-reference
  # https://ubuntu.com/server/docs/install/autoinstall-schema
  apt:
    primary:
      - arches: [default]
        uri: http://${ubuntu_mirrors[0]}/ubuntu
  user-data:
    timezone: $TARGET_TIMEZONE
    # Europe/London
    disable_root: true
    # openssl passwd -6 -salt 1234
    # mkpasswd -m sha-512
    chpasswd:
      list: |
        root: ${TARGET_PASSWORD}
    runcmd:
      - wget -P /root/ http://$PXE_HOSTNAME:3001/bash/boot.sh
      - wget -P /root/ http://$PXE_HOSTNAME:3001/priv/gpg.key || echo "no gpg key, skipped"
      - wget -P /root/ http://$PXE_HOSTNAME:3001/priv/custom.sh || echo "no custom.sh, skipped"
      - bash /root/boot.sh
      #- sed -ie 's/GRUB_TIMEOUT=.*/GRUB_TIMEOUT=3/' /target/etc/default/grub
  identity:
    hostname: $TARGET_HOSTNAME
    # username: ubuntu
    # password: "\$6\$exDY1mhS4KUYCE/2\$zmn9ToZwTKLhCw.b4/b.ZRTIZM30JZ4QrOQ2aOXJ8yk96xpcCof0kxKwuX1kqLG/ygbJ1f8wxED22bTL4F46P0"
    username: $TARGET_USERNAME
    password: "${TARGET_PASSWORD}"
  keyboard: {layout: 'us', variant: 'us'}
  # keyboard: {layout: 'gb', variant: 'dvorak'}
  locale: $TARGET_LOCALE
  ssh:
    allow-pw: no
    install-server: true
    authorized-keys: [$(n=1 && for arg in "${TARGET_SSH_KEYS[@]}"; do
      # arg=\"$arg\"
      [ $n -gt 1 ] && echo -n ", "
      echo -n "\"$arg\""
      let n++
    done)]
  packages: [$(n=1 && for arg in "${TARGET_PKGS[@]}"; do
    # arg=\"$arg\"
    [ $n -gt 1 ] && echo -n ", "
    echo -n "\"$arg\""
    let n++
  done)]
  storage:
    grub:
      reorder_uefi: false
    swap:
      size: 0
    config:
      # https://askubuntu.com/questions/1244293/how-to-autoinstall-config-fill-disk-option-on-ubuntu-20-04-automated-server-in
      - {ptable: gpt, path: /dev/sda, preserve: false, name: '', grub_device: false, type: disk, id: disk-sda}
      - {device: disk-sda, size: 536870912, wipe: superblock, flag: boot, number: 1, preserve: false, grub_device: true, type: partition, id: partition-sda1}
      - {fstype: fat32, volume: partition-sda1, preserve: false, type: format, id: format-2}
      - {device: disk-sda, size: 1073741824, wipe: superblock, flag: linux, number: 2, preserve: false, grub_device: false, type: partition, id: partition-sda2}
      - {fstype: ext4, volume: partition-sda2, preserve: false, type: format, id: format-0}
      - {device: disk-sda, size: -1, flag: linux, number: 3, preserve: false, grub_device: false, type: partition, id: partition-sda3}
      - name: vg-0
        devices: [partition-sda3]
        preserve: false
        type: lvm_volgroup
        id: lvm-volgroup-vg-0
      - {name: lv-root, volgroup: lvm-volgroup-vg-0, size: 100%, preserve: false, type: lvm_partition, id: lvm-partition-lv-root}
      - {fstype: ext4, volume: lvm-partition-lv-root, preserve: false, type: format, id: format-1}
      - {device: format-1, path: /, type: mount, id: mount-2}
      - {device: format-0, path: /boot, type: mount, id: mount-1}
      - {device: format-2, path: /boot/efi, type: mount, id: mount-3}
EOF
  }
}
```

The bash variables it uses are declared separately; here is an excerpt:

```bash
TARGET_HOSTNAME="${TARGET_HOSTNAME:-ubuntu-server}"
TARGET_USERNAME="${TARGET_USERNAME:-hz}"
TARGET_PASSWORD="${TARGET_PASSWORD:-$_default_passwd}"
TARGET_LOCALE="${TARGET_LOCALE:-en_US.UTF-8}"
TARGET_SSH_KEYS=(
  "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDxjcUOlmgsabCmeYD8MHnsVxueebIocv5AfG3mpmxA3UZu6GZqnp65ipbWL9oGtZK3BY+WytnbTDMYdVQWmYvlvuU6+HbOoQf/3z3rywkerbNQdffm5o9Yv/re6dlMG5kE4j78cXFcR11xAJvJ3vmM9tGSBBu68DR35KWz2iRUV8l7XV6E+XmkPkqJKr3IvrxdhM0KpCZixuz8z9krNue6NdpyELT/mvD5sL9LG4+XtU0ss7xH1jk5nmAQGaJW9IY8CVGy07awf0Du5CEfepmOH5gJbGwpAIIubAzGarefbltXteerB0bhyyC3VX0Q8lIHZ6GhMZSqfD9vBHRnDLIL"
)
TARGET_PKGS=(
  # net-tools
  # lsof
  curl
  wget
  # whois
)
TARGET_TIMEZONE=Asia/Chongqing
```

You can generate _default_passwd yourself with mkpasswd (provided by the whois package on Ubuntu):

```bash
$ mkpasswd -m sha-512
```

Or simply write it like this (it must be set before TARGET_PASSWORD is assigned):

```bash
_default_passwd="$(mkpasswd -m sha-512 'password')"
```

TARGET_SSH_KEYS is an array; adjust it to hold your own keys.

TARGET_PKGS can be adjusted, but it is not recommended. With curl and wget available, post-installation work is better done in boot.sh than during system installation: while the installer runs, the configured package mirror may not fully take effect, so installing many packages at that stage is unwise. After the first boot, the package sources work without problems.

Our more complete boot.sh includes practical features such as console auto-login and passwordless sudo. Moreover, TARGET_SSH_KEYS enables remote login over SSH, so you will basically never use _default_passwd yourself; presetting a very complex (and very hard to remember) password therefore benefits server security.
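One way to get such a password without ever choosing it yourself is a sketch like the following. It assumes OpenSSL 1.1.1 or newer, where openssl passwd -6 produces a SHA-512 crypt hash (the same format as mkpasswd -m sha-512). gen_password and hash_password are hypothetical helpers:

```shell
#!/usr/bin/env bash
# Sketch: generate a long random password and hash it for _default_passwd.
# Assumes OpenSSL 1.1.1+ ("openssl passwd -6" = SHA-512 crypt).
# gen_password and hash_password are hypothetical helpers.
gen_password() {
  local len=${1:-32}
  # LC_ALL=C keeps tr from rejecting arbitrary bytes from /dev/urandom
  LC_ALL=C tr -dc 'A-Za-z0-9' </dev/urandom | head -c "$len"
}

hash_password() {
  printf '%s' "$1" | openssl passwd -6 -stdin
}

pw=$(gen_password 40)
if command -v openssl >/dev/null 2>&1; then
  _default_passwd=$(hash_password "$pw")
  echo "$_default_passwd"
fi
```

The plaintext is deliberately not printed; keep it somewhere safe if you might ever need the console.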

Background: cloud-init and autoinstall

user-data is part of the cloud-init specification, but since cloud-init and autoinstall come from the same family, the two can be used interchangeably in the Ubuntu context.

Note that we use the YAML configuration format; if you prefer, user-data can also take other formats, such as a user-data script.

Expanding an array inside a heredoc

Note that we use command substitution to embed a small script that expands the TARGET_SSH_KEYS array:

```bash
  ssh:
    allow-pw: no
    install-server: true
    authorized-keys: [$(n=1 && for arg in "${TARGET_SSH_KEYS[@]}"; do
      # arg=\"$arg\"
      [ $n -gt 1 ] && echo -n ", "
      echo -n "\"$arg\""
      let n++
    done)]
```

The expanded result looks like this:

```yaml
  ssh:
    allow-pw: no
    install-server: true
    authorized-keys: ["ssh-rsa dskldl", "ssh-rsa djskld"]
```

A similar approach is used in the packages section.
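The same loop can be factored into a function so both sections share it. yaml_flow_list is a hypothetical helper. It does no YAML escaping, so it assumes the items contain no double quotes or backslashes, which holds for SSH keys and package names:

```shell
#!/usr/bin/env bash
# Sketch: the inline "for arg in ...; do echo -n ..." loop, factored into
# a reusable function. yaml_flow_list is a hypothetical helper; it does no
# YAML escaping, so items must not contain double quotes or backslashes.
yaml_flow_list() {
  local n=1 arg
  for arg in "$@"; do
    [ "$n" -gt 1 ] && printf ', '
    printf '"%s"' "$arg"
    n=$((n + 1))
  done
}

TARGET_SSH_KEYS=("ssh-rsa dskldl" "ssh-rsa djskld")
TARGET_PKGS=(curl wget)

cat <<EOF
authorized-keys: [$(yaml_flow_list "${TARGET_SSH_KEYS[@]}")]
packages: [$(yaml_flow_list "${TARGET_PKGS[@]}")]
EOF
```

Running it prints authorized-keys: ["ssh-rsa dskldl", "ssh-rsa djskld"] followed by packages: ["curl", "wget"].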

summary

All the scripts are ready; run them!

If nothing goes wrong (and nothing should), pxe-server is now ready, just waiting for you to power on a new machine to test it.
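Before booting a test machine, a quick check of the tree under /srv/tftp can save a round trip. check_tftp_layout is a hypothetical helper, and its file list is collected from the v_config_* functions above:

```shell
#!/usr/bin/env bash
# Sketch: after running vms-builder, check that the TFTP/HTTP tree holds
# the files the v_config_* functions create. check_tftp_layout is a
# hypothetical helper.
check_tftp_layout() {
  local root=${1:-/srv/tftp} missing=0 p
  for p in pxelinux.0 grub/grub.cfg grub/font.pf2 \
           boot/live-server/vmlinuz boot/live-server/initrd \
           autoinstall/meta-data autoinstall/user-data \
           bash/boot.sh priv/gpg.key priv/custom.sh; do
    if [ -e "$root/$p" ]; then
      echo "ok       $p"
    else
      echo "MISSING  $p"
      missing=$((missing + 1))
    fi
  done
  # The ISO name depends on ubuntu_version, so look for any *.iso.
  if compgen -G "$root/iso/*.iso" >/dev/null; then
    echo "ok       iso/*.iso"
  else
    echo "MISSING  iso/*.iso"
    missing=$((missing + 1))
  fi
  [ "$missing" -eq 0 ]
}

# Usage on the PXE server:  check_tftp_layout /srv/tftp
```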

Test effect

Now any newly created host node on the pxe-server's network segment completes its installation automatically, by PXE booting from its network card.

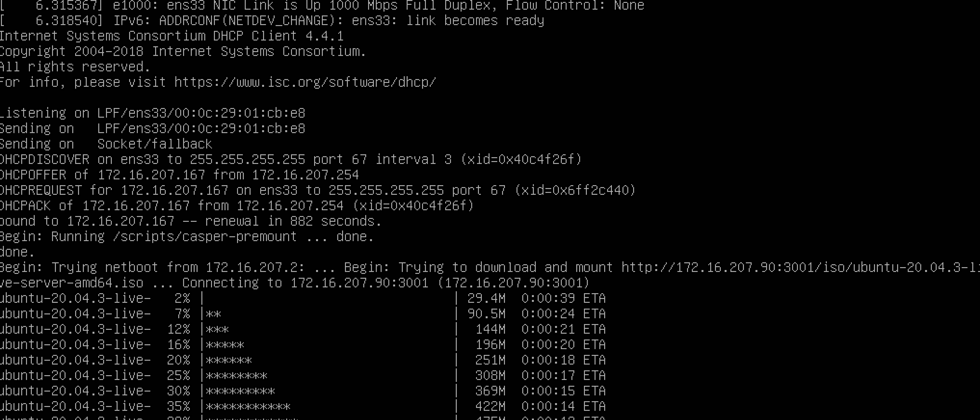

After the new host powers on and obtains its DHCP+BOOTP boot parameters, it runs pxelinux.0 and automatically executes the GRUB menu entry (the figure shows it loading vmlinuz and initrd).

Once it has entered the unattended installation process, the state is as follows:

Problems we have not addressed:

- Supporting multiple operating systems and hardware configurations

- Programmable management and maintenance of the cloud infrastructure

- Making use of a meta-data set

- etc.

These are beyond what this article sets out to do.

They may be discussed briefly in another article.

Post-installation script

We have a more complete boot.sh post-installation script that prepares the basic environment a working node needs, so that operations staff can work comfortably on the node. You can write your own to suit your network architecture.

The post-installation script may be explained next time; this article is long enough.

bash.sh

See bash.sh: it is a separate file that can serve as a basic skeleton for hand-written bash scripts.

The vms-builder script in this article is the best example of how to use it.

bash.sh provides a set of basic environment-detection functions that help you write versatile scripts. vms-builder additionally provides a thin wrapper around package-management operations so that it works across platforms.
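For illustration, a thin cross-platform wrapper of that kind might look like the sketch below. detect_pkg_mgr and pkg_install_cmd are hypothetical names, not vms-builder's actual functions. pkg_install_cmd only prints the usual non-interactive install command for each manager:

```shell
#!/usr/bin/env bash
# Sketch of a cross-platform package wrapper in the spirit of vms-builder's.
# detect_pkg_mgr / pkg_install_cmd are hypothetical, not vms-builder's
# actual functions.
detect_pkg_mgr() {
  local m
  for m in apt-get dnf yum pacman apk brew; do
    command -v "$m" >/dev/null 2>&1 && { echo "$m"; return 0; }
  done
  return 1
}

# Print (not run) the install command for manager $1 and packages $2...
pkg_install_cmd() {
  local mgr=$1; shift
  case "$mgr" in
    apt-get|dnf|yum) echo "$mgr install -y $*" ;;
    pacman)          echo "pacman -S --noconfirm $*" ;;
    apk)             echo "apk add $*" ;;
    brew)            echo "brew install $*" ;;
    *)               return 1 ;;
  esac
}

# e.g. on Ubuntu: pkg_install_cmd "$(detect_pkg_mgr)" tftpd-hpa isc-dhcp-server
```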

I had planned to introduce bash.sh itself to help you understand the code in this article, but the article is already very long, and I really dislike long-winded writing. Please just read the source yourself.

Tarball

The code mentioned in this article, including vms-builder, the other required files, and the reference folder structure, can be found in the repo; feel free to use it.

postscript

It is worth mentioning that VMware provides a dedicated data source that serves data to cloud-init. From that perspective, the approach in this article is more work than strictly necessary, but that data source requires an enterprise-level platform such as VMware vSphere.

This data-source mechanism is supported by all the major cloud providers, which is why cloud-init is the de facto standard for preparing cloud infrastructure.

This is probably one of the rare wheels built by Canonical that everyone is happy to see.

References

- Preboot Execution Environment - Wikipedia

- PXE specification – The Preboot Execution Environment specification v2.1 published by Intel & SystemSoft

- BIS specification – The Boot Integrity Services specification v1.0 published by Intel

- Intel Preboot Execution Environment – Internet-Draft 00 of the PXE Client/Server Protocol included in the PXE specification

- PXE error codes – A catalogue of PXE error codes

- Automated server install - schema - Ubuntu

- Automated server install reference - Ubuntu

- cloud-init Documentation - cloud-init 21.4 documentation

- cloud-init.io

- hedzr/bash.sh: main entry template of your first bash script file

🔚
