Since its launch in 2012, hundreds of thousands of Amazon Cloud Technologies customers have chosen Amazon DynamoDB for their mission-critical workloads. Amazon DynamoDB is a non-relational database that lets you store virtually unlimited amounts of data and retrieve it with single-digit millisecond performance at any scale.

To get the most value from this data, customers have had to rely on Amazon Data Pipeline, Amazon EMR, or other solutions based on Amazon DynamoDB streams. These solutions often require building custom applications with high read throughput, which in turn incur high maintenance and operational costs.

Today, we're introducing a new feature that allows you to export Amazon DynamoDB table data to Amazon Simple Storage Service (Amazon S3) without writing any code.

It is a new native feature of Amazon DynamoDB, so it runs at any scale without you having to manage servers or clusters. It lets you export data across Amazon Cloud Technologies regions and accounts from any point in time in the past 35 days, at per-second granularity. It also does not affect the read capacity or availability of your production tables.

Once your data is exported in Amazon DynamoDB JSON or Amazon Ion format, you can query or reshape it with your favorite tools, such as Amazon Athena, Amazon SageMaker, and Amazon Lake Formation.

In this article, I'll show you how to export an Amazon DynamoDB table to Amazon S3 and then query it through Amazon Athena using standard SQL.

Exporting an Amazon DynamoDB table to an Amazon S3 bucket

The export process relies on Amazon DynamoDB's ability to continuously back up data in the background. This feature is called continuous backups: it supports point-in-time recovery (PITR) and allows you to restore a table to any point in time within the past 35 days.

To start, open the Streams and exports tab and click Export to Amazon S3.

Unless you already have continuous backups enabled, you must first click Enable PITR.
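If you prefer to enable PITR from a script rather than the console, the step can be sketched in Python. This is a minimal sketch, assuming `client` is a boto3 DynamoDB client (for example `boto3.client("dynamodb")`); the function name `enable_pitr` and the table name in the usage comment are hypothetical.

```python
def enable_pitr(client, table_name):
    """Turn on point-in-time recovery for one table and return the reported status.

    `client` is assumed to be a boto3 DynamoDB client; it is passed in so the
    function can also be exercised with a stub.
    """
    response = client.update_continuous_backups(
        TableName=table_name,
        PointInTimeRecoverySpecification={"PointInTimeRecoveryEnabled": True},
    )
    description = response["ContinuousBackupsDescription"]
    return description["PointInTimeRecoveryDescription"]["PointInTimeRecoveryStatus"]


# Usage (hypothetical table name, requires boto3 and credentials):
#   import boto3
#   status = enable_pitr(boto3.client("dynamodb"), "my-game-users")
```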

Provide the bucket name in the Destination S3 bucket field, for example s3://my-dynamodb-export-bucket. Keep in mind that your bucket can also be in another account or another region.

Feel free to check out the other settings, where you can configure a specific point in time, output format, and encryption key. I will use the default settings.

You can now confirm the export request by clicking Export.

The export process begins, and you can monitor its status in the Streams and exports tab.

After the export process is complete, you will find a new AWSDynamoDB folder in your Amazon S3 bucket with a subfolder corresponding to the export ID.

This is the content of that subfolder.

You will find two manifest files that let you verify the integrity of the export and discover the objects in the data subfolder, which are automatically compressed and encrypted for you.
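An integrity check against the manifest could look roughly like the sketch below. It rests on assumptions the article does not spell out: that the per-file manifest contains one JSON object per line, that each entry carries `dataFileS3Key` and `md5Checksum` fields, and that the checksum is the base64-encoded MD5 of the stored object. `fetch_object` is a hypothetical callable that returns the bytes stored under a key.

```python
import base64
import hashlib
import json


def md5_base64(data: bytes) -> str:
    """Return the MD5 digest of `data`, base64-encoded."""
    return base64.b64encode(hashlib.md5(data).digest()).decode("ascii")


def verify_data_files(manifest_lines, fetch_object):
    """Return the keys whose content does not match the manifest checksum.

    Assumed manifest entry shape (not confirmed by the article):
        {"dataFileS3Key": "...", "md5Checksum": "<base64 MD5>", ...}
    """
    mismatched = []
    for line in manifest_lines:
        line = line.strip()
        if not line:
            continue  # tolerate blank lines
        entry = json.loads(line)
        key = entry["dataFileS3Key"]
        if md5_base64(fetch_object(key)) != entry["md5Checksum"]:
            mismatched.append(key)
    return mismatched
```

An empty result means every listed data file matched its recorded checksum.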

How to automate the export process via the Amazon CLI

If you want to automate the export process, for example to create a new export every week or every month, you can create an export request by calling the ExportTableToPointInTime API through the Amazon CLI or Amazon SDK.

Below is an example using the Amazon CLI.

Bash

aws dynamodb export-table-to-point-in-time \
    --table-arn TABLE_ARN \
    --s3-bucket BUCKET_NAME \
    --export-time 1596232100 \
    --s3-prefix demo_prefix \
    --export-format DYNAMODB_JSON

{
    "ExportDescription": {
        "ExportArn": "arn:aws:dynamodb:REGION:ACCOUNT_ID:table/TABLE_NAME/export/EXPORT_ID",
        "ExportStatus": "IN_PROGRESS",
        "StartTime": 1596232631.799,
        "TableArn": "arn:aws:dynamodb:REGION:ACCOUNT_ID:table/TABLE_NAME",
        "ExportTime": 1596232100.0,
        "S3Bucket": "BUCKET_NAME",
        "S3Prefix": "demo_prefix",
        "ExportFormat": "DYNAMODB_JSON"
    }
}
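The same request can be made through the SDK. The sketch below assumes `client` is a boto3 DynamoDB client; the helper names `build_export_request` and `request_export` are hypothetical. It also checks up front that the requested point in time falls inside the 35-day window described earlier, so an out-of-range request fails before any API call is made.

```python
from datetime import datetime, timedelta, timezone

PITR_WINDOW = timedelta(days=35)  # retention window mentioned in the article


def build_export_request(table_arn, bucket, export_time, prefix=None,
                         export_format="DYNAMODB_JSON", now=None):
    """Build keyword arguments for export_table_to_point_in_time.

    `export_time` must be a timezone-aware datetime within the last 35 days.
    """
    now = now or datetime.now(timezone.utc)
    if export_time > now or now - export_time > PITR_WINDOW:
        raise ValueError("export_time must fall within the past 35 days")
    if export_format not in ("DYNAMODB_JSON", "ION"):
        raise ValueError("export_format must be DYNAMODB_JSON or ION")
    request = {
        "TableArn": table_arn,
        "S3Bucket": bucket,
        "ExportTime": export_time,
        "ExportFormat": export_format,
    }
    if prefix:
        request["S3Prefix"] = prefix
    return request


def request_export(client, table_arn, bucket, export_time, **options):
    """Submit the export and return its ARN (client assumed to be boto3)."""
    request = build_export_request(table_arn, bucket, export_time, **options)
    response = client.export_table_to_point_in_time(**request)
    return response["ExportDescription"]["ExportArn"]
```

Keeping the request builder separate from the API call makes the validation easy to test without credentials.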

After requesting an export, you must wait for the ExportStatus to become "COMPLETED".

Bash

aws dynamodb list-exports

{
    "ExportSummaries": [
        {
            "ExportArn": "arn:aws:dynamodb:REGION:ACCOUNT_ID:table/TABLE_NAME/export/EXPORT_ID",
            "ExportStatus": "COMPLETED"
        }
    ]
}
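In an automated pipeline, the waiting step is typically a polling loop. Here is a minimal sketch, assuming `client` is a boto3 DynamoDB client and using its `describe_export` call for a single export; the function name, polling interval, and timeout are illustrative choices, not recommendations from the article.

```python
import time


def wait_for_export(client, export_arn, poll_seconds=30,
                    timeout_seconds=6 * 3600, sleep=time.sleep):
    """Poll one export until it completes, fails, or the timeout elapses.

    Returns the final ExportDescription on success. `sleep` is injectable so
    the loop can be tested without real delays.
    """
    waited = 0
    while True:
        description = client.describe_export(ExportArn=export_arn)["ExportDescription"]
        status = description["ExportStatus"]
        if status == "COMPLETED":
            return description
        if status == "FAILED":
            reason = description.get("FailureMessage", "no failure message")
            raise RuntimeError(f"export failed: {reason}")
        if waited >= timeout_seconds:
            raise TimeoutError(f"export still {status} after {waited} seconds")
        sleep(poll_seconds)
        waited += poll_seconds
```

Because large tables can take hours to export, a generous timeout and a coarse polling interval are usually enough.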

Analyzing the exported data using Amazon Athena

Once the data is securely stored in an Amazon S3 bucket, you can start analyzing it using Amazon Athena.

You'll find many gz-compressed objects in your Amazon S3 bucket, each containing a text file with multiple JSON objects, one object per line. Each JSON object wraps one DynamoDB item in an Item field, and its structure depends on the export format you chose.

In the export process above, I chose Amazon DynamoDB JSON, and the items in the example table represent users of a simple game, so a typical object looks like this.

JSON

{
    "Item": {
        "id": {
            "S": "my-unique-id"
        },
        "name": {
            "S": "Alex"
        },
        "coins": {
            "N": "100"
        }
    }
}

In this example, name is a string and coins is a number.
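To make the layout concrete, here is a small Python sketch that reads one exported object and unwraps the type descriptors. It handles only the `S` and `N` types that appear in the example item; the function names are hypothetical, and fetching the object from Amazon S3 is left out. Note that DynamoDB JSON carries numbers as strings, so the sketch converts them to `Decimal` to avoid losing precision.

```python
import gzip
import json
from decimal import Decimal


def plain_value(typed):
    """Convert one DynamoDB JSON attribute, e.g. {"N": "100"}, to a Python value."""
    type_tag, raw = next(iter(typed.items()))
    if type_tag == "S":
        return raw
    if type_tag == "N":
        return Decimal(raw)  # numbers arrive as strings
    raise ValueError(f"unsupported type descriptor in this sketch: {type_tag}")


def read_items(gz_bytes):
    """Yield plain dicts from one gz-compressed export object (one JSON object per line)."""
    text = gzip.decompress(gz_bytes).decode("utf-8")
    for line in text.splitlines():
        if line.strip():
            item = json.loads(line)["Item"]
            yield {name: plain_value(value) for name, value in item.items()}
```

For a quick local check on a single file, `sorted(read_items(blob), key=lambda item: item["coins"], reverse=True)` ranks users by coins.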

I recommend using an Amazon Glue crawler to automatically discover the schema of your data and create a virtual table in the Amazon Glue catalog.

However, you can also define virtual tables manually with a CREATE EXTERNAL TABLE statement.

SQL

CREATE EXTERNAL TABLE IF NOT EXISTS ddb_exported_table (
    Item struct<id:struct<S:string>,
                name:struct<S:string>,
                coins:struct<N:string>
               >
)
ROW FORMAT SERDE 'org.openx.data.jsonserde.JsonSerDe'
LOCATION 's3://my-dynamodb-export-bucket/AWSDynamoDB/{EXPORT_ID}/data/'
TBLPROPERTIES ( 'has_encrypted_data'='true');

Now you can query it with regular SQL, or even define a new virtual table with a Create Table as Select (CTAS) query.

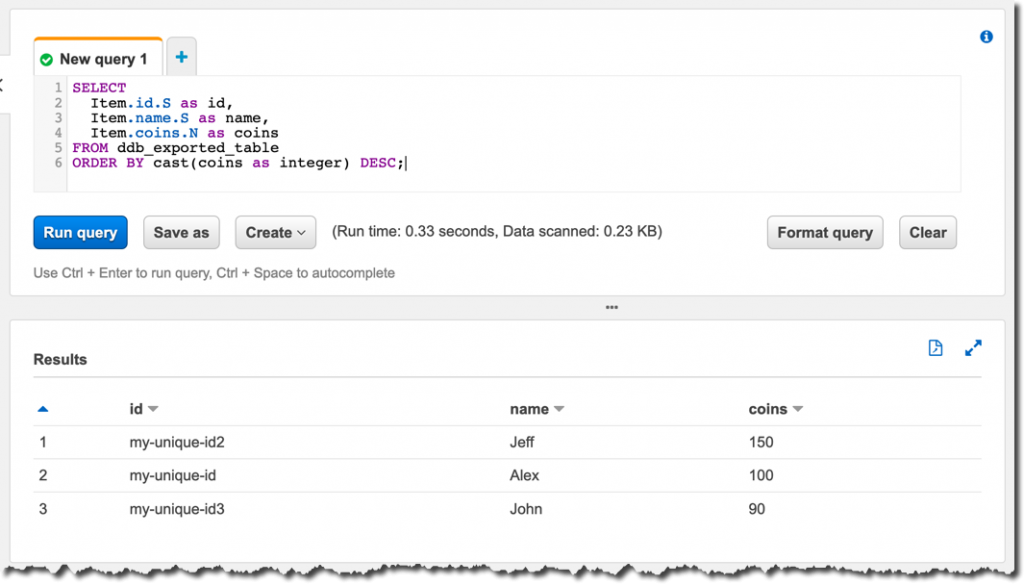

Your query looks like the following when using the Amazon DynamoDB JSON format.

SQL

SELECT
    Item.id.S as id,
    Item.name.S as name,
    Item.coins.N as coins
FROM ddb_exported_table
ORDER BY cast(coins as integer) DESC;

You will get a result set as output.

Performance and cost considerations

The export process is serverless: it scales automatically and is much faster than a custom table-scan solution.

The completion time depends on the size of the table and on how uniformly the data is distributed in it. Most exports complete within 30 minutes. For small tables up to 10 GiB, the export should take only a few minutes; for very large tables in the terabyte range, it may take hours. This shouldn't be a problem, since you don't use a data lake export for real-time analysis. Typically, data lakes are used to aggregate data at scale and generate daily, weekly, or monthly reports. Therefore, in most cases, you can afford to wait a few minutes or hours for the export to complete before continuing with the analysis pipeline.

Due to the serverless nature of this new feature, there is no hourly cost: you pay only for the amount of data exported to Amazon S3, for example $0.10 per GiB in the US East Region.

Since data is exported to your own Amazon S3 bucket and continuous backups are a prerequisite for the export process, keep in mind that you will incur additional charges for PITR backups and Amazon S3 data storage. All the cost components involved depend only on the amount of data you are exporting. As a result, the overall cost is easy to estimate and much lower than the total cost of ownership of building a custom solution with high read throughput and high maintenance costs.
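As a back-of-the-envelope illustration of the export charge alone, the arithmetic can be sketched as follows. The $0.10 per GiB rate is the US East example quoted above and is used here only as a default; PITR backup and Amazon S3 storage charges are deliberately left out, and the function name is hypothetical.

```python
GIB = 1024 ** 3  # bytes per GiB


def estimate_export_cost(table_size_bytes, price_per_gib=0.10):
    """Estimate the export charge in dollars for a table of the given size.

    Covers only the per-GiB export fee; PITR backup and S3 storage costs
    are not included.
    """
    if table_size_bytes < 0:
        raise ValueError("table size cannot be negative")
    return table_size_bytes / GIB * price_per_gib


# Example: a 500 GiB table at the example rate.
print(f"${estimate_export_cost(500 * GIB):.2f}")
```

Check the current pricing for your region before relying on any such estimate.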

Now available

This new feature is now available in all Amazon Cloud Technologies regions that offer continuous backups.

You can make export requests using the Amazon Cloud Management Console, the Amazon Cloud Command Line Interface (Amazon CLI), and the Amazon SDK. This capability enables developers, data engineers, and data scientists to easily extract and analyze data from Amazon DynamoDB tables without having to design and build expensive custom applications for ETL (extract, transform, load).

You can now connect your own analytics tools to your Amazon DynamoDB data, using services such as Amazon Athena for ad hoc analysis, Amazon QuickSight for data exploration and visualization, and Amazon Redshift and Amazon SageMaker for predictive analytics.
