Introduction

As the data synchronization component of TiDB, TiCDC replicates data changes directly from TiKV to downstream systems. Its core problem is parsing data correctly: using the right schema to decode the key-value data sent by TiKV so that it can be restored to SQL or to other forms the downstream supports. This article analyzes the principle and implementation of the TiDB Online DDL mechanism, and then uses it to discuss how TiCDC currently parses data.

Background and Issues

Data synchronization components are indispensable tools in any database ecosystem. The well-known open-source stand-alone database MySQL treats data synchronization as part of the server's capabilities and implements asynchronous, semi-synchronous, and synchronous master-slave replication on top of the MySQL binlog. Because of MySQL's pessimistic transaction model and table metadata locks, we can always assume that causally related data and schema changes appear in the MySQL binlog in chronological order, that is:

New data commitTs > New schema commitTs

TiDB, however, separates storage from computation. Schema changes are persisted in the storage layer, while each service layer node keeps its own cached copy of the schema, so there is always a period during which the cached schema states are inconsistent. To guarantee data consistency while still performing DDL changes online, most existing distributed databases adopt or borrow from the mechanism described in the paper Online, Asynchronous Schema Change in F1. The question we need to answer therefore becomes: under the TiDB Online DDL mechanism, how does TiCDC correctly match data with its schema, and do causally related data and schema changes still satisfy:

New data commitTs > New schema commitTs

To answer this question, we first explain the core principle of the original F1 Online Schema Change mechanism, then describe the current TiDB Online DDL implementation, and finally discuss how data and schema are handled under the current TiCDC implementation, together with the anomalous scenarios that can arise.

F1 Online Schema Change Mechanism

The core problem that the F1 Online Schema Change mechanism solves is how to perform online schema changes that preserve data consistency in an architecture with a single storage layer and multiple cache nodes, as shown in Figure 1:

Figure 1: Schema changes under a single-storage, multiple-cache node architecture

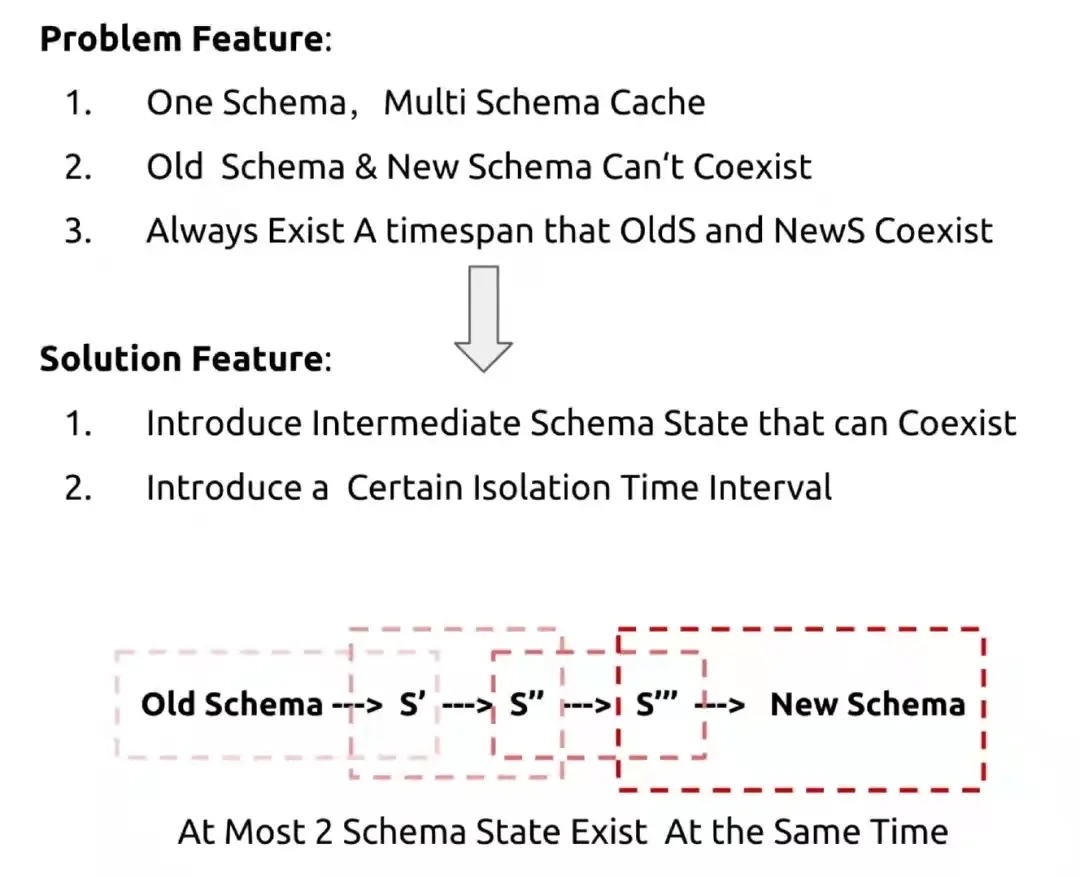

Here we define data inconsistency as either excess data (orphan data anomaly) or missing data (integrity anomaly): if there is excess or missing data after the schema change ends, we consider the data inconsistent. The schema change problem in such systems has the following three characteristics:

1. There is one schema store but multiple schema caches.

2. Some new schemas and old schemas cannot coexist.

3. When changing directly from the old schema to the new schema, there is always a time interval during which both exist at the same time.

Features 1 and 3 follow from the system architecture and are easy to understand. A typical example of feature 2 is add index. A service layer node that has loaded the new schema writes the index entry together with the row data on insert, while a node still on the old schema deletes only the row data, leaving behind an index entry that points to nothing. The result is excess data.
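The add index anomaly can be reproduced with a toy model. This is a hypothetical sketch, not TiDB code: `Store`, `Node`, and `orphan_entries` are illustrative names, and a node is reduced to a flag saying whether it has loaded the new schema.

```python
# Toy model (hypothetical, not TiDB code): one shared store and two nodes
# that cache different schemas during a direct ADD INDEX switch.


class Store:
    def __init__(self):
        self.rows = {}      # row key -> value of the indexed column
        self.index = set()  # index entries: (indexed value, row key)


class Node:
    def __init__(self, store, sees_index):
        self.store = store
        self.sees_index = sees_index  # True once the new schema is loaded

    def insert(self, key, value):
        self.store.rows[key] = value
        if self.sees_index:
            self.store.index.add((value, key))

    def delete(self, key):
        value = self.store.rows.pop(key)
        if self.sees_index:
            self.store.index.discard((value, key))


def orphan_entries(store):
    """Index entries whose row no longer exists (excess data)."""
    return sorted(e for e in store.index if e[1] not in store.rows)


store = Store()
new_node = Node(store, sees_index=True)   # loaded the new schema
old_node = Node(store, sees_index=False)  # still on the old schema

new_node.insert("r1", 10)  # writes the row and its index entry
old_node.delete("r1")      # removes the row but not the index entry
print(orphan_entries(store))  # [(10, 'r1')]
```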

Features 2 and 3 seem to lead to a dead end: the new and old schemas cannot coexist, yet they must coexist. The F1 Online Schema Change mechanism resolves this ingeniously by changing the conditions rather than the result. Its solution rests on two key points, as shown in Figure 2:

Figure 2: F1 Online DDL Solution

- Introduce coexisting intermediate schema states, for example S1 -> S2' -> S2, where S1 and S2' can coexist, and S2' and S2 can coexist;

- Introduce a definite isolation time interval to ensure that schemas that cannot coexist never appear at the same time;

Specifically:

Introduce coexisting intermediate schema states

Because changing directly from schema S1 to schema S2 leads to data inconsistency, the delete-only and write-only intermediate states are introduced, turning S1 -> S2 into S1 -> S2+delete-only -> S2+write-only -> S2, and a lease mechanism ensures that at most two states coexist at any time. It then suffices to prove that each pair of adjacent states can coexist without breaking data consistency; from this it follows that the data stays consistent throughout the change from S1 to S2.
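A minimal sketch of the coexistence rule, assuming the four-state sequence above. `STATES`, `ADJACENT_PAIRS`, and `coexistence_ok` are hypothetical names; the check only encodes the invariant that the states cached across all nodes span at most two adjacent steps.

```python
# Hypothetical encoding of the F1 add-index sequence described above.
STATES = ["S1", "S2+delete-only", "S2+write-only", "S2"]

# Only adjacent pairs ever coexist, so only they need a consistency proof.
ADJACENT_PAIRS = list(zip(STATES, STATES[1:]))


def coexistence_ok(node_states):
    """True if the states cached by the nodes span at most two adjacent steps."""
    positions = {STATES.index(s) for s in node_states}
    return max(positions) - min(positions) <= 1


print(ADJACENT_PAIRS)
print(coexistence_ok(["S1", "S2+delete-only", "S1"]))  # True
print(coexistence_ok(["S1", "S2+write-only"]))         # False: skips a step
```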

Introduce a definite isolation time interval

Define a schema lease: nodes must reload the schema whenever the lease expires, and a node that cannot obtain the new schema within the lease goes offline and stops serving. This makes it possible to state clearly that after 2*lease, all nodes have moved to the next schema.

Introduce coexisting intermediate states

Which intermediate states do we need to introduce? That depends on the problem we need to solve. We again use add index as the example; for other DDL types, see Online, Asynchronous Schema Change in F1.

Delete-only state

As shown above, the old schema cannot see the index, so deleting data through it leaves index entries that point to nothing. The first intermediate state we introduce is therefore the delete-only state, which gives the schema the ability to delete index entries. In the delete-only state, a node removes index entries during delete operations, but does not touch the index during insert or select operations, as shown in Figure 3:

Figure 3: Introducing the delete-only intermediate state

The original paper defines delete-only as follows:

Assuming that we have introduced a definite isolation time interval (described in the next section), we can guarantee that at most two schema states appear at the same time. After introducing the delete-only state, the scenarios to consider are therefore:

1: old schema + new schema (delete-only)

2: new schema (delete-only) + new schema

For scenario 1, every service layer node is in either the old schema state or the new schema (delete-only) state. Because the index can only be modified by delete operations, no index entries are ever created. There are therefore no dangling index entries and no missing data, so the data is consistent, and we can say that the old schema and the new schema (delete-only) can coexist.

For scenario 2, every service layer node is in either the new schema (delete-only) state or the new schema state. Nodes in the new schema state insert and delete both data and index entries normally. Nodes in the new schema (delete-only) state insert only data, but delete both data and index entries. As a result, some rows lack index entries, and the data is inconsistent.

The delete-only state solves the excess index problem described above. However, because nodes in the new schema (delete-only) state insert only row data, both newly inserted rows and existing historical rows lack index entries, so the data is still inconsistent in the form of missing data.
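Both scenarios can be checked with a small hypothetical model in which index entries are keyed by row key. The tables `INSERT_WRITES_INDEX` and `DELETE_REMOVES_INDEX` encode the rules above for each cached state; they, and `run`, are assumptions for illustration rather than F1 or TiDB code.

```python
# Hypothetical toy model of ADD INDEX with a delete-only state.
# For each cached state: does INSERT write an index entry, does DELETE remove one?
INSERT_WRITES_INDEX = {"old": False, "delete-only": False, "public": True}
DELETE_REMOVES_INDEX = {"old": False, "delete-only": True, "public": True}


def run(ops):
    """Apply (state, op, key) operations; return (orphans, missing)."""
    rows, index = set(), set()
    for state, op, key in ops:
        if op == "insert":
            rows.add(key)
            if INSERT_WRITES_INDEX[state]:
                index.add(key)
        elif op == "delete":
            rows.discard(key)
            if DELETE_REMOVES_INDEX[state]:
                index.discard(key)
    orphans = index - rows  # index entries without a row: excess data
    missing = rows - index  # rows without an index entry: missing data
    return orphans, missing


# Scenario 1: old + delete-only. No node writes index entries, so no orphan
# can appear; "b" has no entry, but no node can read the index yet.
s1 = [("old", "insert", "a"), ("delete-only", "insert", "b"),
      ("delete-only", "delete", "a")]
print(run(s1))  # (set(), {'b'})

# Scenario 2: delete-only + public. Public nodes read the index, but the
# row inserted by the delete-only node has no index entry.
s2 = [("public", "insert", "x"), ("delete-only", "delete", "x"),
      ("delete-only", "insert", "y")]
print(run(s2))  # (set(), {'y'})
```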

Write-only state

In scenario 2 of add index, both the rows inserted by nodes in the new schema (delete-only) state and the existing rows lack index entries. The existing data is fixed and finite, so its index entries can always be built within a bounded time, but newly inserted data may keep growing over time. To solve this missing data problem, we introduce a second intermediate state, the write-only state, which lets the schema insert and delete index entries. Nodes in the write-only state can insert, delete, and update index entries, but select cannot see the index, as shown in Figure 4:

Figure 4: Introducing write-only state

The definition of write-only state in the original paper is as follows:

After introducing the write-only state, the above scenario 2 is divided into scenario 2' and scenario 3:

2': new schema (delete-only) + new schema (write-only)

3: new schema (write-only) + new schema

For scenario 2', every service layer node is in either the new schema (delete-only) state or the new schema (write-only) state. A node in the new schema (delete-only) state inserts only data, but deletes both data and index entries; a node in the new schema (write-only) state inserts and deletes data and index entries normally. Index entries may still be missing, but because the index is invisible to users in both the delete-only and write-only states, users see only complete row data and no index at all. The internally missing index entries therefore do not violate data consistency from the user's point of view.

For scenario 3, every service layer node is in either the new schema (write-only) state or the new schema state. Newly inserted data now maintains its index entries normally, but existing historical data still lacks them. Since historical data is fixed and finite, we only need to backfill its index entries after all nodes have moved to write-only, and then move to the new schema state, to guarantee that data and index are complete. At that point, nodes in the write-only state see only complete data, while nodes in the new schema state see complete data and a complete index, so the data is consistent for users.
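Continuing the same kind of hypothetical model, the sketch below adds the write-only state and a backfill step. `apply_ops` and `backfill` are illustrative names; backfill is modeled as one pass over a fixed set of historical rows, which is exactly the finiteness property the argument relies on.

```python
# Hypothetical toy model: write-only state plus backfill for ADD INDEX.
# Index entries are keyed by row key, so a complete index equals the row set.
INSERT_WRITES_INDEX = {"delete-only": False, "write-only": True, "public": True}
DELETE_REMOVES_INDEX = {"delete-only": True, "write-only": True, "public": True}


def apply_ops(rows, index, ops):
    """Apply (state, op, key) operations to the shared rows/index sets."""
    for state, op, key in ops:
        if op == "insert":
            rows.add(key)
            if INSERT_WRITES_INDEX[state]:
                index.add(key)
        elif op == "delete":
            rows.discard(key)
            if DELETE_REMOVES_INDEX[state]:
                index.discard(key)


def backfill(rows, index):
    """Reorg: build index entries for rows that still lack one."""
    index.update(rows - index)


rows, index = {"h1", "h2"}, set()  # historical rows written before the DDL

# All nodes are write-only: new writes maintain the index.
apply_ops(rows, index, [("write-only", "insert", "n1"),
                        ("write-only", "delete", "h2")])
print(sorted(rows - index))  # ['h1']: only historical rows lack entries

backfill(rows, index)

# Scenario 3: write-only + public. Data and index stay complete.
apply_ops(rows, index, [("public", "insert", "p1"),
                        ("write-only", "delete", "n1")])
print(rows == index)  # True
```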

Section Summary

The description of the two intermediate states, delete-only and write-only, shows that F1 Online DDL splits what was a single-step schema change into steps separated by these two intermediate states. Every two adjacent states can coexist, every state transition preserves data consistency, and therefore the whole change preserves data consistency.

Introduce a definite isolation time interval

To guarantee that at most two states exist at the same time, service layer nodes must follow these rules when loading the schema:

All service layer nodes reload the schema once the lease expires;

A node that cannot obtain the new schema within the lease goes offline and refuses service;

These rules give a definite time boundary: after 2*lease, every service layer node that is still working normally has moved from schema state 1 to schema state 2, as shown in Figure 5:

Figure 5: All nodes can transition to the next state after a maximum of 2*lease duration

Intermediate state visibility

Understanding the intermediate states of the original paper correctly requires understanding their visibility. In the previous sections we used add index as the example for convenience, and said that in the delete-only and write-only states the index is invisible to user select statements, while delete/insert can still operate on it. If the DDL were add column instead, could a user insert that explicitly names the new column succeed on a node in the write-only state? The answer is no.

In general, the delete/insert visibility of an intermediate state is internal visibility, that is, what the service layer node can see of the storage layer, not what users can see. For add column, a service layer node can see the new column in the delete-only and write-only states, but its operations on the column are restricted differently in each state. Users can see, and explicitly operate on, the new column only in the new schema state, as shown in Figure 6:

Figure 6: Intermediate state visibility

To make visibility concrete, consider the example in Figure 7. The table's column list is <c1> before the DDL and <c1,c2> after it.

Figure 7: Intermediate state transitions

In panel (1), the service layer nodes have entered scenario 1: some nodes are in the old schema state and some in the new schema (delete-only) state. c2 is invisible to users, so any insert<c1,c2> or delete<c1,c2> that explicitly names c2 fails. However, a row such as [1,xxx] already in the storage layer can still be deleted, and only rows without c2, such as [7], can be inserted.

In panel (2), the service layer nodes have entered scenario 2': some nodes are in the new schema (delete-only) state and some in the new schema (write-only) state. c2 is still invisible to users, so any insert<c1,c2> or delete<c1,c2> that explicitly names c2 fails. Internally, however, a node in the write-only state fills in the default value when inserting [9], writing [9,0] to the storage layer, and a node in the delete-only state converts delete [9] into delete [9,0].

In panel (3), after all service layer nodes have moved to write-only, c2 is still invisible to users. Backfill now starts, filling in c2 for historical rows that lack it (depending on the implementation, this may simply record a marker in the table's column metadata).

In panel (4), the transition to scenario 3 begins: some nodes are in the new schema (write-only) state and some in the new schema state. On nodes in the new schema (write-only) state, c2 is still invisible to users; on nodes in the new schema state, c2 is visible. Users connected to different service layer nodes may therefore see different select results, but the underlying data is complete and consistent.
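The behavior in Figure 7 can be sketched as follows, assuming c2 has a default value of 0 (consistent with the [9,0] rows above). `user_columns`, `encode_insert`, and `backfill` are hypothetical helpers that separate what users may name from what a node actually writes to storage.

```python
# Hypothetical sketch of Figure 7: ADD COLUMN c2 (default 0) to a table <c1>.
DEFAULT_C2 = 0


def user_columns(state):
    """Columns that user statements may name on a node in `state`."""
    return ["c1", "c2"] if state == "public" else ["c1"]


def encode_insert(state, c1, c2=None):
    """Return the row a node in `state` writes for INSERT(c1[, c2])."""
    named = ["c1"] if c2 is None else ["c1", "c2"]
    hidden = set(named) - set(user_columns(state))
    if hidden:
        raise ValueError(f"unknown column {sorted(hidden)} in state {state!r}")
    if state in ("old", "delete-only"):
        return [c1]                                # c2 is not written at all
    return [c1, DEFAULT_C2 if c2 is None else c2]  # write-only fills default


def backfill(rows):
    """Panel (3): fill c2 for historical rows that lack it."""
    return [row if len(row) == 2 else row + [DEFAULT_C2] for row in rows]


print(encode_insert("delete-only", 7))  # [7]
print(encode_insert("write-only", 9))   # [9, 0]
print(encode_insert("public", 9, 5))    # [9, 5]
print(backfill([[7], [9, 0]]))          # [[7, 0], [9, 0]]
try:
    encode_insert("write-only", 9, 5)   # c2 is still invisible to users
except ValueError as err:
    print(err)
```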

Summary

The three subsections above briefly described the F1 Online Schema Change mechanism: it breaks a single-step schema change into several intermediate steps, making online DDL changes possible while preserving data consistency.

Add index and add column follow the state transitions described above; drop index and drop column follow them in reverse. For example, a column being dropped is invisible to users from the write-only stage onward, while it can still be correctly inserted into and deleted internally. The visibility rules are exactly the same as discussed above.

TiDB Online DDL implementation

TiDB Online DDL is implemented based on the F1 Online Schema Change mechanism. The overall process is shown in Figure 8:

Figure 8: TiDB Online DDL process

A brief description is as follows:

When a TiDB Server node receives a DDL statement, it wraps the DDL SQL into a DDL job and submits it to the TiKV job queue for persistence;

TiDB Server nodes elect an Owner, which fetches DDL jobs from the TiKV job queue and carries out the multi-stage DDL change;

Each DDL intermediate state (delete-only/write-only/write-reorg) is committed as a separate transaction and persisted to the TiKV job queue;

After the schema change succeeds, the DDL job state changes to done/sync, indicating that the new schema is officially visible to users; other job states, such as canceled or rollback done, indicate that the schema change failed;

etcd's subscription notification mechanism is used during schema state changes to speed up schema state synchronization among server layer nodes, shortening the 2*lease wait;

Once a DDL job reaches the done/sync state, the DDL change has finished, and the job is moved to the job history queue;
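The job flow above can be summarized by a toy, single-process model. It is hypothetical: `DDLJob`, `Cluster`, and `owner_run_once` are illustrative names that do not mirror TiDB's ddl package, and persistence in TiKV and waiting for other nodes are only indicated in comments.

```python
from collections import deque
from dataclasses import dataclass, field

# Hypothetical toy model of the DDL job flow; not TiDB's real implementation.
ADD_COLUMN_STATES = ["delete-only", "write-only", "write-reorg", "public"]


@dataclass
class DDLJob:
    job_id: int
    query: str
    schema_state: str = "absent"
    job_state: str = "queueing"


@dataclass
class Cluster:
    job_queue: deque = field(default_factory=deque)  # stands in for TiKV
    history: list = field(default_factory=list)      # job history queue
    commits: list = field(default_factory=list)      # (version, job_id, state)

    def submit(self, job):
        """Any TiDB Server wraps the DDL into a job and enqueues it."""
        self.job_queue.append(job)

    def owner_run_once(self):
        """The elected owner drives one job through every schema state."""
        job = self.job_queue.popleft()
        job.job_state = "running"
        for state in ADD_COLUMN_STATES:
            job.schema_state = state
            # Each transition is its own transaction; the real owner then
            # waits for other nodes to load it (bounded by 2*lease).
            self.commits.append((len(self.commits) + 1, job.job_id, state))
        job.job_state = "done"
        self.history.append(job)  # finished jobs leave the job queue
        return job


cluster = Cluster()
cluster.submit(DDLJob(1, "ALTER TABLE t ADD COLUMN c2 INT DEFAULT 0"))
job = cluster.owner_run_once()
print([state for _, _, state in cluster.commits])
# ['delete-only', 'write-only', 'write-reorg', 'public']
print(job.job_state, len(cluster.job_queue), len(cluster.history))  # done 0 1
```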

For the detailed TiDB processing flow, see schema-change-implement.md and TiDB ddl.html.

The relationship between Data and Schema processing in TiCDC

Having described the principle and implementation of the TiDB Online DDL mechanism, we can return to the question raised at the beginning: under the TiDB Online DDL mechanism, does the following still hold?

New data commitTs > New schema commitTs

The answer is no. The discussion of the F1 Online Schema Change mechanism showed that for add column DDL, a service layer node in the write-only state can already insert data for the new column while that column is not yet visible to users. In other words, New data commitTs < New schema commitTs can occur, and the relationship above becomes:

New data commitTs > New schema(write-only) commitTs

However, in the delete-only + write-only transition, if TiCDC directly used the new schema (write-only) to parse data, rows inserted by delete-only nodes might lack the corresponding column metadata, or their metadata types might not match, resulting in data loss. Parsing data correctly would therefore require maintaining complex internal schema strategies tailored to each DDL type and to the specifics of TiDB's internal implementation.

The current TiCDC implementation adopts a relatively simple schema strategy: it ignores every intermediate state and uses only the schema state reached after the change completes. To describe more clearly the different scenarios TiCDC must handle under the TiDB Online DDL mechanism, we classify them into four quadrants according to the schema the data was written with (rows) and the schema TiCDC uses to parse it (columns).
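A minimal sketch of this "ignore intermediate states" strategy, assuming DDL state commits arrive in commitTs order and that rows are parsed with the latest finished schema at or before their commitTs. `SchemaStorage` and its methods are hypothetical names, not TiCDC's actual schema storage API.

```python
import bisect

# Hypothetical sketch: only the schema of a finished DDL becomes a new version.


class SchemaStorage:
    def __init__(self, initial_columns):
        self.versions_ts = [0]                 # commitTs of each kept version
        self.versions = [list(initial_columns)]

    def on_ddl_commit(self, commit_ts, state, columns):
        """Called for every DDL state commit, in commitTs order."""
        if state != "public":
            return  # delete-only / write-only / write-reorg are ignored
        self.versions_ts.append(commit_ts)
        self.versions.append(list(columns))

    def schema_at(self, commit_ts):
        """Schema used to parse a row committed at `commit_ts`."""
        i = bisect.bisect_right(self.versions_ts, commit_ts) - 1
        return self.versions[i]


storage = SchemaStorage(["c1"])
for ts, state in [(100, "delete-only"), (110, "write-only"),
                  (120, "write-reorg"), (130, "public")]:
    storage.on_ddl_commit(ts, state, ["c1", "c2"])

print(storage.schema_at(115))  # ['c1']: write-only data, old schema
print(storage.schema_at(130))  # ['c1', 'c2']
```

With this strategy, data committed while nodes were write-only (ts 115 here) is always parsed with the old schema, which is exactly the "new schema data, old schema" case discussed next.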

|  | Old schema | New schema |
| --- | --- | --- |
| Old schema data | 1 | 2 |
| New schema data | 3 | 4 |

Quadrant 1 corresponds to the old schema state

Here the old schema data matches the old schema;

Quadrant 4 corresponds to the new schema in the public state and later

Here the new schema data matches the new schema;

Quadrant 3 corresponds to data written between the write-only and public states

Here TiCDC parses data with the old schema, but TiDB nodes in the write-only state can already insert/update/delete some data based on the new schema, so TiCDC receives new schema data. The effect differs by DDL type; we use three common and representative DDLs as examples.

add column: state changes absent -> delete-only -> write-only -> write-reorg -> public. The new schema data consists of default values filled in by TiDB nodes in the write-only state, so it is discarded when parsed with the old schema, and the default value is filled in again when the DDL is executed downstream. For dynamically generated values, such as auto_increment and current timestamp, upstream and downstream data may therefore become inconsistent.

change column (lossy): state changes absent -> delete-only -> write-only -> write-reorg -> public. A lossy change, such as int to double, uses a different encoding and requires the data to be rewritten. In the TiDB implementation, a lossy modify column creates an invisible new column, and in the intermediate states the old column is updated at the same time. TiCDC delivers only the old column and then executes the change column downstream, which is consistent with TiDB's processing logic.

drop column: state changes public -> write-only -> delete-only -> delete-reorg -> absent. Data newly inserted by nodes in the write-only state has no value for the dropped column, so TiCDC fills in the default value and sends it downstream; once the downstream executes drop column, that column is discarded. Users may see unexpected default values, but the data is eventually consistent.
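The add column and drop column cases both reduce to projecting a stored row onto the schema TiCDC selected. The hypothetical `decode_row` below models a row as a column-to-value dict and a schema as a list of (column, default) pairs; real decoding works on TiDB's binary row encoding, which this sketch ignores.

```python
# Hypothetical sketch: project a stored row onto the schema TiCDC selected.


def decode_row(raw, schema):
    """raw: column -> value as written upstream; schema: [(column, default)].

    Columns unknown to the schema are dropped; schema columns absent from
    the stored row are filled with their default value.
    """
    return {name: raw.get(name, default) for name, default in schema}


# add column c2: a write-only node stored c2's default, TiCDC parses with the
# old schema <c1>, so c2 is dropped and refilled by the downstream DDL.
print(decode_row({"c1": 9, "c2": 0}, [("c1", None)]))  # {'c1': 9}

# drop column c2: a write-only node no longer stores c2, TiCDC still parses
# with the old schema <c1, c2>, so the default (0 here) is sent downstream.
print(decode_row({"c1": 9}, [("c1", None), ("c2", 0)]))  # {'c1': 9, 'c2': 0}
```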

Quadrant 2 corresponds to a direct old schema -> new schema change

Such schema changes have no intermediate states, and the old and new schemas may coexist; truncate table is an example. After TiDB executes truncate table successfully, some service layer nodes may not have loaded the new schema yet and can still insert data into the table. TiCDC filters out this data directly based on its table ID. In the end, the old table exists neither upstream nor downstream, which satisfies eventual consistency.
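Filtering by table ID can be sketched in a few lines, assuming each row event carries the physical table ID it was written to and that truncate table gives the table a new ID. The IDs 41 and 42 and `filter_by_table_id` are hypothetical.

```python
# Hypothetical sketch: drop row events for table IDs that no longer exist.


def filter_by_table_id(events, live_table_ids):
    """Keep only events whose table ID exists in the schema TiCDC uses."""
    return [e for e in events if e["table_id"] in live_table_ids]


# Before TRUNCATE TABLE t, t has (hypothetical) ID 41; afterwards ID 42.
live = {42}
events = [
    {"table_id": 41, "row": {"c1": 1}},  # from a node still on the old schema
    {"table_id": 42, "row": {"c1": 2}},
]
print(filter_by_table_id(events, live))  # [{'table_id': 42, 'row': {'c1': 2}}]
```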

Summary

TiCDC is the data synchronization component of TiDB, and the correctness of its data parsing is central to keeping upstream and downstream data consistent. To fully understand the abnormal scenarios TiCDC encounters when handling data and schema, this article started from the principle of F1 Online Schema Change and described in detail the data behavior at each stage of a schema change, then briefly described the current implementation of TiDB Online DDL, and finally discussed the relationship between data and schema processing under the current TiCDC implementation.
